Heat and AI Reset the Grid: A Capacity-First Transition

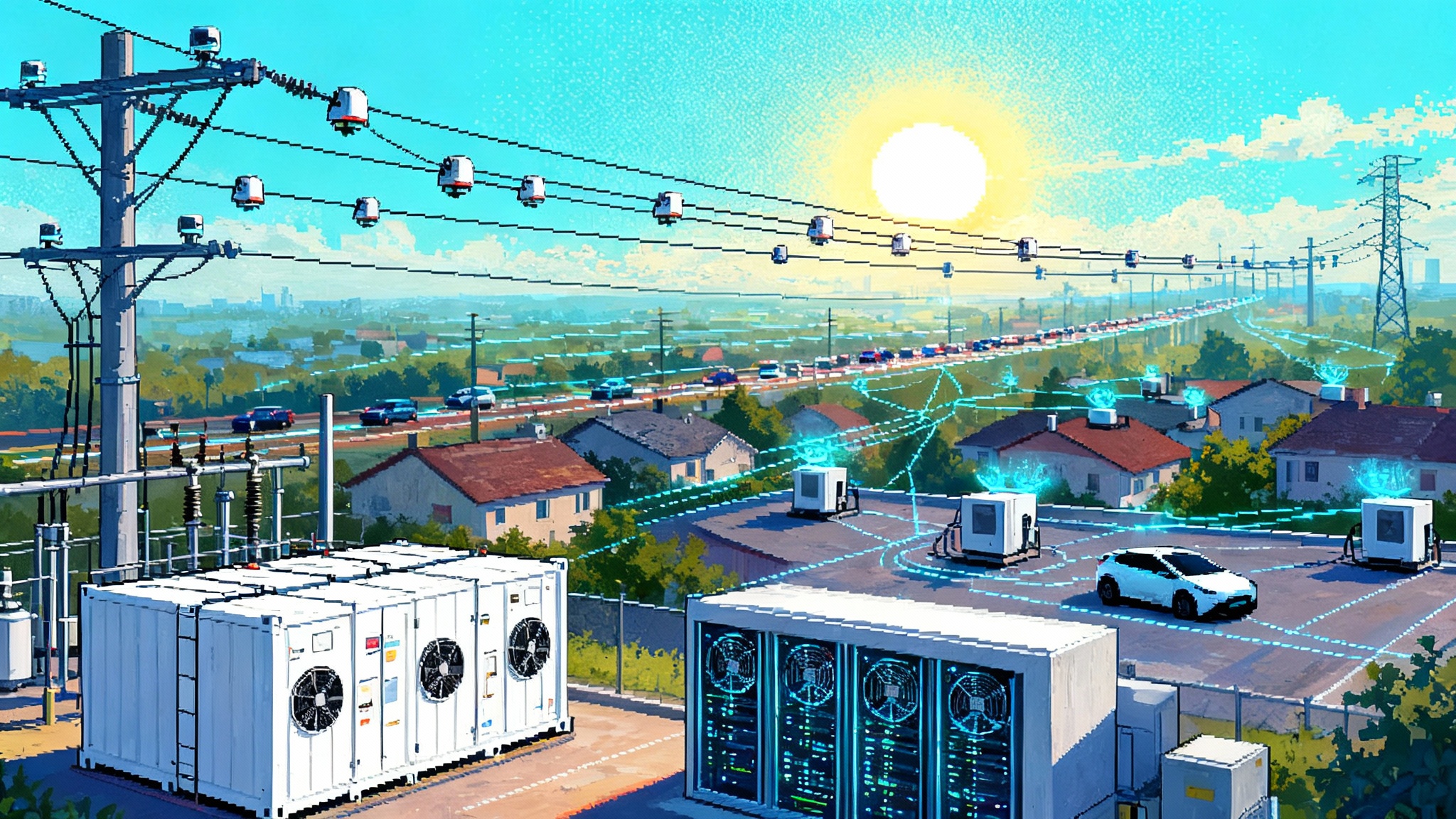

Late-summer heat waves and the data center buildout pushed multiple grids to record or near-record peaks, exposing a new reality: demand is bigger and spikier. Operators are fast-tracking capacity tools now while rewriting adequacy math for what comes next.

The summer the grid quietly changed

In August and September, the power system’s old assumptions frayed. Heat stretched deep into the evenings. Air conditioners stayed on longer. At the same time, AI and cloud workloads kept humming. Several regional grids set new all-time peaks or brushed against them on back-to-back days, even after a decade of efficiency gains.

Operators did not wait for new highways of transmission steel. They reached for what could be deployed in months rather than years: two-to-four hour batteries, bigger and smarter demand response, virtual power plants, and software to squeeze more capacity out of the lines we already have. Interconnection queues that once felt abstract became immediate constraints as data centers asked for gigawatts and were told to pause or re-sequence projects. The message underneath the headlines was simple and stark. The grid is entering a capacity-first phase.

Capacity-first means the next three years are about having enough flexible power during the exact hours it is needed, not just annual energy totals or nameplate megawatts. It is triage and acceleration at once. The good news is that we have a toolkit that works today, and it is getting better quickly.

Heat meets AI: a new shape of demand

For decades, planners could rely on predictable seasonal peaks. Then the net load curve bent. Late-summer evenings now have long plateaus of high demand. Meanwhile, AI training clusters and hyperscale data centers layer on top with high, relatively constant load. The composite effect is like turning a gentle hill into a mesa.

A simple analogy: think of the grid as an airport. We used to have a busy holiday rush, then long quiet stretches. Now the weather is hotter, which means more takeoffs need extra runway. At the same time, a new airline with giant planes wants to schedule flights every hour. Without better scheduling and more gates, the tower must delay or divert flights. That is interconnection in a nutshell.

Late summer made this tension visible. Utilities in fast-growing corridors publicly sequenced or paused some large data center connections. Regional markets called on more emergency and reserve products. In parallel, batteries and demand response were dispatched in record volumes, flattening the top of the peak by crucial percentage points. Small actions accumulated into avoided blackouts.

The 36-month toolkit: fast capacity at human time scales

2–4 hour batteries as surgical capacity

Short-duration batteries are the ambulance of the power system. They do not replace hospitals, but they save lives by arriving fast. Two-to-four hour lithium iron phosphate systems can be built at substations, co-located with solar, or placed on the customer side of the meter. They charge when there is slack and discharge right through the evening peak.

What changed this year is how operators view them. Instead of seeing batteries as energy-shifting gadgets, planners are slotting them as dependable peak reducers. Utility pilots are becoming procurement programs. Interconnection rules are adapting so some storage can connect for peak-only service while larger upgrades move forward. Where congestion is the constraint, batteries are being procured as “grid boosters” that sit on key nodes to keep lines within limits after a contingency. In practice, a 100 MW storage site might unlock two or three times that amount of headroom on a corridor during critical hours.

The trade-off is duration. Two-to-four hours is enough for a single evening plateau. It is not a solution for multi-day heat waves on its own. That is why you are seeing portfolios mix short batteries with targeted thermal upgrades, gas peakers that run a few hours per year, and demand response that can stretch across a week in small bites.
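The duration trade-off can be made concrete with a minimal sketch. It assumes hourly time steps, a hypothetical 100 MW / 400 MWh battery, invented load numbers, and a simple greedy rule that discharges whenever load exceeds a target. Real dispatch is optimized against forecasts, so treat this as an illustration rather than an operating method.

```python
def shave_peak(load_mw, power_mw, energy_mwh, target_mw):
    """Greedy hourly dispatch: discharge just enough to hold load at target_mw.

    Assumes 1-hour steps, so 1 MW of discharge uses 1 MWh of stored energy.
    Returns the net load the rest of the system must serve each hour.
    """
    remaining_mwh = energy_mwh
    net_load = []
    for load in load_mw:
        needed = max(0, load - target_mw)
        # Limited by the power rating and by whatever energy is left.
        discharge = min(needed, power_mw, remaining_mwh)
        remaining_mwh -= discharge
        net_load.append(load - discharge)
    return net_load


# Hypothetical evening plateaus (MW): four hours and six hours at 1,000 MW.
four_hour_plateau = [900, 1000, 1000, 1000, 1000, 900]
six_hour_plateau = [900, 1000, 1000, 1000, 1000, 1000, 1000, 900]

# Hypothetical 100 MW / 400 MWh (4-hour) battery holding load at 900 MW.
short_event = shave_peak(four_hour_plateau, 100, 400, 900)
long_event = shave_peak(six_hour_plateau, 100, 400, 900)

print(max(short_event))  # 900: the battery covers the whole plateau
print(max(long_event))   # 1000: stored energy runs out after four hours
```

The same battery that erases a four-hour plateau leaves the last two hours of a six-hour plateau untouched, which is why portfolios pair it with other resources.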

Virtual power plants make households dispatchable

Thermostats, water heaters, pool pumps, and EV chargers add up. Once coordinated, they behave like a power plant you can call on. This summer, VPPs turned in solid performance across several territories, cutting peak by hundreds of megawatts at a cost well below building new generation. The recipes are getting crisp: auto-enrollment through utility marketplaces, default-on event participation with bill protection, and simple incentives tied to verifiable performance.

What is new is consistency. Reliability teams want to know that 500 MW will be there on Wednesday at 6:30 pm. That is about measurement and control, not marketing. The better programs now run test events, offer day-ahead pre-commitments, and penalize no-shows the same way they would for a power plant. The emerging best practice is to combine device-level control with household guardrails so comfort is maintained while a few degrees of flexibility are unlocked. Add EVs that defer charging and you have a fleet-scale resource that grows every month as more devices connect.

Flexible compute scheduling is the new interruptible load

Data centers historically bought firm, always-on power and built diesel farms for backup. AI shifts the balance. Training jobs are parallel, chunkable, and often time-flexible. Some inference is real time, but much of it can move by minutes or hours without breaking a product promise.

That opens the door to compute-flex. Think of it as an interruptible tariff for the cloud era. In exchange for a discount or a capacity credit, a data center commits to curtail or shift a slice of load during tight hours. The mechanism can be simple. Operators send day-ahead and hour-ahead critical hour notices. The facility pre-charges on-site batteries or thermal storage, throttles non-urgent jobs, and rides through the peak. If a site has 200 MW IT load, even a 10 percent flex band is 20 MW of dispatchable headroom. Replicate that across a cluster and you get a peaker’s worth of flexibility without pouring concrete.
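The flex-band arithmetic is simple enough to write down. The sketch below reproduces the 200 MW example from the text and then applies the same band to a cluster; the function name and the three-site cluster are hypothetical, chosen only for illustration.

```python
def flex_headroom_mw(it_load_mw, flex_percent):
    """Dispatchable headroom from curtailing flex_percent of a site's IT load."""
    return it_load_mw * flex_percent / 100


# The example from the text: 200 MW of IT load with a 10 percent flex band.
print(flex_headroom_mw(200, 10))  # 20.0

# Hypothetical three-site cluster (MW of IT load), each with the same band.
cluster_it_load_mw = [200, 150, 300]
cluster_headroom_mw = sum(flex_headroom_mw(mw, 10) for mw in cluster_it_load_mw)
print(cluster_headroom_mw)  # 65.0
```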

This is already happening in pilot form. Contracts are being written that tie flexibility to measurable actions like IT power draw and UPS discharge. The key is clarity. What minimum notice must the operator give? How many hours per month can be called? How are penalties handled? And what telemetry demonstrates delivery without exposing sensitive data? The win for developers is faster interconnection and better optics. The win for the grid is fewer diesel starts and fewer emergency notices on hot nights.

Grid-enhancing technologies: more lanes without new roads

When your freeway is jammed, you do not always need to build another. Sometimes you can open the shoulder, add metering lights, and speed traffic with better signs. Grid-enhancing technologies do the same for wires.

- Dynamic line ratings use sensors and weather data so lines can safely carry more when it is windy or cool. Many lines are conservatively rated for worst case. On most days, there is spare capacity.

- Topology optimization uses software to re-route flows by opening and closing switches, relieving congestion on the fly.

- Power flow controllers act like adjustable valves on the network, pushing current toward underused paths.

- Advanced conductors and smart relays increase capacity and let the system tolerate faults without tripping wide areas offline.

Regulators are pressing for these because they deliver capacity in months, not years. The cost is small compared to a new line. The risk is manageable because these are proven components. The main work is institutional. Planning teams have to budget for software and field sensors the way they budget for steel. Markets need to reflect the increased transfer capacity in their models so the benefit shows up in procurement decisions.

Rewriting the math of reliability

Planners use a metric called ELCC to value resources. Equivalent Load Carrying Capability is a mouthful, so here is the kitchen-table version. Imagine a crowded theater that must have enough chairs for the busiest show of the year. ELCC asks: if I add one more resource, how many reserve chairs can I remove and still keep everyone seated during the busiest show? In planning terms, it is the extra load the system can carry at the same level of reliability once the resource is added.

For solar, ELCC is high at first and then falls as you add more, because every panel produces in the same hours and the remaining peak shifts toward the evening. For storage, the answer depends on timing and duration. As batteries pile in, their ELCC declines unless duration increases or dispatch is coordinated across the fleet. That is the shift now underway.

- Short batteries still score well because the biggest problem remains evening peaks that last a few hours.

- As penetration grows, planners will nudge toward longer duration for a portion of the fleet and count coordinated dispatch more strictly. You will see portfolios with a backbone of 4-hour systems, plus 6-to-8 hour units at key nodes.

- Data center flexibility will start to earn ELCC the way industrial interruptible load did in prior decades, but only with tested telemetry and credible penalties for non-delivery.
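The declining-value effect described above for solar can be illustrated with a toy calculation. This is a deterministic peak-reduction proxy, not the probabilistic loss-of-load study planners actually run to compute ELCC. The load values, the solar shape, and the function name are all hypothetical.

```python
def peak_reduction_mw(load_mw, output_mw):
    """Drop in peak net load once a resource's hourly output is subtracted."""
    net_load = [load - out for load, out in zip(load_mw, output_mw)]
    return max(load_mw) - max(net_load)


# Hypothetical afternoon-to-evening load (MW) and solar output as a
# percentage of nameplate for the same six hours.
load_mw = [700, 800, 950, 1000, 980, 900]
solar_shape_pct = [80, 70, 50, 30, 10, 0]

for nameplate_mw in (100, 400, 800):
    output_mw = [nameplate_mw * pct // 100 for pct in solar_shape_pct]
    credit_mw = peak_reduction_mw(load_mw, output_mw)
    credit_pct = 100 * credit_mw / nameplate_mw
    print(nameplate_mw, credit_mw, credit_pct)
# 100 30 30.0
# 400 60 15.0
# 800 100 12.5
```

In this toy case the first 100 MW earns a 30 percent credit, while 800 MW earns 12.5 percent, because the net peak has moved to an hour when solar output is near zero. Storage faces a similar pattern once the remaining peak is longer than the fleet's duration.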

For VPPs, the frontier is standardizing performance. The more a VPP looks like a power plant in the capacity market, the more it will be valued. That means committed megawatts, measurement with one-minute granularity, and a demonstrated ability to perform during the exact system peak rather than during a mild shoulder-season test.

Finally, deliverability matters. Interconnection studies are evolving to recognize resources that only serve peak hours and do not worsen flows during off-peak conditions. Expect more experiments where storage or flexible load connects quickly with peak-only deliverability limits, while the full network upgrade plan advances in parallel.

Markets and tariffs catch up

Compute-flex tariffs with real SLAs

In the past, “demand response for data centers” meant interrupting the cooling system briefly or switching to diesel. That is not enough anymore. The new models treat compute as a schedulable industrial process.

A workable tariff has these parts:

- A defined flex band, for example 5 to 20 percent of IT load, with curtailments limited to specific hours and months.

- Notice periods that align with operator workflows, usually day-ahead with hour-ahead confirmations.

- Performance-based credits paid for verified delivery, with explicit penalties for falling short.

- Telemetry that reports IT power and UPS discharge without exposing customer data.

- An optional on-site battery that participates in grid services outside peak emergencies, creating a revenue stack that reduces PPA costs.
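The tariff parts listed above could be settled along the following lines. This is a sketch under stated assumptions: the class, the field names, the credit and penalty rates, and the baseline method are all hypothetical placeholders, not terms from any actual tariff.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FlexTariff:
    """Hypothetical compute-flex terms; every value here is a placeholder."""
    min_flex_pct: float = 5.0
    max_flex_pct: float = 20.0
    credit_per_mwh: float = 100.0    # paid for verified reduction
    penalty_per_mwh: float = 150.0   # charged on shortfall against commitment


def settle_event(tariff, it_load_mw, committed_mw, baseline_mw, metered_mw):
    """Net payment for one event from hourly baseline and metered IT power."""
    flex_pct = 100 * committed_mw / it_load_mw
    if not tariff.min_flex_pct <= flex_pct <= tariff.max_flex_pct:
        raise ValueError(f"commitment of {flex_pct:.1f}% is outside the flex band")

    delivered_mwh = 0.0
    shortfall_mwh = 0.0
    for baseline, metered in zip(baseline_mw, metered_mw):
        reduction = max(0.0, baseline - metered)
        delivered_mwh += min(reduction, committed_mw)  # no credit above commitment
        shortfall_mwh += max(0.0, committed_mw - reduction)
    return delivered_mwh * tariff.credit_per_mwh - shortfall_mwh * tariff.penalty_per_mwh


# A 200 MW site commits 20 MW for three hours and falls 10 MW short in the last.
payment = settle_event(FlexTariff(), 200, 20, [200, 200, 200], [180, 180, 190])
print(payment)  # 3500.0
```

Only aggregate IT power per hour enters the calculation, which is in the spirit of the telemetry requirement: delivery is verified without any customer-level data.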

For operators, this is about converting interconnection risk into an asset. A facility that can flex reliably is easier to connect because it reduces the chance that one more megawatt tips a corridor into overload during a contingency.

Demand response that pays for being there, not just showing up once

Residential and small business DR is shifting from occasional events to a capacity product. The difference is commitment. Customers are recruited with clear value propositions: a fixed bill credit for being part of the fleet plus variable payments for high-value events. Utilities then manage a portfolio mix so they can confidently bid a number into the market.

Expect more auto-enrollment tied to new devices, with opt-out options and customer protections. The result is quietly significant. Every million smart thermostats can deliver roughly a mid-sized power plant’s worth of peak capacity for a few critical hours each summer, at a fraction of the cost and political friction of new generation.
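A back-of-envelope version of the thermostat claim looks like this. The per-device reduction and the participation rate are assumptions picked for illustration, not measured program results, and the function name is hypothetical.

```python
def fleet_peak_capacity_mw(devices, watts_per_device, participation_pct):
    """Peak reduction from a device fleet, in MW, under stated assumptions."""
    return devices * watts_per_device * participation_pct / 100 / 1_000_000


# Assumed, not measured: 800 W average reduction per thermostat during an
# event, with 60 percent of enrolled devices responding.
print(fleet_peak_capacity_mw(1_000_000, 800, 60))  # 480.0
```

Under those assumptions a million devices yield a few hundred megawatts. Halving either input halves the result, which is why programs lean on test events to pin the numbers down.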

Paying for wires that act like power plants

GETs blur the line between transmission and generation capacity. Markets are beginning to reflect that. Some regions are setting aside budgets for “no-regrets” upgrades such as dynamic line ratings and topology tools. Others are experimenting with treating large batteries as transmission assets in specific locations, with cost recovery through wires rates. The test for all of these is operational: do they reduce curtailment, increase transfer capacity during peaks, and improve reliability metrics? If yes, they should scale.

How builders can adapt now

- Data center developers: Design for flexibility. Specify UPS systems that can discharge to the grid with appropriate protection, aim for a 10 to 20 percent curtailable band in your compute plan, and include thermal storage on the cooling loop. Work with utilities to define clear SLAs so your flex is counted in resource adequacy. You will move faster in the queue.

- AI labs and cloud teams: Build schedulers that translate grid signals into job priorities. Make time-shifting a default for non-latency-sensitive training. Expose a simple interface so facilities can respond to day-ahead and hour-ahead calls without human drama.

- Utilities and ISOs: Procure capacity in months, not years. Stand up standard offers for 2–4 hour storage with peak-only deliverability. Pre-approve a package of GETs on the top 50 constrained lines. Publish a consistent telemetry spec for VPPs and compute-flex so developers can build once and deploy everywhere.

- Regulators and policymakers: Set targets for DR enrollment and GET deployments the way you set renewable targets. Allow utilities to rate-base grid-boosting storage and software where it demonstrably improves transfer capacity. Require that interconnection studies include a peak-only service track for storage and flexible load.

- Investors and OEMs: Package these solutions. Finance storage-as-transmission with clear performance contracts. Offer VPP-in-a-box for mid-size utilities. Bundle compute-flex analytics with the UPS and switchgear sale.
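The scheduler idea in the item for AI labs and cloud teams can be sketched in a few lines. This assumes a grid signal has already been translated into a curtailment target in MW. The `Job` fields, the job names, and the pause-lowest-priority-first rule are hypothetical, and a production scheduler would also handle checkpointing, partial throttling, and resumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    name: str
    power_mw: float
    deferrable: bool   # can this job be paused or shifted by hours?
    priority: int      # higher number = more important to keep running


def plan_curtailment(jobs, target_mw):
    """Pause the least important deferrable jobs until target_mw is shed."""
    paused, shed_mw = [], 0.0
    candidates = sorted((j for j in jobs if j.deferrable), key=lambda j: j.priority)
    for job in candidates:
        if shed_mw >= target_mw:
            break
        paused.append(job.name)
        shed_mw += job.power_mw
    return paused, shed_mw


# Hypothetical job mix at one site.
jobs = [
    Job("inference-api", 60, deferrable=False, priority=10),
    Job("train-a", 50, deferrable=True, priority=5),
    Job("train-b", 30, deferrable=True, priority=3),
    Job("batch-eval", 12, deferrable=True, priority=1),
]

paused, shed_mw = plan_curtailment(jobs, target_mw=20)
print(paused, shed_mw)  # ['batch-eval', 'train-b'] 42.0
```

Because whole jobs are paused, the plan overshoots the 20 MW target. The latency-sensitive inference job is never touched, which is the separation the text calls for.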

What could go wrong, and how to avoid it

- Diesel creep: If flex tariffs accidentally encourage sites to run diesel instead of curtailing, we lose on emissions and local air quality. Fix it by requiring minimum on-site battery energy for flex participation and by paying for verified load reduction rather than backup generation hours.

- Overpromising DR: Calling a press release a resource is how you get blackouts. Require test events, performance history, and financial penalties for non-delivery. Count only what performs during system peaks.

- Cyber and privacy concerns: VPP telemetry must be secure and minimal. Use device aggregators with strong segmentation, third-party monitoring, and clear data scopes.

- Equity blind spots: Ensure low-income customers benefit from DR and VPP programs with higher incentives, free devices, and bill protection. Otherwise, the capacity we build rests on unfair burdens.

- Thermal constraints on lines: Dynamic line ratings help, but in extreme heat with low wind they may not add capacity. Pair GETs with targeted reconductoring and substation upgrades so the system does not hit thermal walls on the hottest days.

The pace car for the next three years

Here is a pragmatic sequence that many regions are already following:

- Next 12 months: Procure thousands of megawatts of 2–4 hour batteries with peak-only deliverability. Expand DR enrollment through auto-enroll programs tied to new thermostats and EV chargers. Deploy dynamic line ratings on the top congested corridors. Launch at least one compute-flex tariff per major data center region.

- Months 12–24: Introduce 6–8 hour storage at key nodes where ELCC for 4-hour systems declines. Scale topology optimization and power flow controllers. Standardize VPP telemetry and bring residential DR into the capacity market with performance guarantees. Convert successful compute-flex pilots into standard service offerings.

- Months 24–36: Fold these gains into long-term planning. Lock in big transmission projects with broad regional support, but do not pause the near-term capacity program while waiting. Use the cumulative data from batteries, VPPs, and GETs to refine ELCC and portfolio design. By then, the peak will be meaningfully flatter, and interconnection will be less of a cliff.

This is not a fantasy of software swallowing physics. It is a short list of proven tactics that line up with how the grid actually works. The hard part is coordination, not invention.

Clear takeaways and what to watch next

- Heat and AI are reshaping peaks. Expect longer evening plateaus and higher baseload in growth corridors. Plan for capacity, not just energy.

- Two-to-four hour batteries, VPPs, compute-flex, and GETs are the near-term levers. They deliver in months and buy time for big wires.

- ELCC for storage is shifting. A backbone of 4-hour systems works today, but portfolios will add longer duration and coordination to sustain reliability value.

- Demand response must be counted like a power plant. Test events, penalties, and telemetry are non-negotiable if it is to stand in the resource stack.

- Compute-flex tariffs will mature quickly. Clear SLAs and performance pay can turn data centers from stressors into stabilizers during critical hours.

- GETs are the cheapest capacity you can buy this year. Prioritize dynamic line ratings, topology optimization, and power flow control on the most congested lines.

Watch next:

- Which regions codify peak-only deliverability for storage and flexible load in their interconnection rules.

- The first large-scale compute-flex portfolios and how they perform during multi-day heat waves.

- ELCC updates that shift procurement toward a mixed-duration storage fleet.

- DR enrollment rates crossing critical thresholds, especially through auto-enroll device programs.

- Regulatory dockets that treat batteries and software as transmission assets with cost recovery.

The grid is not waiting for permission. It is already moving to capacity-first. If we do the next 36 months well, the big projects that follow will connect to a system that is steadier, smarter, and ready for the next plateau.