Chrome makes Gemini the new default runtime for web agents

Google is building Gemini directly into Chrome, adding AI Mode in the address bar and a page‑aware assistant that can read, reason, and soon act across tabs. Here is what changes for search, ads, Workspace admins, and the open web.

The browser just became an agent runtime

A small button in the top right of Chrome looks unassuming. Click it, and the browser starts to behave less like a document viewer and more like an assistant that can read, reason, and soon act across the pages you have open. With Google’s September rollout of Gemini in Chrome, the browser is on its way to becoming the default runtime for AI agents. Google is positioning this as a reimagining of Chrome, not a bolt‑on sidebar. The shift is practical and strategic, and it goes to the heart of how the web is searched, monetized, and governed.

The company outlined the vision on September 18, 2025, explaining that Gemini in Chrome can answer questions about the current page, reason across multiple tabs, and will gradually gain the ability to take actions on pages. It also previewed AI Mode in the omnibox, a conversational layer in the address bar for complex queries and follow‑ups. These changes are rolling out first to U.S. desktop users in English, with mobile and broader locales to follow. See Google’s summary in Chrome reimagined with AI.

What is changing inside Chrome

- Agentic browsing inside the browser: Gemini can parse the page you are on and draw from the context of several open tabs to summarize, compare, and explain. The assistive role is evolving from passive Q&A to task completion. In coming releases, Google says Gemini will be able to perform page actions like navigating to the right section of a long document, filling forms, and completing workflows you approve.

- AI Mode in the address bar: The omnibox becomes a conversational entry point. Instead of short keyword strings, you can type a full intent with constraints and follow up in place. Chrome will blend a search result with a guided conversation, and it can propose questions related to the page you are viewing. This begins to collapse the gap between search, navigation, and assistance.

- Deep Workspace tie‑ins: Gemini in Chrome can draw on Google apps context you already have access to, like Calendar and Drive, so tasks like cross‑referencing documents or scheduling meetings stay inside the browsing surface. For enterprises, Google is wiring the feature into the Workspace service model with admin toggles, reporting, and policy alignment. Details for admins are in Workspace admin controls for Gemini.

- Enterprise controls and compliance posture: Admins can enable or disable Gemini in Chrome at the domain, OU, or group level, separate from the broader Gemini app setting. Usage shows up in Admin Console reports. Google points to existing Workspace guardrails like DLP, IRM, trust rules, and client‑side encryption to scope what Gemini can retrieve, reinforcing that the agent only sees what the signed‑in user is allowed to see.

- Safety and fraud protections: Google is also tapping smaller on‑device models to help detect scammy overlays and scareware tactics inside web sessions and to trigger assisted password resets on supported sites. These are early signals of a runtime that is aware of session risk and can intervene.

Why this matters now

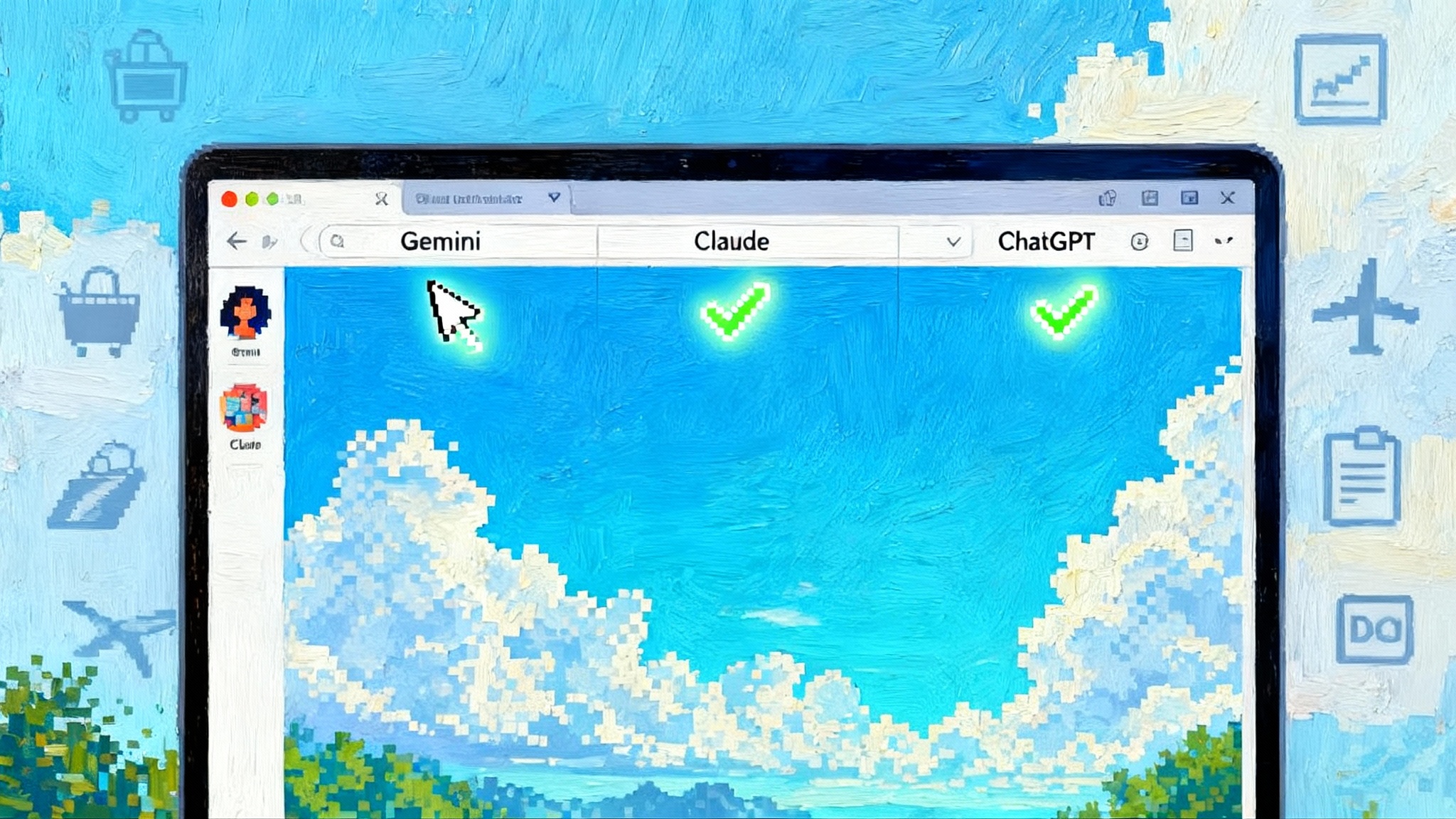

Distribution shifts from standalone AI apps to the browser

Browsers remain the world’s most widely distributed runtime. When agent capabilities are integrated at the browser layer, the default path for everyday automation is not a separate chatbot tab but the surface where work already happens. That changes who owns user attention and which toolchains developers choose. With agents in the browser, the first entry point becomes the omnibox or a page‑aware side panel. See how this echoes platform shifts in ChatGPT agent goes cloud‑scale.

Search and ad economics will bend toward conversations and actions

Two levers move here. First, conversational search inside the omnibox starts earlier and stays longer, which can compress click‑out traffic to traditional result pages. Second, when the agent can execute an intent directly on a page, the funnel from query to conversion shortens. Ads and affiliate economics will adapt. Expect more paid placements designed for agent consumption, like structured offers and machine‑readable promotions, and pressure for brand safety and attribution standards that prove whether an agent influenced a purchase.

A coming wave of page‑acting agents

The last generation of browser assistants summarized pages. The next generation will act. Booking, filling, submitting, reconciling, and cross‑checking will move into the flow of browsing, with a supervised handover model. The hard parts are not only model accuracy but also user agency. The system must request permission to act, explain what it will do, and show visible traces of actions taken. Expect a market in agent‑ready page components, similar to how checkout buttons and autofill formats spread through the web.

What is at stake

Permissioning that users can understand

Agent actions need a clear, granular permission model. Reading a page is different from clicking a button. Clicking a button is different from filling a credit card field. If Chrome becomes the runtime for actions, permissions will resemble mobile platforms: explicit, revocable scopes with defaults that lean safe and with visible affordances that show when an agent is acting on your behalf.

Key UX ideas that should become standard:

- Ask to act: agents propose a plan, highlight targeted elements, and require one‑tap approval to proceed.

- Just‑in‑time scopes: approvals expire and scopes are narrow, such as “fill this form once” or “read this tab for five minutes.”

- Session status: persistent indicators when an agent is in control of a page or is watching content across tabs.

- One place to revoke: a central panel that lets you see active scopes and kill them instantly.
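To make the ideas above concrete, here is a minimal sketch of just‑in‑time scopes with a single revoke point. It is not Chrome's permission model: the `ScopeGrant` and `PermissionPanel` names, the action strings, and the deny‑by‑default behavior are all assumptions chosen for illustration.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class ScopeGrant:
    """One narrow, expiring approval, e.g. 'fill this form once'."""
    action: str         # hypothetical action name such as "read" or "fill_form"
    origin: str         # the site the approval applies to
    expires_at: float   # clock value after which the grant is dead
    uses_left: int = 1  # single use unless the user approves more


@dataclass
class PermissionPanel:
    """Hypothetical central panel: issue, check, and revoke agent scopes."""
    clock: Callable[[], float] = time.monotonic
    grants: List[ScopeGrant] = field(default_factory=list)

    def grant(self, action: str, origin: str, ttl_seconds: float, uses: int = 1) -> ScopeGrant:
        g = ScopeGrant(action, origin, self.clock() + ttl_seconds, uses)
        self.grants.append(g)
        return g

    def active(self) -> List[ScopeGrant]:
        """Grants that are neither expired nor used up (what a status UI would list)."""
        now = self.clock()
        return [g for g in self.grants if g.uses_left > 0 and g.expires_at > now]

    def consume(self, action: str, origin: str) -> bool:
        """Spend one use of a matching live grant; deny when none exists."""
        for g in self.active():
            if g.action == action and g.origin == origin:
                g.uses_left -= 1
                return True
        return False

    def revoke_all(self) -> None:
        """The 'kill switch': drop every scope at once."""
        self.grants.clear()
```

In this sketch an agent would call `consume("fill_form", origin)` immediately before acting and stop if it returns `False`, so an expired or revoked approval fails closed rather than open.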

Telemetry with boundaries

To make agents safe and helpful, the runtime will collect more signals about page structure, input fields, and user intent. That telemetry must be disclosed, minimized, and partitioned. Enterprises will demand separation between employee browsing behavior and agent operation logs. Security teams should study patterns in securing agentic AI to set guardrails early.

Runtime governance for the open web

Agentic browsing heightens risks that already exist. Instruction injection in page content can coerce an agent. Cross‑site flows can silently pull sensitive data between tabs. Extensions can collide with agent actions in surprising ways. Governance will require a blend of policy, enforcement, and technical standards. Expect rules about agent behavior on sensitive domains like finance and healthcare, default agent disabling in high‑risk contexts, and standardized headers for sites to opt out or constrain agent actions.

How Chrome compares to Edge and Brave

- Microsoft Edge: Copilot Mode reframes Edge as an AI browser with a side panel that can comprehend what you are doing across tabs, compare information, and begin to automate steps like bookings when you opt in. Microsoft leans on enterprise posture through Microsoft 365 integration and is signaling future access to browser context and credentials with explicit permission gates.

- Brave: Leo positions privacy as the differentiator. Leo is built into the browser with an opt‑in first run, proxies requests to reduce IP correlation, and does not retain conversations for training. Brave is also researching attack surfaces unique to agentic browsing, like instruction injection via third‑party content, and has been vocal about mitigations. Leo today focuses on summarizing and answering, but Brave is experimenting with page‑acting capabilities with a conservative default posture.

What is novel in Google’s approach is distribution scale and alignment with Workspace controls. Chrome already sits at the center of many workdays. If Gemini is switched on by default for eligible users, a large segment of the web gets agent capabilities without installing anything.

Developer implications: building for tool‑using web agents

Agents are only as capable as the actions they can safely take. Chrome’s direction points to a clear developer agenda:

- Make pages agent friendly: Structure your DOM and label fields clearly. Prefer semantic HTML, microdata, and ARIA roles that help an agent identify intent without brittle heuristics. Add affordances for agents such as predictable form markup, machine‑readable success states, and idempotent actions to guard against double submits.

- Design short, auditable workflows: Break long, multi‑step tasks into smaller, confirmable steps. Provide URL‑addressable states for each step and expose a way to roll back. Agents benefit from deterministic flows with clear checkpoints.

- Adopt action tokens and capability URLs: Move away from reusable session cookies for critical actions. Use short‑lived, scoped tokens that encode what is allowed, for how long, and on which resource. Agents can request a token, explain its scope to the user, and redeem it once.

- Instrument for supervision: Build an action log that shows what was done, when, and by which agent acting for which user. Keep the log user‑visible. Enterprises will require this for audits.

- Use emerging browser AI APIs: Chrome is incubating built‑in AI primitives like prompt, summarization, language detection, and translation APIs for both web apps and extensions. Treat these as accelerators for agent‑aware UI rather than replacements for domain logic.

- Prepare your extension surface: Many agents will arrive as extensions first. Audit your extension permissions. Expect a new class of manifest scopes for agent actions and plan for co‑existence with other extensions that might target the same elements.

- Test against agent threats: Add indirect prompt injection tests to CI. Validate that hidden instructions in templates, ads, or user‑generated content cannot cause harmful actions. Sanitize model outputs before executing a click or form fill, and require confirmation if the agent crosses origin boundaries. For reference architectures, see NVIDIA NIM blueprints.
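As one illustration of the action‑token idea from the list above, the sketch below issues a short‑lived token bound to a single action and resource, then accepts it at most once. It uses only the Python standard library. The claim names (`act`, `res`, `exp`, `nonce`), the token layout, the hard‑coded secret, and the in‑memory redemption set are assumptions for the example, not a published format or anything Chrome defines.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional, Set

SECRET = b"demo-only-secret"  # assumption: a real service would load this from a key store
_redeemed: Set[str] = set()   # in-memory single-use tracking, for illustration only


def _sign(payload: bytes) -> bytes:
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest().encode()


def issue_token(action: str, resource: str, ttl_seconds: float, now: Optional[float] = None) -> str:
    """Encode what is allowed, on which resource, and until when."""
    issued = time.time() if now is None else now
    claims = {"act": action, "res": resource, "exp": issued + ttl_seconds,
              "nonce": secrets.token_urlsafe(16)}
    payload = json.dumps(claims, sort_keys=True).encode()
    return base64.urlsafe_b64encode(payload).decode() + "." + _sign(payload).decode()


def redeem_token(token: str, action: str, resource: str, now: Optional[float] = None) -> bool:
    """Accept the token once, and only for the exact action and resource it names."""
    current = time.time() if now is None else now
    try:
        body, signature = token.split(".")
        payload = base64.urlsafe_b64decode(body.encode())
    except ValueError:  # wrong shape or invalid base64
        return False
    if not hmac.compare_digest(signature.encode(), _sign(payload)):
        return False    # tampered or forged
    claims = json.loads(payload)
    if claims["exp"] <= current:
        return False    # expired
    if claims["act"] != action or claims["res"] != resource:
        return False    # outside the approved scope
    if claims["nonce"] in _redeemed:
        return False    # already used
    _redeemed.add(claims["nonce"])
    return True
```

Because the scope travels inside the signed payload, the same claims could be shown to the user before approval, and a replayed or repurposed token is rejected without consulting session state.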

The standards and regulatory wave that follows

Standards bodies and regulators are about to step into runtime behavior. The W3C is advancing bidirectional browser automation protocols that let controllers subscribe to browser events and act without resorting to brittle script injection. Expect that to underpin both testing and live agent operation in a safer, more observable way. Privacy groups will push for specifications that limit persistent cross‑tab observation, define visible agent state, and standardize consent prompts.

Policy pressure will come from three directions:

- Competition: Bundling an agent with a dominant browser will invite scrutiny in the U.S. and EU. Authorities will look at defaults, self‑preferencing, and whether agent actions steer users toward the platform’s services and ads.

- Privacy and security: Telemetry for agent operation, storage of action logs, and cross‑service data combination will be in scope. Expect rules that require clear consent for page actions, data minimization by default, and strict retention limits on agent behavior data.

- Consumer protection: When agents can buy, book, and sign, misfires have real costs. Expect requirements for human‑in‑the‑loop confirmation on high‑risk actions, standardized receipts for agent actions, and transparent dispute paths when an agent goes wrong.

For enterprises, the near‑term burden is governance. Map which classes of sites and workflows are eligible for agent help and which are off limits. Align DLP, IRM, and client‑side encryption policies with the reality that an agent only sees what the user sees. Use admin controls to stage rollout by group and to monitor adoption and impact.

The bottom line

The move to put Gemini inside Chrome is not just a feature drop. It is the moment a browser begins to act on the web on your behalf. With AI Mode in the omnibox and a page‑aware assistant that graduates from summarizer to actor, Chrome becomes an agent runtime. The payoff is obvious in productivity. The risks are just as clear in safety, privacy, and competition. The winners will be teams that design for supervision and consent, structure pages for safe automation, and treat the agent as a power tool with clear guards rather than a black box.

If September 2025 is the inflection point, the next year will decide the norms. Expect stronger admin controls, stricter permission gates, and early standards that define how agents see and act on the open web. And expect users to judge each browser not only by speed and battery life, but by how safely and helpfully its built‑in agent gets real work done.