From EDR to AIDR: Securing Agentic AI after CrowdStrike and Pangea

CrowdStrike’s plan to acquire Pangea puts AI Detection and Response on the map. Here is why agentic systems need their own security stack, how to stop agent hijacking and shadow AI, and what builders and CISOs should operationalize next.

The September signal: AIDR arrives

On September 16, 2025, CrowdStrike said it will acquire Pangea and ship what it calls a complete AI Detection and Response stack, or AIDR, across the Falcon platform. In CrowdStrike’s telling, prompts are the new endpoints and agent workflows are the new processes to monitor and control. That framing matters because it suggests a new control plane that spans the prompt layer, agent decisions, tools, identities, data, and the infrastructure they ride on. It is also a clear market signal that the center of gravity in security is moving into the agentic era. See the official note, CrowdStrike to acquire Pangea.

Call the moment what it is. EDR changed how we defend laptops and servers by capturing deep telemetry, applying fast detections, and orchestrating response. Agentic AI changes the substrate. Now we need visibility into prompts, plans, tools, and external resources that agents touch. We need policy and governance over who can use which model, with what data, for which task. And we need real-time defenses that blunt prompt injection and agent hijacking without drowning developers and analysts in false positives.

Why agents force a new category

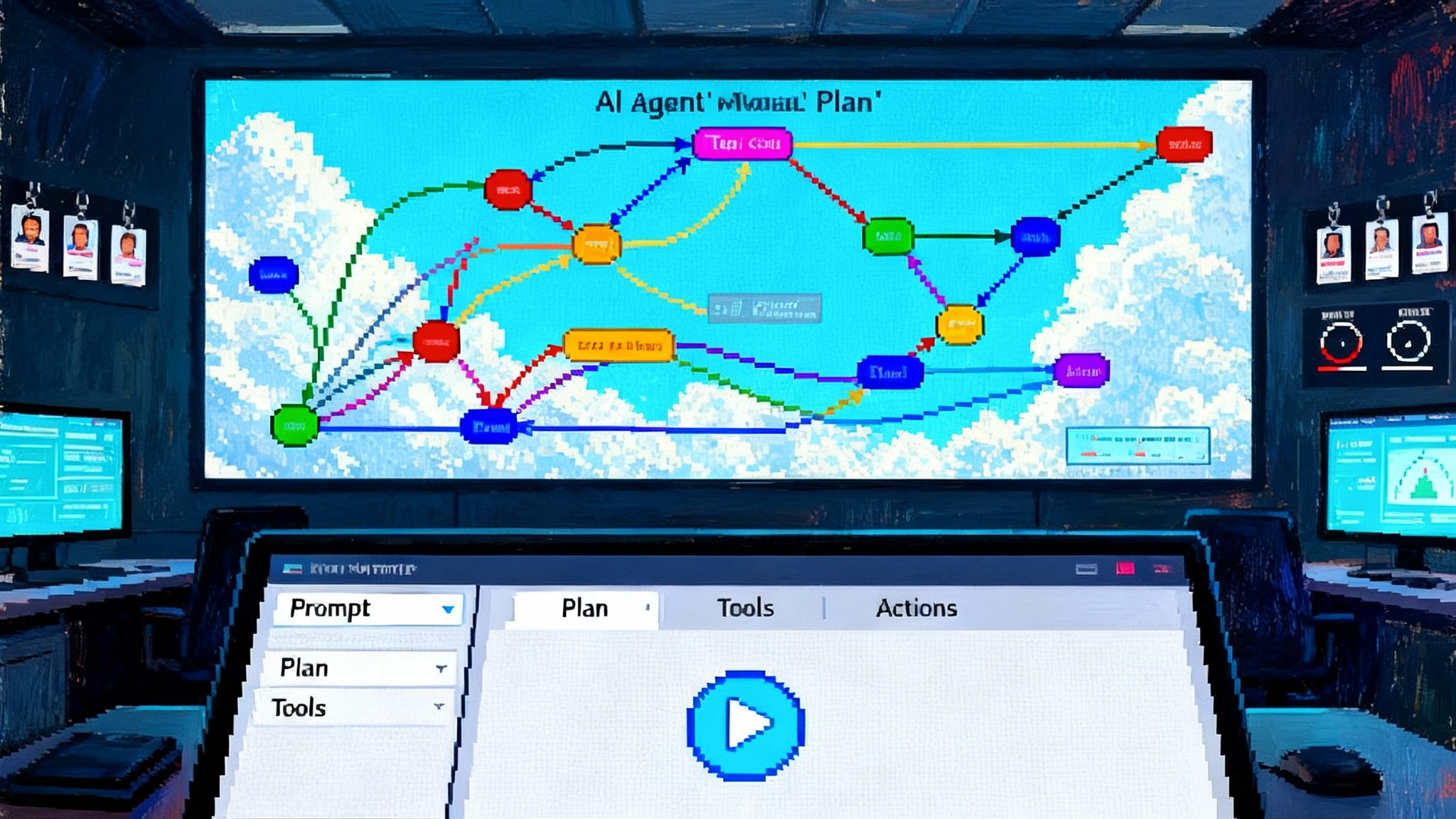

Agentic systems collapse application logic, user context, and third party content into a single dynamic loop. That loop looks like this:

- The user or system issues a goal.

- The agent plans steps, often chaining tools like code execution, search, email, spreadsheets, cloud APIs.

- The agent reads or writes to untrusted surfaces such as websites, documents, chats, or inboxes.

- New context flows back into the loop, altering plans and actions.

Each loop introduces three new classes of risk that classic controls only partially cover:

-

Indirect prompt injection and agent hijacking. Untrusted content can carry hidden instructions that the agent interprets as higher priority than the user’s intent. If the agent wields powerful tools or access tokens, a single compromised page or file can pivot into data exfiltration, account changes, or remote code execution.

-

Shadow AI. Teams quietly wire up SaaS agents, plugins, or local copilots that nobody has vetted. Without discovery, policy, and data egress controls, an enterprise cannot enforce acceptable use, confidentiality zones, or jurisdictional rules.

-

Cross identity and tool sprawl. Agents are identities. They also impersonate users through delegated scopes and API keys. Without agent identity lifecycle, least privilege, and strong audit, there is no reliable way to answer who did what, with which permissions, and why.

These realities make AIDR its own category. The detection surface is not just processes and ports. It is prompts, plans, tool calls, retrieved passages, external links, function signatures, and the side effects they produce. Standards and patterns are emerging fast, including the MCP standard for agents and enterprise design kits like NVIDIA’s NIM blueprints.

Government and academia are converging

The U.S. AI Safety Institute at NIST spent early 2025 probing agent hijacking as a concrete security problem rather than a philosophical one. Its technical staff documented how evaluation frameworks must adapt to multi attempt attacks, and how realistic hijacking scenarios can escalate into data exfiltration, remote code execution, and automated phishing. Their analysis showed that success rates change sharply when attackers can retry and tailor attacks to the target agent. See NIST’s guidance on agent hijacking evaluations for the operational lessons.

On the academic side, the WASP benchmark, published in April 2025, narrowed its focus to web agents under realistic constraints. The authors reported that agents start following adversarial instructions between roughly 16 and 86 percent of the time, but the attacker’s end to end goals complete between 0 and 17 percent of the time depending on the system and defenses. That gap is cold comfort. It means hijacks often begin, and as agents get more capable and tool rich, the final compromise rate can rise if we do not build guardrails now.

Together, these signals move the conversation from model safety platitudes to measurable, operational agent security.

From EDR to AIDR: what changes in practice

If EDR was built around processes, parents, hashes, sockets, and memory, AIDR pivots to:

- Prompts and plans. First class objects that need logging, lineage, and policy.

- Tool calls and capabilities. Each function is a potential blast radius with its own allowlist, rate limits, and runtime checks.

- Context objects. Retrieved snippets, files, and pages are untrusted until proven otherwise. Treat every context hop as tainted input.

- Agent identity. Agents need enrollment, attestation, credentials, and revocation just like people.

- Human in the loop. Many responses should require approvals or staged autonomy when risk exceeds thresholds.

AIDR also rethinks detection and response logic. Instead of watching for a suspicious binary touching LSASS, you are watching for a finance agent that suddenly pulls HR files after reading a web forum post with hidden instructions. Instead of isolating a host, you may pause an agent, revoke a token, null route a tool, or quarantine a conversation thread.

The core AIDR stack

Here is a practical decomposition that maps to the new attack surface:

1) Telemetry and observability

- Full prompt and response capture with redaction and role labels.

- Plan graphs and function call traces with arguments and return values.

- Content provenance for retrieved context including URLs, document IDs, and checksums.

- Agent identity and impersonation path. Who is the principal, what scopes were used, what approvals were granted.

2) Policy and governance

- Model and tool allowlists per business unit with purpose binding.

- Data zone rules that prevent sensitive classes from flowing to untrusted models or tools.

- Autonomy levels. Define when an agent can act unilaterally versus when it must request human approval.

- Just in time elevation and time bound scopes for high risk actions.

3) Real time defenses

- Prompt and context scanning for injection markers, instruction hierarchy violations, and data exfiltration patterns.

- Domain level isolation for browsing and retrieval, with canary content to catch exfiltration and untrusted instructions.

- Function gatekeepers that validate arguments against schemas, business rules, and side effect guards before the tool fires.

- Egress controls for messages, files, and API calls, including DLP checks and destination allowlists.

4) Response automation

- Playbooks to pause or kill agents, revoke or rotate tokens, detach risky tools, roll back actions, and notify owners.

- Forensics to reconstruct the decision chain that led to a bad action using prompt lineage and function logs.

5) Evaluation harness

- Pre deployment gauntlet with red team attack libraries and repeatable scenarios.

- Continuous testing that replays adversarial prompts on every model or prompt update and checks regression budgets.

What builders should operationalize next

If you are shipping agents or agentic features, make these your next two sprints:

- Instrumentation by default. Log prompts, plans, tool calls, and external context with stable IDs. Redact secrets in memory and at the edge.

- Explicit instruction hierarchy. Hard code a small set of unoverrideable rules that always supersede external content, then test that hierarchy under attack.

- Tool contract enforcement. Move tool argument validation out of the agent and into a gatekeeper that runs deterministic checks. Require allowlists for destinations like domains, repositories, and buckets.

- Response hooks. Register kill switches and quarantine actions on your agent framework so a SOC can pause or restrict autonomy instantly.

- Local sandboxing for risky tools. Anything that executes code, browses the web, or touches files should run in locked down sandboxes with short lived credentials.

- Canary prompts and documents. Seed decoy instructions and fake identifiers to detect exfiltration and hijack attempts early.

- Multi attempt threat model. Assume adversaries will retry. Your detectors should analyze sequences, not one shot events.

A runtime guardrail blueprint

Use this layered approach to reduce both hijack probability and blast radius:

- Input layer: scan retrieved pages, files, and messages for adversarial patterns, jailbreak lexicons, and suspicious markup. Tag tainted content and propagate those tags.

- Planning layer: impose budget and scope limits per plan step, constrain tool sequences, and require approvals when plans cross data zones.

- Tool layer: enforce strict schemas, domain allowlists, cross domain copy rules, and rate limits. For email or messaging tools, rewrite links to safe redirectors and inspect attachments.

- Output layer: run DLP, PII filters, and policy checks on every outbound artifact. Require secondary approval for high impact actions like wire instructions or permission changes.

- Identity layer: issue agents their own credentials, rotate often, scope narrowly. No shared human tokens.

The CISO RFP checklist for AIDR

If you are evaluating vendors or asking internal teams to harden agentic systems, add these items to your RFPs and architecture reviews:

-

Prompt and plan observability

- Can the platform capture prompts, tool calls, and plans with lineage while honoring data minimization and retention rules?

- Can we search and correlate across agents, users, and applications in near real time?

-

Injection and hijack defenses

- What detectors exist for indirect prompt injection, tool abuse, and instruction hierarchy breaks?

- Are detections multi attempt aware with tunable risk thresholds and ensemble methods?

-

Agent identity and permissions

- Do agents have first class identities with enrollment, attestation, least privilege scopes, and revocation?

- Are approvals and autonomy levels configurable per agent and task?

-

Tool governance

- Can we allowlist functions and destinations, validate arguments, and enforce side effect guards at runtime?

- Is there a catalog of approved tools with owners and security posture?

-

Data and egress controls

- Can we segment data zones and prevent sensitive classes from crossing into untrusted models or tools?

- Are outbound messages, files, and API calls inspected and controlled with clear policy outcomes?

-

Evaluation harness and reporting

- Does the platform support standardized attack libraries and benchmarks in CI, including replay on every prompt or model change?

- Can we generate attestation reports that summarize attack coverage, agent performance, and regression histories?

-

Response automation and IR integration

- Are there one click actions to pause agents, rotate keys, detach tools, and roll back actions?

- Does the system integrate with SIEM, SOAR, ticketing, and identity platforms with clear mappings to owners?

-

Compliance and audit

- Can we export immutable logs for audit, capture consent and approvals, and enforce retention by policy?

- Are privacy safeguards configurable per jurisdiction and business unit?

The shadow AI problem is solvable

Shadow AI thrives on convenience. People copy prompts into consumer chatbots, connect personal email, or install unvetted extensions that promise automation. Stop this in three moves:

- Discover usage. Pull browser extensions, SASL logs, SaaS OAuth grants, and outbound DNS to identify bots, plugins, and agents in use.

- Provide good defaults. Offer a curated model catalog, pre approved agents, and safe tool bundles that meet real team needs.

- Enforce guardrails. Block known risky endpoints, require conditional access for agent consoles, and gate sensitive exports through review queues.

When people have safe options that are faster than their shadow tools, usage shifts voluntarily. Back that up with simple policy and visible metrics.

Where consolidation is headed

The next year of consolidation will be about collapsing overlapping controls into an agent aware platform rather than stapling new boxes together. Expect these moves:

- AIDR meets EDR and identity. Endpoint, identity, and agent telemetry merge so that a single timeline shows what the laptop did, what the user did, and what the agent did with the user’s scopes.

- Tool governance becomes first class. What used to be hidden in app code becomes a policy layer where security can define function contracts across apps.

- Model and data catalogs plug into runtime. Static inventories evolve into dynamic routing and enforcement. The catalog decides which model an agent may call for a given task and data zone, and the runtime enforces it.

- Evaluation shifts left. Red team libraries and agent benchmarks run in CI on every change to prompts, tools, and model versions. Vendors ship with prebuilt attack suites and evidence of resilience, not just demo videos.

As the browser becomes a common agent runtime, expect tighter policy controls around browsing and retrieval. See how that is unfolding with Gemini in Chrome runtime.

What good looks like in 12 months

- Every agent has an owner, a purpose statement, a risk tier, and defined autonomy levels.

- Prompts, plans, tool calls, and outcomes are observable and searchable within seconds.

- Injection detectors and tool gatekeepers stop most hijacks at the context or function boundary, while approvals catch high risk actions before they commit.

- The SOC can pause an agent, rotate credentials, and roll back side effects with one click from the same console.

- Benchmarks like WASP and internal red team suites run weekly and gate releases. Regression budgets are tracked like SLOs.

Bottom line

Agentic systems will not wait for our playbooks to catch up. The shift from EDR to AIDR is already underway because the substrate of software changed. The CrowdStrike and Pangea announcement is a staging point, but the real work is operational. Log what matters, govern what matters, and put guardrails where it matters most. Do that and your developers can ship faster, your analysts can trust what they see, and your agents can help without becoming the newest breach vector.