Anthropic’s opt-in shift: five-year retention and your plan

Anthropic now lets consumer users opt in to training Claude on their chats, with data kept for up to five years. See what changed, how it compares to OpenAI and Google, and a practical plan for builders.

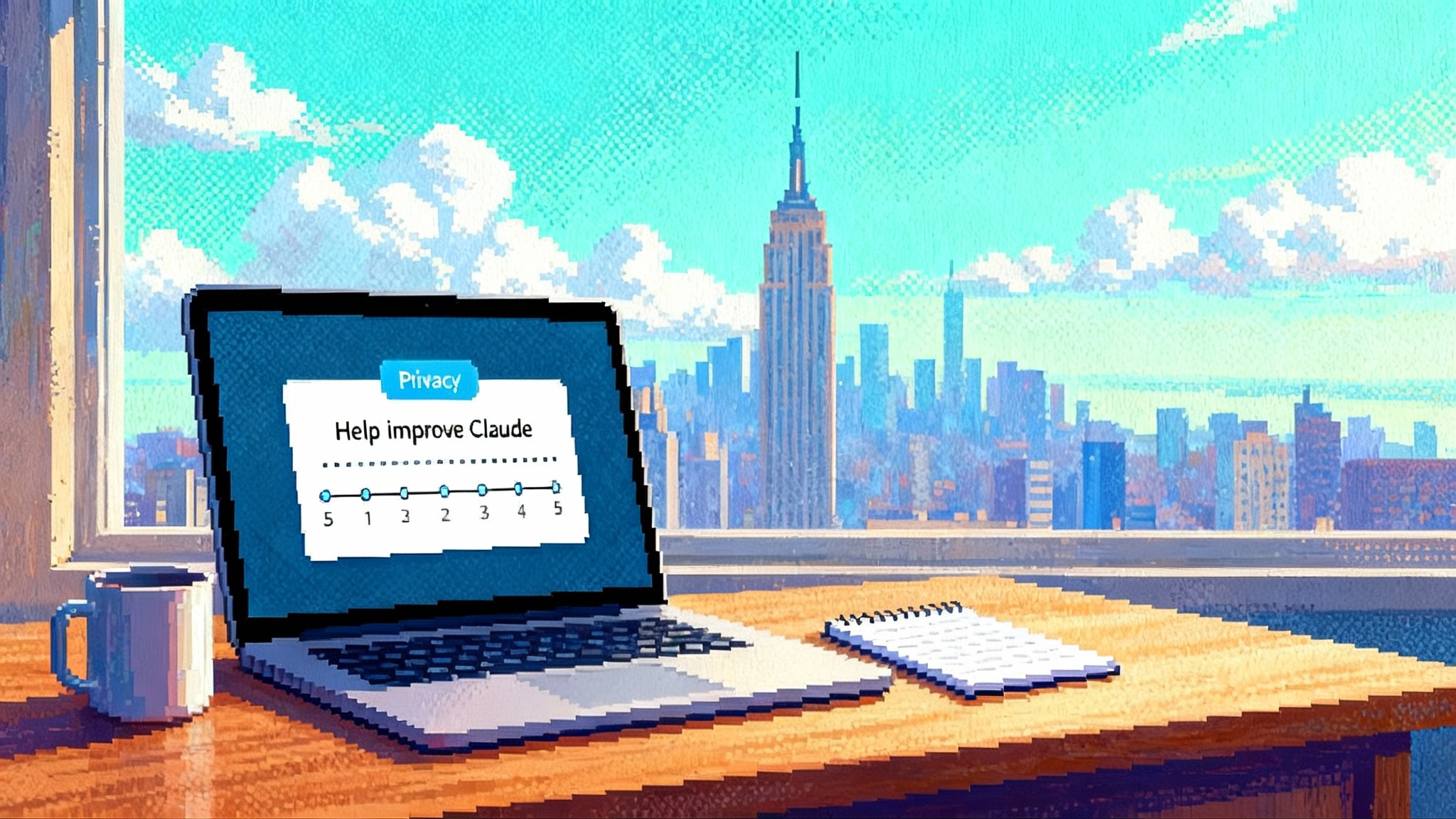

What changed in late September

Anthropic has begun presenting consumer users with a clear choice: allow Claude to learn from your chats and coding sessions, or keep them out of training. If you allow it, Anthropic will retain the relevant data for up to five years and use it to improve future Claude models and safety systems. The company’s privacy center summarizes the change in its recent privacy update and clarifies that it applies to consumer products such as Claude Free, Pro, and Max, including Claude Code when used from those accounts. It does not extend to enterprise or API deployments.

In product, the shift shows up as an in-app prompt for existing users and as part of onboarding for new users. Users can also make the choice later in settings. Two operational details matter for your mental model:

- The choice only affects new or resumed chats and coding sessions after you set it.

- If you opt in, retention for those chats extends to up to five years. If you do not, the standard 30-day backend deletion timeline continues to apply, subject to safety and legal exceptions.
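As a mental model, the two rules above can be written as a small function. This is a toy sketch of the policy as described in this article, not Anthropic's implementation: the `Chat` type, the `retention_for` name, and the treatment of five years as 5 × 365 days are all assumptions, and the safety and legal exceptions are not modeled.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Figures from the text above; "five years" is approximated as 5 * 365 days.
OPT_IN_RETENTION = timedelta(days=5 * 365)
DEFAULT_RETENTION = timedelta(days=30)


@dataclass
class Chat:
    last_active: datetime  # when the chat was started or last resumed


def retention_for(chat: Chat, opted_in_at: Optional[datetime]) -> timedelta:
    """Return the retention window that applies to a chat.

    The opt-in only covers chats started or resumed at or after the
    moment the choice was set; older, untouched chats keep the default.
    """
    if opted_in_at is not None and chat.last_active >= opted_in_at:
        return OPT_IN_RETENTION
    return DEFAULT_RETENTION
```

A chat that was last active before the opt-in and never resumed stays on the 30-day timeline, which is the detail most likely to be misremembered.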

Anthropic framed the change as a way to deliver more capable models while strengthening safeguards that require real-world data. Importantly for organizations, the company reiterates that commercial offerings such as Claude for Work and the Anthropic API are outside this consumer training scope.

Why Anthropic moved to opt in now

The timing reflects a convergence of product, safety, and competitive pressures:

- Real-world breadth improves models. Synthetic data and curated corpora only go so far. High-variance, real user conversations expose edge cases, domain shifts, and prompt styles that help both core models and safety classifiers generalize.

- Safety requires seeing misuse attempts. Classifiers that detect scams, harassment, and policy-violating requests improve when they are trained on attempts that look like the latest real behavior, not just benchmarks.

- Competitive parity. Rivals have for some time leaned on consumer data to tune and evaluate their assistants. Opt-in access gives Anthropic a way to keep pace while preserving a consent-based stance that aligns with its brand.

- Transparent consent over opaque defaults. Framing the change as a visible choice helps defuse concerns about surprise uses of data, and it gives users a reversible control.

For broader context on multi-model assistants in the enterprise, see how Copilot goes multi-model with Claude.

What this could mean for LLM behavior

Allowing models to learn from consenting user chats can translate into specific gains that builders will notice in day-to-day use and in agentic systems:

- More robust memory patterns. Exposure to longer, messier chats improves the model’s ability to pick up implicit context and restate goals. That tends to reduce context drift over extended sessions, even before you add a formal memory system.

- Better tool selection and parameterization. Real task sequences reveal how often users switch tools, what fails, and which parameters matter. Training on these traces can make tool-using agents choose more appropriate tools, pass cleaner arguments, and handle tool failures with fewer dead ends.

- Stronger long-horizon planning. Chains of subgoals from actual users surface where plans collapse and where checklists help. Models that see more of this structure become better at decomposing and checkpointing, which matters for coding, research, and ops automations.

- Safety with fewer false positives. Safety systems tuned on authentic, ambiguous prompts are less likely to block harmless content and more likely to catch genuinely risky behavior. That means fewer “Sorry, I cannot help with that” dead ends for benign requests.

For design implications, read our guide to designing agents that act.

The flip side is obvious. If training data captures sensitive fragments, biased phrasing, or misleading patterns, models may pick up unintended behaviors. That is why Anthropic highlights filtering and why builders must add their own guardrails around data flows, both before and after model calls.

Privacy and trust: the core tradeoffs

For individual users, the big variables are consent and retention. Opting in can help the broader community benefit from better models, but it also means a longer storage period for your data. The five-year retention window is intended to support multi-year training, evaluation, and safety audits. The company also underscores that you can delete conversations in your history, and that commercial offerings remain outside the consumer training scope.

For organizations, the trust calculus is simpler. Consumer accounts used on work devices still carry consumer controls. Enterprise and API deployments remain governed by commercial terms, with default non-training and options like zero-retention. The operational risk to enterprises comes less from Anthropic’s enterprise products and more from shadow IT, where employees use consumer assistants with work data. That is why the checklist below focuses on prevention and design patterns.

How this compares to OpenAI and Google

- OpenAI. For ChatGPT as a consumer service, OpenAI may use conversations to improve models unless you opt out. For business products such as ChatGPT Team, Enterprise, and the API, training on customer content is off by default, as outlined in the official OpenAI data usage policy.

- Google. Gemini gives consumers a “Gemini Apps Activity” control that governs whether chats are used to improve services and whether samples of uploads can be used to help improve quality. When activity is off, Google still retains recent chats for a short period to operate the service and process feedback. Google also offers Temporary Chats that are not saved for personalization.

The common pattern is clear. Consumer chats can help improve models, subject to a user-facing control, while enterprise deployments are excluded from training by default. Anthropic’s twist is explicit consent framing for consumers and a retention period of up to five years for those who opt in.

For risks to agent safety in production, review why agent hijacking is a gating risk.

For enterprises: Claude for Work and API stay out of scope

Anthropic states that enterprise and API offerings are not included in this consumer training policy. Commercial customers continue to receive the defaults and contractual options they already rely on, such as non-training, 30-day backend deletion, configurable retention, and the possibility of zero-retention APIs. If you are a security owner, your main action is to reduce the risk of employees using consumer accounts with company data.

A builder’s checklist for safe agent design

Below is a practical, opinionated checklist that balances richer model learning for consenting consumers with strong boundaries for your apps and agents. Use it as a template for PRDs and security reviews.

1) Data controls users can trust

- Present a clear, reversible “Help improve the model” toggle at onboarding and in settings. Use plain language that states what is shared, with whom, and for how long.

- Offer per-conversation controls. Add a private session mode that keeps interactions out of training signals and out of long-term logs.

- Build a deletion flow that works. Deletion should remove the conversation from user history immediately and from your backend within a defined window. Show the dates to the user.

- Provide granular scopes. Separate controls for chat transcripts, file uploads, and audio or screen shares. Each scope should have its own retention and sharing rules.
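One way to model the controls above is a per-user settings object with one entry per scope. The sketch below is illustrative only: the `Scope` names, the 30-day default retention, and the off-by-default sharing are assumptions made for the example, not requirements from any vendor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from enum import Enum


class Scope(Enum):
    CHAT = "chat_transcripts"
    FILES = "file_uploads"
    MEDIA = "audio_or_screen_share"


@dataclass
class ScopeSetting:
    share_for_training: bool = False            # assumed default: off until the user opts in
    retention: timedelta = timedelta(days=30)   # hypothetical per-scope retention


@dataclass
class ConsentSettings:
    scopes: dict = field(default_factory=lambda: {s: ScopeSetting() for s in Scope})
    history: list = field(default_factory=list)  # (timestamp, scope, new_value) tuples

    def set_sharing(self, scope: Scope, enabled: bool) -> None:
        """Flip one scope and record when it happened, so the choice is reversible and auditable."""
        self.scopes[scope].share_for_training = enabled
        self.history.append((datetime.now(timezone.utc), scope, enabled))

    def may_train_on(self, scope: Scope, private_session: bool) -> bool:
        """A private session overrides any opt-in for that conversation."""
        return self.scopes[scope].share_for_training and not private_session
```

Keeping the change history next to the settings makes it straightforward to show users the dates on which their choices took effect.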

2) Opt-in UX that is actually informed

- Make the default transparent, not sneaky. If a toggle appears during a policy prompt, write the microcopy so users can see the effect of accepting with the toggle on or off.

- Design a second-chance nudge. After 30 days of regular use, show a non-intrusive card that reminds users they can change their choice. Many users choose conservatively at first.

- Explain the benefits with examples. Show before and after cases that highlight better tool use or fewer false positives when people opt in.

- Respect jurisdictional norms. If your product operates in regions that require explicit consent, ensure your telemetry and consent logs are auditable.

3) Evaluations that catch data leakage and drift

- Red-team prompts for leakage. Include tests that try to exfiltrate secrets from recent user documents and tool outputs. Score both direct and indirect leakage attempts.

- Run long-horizon simulations. Use synthetic multistep tasks that resemble real user flows and measure context tracking, plan repair, and tool failure recovery.

- Maintain holdout slices. Keep sensitive domains like healthcare and finance out of training and evaluate the model’s behavior on those slices to spot memorization.

- Track false-positive reductions. If you claim safer models, prove it by showing reductions in overblocking on a stable internal benchmark.
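For the leakage tests above, a common approach is to plant a unique canary string in a test document and check whether it shows up in model output. The sketch below assumes that setup; `leak_score` is a hypothetical helper, and its notion of "indirect" leakage covers only three simple obfuscations (separators, reversal, base64), so a real suite would need broader checks.

```python
import base64
import re


def leak_score(output: str, canary: str) -> dict:
    """Check a model output for a planted canary secret.

    direct:   the canary appears verbatim.
    indirect: the canary appears only after simple obfuscation
              (inserted separators, reversal, or base64 encoding).
    """
    direct = canary in output
    # Strip non-alphanumerics so "s-e-c-r-e-t" style spacing is caught.
    squashed = re.sub(r"[^A-Za-z0-9]", "", output)
    canary_squashed = re.sub(r"[^A-Za-z0-9]", "", canary)
    b64 = base64.b64encode(canary.encode()).decode()
    indirect = not direct and (
        canary_squashed in squashed
        or canary_squashed[::-1] in squashed
        or b64 in output
    )
    return {"direct": direct, "indirect": indirect}
```

Scoring the two categories separately shows whether a mitigation only blocks verbatim repeats while encoded copies still get through.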

4) Auditability and oversight by design

- Immutable telemetry. Log prompts, tool calls, and policy decisions with tamper-evident hashing. You need this for forensics and compliance.

- Differentiated access. Restrict who in your org can view raw chat data and rotate access tokens. Use just-in-time approvals for sensitive scopes.

- Reproducible experiments. Tag model versions and training decision points so you can explain why an agent behaved a certain way months later.

- Privacy-preserving analytics. Aggregate metrics so you can learn from usage without storing identifiable content. Favor sketches, counters, and sampled traces with redaction.
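Tamper-evident logging is often done with a hash chain, where each entry's hash covers the previous entry's hash. The following is a minimal sketch of that idea using only the standard library; the entry layout and the `append_entry` and `verify` names are assumptions for illustration, not a prescribed format.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    payload = json.dumps(event, sort_keys=True)
    digest = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
    entry = {"event": event, "prev_hash": prev_hash, "hash": digest}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        payload = json.dumps(entry["event"], sort_keys=True)
        expected = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        if entry["prev_hash"] != prev_hash or entry["hash"] != expected:
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this does not detect truncation of the most recent entries on its own. Storing the latest hash somewhere the log writer cannot modify closes that gap.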

5) Architecture patterns that prevent leakage

- Redaction at the edge. Before a request leaves the device or browser, apply client-side masking for common sensitive entities. Keep the redactor rule set updated.

- Retrieval with boundaries. When you use RAG, separate private corpora by tenant and attach scoped, expiring credentials to retrieval calls. Do not intermingle embeddings across tenants.

- Data diodes for tools. Build a one-way flow for tools that should never send back raw data. For example, a finance tool can return an aggregated, signed summary from which the original records cannot be reconstructed.

- Post-response scrub. Run outputs through a leak detector and policy checker before they reach the user or downstream systems. Include a safety override channel for human review.

- Secrets never in prompts. Use reference tokens for secrets and resolve them only inside tools. Do not inline passwords, keys, or tokens in model-visible context.
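Two of the patterns above, redaction before the model call and reference tokens for secrets, can be sketched in a few lines. Everything here is illustrative: the two regex patterns are far from a complete rule set, and the `secret://` scheme, the `VAULT` dictionary, and the example key are hypothetical stand-ins for a real secrets manager.

```python
import re

# Illustrative patterns only; a real rule set needs much broader coverage.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a typed placeholder before the text reaches a model."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Hypothetical in-memory vault; in practice this would be a secrets manager.
VAULT = {"secret://billing/api_key": "sk-example-not-real"}
REF = re.compile(r"secret://[A-Za-z0-9_/]+")


def resolve_refs(tool_args: str) -> str:
    """Swap reference tokens for real values inside the tool, never in model-visible context.

    An unknown reference raises KeyError, so the call fails closed.
    """
    return REF.sub(lambda m: VAULT[m.group(0)], tool_args)
```

The model only ever sees `[EMAIL]` or `secret://billing/api_key`. The real values exist on the client and inside the tool boundary.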

6) Operational safeguards for teams

- Shadow IT guardrails. Detect consumer AI usage on corporate networks and offer approved alternatives. Provide a safe enterprise assistant so employees do not reach for consumer tools.

- Data classification. Tag content by sensitivity and route it through appropriate pipelines. Sensitive classes default to private sessions and are excluded from training and analytics.

- Incident playbooks. Prepare for an inadvertent share by pre-writing user notifications, rotating credentials, and locking down affected stores.

- Vendor alignment. If you depend on multiple AI vendors, document how each handles consumer training, retention, and enterprise exceptions. Ensure your contract mirrors those expectations.
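The data classification rule above can be expressed as a small routing function. This is a sketch under assumed labels: the three `Sensitivity` levels and the keys of the returned dictionary are invented for the example and would map onto your own classification scheme.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    SENSITIVE = 2


def route(sensitivity: Sensitivity, user_opted_in: bool) -> dict:
    """Decide how a piece of content may be handled, based on its label.

    Sensitive content is forced into a private session and kept out of
    training and analytics, regardless of the user's opt-in.
    """
    is_sensitive = sensitivity >= Sensitivity.SENSITIVE
    return {
        "private_session": is_sensitive,
        "allow_training": user_opted_in and not is_sensitive,
        "allow_analytics": not is_sensitive,
    }
```

Making the sensitivity label override the user's opt-in keeps the safe path as the default even when a consent setting is misconfigured.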

What builders should watch next

- How much this improves reliability at long horizons. If the opted-in data helps, it should show up as better plan repair and lower tool-call error rates once enough of it accumulates.

- Whether privacy controls broaden. Look for finer-grained toggles and event-level audit views that make consent and retention more tangible to users.

- The state of Temporary or Private Chat modes. These sessions are now a baseline expectation across assistants and reduce the tension between capability and privacy.

Bottom line

Anthropic’s late September shift signals a maturing moment in assistant design. The company is asking consumer users to help train Claude with a clear, reversible choice, and it will retain opted-in data for up to five years. That will likely yield steadier memory patterns, better tool use, and safer classifiers. It also raises the bar for builders. If you want the benefits of richer model learning without leaking sensitive information, you must design your agents with consent, redaction, evaluation, auditability, and secure architecture patterns from day one.

Use this as your plan: make the choice visible, set strong defaults, test for leaks, keep an audit trail, and route data through guardrails before and after every model call. Capability and privacy can coexist when builders treat them as a single design problem rather than a trade you make at the end.