Microsoft 365 Copilot goes multi-model with Anthropic Claude

Microsoft is bringing Anthropic’s Claude to Microsoft 365 Copilot and Copilot Studio, enabling true model choice. Here is what changes, the governance you need, and a 30-day integration plan.

The end of lock-in starts now

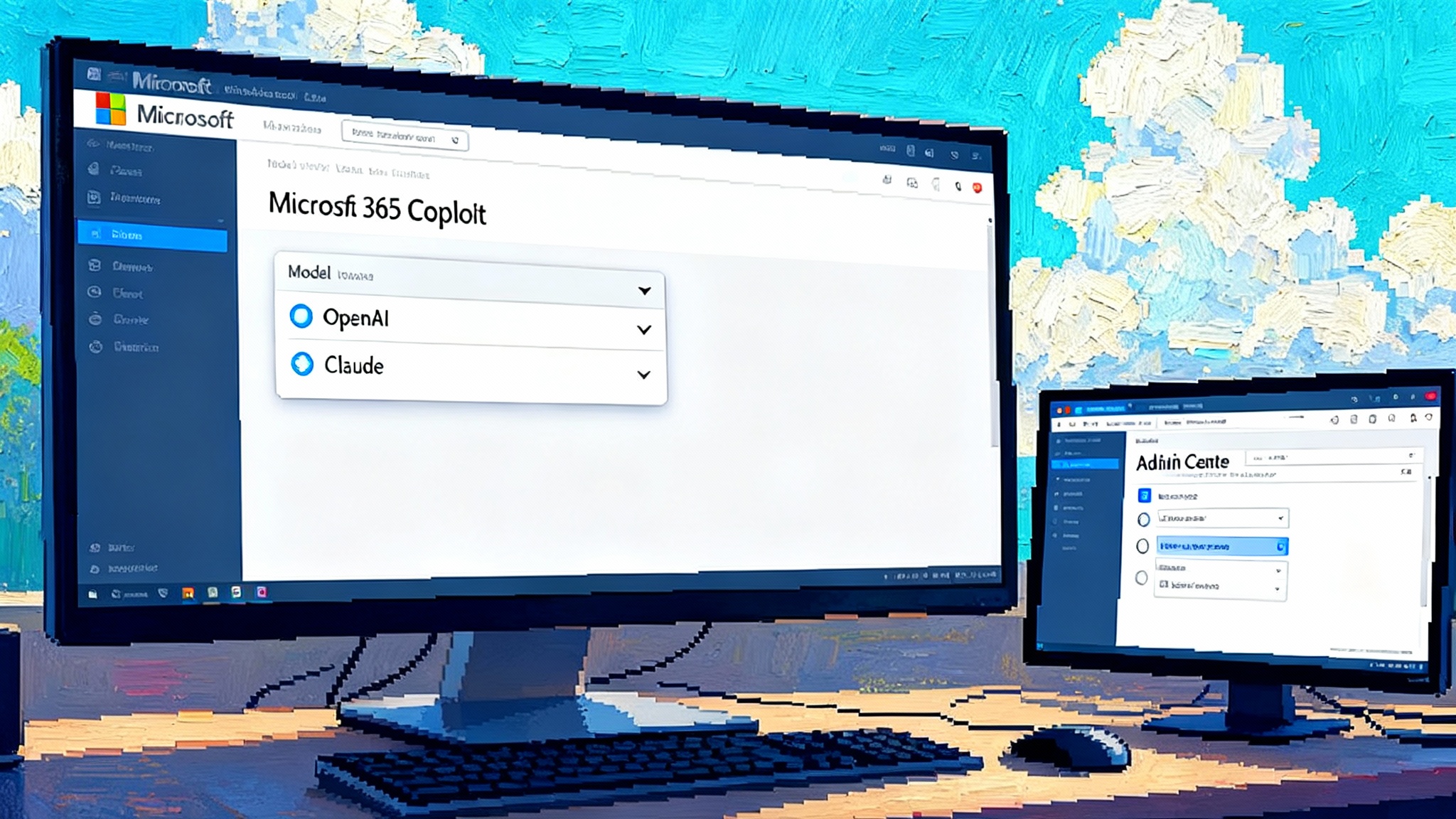

On September 24, 2025, Microsoft said it would bring Anthropic’s Claude into Microsoft 365 Copilot and Copilot Studio, alongside the OpenAI models that power Copilot today. The announcement confirms model choice in the two places that matter most for enterprise work: the Researcher agent inside Microsoft 365 Copilot and the agent-building canvas inside Copilot Studio. Microsoft names Claude Sonnet 4 and Claude Opus 4.1 as options, and administrators can enable access for licensed tenants that opt in. Microsoft also notes that Claude in Researcher is available through its Frontier Program for customers who choose to participate. The Microsoft 365 post "Expanding model choice in Microsoft 365 Copilot" details the rollout and where model selection appears in product surfaces.

This is a turning point. Multi-model access inside the same enterprise assistant reduces vendor lock-in and opens the door to smarter routing, higher reliability, and portable agents that survive supplier churn. It also introduces new questions about data handling, regional residency, and shared responsibility.

This piece unpacks what changes, what to watch for, and how to get ready with a 30-day integration plan for IT and a developer playbook for building model-agnostic Copilot agents.

Why this move matters

- Reliability through diversity: When one model struggles, another often succeeds. Access to multiple reasoning profiles and refusal behaviors raises task completion rates for complex knowledge work. See how model failover strengthens reliability in Agentforce model failover at FedRAMP High.

- Cost and performance routing: Not every request needs an expensive deep reasoning pass. If your orchestration can choose the right model for the job, you cut token spend while keeping quality high.

- Portability and resilience: If you architect prompts, tools, and evaluation independent of any one provider, your agents remain usable even if your preferred model is rate limited, revised, or repriced.

- Governance in the open: Model choice forces explicit policy on which data can leave Microsoft-managed environments, how to record that decision, and how to explain it to users.

What actually changes in product

- Researcher agent inside Microsoft 365 Copilot: Customers that opt in can select OpenAI models or Anthropic’s Claude for deep, multi-step knowledge tasks. The change is designed to be a user-visible toggle, so teams can compare outcomes for the same prompt without switching platforms.

- Copilot Studio for custom agents: Builders can choose Claude Sonnet 4 or Claude Opus 4.1 during agent design and can mix models across multiagent workflows. That makes it practical to assign different models to different sub-tasks, such as reasoning, drafting, or classification.

- Admin control: Enabling Anthropic models is an explicit opt-in in the Microsoft 365 admin center. Organizations decide who gets access and where.

Data residency and governance, in plain language

Microsoft documents two crucial facts for organizations evaluating Claude access inside Microsoft 365 Copilot. First, Anthropic’s models are hosted outside Microsoft-managed environments. Second, if you choose to use Anthropic models, your organization is sending data to Anthropic under Anthropic’s terms, and Microsoft’s Product Terms and Data Processing Addendum do not apply to that processing path. See Microsoft’s guidance in Connect to Anthropic’s AI models.

What this means for you:

- Data classification breaks ties: If content is classified as confidential, regulated, or export-controlled, decide whether it is in scope for Anthropic processing before you enable access. Put that decision in writing.

- Regions and residency: Because processing occurs outside Microsoft-managed environments, do not assume existing data residency commitments apply. Validate where Anthropic processes data and how retention is handled. Record it in your Records of Processing Activities.

- Customer agreements and audits: Your internal audit map changes for any workflow routed to Claude. Update your control narratives to reflect a new processor, new terms, and new audit evidence.

- End-user transparency: Communicate clearly inside the product and in training when a given action may send prompts or context to Anthropic. Give users a simple rule of thumb about what they should avoid pasting into Claude-powered workflows.

A practical routing model for cost and performance

The simplest workable policy is a two-tier ladder:

- Default to a cost-efficient high-quality model for routine tasks like summarization, classification, extraction, and short drafting.

- Escalate to a deep reasoning model for multi-document synthesis, chain-of-thought planning, or complex analysis that fails on the first tier.

Make routing decisions with four signals:

- Task complexity: number of sources, required reasoning steps, and ambiguity in the question.

- Sensitivity and residency: if the data is in a restricted class, force routing back to Microsoft-managed models or a no-send offline path.

- Latency budget: live meetings, in-the-loop Copilot use, and interactive chats favor faster models.

- Dollar budget: estimate tokens, cap at the department level, and throttle heavy jobs during month-end to protect spend.

Add automatic fallbacks:

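- If a call times out, retry once on the same model, then fail over to the other provider. Skip the failover when the data is restricted to Microsoft-managed models; surface the error instead.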

- If your evaluation harness sees a drop in answer quality for a prompt family, shift a percentage of traffic to the alternate model while you investigate.
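The traffic-shift fallback can be sketched as a weighted split per prompt family. This is a minimal illustration, not a Copilot Studio feature: `ALTERNATE_SHARE`, `shift_traffic`, `pick_provider`, and the `contract_summary` family name are all hypothetical, and the sketch assumes your own orchestration layer chooses the provider for each request.

```python
import random

# Hypothetical per-prompt-family share of traffic sent to the alternate model.
# 0.0 means all traffic stays on the primary model.
ALTERNATE_SHARE = {"contract_summary": 0.0}


def shift_traffic(family, share):
    """Send `share` (0.0 to 1.0) of a prompt family's traffic to the alternate model."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0.0 and 1.0")
    ALTERNATE_SHARE[family] = share


def pick_provider(family, primary, alternate, rng=random):
    """Choose a provider for one request, honoring the current traffic split."""
    share = ALTERNATE_SHARE.get(family, 0.0)
    return alternate if rng.random() < share else primary
```

When the evaluation harness flags a family, a call such as `shift_traffic("contract_summary", 0.2)` would move roughly a fifth of that family's requests while you investigate; setting the share back to `0.0` restores the default.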

30-day integration checklist for IT

Week 1: Policy and scope

- Establish a decision record: why you are enabling Claude, for which use cases, and which data classes are out of scope.

- Map data flows: where prompts come from, which connectors may supply context, and where responses go. Include Researcher agent behavior that can read work content like emails, chats, meetings, and files.

- Legal review: capture that Anthropic processing is outside Microsoft-managed environments and under Anthropic terms. Update Product Terms and DPA references in your internal guidance.

- Records of Processing Activities: register Anthropic as a processor for in-scope workflows. Note retention, location, and purpose.

- Risk rating: rate each initial use case for business impact, data sensitivity, and user count. Approve or defer accordingly.

Week 2: Technical enablement in a safe sandbox

- Create a pilot environment or ring-fence a test tenant with realistic but synthetic content.

- In the Microsoft 365 admin center, enable Claude access for a small cohort. Limit by security groups and label all pilot users.

- Define data boundaries: exclude specific SharePoint sites, libraries, or mailboxes from being used as context when workflows route to Anthropic.

- Configure DLP and sensitivity labels to block copy-out of high-risk segments into Claude-powered steps.

- Instrument logging: ensure you capture prompts, model selection, token counts, latency, and outcome ratings. Route logs to your SIEM.

- Create break-glass procedures: how to disable Anthropic routing quickly, how to communicate a pause, and how to roll back.
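For the logging step, one possible shape for a per-call record is sketched below. It assumes your own orchestration layer sees each call; what Microsoft 365 itself exposes for audit is not covered here. The `ModelCallRecord` fields and the `to_log_line` helper are illustrative names, not a Microsoft or SIEM schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ModelCallRecord:
    user_group: str
    model: str
    prompt: str
    prompt_tokens: int
    completion_tokens: int
    latency_ms: int
    outcome_rating: Optional[int]  # user feedback score; None if not rated
    external_processing: bool      # True when the call left Microsoft-managed environments


def to_log_line(record, now=None):
    """Serialize one call as a single JSON line for ingestion by a SIEM."""
    now = now or datetime.now(timezone.utc)
    payload = {"timestamp": now.isoformat(), **asdict(record)}
    return json.dumps(payload, sort_keys=True)
```

Keeping `external_processing` as an explicit field makes it easy to filter for calls that fall under the new processor when you assemble audit evidence.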

Week 3: Pilot with real work

- Choose three to five high-value tasks: for example, competitive brief synthesis, multi-file QBR prep, and long-form email drafting.

- Run A/B traffic: assign one group to OpenAI defaults, the other to Claude, then flip after one week.

- Measure outcomes: task success, edits required, response time, hallucination rate, and user confidence ratings.

- Cost checks: compare token spend across models for the same task families. Identify low-risk high-volume tasks to standardize on the most cost-effective option.

- Red team review: probe for data leakage, prompt injection, and refusal edge cases. Capture incidents in your risk register.
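The pilot measurements can be compared with a small summary routine. This is a sketch under assumptions: each result is a dict with hypothetical keys `arm`, `success`, `edits`, and `latency_ms`, collected however your pilot records outcomes.

```python
from collections import defaultdict
from statistics import mean


def summarize_pilot(results):
    """Group pilot results by arm and compute simple comparison metrics."""
    by_arm = defaultdict(list)
    for row in results:
        by_arm[row["arm"]].append(row)
    summary = {}
    for arm, rows in by_arm.items():
        summary[arm] = {
            "n": len(rows),
            "success_rate": mean(1.0 if r["success"] else 0.0 for r in rows),
            "mean_edits": mean(r["edits"] for r in rows),
            "mean_latency_ms": mean(r["latency_ms"] for r in rows),
        }
    return summary
```

With only a handful of tasks per arm, treat the differences as directional rather than conclusive, and pair them with the user confidence ratings.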

Week 4: Rollout with governance

- Finalize allowed use cases and prohibited content with concrete examples.

- Update training: short video or handout that shows where model selection lives, how routing works, and what not to paste.

- Publish a routing policy: which model handles which tasks, how fallbacks work, and how to request exceptions.

- Update audits: control narratives, test procedures, and evidence locations for any external processing.

- Turn on production rings: enable for the next 10 to 20 percent of users. Schedule a 30-day checkpoint to revisit usage, cost, and incidents.

Developer playbook for portable, model-agnostic Copilot agents

Principle 1: Separate orchestration from providers

- Keep task routing, tool choice, and grounding in your agent graph. Keep provider choice behind a simple interface with a consistent function signature.

- Maintain a model catalog with capability tags, input limits, cost multipliers, and risk flags. Examples: deep reasoning, long context, strict JSON, fast short-form.
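A minimal sketch of that separation follows. The `Provider` protocol, `ModelSpec` fields, `EchoProvider`, and `models_with` are hypothetical names chosen for illustration; real adapters would wrap whatever SDK or connector your platform exposes, and catalog values would come from your own measurements.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class ModelSpec:
    name: str
    capabilities: frozenset   # capability tags, e.g. {"deep_reasoning", "strict_json"}
    max_input_tokens: int     # input limit you have verified for this model
    cost_multiplier: float    # relative cost against your baseline model
    external: bool            # risk flag: processed outside Microsoft-managed environments


class Provider(Protocol):
    """The one signature the orchestration layer is allowed to depend on."""

    def complete(self, model: str, prompt: str, max_tokens: int) -> str: ...


class EchoProvider:
    """Stand-in provider for tests; a real adapter would call a vendor SDK here."""

    def complete(self, model: str, prompt: str, max_tokens: int) -> str:
        return f"[{model}] {prompt[:max_tokens]}"


def models_with(catalog, capability, allow_external):
    """Return catalog entries offering a capability, optionally excluding external models."""
    return [
        spec for spec in catalog
        if capability in spec.capabilities and (allow_external or not spec.external)
    ]
```

Because the agent graph only ever sees `Provider.complete` and `ModelSpec`, swapping or adding a model becomes a catalog change rather than a rewrite of routing logic.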

Principle 2: Write prompts to a contract

- Use clear roles and output schemas. Wrap instructions with visible delimiters and version every system prompt.

- Prefer objective constraints over style commands. Ask for numbered steps, bullet points, or a JSON schema with explicit fields and enums.

- Reference retrieval sources with citation placeholders that your code fills from your retrieval layer, not with free-text instructions that differ across providers. For background on retrieval patterns, see the retrieval-first agents pattern.
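One way to make the contract concrete is to version the system prompt, wrap sections in visible delimiters, and validate every reply in code. The sketch below is illustrative: the `triage-v3` version tag, the priority enum, and the helper names are assumptions, not part of any Copilot API.

```python
import json

SYSTEM_PROMPT_VERSION = "triage-v3"  # hypothetical version tag, bumped on every edit
ALLOWED_PRIORITIES = ("low", "medium", "high")

SYSTEM_PROMPT = (
    "You classify support requests.\n"
    "<instructions>\n"
    'Return only JSON: {"priority": "low" | "medium" | "high", "reason": string}\n'
    "</instructions>"
)


def build_prompt(request_text, sources):
    """Assemble the user prompt; source IDs are filled in by code, not by the model."""
    cited = "\n".join(f"[{sid}] {text}" for sid, text in sources)
    return f"<sources>\n{cited}\n</sources>\n<request>\n{request_text}\n</request>"


def parse_reply(raw):
    """Validate a model reply against the contract; raise ValueError on any violation."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    if data.get("priority") not in ALLOWED_PRIORITIES:
        raise ValueError("priority must be one of low, medium, high")
    if not isinstance(data.get("reason"), str):
        raise ValueError("reason must be a string")
    return data
```

Since `parse_reply` is the same for every provider, a contract violation becomes a measurable event you can count per model rather than a style complaint.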

Principle 3: Normalize tool use

- Define tool schemas once, in a provider-agnostic format. Avoid provider-specific function features unless you can polyfill them.

- When providers differ on function output shape, create a shim layer that adapts to your canonical type system.
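A shim of this kind might look like the sketch below. The canonical `SEARCH_TOOL` definition and the function names are hypothetical. The two target shapes follow the public OpenAI Chat Completions and Anthropic Messages tool formats as I understand them; Copilot Studio may wrap tools differently, so verify the shapes against the surface you actually call.

```python
import json

# Canonical, provider-agnostic tool definition (JSON Schema for parameters).
SEARCH_TOOL = {
    "name": "search_documents",
    "description": "Search indexed documents and return matching snippets.",
    "parameters": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}


def to_openai_tool(tool):
    """Wrap the canonical definition in the OpenAI-style function envelope."""
    return {"type": "function", "function": dict(tool)}


def to_anthropic_tool(tool):
    """Rename `parameters` to `input_schema` for the Anthropic-style shape."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["parameters"],
    }


def normalize_tool_call(provider, call):
    """Map a provider-specific tool call to a canonical (name, arguments dict) pair."""
    if provider == "openai":
        fn = call["function"]
        return fn["name"], json.loads(fn["arguments"])  # arguments arrive as a JSON string
    if provider == "anthropic":
        return call["name"], call["input"]  # input is already a dict
    raise ValueError(f"unknown provider: {provider}")
```

Tool handlers then receive the same `(name, arguments)` pair regardless of which model requested the call.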

Principle 4: Build a simple, testable router

- Route by task type and risk profile, not by gut feel. Start with rules, then add learned policies later.

```python
# Inputs: task, sensitivity, latency_budget_ms, token_budget

CATALOG = {
    "openai_default": {"capabilities": ["general", "long_context"], "cost": 1.0, "risk": "standard"},
    "claude_sonnet4": {"capabilities": ["summarize", "classify", "align_text"], "cost": 0.7, "risk": "external"},
    "claude_opus4_1": {"capabilities": ["deep_reasoning", "multi_doc"], "cost": 1.3, "risk": "external"},
}

RESTRICTED = {"restricted", "regulated"}


def route(task, sensitivity, latency_budget_ms, token_budget):
    # latency_budget_ms and token_budget are accepted for later rules;
    # these starter rules route on sensitivity and task type only.
    if sensitivity in RESTRICTED:
        return "openai_default"  # keep inside Microsoft-managed processing
    if task in {"multi_doc_synthesis", "planning", "root_cause"}:
        return "claude_opus4_1"
    if task in {"summarize", "extract", "classify", "short_draft"}:
        return "claude_sonnet4"
    return "openai_default"


def run(call_model, task, sensitivity, latency_budget_ms, token_budget, prompt, tools):
    # call_model(provider, prompt, tools, token_budget) is your provider interface.
    provider = route(task, sensitivity, latency_budget_ms, token_budget)
    try:
        return call_model(provider, prompt, tools, token_budget)
    except TimeoutError:
        # retry same, then fail over
        try:
            return call_model(provider, prompt, tools, token_budget)
        except Exception:
            if sensitivity in RESTRICTED:
                raise  # never fail over restricted data to an external provider
            backup = "openai_default" if provider.startswith("claude") else "claude_sonnet4"
            return call_model(backup, prompt, tools, token_budget)
```

Principle 5: Evaluate continuously

- Create golden tasks with expected outputs and rejection tests. Run them nightly against all enabled providers and report regressions.

- Track per-task success, edit distance from final human output, and hallucination flags. Alert on drift.
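A nightly golden-task run can be as small as the sketch below. The task dict keys (`id`, `prompt`, `check`) and both function names are assumptions for illustration; `providers` maps a name to any callable that takes a prompt and returns text.

```python
def run_golden_tasks(tasks, providers):
    """Run every golden task against every provider and collect failures.

    tasks: list of dicts with "id", "prompt", and "check" (callable: str -> bool).
    providers: dict mapping a provider name to a callable: prompt -> str.
    """
    failures = []
    for name, call in providers.items():
        for task in tasks:
            try:
                ok = task["check"](call(task["prompt"]))
            except Exception:
                ok = False  # treat provider errors as failures for this run
            if not ok:
                failures.append((name, task["id"]))
    return failures


def regressions(previous_failures, current_failures):
    """Return failures that are new since the previous run, sorted for stable reports."""
    return sorted(set(current_failures) - set(previous_failures))
```

Rejection tests fit the same shape: the `check` callable simply asserts that the reply declines the request.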

Principle 6: Guardrails and data hygiene

- Ground before you generate. Pass retrieved snippets with IDs, not entire documents, and instruct the model to quote only what is provided. For threat modeling, review the discussion of agent hijacking as a gating risk.

- Policy gates first, then prompts. Enforce sensitivity label checks before any call that could route to an external provider.

- Strip PII and secrets upstream when possible. Keep a list of sensitive regex patterns and metadata-driven masks.
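The gate-then-mask order can be sketched as follows. The blocked label names mirror the data classes discussed earlier in this piece; the two regex patterns and all function names are illustrative only and are not a complete PII detector.

```python
import re

# Labels that must never route to an external provider.
EXTERNAL_BLOCKED_LABELS = {"confidential", "regulated", "export-controlled"}

# Illustrative patterns only; extend and test against your own data.
MASKS = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def allow_external(labels):
    """True when none of the content's sensitivity labels is on the blocked list."""
    return not (set(labels) & EXTERNAL_BLOCKED_LABELS)


def mask(text):
    """Replace every match of each pattern with its placeholder token."""
    for pattern, token in MASKS:
        text = pattern.sub(token, text)
    return text


def prepare_for_external(text, labels):
    """Policy gate first, then masking; raise if the content may not leave."""
    if not allow_external(labels):
        raise PermissionError("sensitivity label blocks external routing")
    return mask(text)
```

Raising instead of silently rerouting keeps the decision visible in logs, which helps when you need to show that the gate actually fired.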

Principle 7: Portability tactics that pay off fast

- Maintain one prompt library per task with variables for provider quirks. Use templates to swap minor instructions that influence output style without changing content.

- Keep your agents’ memory, document chunking, and vector store out of provider runtimes. You want to move models without moving data.

- Favor deterministic formats for downstream steps, such as JSON with schemas, so you can compare providers mechanically.
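The prompt-library tactic can be sketched with the standard library's `string.Template`. The provider keys and style hints below are hypothetical placeholders; the point is that the task wording stays identical and only a small, named variable changes per provider.

```python
from string import Template

# One template per task; the task instruction itself never varies by provider.
SUMMARIZE = Template(
    "$style_hint\n"
    "Summarize the text in at most $max_bullets bullet points.\n"
    "<text>\n$text\n</text>"
)

# Hypothetical per-provider style hints discovered during evaluation.
STYLE_HINTS = {
    "provider_a": "Respond with bullets only, no preamble.",
    "provider_b": "Do not add an introduction or closing remarks.",
}


def render(provider, text, max_bullets=5):
    """Fill the shared template, swapping in the provider-specific style hint."""
    hint = STYLE_HINTS.get(provider, "")
    prompt = SUMMARIZE.substitute(style_hint=hint, max_bullets=max_bullets, text=text)
    return prompt.lstrip()  # drop the empty first line when there is no hint
```

Keeping quirks in a lookup table also gives you a short list to delete when a provider update makes a workaround unnecessary.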

What success looks like in six weeks

- Your users can switch models for the same research prompt and see clear differences in strengths. They understand when to choose which model and why.

- Your IT team can explain in one sentence which content is allowed to route to Anthropic and how to shut it off if needed.

- Your finance partner can report per-task cost and show that the router is saving money on routine work while preserving quality on complex tasks.

- Your security team has a live dashboard of prompts, outcomes, and incidents, with a plan to review new model versions before broad use.

Risks and how to mitigate them

- Shifting refusal behaviors: Different models draw policy lines in different places. Mitigation: maintain a compact negative prompt section and capture refusals as telemetry, then tune routing and prompt phrasing.

- Over-escalation to the most powerful model: People will reach for the biggest hammer. Mitigation: set sane defaults and auto-escalate only on quality failure, not user preference alone.

- Shadow integration: Teams may paste sensitive data into Claude without understanding the processing path. Mitigation: in-product notices, short training, and selective disablement where risk is highest.

The bigger picture

With Anthropic’s Claude available next to OpenAI models in core Microsoft 365 surfaces, the enterprise assistant is evolving into a true orchestration layer. That is good news for reliability, cost control, and long-term leverage. It also raises the bar on governance. The organizations that win will publish a simple routing policy, instrument their agents with evaluation and telemetry, and teach users to make good choices about which model to use for which job.

The lock-in era was simple but fragile. The multi-model era is messier, yet it is the path to trustworthy agents that keep getting better without tying your business to a single supplier.