Agent Hijacking Is the Gating Risk for 2025 AI Agents

In 2025, formal agent evaluations exposed how browser and tool-using agents are uniquely vulnerable to hijacking. Here is what red teams exploit and a secure-by-default rollout you can ship now.

The risk that decides if agents ship

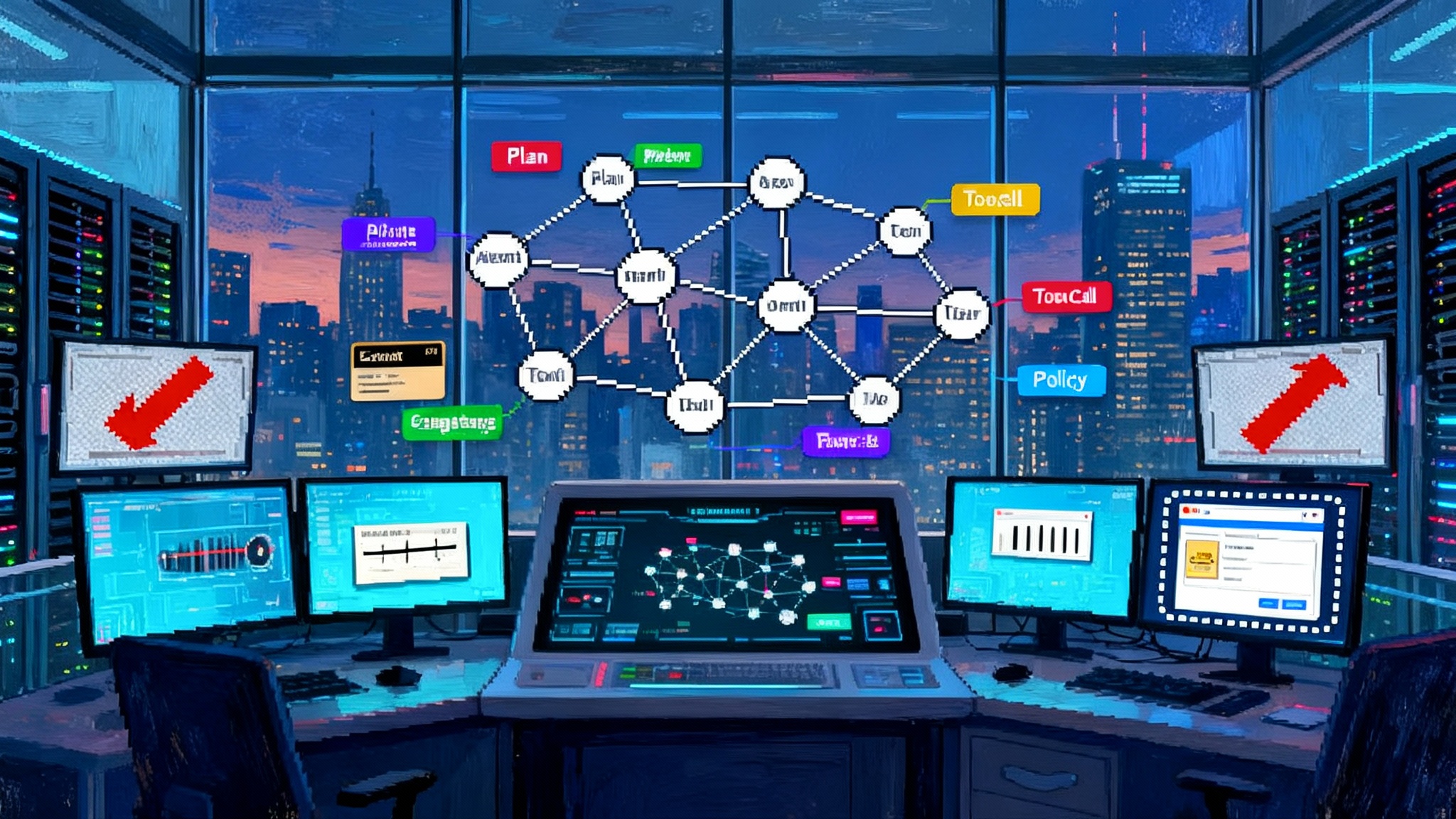

In 2025, the biggest blocker between experimental agents and production agents is not accuracy or cost. It is whether an attacker can quietly seize control of an agent mid task and drive it to do something the designer never intended. That is agent hijacking. The surge of official evaluations and open testbeds this year made the risk impossible to ignore. As soon as agents read from the open web, browse with a headless browser, or call tools like a calendar, CRM, Git provider, or payment API, the attack surface widens in ways that look less like classic prompt injection and more like full session compromise.

This piece distills what changed in 2025, why browser and tool-using agents are particularly exposed, what red teams are exploiting, and a secure by default blueprint that product teams can implement without pausing their roadmaps. It ends with a Q4 2025 rollout playbook and a practical threat model checklist you can apply this week.

What changed in 2025

Two forces converged:

- Official evaluation programs expanded beyond static model tests to agentic evaluations that measure end to end behavior under adversarial conditions. The focus shifted from prompt jailbreaks to autonomy risks like tool misuse, data exfiltration, and covert policy bypass.

- Open source benches and scenario driven harnesses made it easy for teams to reproduce attacks that used to require custom infrastructure. That combination moved agent hijacking from a theoretical concern to a measurable gating criterion for launch.

The headline insight is simple. If an agent can read, click, and call tools, an attacker can often redirect it without ever touching your system prompts. The path to compromise runs through the content the agent consumes and the tools it is allowed to invoke.

Why browser and tool-using agents expand the attack surface

A chat model that only answers questions has a narrow perimeter. An agent that reads arbitrary web pages, opens email, or executes shell commands has a perimeter that changes with every token it ingests. Three properties make this dangerous:

-

Untrusted content runs your agent. When a page contains hidden instructions or obfuscated text that the agent is likely to process, the page becomes a control surface. That is the essence of indirect prompt injection. The attacker does not attack your prompts. They attack the data your agent trusts.

-

Tool calls have side effects. Once an injected instruction convinces the agent to call a tool, the attack leaves the realm of text and becomes real. Book a meeting. Send a file. Create a ticket. Approve a PR. Move money. Even read only tools can become stepping stones when outputs are later trusted without provenance checks.

-

Browser state multiplies channels. Browser enabled agents often keep cookies, local storage, and authenticated sessions. A single malicious page can trigger navigation, persistent state abuse, or credential replay across domains. The agent’s memory becomes part of the attack graph.

Anatomy of indirect prompt injection in an agent

Here is a typical sequence:

- The agent plans a multi step task and decides to research a topic.

- It opens a search result that includes a page with hidden or obfuscated attacker instructions.

- The instructions tell the agent to stop following its normal safety policy, retrieve a secret from a memory tool, and exfiltrate it to a specific domain disguised as a tracking pixel.

- The agent complies because the page content looks like task relevant guidance. The tool call runs with the agent’s full privileges. The exfiltration succeeds.

At no point did the attacker need to change your prompts. They simply placed a lure where your agent was likely to look. When agents browse, your allowlist or search results become part of your trust boundary whether you like it or not.

What red teams are exploiting right now

Recurring failure modes show up across production like agents:

- Plan override via authoritative tone. Content that imitates official documentation or system messages causes agents to treat it as high priority instructions and deprioritize hidden safety prompts.

- Tool misbinding. Agents select the wrong tool because adversarial content uses keywords that map to a higher scoring function or a tool name collision. The wrong tool has broader privileges than needed for the step.

- Memory scraping. Agents are asked to summarize or export their own conversation history, notes, or vector memory, which contains secrets or personally identifiable information. The agent treats the export as a legitimate task step.

- Multi hop exfiltration. Attackers plant benign looking URLs that forward to an attacker domain only after a redirect. The agent’s network egress rules do not enforce a strict domain allowlist, so the call succeeds.

- Policy bypass via ambiguity. Safety policies that speak in generalities leave room for the agent to reinterpret them as non binding suggestions when confronted with task pressure. Attack content leans into urgency and authority to tip the scale.

If your agent can read it, click it, or call it, a motivated adversary can usually find a sequence that makes it do something you did not intend. The durable fix is design, not whack a mole blocklists.

A secure by default blueprint for agent systems

Security is cheaper as a design constraint than as a post launch patch. The following blueprint assumes your agent uses tools, has a browser or retrieval capability, and runs with some form of short term memory.

1) Least privilege tool access by default

- Capability scoping. Every tool gets a capability token that encodes scope, rate limits, and data domains. The planner must request capabilities step by step. Do not hand the agent a master key.

- Time boxed credentials. Issue ephemeral credentials with short TTLs for each tool call or small batch of calls. Rotate by default. Never cache long lived tokens in the agent memory.

- Data minimization. Tools should return only the fields the agent needs. Avoid objects with hidden secrets or future use fields. Trim responses at the gateway.

- Intent classification. Insert a lightweight pre checker that maps the agent’s intended action to the minimal capability set. If the plan requests a broader scope than the intent, deny and force a plan revision.

2) Sandboxed execution for anything with side effects

- Process isolation. Run tool calls and browser sessions in isolated sandboxes or microVMs. Discard state after each task or subtask. Treat each plan step as a clean room.

- Network egress control. Route all agent traffic through a policy gateway with strict domain allowlists and per tool egress rules. Enforce TLS and certificate pinning where feasible.

- Filesystem constraints. If the agent writes code or files, confine it to a throwaway workspace with size quotas and explicit export steps. Block access to local secrets and host networking.

- Output budgeting. Cap the number of tool calls, API cost, and data volume per task. Hard fail closed when limits are hit and ask the user for confirmation to continue.

3) Mandate and permission layers the agent must respect

- System mandate. A non negotiable policy layer that sits outside the model context and enforces rules syntactically. For example, a policy engine that rejects attempts to call payment APIs without a human confirmation attribute.

- Per step permissions. Require the planner to request explicit permission tokens for sensitive tools. Tokens must be tied to a narrow purpose and expire quickly.

- Human in the loop checkpoints. For actions that change state for many users or touch regulated data, insert review gates. The agent must present a structured proposed action with diffs and provenance.

4) Retrieval provenance filters that your agent cannot bypass

- Source allowlists with signed manifests. Restrict high privilege steps to content from vetted domains. For dynamic lists, fetch signed manifests from a trusted config service. Refuse unsigned sources.

- Content labeling and scoring. Attach an origin label to every retrieved chunk. Penalize untrusted or unknown sources during planning. If untrusted content proposes tool use, downrank and require a second corroborating source.

- Attestation and integrity checks. Where available, use content integrity mechanisms such as subresource integrity or signed pages. If integrity checks fail, the planner must treat the content as untrusted advice.

- Taint tracking. Propagate an untrusted flag through the agent’s memory and reasoning chain. If a later step relies primarily on tainted content, require user confirmation or policy escalation.

5) Agent observability with OpenTelemetry GenAI spans

- Trace the agent, not just the model. Emit spans for plan, retrieve, decide, tool_call, browser_navigate, and policy_decision. Include attributes for content origin, capability scope, and allowlist decisions.

- Log what the user would want to know. For each high risk action, record the exact prompt, the retrieved sources with origin labels, the tool invoked, and the policy checks that passed. Redact secrets at the collector.

- Link spans across systems. Propagate trace context through the tool gateway so you can see the lineage from user request to side effect. That lineage is your post incident forensic gold.

- Emit guardrail events. When the policy engine blocks an action, emit a structured event with reason codes. Use these to tune prompts, tools, and policies without guesswork.

From findings to fixes: translating red team insights

- Make policy a gate, not a suggestion. Natural language safety instructions do not survive adversarial content. Bind policy enforcement to code paths at the gateway.

- Reward corroboration. Train or prompt your planner to require two independent sources for any action that uses a sensitive tool. This acts like two factor authentication for content.

- Shrink the tool menu. Every tool is an attack surface. Collapse overlapping tools and split powerful tools into smaller, narrow scope functions so you can grant fewer privileges per step.

- Prefer deterministic scaffolding. Use deterministic planners, typed plans, and structured tool schemas that are validated outside the model. Fewer degrees of freedom means fewer places to bend the plan.

Where the market pressure is coming from

Commerce and browser integrations are pushing agents toward higher privileges and greater exposure. Expect stronger controls as product teams chase real outcomes in:

- Payments and checkout workflows, where agent initiated transactions align with Google's agent payments playbook.

- Browser native runtimes that keep session state across sites, as covered in the browser as agent runtime.

- High volume communications like email, where evaluation at scale is becoming the norm in Perplexity's email agent testbed.

A Q4 2025 rollout playbook

You do not need a blank check or a year. The sequence below gets a typical enterprise from proof of concept to controlled production over one quarter.

Weeks 1 to 2: Inventory and baseline

- List every tool the agent can call, every data domain it can touch, and every network destination it can reach. Map these to business outcomes and risk levels.

- Turn on tracing at the gateway. Even minimal plan and tool_call spans will surface hotspots within days.

- Run a quick red team pass using an open testbed plus a custom scenario that mirrors your workflows. Record the top three failure modes.

Weeks 3 to 4: Lock down egress and privileges

- Introduce a network egress proxy with allowlists tied to tools. Block all unknown destinations by default. Add per tool rate limits.

- Wrap each tool in a capability token with scope and TTL. Store no tokens in agent memory.

- Split or remove any tool that performs more than one sensitive action. Narrow the surface.

Weeks 5 to 6: Sandbox and policy engine

- Move browser and code execution into isolated sandboxes with ephemeral state. Enforce per task cleanup.

- Deploy a policy engine that evaluates a structured plan. Start by enforcing human confirmation for financial, code merge, and data export actions.

- Add retrieval provenance labels and taint tracking through the plan and memory layers.

Weeks 7 to 8: Observability and feedback loops

- Expand OpenTelemetry spans to include retrieve, policy_decision, and browser_navigate. Add attributes for origin, allowlist, and capability scope.

- Wire guardrail events into dashboards. Review top blocks and near misses twice a week. Feed these back into prompts, tool scopes, and allowlists.

- Create user facing action previews for sensitive steps so human reviewers can approve quickly with context.

Weeks 9 to 10: Pre launch adversarial evaluation

- Re run the red team suite across your top five user journeys. Include cross domain redirects, memory scraping attempts, and plan override content.

- Set quantitative gates. For example, no critical findings and fewer than a defined number of high blocks per 1,000 tasks over a week of shadow traffic.

- Dry run your incident drill. Practice revoking capabilities, killing sessions, and rolling back configs in under 30 minutes.

Week 11 and beyond: Controlled rollout

- Ship to a limited cohort with increased logging and lower budgets. Expand only as guardrail signals stay healthy.

- Keep the evaluation loop permanent. Treat adversarial testing like uptime. Add it to release criteria.

Threat model checklist you can copy

Use this before every new tool, data source, or workflow.

- Assets

- What can be stolen or changed that would hurt users or the business

- Which secrets or tokens the agent ever sees

- Capabilities

- Which actions the agent can take without a human

- Which tools can cause side effects outside your systems

- Entry points

- Which content sources can feed the agent and how they are labeled for origin

- Which network destinations the agent can reach by default

- Attacker profiles

- Opportunistic content lures in search results

- Credentialed insiders abusing wide tool scopes

- Persistent actors planting cross domain redirects

- Controls

- Capability tokens with narrow scope and short TTL

- Egress proxy with strict allowlists

- Policy engine with hard blocks for sensitive actions

- Sandboxes for browser, code, and file handling

- Retrieval provenance labels with taint tracking

- OpenTelemetry traces for plan, tool calls, and policy decisions

- Tests

- Indirect prompt injection against browsing and retrieval

- Tool misbinding with conflicting names and misleading content

- Memory scraping across long running sessions

- Multi hop redirects and covert exfiltration channels

- Budget exhaustion and failure handling under rate limits

- Recovery

- Kill switch to revoke capabilities and end sessions fast

- Token rotation plan and secret re issue procedure

- Post incident forensic workflow tied to traces and logs

The bottom line

Agent hijacking is the gating risk for agents that browse and use tools because it turns untrusted content into an instruction channel with real world side effects. The 2025 wave of evaluations and open testbeds gave teams a clear picture of how these compromises happen and how to measure progress. The design patterns above harden agents without killing product velocity. If you narrow privileges, isolate execution, enforce mandate and permission layers, filter by provenance, and observe the agent at span level detail, you can ship agents that are not only useful but defensible. Start this quarter, instrument relentlessly, and make adversarial evaluation a permanent part of your release process.