Agents Go Retail: Manus 1.5, $75M Scrutiny, and a 2026 Reset

Manus 1.5’s October 2025 release and a $75 million round led by Benchmark, now facing U.S. review, mark the moment autonomous web‑acting agents enter mainstream retail. As capital, policy, and reliability engineering collide, commerce and consumer trust are set for a 2026 reset.

The moment agents went retail

In October 2025, Manus shipped version 1.5 of its consumer agent, a release framed not as a demo but as a real tool you can pay for, trust with login prompts, and point at your weekend to-do list. The timing was not subtle. Months earlier, the startup behind Manus announced a $75 million round led by Benchmark, which soon drew a U.S. Treasury review, turning a product launch into a geopolitical story. The facts are simple. Fully autonomous, web‑acting agents are moving from spectacle to shelf space, and capital and policy will now shape who scales. Treasury’s review of Benchmark’s investment is a public sign that agents have crossed from novelty into strategic concern.

Manus 1.5 is pitched as faster execution, longer context, and a builder that will scaffold and deploy full web apps from a single prompt. In plain terms, it is trying to bundle research, coding, deployment, and product operations into one flow so that a single user can plan, build, ship, and iterate without switching tools. Whether you are a solo founder trying to spin up an invoicing tool or a parent booking dental appointments, the promise is that an agent can now read, click, type, purchase, and follow through.

From clever demos to paid products

The first generation of agents made for viral videos. A camera pointed at a screen as the bot clicked around, booked a table, and screenshotted a confirmation. That was a demo era. The new era is defined by repeatable economics and survivable failure. Repeatable economics means pricing that maps to tasks people actually run. Survivable failure means that the bot can get stuck, back up, ask for help, or refund a credit without breaking the day.

Manus and its peers have settled on credits as the basic unit of work. That design has a logic. Credits let a company budget the expensive parts of autonomy, such as long context, multi-step plans, and retries, without hiding costs. A forty-minute job that scrapes forms, files a claim, and submits a receipt will burn more credits than a quick search. Power users can add credits for a rush month or buy a team pool so colleagues do not stall out on an end-of-quarter deadline.

There are frictions. Users report failure loops where the agent stalls, wipes a page, or retries until the credits run out. Support forums show disputes over whether a refund should return credits to an account or to a card. In practice, the lesson is not that credits are broken. It is that autonomy pushes cost to the edges where logging, billing, and customer support must be engineered as tightly as the models themselves. If an agent fails on step six of nine while booking a visa appointment, the system should tag the state, rewind, and bill only the completed work. That requires a ledger that tracks substeps, not just sessions, and a clear paper trail users can audit.
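
To make the substep ledger concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not how Manus or any other vendor bills: the `RunLedger` and `Step` names, the credit amounts, and the rule that failed substeps are refunded in full are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    name: str
    credits: int
    completed: bool


@dataclass
class RunLedger:
    """Hypothetical per-run ledger that records substeps, not just a session total."""

    run_id: str
    steps: list[Step] = field(default_factory=list)

    def record(self, name: str, credits: int, completed: bool) -> None:
        self.steps.append(Step(name, credits, completed))

    def billable(self) -> int:
        # Assumption: only completed substeps are charged.
        return sum(s.credits for s in self.steps if s.completed)

    def refundable(self) -> int:
        # Assumption: credits burned on failed substeps are returned.
        return sum(s.credits for s in self.steps if not s.completed)

    def statement(self) -> list[str]:
        # Itemized lines a user or support agent can audit.
        return [
            f"{i}. {s.name}: {s.credits} credits "
            f"({'charged' if s.completed else 'refunded'})"
            for i, s in enumerate(self.steps, start=1)
        ]


# The visa example: steps one to five succeed, step six fails.
ledger = RunLedger("visa-appointment")
for n in range(1, 6):
    ledger.record(f"step {n}", credits=2, completed=True)
ledger.record("step 6", credits=3, completed=False)

print(ledger.billable())    # 10
print(ledger.refundable())  # 3
```

The design choice worth noting is that the statement is derived from the same records as the bill, so the paper trail and the charge cannot drift apart.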

A related shift is expectation setting. Agents now show estimates for time and credit burn before they start. That turns each run into a mini contract. You authorize the plan, the agent executes, and you have grounds to dispute if it deviates. This is how autonomy becomes a consumer product rather than a research curiosity.
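
One way to picture the mini contract is as a check of the finished run against the estimate the user approved. The sketch below assumes a hypothetical `Estimate` record and a 20 percent tolerance; neither comes from any vendor's documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Estimate:
    """What the user authorized before the run started (hypothetical shape)."""

    minutes: float
    credits: int


def within_contract(
    estimate: Estimate,
    actual_minutes: float,
    actual_credits: int,
    tolerance: float = 0.2,  # assumed 20% overrun allowance
) -> bool:
    """Return True if the run stayed within the approved estimate plus tolerance."""
    return (
        actual_minutes <= estimate.minutes * (1 + tolerance)
        and actual_credits <= estimate.credits * (1 + tolerance)
    )
```

A run that returns `False` here is the case where the user has grounds to dispute the charge.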

Platform answers: OpenAI, Amazon, Microsoft

The platforms are not standing still. OpenAI’s Operator research preview, which used its own browser to act on the web, graduated into ChatGPT agent in July 2025 with broader availability and permission prompts for consequential steps. The pivot is explicit. ChatGPT now thinks and acts on a virtual computer, requests permission before it buys, and lets you seize control midstream. This is not just a feature. It is a risk posture. By integrating agentic actions into the main product and gating purchases with clear prompts, OpenAI moved from a side experiment to a consumer-grade control surface. The company laid out this evolution in its announcement of ChatGPT agent capabilities, describing how Operator’s browser skills folded into a unified system that can plan, click, and deliver artifacts.

Amazon has been seeding an alternative path with Nova Act, a research model and software kit for building agents that act inside a browser. The watchword is reliability. Nova Act breaks complex jobs into atomic commands such as search, fill, and checkout. Developers can add rules like "do not accept the travel insurance upsell" or "always use the corporate card for flights." This developer-first answer to consumer autonomy fits Amazon’s culture. It is not a single agent for everyone. It is a kit that retailers, publishers, and toolmakers can wire into their own flows. This also aligns with the broader shift to the browser as the agent runtime.

Microsoft is pushing at the operating system layer. Copilot Actions are rolling out as a way for Windows and Edge to perform tasks such as reservations or purchases directly from the desktop, with granular permissioning and on-screen prompts. This model focuses on the last mile. If an agent can see your screen, it can act on your screen, which makes anything with a web interface fair game. The constraints are clear. Permissions are opt-in, and actions remain local to the user’s session unless you authorize data to leave the machine.

The strategies rhyme. OpenAI is normalizing agent behavior inside its core product with strong prompts and audit trails. Amazon is offering a kit for builders who want controllable agents with domain-specific guardrails. Microsoft is stitching actions into the operating system so ordinary users can reach for autonomy without learning a new app. Each approach recognizes the same shift. Acting on the web is the new baseline. The difference is where control and accountability live.

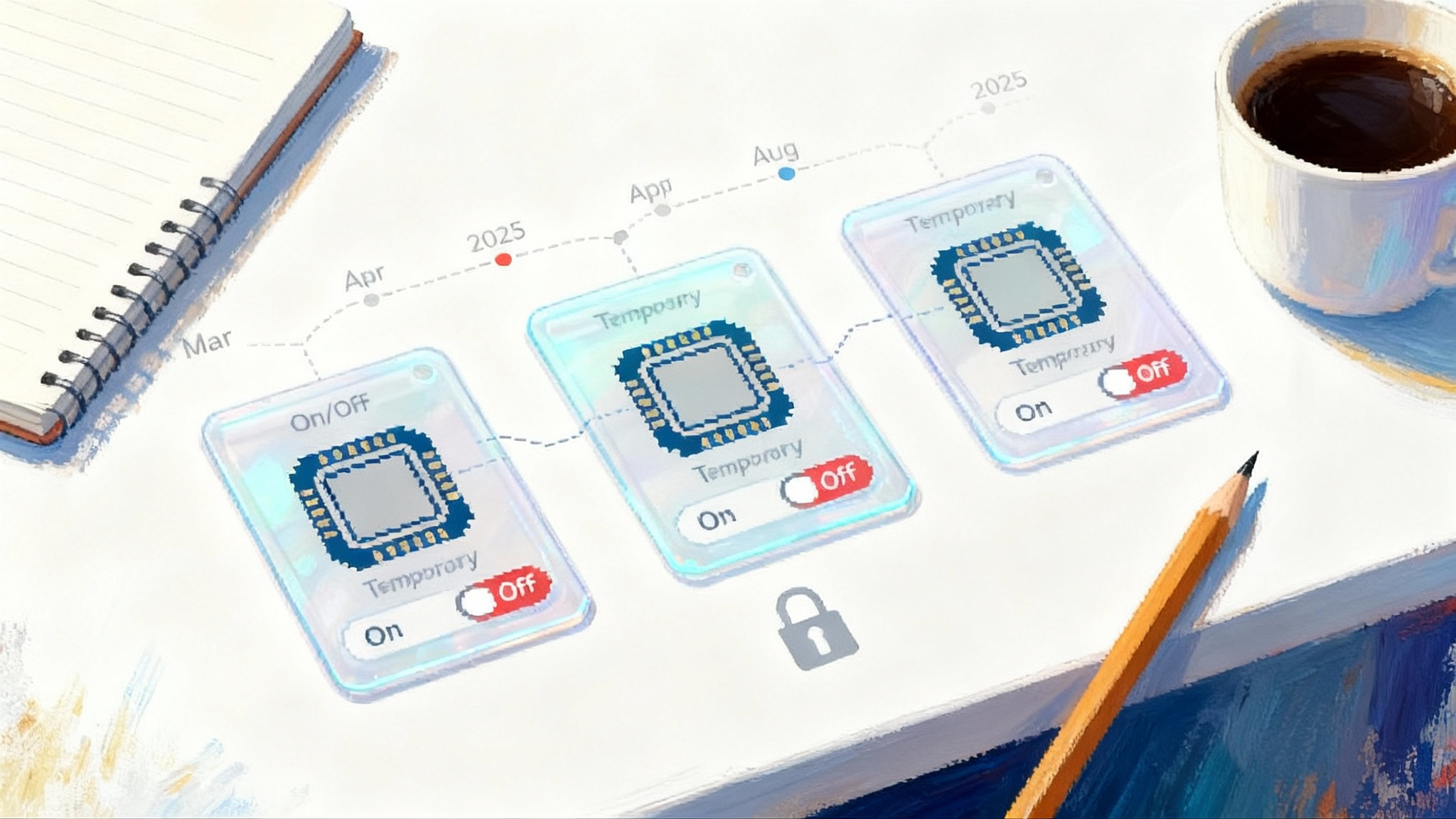

Reliability is the business model

For consumer agents, reliability is not a nice-to-have. It is the business. A consumer will forgive a chatbot for a wrong answer. They will not forgive a double order or a missed refund. The hard work sits beneath the models.

- State checkpoints. Agents need to save state after each significant step. If checkout fails because a page timed out, the agent should reopen the cart, reapply the discount, and try another card, not start from scratch.

- Deterministic replay. Support teams need a replay of what the agent saw and did, with timestamps and event logs. That lets the company credit a user within minutes rather than weeks because a human can verify step by step. This connects to rising interest in AgentOps and self-healing observability.

- Cost accounting. Credits must map to discrete actions so customers can see where money went. Long black-box sessions breed mistrust. Itemized substeps turn billing into a ledger both sides can audit.

- Human interrupts. The system should invite the user to take over when it detects a fragile moment, such as a legal waiver or a payment mismatch. A quick nudge can save twenty minutes of futile retries.
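
The checkpoint, event log, and human interrupt ideas above can be sketched together in a few lines of Python. This is a simplified illustration, not any vendor's runtime: the `NeedsHuman` exception, the `run_with_checkpoints` function, and the shape of the state and log are hypothetical, and a timestamped log like this is only the raw material for a replay tool, not deterministic replay itself.

```python
from __future__ import annotations

import time
from typing import Callable


class NeedsHuman(Exception):
    """Raised by a step at a fragile moment, such as a payment mismatch."""


def run_with_checkpoints(
    steps: list[tuple[str, Callable[[dict], None]]],
    state: dict,
    log: list[dict],
) -> str:
    """Run steps in order, skipping any that were already checkpointed.

    Returns "finished" when every step is done, or "handoff" when a step
    asks for the user to take over. Calling again resumes from the first
    step that has not been checkpointed.
    """
    done = state.setdefault("done", [])
    for name, fn in steps:
        if name in done:
            continue  # checkpointed on an earlier attempt, do not redo
        try:
            fn(state)
        except NeedsHuman as exc:
            log.append({"ts": time.time(), "step": name,
                        "event": "handoff", "reason": str(exc)})
            return "handoff"
        done.append(name)  # checkpoint after each completed step
        log.append({"ts": time.time(), "step": name, "event": "completed"})
    return "finished"
```

In a real system the `state` dictionary would be persisted between attempts; here it stays in memory to keep the sketch short.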

Manus 1.5 gestures at this stack by promising faster runs, broader context, and a builder that can deploy and then self-test. That is the right direction, but the threshold for consumer trust is high. The companies that win in 2026 will not be those with the flashiest demo. They will be the ones whose agents quietly recover from chaos.

Autonomy plus purchase permissions changes commerce

When an agent can both decide and buy, funnels compress. Instead of a shopper seeing five ads, a comparison table, and two email follow-ups, an agent can set a goal, pull constraints, and check out with a single authorization. That shifts power to whoever sits closest to the decision and the wallet.

- Retailers can sell to agents directly. Product pages will evolve into agent pages with machine-readable specs, warranty terms, and return logic. A human will still browse, but the agent will parse, verify, and enforce rules like "do not buy extended warranties" or "only buy direct from the brand."

- Affiliates become choreographers. The most valuable affiliates will not be writers but agent designers. They will publish shopping recipes that route an agent through preference gathering, price checks, coupon tests, and loyalty points redemption. Attribution will track not just the last click but the agent’s recipe that produced the purchase.

- Guarantees replace slogans. If agents are to buy on our behalf, retailers will need product-level service-level agreements. Examples include guaranteed ship dates, false advertising penalties, and instant refunds if a spec is wrong. Those rules will be encoded so agents can enforce them at checkout.
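
As a rough picture of an agent enforcing personal rules against a machine-readable listing, consider the Python sketch below. The listing fields (`seller`, `brand`, `extended_warranty_in_cart`, `return_window_days`) and the rule names are invented for illustration; no retailer or standard is known to publish exactly this shape.

```python
from __future__ import annotations


def violations(listing: dict, rules: dict) -> list[str]:
    """Return the reasons a listing breaks the user's rules (empty if none)."""
    problems = []
    if rules.get("direct_from_brand_only") and listing["seller"] != listing["brand"]:
        problems.append("seller is not the brand")
    if rules.get("no_extended_warranty") and listing.get("extended_warranty_in_cart"):
        problems.append("extended warranty added to cart")
    if listing["return_window_days"] < rules.get("min_return_days", 0):
        problems.append("return window too short")
    return problems


# Hypothetical user rules and a hypothetical listing.
rules = {"direct_from_brand_only": True, "no_extended_warranty": True,
         "min_return_days": 30}
listing = {"brand": "Acme", "seller": "Acme", "return_window_days": 14,
           "extended_warranty_in_cart": True}

print(violations(listing, rules))
# ['extended warranty added to cart', 'return window too short']
```

Returning reasons rather than a bare yes or no gives the agent something to show the user, or to log against the merchant, when it declines to check out.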

There will be pushback. Some merchants will try to block nonhuman traffic. Others will tempt agents with bundles and upsells that waste credits or time. The counter is simple. The platforms will give users policy controls. If a site wastes resources, the agent will note it and avoid it next time. The new loyalty is to predictability.

Indie dev workflows will mutate first

Consumer agents are a headline, but indie developers will be the earliest to change how they work. Manus 1.5’s builder hints at a near future where agents bootstrap full apps, wire third-party APIs, attach a database, and prepare a production deploy. That compresses the idea-to-launch cycle from weeks to afternoons.

- A solo founder can ask for a subscription web app to track rental applications, with user login, a simple dashboard, and a weekly export. The agent scaffolds the codebase, deploys to a host, sets analytics, runs smoke tests, and asks for the founder’s Stripe keys.

- A contractor can turn a vague brief into a clickable plan. The agent converts a voice memo into a project board with tasks, estimates, and dependencies. It then offers to knock out the first two tickets while the client reviews the plan.

- A small studio can give an agent a backlog and a budget. The agent executes all low-risk chores, opens pull requests with tests, and flags anything that requires taste or careful judgment. Credits become a predictable line item.

The caveat is quality control. Indie teams will need conventions for agent code. Examples include a fixed directory structure, commit message rules, and a checklist for security scanners. The agent can follow your rules, but you must write the rules.

Who scales globally will be decided by policy

Export controls and safety gating will sort the global map. Three levers matter.

- Capital controls. Washington’s review of American investment in Chinese artificial intelligence ventures, including the Benchmark round for Manus, signals that ownership and influence are as important as model weights. If you expect to raise from U.S. firms or list on a U.S. exchange, design your corporate structure early so it can pass a future diligence test. Cayman shells and model-wrapper arguments may not hold up forever.

- Compute controls. Access to advanced accelerators is tightening. Companies that depend on frontier training or heavy inference should model a plan B on older chips and region-specific rentals. Reliability at lower compute tiers is not just a cost win. It is resilience against policy shocks.

- Safety gating. Platforms are racing to standardize permissions for purchases, logins, and real-world actions. The winners will ship granular controls such as per-site allowlists, spend caps, and signed prompts that make consent auditable. If your agent needs to buy, it should request a budget, explain the plan, and return receipts by default.
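
The safety gating controls named above (per-site allowlists, spend caps, signed consent) can be sketched with Python's standard library. Everything specific here is an assumption: the policy fields, the consent record, and the use of an HMAC with a shared secret. A production system might prefer asymmetric signatures so that a third party can verify consent without holding the key.

```python
import hashlib
import hmac
import json


def may_purchase(site: str, amount_cents: int, spent_cents: int, policy: dict) -> bool:
    """Allow a purchase only on an allowlisted site and under the spend cap."""
    return (
        site in policy["allowlist"]
        and spent_cents + amount_cents <= policy["spend_cap_cents"]
    )


def sign_consent(secret: bytes, consent: dict) -> str:
    """Sign a consent record so later tampering is detectable."""
    # Canonical JSON so the same record always yields the same signature.
    payload = json.dumps(consent, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_consent(secret: bytes, consent: dict, signature: str) -> bool:
    """Check a consent record against its signature in constant time."""
    return hmac.compare_digest(sign_consent(secret, consent), signature)
```

A receipt that stores the consent record next to its signature lets an auditor confirm that the amount the user approved is the amount that was charged.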

The open question is alignment between the big three approaches. OpenAI centralizes in a single agent surface with strong prompts and auditability. Amazon distributes through a developer kit that gives partners hands-on control. Microsoft embeds at the operating system layer so actions feel native. The most likely outcome in 2026 is a patchwork. Consumers will treat agents like payment methods, selecting the one their employer supports or the one their favorite retailer optimizes for, reinforced by the rise of the new agent app stores.

What to do now

If you build consumer products:

- Expose machine-readable product specs, return windows, and warranty rules today. Keep it simple. A well-structured page that an agent can parse is revenue you would otherwise lose to a clumsy scraper.

- Add an agent checkout path. Allow a session where an authorized agent can prefill data, skip dark patterns, and provide a signed manifest of its steps. Reward that path with fewer captchas and an immediate receipt API.

If you build agents:

- Treat credits as a ledger, not a meter. Itemize steps, checkpoint state, and publish a clear refund policy that maps to substeps. Customers will pay more for a product they can audit.

- Put permission prompts where the risk is, not everywhere. Too many prompts cause blind consent. Prompt on spend, identity, and irreversible steps. Offer a live handoff button on fragile pages.

- Build replay tools for your support team before growth. You will thank yourself in your first viral month.

If you are a policymaker or investor:

- Ask for purchase-path audit trails and per-action consent logs in diligence. Verify that a company can answer a refund dispute with a screenshot-level replay.

- Clarify what counts as a China-based artificial intelligence business in cross-border rules. If wrappers and shell entities are loopholes, close or codify them now. Ambiguity hurts honest actors and rewards arbitrage.

The bottom line

Autonomy plus the right to purchase will change both shopping and building. In 2026, the consumer will not compare ten blue links. Their agent will filter, plan, and buy within a budget and a set of personal rules. Indie developers will not spend two weeks gluing boilerplate. Their agent will ship a first draft and wait for edits. The companies that scale are already making the boring parts world-class: credit ledgers that match reality, permission prompts that respect the moment, and replay tools that turn disputes into quick credits. The geopolitics are real, and the reviews of capital flows will continue, but the vector is set. Agents are becoming retail software. The next reset is not who can click the fastest. It is who can earn trust when real money moves.