AI That Remembers You: Memory Becomes the New UX Edge

In 2025, cross-session memory moved from demo to default. Here is how ChatGPT, Gemini, and Claude rolled it out, what the controls mean for privacy, and how to build it right.

Breaking: assistants now remember you across sessions

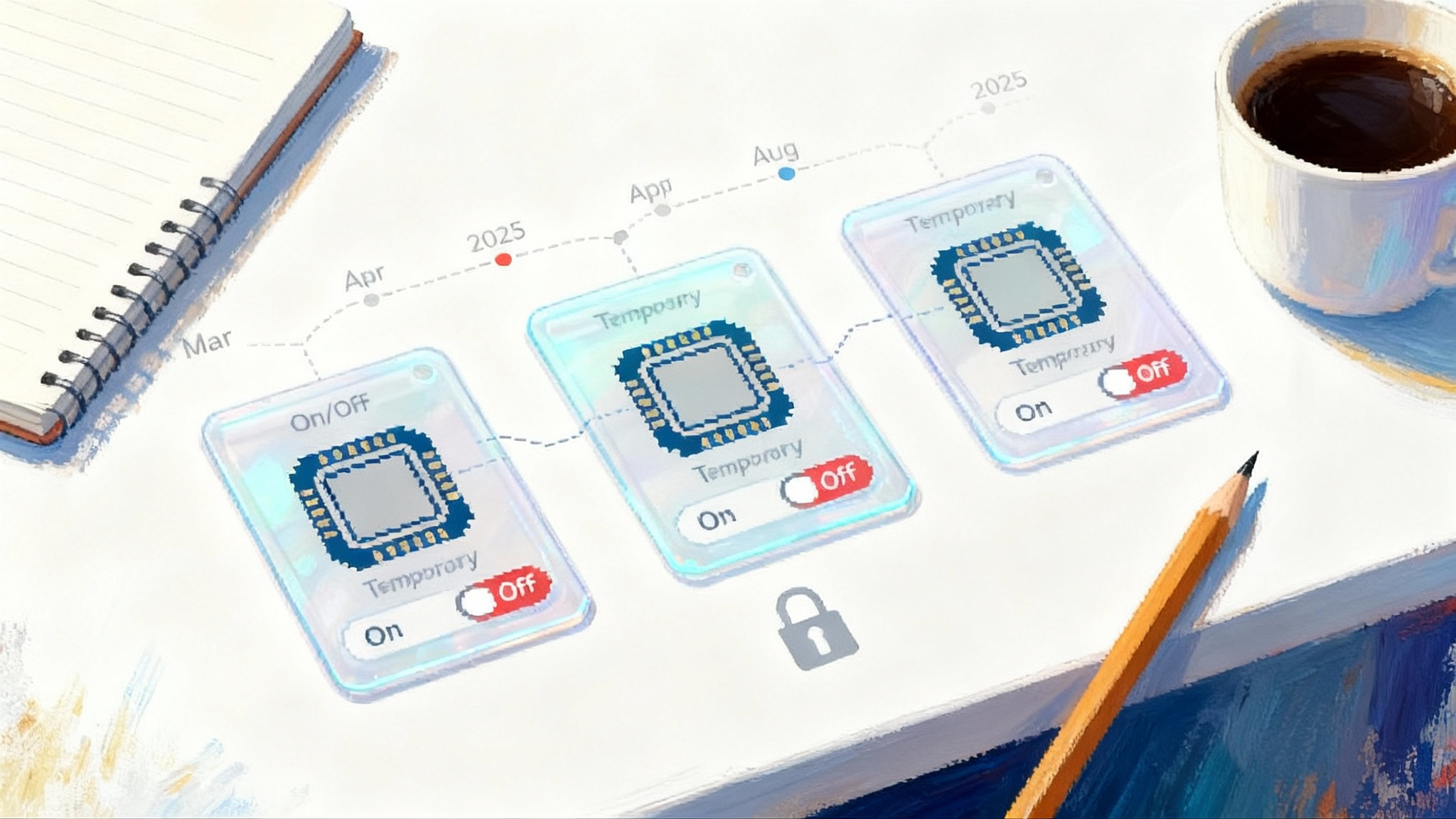

A year ago, most chatbots felt like friendly goldfish. Close a tab, lose the plot. In 2025 that changed. In April and June 2025, OpenAI expanded ChatGPT’s memory into a dual system: it keeps explicit saved memories and can also reference signals from your prior conversations to personalize new replies, with user controls to turn each part on or off. The company detailed the rollouts and the toggles that govern them on its product page, including how to turn memory off entirely or use temporary chats that neither use nor update memory. You can trace the specifics in OpenAI’s memory announcements and updates.

Google moved in parallel. In March 2025 it introduced Gemini personalization that can draw on your Google apps, starting with Search history, to tailor responses. In August 2025 Google added the ability for Gemini to reference your past chats by default, a new Personal Context setting to turn it off, a Temporary Chat mode for one-offs, and a planned rename of Gemini Apps Activity to Keep Activity, effective September 2. The changes started on Gemini 2.5 Pro in select countries, with broader availability to follow. For deeper context on Workspace usage, see how Google positions proactive work in Gemini Deep Research in Workspace.

Anthropic rounded out the year by making memory a first-class feature in Claude. On September 11, 2025, memory began rolling out to Team and Enterprise plans with incognito chats for everyone. On October 23, 2025, Anthropic expanded memory to Pro and Max subscribers, with project-scoped memory, a visible memory summary you can edit, and import or export options. Anthropic’s framing is straightforward: memory is optional, scoped to work by default, and designed to be audited and erased. See the company’s details in Anthropic memory for Claude.

The bottom line is simple. Cross-session memory is no longer a lab demo or a buried toggle. It is the new baseline for assistant experiences and the next competitive front for user experience.

How each vendor’s memory works today

This is not one feature with three logos. The mechanics differ in ways that matter for builders and teams.

OpenAI ChatGPT

- Two sources of continuity: user-designated saved memories, plus a system-driven reference to your past chat history.

- Granular controls: you can disable either source, use a temporary chat that does not use or update memory, review what ChatGPT remembers, and ask it to forget items on command.

- Availability in 2025 included Plus and Pro, with a lighter version for Free users over the summer. Rollout timing and defaults varied by region, particularly across the European Economic Area.

Google Gemini

- Personal Context allows Gemini to draw from your past chats to tailor replies. It started enabled by default in August 2025 on Gemini 2.5 Pro in select countries, with a control to turn it off.

- Temporary Chat gives you private one-offs that do not personalize future responses and are retained for a limited period to complete the conversation and process optional feedback.

- Personalization can also connect to Google apps, beginning with Search history in March 2025. It is opt-in and managed through additional privacy dashboards.

Anthropic Claude

- Memory is opt-in. It launched first for Team and Enterprise users in September 2025, then expanded to Pro and Max in October.

- Project-scoped memory keeps work streams separate, which reduces accidental bleed between personal and professional contexts.

- A visible, editable memory summary lets users inspect and prune what Claude retains. Incognito chats are available to skip memory entirely.

Three approaches, one destination. Users can now expect assistants to resume where they left off, to remember preferences like tone and format, to carry requirements across documents, and to surface helpful reminders before being asked.

Privacy and control: who defaults to what

Your comfort with long-lived memory depends on defaults, visibility, and escape hatches.

Defaults

- OpenAI uses two tracks, saved memories and chat history reference, which you manage separately. If you turn either off, it stays off. Temporary Chat runs a single conversation without memory.

- Google’s August 2025 update enabled Personal Context by default in eligible regions and models, with a clear toggle to shut it off.

- Anthropic defaults to off. You must enable memory in settings, which sets a privacy-preserving norm by design.

Visibility

- OpenAI lets you ask what ChatGPT remembers, and you can delete individual memories or clear them all in settings.

- Google surfaces Personal Context controls and lets you delete past chats or run Temporary Chats that are not used for personalization and have a short retention window.

- Anthropic shows you a memory summary and allows edits, exports, and per-project scoping.

Administrative control

- Enterprise controls matter as assistants move into regulated teams. OpenAI does not train on Team and Enterprise data by default and supports organization-wide controls.

- Google’s Keep Activity setting governs whether certain uploads may be sampled for service improvement. Organizations can use policies to disable features.

- Anthropic lets enterprise administrators disable memory for an organization and rely on existing data retention settings, while keeping memory project-scoped.

If you run a team, read those settings screens closely. The meaningful privacy line is not a policy headline; it is the default state of toggles, the speed of erasure, and the clarity of the activity logs your users will actually touch.

Why memory changes the product surface

Think of memory as a personal knowledge base that the assistant builds with you. Without it, every chat is a cold start. With it, product surfaces shift in three ways:

- Up-front setup disappears. Instead of asking users to configure tone, format, and goals on a profile page, the assistant infers and persists them in use. A sales manager who always asks for bullet-first summaries and a risk register does not need to restate that request. The assistant learns and applies it.

- The assistant turns proactive. If you asked Gemini last month to draft a weekly status in a three-part structure, it can offer the same template on Thursday morning. If you told ChatGPT your child is allergic to peanuts, it can flag that in a recipe search. If you keep a design glossary in a Claude project, it can apply that vocabulary when you open a new Figma file.

- Reliability requires new guardrails. Stored memories can be recalled incorrectly, just as generated content can be hallucinated. False recall feels worse than a wrong answer because it violates personal trust. UX must make memory legible, revocable, and auditable in the same flow.

What comes next: portable memory spaces and standards

We are headed toward a world where your assistant memory travels with you, just like a contacts list or a calendar does today. Here is what that future likely includes in the next 12 to 24 months:

- Portable memory spaces. Users export the memory summary and key facts from one assistant, then import them to another. Anthropic already exposes import and export for project memory. Expect OpenAI and Google to offer simple flows that respect privacy choices and omit sensitive fields.

- A standardized schema. Expect an open, minimal memory schema so assistants can exchange preferences, profile facts, constraints, and task-specific caches. Each entry would carry its source, timestamp, scope, and a sensitivity label. For the cross-stack picture, see how agent interop and MCP standards enable shared primitives.

- Redaction and time-to-live by default. Memory entries should carry retention policies. A travel booking confirmation is useful for weeks, not years. Builders will add field-level redaction for numbers and names, and automatic expiry by default.

- Memory evals. We will treat memory like we treat search quality. Teams will measure false recall, missed recall, and inappropriate recall, alongside task success and user corrections. Benchmarks will include adversarial cases, such as attempts to coax private details from memory.

- Scope and conflict resolution. Users will hold multiple memory scopes, for example Work, Personal, and Project X. When scopes conflict, the assistant must resolve by hierarchy and recency, not by the loudest hint in the current prompt.
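The schema, retention, and conflict-resolution ideas above fit in one small structure. This is a minimal sketch, not an existing standard: `MemoryEntry`, `resolve`, the field names, the JSON shape, and the `Personal < Work < Project` ranking are all hypothetical choices made to illustrate an entry that carries source, timestamp, scope, sensitivity, and a time-to-live, plus a resolver that ranks by hierarchy and then recency.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical hierarchy: a narrower scope outranks a broader one.
SCOPE_RANK = {"Personal": 0, "Work": 1, "Project": 2}


@dataclass(frozen=True)
class MemoryEntry:
    key: str             # e.g. "format", "tone", "allergy"
    value: str
    kind: str            # "preference", "profile_fact", "constraint", "task_cache"
    source: str          # where it came from, e.g. "user_confirmed" or "inferred"
    timestamp: datetime  # when it was recorded
    scope: str           # "Personal", "Work", or "Project:<name>"
    sensitivity: str     # e.g. "low", "medium", "high"
    ttl: timedelta       # retention policy carried with the entry

    def expired(self, now):
        return now >= self.timestamp + self.ttl

    def to_json(self):
        """Serialize for export; datetimes and durations become portable values."""
        d = asdict(self)
        d["timestamp"] = self.timestamp.isoformat()
        d["ttl_seconds"] = int(self.ttl.total_seconds())
        del d["ttl"]
        return json.dumps(d, sort_keys=True)


def scope_rank(scope):
    return SCOPE_RANK[scope.split(":")[0]]


def resolve(entries, key, active_scopes, now):
    """Pick one value per key by scope hierarchy, then recency; skip expired entries."""
    candidates = [e for e in entries
                  if e.key == key and e.scope in active_scopes and not e.expired(now)]
    if not candidates:
        return None
    return max(candidates, key=lambda e: (scope_rank(e.scope), e.timestamp))


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    work = MemoryEntry("format", "bullets", "preference", "user_confirmed",
                       now - timedelta(days=2), "Work", "low", timedelta(days=365))
    project = MemoryEntry("format", "table", "preference", "inferred",
                          now - timedelta(days=30), "Project:Q4", "low", timedelta(days=90))
    print(resolve([work, project], "format", {"Work", "Project:Q4"}, now).value)  # table
```

Because `resolve` only looks at stored scopes and timestamps, nothing in the current prompt can override the ranking; a prompt hint would have to go through the normal write path first.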

Builder’s playbook: ship proactive, low-risk memory

You can ship memory in an agent today without creating privacy debt. Here is a concrete playbook.

Consent and transparency

- Make it progressive. Ask for memory permission at the first moment it creates obvious value, for example when the user repeats a preference in a new chat.

- Show and tell. When memory updates, display a small banner that says what changed and provide a one-click undo. Make it routine, not rare.

- Offer three modes everywhere: On, Off, and Temporary. Temporary should be prominent, not hidden in a submenu.

- Add a “What do you remember about me?” command with a structured answer grouped by scope. Offer redaction buttons inline.
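The consent rules above can be sketched as a small in-memory store. This is a minimal sketch under stated assumptions: `MemoryMode`, `MemoryStore`, `should_offer_memory`, and the two-chat threshold are hypothetical names and values, not any vendor’s API. Off and Temporary behave identically inside the store; the difference is that Off is a standing setting while Temporary is meant to apply to one conversation.

```python
from collections import defaultdict
from enum import Enum


class MemoryMode(Enum):
    ON = "on"                # read from and write to long-term memory
    OFF = "off"              # standing setting: never read or write
    TEMPORARY = "temporary"  # one conversation: neither reads nor updates memory


def should_offer_memory(seen, chat_id, key, value, threshold=2):
    """Progressive consent: offer memory once a preference recurs across chats.

    `seen` maps (key, value) -> set of chat ids where the user stated it.
    """
    chats = seen.setdefault((key, value), set())
    chats.add(chat_id)
    return len(chats) >= threshold


class MemoryStore:
    def __init__(self):
        self.items = {}        # (scope, key) -> value
        self.banners = []      # user-visible "what changed" notices
        self._undo_stack = []  # (scope, key, previous value or None)

    def remember(self, mode, scope, key, value):
        if mode is not MemoryMode.ON:
            return False  # Off and Temporary both skip writes
        previous = self.items.get((scope, key))
        self.items[(scope, key)] = value
        self._undo_stack.append((scope, key, previous))
        self.banners.append(f"Remembered {key} = {value!r} in {scope}. Undo?")
        return True

    def undo_last(self):
        """One-click undo for the most recent memory update."""
        if not self._undo_stack:
            return False
        scope, key, previous = self._undo_stack.pop()
        if previous is None:
            self.items.pop((scope, key), None)
        else:
            self.items[(scope, key)] = previous
        return True

    def recall(self, mode, scope, key):
        if mode is not MemoryMode.ON:
            return None  # Off and Temporary both skip reads
        return self.items.get((scope, key))

    def forget(self, scope, key):
        return self.items.pop((scope, key), None) is not None

    def what_do_you_remember(self):
        """Structured answer grouped by scope, ready for inline redaction buttons."""
        grouped = defaultdict(dict)
        for (scope, key), value in sorted(self.items.items()):
            grouped[scope][key] = value
        return dict(grouped)
```

In a UI, each entry returned by `what_do_you_remember()` would render with a redaction button wired to `forget()`, and each banner would carry an undo link wired to `undo_last()`.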

PII handling and default safety

- Define a blocklist of sensitive categories that your assistant will not retain by default, for example financial numbers, health diagnoses, government identifiers, detailed data about children, and home addresses.

- Run a redaction pipeline that detects and masks sensitive patterns before they ever enter long-term storage. Keep raw values in a reversible vault only for users who opt in; otherwise, drop them.

- Attach time-to-live to every memory row. Default to 90 days for task context, 365 days for stable preferences, 7 days for ephemeral one-offs. Let users change it per item.

- Maintain an audit log that records who created, read, updated, exported, or deleted each memory item. Expose it to users and administrators.
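The PII and retention rules above can be sketched together: a regex redaction pass before storage, a category blocklist, the playbook’s default TTLs (90, 365, and 7 days), and an append-only audit log. The patterns are illustrative and far from complete, the category label is assumed to come from a hypothetical upstream classifier, and this sketch takes the default path of dropping raw values rather than keeping an opt-in vault. `MemoryService`, `redact`, and the category names are hypothetical.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Illustrative patterns only; real PII detection needs far broader coverage.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "NUMBER": re.compile(r"\b\d(?:[ -]?\d){6,}\b"),  # 7+ digits: cards, accounts, phones
}

# Categories never retained by default (assumed to be labeled upstream).
BLOCKED_CATEGORIES = {"financial", "health", "government_id", "child_data", "home_address"}

# Default time-to-live per memory kind, from the playbook.
DEFAULT_TTL = {
    "task_context": timedelta(days=90),
    "preference": timedelta(days=365),
    "ephemeral": timedelta(days=7),
}


def redact(text):
    """Mask sensitive patterns before anything reaches long-term storage."""
    for label, pattern in REDACTION_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


@dataclass
class MemoryRow:
    id: int
    text: str
    kind: str
    created: datetime
    ttl: timedelta

    def expired(self, now):
        return now >= self.created + self.ttl


@dataclass
class MemoryService:
    rows: dict = field(default_factory=dict)
    audit: list = field(default_factory=list)  # (timestamp, actor, action, row id)
    next_id: int = 1

    def _log(self, actor, action, row_id, now):
        self.audit.append((now.isoformat(), actor, action, row_id))

    def create(self, actor, text, kind, category, now, ttl=None):
        if category in BLOCKED_CATEGORIES:
            return None  # drop the raw value; nothing is stored
        row = MemoryRow(self.next_id, redact(text), kind, now,
                        ttl if ttl is not None else DEFAULT_TTL[kind])
        self.rows[row.id] = row
        self.next_id += 1
        self._log(actor, "create", row.id, now)
        return row.id

    def read(self, actor, row_id, now):
        row = self.rows.get(row_id)
        if row is None or row.expired(now):
            return None
        self._log(actor, "read", row_id, now)
        return row.text

    def set_ttl(self, actor, row_id, ttl, now):
        """Per-item TTL override requested by the user."""
        self.rows[row_id].ttl = ttl
        self._log(actor, "update", row_id, now)

    def delete(self, actor, row_id, now):
        if self.rows.pop(row_id, None) is None:
            return False
        self._log(actor, "delete", row_id, now)
        return True

    def purge_expired(self, now):
        for row_id in [r.id for r in self.rows.values() if r.expired(now)]:
            self.delete("system:ttl", row_id, now)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    svc = MemoryService()
    svc.create("user", "Text me at 555-123-4567", "ephemeral", "contact", now)
    print(svc.rows[1].text)  # Text me at [NUMBER]
```

Expiry is enforced twice on purpose: `read` refuses expired rows immediately, and `purge_expired` (run on a schedule) removes them and records the deletion in the audit log.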

Retrieval and application strategies

- Separate storage from use. Keep a lightweight key-value memory for stable facts and preferences. Maintain a vector index of episodic summaries for long projects. Query both, then resolve conflicts deterministically.

- Summarize rather than hoard. Collapse long threads into compact, scoped summaries. Store the summary, not the full transcript, unless compliance requires it.

- Gate memory use by task. A code review agent does not need your dietary restrictions. Require each tool or skill to declare which memory fields it can request.

- Rank by freshness and confidence. Blend recency, authoritativeness, and explicitness. A user-confirmed memory beats an inferred hint. A recent summary beats a stale one.

- Make application legible. When the agent uses memory, show a small chip like “Using: tone=bullet first, project=Q4 rollout.” Users trust what they can see.
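The retrieval strategies above can be sketched as one selection step: gate candidates by the fields a skill declares, rank the rest by explicitness, authority, and recency, and render the winners as a chip. The weights, the 30-day half-life, the `SKILL_FIELDS` table, and treating the key-value store as more authoritative than episodic summaries are all assumptions for illustration; `Candidate`, `select`, and `chip` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Candidate:
    name: str         # memory field, e.g. "tone", "project", "diet"
    value: str
    confirmed: bool   # user-confirmed (explicit) vs. inferred
    updated: datetime
    source: str       # "kv" (stable facts) or "episodic" (vector-index summary)


# Hypothetical per-skill declarations of the memory fields each may request.
SKILL_FIELDS = {
    "code_review": {"tone", "project"},
    "recipe_search": {"diet", "allergies"},
}

# Illustrative weights: explicitness dominates, then authority, then recency.
EXPLICIT_WEIGHT = 2.0
AUTHORITY = {"kv": 0.5, "episodic": 0.0}
HALF_LIFE_DAYS = 30.0


def score(c, now):
    age_days = (now - c.updated).total_seconds() / 86400
    recency = 0.5 ** (age_days / HALF_LIFE_DAYS)  # 1.0 when fresh, halves every 30 days
    return EXPLICIT_WEIGHT * c.confirmed + AUTHORITY[c.source] + recency


def select(skill, candidates, now):
    """Gate by the skill's declared fields, then keep the best candidate per field."""
    allowed = SKILL_FIELDS.get(skill, set())
    best = {}
    for c in candidates:
        if c.name not in allowed:
            continue
        rank = (score(c, now), c.updated, c.value)  # deterministic tie-breaks
        if c.name not in best or rank > best[c.name][0]:
            best[c.name] = (rank, c)
    return {name: c for name, (_, c) in best.items()}


def chip(selected):
    """Legible summary of which memories are in use."""
    parts = [f"{name}={c.value}" for name, c in sorted(selected.items())]
    return "Using: " + ", ".join(parts) if parts else ""


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    memories = [
        Candidate("tone", "bullet first", True, now - timedelta(days=60), "kv"),
        Candidate("project", "Q4 rollout", False, now - timedelta(days=3), "episodic"),
        Candidate("diet", "vegetarian", True, now, "kv"),
    ]
    print(chip(select("code_review", memories, now)))  # Using: project=Q4 rollout, tone=bullet first
```

With these weights a confirmed memory always outscores an inferred one (at least 2.0 versus at most 1.5), which encodes “a user-confirmed memory beats an inferred hint”; recency only decides between candidates of the same kind.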

KPIs and red-team tests

- Coverage: percentage of sessions where memory was available and eligible.

- Correct utilization: percentage of times the assistant applied the right memory field in the right context.

- False recall rate: instances where the assistant cited a fact the user denies or that conflicts with the audit log.

- User corrections rate: times users click “that is wrong” on a memory chip or edit the memory summary.

- Forget-request latency: time to delete or redact an item, measured end to end and verified with a read-after-write check.

- Safety drills: adversarial prompts that try to extract private items, cross-project leakage tests, and region-scoped policy checks. For broader defense-in-depth, see the agent security stack.
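The KPIs above can be computed from per-session logs. In this sketch, `SessionLog` and its fields are hypothetical, using memory applications as the denominator for correct utilization and false recall is an assumption, and corrections are reported per session. `measure_forget` takes the store’s own delete and read functions, so it can wrap any backend that exposes them, and it fails loudly if the read-after-write check still finds the item.

```python
import time
from dataclasses import dataclass
from statistics import median


@dataclass
class SessionLog:
    memory_eligible: bool      # memory was available and allowed in this session
    applications: int          # times the assistant applied a memory field
    correct_applications: int  # of those, judged the right field in the right context
    false_recalls: int         # cited facts the user denied or the audit log contradicts
    corrections: int           # "that is wrong" clicks plus memory-summary edits


def _ratio(num, den):
    return num / den if den else 0.0


def memory_kpis(sessions, forget_latencies_s):
    applied = sum(s.applications for s in sessions)
    return {
        "coverage": _ratio(sum(s.memory_eligible for s in sessions), len(sessions)),
        "correct_utilization": _ratio(sum(s.correct_applications for s in sessions), applied),
        "false_recall_rate": _ratio(sum(s.false_recalls for s in sessions), applied),
        "corrections_per_session": _ratio(sum(s.corrections for s in sessions), len(sessions)),
        "forget_latency_p50_s": median(forget_latencies_s) if forget_latencies_s else None,
    }


def measure_forget(delete, read, key):
    """End-to-end forget latency, verified with a read-after-write check."""
    start = time.monotonic()
    delete(key)
    if read(key) is not None:
        raise RuntimeError(f"forget failed: {key!r} is still readable")
    return time.monotonic() - start
```

For a dictionary-backed store, `measure_forget(lambda k: store.pop(k, None), store.get, "diet")` is enough; against a real service, `read` should hit the same path the assistant uses at answer time, including caches.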

Team and enterprise controls

- Provide tenant-level toggles for memory and per-scope defaults. Allow administrators to disable memory globally, or restrict it to projects only.

- Support export for compliance and an automatic purge that erases memory when an account or project is terminated.

- Respect region policies. Align defaults and data retention with local requirements, especially in the European Economic Area, the United Kingdom, and Switzerland.
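The tenant controls above reduce to a precedence order: tenant rules first, then the user’s explicit choice, then per-scope and regional defaults. This is a hypothetical sketch: `TenantPolicy`, `effective_mode`, `purge`, and the `(tenant_id, scope, key)` storage layout are illustrative, and defaulting memory to Off in the EEA, the UK, and Switzerland is an example policy choice, not a statement of what those jurisdictions require.

```python
from dataclasses import dataclass, field
from enum import Enum


class MemoryMode(Enum):
    ON = "on"
    OFF = "off"
    TEMPORARY = "temporary"


# Hypothetical: regions where this sketch defaults memory to OFF until the user opts in.
OPT_IN_REGIONS = {"EEA", "UK", "CH"}


@dataclass
class TenantPolicy:
    memory_enabled: bool = True    # tenant-wide toggle
    projects_only: bool = False    # restrict memory to "Project:<name>" scopes
    scope_defaults: dict = field(default_factory=dict)  # base scope -> MemoryMode
    region: str = "US"


def effective_mode(policy, scope, user_choice=None):
    """Tenant rules first, then the user's explicit choice, then defaults."""
    if not policy.memory_enabled:
        return MemoryMode.OFF
    if policy.projects_only and not scope.startswith("Project:"):
        return MemoryMode.OFF
    if user_choice is not None:
        return user_choice
    default = policy.scope_defaults.get(scope.split(":")[0])
    if default is not None:
        return default
    return MemoryMode.OFF if policy.region in OPT_IN_REGIONS else MemoryMode.ON


def purge(memory, tenant_id, project=None):
    """Erase a tenant's memory, or one project's, on termination; returns rows removed.

    `memory` maps (tenant_id, scope, key) -> value.
    """
    doomed = [k for k in memory
              if k[0] == tenant_id and (project is None or k[1] == f"Project:{project}")]
    for k in doomed:
        del memory[k]
    return len(doomed)
```

Putting the tenant checks before the user’s choice means an administrator’s global disable or projects-only restriction cannot be bypassed from a personal settings screen, while users keep full control inside whatever the tenant allows.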

Vendor comparison at a glance

- OpenAI ChatGPT: Two-track memory with separate switches, plus Temporary Chat. Explicit visibility and per-item forget. Coverage spans Free, Plus, and Pro, with regional nuances. The product page spells out that you can disable saved memories, chat history reference, or both, at any time. Users can also ask, in natural language, what the model remembers.

- Google Gemini: Personal Context and Temporary Chats, on by default in eligible regions and models, with a clear toggle to turn it off. Personalization can connect to Google apps like Search history, with settings that control whether uploads are sampled to improve services. Google says Temporary Chats are retained for up to 72 hours so it can respond and process any feedback.

- Anthropic Claude: Opt-in memory, incognito chats, and project-scoped compartments to prevent bleed between work and personal. A living, editable memory summary and import or export tools. Expansion from Team and Enterprise in September 2025 to Pro and Max in October 2025 shows a measured, work-first rollout.

The strategic shift for product teams

With memory, assistants stop being single-use tools and become ongoing collaborators. That reframes monetization and retention. The winner is no longer the model with the flashiest demo; it is the assistant that accumulates the right memory with the least friction and the clearest controls.

For startups, this is a wedge. Offer safer defaults and better memory legibility than the incumbents, and you can pry loose users who want continuity without surprises. For enterprises, memory is the feature that turns pilots into platforms, provided your governance and export story is as strong as your prompt quality.

The conclusion

The breakthrough is not that assistants can remember. It is that memory is becoming predictable, portable, and permissioned. OpenAI’s dual-track approach, Google’s Personal Context with Temporary Chats, and Anthropic’s opt-in, project-scoped design point to the same end state. Users get continuity without lock-in, and builders get a clean set of primitives to create proactive products that respect boundaries.

Ship memory like you would ship calendars or contacts. Make the schema small, the controls visible, the logs honest, and the delete button fast. The assistants that feel indispensable in 2026 will be the ones that remember just enough, for just long enough, and only when invited.