Inbox-native Gemini Deep Research makes Workspace an Agent OS

Google just moved AI into the heart of work. With Gemini Deep Research pulling trusted context from Gmail, Drive, and Chat, briefs become living documents, actions hit calendars, and governance stays audit-ready. Here is why that shift matters and how to pilot it next week.

The week Workspace became an Agent OS

Google just moved the center of gravity for work. With this week’s rollout, Gemini Deep Research can draw context from Gmail, Drive, and Chat, then synthesize that information alongside the public web to produce briefings and plans that feel like they were written by a teammate who reads everything and forgets nothing. The pivotal detail is not a bigger model. It is where the model runs and what it is allowed to see. Gemini now comes to your data rather than asking you to export your data to it. That makes Workspace feel less like a bundle of apps and more like an operating system for agents. For a parallel trend across productivity suites, see how workspaces as the AI runtime are redefining daily tools.

If you want the short version: agents that live in your inbox and drives, and that respect your permissions, are the real breakthrough. They replace the awkward dance of downloading files or pasting threads into a chat window with a simple instruction that says what you want done, not where to look. Google’s announcement spells it out clearly: Gemini Deep Research can now pull from your Gmail, Drive, and Chat when producing a report, and you can choose the sources in the flow so the model sees only what it should see. See Google’s Deep Research in Workspace for details and examples.

From bring your data to the model to bring the model to your data

For the last two years, the default workflow for serious research with an artificial intelligence assistant looked like this: collect links and files, upload or paste, then hope the assistant remembers what matters. That is like asking a chef to cook dinner by carrying ingredients to a borrowed kitchen down the street. It works, but it is slow, brittle, and easy to mess up.

Bringing the model to your data flips the script. The agent comes into your kitchen. It opens the pantry you already stock, the fridge you already maintain, and the recipe box that your team keeps in a shared folder. In practice, it means Deep Research can:

- Traverse long email threads to extract decisions, open questions, and blockers.

- Read spreadsheets and docs in Drive so it can quote actual numbers and page references.

- Pull relevant context from project chats and handoffs so reports are grounded in how the team talks about the work.

The result is not just a better summary. It is a new unit of work. For orchestration patterns that turn outputs into actions, compare with OpenAI AgentKit for orchestration.

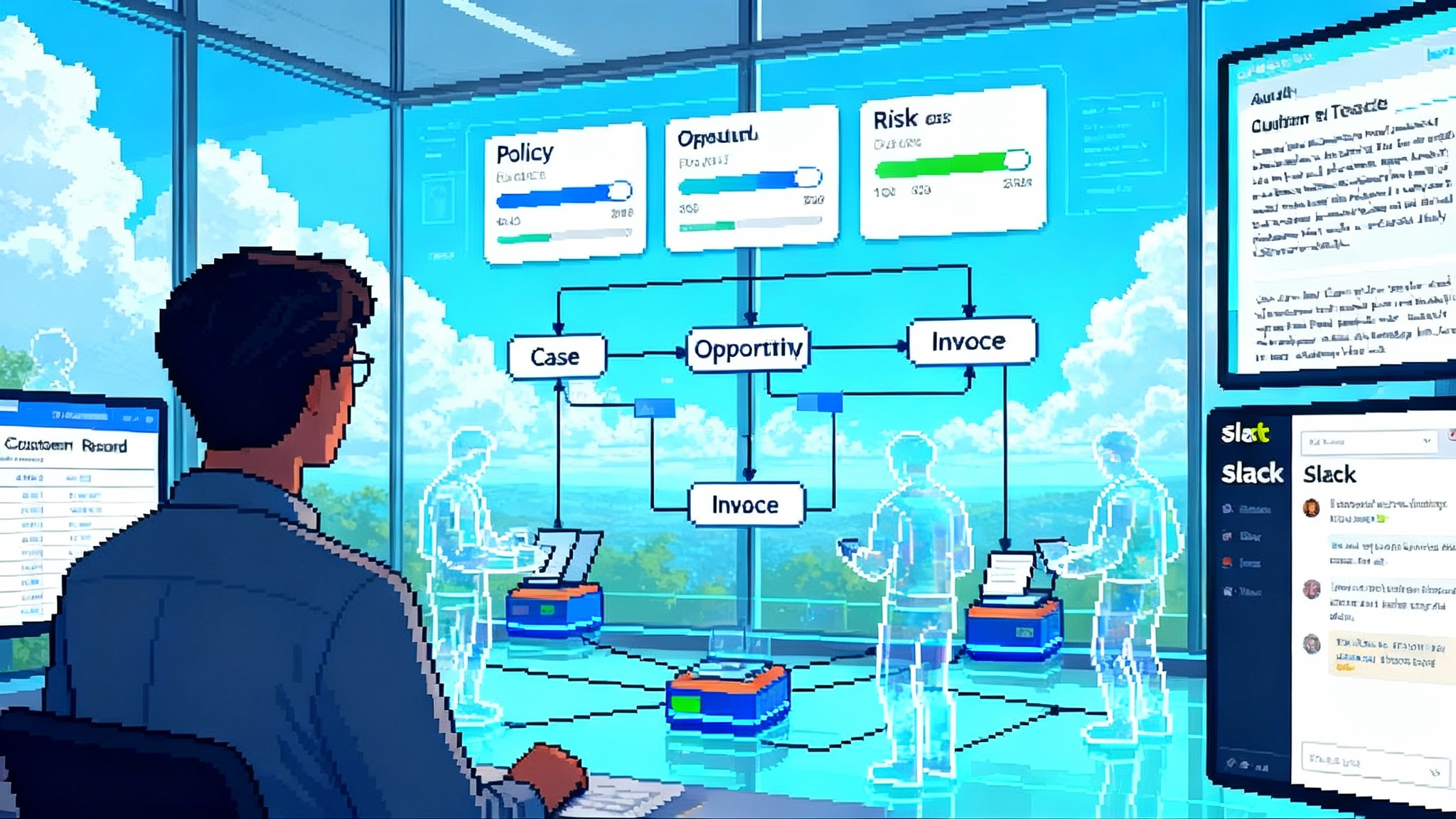

Living briefs, calendar-linked actions, cross-team synthesis

Think about briefs that never go stale. A living brief starts as a question, like: “What did we promise the customer about the Q4 pilot, and what are the risks to hitting that date?” Deep Research canvasses the last six months of emails with the customer, the scope doc in Drive, and the sprint conversations in Chat. It produces a brief with sources, highlights, and next steps. When new messages land or the scope doc changes, you ask the same agent to refresh the brief. Because it already knows where to look, the update takes minutes, not hours.

Calendar-linked actions are the natural next step. A brief that ends with “Set a stakeholder alignment review before code freeze” should be able to schedule the meeting, attach the brief, and assign owners. Workspace has had programmatic hooks for scheduling, document creation, and task assignment for years. The novelty is orchestration. With Deep Research pulling context and Gemini driving actions, your plan does not sit in a doc. It shows up on calendars, in team rooms, and inside the files people already use.

Cross-team synthesis is the sleeper hit. Sales keeps pricing assumptions in a spreadsheet. Support has a doc full of customer complaints. Product discusses risks in a Chat channel. Before, a human had to shuttle between these islands and build a single view. Now the agent can read across those islands while respecting the same access gates a human would face. You ask for the three largest drivers of churn risk next quarter, and it triangulates from all three sources, citing the cells, messages, and sections that matter.

What changes under the hood

Deep Research is not a magic reading lamp. It is a planner and an operator. Given a goal, it identifies sources, devises a multi-step plan, reads selectively, writes drafts, checks gaps, and revises. What is new is the ability to plan across private sources that live inside a company’s Workspace, not just across the public web. The planning loop is similar to a skilled analyst’s routine. Scan the inbox for decisions and commitments. Skim the shared drive for canonical numbers. Check the team chat for recent changes. Cross-check against public data to avoid circular reasoning. Then write.
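To make that routine concrete, here is a minimal sketch of the plan, read, and check-gaps loop. It is an illustration under stated assumptions, not Google's implementation: the names `run_research`, `READ_ORDER`, `Finding`, and `Brief` are hypothetical, and each "reader" is a stand-in function you would supply.

```python
from dataclasses import dataclass, field

@dataclass
class Finding:
    source: str  # "gmail", "drive", "chat", or "web"
    note: str

@dataclass
class Brief:
    goal: str
    findings: list[Finding] = field(default_factory=list)
    gaps: list[str] = field(default_factory=list)

# Hypothetical reading order that mirrors the analyst routine in the text:
# inbox decisions, canonical numbers, recent chat changes, then the public web.
READ_ORDER = ["gmail", "drive", "chat", "web"]

def run_research(goal, readers, allowed_sources):
    """Plan over the allowed sources, read each one, and record gaps.

    readers maps a source name to a callable(goal) -> list of note strings.
    Sources outside allowed_sources are never read.
    """
    plan = [s for s in READ_ORDER if s in allowed_sources]
    brief = Brief(goal=goal)
    for source in plan:
        notes = readers[source](goal) if source in readers else []
        if notes:
            brief.findings.extend(Finding(source, n) for n in notes)
        else:
            # Nothing found: flag the source so a later revision pass can revisit it.
            brief.gaps.append(source)
    return brief
```

The point of the sketch is the shape, not the details: scope is fixed before any reading happens, and an empty source becomes a visible gap rather than a silent omission.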

This is also why the interface matters. Google’s update lets you choose which sources to include at the start of a Deep Research run. That choice is not cosmetic. It becomes the scope of the agent’s reading and the basis for auditing and trust later. The here-and-now benefit is speed: you do not waste prompts telling the model where to look. You declare intention and scope, and it does the rest.

Governance is the make or break

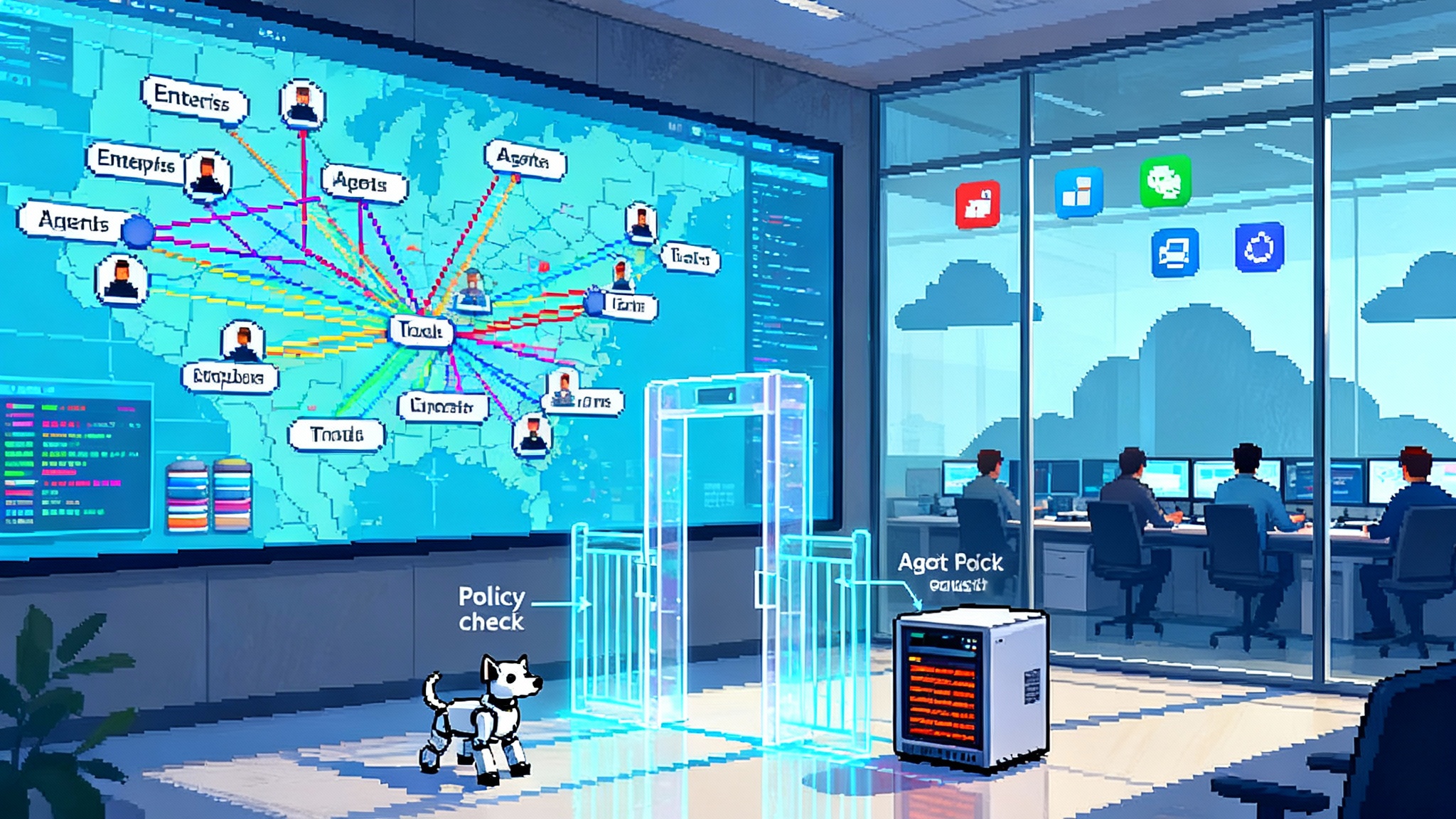

Inbox-native agents raise a healthy question: who saw what, when, and why? Practical governance patterns are emerging, and teams should adopt them early. For a broader blueprint, see our take on the Agent Security Stack.

- Per-source toggles at the moment of use. Users should explicitly opt in each source for each run. That prevents accidental overreach and creates a natural log of intent. Google’s flow already asks you to pick sources before the research starts, which is the right default.

- Least privilege enforced by the platform. The agent should inherit the user’s access rights and nothing more. If you cannot open a file or thread, the agent should not be able to either.

- Transparent footprints. Every agent run should leave a readable trail: what sources were accessed, what time windows were read, and which citations made it into the output.

- Admin-level observability. Security teams should be able to monitor aggregate access patterns by agent, team, and data type, similar to how they monitor login anomalies and file sharing today.

These are not theoretical guardrails. They are the difference between an assistant people love and an assistant people quietly avoid. If your organization does not already have these patterns, write them down now. If you have them, test them against a few messy real workflows and see where they bend.
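One way to write the patterns down is as a per-run record. The sketch below is an assumption about what such a record could look like, not a Workspace API: `RunFootprint`, `read_with_least_privilege`, and `to_audit_json` are hypothetical names, and `user_readable_ids` stands in for whatever permission check your platform provides.

```python
import json
from dataclasses import dataclass, field, asdict
from datetime import date

@dataclass
class RunFootprint:
    user: str
    opted_in_sources: list[str]   # sources the user toggled on for this run
    window_start: date            # time window the agent was allowed to read
    window_end: date
    accessed: list[str] = field(default_factory=list)
    denied: list[str] = field(default_factory=list)
    citations: list[str] = field(default_factory=list)

def read_with_least_privilege(footprint, item_id, source, user_readable_ids):
    """Allow a read only if the source was opted in AND the user can open the item.

    Every attempt is logged, so the trail shows denials as well as accesses.
    """
    allowed = source in footprint.opted_in_sources and item_id in user_readable_ids
    (footprint.accessed if allowed else footprint.denied).append(item_id)
    return allowed

def to_audit_json(footprint):
    """Serialize the footprint to a stable, human-readable JSON string."""
    return json.dumps(asdict(footprint), default=str, sort_keys=True)
```

Logging denied reads alongside successful ones is a deliberate choice here: it gives security teams the aggregate signal described above without exposing the content itself.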

The competitive picture: Google, Microsoft, OpenAI

Google’s move puts direct pressure on two competitors that have been defining the upper bound of agentic research this year. OpenAI’s deep research is an impressive web-scale researcher, and the company describes a multi-step agent that plans, browses, and produces a source-linked report that can take five to thirty minutes to complete. See OpenAI’s post, Introducing deep research.

Microsoft 365 Copilot has been building a different kind of advantage by sitting natively inside Outlook, OneDrive, and Teams. In March, Microsoft introduced Researcher and Analyst agents that promise multi-step synthesis across the Microsoft Graph and the web. That is the same core bet as Google’s update: the assistant must live where the work lives. The contest is no longer about the cleverness of a single model. It is about the quality of the operating environment around it.

Google’s edge is the sheer volume of day-to-day work that already flows through Gmail and Drive, plus the company’s long experience building narrow but reliable automation inside these surfaces. Microsoft’s edge is the depth of the Microsoft Graph and the way it ties identity, documents, and communications into one fabric. OpenAI’s edge is a head start on agentic planning at web scale and a pace of model iteration that keeps shifting the ceiling for reasoning. Expect the lines to blur fast as all three race to do both: web and workplace.

The 2026 scoreboard: latency, trust, recall

By the end of 2026, this market will be decided by three pragmatic metrics. Teams will not buy a brand. They will buy outcomes.

- Latency: How long does it take to get from button press to usable brief? Deep research runs can be minutes long today, which is fine for quarterly strategy but painful for daily standups. The winning runtime will feel like an elevator ride, not a flight. Vendors will chip away at this with better planning heuristics, partial caching of common sources, and smarter refreshes that read only what changed. Your internal benchmark should separate cold starts from incremental updates. Measure both.

- Trust: Can you rely on the citations and the chain of reasoning? Trust improves when the agent shows its sources, quotes precisely, and flags uncertainty in plain language. Enterprises should require a consistent evidence format, a way to open the original email or file from any claim, and an option that forces the agent to show three corroborating sources for critical facts.

- Recall: Does the agent find the right things inside your own corpus? Recall inside private data will matter more than public search prowess. Test recall with seed sets that combine structured files, long email threads, and chat fragments. Score the agent on whether it pulled the key items and whether it incorrectly included files outside the intended scope.

These metrics are not in conflict. Reducing latency without losing trust is a design challenge. Improving recall without creeping into unauthorized sources is a governance challenge. Both are solvable with the right investment.
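The recall test is simple enough to script. The following is a rough sketch, assuming you can list the item IDs an agent cited and that you maintain a seed set by hand; `score_recall` and its field names are hypothetical.

```python
def score_recall(retrieved, expected, in_scope):
    """Score one agent run against a hand-built seed set.

    retrieved: item IDs the agent cited in its brief
    expected:  key item IDs the brief should have found (the seed set)
    in_scope:  every item ID the run was permitted to read
    """
    retrieved, expected, in_scope = set(retrieved), set(expected), set(in_scope)
    hits = retrieved & expected
    recall = len(hits) / len(expected) if expected else 1.0
    return {
        "recall": recall,
        "missed": sorted(expected - retrieved),       # key items the agent failed to pull
        "out_of_scope": sorted(retrieved - in_scope),  # governance failures, not quality wins
    }
```

Reporting out-of-scope items separately keeps the two challenges apart: a run can score well on recall and still fail governance if that list is not empty.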

What to do next if you run a team

- Pilot on real work. Pick a project that spans email, files, and chat, then run two sprints where the project leads rely on living briefs. Track time saved and decision speed. Do not pilot on toy tasks.

- Decide on default source scopes. For most teams, starting with Drive plus project chat, and limiting email to a shared alias inbox, will reduce noise. Add personal inboxes only when you have a repeatable template that benefits from them.

- Write your audit checklist. For every agent-produced brief, ask: which sources were allowed, what time window was scanned, which claims were cited, and what actions were taken. Store these checklists with the project docs.

- Train for prompts that set guardrails. Examples: “Use only documents updated in the last 45 days.” “Quote any numbers with file path and sheet name.” “Exclude email threads that do not include a customer domain.” Small guardrails pay big dividends.

- Coordinate with security now. Map agent access to existing data classifications. If your company labels documents “confidential finance” or “restricted legal,” the agent needs a defined, predictable behavior for each label. Test it.
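Prompt guardrails like the ones above are easier to trust when you can check a brief's sources against the same rules in code. This is a sketch of such a check, not a description of how Deep Research interprets prompts; `Item` and `apply_guardrails` are hypothetical, and the 45-day window and customer-domain rule are taken from the example prompts.

```python
from dataclasses import dataclass
from datetime import date, timedelta

@dataclass
class Item:
    item_id: str
    kind: str                          # "doc" or "email"
    updated: date
    participants: tuple[str, ...] = ()  # email addresses on a thread

def apply_guardrails(items, today, max_age_days=45, customer_domain=None):
    """Keep only items that satisfy the freshness and customer-domain rules."""
    cutoff = today - timedelta(days=max_age_days)
    kept = []
    for item in items:
        if item.updated < cutoff:
            continue  # older than the allowed window
        if item.kind == "email" and customer_domain:
            suffix = "@" + customer_domain.lower()
            if not any(p.lower().endswith(suffix) for p in item.participants):
                continue  # internal-only thread, no customer on it
        kept.append(item)
    return kept
```

Running the cited sources of a finished brief through a filter like this gives you a quick answer to whether the agent stayed inside the guardrails you asked for.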

What could go wrong and how to prevent it

- Anchoring on stale threads. Agents may overweight early emails that no longer represent reality. Fix this with time-bounded prompts and by making change logs first-class inputs.

- Permission creep. If you grow the default source scope too quickly, people will add sensitive inboxes out of convenience. Keep per-source opt-in. Review defaults quarterly.

- Hallucinatory merges. When a doc and an email disagree, the agent might synthesize a middle that is wrong. Require explicit conflict flags and force the agent to cite each side.

- Over-automation. Calendar actions that schedule meetings without human confirmation are tempting. Start with drafts and confirmations. Promote to auto-schedule only after a month of clean performance.
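The draft-first rule for calendar actions can be expressed as a small policy object. This is a sketch under assumptions: `ActionPolicy` is a hypothetical name, "a month" is approximated as 30 days, and what counts as an incident is something your team would need to define.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class ActionPolicy:
    pilot_start: date
    incidents: int = 0             # actions a human had to reject or undo
    clean_days_required: int = 30  # assumed stand-in for "a month of clean performance"

    def mode(self, today):
        """Return "auto" only after a clean, long-enough pilot; otherwise "draft"."""
        clean = self.incidents == 0
        mature = (today - self.pilot_start).days >= self.clean_days_required
        return "auto" if clean and mature else "draft"
```

In "draft" mode the agent would prepare the meeting and wait for a human to confirm it. Any incident keeps the policy in draft until someone reviews the count and resets it, which is the conservative default the pilot needs.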

Why this feels like a platform shift

Operating systems won by colocating compute with the user’s files and processes. The same is happening for agents. When the assistant lives in your inbox and drive, you remove the friction that kept agents as demos instead of daily tools. The winners will not only read and write; they will plan, act, and explain. That is what turns Workspace into an Agent OS, and it is why this week’s update matters more than another model benchmark.

The next year will be noisy. New names, new buttons, new claims. Keep your focus on whether the agent works inside your actual workflow, whether it respects boundaries you can explain to an auditor, and whether it is fast enough to use without thinking. If you can answer yes to those three questions, you will feel the ground shift. The agent will stop being a side panel and start being the place where work begins.