Reasoning LLMs Land in Production: Think to Action

Reasoning-first models now ship with visible thinking, self-checks, and native computer control. See what is real, what is hype, and how to launch a reliable agent in 90 days.

The week reasoning stopped being a demo

Over the past few months, three releases quietly changed what large language models can do in real products. On October 7, 2025, Google announced Gemini 2.5 Computer Use, a specialized model that can click, type, and navigate real software through the Gemini application programming interface, bringing a new level of browser-native action to developers. Google also introduced thought summaries and configurable thinking budgets to manage how long the model reasons before acting. Those details matter because they turn vague agent promises into controllable systems that can be measured and tuned. Read Google’s own overview in Introducing the Gemini 2.5 Computer Use model.

Earlier, on February 19, 2025, xAI previewed Grok 3 Think and Grok 3 mini Think. xAI claimed ten times more training compute than previous generations and published numbers from live contests like the 2025 American Invitational Mathematics Examination. The key change was not just scores. Grok 3 shipped visible thinking modes, longer reasoning time at inference, and explicit self-checking steps. The company framed this as teaching the model to reason for seconds to minutes before committing to an answer.

In August 2025, DeepSeek released V3.1 and described it as a hybrid reasoner with faster inference and stronger agent skills. The phrase hybrid reasoner signals a growing pattern across top models: combine different inference paths and verification routines within a single system to get stable output without spiking latency or cost. For more on why browser-native action matters, see the AI browser era shift.

Taken together, these launches show a clear convergence: test-time compute, self-verification, and browser-native action are landing together. That is the missing triangle agents needed to move from chatty assistants to dependable operators.

What is actually new, in plain terms

Let us separate the real breakthroughs from the hype.

- Test-time compute you can control: Models now let you dial how much they think about a problem. Google’s thinking budgets and xAI’s Think and Big Brain modes expose a knob that product teams can set per task. Google’s budgets are denominated in thinking tokens rather than wall-clock time, but the practical effect is the same: you can give a claim routing task a budget that resolves in a fraction of a second and a reconciliation task one that runs for several seconds.

- Self-verification loops inside the model: Instead of producing one best guess, modern models generate several candidate solutions, critique them, and either vote or backtrack. The model searches its own solution space before responding. This is why math and coding accuracy improved first; both allow clear checks.

- Browser-native action: Instead of relying only on application programming interfaces, models can now control interfaces directly. Computer Use turns an agent into a careful user that clicks buttons, fills forms, scrolls lists, and handles captchas or unexpected pop-ups within policy limits. That unlocks long-tail workflows where no official integration exists.

- Transparency on the model’s thought: Visible thinking is not a novelty feature. It lets you log and debug how the model arrived at an action, which is essential for auditing and compliance. Thought summaries give you the gist without exposing verbose chain of thought.
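
The per-task knob described in the first bullet can be pictured as a small lookup table. The sketch below is illustrative only: the task names, the token figures, and the `ThinkingPolicy` and `budget_for` names are assumptions, not part of any vendor's interface. It also assumes budgets are counted in tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThinkingPolicy:
    budget_tokens: int     # hypothetical cap on reasoning tokens for one call
    include_summary: bool  # whether to request a thought summary for the logs


# Hypothetical task types and budgets; tune these from your own measurements.
POLICIES = {
    "claim_routing": ThinkingPolicy(budget_tokens=128, include_summary=False),
    "reconciliation": ThinkingPolicy(budget_tokens=4096, include_summary=True),
}
DEFAULT_POLICY = ThinkingPolicy(budget_tokens=1024, include_summary=True)


def budget_for(task_type: str) -> ThinkingPolicy:
    """Return the thinking policy for a task type, or a middle-of-the-road default."""
    return POLICIES.get(task_type, DEFAULT_POLICY)
```

Keeping the table in one place means a budget change is a reviewable configuration change rather than a prompt edit scattered across the codebase.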

What is not new, despite the headlines:

- Agents are not self-driving employees. They still require scoping, guardrails, and evaluation. They do not replace experts. They multiply expert reach by automating the brittle middle of repetitive steps.

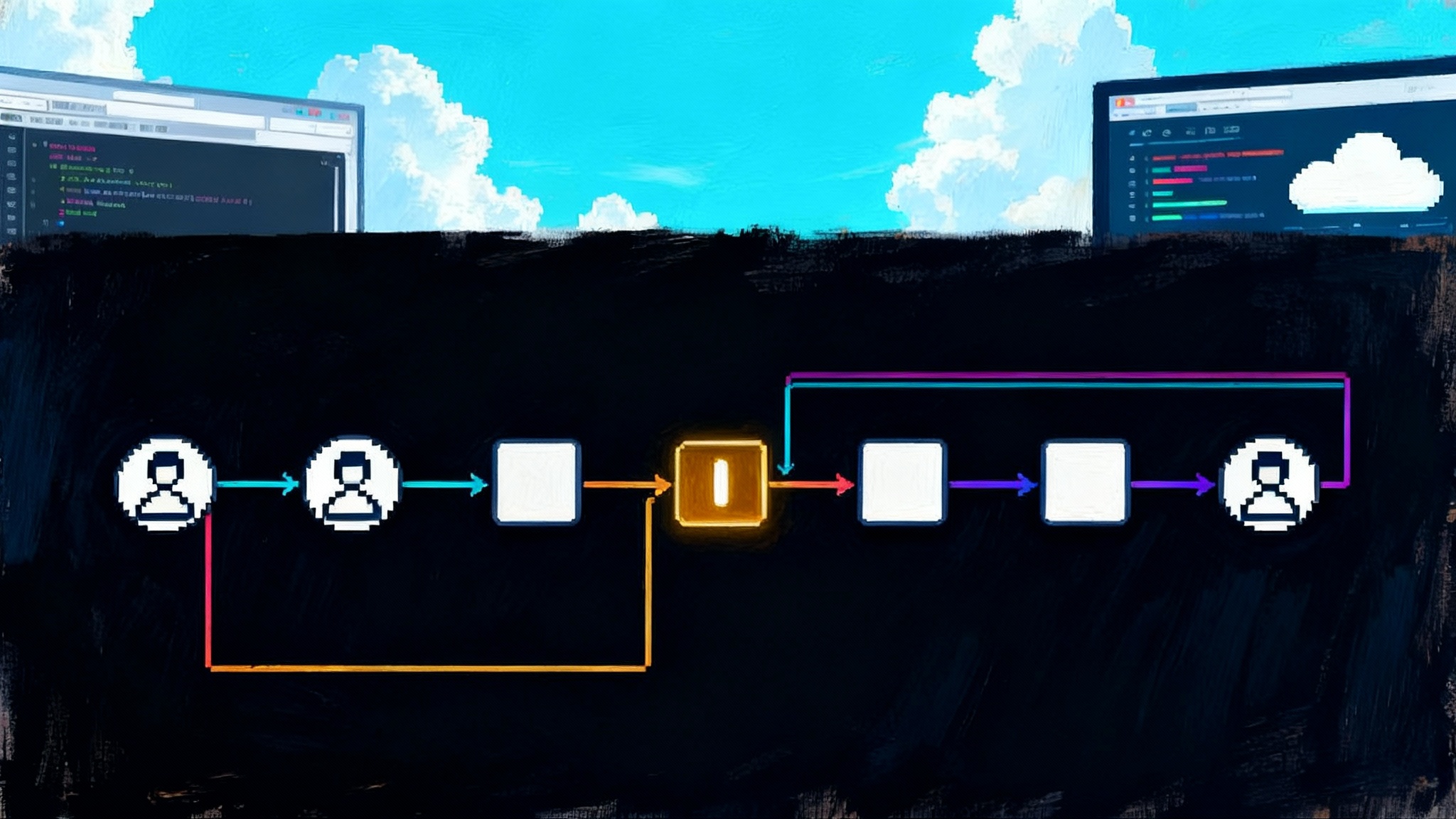

- A single model is not a system. You need a loop around the model that includes retrieval, tools, memory, and review. The win comes from the orchestrated whole. If you are designing this loop, study the open agent stack.

The architectural shift underneath

The new behavior does not come from a single trick. It is the product of several layered changes.

- Reinforcement learning on reasoning traces: xAI describes Grok 3 Think as trained with large-scale reinforcement learning to refine problem solving strategies. The procedure rewards useful intermediate steps and penalizes dead ends. The result is a model that plans, revises, and checks.

- Cascaded inference paths: Hybrid reasoners route simple prompts through cheaper fast paths and escalate hard prompts to slower, deeper thinking paths. Think of a call center with a first line team and subject matter experts. Routing reduces average latency and cost while preserving reliability on hard tasks.

- Self-consistency with a verifier: Instead of trusting one chain of thought, the model samples several and uses a verifier to choose the best. For tasks with clear checks, like code or math, the verifier can execute tests. For softer tasks, the verifier uses constraints like policy rules, templates, and style guides.

- Action models trained for interfaces: Computer Use suggests that Google fine-tuned models to map from goals to sequences of clicks, keystrokes, and scrolls, with visual grounding. In practice this looks like a policy that reads the screen, plans the shortest path, and adapts if an element is missing or a dialog appears.

- Thought budgets and summaries as first-class tokens: When a model treats thinking as a resource, you can meter it, log it, and spend it where it matters. That is a governance shift as much as a technical one.
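
The self-consistency bullet above can be reproduced outside the model in a few lines. This is a minimal sketch of the general pattern, not a description of how any of these vendors implement it: `select_answer` is a hypothetical helper, the candidates would normally come from several sampled model calls, and the verifier here is a toy equation check.

```python
from collections import Counter
from typing import Callable, Iterable, Optional


def select_answer(candidates: Iterable[str],
                  verify: Callable[[str], bool]) -> Optional[str]:
    """Keep only candidates that pass the verifier, then majority-vote among them."""
    passing = [c for c in candidates if verify(c)]
    if not passing:
        return None  # nothing verified: the caller should retry or escalate
    return Counter(passing).most_common(1)[0][0]


def is_root(candidate: str) -> bool:
    """Toy verifier: does the candidate solve x^2 - 7x + 10 = 0?"""
    x = int(candidate)
    return x * x - 7 * x + 10 == 0


# Four sampled answers, one of them wrong; "2" wins the vote among verified ones.
best = select_answer(["2", "5", "3", "2"], is_root)
```

For softer tasks the same shape applies, with the verifier swapped for policy rules or template checks.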

Why this makes agents more reliable

Reliability is not magic. It comes from closing loops.

- The model checks its own work before you ever see the result.

- The system can slow down automatically on harder problems.

- The agent can act in the real interface when an application programming interface is missing, which avoids brittle glue code that often breaks.

- You can audit the reasoning, which means you can fix the failure mode and add a guardrail.

Reliability is the elevation of the minimum quality, not just the peak performance on a leaderboard. The triangle of test-time compute, self-verification, and action moves the floor up.

Concrete near-term use cases you can ship now

Here are four categories where these capabilities change what is feasible in the next quarter.

- Operations automation

  - Invoice triage without a vendor application programming interface: The agent opens the web portal, downloads statements, extracts totals, checks they match line items, and posts to your accounting system. When an invoice is out of tolerance, the agent writes a summary and flags it for human review.

  - Inventory reconciliation: The agent logs into three supplier dashboards, screen-scrapes current stock, and updates your central system. If a supplier limits access, the agent retries later with a lower thought budget and a different route.

- Quality assurance and user interface testing

  - Spec-to-click tests: Feed a user story and a staging environment link. The agent generates test paths and expected outcomes, runs them through the browser, and saves screenshots at each step. On failure, it retries with a different locator strategy and smaller steps. This reduces flakiness by combining self-verification and real interaction.

  - Accessibility checks: The agent navigates the interface, flags missing alt text, inadequate contrast, and keyboard traps, then opens pull requests with suggested fixes.

- Research and due diligence

  - Structured literature reviews: The agent plans a search, visits target sites, summarizes findings, and fills a citation table. It uses self-consistency to ensure quotes are accurate and flags contradictions for human decision.

  - Competitive analysis sweeps: It logs into a sandbox browser, visits app stores and pricing pages, screenshots relevant sections, and outputs a structured brief with evidence.

- Customer support enablement

  - Case preparation: The agent opens the ticket system, reads previous interactions, reviews the customer’s plan, checks entitlements, and proposes the next best action. It uses test-time compute to be slow and careful on escalations, while staying fast on simple cases.

New vs hype, with receipts

There is substance behind the announcements.

- Google’s Computer Use model is available in preview for developers through the Gemini API and Vertex AI. The company calls out partners like UiPath and Automation Anywhere experimenting with it, and it emphasizes defenses against indirect prompt injection. Those are operational details, not slogans. See Google’s description in Introducing the Gemini 2.5 Computer Use model.

- xAI’s Grok 3 Think public post details reinforcement learning on reasoning and reports live contest performance. It also introduces visible thinking modes, which are essential for debugging and governance. Read the official announcement in Grok 3 Beta: The Age of Reasoning Agents.

Here is the hype to ignore.

- Reasoning solves everything. It does not. Reasoning mostly improves tasks with clear checks, structured plans, and available context. It helps less on open creative tasks.

- Agents end integration work. They reduce the need for formal application programming interfaces, but they increase the need for sandboxing, audit logs, and stable test environments. For a deeper look at enterprise defenses, see guardian agents and AI firewalls.

A pragmatic 90-day build plan

You can ship a useful agent in three months if you scope tightly and measure rigorously. Here is a plan that works for small product teams and enterprise task forces alike.

Weeks 1 to 2: choose a workflow and define success

- Pick one high-value workflow with clear acceptance criteria. Examples: reconcile monthly payouts, run smoke tests on a staging site, compile a weekly research brief.

- Write the System Intent: a one-page spec with goal, inputs, outputs, guardrails, and when to hand off to a human.

- Define metrics: task success rate, median and 95th percentile latency per task, cost per completed task, and human edits per task.

- Create a safe sandbox: a dedicated browser profile, nonproduction credentials, and read-only data where possible.
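
The four metrics named above are cheap to compute from a flat log of task runs. The following is a rough sketch under assumed names: `TaskRun`, its fields, and `summarize` are hypothetical, and the 95th percentile uses the simple nearest-rank method.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import Dict, List


@dataclass
class TaskRun:
    succeeded: bool
    latency_s: float
    cost_usd: float
    human_edits: int


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile for pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(runs: List[TaskRun]) -> Dict[str, float]:
    if not runs:
        raise ValueError("need at least one run")
    completed = sum(r.succeeded for r in runs)
    latencies = [r.latency_s for r in runs]
    total_cost = sum(r.cost_usd for r in runs)
    return {
        "success_rate": completed / len(runs),
        "latency_p50_s": median(latencies),
        "latency_p95_s": percentile(latencies, 95),
        # Failed runs still cost money, so divide total spend by completed tasks.
        "cost_per_completed_usd": total_cost / completed if completed else float("inf"),
        "human_edits_per_task": sum(r.human_edits for r in runs) / len(runs),
    }


runs = [
    TaskRun(True, 2.0, 0.10, 0),
    TaskRun(True, 4.0, 0.20, 1),
    TaskRun(False, 10.0, 0.30, 3),
    TaskRun(True, 3.0, 0.20, 0),
]
summary = summarize(runs)
```

Charging failed runs against completed tasks is a deliberate choice here: it keeps the cost figure honest when the success rate drops.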

Weeks 3 to 4: stand up a minimal agent

- Choose your reasoning model and action capability. If you need native interface control and enterprise governance, start with Gemini 2.5 Computer Use. If you need aggressive reasoning for math or code, evaluate a Think-style model in parallel.

- Build the action loop: observe screen, plan, act, verify. Use three simple tools first: navigate(url), click(selector or visual target), type(text). Add scroll and wait-for-state only when necessary.

- Implement self-checks: for each step write a verify function. Example: after login, verify that the profile avatar is visible and the URL matches the expected domain.

- Log everything: prompt, thought summary, actions taken, screenshots, verifier results, and final outcome.
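
To make the act, verify, and log parts of the loop concrete, here is a small sketch that runs against a fake browser. Everything in it is an assumption made for illustration: `FakeBrowser`, `Step`, `run_plan`, the selectors, and the `app.example.test` domain are invented, and a real agent would have the model propose the plan and drive an actual browser.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set
from urllib.parse import urlparse


@dataclass
class FakeBrowser:
    """Stand-in for a real browser driver so the sketch runs anywhere."""
    url: str = "about:blank"
    visible: Set[str] = field(default_factory=set)
    fields: Dict[str, str] = field(default_factory=dict)

    def navigate(self, url: str) -> None:
        self.url = url
        self.visible = {"#user", "#password", "#submit"}

    def type(self, selector: str, text: str) -> None:
        self.fields[selector] = text

    def click(self, selector: str) -> None:
        # Simulate a successful login once both fields are filled.
        if selector == "#submit" and all(s in self.fields for s in ("#user", "#password")):
            self.url = "https://app.example.test/home"
            self.visible = {"#avatar"}


@dataclass
class Step:
    name: str
    act: Callable[[FakeBrowser], None]
    verify: Callable[[FakeBrowser], bool]


def login_verified(b: FakeBrowser) -> bool:
    """Avatar is visible and the URL is on the expected domain."""
    return "#avatar" in b.visible and urlparse(b.url).hostname == "app.example.test"


PLAN = [
    Step("open login", lambda b: b.navigate("https://app.example.test/login"),
         lambda b: "#submit" in b.visible),
    Step("enter user", lambda b: b.type("#user", "qa-bot"),
         lambda b: b.fields.get("#user") == "qa-bot"),
    Step("enter password", lambda b: b.type("#password", "not-a-real-secret"),
         lambda b: "#password" in b.fields),
    Step("submit", lambda b: b.click("#submit"), login_verified),
]


def run_plan(steps: List[Step], browser: FakeBrowser, log: List[dict]) -> bool:
    """Act, verify, and log each step; stop at the first failed check."""
    for step in steps:
        step.act(browser)
        ok = step.verify(browser)
        log.append({"step": step.name, "url": browser.url, "verified": ok})
        if not ok:
            return False
    return True
```

Pairing every action with its own check is what keeps a failure local: the log shows which step broke instead of a wrong result several steps later.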

Weeks 5 to 6: add retrieval and structure

- Add a retrieval layer for policy, product facts, and templates. Keep a short context with just what the step needs, rather than dumping whole manuals.

- Introduce plans with milestones. The model proposes a plan, and the verifier checks each milestone to reduce compounding errors.

- Build a red-team suite of tricky cases: empty states, slow pages, consent dialogs, two-factor prompts, and expired sessions.

Weeks 7 to 8: tighten guardrails and cost

- Set dynamic thinking budgets. Start small, then increase for steps that fail more often.

- Rate limit actions and add a kill switch. The agent should fail safe on unknown dialogs by pausing and asking for help with a screenshot.

- Add a second model as a verifier for critical steps, or use a rule-based checker for deterministic checks.
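
The "start small, then increase for steps that fail more often" rule can be sketched as a small tuner. This is one possible policy among many: the `BudgetTuner` class, the doubling rule, the window size, and the default numbers are all assumptions chosen for illustration.

```python
from collections import defaultdict


class BudgetTuner:
    """Double a step's thinking budget when its recent failure rate is too high."""

    def __init__(self, start=256, ceiling=8192, fail_threshold=0.2, window=5):
        self.ceiling = ceiling
        self.fail_threshold = fail_threshold
        self.window = window
        self.budgets = defaultdict(lambda: start)
        self.outcomes = defaultdict(list)

    def budget(self, step: str) -> int:
        return self.budgets[step]

    def record(self, step: str, succeeded: bool) -> None:
        history = self.outcomes[step]
        history.append(succeeded)
        if len(history) < self.window:
            return
        failure_rate = history.count(False) / len(history)
        if failure_rate > self.fail_threshold:
            self.budgets[step] = min(self.budgets[step] * 2, self.ceiling)
        history.clear()  # start a fresh window after each review


tuner = BudgetTuner()
for ok in [True, False, False, True, True]:
    tuner.record("login", ok)      # 40% failures: budget doubles
for ok in [True, True, True, True, True]:
    tuner.record("search", ok)     # no failures: budget stays put
```

The ceiling matters as much as the growth rule, since it bounds the cost of a step that keeps failing for reasons more thinking cannot fix.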

Weeks 9 to 10: integrate with your systems

- Move from sandbox to a controlled staging environment with real integrations. Keep a shadow mode where the agent runs and records actions but does not commit changes until a human approves.

- Add incident telemetry into your observability tools. Track failure clusters and teach the agent new recoveries.
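
Shadow mode, as described above, amounts to recording each intended change and deferring the commit until someone approves it. A minimal sketch, assuming hypothetical `ShadowRunner` and `PendingAction` names and an in-memory queue in place of a real approval workflow:

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PendingAction:
    description: str
    commit: Callable[[], None]


@dataclass
class ShadowRunner:
    shadow: bool = True
    pending: List[PendingAction] = field(default_factory=list)

    def perform(self, description: str, commit: Callable[[], None]) -> None:
        """In shadow mode, record the action; otherwise apply it immediately."""
        if self.shadow:
            self.pending.append(PendingAction(description, commit))
        else:
            commit()

    def approve_all(self) -> int:
        """Called on human approval; applies recorded actions in order."""
        applied = 0
        while self.pending:
            self.pending.pop(0).commit()
            applied += 1
        return applied


ledger: List[int] = []
runner = ShadowRunner()
runner.perform("post invoice 42", lambda: ledger.append(42))
# Nothing has been written yet; the action is only queued for review.
```

The recorded descriptions double as a review queue, so the approver sees what the agent intended before anything changes.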

Weeks 11 to 12: graduate to production pilots

- Run with real users on a small slice of traffic or a narrow set of accounts.

- Monitor the four key metrics daily. Ship fixes weekly. Expand scope only when success rate stays above your threshold for two consecutive weeks.
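
The expansion rule in the last bullet is simple enough to encode as a gate, which removes the temptation to expand on a single good week. The function name and the example rates below are hypothetical.

```python
from typing import Sequence


def ready_to_expand(weekly_success_rates: Sequence[float],
                    threshold: float,
                    weeks_required: int = 2) -> bool:
    """True only if the most recent weeks all stayed above the threshold."""
    recent = list(weekly_success_rates)[-weeks_required:]
    return len(recent) == weeks_required and all(r > threshold for r in recent)


# Oldest week first; the last two weeks are both above 0.95.
expand = ready_to_expand([0.91, 0.96, 0.97], threshold=0.95)
```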

What to build first

- Operations: monthly payout reconciliation or invoice triage with clear success checks.

- Quality assurance: smoke tests that open the app, log in, click through the top flows, and confirm expected elements are present.

- Research: recurring industry briefs with a fixed template and a strict citation checklist.

Guardrails that separate toy from tool

- Progressive autonomy: start with propose mode, then partial autonomy on low-risk steps, then full autonomy for a small cohort.

- Least-privilege credentials and environment isolation: separate browser profiles and accounts per environment.

- Prompt injection defenses: strip unseen instructions from retrieved pages, warn on risky actions, and require human approval for operations that touch money or user privacy.

- Deterministic verifiers where possible: unit tests for code generation, regex checks for invoice totals, and schema validation for structured outputs.
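
Two of the deterministic checks mentioned above, a regex check on invoice totals and schema validation of structured output, fit in a few lines of standard-library Python. The invoice layout (`Total: $1,250.50`), the field names, and the one-cent tolerance are assumptions for the sake of the example.

```python
import re
from decimal import Decimal
from typing import Dict, List, Optional

# Assumes the total appears on its own line as "Total: $1,250.50".
TOTAL_RE = re.compile(r"^Total:\s*\$?(\d[\d,]*\.\d{2})$", re.MULTILINE)


def extract_total(text: str) -> Optional[Decimal]:
    match = TOTAL_RE.search(text)
    return Decimal(match.group(1).replace(",", "")) if match else None


def totals_match(text: str, line_items: List[Decimal],
                 tolerance: Decimal = Decimal("0.01")) -> bool:
    """Check that the stated total equals the sum of line items within tolerance."""
    total = extract_total(text)
    if total is None:
        return False
    return abs(total - sum(line_items, Decimal("0"))) <= tolerance


# Hypothetical output schema: field name -> required Python type.
REQUIRED_FIELDS: Dict[str, type] = {"invoice_id": str, "total": str, "needs_review": bool}


def validate_output(record: dict) -> List[str]:
    """Return a list of problems; an empty list means the record fits the schema."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in record:
            problems.append(f"missing field: {name}")
        elif not isinstance(record[name], expected):
            problems.append(f"wrong type for {name}")
    return problems


invoice_text = "Invoice 1001\nTotal: $1,250.50\n"
ok = totals_match(invoice_text, [Decimal("1000.00"), Decimal("250.50")])
```

Using `Decimal` rather than floats avoids rounding surprises when the check touches money.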

How to choose between the new options

- If you need end-to-end action in arbitrary software with a strong governance story, Google’s Computer Use is a rational default since it exposes thinking budgets and integrates with enterprise platforms.

- If your workload is math and code heavy, a Think-style reasoning mode is often worth the extra seconds at inference. Use it selectively for the hardest steps.

- If you operate at massive scale or need very low latency on easy tasks, look for hybrid reasoners and cascaded inference. Route based on difficulty so you do not pay for deep thinking on every call.
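
Routing by difficulty, as suggested in the last bullet, can start as a crude heuristic with an escalation path. The sketch below is an assumption-heavy illustration: the keyword list, the scoring, and the `answer` function are invented, and the two models are passed in as plain callables so no vendor interface is implied.

```python
from typing import Callable, Tuple

HARD_HINTS = ("prove", "reconcile", "debug", "derive")  # hypothetical signal words


def estimate_difficulty(prompt: str) -> float:
    """Crude score in [0, 1]: long prompts and certain verbs count as harder."""
    words = prompt.lower().split()
    length_score = min(len(words) / 200, 0.5)
    hint_score = 0.5 if any(hint in words for hint in HARD_HINTS) else 0.0
    return length_score + hint_score


def answer(prompt: str,
           fast_model: Callable[[str], str],
           deep_model: Callable[[str], str],
           verify: Callable[[str], bool],
           threshold: float = 0.5) -> Tuple[str, str]:
    """Try the cheap path on easy prompts; escalate if its draft fails verification."""
    if estimate_difficulty(prompt) < threshold:
        draft = fast_model(prompt)
        if verify(draft):
            return "fast", draft
    return "deep", deep_model(prompt)


# Stub models for illustration only.
fast = lambda p: "fast:" + p
deep = lambda p: "deep:" + p
route, _ = answer("What is the status of order 1182?", fast, deep, lambda d: True)
```

The escalation branch is the important part: a misjudged "easy" prompt costs one extra cheap call rather than a wrong answer.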

What this moment means

For five years, agents have been mostly talkers. They could draft, summarize, and suggest, but they struggled to act. With Computer Use, visible thinking, and hybrid verification, agents can now operate software, check their work, and spend time where it matters.

The important shift is managerial. We can finally manage reasoning as a resource. You can budget it, audit it, and direct it to the riskiest steps. That is how you get reliability that feels like a system rather than luck.

If you start now, pick one workflow, add self-checks, and give the model a controlled way to click, you can ship something that saves hours every week by the end of a quarter. When the model hesitates, let it think a little longer. When it is confident, let it move. The future of agents is not a leap. It is a careful march from thought to action, one verified step at a time.