OpenAI greenlights open-weight models and the hybrid LLM era

OpenAI quietly authorized open-weight releases for models that meet capability criteria, signaling a first-class hybrid future. Here is what changed, the guardrails, the cost math, and blueprints to run local plus frontier stacks without compromising safety or speed.

The quiet line that changes everything

On October 28, OpenAI published a partnership update with Microsoft that contains a single line with outsized consequences: OpenAI is now able to release open‑weight models if those models meet capability criteria. If you have been waiting for a green light to build hybrid stacks that blend local models with frontier APIs, this was it. You can read the wording in OpenAI’s partnership update.

For years, enterprises have been forced into one of two choices. Either use closed frontier systems for maximum quality and broad capability, or adopt open models that are easy to run, modify, and ship to constrained environments but sometimes lag on complex reasoning. The new permission signals a third path becoming first class: a hybrid future where teams keep sensitive workloads close to home on open weights while bursting to frontier APIs for what only those systems can do.

This article unpacks what actually changed, the guardrails embedded in the deal, and why OpenAI’s newly announced custom‑silicon collaboration could push inference costs down while unlocking specialized on‑premise and edge deployments.

What changed on October 28

In its agreement summary, OpenAI enumerated several notable updates: independent verification of any Artificial General Intelligence declaration, extended intellectual property rights for Microsoft through 2032, refined scopes for research rights, the ability to jointly develop certain products with third parties, and the end of Microsoft’s right of first refusal on all new compute. Tucked among those bullets is the permission for OpenAI to release open‑weight models that meet defined capability criteria. That is the operational hinge.

There are three immediate implications:

- OpenAI can publish model weights under a license of its choosing, but only for families that clear internal safety thresholds. That keeps cutting‑edge systems under tighter control while letting smaller or more specialized models circulate.

- Enterprises get a sanctioned lane to run and fine‑tune OpenAI models locally, including on sovereign clouds, private data centers, and edge devices. This reduces latency, data movement, and compliance friction.

- Hybrid orchestration becomes the default pattern, and orchestration itself becomes the new AI battleground across vendors. Engineering teams can route each task dynamically to the best‑value engine: local for routine, private, or latency‑sensitive operations; frontier APIs for complex reasoning, tool use, and broad generalization.

Open‑weight is not the same as open source

Definitions matter. Open‑weight means the actual parameter tensors are published for users to download and run. Licenses can still restrict commercial uses, redistribution, safety obligations, or benchmarking disclosures. Open source, by contrast, implies a license that meets the Open Source Initiative’s criteria. Expect OpenAI to choose licenses that preserve safety obligations and clarity on redistribution while enabling fine‑tuning and local deployment.

The practical takeaway: your engineers can inspect, quantize, prune, compile, and fine‑tune the model, then ship it into environments where always‑online APIs are either too slow, too costly, or not approved for data residency.

Why hybrid stacks become the new standard

Hybrid is less a philosophy than a load‑balancing strategy for economics, risk, and performance. Consider four everyday enterprise workloads and how they change under an open‑weight policy:

- Retrieval‑augmented generation for knowledge bases. Today, many teams use a frontier model to synthesize answers over internal documents. With open weights, you can run a domain‑tuned smaller model on your own vector store for most questions, while escalating ambiguous or multi‑hop queries to a frontier API. You preserve privacy and slash average latency, while keeping a quality backstop for hard questions.

- Structured extraction from forms and invoices. These are regular, predictable tasks where a small, quantized model excels. A locally fine‑tuned open weight can process batch jobs overnight without round‑trips to the cloud. Frontier models are reserved for exception handling and schema evolution.

- Code assistance inside the firewall. Many firms block external code from leaving developer laptops. A local assistant based on open weights gives you fast autocomplete and repo‑aware refactors without sending snippets offsite. When a developer explicitly asks for design‑level help or complex reasoning, the request can be upgraded to a frontier API under policy and logging.

- On‑device or edge experiences. From call‑center desktops to factory robots and clinical carts, open weights allow low‑latency inference on commodity accelerators. That enables voice, vision, and control loops even when connectivity is intermittent.

The hybrid pattern also improves resilience. If a provider throttles or changes pricing, your critical paths continue to run on local capacity. And when a new frontier capability appears, you can incorporate it gradually through routing rather than rip‑and‑replace upgrades.

The guardrails: what capability criteria likely mean

OpenAI did not publish the exact thresholds. The phrase capability criteria is doing careful work. It signals that only models below certain risk envelopes will ship as open weights. Expect a gating process that looks something like this:

- Safety evaluations on classes of harm. Examples include resistance to misuse for biological design assistance, scalable cyber offense, targeted deception, or real‑world physical control. Models that score above risk thresholds in red‑team tests remain closed. This aligns with building guardian agents and AI firewalls.

- Alignment and refusal behavior. Open weights intended for broad distribution will likely be trained or instruction‑tuned to refuse unsafe requests and to default to helpful, harmless behavior out of the box.

- Transparency and provenance. Expect cryptographic signatures on released weights and versioned change logs so enterprises can track provenance and know exactly what they are running.

- License obligations. Redistribution rules, responsible use clauses, and perhaps a requirement to preserve guardrails in fine‑tuned derivatives. That still leaves ample room for domain adaptation inside your environment.
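On the provenance point, one check you can run today is to compare a downloaded weight file against a published digest. The sketch below assumes the publisher ships a SHA‑256 digest alongside the weights; that is an assumption, since OpenAI has not described its release format. A checksum only proves the file matches the digest. It is not a substitute for verifying a cryptographic signature. The function names are illustrative.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a (possibly very large) weight file in fixed-size chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def weights_match_digest(path: Path, expected_hex: str) -> bool:
    """Compare the file's digest with the published one (hypothetical manifest value)."""
    actual = sha256_of_file(path)
    # compare_digest avoids leaking where the two strings first differ.
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

Running a check like this in the deployment pipeline, before a model is loaded, gives change control a concrete record of which artifact went live.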

We should assume iteration. Initial releases will likely be modest in parameter count and capability, focused on utility per watt rather than one‑shot state‑of‑the‑art scores. If adoption is strong and safety incidents remain low, the ceiling rises.

The cost story: why open weights change the curve

Cloud APIs are phenomenal for bursty, irregular workloads. They are a weaker fit when you have steady, high‑volume traffic that looks like a baseline utility. Open weights let you convert a stream of per‑token fees into amortized compute.

Here is a simple way to model the trade. You only need three numbers:

- Your average daily tokens for the task.

- The realized tokens per second of your chosen model on your chosen hardware at your accepted latency. Realized means after batching and quantization.

- Your fully loaded hourly cost of that hardware, including power and support.

Worked example with round numbers:

- Assume 60 million output tokens per day for a customer‑support summarization service.

- A domain‑tuned 8 billion parameter model at 4‑bit quantization runs at 200 tokens per second on a single modern accelerator at your target latency.

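- One accelerator therefore produces about 17.3 million tokens per day at steady utilization (200 tokens per second times 86,400 seconds). The load works out to roughly 3.5 accelerators, so you need four, which leaves a margin of about 15 percent.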

- If each accelerator costs 2.50 dollars per hour fully loaded, four cost 240 dollars per day. Your floor cost per million tokens is about 4 dollars, before headroom for peaks and redundancy.
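The worked example above can be reproduced with a few lines of arithmetic. This is a minimal sketch using the round numbers from the text; the function and field names are illustrative, and it assumes steady utilization around the clock with no separate allowance for peaks or redundancy.

```python
import math

SECONDS_PER_DAY = 86_400


def local_cost_model(daily_tokens: float,
                     tokens_per_second: float,
                     hourly_cost_per_accelerator: float) -> dict:
    """Estimate accelerator count and floor cost per million tokens."""
    tokens_per_accelerator_day = tokens_per_second * SECONDS_PER_DAY
    # Round up: you cannot provision a fraction of an accelerator.
    accelerators = math.ceil(daily_tokens / tokens_per_accelerator_day)
    daily_cost = accelerators * hourly_cost_per_accelerator * 24
    return {
        "tokens_per_accelerator_day": tokens_per_accelerator_day,
        "accelerators": accelerators,
        "daily_cost": daily_cost,
        "cost_per_million_tokens": daily_cost / (daily_tokens / 1_000_000),
    }


# The article's numbers: 60M tokens/day, 200 tokens/s, 2.50 dollars/hour.
estimate = local_cost_model(60_000_000, 200, 2.50)
print(estimate)
```

With these inputs the sketch yields four accelerators, 240 dollars per day, and 4 dollars per million tokens, matching the figures above. Swapping in your own measured throughput is the part that matters.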

Now compare that to the equivalent ability bought from a frontier model. For some workloads the quality uplift is worth any premium. For others you will gladly pay a small upgrade tax only on the 10 percent of requests that truly need it. The routing policy, not ideology, decides which engine handles each call.

The big point is not the exact numbers. It is that open weights give you a lever to move expensive, predictable traffic into owned capacity while keeping the option to escalate for reasoning or complex tool use.
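To see how the escalation share moves the bill, a blended cost can be sketched as a weighted average. This assumes each request is served by exactly one engine, which is a simplification. The frontier price used below is a hypothetical placeholder, not a quoted rate from any provider.

```python
def blended_cost_per_million(local_cost: float,
                             frontier_cost: float,
                             escalate_fraction: float) -> float:
    """Weighted average cost per million tokens across both engines."""
    if not 0.0 <= escalate_fraction <= 1.0:
        raise ValueError("escalate_fraction must be between 0 and 1")
    return (1.0 - escalate_fraction) * local_cost + escalate_fraction * frontier_cost


# 4 dollars per million locally (from the worked example),
# a HYPOTHETICAL 15 dollars per million on the frontier API,
# and 10 percent of traffic escalated.
blended = blended_cost_per_million(4.0, 15.0, 0.10)
print(round(blended, 2))
```

The useful exercise is to rerun this with your measured escalation rate and your actual contract price, then see how sensitive the total is to each.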

OpenAI’s custom silicon pact and why it matters

OpenAI also announced a strategic collaboration with Broadcom to design and deploy custom accelerators. If this sounds like a supply chain story, think again. Tight co‑design between models, runtime software, and silicon can lift tokens per watt and reduce memory bottlenecks. That shifts the entire cost curve. See the summary in OpenAI’s Broadcom collaboration.

There are three practical effects for enterprises, even if you never buy a custom chip:

- Elasticity. More diverse accelerator supply tends to reduce scarcity premiums. That can bring down the cost you pay for hosted inference as providers pass savings through or compete on price.

- Specialization. If OpenAI tunes hardware for the patterns its models use most, you get faster serving and training cycles on those platforms. That can mean lower latency at the same price tier, or similar latency at a lower tier.

- Portability pressure. As the ecosystem optimizes for a broader set of accelerators, runtimes and compilers improve. That makes it easier for you to run open weights on the hardware you already have, not just on a single vendor’s stack.

Architecture blueprints for the hybrid era

You do not need to rebuild everything to benefit. Two patterns will cover most use cases.

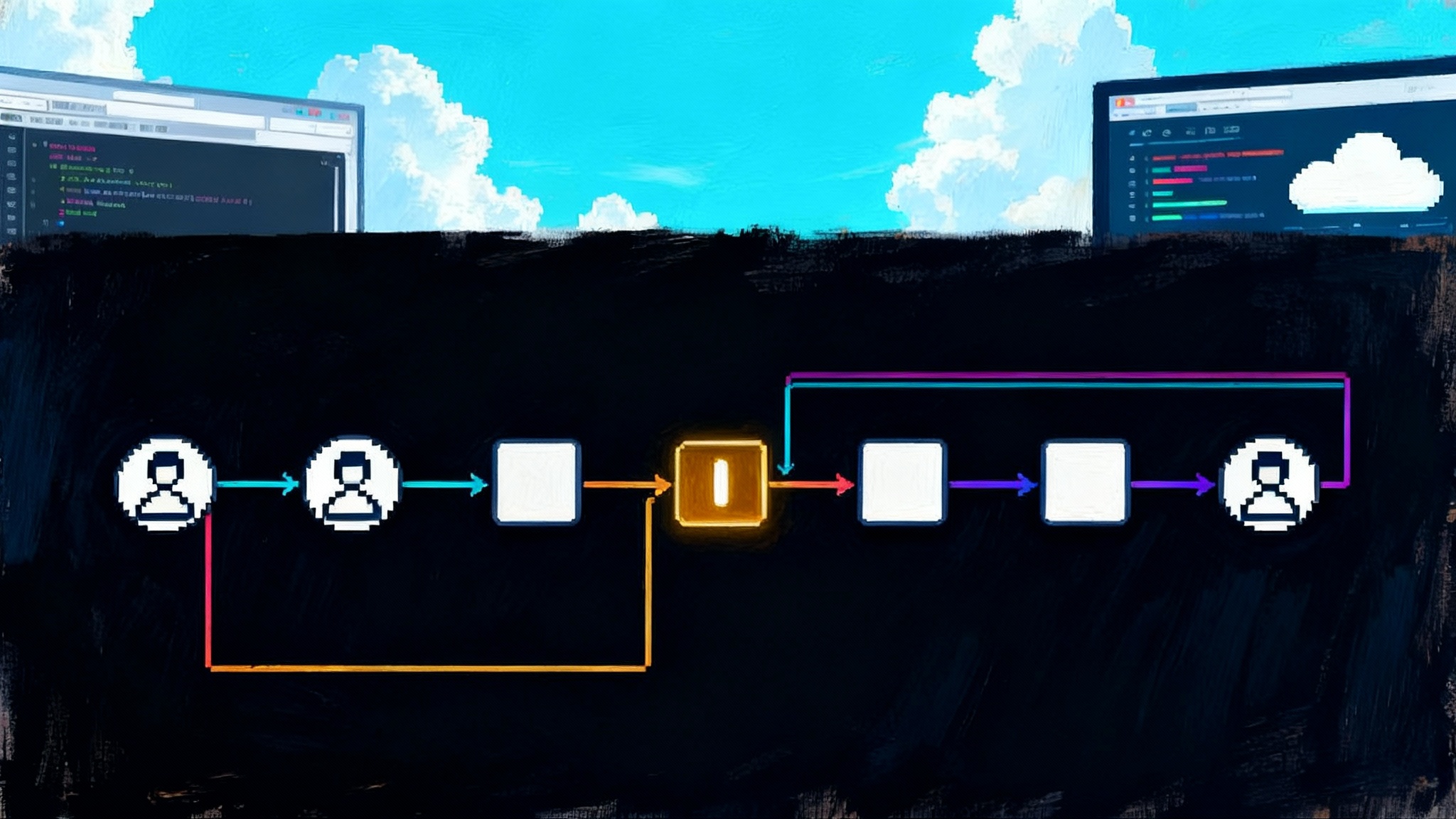

Pattern A: Local first, escalate on uncertainty

- Inference gateway with policy routing. Start with a simple rule: send requests to the local open‑weight model. If the model yields low confidence or detects high complexity, auto‑escalate to a frontier API and include the original context.

- Shared retrieval. Keep your embeddings and vector store shared between engines so both can ground on the same knowledge. You avoid divergent answers.

- Safety guardrails in both paths. Run prompt filters and output scanning in the gateway. Do not assume a closed model will always refuse unsafe prompts.

- Observability. Track token usage, latency, and satisfaction by route. If the local model’s accuracy drifts, you will see the escalation rate rise.
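Pattern A can be sketched as a small routing function. Everything here is illustrative: the model callables, the safety check, and the 0.7 confidence threshold are assumptions, and how you estimate confidence for a local model is left to your stack. The shape is what matters: filter the prompt, try local, escalate on low confidence, scan the output, and count routes.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable


@dataclass
class LocalReply:
    text: str
    confidence: float  # 0.0 to 1.0; the estimation method is up to you


route_counts: Counter = Counter()


def route_request(prompt: str,
                  context: str,
                  local_model: Callable[[str, str], LocalReply],
                  frontier_model: Callable[[str, str], str],
                  is_safe: Callable[[str], bool],
                  min_confidence: float = 0.7) -> tuple[str, str]:
    """Return (answer, route); route is 'blocked', 'local', or 'frontier'."""
    if not is_safe(prompt):  # prompt filter runs before either engine
        route_counts["blocked"] += 1
        return "Request blocked by policy.", "blocked"

    reply = local_model(prompt, context)
    if reply.confidence >= min_confidence:
        answer, route = reply.text, "local"
    else:
        # Escalate with the original prompt and the same retrieved context.
        answer, route = frontier_model(prompt, context), "frontier"

    if not is_safe(answer):  # output scanning applies to both paths
        route_counts["blocked"] += 1
        return "Response withheld by policy.", "blocked"

    route_counts[route] += 1
    return answer, route


def escalation_rate() -> float:
    """Share of served requests that needed the frontier model."""
    served = route_counts["local"] + route_counts["frontier"]
    return route_counts["frontier"] / served if served else 0.0
```

In production the counters would go to your metrics system rather than a module-level `Counter`, but the escalation rate is the number to watch either way.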

Pattern B: Task decomposition with role‑specialized models

- Planner on a frontier model. Use the big system to plan steps, select tools, and break down goals. Enterprises trialing Microsoft’s unified agent framework can map this pattern directly.

- Executors on local models. Run summarization, extraction, classification, and retrieval with small open weights tuned to your domain.

- Human‑in‑the‑loop stops. Insert checkpoints for actions with irreversible consequences, such as sending customer emails or creating tickets.

- Caching. Cache partial results and prompts so you do not pay twice for repeated work.
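Pattern B reduces to a loop over planned steps. The sketch below assumes the frontier planner has already returned a list of (task, input) pairs; the executor registry, the approval callback, and the task names are all hypothetical. Irreversible actions always go through the human checkpoint and are never served from cache.

```python
from typing import Callable

Step = tuple[str, str]  # (task name, input text)


def run_plan(steps: list[Step],
             executors: dict[str, Callable[[str], str]],
             irreversible: set[str],
             approve: Callable[[str, str], bool],
             cache: dict[Step, str]) -> list[str]:
    """Execute planned steps on local executors with caching and approvals."""
    results = []
    for task, payload in steps:
        if task in irreversible:
            # Human-in-the-loop stop: run only with explicit approval.
            if approve(task, payload):
                results.append(executors[task](payload))
            else:
                results.append(f"{task}: skipped (not approved)")
            continue
        key = (task, payload)
        if key not in cache:  # pay for repeated work only once
            cache[key] = executors[task](payload)
        results.append(cache[key])
    return results
```

A plain dictionary stands in for the cache here; a shared store with expiry would be the natural replacement once more than one worker is involved.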

Both patterns are compatible with your existing security posture. Keep secrets and regulated data inside your network; let only anonymized or policy‑approved context flow to the frontier when you escalate.

What enterprises should do in the next 90 days

- Identify two high‑volume, low‑novelty workloads. Candidates include document classification, call summaries, invoice extraction, and internal search. These are prime for local open‑weight models.

- Build a small evaluation harness. Measure accuracy, latency, and total cost with one open‑weight baseline and your current frontier baseline. Use production data with privacy protections.

- Stand up a routing gateway. Introduce a confidence‑based escalation rule and measure how often you need the frontier model. This gives you your first‑order savings without quality loss.

- Decide your licensing stance. Coordinate legal, security, and procurement on acceptable licenses for open weights, redistribution rules, and where fine‑tuned derivatives can run.

- Prepare for on‑prem and edge. Inventory accelerators you already own. Evaluate quantization toolchains and compilers to reach your latency targets without blowing up memory.

What developers should do next

- Get comfortable with model surgery. Quantize, prune, and compile. The combination of 4‑bit quantization and operator fusion often provides the best early wins on general‑purpose accelerators.

- Treat prompt templates as code. Version them, test them, and couple them with your routing logic. When you deploy a new local model, your prompts will likely need a tune.

- Instrument with golden sets. Keep a small, known corpus to track regressions whenever you update weights or compilers.

- Automate fine‑tuning. Expect to train small adapters regularly with fresh examples. Build a pipeline that produces a signed model artifact with a clear changelog.
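A golden‑set check can be very small. This sketch assumes exact‑match scoring against known answers, which suits extraction and classification but not free‑form generation, and the 2‑point tolerance is an arbitrary placeholder. The model is any callable that maps a prompt to text.

```python
from typing import Callable


def golden_set_accuracy(model: Callable[[str], str],
                        golden: list[tuple[str, str]]) -> float:
    """Fraction of golden prompts whose output exactly matches the expected answer."""
    if not golden:
        raise ValueError("golden set is empty")
    correct = sum(1 for prompt, expected in golden
                  if model(prompt).strip() == expected)
    return correct / len(golden)


def has_regressed(new_accuracy: float,
                  baseline_accuracy: float,
                  tolerance: float = 0.02) -> bool:
    """Flag a regression when accuracy drops by more than the tolerance."""
    return new_accuracy < baseline_accuracy - tolerance
```

Wiring `has_regressed` into the pipeline as a gate means a new set of weights or a compiler upgrade cannot ship without clearing the same corpus the previous version cleared.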

For teams building local agents, see how to get started quickly in GPT‑OSS makes local agents real.

Risks and open questions

- How detailed are the capability criteria? The initial post does not specify thresholds, escalation paths, or reporting requirements for open‑weight releases. That means the first models and their licenses will set the precedent.

- How restrictive will licenses be? If redistribution or commercial use is tightly constrained, some teams may prefer other open‑weight families that have fewer strings attached.

- What about safety updates? When OpenAI updates safety layers, how will those propagate to local deployments? Expect versioned releases and signatures, but you will still need a plan to roll updates through change control.

- Will the best models stay closed? Almost certainly. The point of open weights is not to replace frontier systems but to put capable, efficient tools where they make the most sense.

The signal for the market

OpenAI’s move puts competitive pressure on everyone. Meta and Mistral already ship open‑weight families. Databricks has promoted open governance models for enterprise use. With OpenAI stepping in, open weights stop being a side hobby and become one leg of a mainstream stack. Cloud providers will double down on managed services that host open weights with the same policy controls as their closed cousins. Chip vendors will compete not just on peak tera‑operations but on sustained tokens per watt for the specific operator mix these models use.

For buyers, the message is simple. Treat models like a portfolio. Own what is predictable. Rent what is rare. And route between them based on measured value rather than brand loyalty.

A closing thought

Hybrid is not a compromise. It is an engineering pattern built around clarity. Put the right work on the right engine at the right time. OpenAI’s decision to allow open‑weight releases creates room for that clarity to become standard practice. Pair it with careful policy, a small routing gateway, and a habit of measuring what matters. You will ship faster, spend smarter, and keep your options open while the frontier keeps moving.