MCP Goes Enterprise: The Interop Layer For Real AI Agents

Enterprise-grade MCP servers, OAuth-bound access, structured outputs, and elicitation just turned agent demos into deployable systems. Here is a practical playbook, with the risks, metrics, and reference architectures you need to ship governed actions across ChatGPT, Claude, Gemini, and Amazon Q.

The week agents became a platform

Every platform era has a quiet moment that changes everything. For artificial intelligence agents, that moment is the move of the Model Context Protocol into the enterprise. The headlines may focus on shiny demos, but the real story is plumbing. This week brought enterprise MCP servers, new Microsoft 365 connectors for Claude, and cross‑vendor adoption that puts a common tool protocol within reach. For the first time, the major assistants can act on the same set of governed capabilities using a single, auditable contract.

If you lead engineering, security, or a line of business that depends on automation, this shift is practical, not theoretical. It means your finance bot, your sales copilot, and your developer assistant can all use the same catalog of tools with the same access rules, no matter which model you prefer. It means pilots that used to stall at the last mile now have a clear path to production.

What actually changed under the hood

The momentum is not about personalities or branding. It is about three boring but crucial ideas becoming real in enterprise tooling: OAuth‑bound access, structured outputs, and elicitation.

OAuth‑bound access

In the consumer phase of agents, a tool might connect to an email account or a document system with a long‑lived token hidden in a config file. That is unfit for enterprise life. OAuth‑bound access ties every tool call to a specific user or service identity, with scopes that map to your existing permissions. When an agent asks a calendar tool to schedule a meeting, the action occurs with the rights of the actual requester or a tightly scoped service principal. No more mystery tokens. Authorization is explicit, revocable, and auditable.

Two practical implications matter. First, least privilege finally sticks. You can issue a scope that only allows reading calendar free‑busy data without exposing the inbox. Second, incident response becomes routine. If a token is suspected of compromise, you revoke it or the single affected scope. The next attempt to call that tool fails fast and visibly, rather than silently working from a hidden cache.
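To make the scope model concrete, here is a minimal Python sketch of a per-call authorization check. It assumes a hypothetical in-memory token record and invented scope names such as `calendar.freebusy.read`; in practice the server would validate tokens issued by your identity provider rather than hold them in memory.

```python
from dataclasses import dataclass, field


class AuthError(Exception):
    """Raised when a call is not authorized, so the failure is immediate and visible."""


@dataclass
class Token:
    subject: str                                   # user or service principal
    scopes: set[str] = field(default_factory=set)
    revoked: bool = False


# Hypothetical mapping from tool name to the one scope it requires.
REQUIRED_SCOPE = {
    "get_free_busy": "calendar.freebusy.read",
    "read_inbox": "mail.read",
}


def call_tool(token: Token, tool: str) -> str:
    """Authorize on every call; there is no fallback to a cached credential."""
    if token.revoked:
        raise AuthError(f"token for {token.subject} has been revoked")
    needed = REQUIRED_SCOPE[tool]
    if needed not in token.scopes:
        raise AuthError(f"{token.subject} lacks scope {needed} for {tool}")
    return f"{tool} executed as {token.subject}"


alice = Token("alice@example.com", {"calendar.freebusy.read"})
print(call_tool(alice, "get_free_busy"))    # allowed: free-busy scope was issued
try:
    call_tool(alice, "read_inbox")          # denied: inbox scope was never issued
except AuthError as err:
    print("denied:", err)
alice.revoked = True                        # incident response: revoke the token
```

Because revocation is checked on every call, a revoked token fails on the very next attempt instead of lingering in a cache.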

Structured outputs

Agents do not become reliable by thinking harder. They become reliable by speaking in contracts. Structured outputs give tools a typed schema for inputs and outputs. Instead of asking for a free‑form paragraph describing an invoice, the agent asks a tool to return an object with fields such as vendor_name, total, currency, and due_date. The model still does the reasoning, but the last mile is a parseable structure. That structure plugs cleanly into the systems that matter, from your enterprise resource planning platform to your data warehouse.

You also gain versioning. When the schema changes, the server can advertise v2 with a new optional field. Agents that have not upgraded continue to use v1. Breaking changes become a planned rollout instead of a surprise.
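A rough sketch of the invoice contract as typed Python objects, using the field names above. The v2 field `po_number` is a hypothetical example of an optional addition, and the hand-rolled checks stand in for whatever JSON Schema validation your server actually uses.

```python
from dataclasses import dataclass
from datetime import date
from decimal import Decimal
from typing import Optional


@dataclass(frozen=True)
class InvoiceV1:
    vendor_name: str
    total: Decimal
    currency: str          # three-letter code, e.g. "USD"
    due_date: date


@dataclass(frozen=True)
class InvoiceV2(InvoiceV1):
    po_number: Optional[str] = None   # hypothetical optional field added in v2


def parse_invoice(raw: dict, version: int = 1) -> InvoiceV1:
    """Turn a tool's raw JSON-like result into a typed object, failing loudly on bad data."""
    if len(raw.get("currency", "")) != 3:
        raise ValueError("currency must be a three-letter code")
    common = dict(
        vendor_name=str(raw["vendor_name"]),
        total=Decimal(str(raw["total"])),      # avoid float rounding for money
        currency=raw["currency"].upper(),
        due_date=date.fromisoformat(raw["due_date"]),
    )
    if version >= 2:
        return InvoiceV2(**common, po_number=raw.get("po_number"))
    return InvoiceV1(**common)                 # v1 callers simply ignore unknown fields


raw = {"vendor_name": "Acme", "total": "120.50", "currency": "usd",
       "due_date": "2025-07-01", "po_number": "PO-7"}
v1 = parse_invoice(raw)               # agent that has not upgraded
v2 = parse_invoice(raw, version=2)    # upgraded agent sees the new field
```

Adding an optional field is what keeps v1 callers working: the same payload parses cleanly under both versions.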

Elicitation

Most failures in agent workflows happen because a required parameter is missing or ambiguous. Elicitation is the practice of asking for what is missing in a structured way. An MCP server can declare that a field like project_code is required and that it must match a known set of values. When the agent omits it or supplies an unknown value, the server sends back a structured request for that field, and the assistant asks the human a clear, targeted question. This closes the loop quickly and accurately. It also means your compliance team can encode what must be known before data or money moves.
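The sketch below shows the server side of that check for a hypothetical `log_time` tool with an invented set of project codes. For simplicity it returns the question as the tool result; in the MCP specification, elicitation is a request the server sends to the client during the call, so treat the dictionary keys here as illustrative rather than the wire format.

```python
from typing import Any

# Hypothetical set of valid project codes the server knows about.
KNOWN_PROJECT_CODES = {"FIN-001", "OPS-042", "ENG-310"}


def elicitation_request(field: str, allowed: set[str], message: str) -> dict[str, Any]:
    """Build a structured question: a human-readable message plus a schema for the answer."""
    return {
        "type": "elicitation",
        "message": message,
        "requestedSchema": {
            "type": "object",
            "properties": {field: {"type": "string", "enum": sorted(allowed)}},
            "required": [field],
        },
    }


def log_time(args: dict[str, Any]) -> dict[str, Any]:
    """Hypothetical tool that refuses to act until project_code is present and valid."""
    code = args.get("project_code")
    if code is None:
        return elicitation_request("project_code", KNOWN_PROJECT_CODES,
                                   "Which project should these hours be billed to?")
    if code not in KNOWN_PROJECT_CODES:
        return elicitation_request("project_code", KNOWN_PROJECT_CODES,
                                   f"'{code}' is not a known project code. Please pick one.")
    return {"type": "result", "logged": args["hours"], "project_code": code}
```

The enum in the requested schema is what lets the assistant offer the human a short pick list instead of an open-ended question.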

Multi‑tenant isolation and durability

Enterprise servers must treat tenants as boundaries. MCP servers can run with separate storage, separate credentials, and separate rate limits per tenant. They can also honor idempotency keys so that retried actions do not duplicate orders or messages. These are old ideas from service engineering applied to agent tools.
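A small sketch of tenant-scoped idempotency, with in-memory dictionaries standing in for separate per-tenant stores. The class and method names are hypothetical.

```python
import uuid
from collections import defaultdict


class TenantIsolatedOrders:
    """Per-tenant order storage plus idempotency keys, so a retried call never double-posts."""

    def __init__(self) -> None:
        self._orders: dict[str, list[dict]] = defaultdict(list)      # tenant -> orders
        self._seen: dict[str, dict[str, dict]] = defaultdict(dict)   # tenant -> key -> result

    def create_order(self, tenant: str, idempotency_key: str, item: str, qty: int) -> dict:
        # A replayed key returns the original result instead of creating a second order.
        if idempotency_key in self._seen[tenant]:
            return self._seen[tenant][idempotency_key]
        order = {"order_id": str(uuid.uuid4()), "item": item, "qty": qty}
        self._orders[tenant].append(order)
        self._seen[tenant][idempotency_key] = order
        return order

    def orders(self, tenant: str) -> list[dict]:
        return list(self._orders[tenant])


svc = TenantIsolatedOrders()
first = svc.create_order("acme", "key-123", "laptop", 2)
retry = svc.create_order("acme", "key-123", "laptop", 2)    # network retry, same key
other = svc.create_order("globex", "key-123", "laptop", 2)  # same key, different tenant
```

Scoping keys per tenant is a deliberate choice: two tenants can reuse the same key string without ever seeing each other's orders.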

Observability and audit

Every call becomes a traceable event: who asked, what tool, what scope, what arguments, what result, and how long it took. This is not for curiosity. It is for safety and performance. With traces, you can measure task success rates, spot noisy tools, and prove that a sensitive action was approved by the right role.
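One way to capture those fields is a wrapper that emits a JSON trace line per call, whether the call succeeds or fails. This is a sketch with a plain list as the sink and hypothetical tool and scope names; a real deployment would ship events to its logging or tracing pipeline. It records the outcome rather than the full result payload, which keeps sensitive data out of the trace.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Any, Callable


@dataclass
class TraceEvent:
    who: str                      # caller identity from the token
    tool: str
    scope: str
    arguments: dict[str, Any]
    outcome: str                  # "ok" or the exception class name
    duration_ms: float


def traced(who: str, tool: str, scope: str, fn: Callable[..., Any],
           sink: list[str], **arguments: Any) -> Any:
    """Run one tool call and append a JSON trace line, on success or failure."""
    start = time.perf_counter()
    outcome = "ok"
    try:
        return fn(**arguments)
    except Exception as exc:
        outcome = type(exc).__name__
        raise
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        event = TraceEvent(who, tool, scope, arguments, outcome, round(elapsed_ms, 3))
        sink.append(json.dumps(asdict(event)))


audit_log: list[str] = []
result = traced("alice@example.com", "schedule_meeting", "calendar.write",
                lambda **kw: "booked", audit_log, when="2025-07-01T10:00")
```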

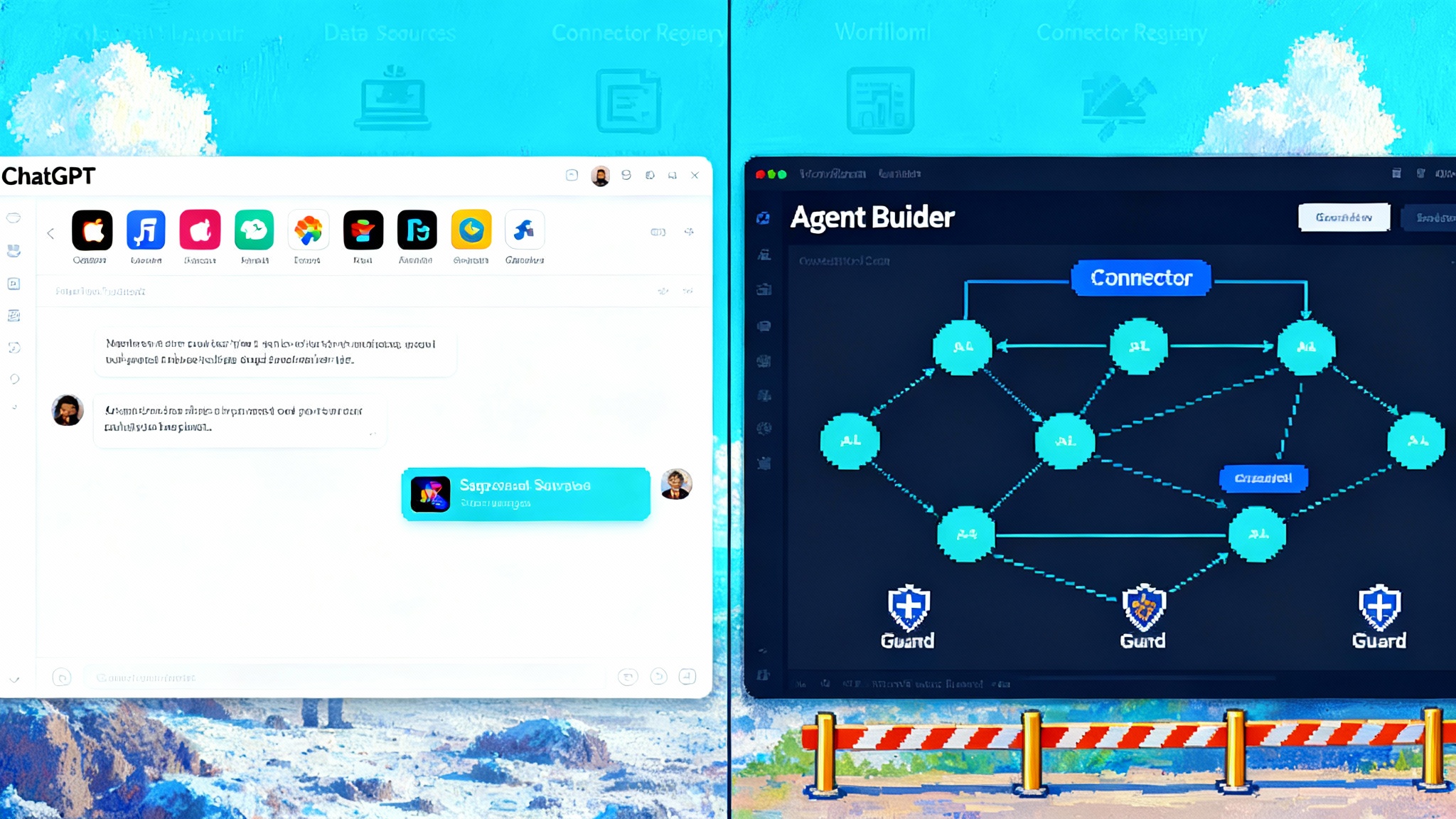

Why this unlocks governed, reliable action taking across stacks

Mixed‑model environments are the norm, not the exception. A support team may prefer ChatGPT for summarization because of its training and latency. A compliance team may use Claude for longer reasoning on policy text. A product team may rely on Gemini for tight integration with document and media understanding. A developer platform may integrate Amazon Q inside the integrated development environment. The problem has been tool fragmentation. Each assistant had a different connector story and a different way to express actions.

With MCP servers at the center, the assistants do not need to agree on models. They only need to agree on how tools are described, called, and governed. The MCP server becomes the switchboard. It advertises a catalog of capabilities, receives structured calls with explicit scopes, and returns structured results. Because the contract is common, you get consistency without forcing your organization onto a single assistant or vendor. Security sees one set of logs. Engineering maintains one set of adapters. Business users get faster iteration.

For deeper context on governance and skills, see how Claude skills as governed building blocks fit this model. For UI‑level action surfaces, compare how Gemini turns the web into action. And for OpenAI’s ecosystem shift, revisit the AgentKit and ChatGPT apps platform moment.

An everyday example: expense approvals across systems

Imagine a mid‑sized company with Workday for human resources, NetSuite for finance, ServiceNow for tickets, and Microsoft 365 for documents and email. Before this week, you might build four separate agent integrations, each with bespoke connectors that behave slightly differently.

With enterprise MCP servers and connectors, you stand up a finance_tools server that exposes two capabilities: submit_expense and approve_expense. Each capability declares an input schema and an output schema. OAuth scopes tie the calls to either an employee’s identity or a finance service role. Elicitation ensures that missing cost center codes prompt a clarifying question. A ChatGPT‑driven assistant can call submit_expense when an employee drops a receipt into a Teams channel. A Claude‑driven assistant can call approve_expense when it identifies a manager’s approval email. Gemini can extract the fields from a receipt image so that submit_expense receives structured input. Amazon Q can surface the status returned by those calls inside the developer portal. All four work through the same two capabilities with the same permission model. The finance team audits a single set of traces.
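Here is how the two capabilities might be declared, sketched as Python dictionaries shaped like MCP tool descriptors (`name`, `description`, `inputSchema`, `outputSchema`). The `required_scope` key, the scope strings, and the specific fields are assumptions for illustration, not part of the protocol.

```python
from typing import Any

EXPENSE_RESULT = {
    "type": "object",
    "properties": {"expense_id": {"type": "string"}, "status": {"type": "string"}},
    "required": ["expense_id", "status"],
}

# Hypothetical declarations for the finance_tools server.
FINANCE_TOOLS: list[dict[str, Any]] = [
    {
        "name": "submit_expense",
        "description": "Submit an expense on behalf of the calling employee.",
        "required_scope": "expense.submit",          # our extension, not an MCP field
        "inputSchema": {
            "type": "object",
            "properties": {
                "amount": {"type": "number"},
                "currency": {"type": "string"},
                "cost_center": {"type": "string"},
                "receipt_id": {"type": "string"},
            },
            "required": ["amount", "currency", "cost_center"],
        },
        "outputSchema": EXPENSE_RESULT,
    },
    {
        "name": "approve_expense",
        "description": "Approve a submitted expense as a finance approver.",
        "required_scope": "expense.approve",
        "inputSchema": {
            "type": "object",
            "properties": {"expense_id": {"type": "string"}, "comment": {"type": "string"}},
            "required": ["expense_id"],
        },
        "outputSchema": EXPENSE_RESULT,
    },
]


def missing_fields(tool_name: str, args: dict[str, Any]) -> list[str]:
    """Return required input fields the caller left out (candidates for elicitation)."""
    tool = next(t for t in FINANCE_TOOLS if t["name"] == tool_name)
    return [f for f in tool["inputSchema"]["required"] if f not in args]
```

A missing `cost_center` is exactly the gap that elicitation turns into a clarifying question, no matter which assistant made the call.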

The payoff is not novelty. It is repeatability. Once these two capabilities are stable, the next use cases add marginal effort, not a new integration project.

The interop layer in one metaphor

Think of MCP as industrial power sockets for actions. For years, we had a bundle of adapters and proprietary plugs. Some fit loosely. Some sparked. Now the walls have the same sockets throughout the enterprise. You can buy different appliances from different vendors. They all plug in, and the circuit breaker knows exactly what is drawing power, how much, and whether it is allowed.

A near‑term playbook for the next 90 days

You do not need a moonshot program to benefit. You need four moves that build on each other.

1) Stand up an internal MCP catalog

- Start with discovery. List the ten most common actions knowledge workers perform that cross system boundaries. Examples include create support case, schedule meeting, onboard user, request access, and generate quote.

- For each action, define a tool capability with a JSON Schema for its input and output. Keep schemas small and pragmatic. It is better to ship five tight capabilities than one monster tool.

- Host them in a single enterprise MCP server or a small set of domain servers. Put the catalog behind your existing identity provider. Expose metadata such as owner, version, required scopes, and sample calls.

- Add canary and staging environments. The catalog should support promotion gates that mirror your software delivery lifecycle.
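A catalog entry with the metadata above and a simple promotion gate might look like the following sketch. The stage order (staging, then canary, then production), the gate rules, and the example owner and scope names are assumptions; mirror whatever your delivery pipeline already enforces.

```python
from dataclasses import dataclass, field

STAGES = ["staging", "canary", "production"]   # assumed promotion order


@dataclass
class CatalogEntry:
    name: str
    owner: str
    version: str
    required_scopes: list[str]
    sample_calls: list[dict] = field(default_factory=list)
    stage: str = "staging"


def promote(entry: CatalogEntry, checks_passed: bool) -> CatalogEntry:
    """Move an entry one stage forward only if its gate checks passed."""
    if not entry.sample_calls:
        raise ValueError(f"{entry.name}: add at least one sample call before promotion")
    if not checks_passed:
        raise ValueError(f"{entry.name}: gate checks failed in {entry.stage}")
    idx = STAGES.index(entry.stage)
    if idx < len(STAGES) - 1:                  # production is the last stage
        entry.stage = STAGES[idx + 1]
    return entry


entry = CatalogEntry(
    name="create_support_case",
    owner="support-platform-team",
    version="1.2.0",
    required_scopes=["support.case.write"],
    sample_calls=[{"subject": "VPN down", "priority": "high"}],
)
promote(entry, checks_passed=True)   # staging -> canary
```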

2) Map role‑based access control and audit

- Do a one‑time mapping from roles in your identity system to MCP scopes. For example, Sales_Rep maps to read account and create quote. Finance_Approver maps to approve expense. Avoid broad wildcards.

- Enforce token binding. Every call must carry a token tied to a human or service role. Reject calls that carry unscoped or long‑lived tokens.

- Turn on full request and response logging with privacy controls. Mask fields such as Social Security numbers and bank account numbers in logs. Keep raw payloads for a short retention window and derived metrics longer.

- Build three standard reports: actions by role, actions by tool, and failures by reason. These become your weekly operating rhythm for agent reliability.
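The role mapping and log masking can both be small, reviewable pieces of code. In this sketch the role names come from the text, while the scope strings and sensitive field names are hypothetical.

```python
from typing import Any

# One-time role-to-scope mapping; scope strings are illustrative.
ROLE_SCOPES: dict[str, set[str]] = {
    "Sales_Rep": {"account.read", "quote.create"},
    "Finance_Approver": {"expense.approve"},
}

SENSITIVE_KEYS = {"ssn", "bank_account"}       # assumed field names to mask


def scopes_for(roles: list[str]) -> set[str]:
    """Union of explicit scopes for the caller's roles; wildcard grants are refused."""
    scopes: set[str] = set()
    for role in roles:
        granted = ROLE_SCOPES.get(role, set())
        if any("*" in s for s in granted):
            raise ValueError(f"role {role} maps to a wildcard scope")
        scopes |= granted
    return scopes


def mask_for_log(payload: dict[str, Any]) -> dict[str, Any]:
    """Copy a payload for logging, keeping at most the last 4 characters of sensitive values."""
    masked: dict[str, Any] = {}
    for key, value in payload.items():
        if key in SENSITIVE_KEYS and isinstance(value, str):
            masked[key] = ("*" * (len(value) - 4) + value[-4:]) if len(value) > 4 else "****"
        else:
            masked[key] = value
    return masked
```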

3) Wrap legacy systems as MCP servers

- Create a facade in front of systems that lack modern APIs. For example, a terminal‑bound mainframe order system can sit behind a small proxy that translates a create_order call into the sequence of keystrokes that the mainframe expects.

- Add idempotency keys and deduplication. Legacy systems often double‑post when network retries happen. The facade should reject duplicates based on a client‑supplied key.

- Capture domain errors and return structured failure types. Agents recover faster when they see explicit errors such as permission_denied or validation_failed instead of a generic stack trace.

- Where possible, add a read_only mirror for queries and keep write calls narrow. This lowers risk while giving agents useful context.
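A facade can translate raw legacy responses into the structured failure types described above. The raw status strings here are invented, as is the `upstream_unavailable` code; a real facade would map whatever the legacy screen actually returns.

```python
from dataclasses import dataclass


@dataclass
class ToolError:
    code: str            # e.g. "permission_denied", "validation_failed"
    message: str
    retryable: bool      # tells the agent whether retrying can help


def translate_legacy_error(raw: str) -> ToolError:
    """Map a legacy system's raw status text to a structured error the agent can act on."""
    text = raw.upper()
    if "NOT AUTHORIZED" in text:
        return ToolError("permission_denied", "Caller lacks rights for this order type.", False)
    if "INVALID" in text or "REQUIRED" in text:
        return ToolError("validation_failed", f"Legacy system rejected input: {raw.strip()}", False)
    if "TIMEOUT" in text or "SESSION LOST" in text:
        return ToolError("upstream_unavailable",
                         "Legacy system did not respond; retry with the same idempotency key.",
                         True)
    return ToolError("unknown_error", raw.strip(), False)
```

The `retryable` flag pairs naturally with idempotency keys: a retry is only safe to suggest when the facade can deduplicate it.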

4) Pilot network‑aware routing for production‑grade agents

- Treat tools like services. Put them behind discovery, health checks, and circuit breakers. When a tool is slow or down, route to a replica or fail gracefully.

- Introduce policy‑based routing by data class. For example, route anything that touches customer identifiers through a high‑assurance path with stricter logging and lower rate limits.

- Add per‑vendor concurrency controls. If one assistant tends to burst requests, throttle at the MCP layer rather than hoping the model behaves.

- Test with synthetic traffic. Spin up a script that calls each capability with good and bad inputs every minute. Use this to catch drift before users do.
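For the circuit-breaker bullet, a minimal breaker is enough to stop agents from hammering a sick tool. This is a generic sketch, not tied to any MCP SDK; the thresholds are arbitrary and the clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpen(Exception):
    """Raised instead of calling a tool whose breaker is open."""


class CircuitBreaker:
    """Opens after max_failures consecutive failures; allows a trial call after reset_after seconds."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock                       # injectable so tests can control time
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], Any]) -> Any:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                # Fail fast; the caller can route to a replica or degrade gracefully.
                raise CircuitOpen("tool is marked unhealthy")
            self.opened_at = None                # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()    # open (or re-open) the breaker
            raise
        self.failures = 0                        # a success closes the breaker fully
        return result
```

When `CircuitOpen` is raised, the MCP layer can route the call to a replica or return a readable failure instead of letting the agent wait on a timeout.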

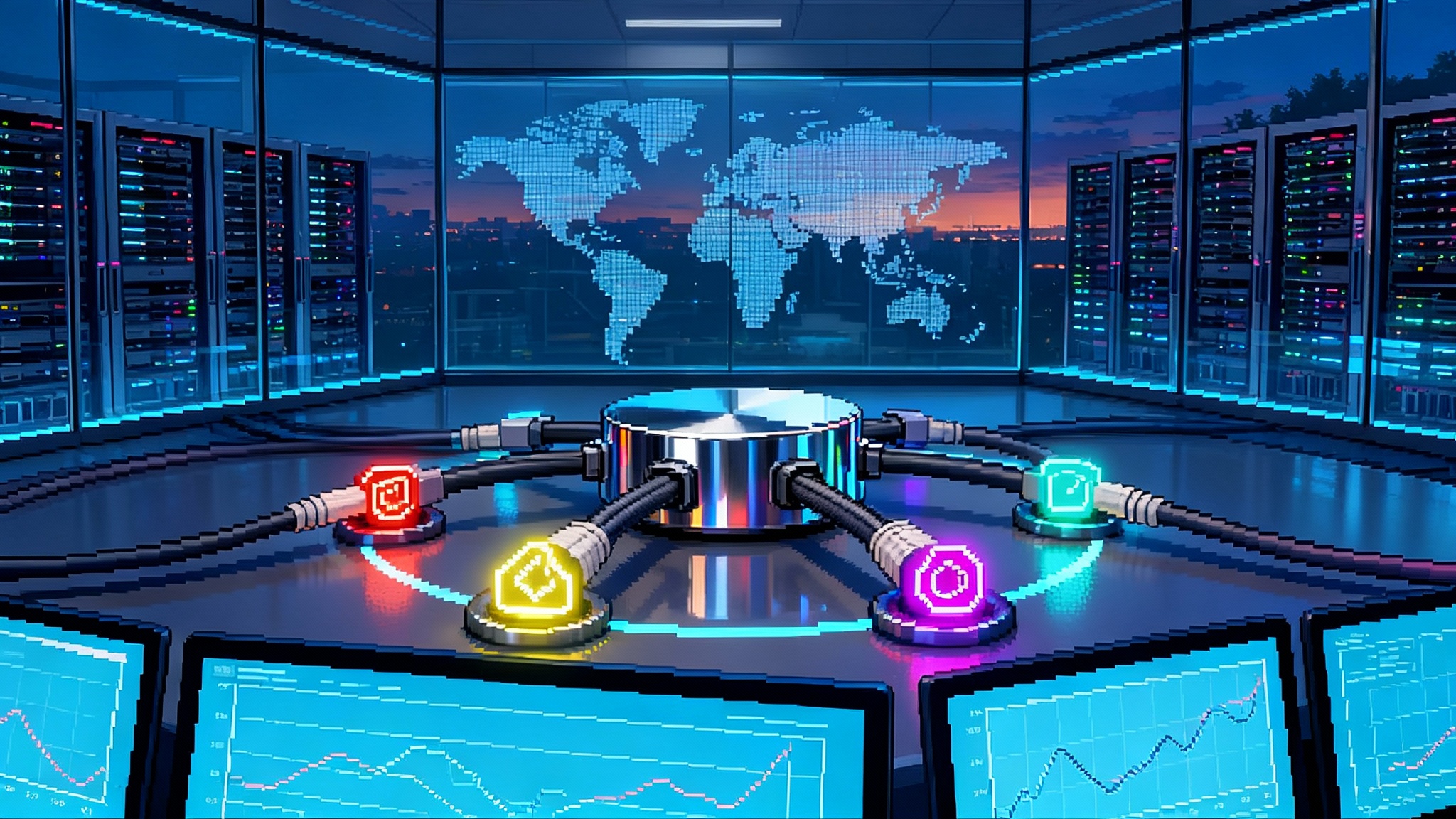

Reference architectures you can adopt

- Hub and spoke. A central MCP hub exposes shared capabilities such as identity, messaging, and files. Domain‑specific servers handle finance, support, and sales. Assistants discover tools by querying the hub. Scopes flow from a single identity provider.

- Sidecar model. A development team adds an MCP sidecar to their service. The sidecar exposes curated actions such as create_feature_flag or open_rollout. It handles OAuth and logging. The team keeps control of the code and deploy cadence.

- Federated catalog. Larger firms let each business unit run its own MCP server but register capabilities into a global directory with shared schema rules and linting. Agents pull the directory and decide at runtime which server to call based on latency, tenant, or data class.
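Runtime selection in a federated catalog can be as simple as filtering by tenant and data class, then picking the lowest observed latency. The server names, tenants, data classes, and latency figures below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerEntry:
    name: str                        # hypothetical server name in the global directory
    tenants: frozenset[str]
    data_classes: frozenset[str]
    p95_latency_ms: float            # refreshed from observed traces


def pick_server(directory: dict[str, list[ServerEntry]], capability: str,
                tenant: str, data_class: str) -> ServerEntry:
    """Choose the fastest registered server that serves this tenant and data class."""
    candidates = [s for s in directory.get(capability, [])
                  if tenant in s.tenants and data_class in s.data_classes]
    if not candidates:
        raise LookupError(f"no server registered for {capability}/{tenant}/{data_class}")
    return min(candidates, key=lambda s: s.p95_latency_ms)


DIRECTORY = {
    "create_quote": [
        ServerEntry("sales-eu", frozenset({"eu"}), frozenset({"internal", "customer_pii"}), 180.0),
        ServerEntry("sales-global", frozenset({"eu", "us"}), frozenset({"internal"}), 90.0),
    ],
}
```

Filtering before ranking matters: latency only breaks ties among servers that are already allowed to handle the request.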

Risks and how to mitigate them

- Shadow tools. Teams will be tempted to publish tools directly from notebooks. Require registration in the catalog and a minimal review checklist. Block unknown endpoints at the edge.

- Prompt injection by tools. A tool response that contains crafted language can steer a model into unintended actions. Mitigate by allowlisting which function calls may follow a tool response and by stripping instruction‑like text from tool output before it reaches the model.

- Data leakage through overbroad scopes. Use preflight checks. Before a call runs, the server checks the token’s scopes against the requested resource and blocks the call if it exceeds policy. Monitor scope usage and rotate credentials on a schedule.

- Vendor drift. As assistants evolve, they may add optional fields or new call patterns. Stick to the MCP core and validate incoming calls tightly. Provide shims in the server that adapt new patterns into your stable schema.

- Rate and cost blowups. Place budgets and per‑action cost alerts in the MCP layer. Fail fast when budgets are hit and provide readable error messages to agents and humans.
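A budget guard at the MCP layer might look like this sketch. The action names, budget amounts, and 80 percent alert threshold are assumptions.

```python
from collections import defaultdict


class BudgetExceeded(Exception):
    """Raised before a call runs if it would push an action over budget."""


class BudgetGuard:
    """Per-action spend tracking with an early alert and a hard stop."""

    def __init__(self, budgets: dict[str, float], alert_ratio: float = 0.8) -> None:
        self.budgets = budgets                   # action -> budget for the current period
        self.alert_ratio = alert_ratio           # alert once spend crosses this fraction
        self.spent: dict[str, float] = defaultdict(float)
        self.alerts: list[str] = []

    def charge(self, action: str, cost: float) -> None:
        limit = self.budgets[action]
        if self.spent[action] + cost > limit:
            # Fail fast with a message both agents and humans can read.
            raise BudgetExceeded(
                f"{action}: budget of {limit:.2f} reached; call blocked until the "
                "next period or until an owner raises the limit")
        self.spent[action] += cost
        if self.spent[action] >= self.alert_ratio * limit:
            self.alerts.append(f"{action} at {self.spent[action] / limit:.0%} of budget")
```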

What to measure to prove it works

- Task success rate by capability. Are actions succeeding end to end without a human cleaning up after the agent?

- First‑time parameter completeness. How often does the agent supply all required fields without elicitation?

- Elicitation resolution time. When a tool prompts for missing data, how long does it take to resolve and with what outcome?

- Latency by tool and assistant. Track p50 and p95 to spot outliers in real workloads.

- Incident count by failure type. Permission issues, schema validation, and tool downtime should trend down as the catalog matures.

- Human trust signals. Measure opt‑in usage by teams and repeat runs of the same capability. Trust follows reliability.
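Most of these metrics fall out of the trace records directly. The sketch below computes success rate, first-time parameter completeness, and nearest-rank p50 and p95 latency per capability; the record fields are assumed, and grouping by assistant works the same way with a different key.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    capability: str
    assistant: str
    succeeded: bool
    needed_elicitation: bool
    latency_ms: float


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of the data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records: list[CallRecord]) -> dict[str, dict[str, float]]:
    by_cap: dict[str, list[CallRecord]] = defaultdict(list)
    for r in records:
        by_cap[r.capability].append(r)
    report: dict[str, dict[str, float]] = {}
    for cap, rs in by_cap.items():
        latencies = [r.latency_ms for r in rs]
        report[cap] = {
            "success_rate": sum(r.succeeded for r in rs) / len(rs),
            "first_time_complete": sum(not r.needed_elicitation for r in rs) / len(rs),
            "p50_ms": percentile(latencies, 50),
            "p95_ms": percentile(latencies, 95),
        }
    return report
```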

Five design patterns to standardize now

- Token per action. Each sensitive action uses a short‑lived token minted for that call with exact scopes. It expires seconds after use.

- Schemas as code. Model input and output schemas live in the same repository as the tool implementation. Changes go through code review and continuous integration checks.

- Policy as code. Authorization rules are written in a policy language and versioned. The server evaluates policies at call time with context like user role and data class.

- Tool stubs for tests. Provide a stub of each tool that returns known results offline. This lets teams run agent regression tests without calling live systems.

- Catalog gating. New capabilities land in a beta lane with lower rate limits and tighter scopes. Graduating to general availability requires meeting stability and audit criteria.
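To illustrate the first pattern, token per action, here is a sketch of minting and verifying a short-lived, action-bound token with HMAC from the Python standard library. It is deliberately not a standard format such as a JWT; in production your identity provider would issue the token, and the placeholder signing key would come from a secret manager.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

SIGNING_KEY = b"replace-with-a-managed-secret"   # placeholder, never hard-code in production


def mint_action_token(subject: str, action: str, scopes: list[str],
                      ttl_s: int = 30, now: Optional[float] = None) -> str:
    """Mint a token bound to one action and exact scopes, expiring ttl_s seconds after minting."""
    issued = time.time() if now is None else now
    claims = {"sub": subject, "act": action, "scp": sorted(scopes), "exp": issued + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    sig = hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()
    return body.decode() + "." + sig


def verify_action_token(token: str, action: str, needed_scope: str,
                        now: Optional[float] = None) -> dict:
    """Check signature, expiry, action binding, and scope; raise PermissionError on any failure."""
    body, _, sig = token.rpartition(".")
    expected = hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        raise PermissionError("bad signature")
    claims = json.loads(base64.urlsafe_b64decode(body.encode()))
    if (time.time() if now is None else now) >= claims["exp"]:
        raise PermissionError("token expired")
    if claims["act"] != action or needed_scope not in claims["scp"]:
        raise PermissionError("token not valid for this action or scope")
    return claims
```

Expiry here counts from minting rather than from use, which keeps verification stateless; enforcing single use would need a server-side record of spent tokens.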

Where this goes next

As the protocol hardens, expect your vendors to ship MCP servers as first‑class products. Your customer relationship management, enterprise resource planning, ticketing, and developer tools will expose a neat row of capabilities with schemas and scopes you can adopt rather than invent. Internal teams will publish their own, and the catalog will start to look like a service mesh for actions rather than a grab bag of scripts.

Procurement will care because switching costs go down. Security will care because audits get easier. Product teams will care because they can swap models without redoing integrations. The long‑term effect is a layer of action interop that feels as boring and solid as a database driver.

The conclusion

The agent story used to be split between clever reasoning and fragile execution. With enterprise MCP servers, OAuth‑bound access, structured outputs, and elicitation, the execution layer is finally catching up. Assistants like ChatGPT, Claude, Gemini, and Amazon Q can now speak a shared language for action with clear permissions and logs. If you want results in this quarter, not next year, build a small catalog, wire it to roles and audit, wrap the few legacy systems that block you, and route calls like a modern service. The rest of the magic follows. Agents stop being demos. They start being part of how your company gets work done.