Gemini in Chrome Turns the Web Into an Action Surface

Google is weaving Gemini into Chrome so your browser can plan, stage, and execute tasks with your permission. Here is what agentic browsing changes for users, developers, and marketers—and how to prepare now.

The moment the browser became a launchpad

A small switch flipped for U.S. users in September and October 2025: Google began placing Gemini directly inside Chrome and previewed Project Mariner, also called Agent Mode in some demos. The pitch is simple, and the implications are large. The browser is no longer only a place to read and click. It becomes an action surface that can plan a task across multiple tabs, collect what it needs, and ask for permission before the next step. In other words, the browser is positioning itself as the default runtime for artificial intelligence agents. Google describes the rollout as careful, but the shift is immediate: you ask the browser to do something, not just find something. The feature arrived with guardrails and with more agentic browsing on deck, as described in an official Chrome update on Gemini.

For how this trend is reaching operating systems, see Microsoft's approach in Windows turns into the agent OS.

From autocomplete to autonomous steps

For years, browsers were largely passive. Autocomplete helped you type faster, password managers stored credentials, and extensions automated a few clicks. Agentic browsing is different: it adds a planning loop that breaks a goal into subgoals and a stepper that carries them out one at a time.

The core ideas are straightforward:

- Understand the page and the goal. Parse the Document Object Model (DOM) and interpret the user’s intent.

- Plan multi‑page sequences. Break the goal into steps that may span multiple tabs.

- Execute with consent. Perform only the steps the user approves, with site‑scoped permissions.

- Explain and roll back. Record what happened so a human can review or undo.

In practice, this looks like a short checklist in the Chrome sidebar that updates as the browser works. The agent proposes, you approve, and each approval grants temporary capabilities that expire when the task finishes or the site changes.
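The propose, approve, execute, and roll back loop can be sketched in a few lines. This is a minimal illustration, not how Chrome or Gemini implement it: `Step`, `Trace`, `run_plan`, and `roll_back` are hypothetical names, and a toy shopping cart stands in for real pages. A declined step stops the plan and returns control to the user, and a step budget keeps the plan from running unbounded.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    description: str
    do: Callable[[], str]      # performs the action and returns a short result
    undo: Callable[[], None]   # reverses the action during rollback


@dataclass
class Trace:
    entries: list[str] = field(default_factory=list)

    def log(self, message: str) -> None:
        self.entries.append(message)


def run_plan(steps: list[Step], approve: Callable[[Step], bool],
             trace: Trace, max_steps: int = 10) -> list[Step]:
    """Run steps in order, asking for approval; stop on refusal or budget."""
    completed: list[Step] = []
    for i, step in enumerate(steps):
        if i >= max_steps:
            trace.log("stopped: step budget exhausted")
            break
        if not approve(step):
            trace.log(f"stopped: user declined '{step.description}'")
            break
        result = step.do()
        trace.log(f"done: {step.description} -> {result}")
        completed.append(step)
    return completed


def roll_back(completed: list[Step], trace: Trace) -> None:
    """Undo completed steps in reverse order so a human can reverse the task."""
    for step in reversed(completed):
        step.undo()
        trace.log(f"undone: {step.description}")


# Usage with a toy cart standing in for a real page.
cart: list[str] = []


def add(item: str) -> Callable[[], str]:
    def do() -> str:
        cart.append(item)
        return f"cart has {len(cart)} item(s)"
    return do


def remove(item: str) -> Callable[[], None]:
    return lambda: cart.remove(item)


steps = [
    Step("add drill to cart", add("drill"), remove("drill")),
    Step("apply coupon", add("SAVE10"), remove("SAVE10")),
    Step("submit order", lambda: "order placed", lambda: None),
]
trace = Trace()
completed = run_plan(steps, approve=lambda s: s.description != "submit order", trace=trace)
roll_back(completed, trace)
```

The final step, submitting the order, has a no-op undo. Irreversible actions like a purchase are exactly the ones that need explicit approval, because rollback cannot reach them.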

What it can do today: real tasks, end to end

Concrete tasks matter more than demos. Here are three that already feel natural in an agentic browser.

- Book a trip with fewer context switches. Say, “Find a nonstop flight from Boston to Austin next Friday, then book the cheapest flight with a carry-on bag, and put the receipt in my travel folder.” The agent opens airline sites and a comparison page, checks luggage policies, surfaces loyalty benefits, and highlights the total cost after fees. Before it fills forms, it asks to use your stored identity and payment information. You supervise the sequence instead of juggling tabs.

- Shop like a power buyer. Say, “I need a cordless drill under 200 dollars, brushless motor, and a warranty over two years.” The agent visits manufacturers and retailers, reads specification tables and warranty pages, and outputs a side‑by‑side matrix. When you approve a purchase, it requests a site‑scoped permission to fill your address, apply a coupon, and complete checkout.

- Run a multi‑tab workflow for work. Say, “Pull the latest three vendor proposals from our portal, extract the pricing sections, and draft a comparison memo.” The agent downloads files, pulls quotes, and assembles a summary with a clickable trace that shows which lines came from which document.

These tasks are a direct consequence of Chrome integrating a planner, a page-aware executor, and a permissions layer that behaves more like a mobile operating system's than a traditional desktop browser's.
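The drill request above is, at its core, a constraint filter over facts the agent has extracted from spec tables and warranty pages. A minimal sketch, assuming those facts have already been pulled into structured records; the sellers, models, and prices are made up for illustration, and `Offer`, `meets_goal`, and `comparison_matrix` are hypothetical names:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    seller: str
    model: str
    price_usd: float
    brushless: bool
    warranty_years: int


def meets_goal(offer: Offer) -> bool:
    # "under 200 dollars, brushless motor, and a warranty over two years"
    return offer.price_usd < 200 and offer.brushless and offer.warranty_years > 2


def comparison_matrix(offers: list[Offer]) -> tuple[list[Offer], str]:
    """Keep offers that satisfy every constraint, cheapest first, as a text table."""
    rows = sorted((o for o in offers if meets_goal(o)), key=lambda o: o.price_usd)
    lines = [f"{'Seller':<8} {'Model':<9} {'Price':>8} {'Warranty':>9}"]
    for o in rows:
        lines.append(f"{o.seller:<8} {o.model:<9} {o.price_usd:>8.2f} {o.warranty_years:>8}y")
    return rows, "\n".join(lines)


# Hypothetical facts an agent might extract from retailer and manufacturer pages.
offers = [
    Offer("Store A", "Drill X1", 179.00, True, 3),
    Offer("Store B", "Drill X1", 189.00, True, 3),
    Offer("Store C", "Drill Y2", 149.00, False, 5),
    Offer("Store D", "Drill Z3", 219.00, True, 5),
    Offer("Store E", "Drill W4", 159.00, True, 2),
]
rows, table = comparison_matrix(offers)
print(table)
```

Store E drops out because a two-year warranty is not over two years. Boundary conditions like this are where an agent has to read the user's words literally.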

Under the hood: a runtime inside your browser

Why is the browser the right place for agents to live? Two reasons. First, the browser already knows how to render and interact with the modern web. Second, it can enforce a security model that ties agent capabilities to the same-origin policy, which isolates content by scheme, host, and port.

An agentic browser needs four building blocks:

- Planner. A language model that translates goals into steps. Gemini plays this role in Chrome.

- Page understanding. A module that sees forms, tables, and scripts and maps them to intents like “select seat” or “apply promo.”

- Tool layer. Safe actions such as click, fill, submit, navigate, and read, restricted by origin and permission state.

- Explanation system. A trace that records actions in ways humans can understand.
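To make the page-understanding block concrete, here is a minimal sketch that maps form fields to intents using Python's standard `html.parser`. It leans on the HTML `autocomplete` attribute (tokens such as `street-address`, `postal-code`, and `cc-number` are defined in the HTML standard) and falls back to hypothetical name-based hints for fields like a promo code. The intent strings and the `FormMapper` class are assumptions; production systems would also read labels, layout, and accessibility data.

```python
from html.parser import HTMLParser

# Autocomplete field-name tokens (from the HTML standard) mapped to illustrative intents.
AUTOCOMPLETE_INTENTS = {
    "name": "fill name",
    "street-address": "fill address",
    "postal-code": "fill address",
    "email": "fill contact",
    "cc-number": "fill payment",
    "cc-exp": "fill payment",
}
# Hypothetical fallback hints matched against the field's name attribute.
NAME_HINTS = {"promo": "apply promo", "coupon": "apply promo", "seat": "select seat"}


class FormMapper(HTMLParser):
    """Collect (field, intent) pairs from input, select, and textarea elements."""

    def __init__(self) -> None:
        super().__init__()
        self.fields: list[tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        a = {k: v or "" for k, v in attrs}  # boolean attributes arrive with value None
        if tag == "input" and a.get("type") == "submit":
            self.fields.append((a.get("value") or "submit", "submit form"))
            return
        if tag not in ("input", "select", "textarea"):
            return
        # The field-name token comes last, e.g. "shipping street-address".
        tokens = a.get("autocomplete", "").split()
        intent = next((AUTOCOMPLETE_INTENTS[t] for t in reversed(tokens)
                       if t in AUTOCOMPLETE_INTENTS), None)
        name = a.get("name", "")
        if intent is None:
            intent = next((v for k, v in NAME_HINTS.items() if k in name.lower()), "unknown")
        self.fields.append((name or "?", intent))


page = """
<form action="/checkout">
  <input name="ship-name" autocomplete="shipping name">
  <input name="addr1" autocomplete="shipping street-address">
  <input name="zip" autocomplete="shipping postal-code">
  <input name="card" autocomplete="cc-number">
  <input name="promoCode">
  <input type="submit" value="Place order">
</form>
"""
mapper = FormMapper()
mapper.feed(page)
for field_name, intent in mapper.fields:
    print(f"{field_name:<12} -> {intent}")
```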

For a governed approach to capabilities and safety rails, see governed building blocks for agents.

Project Mariner layers these elements behind a new interaction model. When an agent wants to do something meaningful, you see a clear request, like “Allow one‑time address fill on example.com.” If you approve, Chrome issues a short‑lived capability for that domain. This borrows patterns from mobile permissions and OAuth without forcing every site to implement a new login dance.
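A one-time, domain-scoped capability of the kind described above can be modeled as a token bound to an origin, a purpose, an expiry, and a use count. This is a sketch under assumptions, not Chrome's mechanism: `CapabilityStore`, `issue`, `redeem`, and `revoke_origin` are hypothetical names, and origins are normalized to scheme, host, and port, the same tuple the same-origin policy compares.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Callable
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str) -> str:
    """Serialize scheme://host:port, the tuple the same-origin policy compares."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port or DEFAULT_PORTS.get(scheme)
    return f"{scheme}://{parts.hostname}:{port}"


@dataclass
class Capability:
    origin: str
    purpose: str
    expires_at: float
    uses_left: int


class CapabilityStore:
    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._clock = clock
        self._caps: dict[str, Capability] = {}

    def issue(self, url: str, purpose: str, ttl_s: float = 60.0, uses: int = 1) -> str:
        """Called after the user approves, e.g. a one-time address fill."""
        token = secrets.token_urlsafe(16)
        self._caps[token] = Capability(origin_of(url), purpose, self._clock() + ttl_s, uses)
        return token

    def redeem(self, token: str, url: str, purpose: str) -> bool:
        cap = self._caps.get(token)
        if cap is None:
            return False
        if self._clock() >= cap.expires_at:
            del self._caps[token]  # time-bound: expired tokens are dropped
            return False
        if cap.origin != origin_of(url) or cap.purpose != purpose:
            return False  # site- and purpose-bound: no carry-over to other domains
        cap.uses_left -= 1
        if cap.uses_left <= 0:
            del self._caps[token]  # one-time use
        return True

    def revoke_origin(self, url: str) -> None:
        """Drop every capability for a site, e.g. when the task ends."""
        target = origin_of(url)
        self._caps = {t: c for t, c in self._caps.items() if c.origin != target}


class FakeClock:
    """Deterministic clock for the example; real code would use time.monotonic."""

    def __init__(self) -> None:
        self.now = 0.0

    def __call__(self) -> float:
        return self.now


clock = FakeClock()
store = CapabilityStore(clock)
token = store.issue("https://example.com/checkout", "address-fill")
first = store.redeem(token, "https://EXAMPLE.com:443/shipping", "address-fill")  # True
second = store.redeem(token, "https://example.com/shipping", "address-fill")     # False: used up
token2 = store.issue("https://example.com/", "address-fill")
cross_site = store.redeem(token2, "https://example.net/", "address-fill")        # False
clock.now += 61
expired = store.redeem(token2, "https://example.com/", "address-fill")           # False
```

`revoke_origin` is the hook for the other expiry triggers mentioned earlier, such as the task finishing or the page layout changing.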

The new security model: scoped, explainable, and revocable

Agentic browsing pushes security to the foreground. A model can be tricked by carefully crafted content on a page, an attack known as prompt injection. In an agentic world there is a second layer of risk: the agent might act on those injected instructions.

The reasonable response is defense in depth. Expect to see these protections:

- Site‑scoped capabilities. A permission to fill a form on one domain does not carry to another. Capabilities are time‑bound and purpose‑bound, and they can expire on layout change.

- Action previews. Before a sensitive action, the browser shows the exact fields and values. You can edit or cancel.

- Model timeouts and small steps. The agent cannot loop forever and must return control when uncertain.

- Content provenance checks. If an iframe from another origin is present, sensitive actions pause or require reapproval.

- Data minimization by default. Your address or card number stays in the browser’s secure vault and is revealed only to approved fields, not to the model’s raw context window.

- Audit trails. Every action yields a signed receipt in a local log so you can replay what happened.
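An audit trail that can be replayed and checked for tampering can be built as a hash chain: each receipt includes the MAC of the previous one, so editing or reordering an earlier entry breaks verification. A minimal sketch with Python's standard library, under two stated assumptions: HMAC-SHA256 stands in for a true digital signature (anyone holding the key could forge entries, so a real design would more likely use an asymmetric key), and the `<vault:address>` value is a hypothetical placeholder showing data minimization, since the raw address never enters the log. `ReceiptLog` is an illustrative name, not a browser API.

```python
import copy
import hashlib
import hmac
import json


class ReceiptLog:
    """Append-only log; each receipt carries an HMAC and the previous receipt's MAC."""

    def __init__(self, key: bytes) -> None:
        self._key = key
        self.entries: list[dict] = []

    def _mac(self, body: dict) -> str:
        # Canonical JSON so the same body always produces the same bytes.
        payload = json.dumps(body, sort_keys=True, separators=(",", ":")).encode()
        return hmac.new(self._key, payload, hashlib.sha256).hexdigest()

    def append(self, action: dict) -> dict:
        prev = self.entries[-1]["mac"] if self.entries else ""
        body = {"seq": len(self.entries), "prev": prev, "action": copy.deepcopy(action)}
        entry = {**body, "mac": self._mac(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Replay the chain. Edited, reordered, or mid-chain deleted entries fail;
        truncating the tail is not detected without an external anchor."""
        prev = ""
        for i, entry in enumerate(self.entries):
            if entry["seq"] != i or entry["prev"] != prev:
                return False
            body = {"seq": entry["seq"], "prev": entry["prev"], "action": entry["action"]}
            if not hmac.compare_digest(self._mac(body), entry["mac"]):
                return False
            prev = entry["mac"]
        return True


log = ReceiptLog(key=b"demo-key")  # hypothetical; a browser would keep keys in secure storage
log.append({"op": "fill", "origin": "https://example.com",
            "field": "shipping street-address", "value": "<vault:address>"})
log.append({"op": "submit", "origin": "https://example.com", "form": "/checkout"})
intact = log.verify()                            # True
log.entries[0]["action"]["field"] = "cc-number"  # simulate tampering
tampered = log.verify()                          # False
```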

Browsers already have many of the pieces. Identity flows are maturing with Federated Credential Management (FedCM). Payments can use the Payment Request API. For automation, the WebDriver BiDi specification defines a bidirectional control protocol that is becoming a reference point for explainable automation.
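WebDriver BiDi messages are JSON: a command carries an `id`, a `method`, and `params`; the browser answers with a `success` or `error` message echoing that `id`, and pushes `event` messages for subscriptions. Matching replies to ids is what lets a controller keep an explainable step log. The sketch below only builds and parses frames, with no WebSocket transport; the `BiDiMessages` class is a hypothetical helper, and `CONTEXT_ID` and the replies are hand-written, abbreviated stand-ins for what a browser would send.

```python
import itertools
import json


class BiDiMessages:
    """Build WebDriver BiDi command frames and match replies by id (no transport)."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.pending: dict[int, str] = {}  # command id -> method, for the step log

    def command(self, method: str, params: dict) -> str:
        msg_id = next(self._ids)
        self.pending[msg_id] = method
        return json.dumps({"id": msg_id, "method": method, "params": params})

    def handle(self, raw: str) -> str:
        """Turn a reply or event into a human-readable trace line."""
        msg = json.loads(raw)
        if msg["type"] == "event":
            return f"event {msg['method']}"
        method = self.pending.pop(msg["id"])
        if msg["type"] == "success":
            return f"{method} ok"
        return f"{method} failed: {msg['error']}"


bidi = BiDiMessages()
sub = bidi.command("session.subscribe", {"events": ["browsingContext.load"]})
nav = bidi.command("browsingContext.navigate",
                   {"context": "CONTEXT_ID", "url": "https://example.com/", "wait": "complete"})
bad = bidi.command("browsingContext.navigate",
                   {"context": "CONTEXT_ID", "url": "https://example.invalid/"})

# Hand-written, abbreviated replies standing in for what a browser would send.
replies = [
    '{"type": "success", "id": 1, "result": {}}',
    '{"type": "event", "method": "browsingContext.load", "params": {"context": "CONTEXT_ID"}}',
    '{"type": "success", "id": 2, "result": {"url": "https://example.com/"}}',
    '{"type": "error", "id": 3, "error": "unknown error", "message": "navigation failed"}',
]
trace = [bidi.handle(r) for r in replies]
print(trace)
```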

User experience resets incentives for publishers and marketers

When a person reads a page, design and copy drive outcomes. When an agent reads a page, structure and signals take the lead. Search engine optimization already rewards clear markup. Agent optimization raises the bar. If you want an agent to pick your product, you must make it easy to extract the facts that agents use for decisions.

Publishers should plan for three shifts:

- Structured data becomes table stakes. Use schema.org markup for price, availability, warranty, and return windows. If you do not publish JSON-LD that agents can trust, you risk being invisible in agent comparisons.

- Fewer clicks but higher intent. Agents summarize and shortlist. Expect fewer pageviews per session and a higher conversion rate on the visits that reach you. Separate human visits from agent‑mediated visits in analytics to see the new funnel.

- Ads move from slots to suggestions. Traditional banners are less visible to agents. New formats will appear, like suggested actions. A store might sponsor “add to cart and apply coupon.” This demands disclosures in the browser interface and strict rules that keep suggestions from overriding the user’s goal. For the payments side of this future, see the trusted agent protocol for checkout.
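For the structured-data point, here is a minimal sketch that emits schema.org JSON-LD for a product offer with price, availability, warranty, and return window. The types and properties (`Offer`, `WarrantyPromise`, `durationOfWarranty`, `MerchantReturnPolicy`, `merchantReturnDays`) come from schema.org; the helper functions, the product, and its values are hypothetical, and search engines or agents may expect additional properties.

```python
import json


def product_jsonld(name: str, price: float, currency: str, in_stock: bool,
                   warranty_years: int, return_days: int) -> dict:
    """Build a schema.org Product with one Offer; values are illustrative."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock" if in_stock
                            else "https://schema.org/OutOfStock",
            "warranty": {
                "@type": "WarrantyPromise",
                # "ANN" is the UN/CEFACT unit code for year.
                "durationOfWarranty": {"@type": "QuantitativeValue",
                                       "value": warranty_years, "unitCode": "ANN"},
            },
            "hasMerchantReturnPolicy": {
                "@type": "MerchantReturnPolicy",
                "returnPolicyCategory": "https://schema.org/MerchantReturnFiniteReturnWindow",
                "merchantReturnDays": return_days,
            },
        },
    }


def script_tag(data: dict) -> str:
    """Embed JSON-LD in a page; escaping '</' keeps a stray '</script>' from closing the tag."""
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


data = product_jsonld("Cordless Drill X1", 179.0, "USD", True, warranty_years=3, return_days=30)
print(script_tag(data))
```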

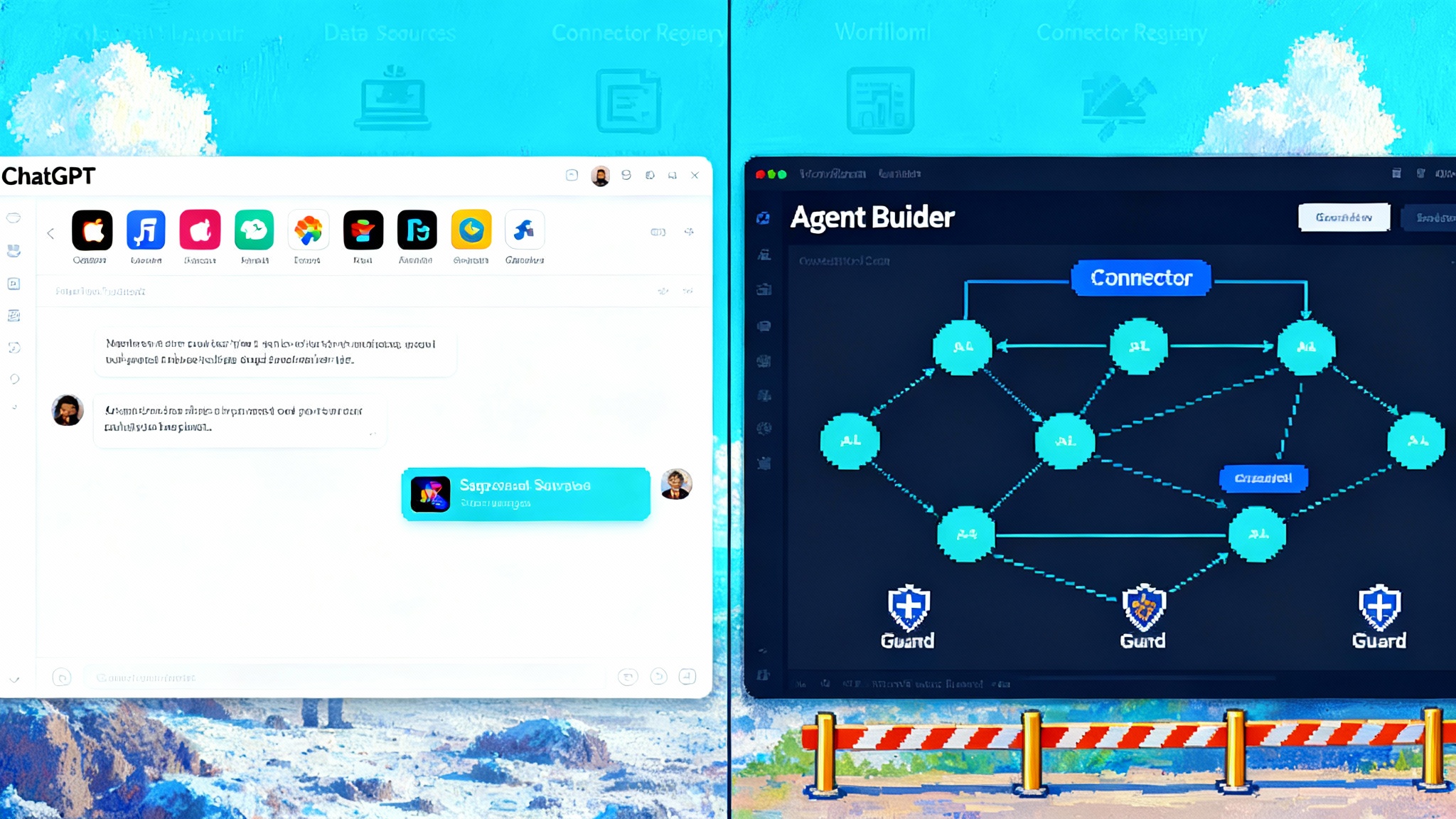

Rivals, open source, and the standards race

Google is not alone. Anthropic has previewed browser‑based assistance that reasons across tabs and can stage next actions, with an emphasis on careful behavior and enterprise controls. Open‑source projects are racing to make web agents more reliable. Research environments such as WebArena and BrowserGym expose agents to noisy pages so they can learn to generalize. Developer toolchains are adding agent controls. Test frameworks like Playwright and Selenium are adding model‑friendly hooks so a large language model can observe the page while tools execute precise actions.

A likely outcome is that browser vendors, model providers, and standards bodies will define an Agent Permissions model and an Action Receipt format. The first would define what an agent is allowed to do on a site, for how long, and with which data. The second would record what happened in a way that a person or a regulator can understand.

The takeaway for October 2025

The browser is becoming the default runtime for artificial intelligence agents because it sits at the intersection of pages, identity, and payments. Gemini in Chrome and the Project Mariner approach set the tone by pairing planning with supervision and by tying actions to site‑scoped permissions. If you build for the web, now is the time to make your pages agent‑friendly, to define capability scopes, and to adopt action receipts. If you buy things on the web, insist on previews and try agent‑staged tasks you can verify before committing. If you shape policy, push for standards that make actions explainable and reversible.

The change is practical, not mystical. We are moving from search results to completion funnels, from clicks to capabilities, and from persuasion to proof. The action surface is here. Agents are not replacing you. They are becoming the way your intent flows through the web with clarity and control.