Agents on Your Face: Meta’s Ray‑Ban Display and Neural Band

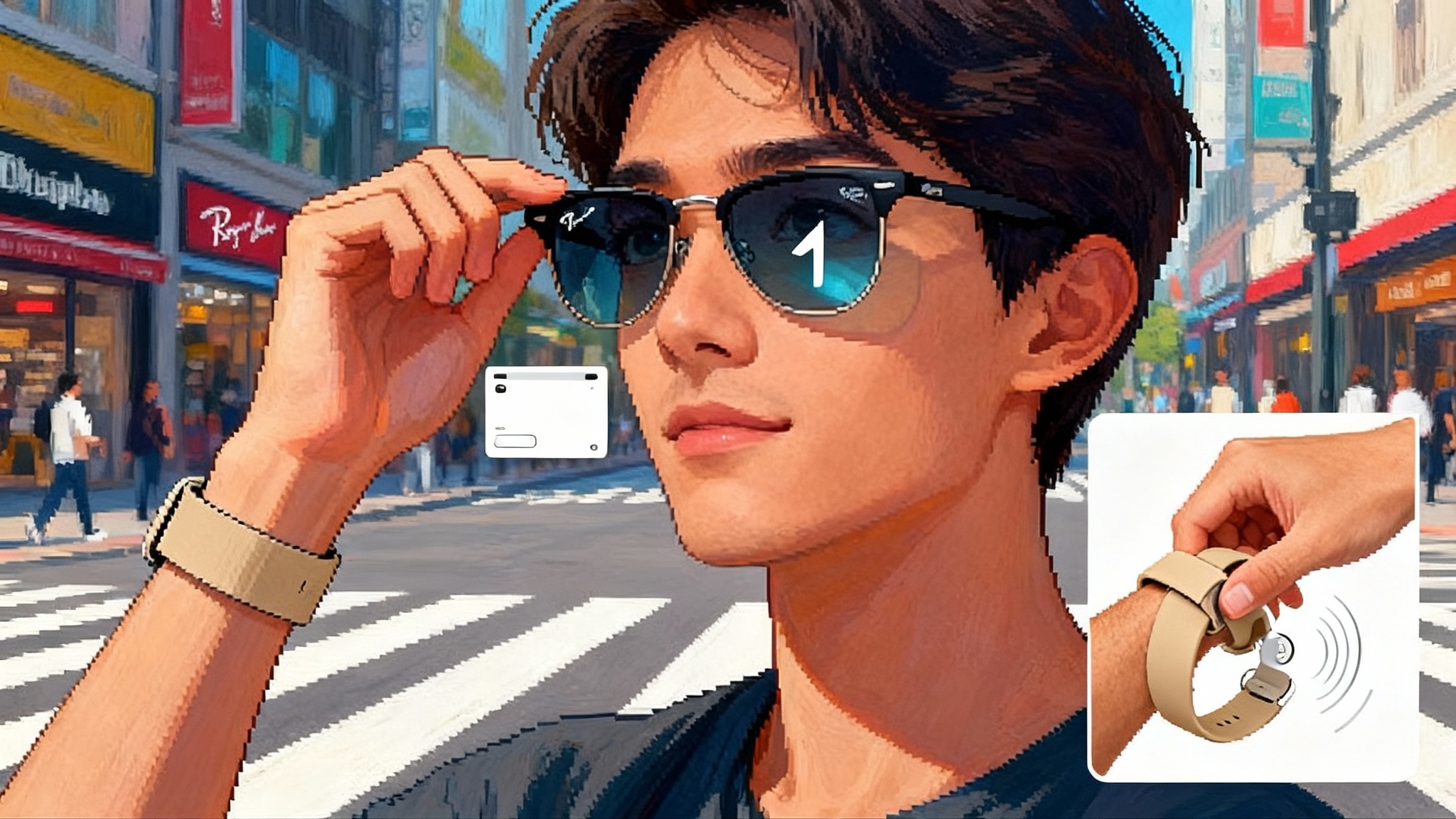

Meta just moved AI agents from your phone to your periphery. Ray-Ban Display smart glasses and the Neural Band turn micro-prompts and subtle gestures into real-world actions you can confirm in a glance.

The moment smart assistants left the chat window

On September 17, 2025, Meta used its Connect stage to ship a new kind of interface you wear instead of opening. The company introduced Ray-Ban Display smart glasses and a companion electromyography wristband called the Neural Band, a pair that puts an agent where your attention already is, at eye level and at your fingertips. Meta’s announcement details the headline realities clearly, including price, date, and what the pair can do together, such as glanceable translation, messages, navigation, and direct control via subtle finger movements. That is the breakthrough: the agent arrives without asking for your hands or your full visual field, and without asking the people around you to wait while you pull out a phone. Meta launch details.

A year ago, the phrase ambient AI sounded like a slogan. Today it is starting to look like a mode. The glasses show small cards in your line of sight only when you want them. The wristband translates tiny muscle signals into intent. Together they allow a new pattern of interaction that looks less like talking to a chatbot and more like steering a helpful colleague with glances and micro‑gestures.

The new user experience: micro‑prompts, not monologues

Chat interfaces made us verbose. Glasses make us concise. What you want while walking through a crowded station or making dinner is not a paragraph. You want to:

- Glance to confirm the next turn arrow

- Whisper “text yes” without breaking stride

- Flick your thumb to skip a song

- Tilt and pinch to zoom a viewfinder while your other hand holds a bag

Think of these as micro‑prompts. They are short, task‑shaped cues that map to immediate actions: capture, confirm, correct, or cancel. The Neural Band is important here because it reduces latency and social friction. Subtle finger movements become the binary primitives of a new interface: tap, hold, scroll, rotate. If voice is the sentence, electromyography is the punctuation.

Glasses are also relentless about context. They see what you see and hear what you hear, so they can cut the prompt down to the minimum. Instead of saying “Translate this menu from Portuguese to English and highlight vegetarian dishes,” you can glance at the menu and say “translate.” The agent already has the camera feed, your dietary preferences, and your language pack. The output appears as a lightweight overlay that does not flood your view. You get just enough information to act.

The practical magic is timing. A pleasing glasses flow feels like a drummer sitting exactly on the beat. Visuals arrive when you look, audio confirmations arrive between words from the person you are talking to, and haptics nudge you before you miss a turn. This demands millisecond budgets and model decisions that happen partly on device, partly in the cloud. Done right, it feels less like using a computer and more like your intuition getting a small boost. For the deeper model tradeoffs, see how test-time compute reshaping agents changes what runs locally versus remotely.

What is actually inside this leap

The hardware recipe is pragmatic rather than sci‑fi. Early teardowns and reports point to a waveguide in the lens that accepts light from a micro‑projector tucked into the temple. That display is tuned for glanceable cards rather than full field imagery. The Neural Band listens to the tiny electrical signals that precede your finger movements and uses trained models to map those signals to commands, which travel to the glasses over a low‑latency link.

Battery and thermals remain the governors of ambition. Meta quotes up to six hours of mixed use on a charge and a case that extends total time significantly, so the product is designed for day parts, not all‑day continuous display. The interface acknowledges this constraint. Cards are brief, animations are sparse, and much of the work happens only when you ask. This is a wearable designed to respect your cognitive and energy budgets at once.

Privacy and safety, now with social context

Glasses create new questions because the sensor is at eye height and always with you. Users and bystanders need clear signals and controls. The expectations are becoming more concrete.

- Visible cues. Capture indicators must be bright, consistent across modes, and visible in daylight. A small light that changes state for photo versus video reduces ambiguity for people around you.

- One‑gesture mute. A universal hush gesture, for example a long thumb press on the Neural Band, should silence microphones and pause visual capture. The glasses should confirm with an audible click and a brief on‑lens icon.

- Bystander privacy zones. In places like classrooms, hospitals, and theaters, the device should default to a no‑capture mode with only essential inputs allowed. Users can opt back in, but the default respects the venue.

- On‑device redaction. When the agent recognizes faces or sensitive documents in view, it should blur or redact by default unless you explicitly request otherwise.

- Memory boundaries. Keep the last few minutes of context on device, not in the cloud, and expose a clear “forget” gesture. Make retention choices legible, not hidden in settings screens.

These are not just norms. They are features with real product surface area, measurable latencies, and trade‑offs. Builders who ship the simplest and clearest controls will set the bar the rest of the market must meet.

The platform race is already on

Meta’s move forces a response because it changes the venue of the agent from a phone screen to your periphery. Three ecosystems have the pieces to contest this shift in the next year.

- Amazon. Alexa+ is graduating from a voice assistant into a subscription agent that can coordinate services, remember preferences, and act across devices in your home and beyond. Amazon has distribution through Echo and Fire devices, and it is incentivized to bring Alexa+ to glasses through partners because it drives commerce and services. The company is already positioning Alexa+ as an action engine, which maps well to glanceable confirmations on glasses. The obvious gap is a first party pair of display glasses. That gap can be bridged through partner frames, but the user experience will be best when voice, touch, and glance UIs are designed as a single flow.

- Google. Google seeded the field a year early with its Android XR platform, a headworn operating system that ties directly into Gemini agents and the company’s apps. At I/O, Google showed real‑time multimodal agents that understand the world around you and respond at conversation latency. The company has also begun to showcase glasses partnerships. That signals a clear intent to make glasses a first class device in the Android family. Android XR blueprint.

- Apple. Apple continues to refine Vision Pro as a developer and enthusiast platform while rumors and reporting point to simpler smart glasses in the 2026 to 2027 window. Expect an iPhone‑tethered design with Siri and a growing set of on‑device models to keep latency down. Apple will likely delay a display in the lens until it can match its standards for brightness, privacy, and fashion, but the day it pairs AirPods‑class audio, better Siri, and a camera in lightweight frames, the everyday agent will feel native to iOS.

The meta point is that every platform is converging on the same loop. See the scene, decide what matters, take action with minimal friction, and confirm in a glance. For interop stakes across ecosystems, study the agent interop layer MCP.

What a glasses‑first agent stack looks like

To build for the next 12 months, treat the glasses as a peripheral brain with three layers.

- Perception on device

- Sensors: forward camera, microphones, inertial units, and the Neural Band. These turn the world and your intent into tokens the agent can reason over.

- Fast models: a small speech model for wake words and dictation, a visual model for object and text spotting, and a gesture model for electromyography signals. The goal is twofold, reduce round‑trips to the cloud and keep micro‑prompts instant.

- Coordination in the cloud

- A larger reasoning model orchestrates tools. It knows your calendar, your route, your music, and your preferences. It calls maps, messaging, and partner services. It ships back the minimum viable card to the glasses. When tasks span web apps, the browser becomes the API.

- Memory is partitioned. Ephemeral short‑term context lives on device, personal long‑term memory is encrypted in the cloud with human‑readable controls. Offer in‑the‑moment consent prompts for new data uses.

- Presentation at the edge

- Cards not canvases. Each surface is small and task specific: a turn arrow, a two‑line message, a timer, a capture frame. If a task grows beyond a card, punt it to the phone.

- Multi‑modal confirm. Every action gets at least two forms of feedback, for example a tiny chime plus a one‑word caption. You should never need to wonder if the agent heard you.

- Recovery paths. Always give users a single gesture to cancel, and a second to undo. Agents make choices, but you are the editor.

Ten concrete bets for builders right now

- Design micro‑prompts. Invent two‑ to three‑word utterances that complete the top ten jobs to be done in motion, for example “save recipe,” “send arrival,” “mark expense,” “what is this.”

- Target a 200 millisecond round‑trip. Budget for on‑device speech start, a fast intent parse, and a tiny payload back to the lens. If you must go to the cloud, stream the first token of confirmation immediately.

- Build offline packs. Navigation, translation, and checklists should degrade gracefully without connectivity. Use downloadable language and neighborhood packs and swap them automatically.

- Treat the wrist as a dial. Map scroll, volume, and zoom to a pinch‑and‑turn gesture. It is a natural metaphor and it survives noisy environments where voice fails.

- Use scene anchors. Allow users to pin a timer over a pot on the stove or a task over a storefront. The pins should snap to objects and decay on their own after relevance fades.

- Limit cards to eight words and one icon. If you need more, you are in phone territory. Respect attention like a scarce battery.

- Make a social quiet mode. Detect conversation, then bias output to haptics and short visual confirmations. If the ambient sound rises, bias back to audio prompts.

- Build a witness log. Provide a chronological trace of what the agent saw and did, what it sent to the cloud, and why, with one tap to delete segments. This earns trust.

- Ship venue profiles. Publish a public spec so stores, schools, and venues can broadcast preferred capture rules. The glasses pick them up and apply them automatically, with a clear opt‑out for the wearer.

- Monetize outcomes, not taps. Sell bookings, deliveries, and premium routes, not menu clicks. A card should be the last step in a workflow, not the beginning.

Where the advantages lie

- Meta’s lead is end‑to‑end control. It has stylish frames, a working display stack, a practical wrist input, and a consumer agent already in the wild. If it uses that control to tighten latency and build the best default experiences, it will set the standard everyone else must reach.

- Amazon’s advantage is service execution at scale. If Alexa+ can complete multi‑step tasks across commerce, home, and entertainment, then mapping those actions to glasses becomes a rendering problem more than an AI problem.

- Google’s edge is multimodal perception and maps. If Android XR glasses inherit Project Astra’s conversational speed and Maps’ world understanding, they will feel native to everyday errand running and travel planning.

- Apple’s strength is coherence. The first Apple glasses that pair with an iPhone and AirPods will likely feel seamless, which matters more than raw capability in early mainstream adoption.

What changes for people

When agents live at your periphery, you stop treating them like apps. You treat them like sense amplifiers. The best example is capture. Instead of composing a message, you look at a whiteboard and say “save that.” The agent resolves it into a clear photo, transcribes it, files it to your shared project, and gives you a tiny checkmark in your lens. Or consider navigation. You say “walk me to the quiet entrance.” The agent uses time of day, local knowledge, and your past choices to pick a route and whispers a correction before you miss the turn.

These are small moments, but they add up. The agent becomes present but not performative. You get the task done and keep eye contact with the person you are with.

The next twelve months

Expect rapid iteration. Meta will extend the device graph around the glasses and band, particularly with new voice and hearing features that make conversation in noise easier and more private. Amazon will push Alexa+ from rooms to routes. Google will ship Android XR headsets, then press partners to deliver everyday glasses that carry Gemini in your periphery and keep the phone for heavy lifting. The most useful innovations will be the boring ones that shrink prompts, shave latency, and make privacy decisions obvious.

The big UI idea is smallness. Cards that appear only when needed, gestures that feel like you thought them rather than performed them, and agents that thread between the beats of your day. That is what ambient AI looks like when it is practical.

A smart finish

When the personal computer moved to your pocket, apps won. When the agent moves to your face and wrist, attention wins. The companies that respect attention with the smallest, fastest, clearest interactions will earn the right to handle bigger tasks. For builders, the opportunity is to craft useful micro‑prompts and low‑latency flows that feel obvious the instant you try them. For everyone else, the shift will be subtle, then sudden. One day soon you will look up, whisper one or two words, feel a quiet click on your wrist, and realize the assistant you used to chat with now lives where you live, in your line of sight.