AgentKit and ChatGPT Apps: OpenAI’s Agent Platform Moment

OpenAI collapsed the agent stack into one toolkit and opened distribution inside ChatGPT. Here is what AgentKit and the Apps SDK change, who benefits, and a 90-day plan to ship a production agent without lock-in.

The breakthrough: agents get a home and a highway

On October 6, 2025, OpenAI did two things at once. It collapsed fragmented agent tooling into a single kit and gave those agents a first party distribution channel inside ChatGPT. That combination changes how agents get built and how they find users. Think of it as building a modern airport for agents and then switching on the moving walkways that bring travelers straight to the gates. See the official AgentKit announcement and the Apps in ChatGPT overview.

What changed, exactly

For two years, serious agent projects looked like a garage workshop: prompt files in one place, tool adapters in another, custom evaluation scripts, and a bespoke chat frontend that never felt quite right. Versioning was fragile. Admin controls were scattered. The result was slow experimentation and slower launches.

With AgentKit and ChatGPT apps, that mess consolidates into a kit with batteries included and a shelf to display the finished thing. The kit reduces time to first useful prototype. The shelf gives your agent a stage inside a product with hundreds of millions of weekly users. The new center of gravity is not a sandbox demo or a separate store. It is ChatGPT, where users already spend time and where your agent can be invoked by name or surfaced contextually when it is relevant.

The new stack in plain language

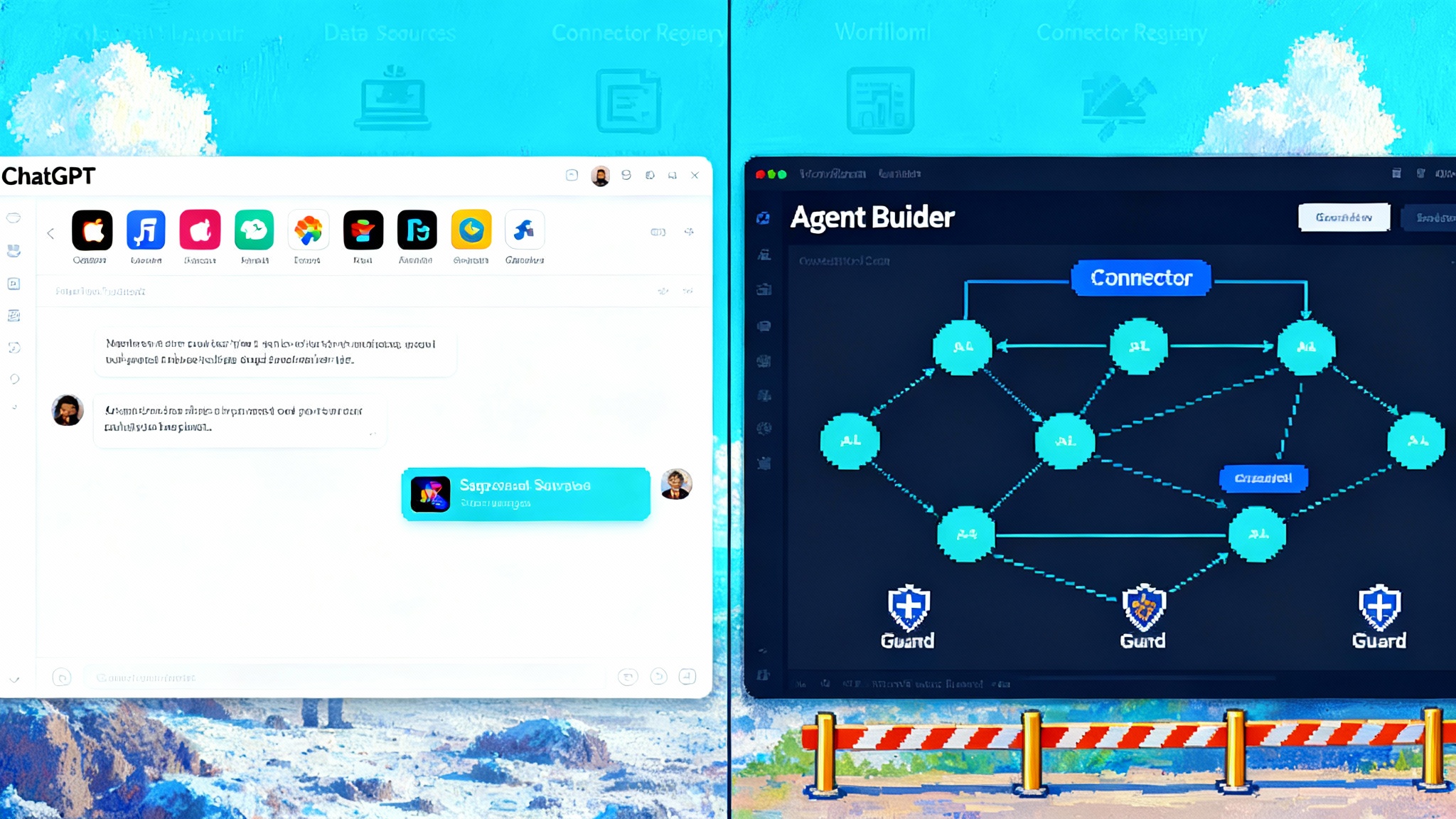

Agent Builder: a canvas for logic you can ship

Agent Builder is a visual canvas to design and version multi-agent workflows. Imagine a circuit board for decisions: you drop in steps for retrieval, tools, approvals, and handoffs to humans. You connect them and set criteria for success. Because it integrates with the Responses API, you can go from a working diagram to a running agent without rewriting the logic. It also supports safety settings like guardrails, so the place where you design behavior is also the place where you define its boundaries.

How this helps: teams finally get a shared source of truth. Product defines the flow, engineering wires tools, compliance toggles the protections, and everyone looks at the same version.
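Agent Builder is a visual tool, and this article does not describe its export format. As a mental model only, the following Python sketch represents a workflow as a versioned graph of typed steps and checks two mistakes a shared diagram should catch: connections to steps that do not exist and steps that can never be reached. The `Step` and `Workflow` classes and the step kinds are hypothetical, not Agent Builder's schema.

```python
from dataclasses import dataclass, field

# Hypothetical model of a workflow graph; not Agent Builder's actual format.
STEP_KINDS = {"retrieval", "tool", "approval", "handoff"}


@dataclass
class Step:
    name: str
    kind: str  # one of STEP_KINDS
    next_steps: list[str] = field(default_factory=list)


@dataclass
class Workflow:
    name: str
    version: str  # bump on every change so everyone reviews the same version
    start: str
    steps: dict[str, Step] = field(default_factory=dict)

    def add(self, step: Step) -> None:
        if step.kind not in STEP_KINDS:
            raise ValueError(f"unknown step kind: {step.kind}")
        self.steps[step.name] = step

    def validate(self) -> list[str]:
        """Report edges to missing steps and steps unreachable from the start step."""
        problems = []
        for step in self.steps.values():
            for nxt in step.next_steps:
                if nxt not in self.steps:
                    problems.append(f"{step.name} -> unknown step {nxt}")
        # Depth-first walk from the start step to find everything reachable.
        seen, stack = set(), [self.start]
        while stack:
            current = stack.pop()
            if current in seen or current not in self.steps:
                continue
            seen.add(current)
            stack.extend(self.steps[current].next_steps)
        problems.extend(f"{name} is unreachable" for name in self.steps if name not in seen)
        return problems
```

Keeping the version on the workflow object mirrors the point above: product, engineering, and compliance all review one tagged version rather than their own copies.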

ChatKit: a ready-to-brand chat experience

ChatKit gives you embeddable components to put an agentic chat in your website or app. Instead of spending three sprints building a chat panel with attachments, memory, and message traces, you drop in a tested interface that your team can theme and extend. In practice that shifts engineering time from plumbing to polish. It also makes multi surface launches easier because the same chat experience can show up on the web, mobile, and your help center with predictable behavior.

Evals for Agents: measure, then move

Agents fail in specific ways. They call the wrong tool, misread a field, or answer confidently on stale data. The new Evals capabilities introduce datasets for component level checks, trace grading to see where an interaction went off the rails, and optimization loops to tune prompts and tools against outcomes you care about. When the model updates, rerun the suite so your confidence does not rest on yesterday’s behavior.
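Trace grading can be illustrated without any platform API. The sketch below is a hypothetical harness, not the Evals product's interface: each recorded step is checked by its own grader, and the first failing step of each trace is tallied across a dataset so the most common failure modes rise to the top. The `TraceEvent` shape and the grader functions are assumptions.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class TraceEvent:
    step: str     # which component produced this event, e.g. "tool_call"
    output: dict  # what that component emitted


# A grader inspects one event and returns True if that step behaved correctly.
Grader = Callable[[TraceEvent], bool]


def first_failure(trace: list[TraceEvent], graders: dict[str, Grader]) -> Optional[str]:
    """Return the first step whose grader fails, or None if every graded step passes."""
    for event in trace:
        grader = graders.get(event.step)
        if grader is not None and not grader(event):
            return event.step
    return None


def failure_modes(traces: list[list[TraceEvent]], graders: dict[str, Grader]) -> dict[str, int]:
    """Count where interactions first went off the rails, across a whole dataset."""
    counts = Counter(first_failure(t, graders) for t in traces)
    counts.pop(None, None)  # drop traces that passed every grader
    return dict(counts)
```

For example, a grader for a `tool_call` step might check that the tool name matches the one the task requires, and a grader for the final answer might reject data older than a day, covering two of the failure modes named above.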

Connector Registry: one door to your data and tools

Enterprises do not want every team building fresh connectors to the same systems. The Connector Registry moves that sprawl into an admin console. Security teams can approve which systems are connected, set scopes, and observe usage across ChatGPT and the API. That means the sales agent, the support agent, and the finance agent can all reach shared systems through the same governed connection, and revocation happens in one place.
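The governance model described here (approve a connection once, share it across agents, revoke it in one place) can be sketched in a few lines. This in-memory `ConnectorRegistry` is a hypothetical illustration of the idea, not the Connector Registry's API; the connector names and scope strings are invented for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Connector:
    system: str
    owner: str
    scopes: frozenset[str]
    revoked: bool = False


@dataclass
class ConnectorRegistry:
    """Hypothetical in-memory registry: approve once, share everywhere, revoke in one place."""
    connectors: dict[str, Connector] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def approve(self, name: str, system: str, owner: str, scopes: set[str]) -> None:
        self.connectors[name] = Connector(system, owner, frozenset(scopes))

    def revoke(self, name: str) -> None:
        self.connectors[name].revoked = True

    def authorize(self, agent: str, name: str, scope: str) -> bool:
        conn = self.connectors.get(name)
        allowed = conn is not None and not conn.revoked and scope in conn.scopes
        # Every decision is recorded so security can observe usage across agents.
        self.audit_log.append((agent, name, scope, allowed))
        return allowed
```

Because every agent asks the same registry, a single `revoke` call cuts off the sales, support, and finance agents at once.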

Apps in ChatGPT and the Apps SDK: distribution meets context

Apps now live inside ChatGPT. Users can start a message with the app’s name or accept a suggestion when the conversation hints that the app can help. The Apps SDK lets you define both logic and interface, and it aligns with the Model Context Protocol vision of portable tools. For deeper background on that interop push, read our take on the USB-C standard for agents.

Why this favors default vertical agents

In consumer tech, defaults decide distribution. The search engine preselected in a browser shapes where traffic flows. With ChatGPT apps, a similar dynamic plays out in verticals. If you are shopping for a home, the right real estate app can be suggested in the flow. If you are designing a slide deck, a creative app can appear as you outline a talk. Once a user connects an app and has a good first run, the next time they need the same job they will likely accept the suggestion again.

Three reasons the structure of the platform tilts toward vertical defaults:

- Contextual invocation beats catalog browsing. Being surfaced in the conversation at the right time is stronger than being listed in a store. If your travel planning agent appears when a user writes “I land in Boston at 7 pm, find me dinner near the hotel,” you are already ahead.

- First-party connectors shift the burden. With a governed registry, apps that integrate cleanly with common systems are faster to trust and deploy. That raises the bar for generic agents and favors domain experts who can promise accuracy, compliance, and clean handoffs. Payments are part of this story too, as agents that can spend money become common.

- Measurable improvement compounds. Evals let you optimize for a vertical’s true outcomes: conversion for commerce, time to resolution for support, on-time execution for operations. If your agent’s metrics improve month over month, your odds of being suggested and your word of mouth should improve with them.

Strategic takeaway: pick a vertical task with repeat demand, own the last mile of quality, and make yourself the default the platform chooses when that task shows up in conversation.

A pragmatic 90-day plan to ship without lock-in

Below is a plan that an enterprise or a startup can follow. It assumes one cross-functional pod of five to eight people, built around a lead engineer, a product manager, a designer, a subject-matter expert, and a security or compliance partner. Adjust headcount to your scale. The goal is a production agent that ships within 13 weeks, with measurable outcomes and a clear path to portability.

Weeks 1 to 2: choose the narrowest valuable job

- Write a one page job spec that describes the agent’s single job, inputs, outputs, and what counts as success. Example: “Handle warranty claims for product X up to 500 dollars, target time to resolution under 6 minutes, customer satisfaction over 4.6 out of 5.”

- Inventory data and tools. Identify the smallest set needed for the first scope and the permissions required.

- Define a cold start policy: what the agent may do on its first run versus after it has earned trust. For example, allow draft responses in week 1 and allow autonomous refunds only after two weeks of stable performance.
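A job spec and cold start policy are easier to enforce when they live in code as well as in a document. This minimal sketch encodes the warranty example's numbers and the draft-first, refunds-later policy. It assumes "stable performance" can be reduced to a count of stable days, which is a simplification, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JobSpec:
    job: str
    max_claim_usd: float
    target_minutes_to_resolution: float
    target_csat: float  # out of 5


# Cold start policy from the example: drafts from day one,
# autonomous refunds only after two weeks (14 days) of stable performance.
AUTONOMY_THRESHOLD_DAYS = 14


def allowed_actions(stable_days: int) -> set[str]:
    actions = {"draft_response"}
    if stable_days >= AUTONOMY_THRESHOLD_DAYS:
        actions.add("autonomous_refund")
    return actions


def may_refund_autonomously(spec: JobSpec, amount_usd: float, stable_days: int) -> bool:
    """Both conditions must hold: trust has been earned and the claim is within scope."""
    return "autonomous_refund" in allowed_actions(stable_days) and amount_usd <= spec.max_claim_usd


WARRANTY_SPEC = JobSpec(
    job="Handle warranty claims for product X",
    max_claim_usd=500,
    target_minutes_to_resolution=6,
    target_csat=4.6,
)
```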

Weeks 3 to 4: wire data responsibly

- In the Connector Registry, configure connections to the target systems with least-privilege scopes. Use service accounts rather than personal tokens. Tag each connector with an owner and an audit policy.

- Create synthetic datasets with realistic edge cases. For a support agent, stage tickets with missing attachments, contradictory notes, or sensitive information to exercise safety rules.
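A synthetic dataset generator for the support example might look like the following sketch. It is a hypothetical illustration: the ticket fields and edge-case labels are assumptions, the card number is a widely used test value, and cycling through edge cases with a fixed seed keeps the set balanced and reproducible across regression runs.

```python
import random
from dataclasses import dataclass

EDGE_CASES = ("clean", "missing_attachment", "contradictory_notes", "sensitive_info")


@dataclass
class SyntheticTicket:
    ticket_id: int
    edge_case: str
    body: str
    attachments: list[str]
    notes: list[str]


def make_ticket(ticket_id: int, edge_case: str, rng: random.Random) -> SyntheticTicket:
    order = rng.randint(10000, 99999)  # fake order number
    body = f"Order {order}: the device stopped charging after a month."
    attachments = ["receipt.pdf"]
    notes = ["Purchase date is within the warranty period."]
    if edge_case == "missing_attachment":
        body += " I attached my receipt."  # claims a receipt that is not there
        attachments = []
    elif edge_case == "contradictory_notes":
        notes.append("Purchase date is outside the warranty period.")
    elif edge_case == "sensitive_info":
        body += " My card number is 4111 1111 1111 1111."  # well-known test number
    return SyntheticTicket(ticket_id, edge_case, body, attachments, notes)


def make_dataset(n: int, seed: int = 0) -> list[SyntheticTicket]:
    """Cycle through edge cases so each is represented; a fixed seed keeps runs reproducible."""
    rng = random.Random(seed)
    return [make_ticket(i, EDGE_CASES[i % len(EDGE_CASES)], rng) for i in range(n)]
```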

Weeks 5 to 6: design the flow in Agent Builder

- Build the happy path first. Model the steps from greeting to resolution with explicit handoffs. Add a human approval step wherever money changes hands.

- Attach guardrails. Mask personal data before storage, block tool calls that include unsafe parameters, and log trace events with a retention policy that matches your compliance posture.

- Decide where memory helps and where it hurts. For regulated flows, prefer explicit context windows over long lived memory.
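The guardrails above can be prototyped as plain functions before you configure them in Agent Builder. This sketch masks emails and card-like numbers before storage and classifies proposed tool calls as allowed, needing human approval, or blocked. The regular expressions, the `issue_refund` tool name, and the 500-dollar ceiling (borrowed from the job spec example) are assumptions, and real PII detection needs much broader coverage than two patterns.

```python
import re

# Hypothetical guardrail rules for illustration; real deployments need far broader PII coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators

MONEY_TOOLS = {"issue_refund"}
MAX_REFUND_USD = 500


def mask_pii(text: str) -> str:
    """Mask emails and card-like numbers before anything is stored or logged."""
    return CARD.sub("[CARD]", EMAIL.sub("[EMAIL]", text))


def check_tool_call(tool: str, args: dict) -> str:
    """Return 'allow', 'needs_approval', or 'block' for a proposed tool call."""
    # Never forward raw card numbers to any tool.
    if any(isinstance(v, str) and CARD.search(v) for v in args.values()):
        return "block"
    if tool in MONEY_TOOLS:
        amount = args.get("amount_usd")
        if not isinstance(amount, (int, float)) or not 0 < amount <= MAX_REFUND_USD:
            return "block"
        return "needs_approval"  # money changes hands, so a human signs off
    return "allow"
```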

Weeks 7 to 8: measure with Evals, then iterate

- Define three observable metrics and set targets. Example: task success rate, median time to resolution, and the rate of escalations to humans.

- Use trace grading to find the top failure modes. Address them with prompt edits, tool schema tweaks, or small workflow changes. Do not switch models unless these fixes fall short.

- Run daily regression suites. When an underlying model version changes, rerun the tests so surprises do not appear in production.
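The three example metrics are simple to compute from interaction records, which makes them easy to rerun after every model update. The `Interaction` record below is a hypothetical shape for what your traces export; the median is taken across all interactions, a choice you may want to change to resolved ones only.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Interaction:
    succeeded: bool
    minutes_to_resolution: float
    escalated: bool  # handed off to a human


def core_metrics(interactions: list[Interaction]) -> dict[str, float]:
    """The three example metrics; median time is taken across all interactions."""
    if not interactions:
        raise ValueError("no interactions to score")
    n = len(interactions)
    return {
        "task_success_rate": sum(i.succeeded for i in interactions) / n,
        "median_minutes_to_resolution": median(i.minutes_to_resolution for i in interactions),
        "escalation_rate": sum(i.escalated for i in interactions) / n,
    }
```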

Weeks 9 to 10: put the agent where people are

- Use ChatKit to embed the agent in your product’s help center or account portal. Keep the interface minimal. Show what the agent can do and what it cannot do.

- Start dogfooding. Roll out to internal users or a small customer cohort. Capture verbatim feedback and label it by failure mode.

Weeks 11 to 12: prepare the ChatGPT app

- Use the Apps SDK preview to define a lightweight app that exposes your agent’s core capability inside ChatGPT. Focus on one durable verb. For example, “track a shipment,” “prepare a quote,” or “schedule a technician.”

- Add sign in to connect existing accounts. Make consent clear. If you handle sensitive actions, require confirmation inside the app before execution.

- Draft your submission materials. Even while submissions are not yet open, write the description, list the permissions, and assemble screenshots. This shortens your path when the directory opens.

Week 13: go live and watch the right dials

- Launch the embedded experience first, then scale the app’s availability. For enterprises, coordinate with your security team for a staged rollout by department.

- Watch the three core metrics. If any fall below target, pause new traffic, fix, and resume.

- Move one scope boundary. Add a new tool call or widen a decision rule. Resist the temptation to add many small features. Depth beats breadth.
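The pause rule can be made mechanical so nobody has to eyeball a dashboard. In this sketch the 6-minute target echoes the job spec example, while the success and escalation targets are placeholders; each metric carries its own comparison because higher is better for success rate and lower is better for the other two.

```python
import operator

# Hypothetical targets: 6 minutes echoes the job spec example; the other two are placeholders.
TARGETS = {
    "task_success_rate": (operator.ge, 0.90),
    "median_minutes_to_resolution": (operator.le, 6.0),
    "escalation_rate": (operator.le, 0.15),
}


def breaches(metrics: dict[str, float], targets=TARGETS) -> list[str]:
    """Names of metrics that miss their target, sorted for stable output."""
    return sorted(
        name for name, (meets, target) in targets.items() if not meets(metrics[name], target)
    )


def traffic_decision(metrics: dict[str, float], targets=TARGETS) -> str:
    """Pause new traffic while any metric misses its target; otherwise keep serving."""
    return "pause" if breaches(metrics, targets) else "serve"
```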

Avoid platform lock-in without losing momentum

The fastest path is often the stickiest. Here is how to move fast and still keep your options open.

- Build to an open tool interface. Keep your business logic behind your own API so other clients can call it. If you need to run the same agent in another channel later, you can.

- Treat prompts and workflows as code. Store them in version control, write tests, and tag releases. If you move models or platforms, your behavior moves with you.

- Use a connector abstraction. Even if the registry provides a neat one click integration, implement a thin internal wrapper so you can swap providers without touching the agent logic.

- Keep observability vendor neutral. Export traces and metrics in open formats. If you rely on a single platform’s dashboard, you will struggle to compare behavior across environments.

- Maintain model plurality. For critical paths, test at least one alternative model and keep a fallback switch. This is not about being disloyal. It is about resilience.
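Here is a minimal sketch of two of these practices, assuming nothing about any vendor SDK: agent logic depends on an internal `OrderSource` interface rather than on a specific connector, and a fallback helper tries models in priority order. `InMemoryOrderSource` and `StubModel` are hypothetical stand-ins for real adapters and model clients.

```python
from typing import Protocol


class OrderSource(Protocol):
    """Internal interface the agent codes against, whatever provider sits behind it."""
    def get_order(self, order_id: str) -> dict: ...


class InMemoryOrderSource:
    """Stand-in provider; a registry-backed or vendor-backed adapter would match the same shape."""
    def __init__(self, orders: dict[str, dict]):
        self._orders = orders

    def get_order(self, order_id: str) -> dict:
        return self._orders[order_id]


def order_status(source: OrderSource, order_id: str) -> str:
    # Agent logic depends only on OrderSource, so swapping providers never touches it.
    return source.get_order(order_id)["status"]


class StubModel:
    """Stand-in for a real model client; fail=True simulates an outage."""
    def __init__(self, name: str, fail: bool = False):
        self.name = name
        self.fail = fail

    def complete(self, prompt: str) -> str:
        if self.fail:
            raise TimeoutError("timed out")
        return f"[{self.name}] {prompt}"


def complete_with_fallback(prompt: str, models: list) -> tuple[str, str]:
    """Try models in priority order; return (model name, output) from the first that succeeds."""
    errors = []
    for model in models:
        try:
            return model.name, model.complete(prompt)
        except Exception as exc:  # any failure moves on to the next model
            errors.append(f"{model.name}: {exc}")
    raise RuntimeError("all models failed: " + "; ".join(errors))
```

The fallback list doubles as the "fallback switch": reordering it changes the primary model without touching agent logic.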

Concrete examples of where to start

- Retail operations. A returns agent that issues refunds under a policy and emails a prepaid label. It connects to order history, inventory, and payment systems through the registry. It is embedded in your account portal with ChatKit. It ships as a ChatGPT app that lets a user type “start a return” and complete the flow without opening your site.

- Field services. A scheduling agent that coordinates technicians. It reads job types from your CRM, enforces drive time rules, and confirms appointments by text. Evals track the decline in back-and-forth messages per job. The ChatGPT app lets an end customer say “reschedule my appointment to Saturday morning” and get a confirmed slot.

- Education. A course assistant that answers questions tied to a specific curriculum and flags unsafe or off-topic requests. The agent runs inside your learning portal, and the app makes it easy for learners to ask for help in ChatGPT while watching a video without losing their place.

What to watch next

- Submissions and the app directory. The preview period means you can build and test today, with submissions opening later this year and a directory to help discovery. Plan your listing like a product launch. Name, icon, first run, and safety disclosures matter.

- Enterprise rollout. Support for Business, Enterprise, and Edu is planned. That will make procurement simpler and enable fleet-wide control of which apps can run. This is where the Connector Registry and a global admin console will matter most. Also watch the policy front, including California’s chatbot law and its implications for UX.

- More reusable components. Expect faster developer tooling and more building blocks in ChatKit and Agent Builder. That should push the cost to experiment even lower.

The bottom line

OpenAI did not just ship new features. It redrew the map. AgentKit removes the excuses to delay because the core pieces are now in one place, and apps in ChatGPT solve the hardest part of any launch by putting your agent in front of users at the right moment. If you want to win this platform moment, specialize. Pick a job that matters and make your agent the default for that job. Ship in weeks, measure relentlessly, and protect your future with open interfaces and portable logic. The teams that do this will earn the right to add more scope. The teams that wait will be bidding for attention after the defaults have formed.