Apple’s on‑device agents make private automation mainstream

On September 15, 2025, Apple opened Apple Intelligence's on-device model to developers and added intelligent actions to Shortcuts. That update turns iPhone, iPad, and Mac into private agents that work offline, act fast, and raise the bar on privacy.

The day local-first agents went mainstream

On September 15, 2025, Apple did something deceptively simple that will ripple for years. It let every developer ship features that talk to its on-device Apple Intelligence model, and it upgraded Shortcuts with intelligent actions that chain these skills together. In plain terms, your phone, tablet, and computer can now run small but capable software helpers that think, decide, and act without your data leaving the device. This is not a lab demo. It is a system update. Apple describes the rollout as new Apple Intelligence features available today, including developer access to the on-device model and smarter Shortcuts. That is the moment this went from novelty to norm. See Apple’s own words in the Apple newsroom post announcing the release.

Think of an agent as a great personal assistant. Not a chatbot that gives you a paragraph, but a helper that sees a document, understands what you are trying to do, and carries it forward. The difference now is where that helper lives. It runs locally, on silicon you already own, rather than on a distant server farm. That one shift changes cost, speed, reliability, and privacy all at once.

Why local matters: latency, privacy, cost, and reach

- Speed: When the model runs on your device, actions complete without round-trip delays. Translate a screenshot and file it, or rewrite a paragraph and paste it, in less time than it takes to unlock your phone.

- Privacy: Your context stays on the device by default. Sensitive text, health notes, locations, and photos do not leave your hardware unless a bigger model is truly needed. We will come back to how that fallback works.

- Cost: Developers no longer pay per request to a cloud model for common tasks. That opens the door to features that would have been too expensive to run on every tap, like suggesting tags for every new note or summarizing every long email you open.

- Reach: The install base matters. Apple is lighting up iPhone, iPad, and Mac models that support Apple Intelligence. That is a distribution channel counted in the billions over upgrade cycles. Once something becomes a default capability on devices people already own, it stops being a gadget trick and becomes an expectation.

What Apple actually exposed to developers

Apple shipped a developer framework that gives apps direct access to the on-device foundation model that powers Apple Intelligence. The framework is tightly integrated with Swift. Developers can:

- Ask the model to generate or transform text, then receive results in a structured format. A budgeting app can request a structured list of merchant names and amounts from a pasted receipt. A study app can ask for bullet-point concepts rather than a freeform essay.

- Use guided generation so the model responds within a schema. That keeps outputs predictable and safe to wire into code paths. For example, a travel app can require an array of {city, date, link} objects. If the model returns anything else, the app can retry or fall back to a simpler path.

- Provide tools the model can call when it needs more information. Tools can be as simple as “search my local database of payees” or as sensitive as “propose a calendar event.” The app keeps control over what is callable, with parameters and guardrails.

- Run offline. Requests to the framework are handled by the on-device model, so they work without a connection. For work too heavy for the device, Apple offers a privacy-preserving path called Private Cloud Compute, which Shortcuts can already use. More on that below.

Shortcuts also gains intelligent actions. These are prebuilt building blocks powered by Apple Intelligence that you can drop into a workflow, like “summarize text with Writing Tools” or “create an image with Image Playground.” Crucially, you can now route the output of a model step into the next tool with no copy and paste. That makes Shortcuts feel less like a conveyor belt and more like a team. For how this shifts the user surface, see why the agent is the new desktop.

A picture of an on-device agent

Imagine you are a contractor walking through a job site. You photograph a whiteboard sketch, record a short voice note, and open a project management app that supports the new framework.

- The app’s on-device agent detects a sketch with tasks and dates, transcribes your note, and proposes a structured task list with due dates.

- It calls a tool you exposed called createTask that writes to your local database. Before writing, it asks you to approve two ambiguous due dates it could not parse.

- It checks your calendar for conflicts and suggests alternative dates. Because this is local, it can read your calendar without sending events to a server.

- It assembles a brief update for the client, proposes a tone, and routes the draft to Mail. You review and send.

All of that happens offline if your device is capable. There is no account to set up, no credits to buy, no data trail across the open internet.

Shortcuts grows into an action hub for agents

Shortcuts has quietly been the operating system’s glue. The new intelligent actions give it a brain and a pair of hands. Here are concrete patterns you can build today:

- Inbox triage: Use an intelligent action to summarize long emails into key decisions, then pass those decisions into a “Create Reminder” step with tags and due dates.

- Research clipping: Highlight text on a webpage and run a shortcut that summarizes it, extracts citations, saves the summary to Notes, and files the source link in a reading database.

- Audio to spreadsheet: Record a meeting, transcribe locally, extract action items and owners, then append rows to Numbers.

- Field service: Scan a serial number, look up a local knowledge base, draft a ticket with parts and steps, and save a PDF for later upload when you reconnect.
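The chaining idea behind these patterns is simple: each step's output becomes the next step's input. The Python below is a toy model of that idea, not how Shortcuts is implemented. `extract_decisions` is a keyword-matching stand-in for an intelligent action, and `to_reminders` stands in for a "Create Reminder" step; both names are hypothetical.

```python
from collections.abc import Callable
from typing import Any

Step = Callable[[Any], Any]


def run_chain(steps: list[Step], value: Any) -> Any:
    """Feed each step's output into the next, like actions in a shortcut."""
    for step in steps:
        value = step(value)
    return value


def extract_decisions(email_body: str) -> list[str]:
    # Stand-in for a model step: keep lines marked "DECISION:" (hypothetical convention).
    return [line.removeprefix("DECISION:").strip()
            for line in email_body.splitlines() if line.startswith("DECISION:")]


def to_reminders(decisions: list[str]) -> list[dict]:
    # Stand-in for a "Create Reminder" step: one tagged reminder per decision.
    return [{"title": d, "tags": ["email-triage"]} for d in decisions]
```

For the inbox triage pattern, `run_chain([extract_decisions, to_reminders], body)` turns an email body into reminder records with no copy and paste in between.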

Shortcuts is also the bridge between apps. If your app does not ship its own agent yet, you can still plug into this new world by offering well-named actions with clear parameters. The more you make your app scriptable, the more the system’s intelligence can help users get value out of it.

Early patterns for agentic app design

The first batch of apps using the on-device model gives us a playbook. Consider these patterns when you design your own agent features:

- Constrain the problem: Start with a narrow job and a fixed schema, not a general chat box. “Categorize this expense into one of eight buckets and return a JSON object” is easier to make reliable than “What is this receipt about.” If you are planning for production, study enterprise benchmarks for agent reliability.

- Use your data, not the model’s memory: Pull from a local cache or secure store of user data. Ask the model to label and structure, not to invent.

- Watch for intent boundaries: Every tool call is a decision. Show a lightweight confirmation when an action affects data or sends something outside the app. Avoid silent destructive actions.

- Make the happy path the fast path: On-device inference is fast. Design for single-tap approvals. Save verbose explanations for a details view.

- Fail predictably: If the model returns an invalid structure, retry once with an explicit reminder of the schema. If it fails again, fall back to a safe path and show the user what you did.

- Keep an audit trail: Log agent decisions, inputs, and outputs in a local, user-readable record. That builds trust and gives you data to improve prompts and tools.

Private Cloud Compute as a safety valve

Local agents will handle most of the day-to-day work. Some tasks still need more capacity. Apple’s answer is Private Cloud Compute, a design that keeps the privacy model of your device even when a larger model runs in the cloud. The idea is simple: your device decides when it cannot fulfill a request locally, then sends only the minimum data needed to specialized Apple silicon servers, and that data is not retained after the response. Researchers can verify the code that runs on those servers, and the device checks each server's remote attestation before sending a request. Apple has published a technical overview for researchers in the Private Cloud Compute security research post.

For developers, this means you can design for offline by default, then explicitly allow Private Cloud Compute for clearly labeled classes of requests. For example, a medical dictation app could run everyday transcriptions on device. If a user requests a specialized summary packed with medical codes, the app can ask permission to use Private Cloud Compute and show what will be sent. The user stays in control, and the flow remains reliable even when the device is under heavy load.
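That policy (on device by default, the cloud only for labeled request classes and only with consent) fits in a few lines. The Python below is a hypothetical sketch, not an Apple API: `CLOUD_ELIGIBLE`, `Request`, and `route` are illustrative names, `fits_on_device` is assumed to be computed elsewhere, and "cloud" stands for whatever Private Cloud Compute path the platform makes available.

```python
from collections.abc import Callable
from dataclasses import dataclass

# Request classes the app has explicitly labeled as allowed to leave the device (hypothetical).
CLOUD_ELIGIBLE = {"specialist_summary"}


@dataclass
class Request:
    kind: str                 # e.g. "transcription" or "specialist_summary"
    fields: dict[str, str]    # the data that would be sent if the request leaves the device
    fits_on_device: bool      # assumed to be decided elsewhere (input size, memory pressure)


def route(req: Request, ask_user: Callable[[list[str]], bool]) -> str:
    """Return "device", "cloud", or "declined" for one request."""
    if req.fits_on_device:
        return "device"
    if req.kind not in CLOUD_ELIGIBLE:
        return "declined"     # not a labeled class: never leaves the device
    # Show exactly which fields would be sent; only an explicit yes lets them go.
    return "cloud" if ask_user(sorted(req.fields)) else "declined"
```

Keeping the eligible classes in one explicit set also gives auditors a single place to see what can ever leave the device.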

Tooling details that matter

- Structured prompts and responses: Guided generation constrains the model's output to a schema you define, so responses arrive already shaped like your types. It turns a model from a creative writer into a form filler that happens to understand language. That is how you confidently wire outputs to code.

- Tool calling: Think of tools as the agent’s hands. They can fetch from a local index, create items, or trigger external integrations. Tools run in your app’s permission bubble and can request explicit user approval for anything sensitive.

- Adapter training: Apple offers a toolkit to train small adapters that specialize the on-device model for your domain. This can make classification and extraction much more reliable without shipping a full custom model.

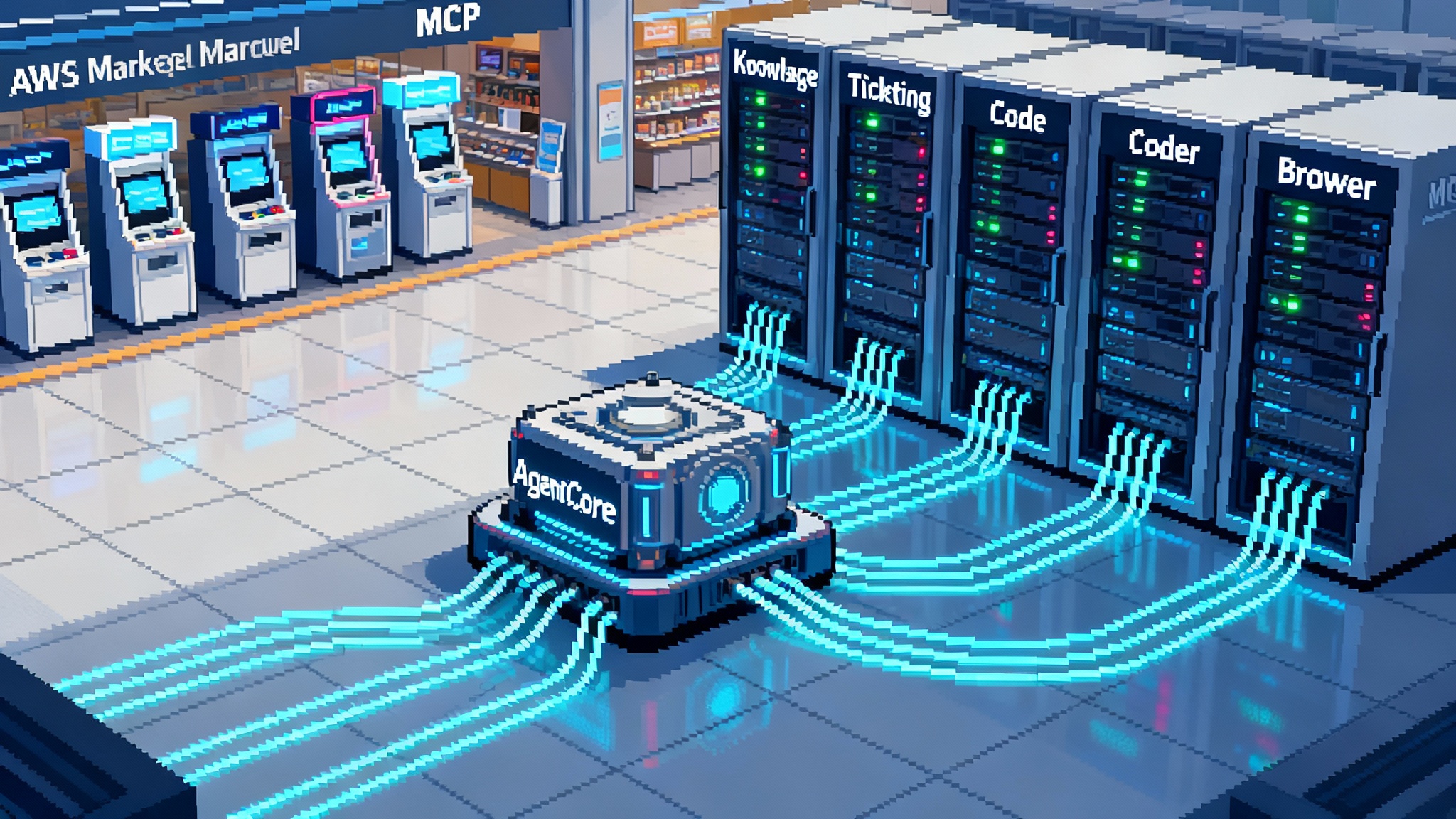

- System integration: Because this is a first-party framework, it works with system permissions, App Intents, and Shortcuts. It also plays nicely with Core ML and your existing on-device models. For a market view of stacks converging, see how AWS and MCP are unifying the enterprise AI stack.
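Tool calling with guardrails can be modeled as an allowlist plus an approval hook. The Python below is a framework-agnostic sketch, not Apple's tool-calling API: `ToolBox`, `Tool`, and the example tool names `searchPayees` and `proposeEvent` are hypothetical, and `approve` stands in for the app's confirmation UI.

```python
from collections.abc import Callable, Iterable
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class Tool:
    fn: Callable[..., Any]
    params: frozenset[str]
    sensitive: bool = False


class ToolBox:
    """Only registered tools are callable; sensitive ones need user approval."""

    def __init__(self, approve: Callable[[str, dict], bool]):
        self.tools: dict[str, Tool] = {}
        self.approve = approve  # e.g. shows a confirmation sheet and returns the user's answer

    def register(self, name: str, fn: Callable[..., Any], params: Iterable[str],
                 sensitive: bool = False) -> None:
        self.tools[name] = Tool(fn, frozenset(params), sensitive)

    def call(self, name: str, args: dict[str, Any]) -> Any:
        tool = self.tools.get(name)
        if tool is None:
            raise PermissionError(f"tool not exposed to the model: {name}")
        if set(args) != tool.params:
            raise ValueError(f"{name} expects {sorted(tool.params)}, got {sorted(args)}")
        if tool.sensitive and not self.approve(name, args):
            raise PermissionError(f"user declined {name}")
        return tool.fn(**args)
```

Rejecting unknown tools and mismatched parameters before anything runs is what keeps the model inside the app's permission bubble; the approval hook covers the sensitive remainder.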

What it means for accessories and regulated apps

Accessories live at the edge. They need reliability and do not get to pick the network. Local agents are a natural fit.

- Cameras and microphones: Capture, transcribe, and redact locally. Only sync the redacted artifact. A journalist can scrub names from audio before any cloud sync happens.

- Fitness and health: Turn raw sensor data into private insights on device. A watch can summarize a workout in natural language and flag anomalies without exporting a heart rate stream.

- Home and car: Keep intent and routine logic in the home hub or phone. A door lock can recognize patterns and suggest schedules without exposing presence data to a third party.
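Local redaction can start very simply. The Python below is a deliberately naive sketch: it assumes the names to scrub come from a local list (such as the user's contacts) and replaces whole-word, case-insensitive matches before anything is synced. `redact` is a hypothetical helper; production redaction would need proper entity recognition rather than a fixed list.

```python
import re


def redact(transcript: str, names: list[str], token: str = "[REDACTED]") -> str:
    """Replace whole-word, case-insensitive matches of known names with a token."""
    if not names:
        return transcript
    # Try longer names first so "Ana Lima" is matched before "Ana".
    ordered = sorted(names, key=len, reverse=True)
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, ordered)) + r")\b", re.IGNORECASE)
    return pattern.sub(token, transcript)
```

Only the string returned by `redact` would be handed to the sync step; the original transcript stays on the device.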

For regulated apps, the design surface grows more interesting.

- Health: Do clinical text extraction on device, log the transformation, and store the log with the record. If a specialist summary needs a larger model, ask permission, show exactly what fields will leave the device, and route through Private Cloud Compute.

- Finance: Run local categorization and risk rules. Only escalate edge cases to a larger model with an explicit paper trail.

- Education: Keep student data locally scoped to a school-managed device. Allow Private Cloud Compute only for vetted tasks when offline grading cannot complete on time.

In each case, the big win is that offline tool use becomes a first-class path. The cloud becomes an exception you can make legible to auditors and acceptable to users.

Competitive pressure and the new baseline

Apple is not the only company with on-device models. The difference is scale and default placement. When a platform owner wires agents into the operating system and ships them on every eligible device with no extra fee, it sets a baseline competitors must match. The implications are direct:

- Cloud optional becomes table stakes. Users learn to expect that private tasks run locally.

- Actions become the primary product surface. A model is not the feature. What it can do safely in your app is the feature.

- Latency tolerance collapses. As people get used to single-tap agent flows, slow round trips will feel broken.

How to ship an agent feature in 30 days

- Pick one high-value, low-ambiguity job. Example: expense extraction from receipts in a finance app.

- Define a minimal schema. Merchant, date, total, category, and a confidence score. That is it.

- Build two prompts. One for extraction, one for repair when the schema fails. Keep them short and concrete.

- Create two tools. One that looks up known merchants in your local store. One that creates or updates an expense record.

- Wire in guided generation. Reject anything that is not valid. Retry once with a stricter reminder.

- Add a Shortcuts action for “Extract Expense” so power users can chain your feature with others.

- Log every run locally with inputs, outputs, and decisions. Add an in-app viewer so users can trust it.

- Provide a fallback. If the image is too large or the device is under memory pressure, ask permission to use Private Cloud Compute and show what will be sent.

- Ship behind a toggle, measure success, and iterate.

The S-curve we are entering

Technology adoption tends to follow S-curves. Early on, changes feel slow. Then a single enabler removes a bottleneck and the curve bends upward. On September 15, 2025, Apple removed three bottlenecks at once. It gave developers a standard interface to an on-device model. It turned Shortcuts into an action router for that intelligence. It offered a privacy-preserving escape hatch for heavy jobs. That is enough to move agents from experiments into habits.

What comes next is both mundane and profound. Mundane because the first wave is unglamorous automation. Profound because those small automations compound into a new way of using computers. Files and apps recede. Intent surfaces and action chains take their place. People will not say they are using artificial intelligence. They will say their phone just did the right thing.

The takeaway

If you build software, assume your users now carry a capable, private agent in their pocket. Design features that let it act with precision inside your app. Expose clear actions, insist on structured outputs, and keep a clean audit trail. Use the cloud only when it brings real value, and make that choice visible. The winners of this next S-curve will not be the loudest model owners. They will be the teams that make everyday tasks bend to what users want, faster and more privately than before.

And if you are a user, explore the new Shortcuts actions, look for agent-powered updates in your favorite apps, and notice how often things get done without you thinking about them. Local-first agents are here. They will not ask for your attention. They will quietly return your time.