Agent Platform Wars Begin: Gemini Enterprise vs AgentKit

A new enterprise AI showdown is here. Google debuts Gemini Enterprise while OpenAI launches AgentKit, with AWS AgentCore close behind. We compare capabilities, build paths, and lock-in risks, then give you a 30/60/90-day plan to ship.

The starting gun just fired

Two announcements in the same week just turned a simmer into a boil. On October 9, 2025, Google launched Gemini Enterprise for business customers, with prebuilt agents and governed access to company data, as reported by Reuters coverage of the Gemini Enterprise launch. Three days earlier, OpenAI introduced AgentKit, a developer-to-enterprise suite for designing, deploying, and measuring production agents, detailed in OpenAI's AgentKit announcement. AWS, for its part, has been previewing AgentCore since the summer and pitches it as the infrastructure for running agents securely at scale. The agent platform wars are officially underway.

Why does this matter now? The last two years were about chat assistants and copilots. The next two will be about software that can perceive, reason, and act inside your stack. The winner is not the model alone. It is the platform that gives teams a safe, fast path to production.

What each platform actually is

Let’s translate the product names into what builders will touch day to day.

-

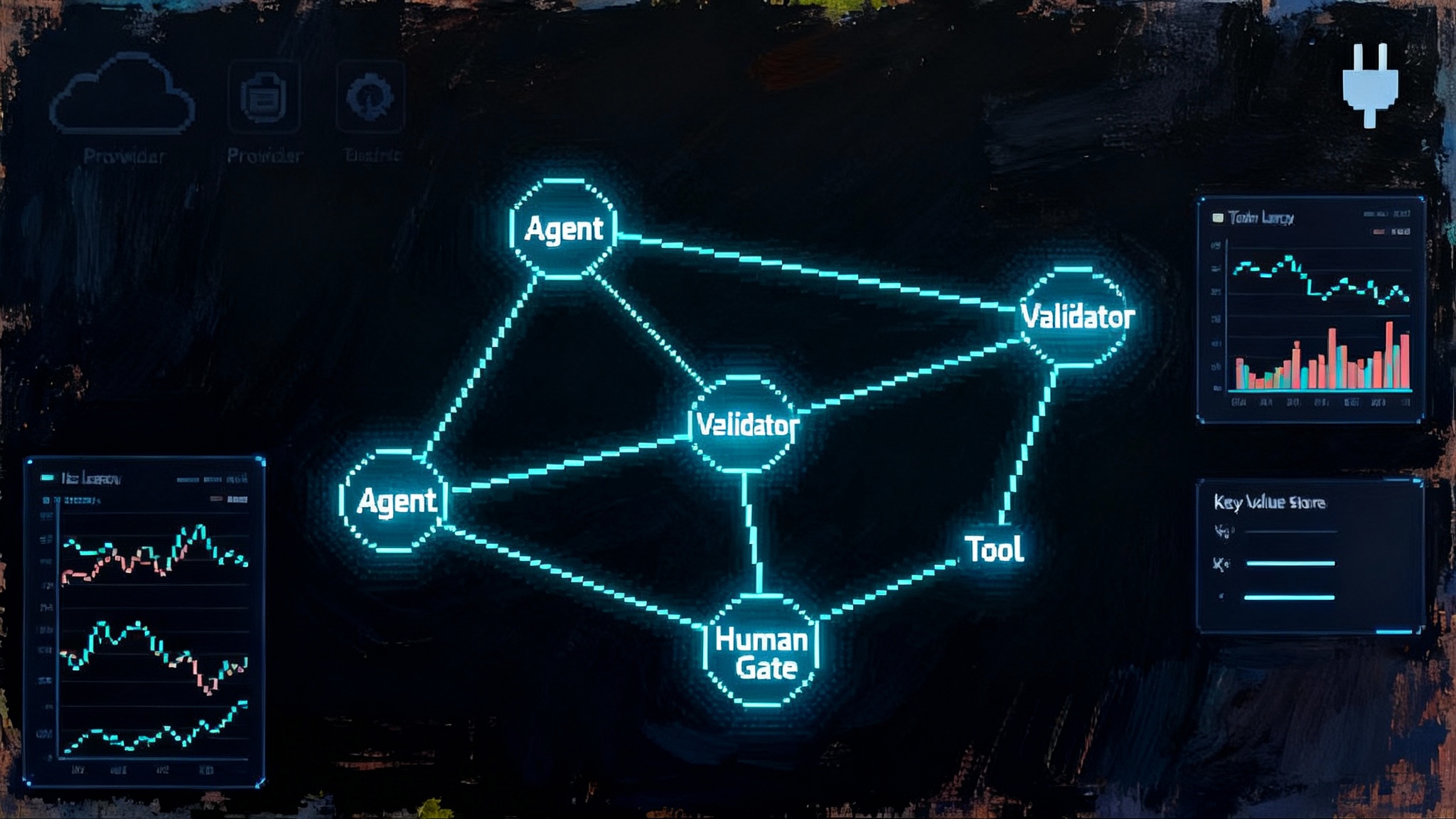

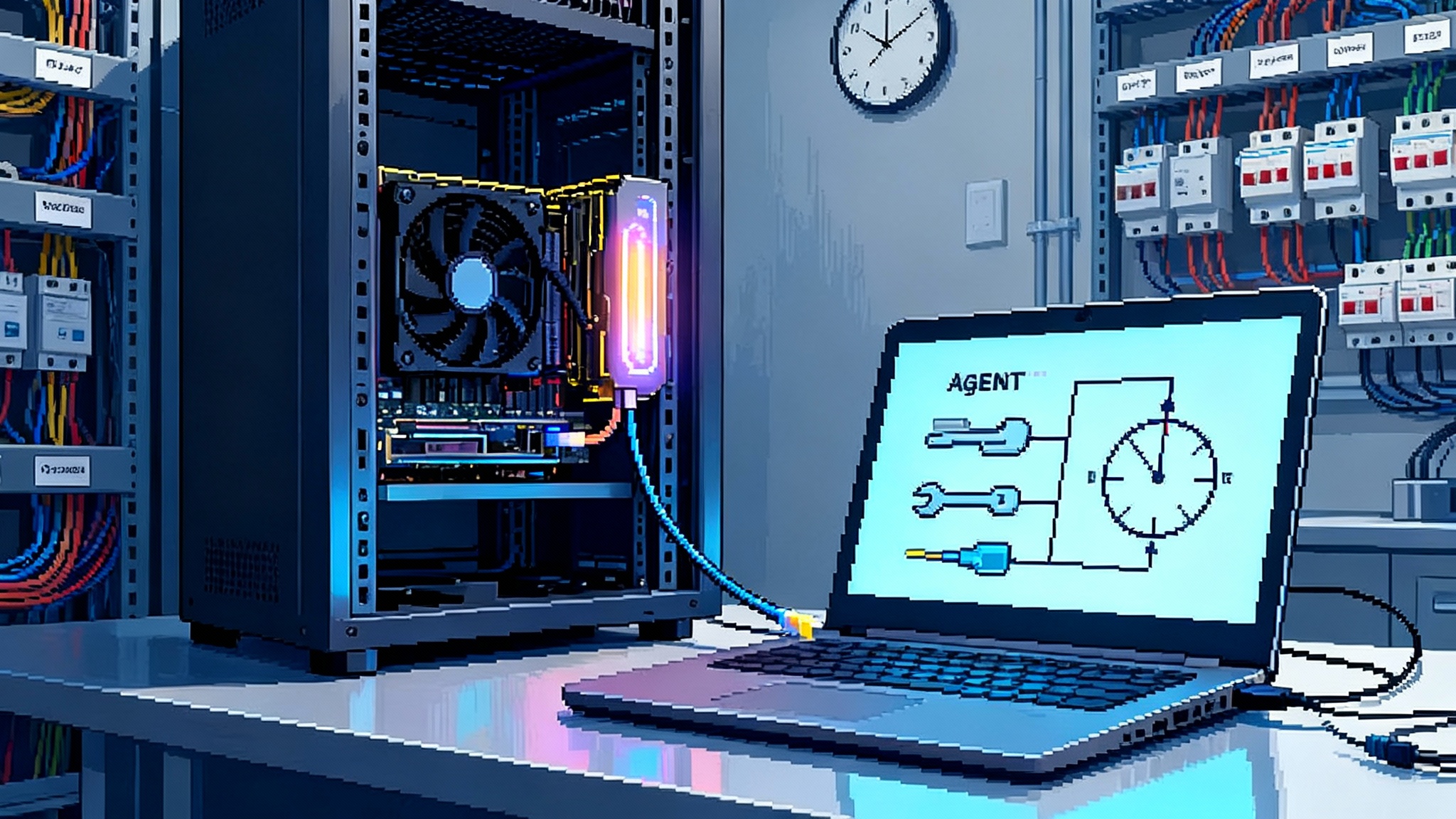

OpenAI AgentKit: a collection of building blocks that covers the agent lifecycle. Think visual workflow design, a code-first SDK, an embeddable chat interface for your app, centralized connectors, and first-party evaluation tools. Availability matters. OpenAI says some components are generally available, with others in enterprise beta. Reinforcement fine-tuning for reasoning models is expanding, including custom tool calls and custom graders.

-

Google Gemini Enterprise: an enterprise suite that puts agents into Workspace and Google Cloud while letting developers assemble custom agents with Vertex AI Agent Builder and register them to an internal catalog often described as Agentspace. Google is also pushing computer-use models that can operate a browser to complete tasks when no clean application programming interface exists.

-

AWS AgentCore: a set of services meant to take agents from prototype to production on Amazon Web Services. It includes a secure runtime with long session support, built-in memory services, identity integration, a gateway for tools, a sandboxed code interpreter, a managed browser tool, and observability out of the box.

Capabilities that matter in practice

- Reasoning and tools: All three platforms support agents that plan multi-step tasks and call tools. OpenAI emphasizes evals and reinforcement signals to teach models when to call which tool. Google leans on perception and computer use so agents can click, type, and submit like a person inside a browser when an API is missing. AWS leans on a neutral runtime and gateway so agents can invoke cloud services or any registered tool within guardrails.

- Connectors and data governance: OpenAI’s registry centralizes how admins approve and manage data sources across products. Google’s catalog provides a governed discovery layer for agents inside the company. AWS exposes identity and network controls so security teams can fit agents into existing permissions and logging.

- Observability: Production agents are part application, part workflow, part model. AgentKit includes tracing and evaluation telemetry. Google provides traces and debugging in Vertex AI. AWS ships CloudWatch-powered dashboards and traces as a first-class capability.

- Human-computer interaction: Google’s computer-use capabilities are highly practical for legacy websites and partner portals. If you run a claims process that still lives behind a web login, an agent that can browse, fill, and submit is not a demo trick. It is an unlock. For background, see our take on browser-native agents in Gemini. OpenAI focuses more on embedding agentic chat in your product so end users can interact in context. AWS positions for reliability and scale first, which appeals to teams that already instrument everything in Amazon Web Services.

Build paths: visual, code, or both

Every platform now offers a split screen: a visual canvas for fast iteration, and an API plus SDKs for deep control.

- OpenAI: Agent Builder gives product managers and prompt engineers a canvas to compose multi-agent workflows with guardrails, while engineers wire tools via an SDK and Responses API. This reduces friction between prototype and pull request.

- Google: Vertex AI Agent Builder brings traces, debugging, and an engine for registering agents so they can be reused across teams. This helps larger organizations avoid a zoo of one-off bots with duplicated prompts.

- AWS: AgentCore takes a platform-engineering view. You get a runtime that supports long sessions, a memory service, identity integration, and rich observability. Teams that already operate microservices on AWS will feel at home.

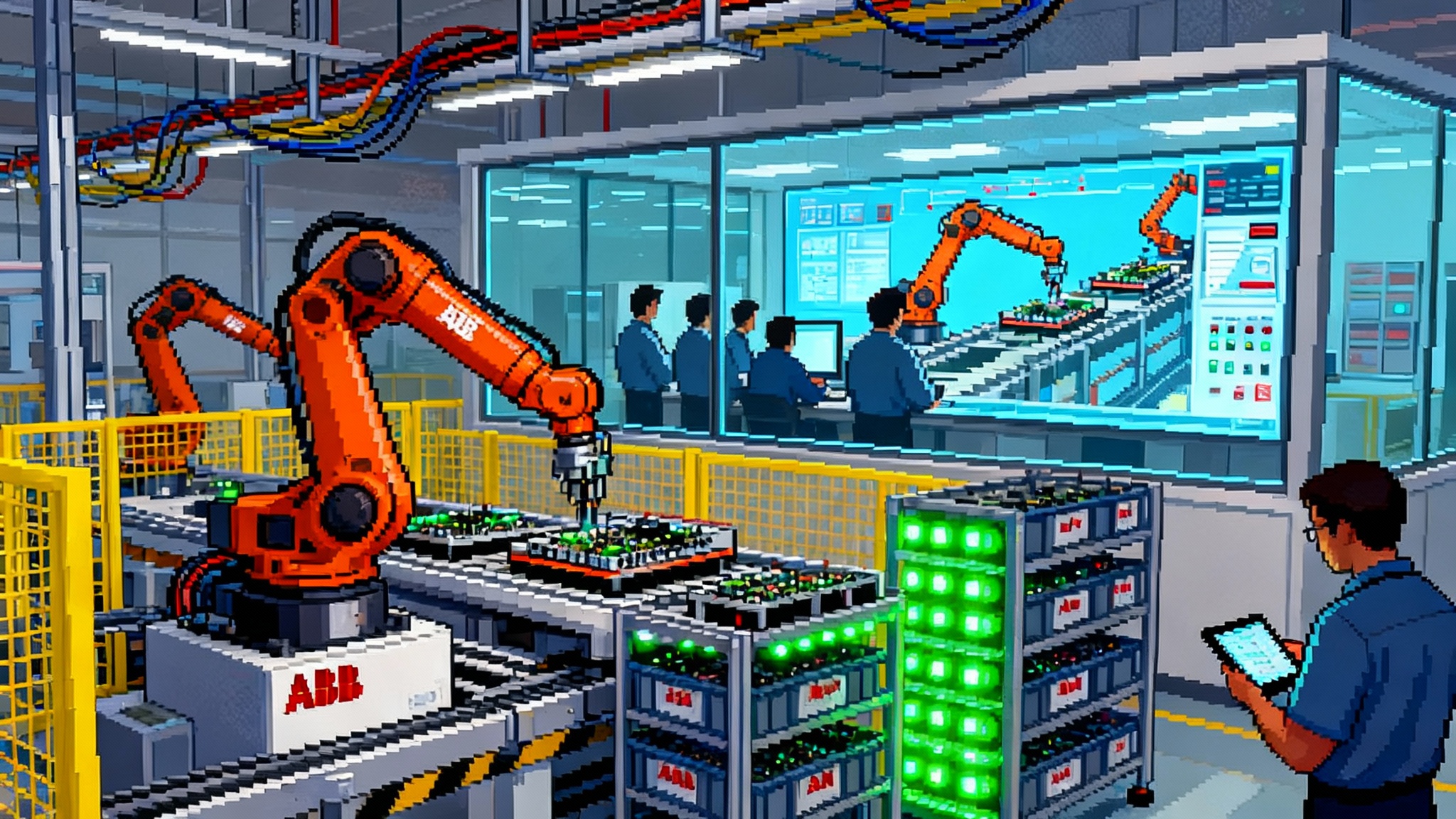

A simple metaphor helps. If agents are robots, AgentKit and Vertex AI provide the gears and a workshop to assemble them, while AgentCore gives you a factory floor with safety railings, power, and meters. Different teams need different starting points, but they all need a workshop and a factory if they plan to ship at scale.

Interoperability vs lock-in, and why MCP matters

A big question for CIOs: will this agent run only in one vendor’s walled garden, or can it use standard connectors and move across environments? The Model Context Protocol (MCP) aims to let models connect to tools and data through a common interface. Even with MCP, integrations still ride through each vendor’s security and governance stack. Your identity provider, token scope, and data classification policies still decide what an agent can do. MCP helps you avoid bespoke connectors for every model and vendor. It does not remove the need for clear permissions and audit paths. For a cross-vendor view, see how Microsoft's enterprise agent framework pursues interoperability.
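To make the "common interface, but permissions still apply" point concrete, here is a minimal sketch. It relies on the fact that MCP messages are JSON-RPC 2.0 and that tool invocations use the `tools/call` method with `name` and `arguments` parameters. Everything else is hypothetical: the tool names, the scope strings, and the `build_tool_call` helper. Real deployments would use an MCP SDK and their identity provider rather than hand-building messages.

```python
import json
from itertools import count

# Hypothetical tool-to-scope mapping. In practice this comes from your
# identity provider or governance layer, not a hard-coded dict.
REQUIRED_SCOPE = {
    "search_claims": "claims:read",
    "issue_refund": "payments:write",
}

_request_ids = count(1)


class ScopeError(PermissionError):
    """Raised when an agent lacks the scope a tool requires."""


def build_tool_call(tool: str, arguments: dict, granted_scopes: set) -> str:
    """Return a JSON-RPC 2.0 'tools/call' request, or raise if not permitted."""
    required = REQUIRED_SCOPE.get(tool)
    if required is None:
        raise ScopeError(f"unknown tool: {tool}")  # deny anything unregistered
    if required not in granted_scopes:
        raise ScopeError(f"{tool} requires scope {required!r}")
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


# Usage: a read-only agent can search claims but cannot issue refunds.
print(build_tool_call("search_claims", {"query": "policy 1234"}, {"claims:read"}))
```

The wire format is portable across vendors; the scope check is the part you still own, whichever platform you choose.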

Vendor positions at a glance

- Choose OpenAI AgentKit when you want fast iteration on reasoning-heavy workflows, need a first-party evaluation loop, and plan to embed chat-based agents directly into your product with a custom interface. AgentKit is compelling for product teams that want to tune behavior with reinforcement signals and measure impact quickly.

- Choose Google Gemini Enterprise when your users already live in Workspace or Google Cloud and when browser-based automation removes weeks of integration work. Vertex AI’s debugging and a central catalog help reuse agents in large organizations.

- Keep an eye on AWS AgentCore if you are a platform team on AWS that values long-running sessions, explicit memory services, and CloudWatch-native observability. The preview shows a clear path to a production-grade operating model.

A pragmatic 30/60/90-day plan to ship without painting yourself into a corner

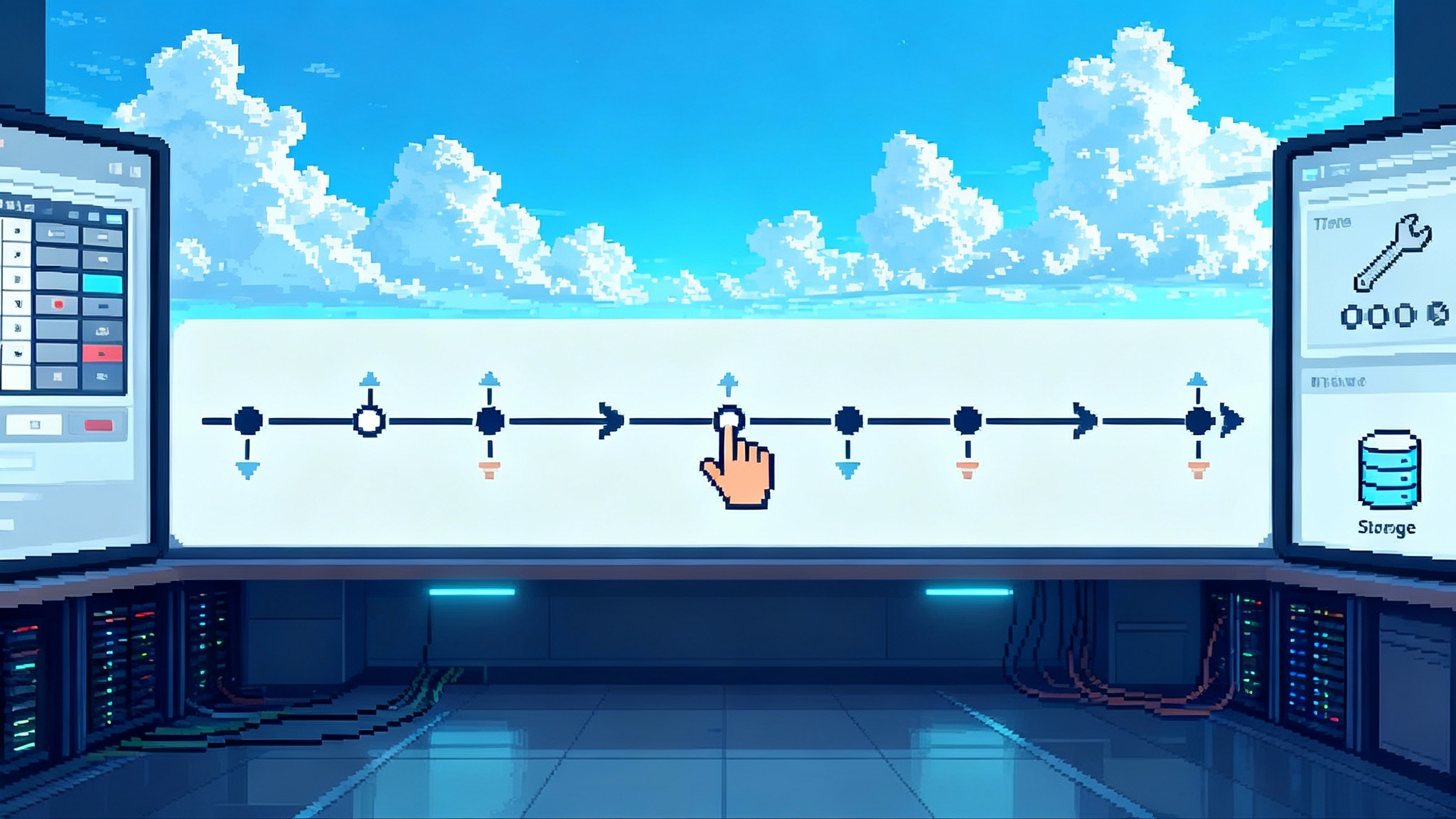

Days 0 to 30: prove value on one workflow

- Pick a process with measurable lag or backlog. Good examples are tier-one support routing, sales list research, or claims triage. Define an observable north star such as median handle time or deflection rate.

- Form a tiger team. Product owner, one application developer, one data engineer, one security partner, one domain expert. Keep the group small.

- Decide your lane. If your users live in Workspace and a browser, start a Google pilot that leans on computer-use for legacy sites. If your product needs an embedded chat surface, start with OpenAI’s chat components. If you require long sessions and deep observability, trial AWS AgentCore. Do not try all three at once.

- Integrate tools through a minimal connector strategy. Prefer MCP-compatible connectors or abstractions you can reuse across vendors later. Define three tools only: data search, a transaction writer, and a code interpreter or calculator.

- Establish an eval harness. Create a small labeled dataset and define how each trace will be graded. Run agents in shadow mode, where they propose actions but humans click the buttons. Capture latency, accuracy, and intervention rates.

- Security first steps. Limit data scopes, set time-boxed tokens, require human approvals for any irreversible action like refund or deletion. Adopt a credential broker for browser agents. Log every tool call with actor, target, and result.
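The shadow-mode eval harness from this phase boils down to three numbers. The sketch below assumes each shadow run records the labeled expected action, the agent's proposal, what the human actually did, and latency; the `ShadowResult` record and `summarize` helper are hypothetical names, not part of any vendor SDK.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import median


@dataclass
class ShadowResult:
    case_id: str
    expected: str      # label from the small hand-labeled dataset
    proposed: str      # action the agent suggested (never executed)
    human_action: str  # action the operator actually took
    latency_ms: float


def summarize(results: list[ShadowResult]) -> dict[str, float]:
    """Accuracy vs. labels, how often humans overrode the agent, and latency."""
    if not results:
        raise ValueError("no shadow-mode results to summarize")
    n = len(results)
    return {
        "accuracy": sum(r.proposed == r.expected for r in results) / n,
        "intervention_rate": sum(r.human_action != r.proposed for r in results) / n,
        "median_latency_ms": median(r.latency_ms for r in results),
    }


runs = [
    ShadowResult("T-1", "route:billing", "route:billing", "route:billing", 800.0),
    ShadowResult("T-2", "route:tech", "route:billing", "route:tech", 1200.0),
    ShadowResult("T-3", "refund", "refund", "refund", 1000.0),
]
print(summarize(runs))
```

Because the same harness runs unchanged against any vendor's agent, it doubles as the comparison baseline when you benchmark a second platform later.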

Days 31 to 60: harden for production

- Expand the dataset and add negative tests. Include adversarial prompts and noisy inputs to surface failure modes.

- Add guardrails and escalate to a human when the agent's confidence is low. Use pattern detectors to catch sensitive data leaks. Require out-of-band approval for high-risk actions.

- Introduce cost controls. Cap daily spend per environment. Track inference, browsing, and tool costs separately so you can compare platforms later.

- Pilot two deployment modes. One embedded experience where end users chat with the agent, and one back-office automation where the agent proposes actions for an operator to accept.

- Start an A/B or switchback test. Compare agent output to current process on the same tickets or tasks to get a clean delta.

- Prepare production runbooks. Define retry logic, fallbacks when tools fail, and an escalation path to a human on call.
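The runbook items (retry logic, a fallback when a tool fails, and an escalation path to a human) can be encoded as one wrapper around each tool call. This is a sketch under assumptions: the retry count, the backoff delays, and the `EscalateToHuman` exception are illustrative, and in production you would catch only your tool's transient errors rather than every exception.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class EscalateToHuman(Exception):
    """Signals that the on-call human should take over this task."""


def call_with_runbook(
    primary: Callable[[], T],
    fallback: Optional[Callable[[], T]] = None,
    retries: int = 3,
    base_delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry with exponential backoff, then try a fallback, then escalate."""
    last_error: Optional[Exception] = None
    for attempt in range(retries):
        try:
            return primary()
        except Exception as err:  # narrow to your tool's transient errors
            last_error = err
            if attempt < retries - 1:
                sleep(base_delay_s * 2 ** attempt)  # 0.5s, 1s, 2s, ...
    if fallback is not None:
        try:
            return fallback()
        except Exception as err:
            last_error = err
    raise EscalateToHuman("tool failed after retries and fallback") from last_error
```

Injecting `sleep` keeps the wrapper testable without real waits, and raising a dedicated exception gives the on-call path one well-defined signal to listen for.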

Days 61 to 90: scale with optionality intact

- Canaries and staged rollout. Turn on the agent for 5 percent of traffic, then 25 percent, then 50 percent as confidence grows.

- Build an agent registry. Record purpose, tools, prompts, owners, and risk profile for each agent. Require review for changes.

- Benchmark a second vendor. Recreate a thin version of the workflow on the alternate platform using the same connectors. This confirms you can move if pricing or performance shifts.

- Negotiate contracts with data egress and evaluation rights. Lock in terms for logs, traces, and datasets so you can measure and migrate.

- Train operators and support. The agent is not a person. Teach the team when to accept, override, or pause it.
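A common way to run the 5, 25, then 50 percent canary stages is deterministic hashing: each user or ticket ID maps to a stable bucket from 0 to 99, so anyone enabled at 5 percent stays enabled at 25 percent. This sketch assumes you roll out by a stable ID. The salt string and function names are hypothetical.

```python
import hashlib

# Rollout stages from the plan: 5% -> 25% -> 50% of traffic.
STAGES = (5, 25, 50)


def bucket(unit_id: str, salt: str = "claims-agent-rollout") -> int:
    """Map a user or ticket ID to a stable bucket in [0, 100)."""
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def agent_enabled(unit_id: str, percent: int) -> bool:
    """True if this unit falls inside the current rollout percentage."""
    return bucket(unit_id) < percent


# Usage: check which stages would route this ticket to the agent.
print([agent_enabled("ticket-42", p) for p in STAGES])
```

Changing the salt reshuffles who lands in the canary, which is useful if you want a fresh sample for the next agent rather than the same early adopters every time.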

Risk checklist you can paste into your issue tracker

Identity and permissions

- Is the agent using separate credentials from the user, with least privilege scopes and short-lived tokens?

- Can we revoke access by agent, by environment, and by tool without code changes?
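The identity questions above imply a specific token shape: credentials issued to the agent (not borrowed from the user), scoped to one task, and expiring quickly. The sketch below is a stand-in for what your identity provider does. The `AgentToken` fields, the scope strings, and the 15-minute default TTL are all assumptions for illustration.

```python
from __future__ import annotations

import secrets
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentToken:
    agent_id: str            # the agent's own identity, separate from the user's
    environment: str         # e.g. "staging" or "prod"
    scopes: frozenset[str]   # least-privilege grants for this task only
    expires_at: float        # Unix timestamp
    value: str = field(default_factory=lambda: secrets.token_urlsafe(32), repr=False)


def issue_token(agent_id: str, environment: str, scopes: set[str],
                ttl_s: float = 900, now: float | None = None) -> AgentToken:
    """Mint a short-lived token; the 15-minute default TTL is illustrative."""
    now = time.time() if now is None else now
    return AgentToken(agent_id, environment, frozenset(scopes), now + ttl_s)


def authorize(token: AgentToken, scope: str, now: float | None = None) -> bool:
    """Allow only unexpired tokens that carry the exact scope requested."""
    now = time.time() if now is None else now
    return now < token.expires_at and scope in token.scopes


tok = issue_token("claims-triage", "prod", {"claims:read"}, ttl_s=60, now=1000.0)
print(authorize(tok, "claims:read", now=1030.0), authorize(tok, "payments:write", now=1030.0))
```

Keeping `agent_id` and `environment` on the token is what makes revocation by agent or by environment a data change rather than a code change.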

Action safety

- Do irreversible actions require human approval or a two-person rule?

- Do we have a deny list of operations that the agent is never allowed to perform in production?
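Both action-safety questions can be answered by one policy check that runs before any tool executes. In this sketch the action names and policy sets are hypothetical and would normally live in config. `min_approvals=2` models the two-person rule, and setting it to 1 models a single human approval.

```python
# Hypothetical policy data. Load it from config in practice so security
# can change it without a code deploy.
DENY_IN_PROD = {"delete_customer", "drop_table"}
IRREVERSIBLE = {"issue_refund", "close_account"}


class ActionBlocked(Exception):
    """Raised when policy forbids an action or approvals are missing."""


def check_action(action: str, environment: str, requester: str,
                 approvers: set, min_approvals: int = 2) -> None:
    """Return normally if the action may run; raise ActionBlocked otherwise."""
    if environment == "prod" and action in DENY_IN_PROD:
        raise ActionBlocked(f"{action} is never allowed in prod")
    if action in IRREVERSIBLE:
        # Approvals only count if they come from someone other than the requester.
        independent = set(approvers) - {requester}
        if len(independent) < min_approvals:
            raise ActionBlocked(
                f"{action} needs {min_approvals} independent approvals, "
                f"got {len(independent)}"
            )


check_action("issue_refund", "prod", "claims-triage", {"alice", "bob"})  # allowed
```

The deny list is checked first on purpose: no number of approvals should unlock an operation the agent must never perform.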

Data governance

- Are prompts, traces, and tool inputs classified and retained according to policy?

- Is training on our data disabled unless explicitly approved? Audit this per vendor.
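Classifying prompts and traces usually starts with the pattern detectors mentioned in the 31-to-60-day phase, applied before anything is stored. The sketch below masks email addresses, US Social Security number formats, and card-like numbers that pass a Luhn checksum. These patterns are illustrative assumptions, not a complete data-loss-prevention policy.

```python
from __future__ import annotations

import re

# Illustrative detectors only. Production systems usually pair patterns like
# these with a dedicated data-loss-prevention or classification service.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits


def luhn_ok(digits: str) -> bool:
    """Luhn checksum, used to cut false positives on card-like numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d = d * 2 - 9 if d * 2 > 9 else d * 2
        total += d
    return total % 10 == 0


def redact(text: str) -> tuple[str, set[str]]:
    """Mask sensitive spans before a prompt or trace is stored."""
    found: set[str] = set()

    def mask_card(m: re.Match) -> str:
        digits = re.sub(r"\D", "", m.group())
        if luhn_ok(digits):
            found.add("card")
            return "[CARD]"
        return m.group()

    text = CARD_CANDIDATE.sub(mask_card, text)
    for label, pattern, token in (("ssn", US_SSN, "[SSN]"), ("email", EMAIL, "[EMAIL]")):
        text, n = pattern.subn(token, text)
        if n:
            found.add(label)
    return text, found


print(redact("Card 4111 1111 1111 1111, SSN 123-45-6789, mail jo@example.com"))
```

The returned labels can be written alongside the trace as its classification, which is what retention policies need to key on.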

Observability

- Do we trace every step with input, tool, output, and latency? Are alerts wired to on-call?

- Do we measure drift in accuracy and changes in model behavior over time?
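Measuring drift can start simply: keep a rolling window of graded outcomes and alert when accuracy falls a set margin below the baseline you measured during the pilot. The window size, margin, and `DriftMonitor` class here are hypothetical choices for illustration.

```python
from __future__ import annotations

from collections import deque


class DriftMonitor:
    """Flags when rolling accuracy falls below a baseline by more than a margin."""

    def __init__(self, baseline_accuracy: float, window: int = 200, margin: float = 0.05):
        self.baseline = baseline_accuracy
        self.margin = margin
        self.window: deque[bool] = deque(maxlen=window)

    def record(self, correct: bool) -> None:
        self.window.append(correct)

    def rolling_accuracy(self) -> float | None:
        if not self.window:
            return None
        return sum(self.window) / len(self.window)

    def drifted(self) -> bool:
        # Wait for a full window so a few early misses don't page on-call.
        if len(self.window) < self.window.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.margin
```

Wiring `drifted()` into the same alerting path as latency alerts keeps behavior changes, such as a silent model version bump, from going unnoticed.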

Cost and performance

- Are we tracking cost per resolved task and per tool call? Do we have budget caps?

- Do we have a fallback or degrade mode if a provider exceeds latency service level objectives?
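Tracking cost per resolved task while enforcing per-environment caps (and keeping inference, browsing, and tool spend separate, as the 31-to-60-day plan suggests) takes little code. This sketch is in-memory and does not reset at day boundaries. The cap values and category names are assumptions.

```python
from collections import defaultdict


class BudgetExceeded(RuntimeError):
    """Raised when a charge would push an environment past its daily cap."""


class CostTracker:
    """Tracks spend by environment and category and enforces a daily cap."""

    def __init__(self, daily_caps_usd: dict[str, float]):
        self.caps = daily_caps_usd
        self.spend = defaultdict(float)   # (env, category) -> USD today
        self.resolved = defaultdict(int)  # env -> tasks resolved today

    def env_total(self, env: str) -> float:
        return sum(v for (e, _), v in self.spend.items() if e == env)

    def charge(self, env: str, category: str, usd: float) -> None:
        # Refuse the charge up front instead of discovering overspend later.
        if self.env_total(env) + usd > self.caps[env]:
            raise BudgetExceeded(f"{env} would exceed its daily cap")
        self.spend[(env, category)] += usd

    def mark_resolved(self, env: str) -> None:
        self.resolved[env] += 1

    def cost_per_resolved_task(self, env: str) -> float:
        n = self.resolved[env]
        return self.env_total(env) / n if n else float("inf")


tracker = CostTracker({"prod": 10.0})
tracker.charge("prod", "inference", 4.0)
tracker.charge("prod", "browsing", 2.0)
tracker.charge("prod", "tool", 1.0)
tracker.mark_resolved("prod")
tracker.mark_resolved("prod")
print(tracker.cost_per_resolved_task("prod"))
```

Keeping the category in the key is what lets you compare platforms later: a browser-heavy agent and a tool-heavy agent can cost the same overall yet scale very differently.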

Interoperability

- Are connectors MCP-compatible or wrapped so they can be reused across platforms?

- Can we export prompts, eval datasets, and traces in a portable format?
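JSON Lines (one JSON object per line) is a practical portable format for traces and eval datasets: any vendor's tooling or a plain script can read it. The schema below is a hypothetical example. Its `model` field also answers the compliance question of which model versions touched end-user data.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class TraceStep:
    trace_id: str
    step: int
    tool: str
    tool_input: dict
    tool_output: dict
    latency_ms: float
    model: str  # model name and version that produced this step


def to_jsonl(steps: list[TraceStep]) -> str:
    """One JSON object per line: easy to diff, grep, and load anywhere."""
    return "".join(json.dumps(asdict(s), sort_keys=True) + "\n" for s in steps)


def from_jsonl(text: str) -> list[TraceStep]:
    return [TraceStep(**json.loads(line)) for line in text.splitlines() if line.strip()]


steps = [TraceStep("t1", 1, "search_claims", {"query": "policy 1234"},
                   {"hits": 2}, 812.5, "example-model-2025-01")]  # hypothetical model ID
print(to_jsonl(steps), end="")
```

A round trip through `to_jsonl` and `from_jsonl` is a cheap test to add to CI, so a schema change never silently breaks your exit path.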

Legal and compliance

- Do vendor contracts give us rights to audit logs and to evaluate the system with synthetic data?

- Are we tracking which models and versions touched end-user data?

Incident response

- Is there a kill switch per agent and per tool?

- Can we replay an incident from traces to understand what happened and why?
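A kill switch per agent and per tool works best as data that on-call can flip without a deploy, checked before every tool call. In this sketch the JSON document, its keys, and the agent and tool names are hypothetical. In practice it would come from a config or feature-flag service.

```python
import json

# Hypothetical kill-switch document, e.g. served by a config or feature-flag
# service so on-call can flip it without a deploy.
KILL_SWITCHES_JSON = '{"disabled_agents": ["refund-bot"], "disabled_tools": ["browser"]}'


def load_switches(raw: str) -> dict:
    return json.loads(raw)


def is_allowed(switches: dict, agent: str, tool: str) -> bool:
    """A call runs only if neither its agent nor its tool is switched off."""
    return (agent not in switches.get("disabled_agents", [])
            and tool not in switches.get("disabled_tools", []))


def guarded_call(switches: dict, agent: str, tool: str, fn, *args, **kwargs):
    """Consult the current switches before every tool call."""
    if not is_allowed(switches, agent, tool):
        raise PermissionError(f"kill switch active: agent={agent} tool={tool}")
    return fn(*args, **kwargs)


switches = load_switches(KILL_SWITCHES_JSON)
print(guarded_call(switches, "claims-triage", "calculator", lambda a, b: a + b, 2, 3))
```

Unknown agents and tools default to allowed here. Stricter environments may prefer to invert that and require an explicit allow entry.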

The bottom line

The old question was which model is smarter. The new question is which platform lets your team ship a valuable agent next quarter without sacrificing control or exit options. OpenAI AgentKit aims to accelerate iteration with strong evaluation loops and an embedded user experience. Google Gemini Enterprise brings agents into the daily tools your employees already use and adds practical browser-level skills for the messy web. AWS AgentCore bets on scale, isolation, and cloud-native operations. You do not need to pick a forever partner today. You do need to pick a place to start, define clear success metrics, and keep connectors and datasets portable.

Treat this moment like the early days of cloud. Move a real but constrained workload, instrument everything, and keep your architecture flexible. If you do, you will get the value of agents fast and keep the freedom to switch horses as the race evolves.