OpenAI’s open-weight pivot: GPT‑OSS and the edge agent era

OpenAI’s August 5, 2025 GPT-OSS release puts strong reasoning models on a single 80 GB GPU and, with quantization, on 16 GB devices. Here is how it rewires procurement, stacks, and agent standards.

What changed on August 5, 2025

OpenAI released GPT-OSS models at 120B and 20B parameters under Apache 2.0, bringing open-weight reasoning to local and edge hardware. The 120B model runs on a single 80 GB GPU, while the 20B model can fit on 16 GB devices with roughly 4-bit quantization. This unlocks private-by-default agents, lower total cost of ownership, and new deployment topologies across data centers, factory floors, hospitals, and field service.

What open-weight really means

Open-weight licensing allows organizations to download, host, modify, and integrate model weights within their own environments. For enterprises, that means:

- Portability across clouds and on-prem clusters

- Data residency and privacy without sending prompts off network

- Observability and control over tokens, logs, and safety layers

See how this aligns with compute-agnostic reasoning and split stacks.

Performance and deployment envelopes

- Targets and parity: the 120B model targets near o4-mini-class reasoning on a single 80 GB GPU. The 20B model targets near o3-mini-class reasoning even when aggressively quantized.

- Latency profiles: expect sub-second time to first token on high-end GPUs. Mobile-class devices are slower, so favor streaming output and tool calling to mask latency.

- Memory planning: Expect 4-bit to 6-bit quantization in edge form factors, plus speculative decoding or sparse activation where supported.
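As a rough sanity check on these envelopes, weight memory can be estimated as parameter count times bits per weight. The sketch below uses the nominal 20B and 120B figures and ignores KV cache, activations, and runtime overhead, so treat the results as lower bounds. The function name is illustrative.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes.

    Excludes KV cache, activations, and runtime overhead (assumption:
    weights dominate the footprint at short context lengths).
    """
    bytes_total = params_billions * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for name, params in [("20B", 20), ("120B", 120)]:
        for bits in (4, 6, 16):
            gb = weight_memory_gb(params, bits)
            print(f"{name} at {bits}-bit: ~{gb:.0f} GB of weights")
```

Under these assumptions, the 20B model needs about 10 GB of weights at 4 bits and about 15 GB at 6 bits, which is why a 16 GB device leaves little headroom above 4-bit. The 120B model needs about 60 GB at 4 bits, which fits an 80 GB GPU, but about 90 GB at 6 bits, which does not.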

Tooling and compatibility

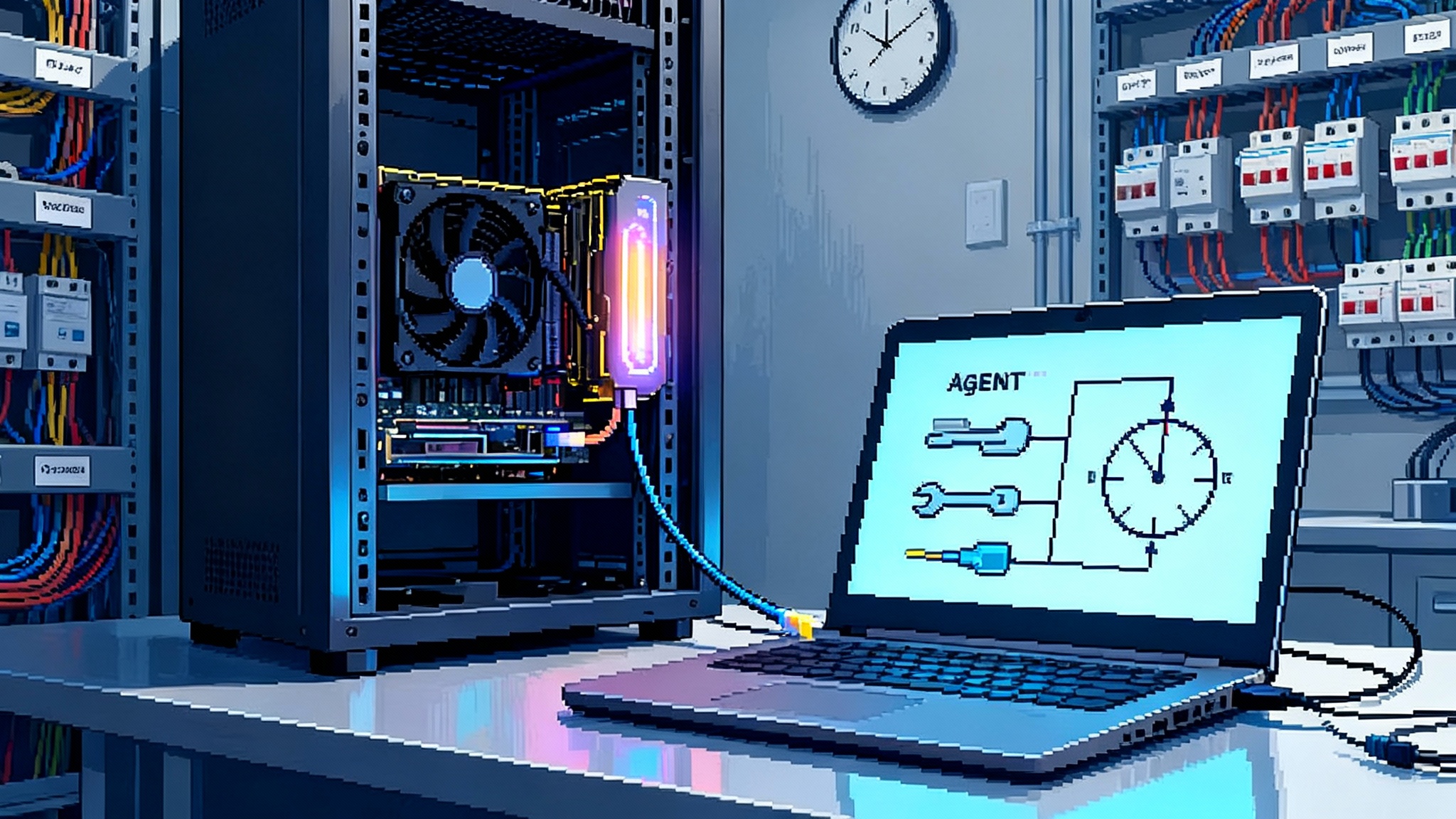

Teams can stand up GPT-OSS using vLLM for servers, Ollama for developer laptops and small servers, and llama.cpp for mobile and embedded builds. Hugging Face tooling remains useful for training adapters, evaluation harnesses, and dataset management.
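Because vLLM and Ollama both expose an OpenAI-compatible HTTP endpoint, one small client can target either. The following is a minimal sketch using only the standard library. The base URLs are the usual local defaults, and the model tag in the usage note is an assumption to check against your own deployment.

```python
import json
import urllib.request

# Assumed local defaults; adjust host and port to your deployment.
BACKENDS = {
    "vllm": "http://localhost:8000/v1",
    "ollama": "http://localhost:11434/v1",
}


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the first choice's text."""
    req = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, a call might look like `chat(BACKENDS["ollama"], "gpt-oss:20b", "Summarize this work order.")`. Swapping backends then means changing only the base URL and model name.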

Related trend: browser-based control surfaces are converging with agents. See browser-native agents and computer use.

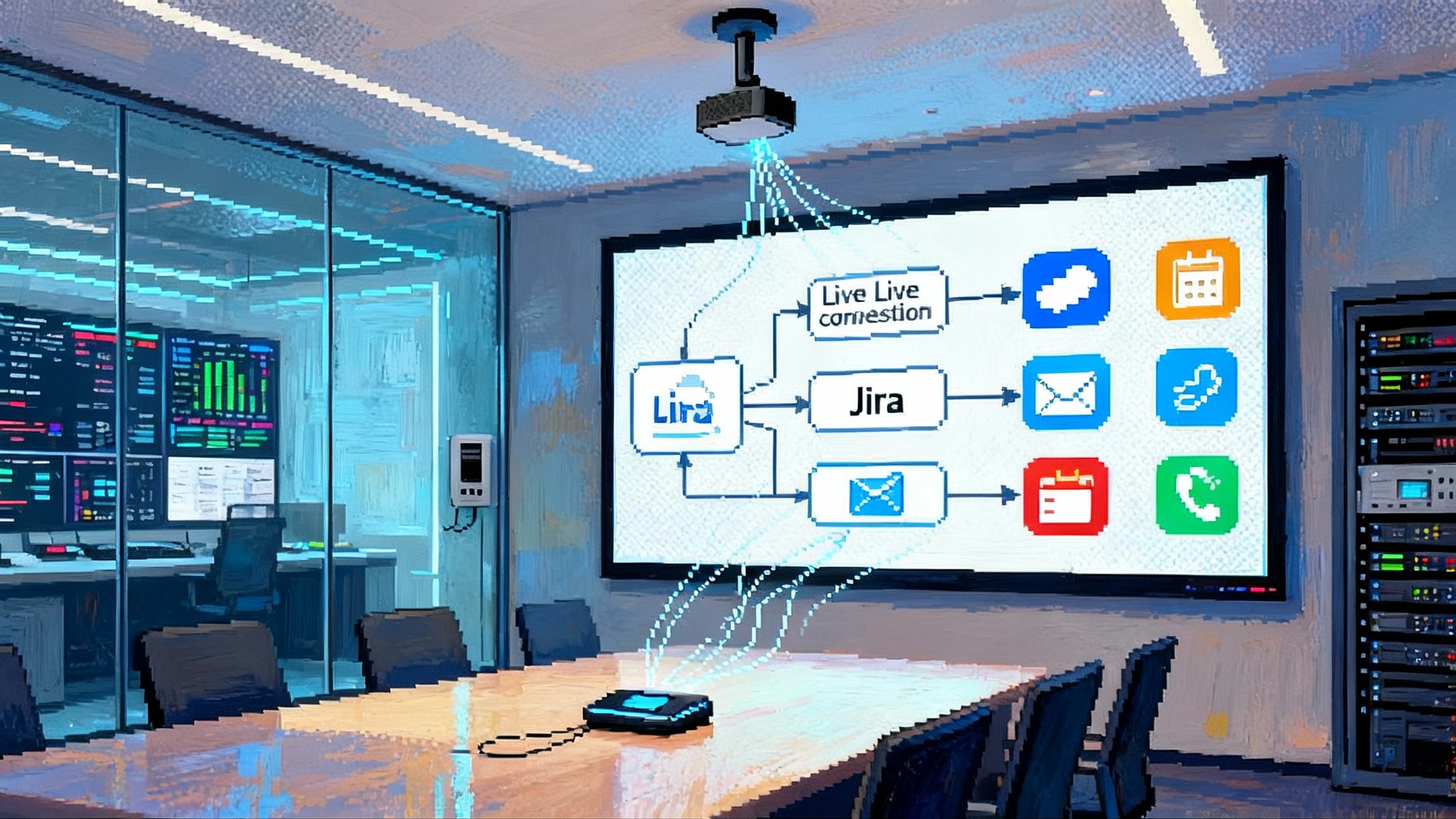

Agent-first design patterns

- Event-driven loops: sensors or business events trigger tool use, then LLM planning, then write-backs

- Local-first retrieval: vector stores or SQL on device, with cloud fallbacks only when needed

- Safety interlocks: deterministic policy checks before effectful actions, with human-in-the-loop for elevated scopes

- Identity and permissions: capability tokens and service principals enforced at the tool boundary
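The safety-interlock and permission patterns above can be sketched as a deterministic gate that sits between the model's proposed action and the tool itself. This is one possible shape, not a reference design. `Capability`, `guarded_call`, and the tool names are hypothetical.

```python
from dataclasses import dataclass


class PermissionDenied(Exception):
    """The principal's capability does not cover this tool."""


class ApprovalRequired(Exception):
    """The tool is in an elevated scope and needs human sign-off."""


@dataclass(frozen=True)
class Capability:
    principal: str                            # service principal the agent runs as
    allowed_tools: frozenset                  # tools this principal may call at all
    elevated_tools: frozenset = frozenset()   # subset that needs human approval


def guarded_call(cap, tool_name, tool_fn, args, approved=False):
    """Run deterministic policy checks, then invoke the tool.

    The checks never consult the model: they depend only on the
    capability, the tool name, and the explicit approval flag.
    """
    if tool_name not in cap.allowed_tools:
        raise PermissionDenied(f"{cap.principal} may not call {tool_name}")
    if tool_name in cap.elevated_tools and not approved:
        raise ApprovalRequired(f"{tool_name} needs human approval")
    return tool_fn(**args)
```

Keeping the gate outside the model means a prompt injection can change what the agent asks for, but not what the tool boundary will allow.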

For more on the enterprise angle, see "Windows backs MCP and identity."

Procurement implications

Open weights reshape RFPs and long-term contracts:

- Cost: reduced egress and per-token fees, predictable capex for edge GPUs or NPU devices

- Privacy and compliance: data stays on device or in VPC, easier mapping to sector controls

- Avoiding lock-in: swap models without rewriting the stack if you adhere to common inference APIs

A concise RFP template

Include these sections, and assign each a scoring weight that reflects your priorities:

- Models and licenses: weights, quantization support, fine-tuning rights

- Inference performance: tokens per second, cold start time, P95 latency under load

- Security: SBOM, signed weights, model provenance, attestation options

- Observability: structured logs, span traces, token accounting, red team hooks

- Interoperability: OpenAI-compatible APIs, OpenAPI specs for tools, MCP or equivalent

- Governance: audit trails, retention, policy packs, abuse monitoring
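One way to turn these sections into a comparable vendor score is a weighted average. The weights below are purely illustrative placeholders, not recommendations, and the 0 to 5 scoring scale is an assumption.

```python
# Hypothetical weights per RFP section; they sum to 100.
RFP_WEIGHTS = {
    "models_and_licenses": 15,
    "inference_performance": 25,
    "security": 20,
    "observability": 15,
    "interoperability": 15,
    "governance": 10,
}


def weighted_score(scores: dict, weights: dict = RFP_WEIGHTS) -> float:
    """Return the weighted average of per-section scores (assumed 0 to 5).

    Raises ValueError if a section is missing, so an incomplete
    evaluation cannot silently produce a score.
    """
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing section scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[k] * scores[k] for k in weights) / total_weight
```

Fixing the weights before vendors respond keeps the comparison honest, since the weighting cannot be tuned afterwards to favor a preferred bidder.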

Policy, safety, and evaluation

Adopt a layered evaluation plan:

- Red teaming: jailbreaks, prompt injection, data exfiltration

- Safety filters: content classification, tool permission allowlists

- Operational risk: rate limits, backoff, circuit breakers

- Alignment tests: scenario suites tailored to your domain
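For the operational-risk layer, a circuit breaker stops an agent from hammering a failing tool or inference endpoint. The class below is a minimal sketch with hypothetical names and thresholds. A production version would also need thread safety and metrics.

```python
import time


class CircuitOpen(Exception):
    """Calls are being rejected while the breaker cools down."""


class CircuitBreaker:
    def __init__(self, max_failures=3, reset_after_s=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None       # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                raise CircuitOpen("cooling down, call rejected")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single further failure reopens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0           # any success fully closes the circuit
        return result
```

Wrapping each effectful tool in its own breaker keeps one failing dependency from stalling the whole agent loop.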

Interoperability and standards

Prioritize interfaces that ease model swap and agent portability: OpenAI-compatible chat and tool calls, Model Context Protocol style adapters, and OpenAPI descriptions for tools. For networked agents, favor message schemas that survive across runtimes.
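To make the portability point concrete, here is a sketch of a tool declared in the OpenAI-compatible function-calling format, plus a dispatcher for the tool call a model returns. The tool `get_work_order` and its fields are hypothetical. The one detail that matters for interop is that the `arguments` field arrives as a JSON-encoded string.

```python
import json

# Tool description in the OpenAI-compatible "function" format.
# The parameters block is ordinary JSON Schema.
GET_WORK_ORDER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_work_order",
        "description": "Look up a field-service work order by ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}


def get_work_order(order_id: str) -> dict:
    """Hypothetical local implementation; a real one would query a database."""
    return {"order_id": order_id, "status": "open"}


TOOL_REGISTRY = {"get_work_order": get_work_order}


def dispatch(tool_call: dict):
    """Route one model-emitted tool call to its local implementation."""
    fn = TOOL_REGISTRY[tool_call["function"]["name"]]
    args = json.loads(tool_call["function"]["arguments"])  # JSON string -> dict
    return fn(**args)
```

Because the schema and dispatcher depend only on the wire format, the same tool definitions can be reused when the model behind the endpoint changes.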

Eighteen-month adoption curve

- Pilot, months 0 to 3: narrow tasks with measurable ROI, shadow production

- Edge rollout, months 4 to 9: expand to offline or low-connectivity sites, introduce safety interlocks

- Ecosystem turn, months 10 to 18: third party tools, model choices per use case, repeatable governance

30-, 60-, and 90-day enterprise plan

- Day 30: select 2 pilot tasks, stand up vLLM or Ollama, wire logs and metrics, baseline cost and latency

- Day 60: add retrieval and tool use, ship a red team report, implement policy guardrails, begin device trials for 20B

- Day 90: run A/B tests against production baselines, tune quantization, publish a procurement-ready RFP, prep rollout kits
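For the day 30 baseline and the day 90 comparison, tail latency is usually more informative than the mean. The sketch below computes a nearest-rank percentile and compares two sets of latency samples. The function names and the choice of the nearest-rank method are assumptions.

```python
import math


def percentile(samples, p: int):
    """Nearest-rank percentile: the smallest sample with at least p% at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]


def compare_latency(baseline_ms, candidate_ms, p: int = 95) -> dict:
    """Compare tail latency of a candidate deployment against the baseline."""
    base = percentile(baseline_ms, p)
    cand = percentile(candidate_ms, p)
    return {
        "percentile": p,
        "baseline_ms": base,
        "candidate_ms": cand,
        "candidate_is_faster": cand < base,
    }
```

Collect both sample sets under the same prompts and load, or the comparison will mostly measure the difference in workloads.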

Risks and mitigations

- Capability gaps: add tool use and retrieval to boost smaller models

- Compliance drift: map logs to audit requirements up front

- Supply constraints: qualify at least two hardware targets or cloud SKUs

- Model sprawl: standardize on one inference API and a small set of evals

Bottom line

GPT-OSS shifts AI from cloud-default to edge-friendly by design. If you adopt open weights with clear guardrails, you gain privacy, portability, and predictable cost while accelerating useful agent deployments.