Chat Is the New Runtime: AgentKit and Apps Start the War

OpenAI just turned chat into a first-class runtime. AgentKit brings production-grade agents, and the ChatGPT Apps SDK makes distribution native to conversations. The moat now shifts to data access, trust, and chat-native UX.

The moment chat became the place software runs

On October 6, 2025, OpenAI reframed what it means to ship software. The company introduced AgentKit, a toolkit for building production‑grade agents that can plan, call tools, and act on behalf of users, with built‑in evaluation and telemetry. It also unveiled a way for developers to build apps that live inside ChatGPT via a new Apps Software Development Kit (SDK). This is not a minor feature drop. It is a bid to make chat the primary runtime and distribution layer for artificial intelligence software. OpenAI’s AgentKit overview explains the building blocks, and its Apps SDK overview outlines how apps appear directly in ChatGPT.

If a mobile app is a little store on a home screen, a chat app is a street where people already gather. OpenAI’s move relocates the storefront and the cash register to that street. Instead of forcing users to switch contexts, open a tab, sign in, and learn yet another user interface, software comes to the conversation the user is already having. It also moves the moat toward identity, because access, consent, and permissions become the differentiator.

Put these together and you get a platform: agent capabilities and app packaging, bound by telemetry, governance, and a discovery surface. The result is simple to say and profound to absorb. The chat window is the operating surface. The models are the compute. Connectors are the drivers. Evals and telemetry are the observability stack. And the Apps SDK is how software arrives.

Why chat is becoming the runtime

Think of chat as a universal command line with training wheels. It combines three things that rarely coexist in software:

- Intent capture: users can express goals in normal language without navigating menus.

- Context carryover: the thread remembers prior steps, files, preferences, and constraints.

- Mixed initiative: the system can ask clarifying questions, propose options, and take actions.

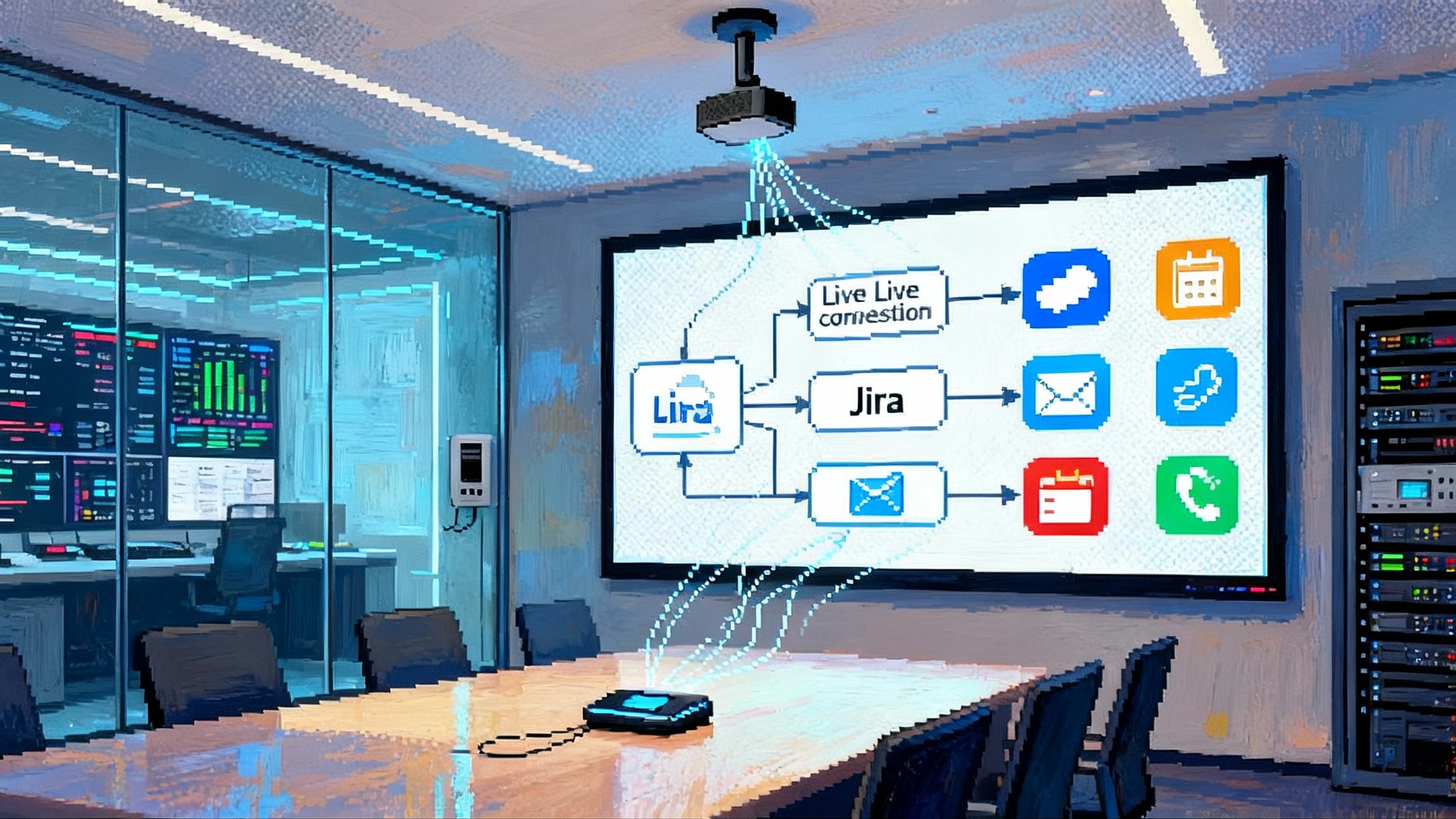

Traditional apps excel at precision but struggle with intent capture. Websites excel at distribution but lack context. Chat holds both. It is not just input and output. It is a loop that accumulates shared state. That loop makes it a natural runtime for agents that need to plan, fetch data, and act, while keeping a human in control. Adjacent surfaces such as meetings are evolving in the same direction and becoming agent operating systems in their own right.

For developers, this collapses two hard problems. You do not have to build a bespoke interface for every task, and you do not have to bootstrap distribution from zero. Your agent or app enters an ongoing conversation, gets the user’s consent for the right data and tool scopes, and then does the job. If it fails, the same chat thread is the debug console.

The new moat: data access, trust, and UX

When a platform standardizes models and hosting, differentiation shifts. With AgentKit, the ingredients for a competent agent are on the shelf: tool use, connector registry, evals, reinforcement fine‑tuning, and deployment patterns. Once those are available to everyone, raw model size matters less. Winning depends on three things.

- Data access and quality

Agents are hungry for accurate, timely data. Built‑in connectors turn what used to be months of integration work into a configuration exercise. The team that can legally, safely, and quickly route the right data to the right agent wins. That includes unglamorous tasks like normalizing fields across systems of record, implementing row‑level permissions, and scoping read and write access.

Mechanism: connectors and tool calls formalize how an agent touches data. They create a contract between the model and external systems. If that contract is well designed, you can swap models without re‑plumbing your company.

Implication: proprietary datasets and permissioned access become a network effect. Every successful use case enriches the traces you can train and evaluate on, which in turn compounds performance.
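One way to picture the contract described above is a small, model-agnostic tool definition that is checked before any handler touches data. This is a minimal sketch in plain Python, not AgentKit's API: `ToolContract`, `call_tool`, the `crm:read` scope name, and the in-memory account store are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, FrozenSet


@dataclass(frozen=True)
class ToolContract:
    """Hypothetical contract between a model and one external system."""
    name: str
    required_params: FrozenSet[str]
    scopes: FrozenSet[str]        # scope names such as "crm:read" are made up
    handler: Callable[..., Any]   # the code that actually touches the data


def call_tool(contract: ToolContract, granted_scopes: FrozenSet[str],
              args: Dict[str, Any]) -> Any:
    """Validate a model-proposed call against the contract, then run it."""
    missing_scopes = contract.scopes - granted_scopes
    if missing_scopes:
        raise PermissionError(f"missing scopes: {sorted(missing_scopes)}")
    missing_params = contract.required_params - set(args)
    if missing_params:
        raise ValueError(f"missing params: {sorted(missing_params)}")
    return contract.handler(**args)


# Illustrative in-memory stand-in for a system of record.
_ACCOUNTS = {"acme": {"owner": "dana", "tier": "enterprise"}}

lookup_account = ToolContract(
    name="lookup_account",
    required_params=frozenset({"account_id"}),
    scopes=frozenset({"crm:read"}),
    handler=lambda account_id: _ACCOUNTS.get(account_id),
)

account = call_tool(lookup_account, frozenset({"crm:read"}), {"account_id": "acme"})
```

Because the check lives in `call_tool` and not in a prompt, the same contract could sit behind any model that emits a tool name and arguments, which is the portability the paragraph above is pointing at.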

- Trust and safety

Evals and telemetry change trust from a slogan into a score. If you measure failure modes like hallucination rate, tool call precision, or escalation rate to a human, you can tune for reliability. A robust evaluation harness lets you gate releases and roll back regressions before customers notice. Telemetry closes the loop in production.

Mechanism: eval suites act like unit and integration tests for agents. Telemetry works like observability for microservices. Together they turn agents from art projects into systems with service‑level objectives.

Implication: vendors that can prove reliability with live metrics, not just benchmarks, will win enterprise deals. Trust becomes a real moat because it is earned in production under constraints.
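To make "a score, not a slogan" concrete, here is a rough sketch of how scenario results might be rolled up into metrics and used as a release gate. The `ScenarioResult` fields, the sample scenarios, and the threshold values are assumptions for illustration, and tool call accuracy here is a simple exact-match rate, not a full precision calculation.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ScenarioResult:
    """Outcome of one eval scenario (field names are illustrative)."""
    scenario: str
    expected_tool: str
    called_tool: str
    task_succeeded: bool
    escalated: bool


def summarize(results: List[ScenarioResult]) -> Dict[str, float]:
    """Aggregate per-scenario outcomes into three rates between 0 and 1."""
    n = len(results)
    if n == 0:
        raise ValueError("no eval results to summarize")
    return {
        "success_rate": sum(r.task_succeeded for r in results) / n,
        "tool_call_accuracy": sum(r.called_tool == r.expected_tool for r in results) / n,
        "escalation_rate": sum(r.escalated for r in results) / n,
    }


def release_allowed(metrics: Dict[str, float], thresholds: Dict[str, float]) -> bool:
    """Gate a deploy: quality metrics need a floor, escalation needs a ceiling."""
    return (
        metrics["success_rate"] >= thresholds["min_success_rate"]
        and metrics["tool_call_accuracy"] >= thresholds["min_tool_call_accuracy"]
        and metrics["escalation_rate"] <= thresholds["max_escalation_rate"]
    )


# Made-up results for four scenarios.
results = [
    ScenarioResult("refund request", "lookup_order", "lookup_order", True, False),
    ScenarioResult("angry customer", "lookup_order", "lookup_order", True, True),
    ScenarioResult("typo in order id", "lookup_order", "search_orders", False, False),
    ScenarioResult("address change", "update_address", "update_address", True, False),
]
metrics = summarize(results)
thresholds = {"min_success_rate": 0.9, "min_tool_call_accuracy": 0.9, "max_escalation_rate": 0.3}
can_ship = release_allowed(metrics, thresholds)  # False: one scenario failed
```

Running the same summary over production traces would give the live counterpart of these numbers, which is where the service-level objective framing comes from.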

- User experience inside chat

The Apps SDK defines how interactive surfaces appear in a thread. That puts user experience design back in the spotlight. Good agent UX is not just a pretty panel. It is clarifying questions, explicit consent prompts, precise summaries of actions taken, and clean fallbacks when the agent is unsure. In chat, you do not get a full screen to distract users. You get one message at a time. Clarity wins.

Mechanism: chat‑native components enforce consistent patterns like inline forms, result tables, and approval prompts. They make good UX the default, not an afterthought.

Implication: in this platform, the best experiences will feel like working with a skilled colleague who knows your tools and your data, not like wrangling an app.

What to ship in the next 90 days

The window to establish a foothold is now. Here is a practical build list for both startups and enterprises.

For developers and startups

- A single sharp app inside ChatGPT

- Pick a painful, high‑frequency task where chat context helps. Examples: customer email triage with templated replies, post‑meeting action item extraction, spreadsheet cleanup from pasted data.

- Scope one or two connectors maximum. Favor systems with clean application programming interfaces and strong OAuth flows.

- Ship a minimal interactive surface with an explicit approval step before any write action.

- An agent that does background work

- Use AgentKit’s tool calling to chain several steps end to end. Example: pull a support ticket, summarize its history, draft a response, and open a pull request to update a knowledge base.

- Add a human‑in‑the‑loop checkpoint. If the agent is more than 80 percent confident, request approval. Otherwise escalate with a crisp summary.

- A real eval harness

- Write 50 to 100 scenario tests that mirror how customers actually speak. Include messy inputs and trick cases. Track success rate, tool call correctness, and time to completion.

- Run evals on every change and block deploys if scores drop below a threshold.

- Production telemetry from day one

- Log tool calls, connector latencies, error codes, and user approvals. Build a dashboard that shows success rate by customer, by scenario, and by model version.

- Set alerts for unusual patterns like repeated permission prompts or high cancellation rates.

- Consent and scope as features

- Design your first‑run experience around permission clarity. Explain what the app can see and do, and what it never accesses.

- Offer granular scopes and a clear off switch. Make it easy to revoke access.

- Pricing experiments that match value

- Try metered pricing by successful task, not by token. Offer a free tier with limits that encourage upgrade after a small number of wins.

- Add a team plan that unlocks shared connectors and shared eval dashboards.

- Distribution inside chat

- Choose a name that is short, pronounceable, and easy to type. Provide two one‑sentence prompts users can copy to invoke your app in context.

- Optimize your first message. The opening exchange is your landing page.

- A portability story

- Document your connectors and tool schemas so an enterprise buyer knows they are not locked in. Portability builds trust and shortens sales cycles.
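The human‑in‑the‑loop checkpoint and the approval step before write actions from the list above can be sketched in a few lines. This is an illustration under assumptions: `ProposedAction`, `route`, and `execute` are hypothetical names, and the confidence value is assumed to be a calibrated score between 0 and 1, which real agents do not provide for free.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    REQUEST_APPROVAL = "request_approval"
    ESCALATE = "escalate"


@dataclass
class ProposedAction:
    description: str
    confidence: float  # assumed to be a calibrated score in [0, 1]
    is_write: bool


def route(action: ProposedAction, threshold: float = 0.80) -> Route:
    """Above the threshold, ask the user to approve; otherwise hand off to a human."""
    if not 0.0 <= action.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if action.confidence > threshold:
        return Route.REQUEST_APPROVAL
    return Route.ESCALATE


def execute(action: ProposedAction, approved_by: Optional[str] = None) -> str:
    """Run the action; write actions refuse to run without a named approver."""
    if action.is_write and not approved_by:
        raise PermissionError("write action requires explicit approval")
    return f"executed: {action.description}"


draft = ProposedAction("send templated reply to ticket", confidence=0.91, is_write=True)
decision = route(draft)                      # Route.REQUEST_APPROVAL
outcome = execute(draft, approved_by="sam")  # runs only after approval
```

Keeping the approval check inside `execute` means a routing bug cannot silently turn into an unapproved write.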

For enterprises

- Start with one internal agent that pays for itself in 30 days

- Pick a function with clear metrics. Examples: sales research preparation, vendor risk intake, IT help desk triage.

- Pair a business owner with a platform owner. The business owner defines quality. The platform owner enforces governance.

- Establish a connector registry with least‑privilege defaults

- Centralize identity, secrets, and domain restrictions. Require approval for write scopes. Segment test and production data.

- Implement row‑level access based on user identity propagated through the agent.

- Build an eval culture

- Write evals that mirror your policies. For example, an agent should never email external recipients without an approval chain. Codify that as a failing test.

- Track fairness and privacy metrics alongside accuracy. Include masked data scenarios in evals.

- Telemetry, audit, and rollback

- Log every tool call with inputs, outputs, and approval IDs. Retain traces with appropriate data loss prevention rules.

- Set a standard for safe rollbacks. If a new model or prompt worsens outcomes, revert in minutes, not days.

- Human‑in‑the‑loop by design

- Define when agents must escalate. For legal or financial actions, require a human signature. For routine classifications, allow auto‑approve with spot checks.

- Procurement and legal readiness

- Update data processing addenda to cover agent actions, connector scopes, and cross‑border data handling.

- Map your regulator’s expectations to your telemetry and audits. Be ready to produce evidence, not anecdotes.
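The advice above to codify policy as a failing test might look roughly like this. The trace format, the `send_email` tool name, and the `example.com` internal domain are assumptions for illustration, and a single `approval_id` stands in for what would be a fuller approval chain in practice.

```python
from typing import Any, Dict, List, Optional

INTERNAL_DOMAIN = "example.com"  # placeholder for the company's own domain


def violates_email_policy(recipients: List[str], approval_id: Optional[str]) -> bool:
    """True when any recipient is external and no approval is attached."""
    has_external = any(
        not r.lower().endswith("@" + INTERNAL_DOMAIN) for r in recipients
    )
    return has_external and approval_id is None


def policy_violations(trace: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return the send_email tool calls in a trace that break the policy."""
    return [
        call for call in trace
        if call["tool"] == "send_email"
        and violates_email_policy(call["args"]["to"], call.get("approval_id"))
    ]


# A made-up agent trace: one internal email, one approved external email,
# and one external email with no approval attached.
trace = [
    {"tool": "send_email", "args": {"to": ["ops@example.com"]}},
    {"tool": "send_email", "args": {"to": ["buyer@partner.org"]}, "approval_id": "APR-17"},
    {"tool": "send_email", "args": {"to": ["lead@prospect.io"]}},
]
violations = policy_violations(trace)  # only the third call is flagged
```

An eval suite would then assert that `policy_violations` returns an empty list for every scenario, so any unapproved external email fails the build.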

Collision course with cloud and mobile marketplaces

If chat is where software runs, distribution power shifts. For a decade, the public cloud marketplaces of Amazon Web Services and Microsoft Azure have been the dominant enterprise distribution channels. They plug into procurement, offer private discounts, and bundle spend commitments. Meanwhile, Apple’s App Store and Google Play own consumer discovery and payments on mobile.

Agent apps in chat do not replace these marketplaces overnight. They do create a new layer on top of them. The cloud still hosts the data and the backends. But the first meaningful touch with the user happens inside the chat thread. That is where intent is captured, consent is granted, and value is demonstrated.

Expect three near‑term tensions:

- Billing and bundle pressure: enterprises will ask whether agent apps can be billed through existing cloud commitments. Vendors that make this easy will win procurement faster.

- Policy alignment: mobile store rules were built for screens and taps, not tools that email customers or edit databases. Expect new review guidelines for agent behaviors, consent prompts, and logging.

- Search and ranking: app discovery inside chat will favor relevance and reliability. Vendors with better eval scores and clearer prompts will rank higher. This rewards operational excellence, not just marketing spend.

The play for cloud providers and mobile platforms is to lean in. Expect tighter integrations between chat platforms and marketplace identities, spend controls, and security reviews. Expect mobile platforms to expose deeper Siri and Assistant invocation hooks that route to agent apps. The boundaries will blur, accelerating the arrival of the ambient agent era.

Risks and how to govern them as ecosystems scale

New platforms attract new failure modes. Treat the following as a starter risk register with concrete controls.

- Over‑permissioning and data exfiltration

- Control: scope tokens that map to least privilege, enforced in the connector layer. Short‑lived tokens with automatic rotation. Redaction by default for sensitive fields.

- Prompt injection and tool misuse

- Control: define a strict tool call schema with whitelisted parameters and rate limits. Use content filters on inputs and outputs. Add a dry‑run mode that shows intended actions before execution.

- Silent failures and regressions

- Control: eval gates on every deploy, with canary rollouts and automated rollback. Track regression budgets like an engineering org tracks error budgets.

- Supply chain and app impersonation

- Control: signed app packages, reproducible builds, verified publisher badges, and runtime checks that the app is calling only approved connectors.

- Cost blowouts

- Control: budget alerts by scenario and by customer. Hard caps per thread. Favor models that meet the task’s quality bar, not the biggest model available.

- Vendor lock‑in

- Control: standardize tool and connector schemas so you can switch models or platforms without rewriting the business logic. Keep an internal mirror of prompts and evals.

- Human factors and misuse

- Control: clearly visible consent prompts, undo buttons, and audit trails that individuals can review. Training for staff on what an agent can and cannot do.
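As one illustration of the prompt injection controls in the register above, the sketch below combines an allowlisted tool schema, a sliding-window rate limit, and a dry-run mode. Everything here is hypothetical: the `update_ticket` tool, its parameters, and the limits are invented for the example, and a real deployment would enforce these checks in the connector layer, not in application code alone.

```python
import time
from collections import deque
from typing import Any, Deque, Dict, Optional

# Allowlist: tool name -> parameter name -> required Python type (all hypothetical).
TOOL_SCHEMAS: Dict[str, Dict[str, type]] = {
    "update_ticket": {"ticket_id": str, "status": str},
}


def validate_call(tool: str, args: Dict[str, Any]) -> None:
    """Reject unknown tools, unexpected parameters, and wrong or missing types."""
    schema = TOOL_SCHEMAS.get(tool)
    if schema is None:
        raise ValueError(f"tool not allowed: {tool}")
    unknown = set(args) - set(schema)
    if unknown:
        raise ValueError(f"unexpected parameters: {sorted(unknown)}")
    for name, expected in schema.items():
        if not isinstance(args.get(name), expected):
            raise ValueError(f"parameter {name!r} must be {expected.__name__}")


class RateLimiter:
    """Allow at most max_calls within any window of window_seconds."""

    def __init__(self, max_calls: int, window_seconds: float) -> None:
        self.max_calls = max_calls
        self.window = window_seconds
        self.calls: Deque[float] = deque()

    def allow(self, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()  # drop calls that fell out of the window
        if len(self.calls) >= self.max_calls:
            return False
        self.calls.append(now)
        return True


def run_tool(tool: str, args: Dict[str, Any], limiter: RateLimiter,
             dry_run: bool = True) -> str:
    """Validate first, preview by default, and rate-limit real executions."""
    validate_call(tool, args)
    if dry_run:
        return f"DRY RUN: would call {tool} with {args}"
    if not limiter.allow():
        raise RuntimeError("rate limit exceeded")
    return f"called {tool}"  # a real side effect would happen here


limiter = RateLimiter(max_calls=5, window_seconds=60)
preview = run_tool("update_ticket", {"ticket_id": "T-1", "status": "closed"}, limiter)
```

Defaulting `dry_run` to `True` is a deliberate choice in this sketch: the caller has to opt in to side effects, which pairs naturally with an approval prompt in the chat thread.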

A practical playbook for builders

- Choose the job, not the model. Define a narrow workflow where an agent can complete a multi‑step task end to end with clear success criteria.

- Start telemetry on day one. If you do not measure tool call accuracy and escalation rate, you will not know when you are improving.

- Make consent a first‑class surface. Show users what will happen before it happens. Use simple language and a visible off switch.

- Build evals before polish. A reliable ugly app beats a pretty unreliable one every time.

- Design for handoffs. Agents should hand off cleanly to humans, to other agents, or to external systems. Treat handoffs as part of the user experience.

- Keep your connectors clean. Normalize schemas. Write tests for edge cases like empty fields, timeouts, and mismatched encodings.

- Document your portability. Enterprises will ask. If you can answer in one page, you will win deals faster.
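The "keep your connectors clean" item above lends itself to a small example. The sketch below normalizes records from two imaginary source systems into one schema and handles empty fields and mismatched encodings; the field aliases and the Latin-1 fallback are assumptions, and timeouts are left out because they depend on the client library in use.

```python
from typing import Any, Dict, Optional

# Hypothetical mapping from source field names to one canonical schema.
FIELD_ALIASES = {
    "Email": "email",
    "e_mail": "email",
    "FullName": "name",
    "full_name": "name",
}


def to_text(value: Any) -> Optional[str]:
    """Decode bytes, trim whitespace, and turn empty values into None."""
    if value is None:
        return None
    if isinstance(value, bytes):
        try:
            value = value.decode("utf-8")
        except UnicodeDecodeError:
            # Fallback that cannot fail, though it may mis-decode other encodings.
            value = value.decode("latin-1")
    text = str(value).strip()
    return text or None


def normalize_record(raw: Dict[str, Any]) -> Dict[str, Optional[str]]:
    """Map known aliases onto canonical fields and ignore everything else."""
    record: Dict[str, Optional[str]] = {"email": None, "name": None}
    for key, value in raw.items():
        canonical = FIELD_ALIASES.get(key)
        if canonical:
            record[canonical] = to_text(value)
    return record


# Edge cases written as plain assertions: empty field, padded value, legacy bytes.
assert normalize_record({"Email": "  a@b.com ", "FullName": ""}) == {"email": "a@b.com", "name": None}
assert normalize_record({"full_name": "José".encode("latin-1")})["name"] == "José"
```

Tests like the two assertions at the end are cheap to write per connector and catch the unglamorous failures before an agent ever sees the data.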

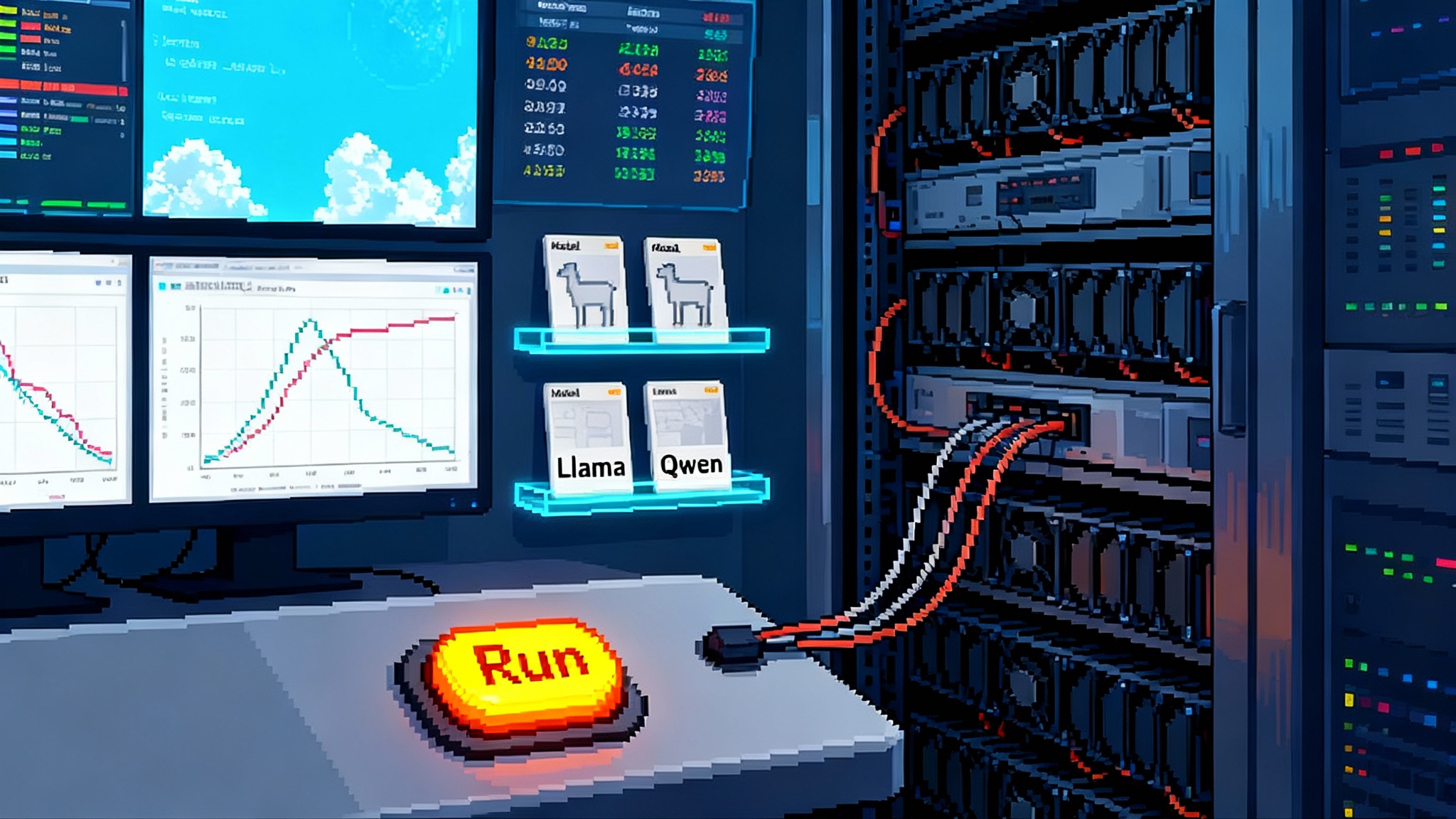

The end of model maximalism

For the last two years, strategy often meant buying the biggest model and hoping the rest would work out. That era is ending. With AgentKit’s production building blocks and apps that live inside ChatGPT, the competition shifts to who can safely route the best data, earn the most trust, and design the clearest chat experience under real constraints.

This is what a platform transition looks like in the wild. The browser wave in the 1990s moved software from shrink‑wrap to web pages. The mobile wave moved it to icons on a home screen. The agent wave moves it into conversations. If you are a developer, your next release should assume the chat thread is where your product meets its user. If you are an enterprise, your next governance document should assume agents are colleagues who need permissions, supervision, and performance reviews.

The competition is not about who has the largest model. It is about who earns the right to act on a user’s behalf, inside the place they already work and talk. That makes chat the new runtime. It makes trust the new platform tax. And it makes the next 90 days the best time to plant your flag.