Durable AI Agents Arrive: Claude 4.5’s 30‑Hour Shift

Anthropic’s September launch of Claude Sonnet 4.5, Claude Code 2.0, and a new Agent SDK pushes agents from chatty helpers to shift-length workers. Learn how long-horizon autonomy works, what to pilot first, and how to keep it safe.

The moment durable agents became real

On September 29, 2025, Anthropic said its new Claude Sonnet 4.5 kept focus on complex, multi-step work for more than 30 hours, and shipped it alongside major upgrades to Claude Code and a new Claude Agent SDK. That claim is not about a flashy demo. It is about staying on task through hundreds of tool calls, environment changes, and distractions, long enough to matter for real jobs. In Anthropic’s write-up, the company pairs the model with context editing, a memory tool, and an agent framework built for permissions and checkpoints, then reports that the system held coherence for a full working day plus overtime. The Anthropic Sonnet 4.5 announcement sets a new bar for what an agent can do without a human nudging it every few minutes.

If you squint, this is the upgrade from “assistant” to “shift worker.” Assistants chat, answer, and draft. Shift workers clock in, execute a plan, and hand off cleanly. The difference is durability: goal consistency across time, tools, and interruptions.

From assistants to shift-length workers

Durability is the ability to maintain intent and quality across long horizons. It requires three ingredients:

- A stable working memory that carries the plan and state forward across hours.

- A way to summarize and store knowledge that will still be useful tomorrow.

- A runtime that limits drift, confirms outcomes, and recovers from errors.

Claude Sonnet 4.5’s 30-hour result matters because it signals progress on all three. The model can keep its head while the scaffolding keeps its hands steady.

Picture a capable junior engineer taking an overnight shift. They triage a queue of issues, reproduce a bug, write a fix, run tests, and open a pull request. They do not ask you to restate the goal every ten minutes. They leave a summary at the end of the shift. Durable agents promise the same cadence.

What just shipped, in plain terms

Here is the stack Anthropic put on the table:

- Claude Sonnet 4.5. A frontier model tuned for long, tool-heavy work. The headline is sustained task focus over a full day.

- Claude Code 2.0. A reworked command-line and editor companion with checkpoints and a refreshed terminal experience. Checkpoints are save points for the agent’s progress, so you can roll back bad branches without losing the whole shift.

- Claude Agent SDK. Production primitives for building agents the way Anthropic built Claude Code. This is not just a chat API. It is a set of controls to manage memory, permissions, subagents, and recovery.

- Context editing and memory tool. Automatic housekeeping that clears stale tool outputs before the context fills up, and a file-based memory that persists key facts and decisions outside the context window. Together they extend how long an agent can run without losing the plot. For background on why memory matters, see our take on memory as the new data layer.

Taken together, this turns long-running autonomy from a prompt-engineering parlor trick into an operational pattern you can deploy.
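Anthropic's memory tool has its own interface, and the sketch below does not reproduce it. It only illustrates the file-based idea under stated assumptions: a hypothetical `FileMemory` class keeps one JSON file per topic in a folder, refuses entries that lack an evidence link, and prunes the oldest notes so the file stays short.

```python
import json
from dataclasses import dataclass, asdict
from pathlib import Path


@dataclass
class MemoryEntry:
    topic: str       # e.g. "auth-middleware"; assumed to be filename-safe
    note: str        # a short decision or finding
    evidence: list   # links to commits, logs, or test runs backing the note


class FileMemory:
    """Hypothetical file-based memory: one JSON file per topic in a folder."""

    def __init__(self, root: Path, max_entries_per_topic: int = 20):
        self.root = Path(root)
        self.root.mkdir(parents=True, exist_ok=True)
        self.max_entries = max_entries_per_topic

    def _path(self, topic: str) -> Path:
        return self.root / f"{topic}.json"

    def read(self, topic: str) -> list:
        path = self._path(topic)
        if not path.exists():
            return []
        return [MemoryEntry(**e) for e in json.loads(path.read_text())]

    def write(self, entry: MemoryEntry) -> None:
        # Hygiene rule: no evidence link, no memory entry.
        if not entry.evidence:
            raise ValueError("memory entries must link to evidence")
        # Append, then keep only the newest entries so the file never bloats.
        entries = (self.read(entry.topic) + [entry])[-self.max_entries:]
        self._path(entry.topic).write_text(
            json.dumps([asdict(e) for e in entries], indent=2)
        )
```

Because the notes live on disk rather than in the prompt, a new session can call `read("auth-middleware")` the next morning and pick up the decisions without replaying yesterday's transcript.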

Inside the new agent stack

Think of the agent as a careful shift worker inside a controlled workshop.

- Planning bench. The model drafts a plan, decomposes it into steps, and keeps a running log. Plans are not just paragraphs. They are checklists that get ticked off, amended, or re-scoped as evidence comes in.

- Tool belt. Bash, file edit, test runners, browsers, spreadsheets, and service connectors. The agent does not guess. It executes and reads real outputs.

- Checkpoints rack. Every meaningful milestone captures a snapshot: the working tree, the tests, the plan, and the evidence. If the agent wanders, you branch from the last good point.

- Memory cabinet. Design decisions, off-path findings, and resolved edge cases get written to a persistent memory folder so the agent can pick up tomorrow where it left off.

- Permissions panel. Sensitive actions require approval. You can set budget, scope, and time caps, plus human sign-offs for high-risk moves like changing infrastructure.

- Debrief station. Agents finish a shift with a crisp handoff: what changed, what is left, risks, and links to artifacts.

Engineers will recognize these parts. Durable agents are opinionated about the boring details of real work. That is the point.
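To make the checkpoints rack concrete, here is a minimal sketch of branch-and-rollback. The `CheckpointRack` name and the dict-shaped state are illustrative assumptions; Claude Code's own checkpoints work differently, and a production version would snapshot the working tree (for example as git commits) rather than a Python dict.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class Checkpoint:
    label: str
    state: dict        # plan, working files, test results, evidence links
    tests_green: bool  # only green checkpoints are safe to branch from


@dataclass
class CheckpointRack:
    """Hypothetical in-memory checkpoint store for one agent run."""
    history: list = field(default_factory=list)

    def save(self, label: str, state: dict, tests_green: bool) -> None:
        # Deep-copy so later edits by the agent cannot alter the snapshot.
        self.history.append(Checkpoint(label, copy.deepcopy(state), tests_green))

    def rollback(self) -> dict:
        # Walk back to the most recent green checkpoint, discard everything
        # after it, and hand the agent a fresh copy of that state.
        for i in range(len(self.history) - 1, -1, -1):
            if self.history[i].tests_green:
                del self.history[i + 1:]
                return copy.deepcopy(self.history[i].state)
        raise LookupError("no green checkpoint to roll back to")
```

The design choice that matters is the deep copy: a checkpoint is only useful if nothing the agent does afterward can quietly change it.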

Why durability is different from more context

A million tokens of context helps, but it is not the same as durability. Long horizons need garbage collection and state hygiene. Context editing removes stale tool outputs so the model sees current signals. Memory files preserve decisions without bloating the prompt. Checkpoints make recovery cheap. These mechanics, not just raw context size, turn a long conversation into a long job.

A helpful metaphor is a road trip. A bigger gas tank lets you drive farther without stopping. Durability is cruise control, a navigation app that re-routes around traffic, a glove compartment with printed directions if your phone dies, and friends in the car checking the map. You only reach the destination when those pieces work together.
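The garbage-collection half of that picture can be sketched in a few lines. This is not Anthropic's context-editing API, which runs as a configurable platform feature; it is a hypothetical client-side function that keeps only the most recent tool outputs verbatim and replaces older ones with a placeholder, while leaving user and assistant turns (and therefore the plan) untouched.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str     # "user", "assistant", or "tool"
    content: str


PLACEHOLDER = "[tool output cleared; see run log]"


def clear_stale_tool_outputs(messages: list, keep_last: int = 3) -> list:
    """Return a new transcript in which only the newest `keep_last` tool
    outputs keep their full content; older tool outputs become a placeholder."""
    tool_positions = [i for i, m in enumerate(messages) if m.role == "tool"]
    # Slicing with [:-0] would keep nothing stale, so handle keep_last=0 explicitly.
    stale = set(tool_positions[:-keep_last]) if keep_last > 0 else set(tool_positions)
    return [
        Message(m.role, PLACEHOLDER) if i in stale else m
        for i, m in enumerate(messages)
    ]
```

The placeholder points to the run log, so the full output is still available for auditing even though the model no longer carries it in context.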

Concrete use cases that unlock with 30 hours

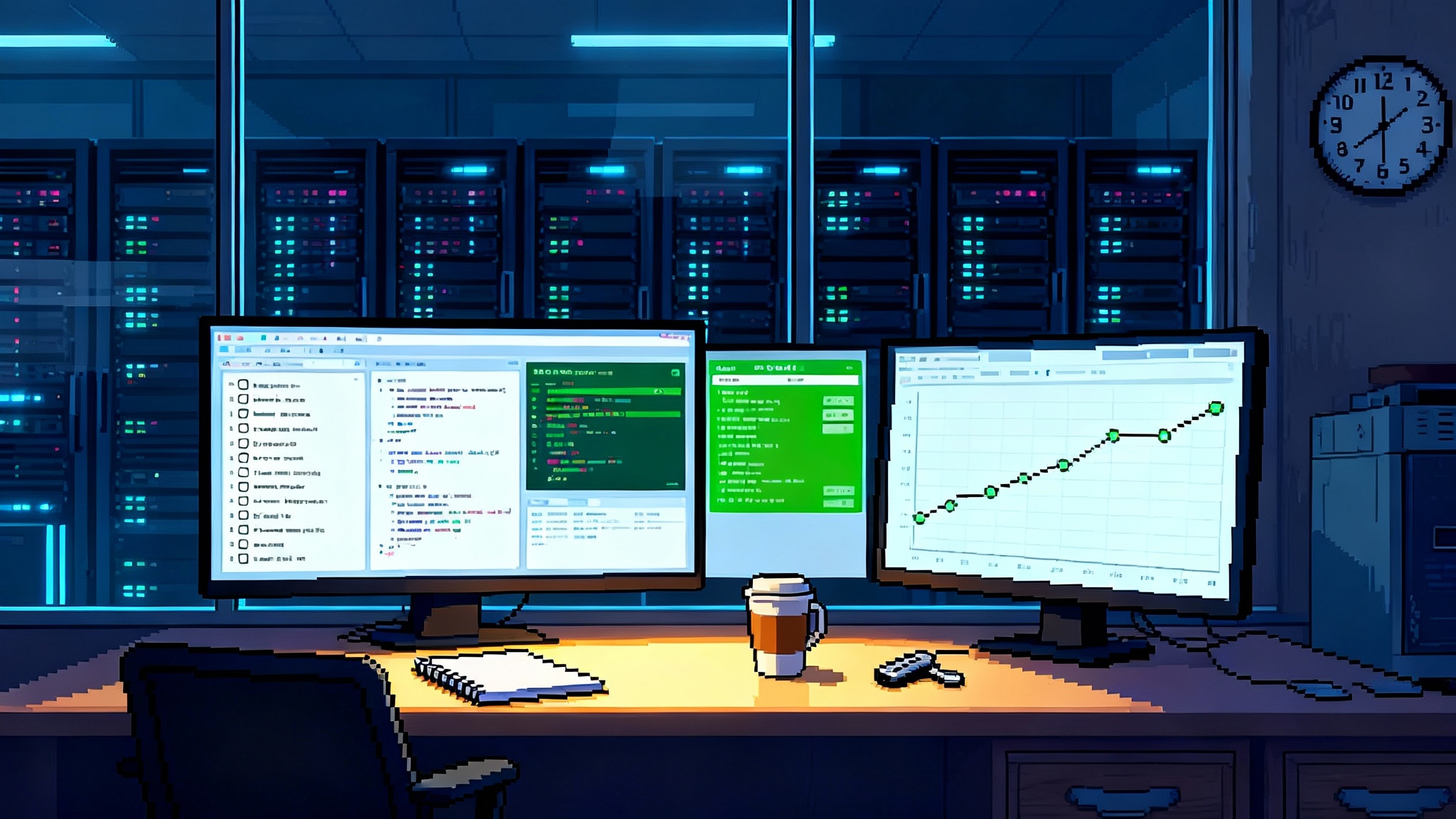

- Multi-day refactors. The agent ingests the codebase, writes safety tests, proposes an architecture, and edits files in batches. It runs the full test suite overnight, bisects failures, and continues with a clean branch in the morning.

- Compliance runs. The agent assembles the current policy set, scans repositories and configurations, opens issues for gaps, drafts remediation pull requests, and prepares evidence for auditors. It handles drift by rescanning just the deltas each shift. For context on market direction, see this agentic compliance operations model.

- Research builds. The agent scopes a prototype, pulls primary sources, extracts facts to a working memory, writes a design doc, and assembles a skunkworks demo. It does not forget why you pivoted on page seven because the rationale is in memory.

- Data quality sweeps. The agent runs profiling jobs, flags anomalies, proposes validation checks, and files pull requests to add tests to pipelines. It tracks what it tried and why.

- Customer support automation. The agent reviews unresolved tickets, drafts responses, files product bugs with repro steps, and assembles a weekly postmortem with common root causes.
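"Rescanning just the deltas" in the compliance example can be as simple as fingerprinting files between shifts. The sketch below assumes the scanned artifacts are local files and uses SHA-256 content hashes; the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def fingerprint(paths) -> dict:
    """Map each file path to the SHA-256 digest of its contents."""
    return {str(p): hashlib.sha256(Path(p).read_bytes()).hexdigest() for p in paths}


def delta(previous: dict, current: dict):
    """Compare two fingerprints taken at the start of consecutive shifts.

    Returns (to_rescan, removed): files that are new or changed and need a
    fresh compliance scan, and files that disappeared, whose open findings
    may need to be closed or re-checked.
    """
    to_rescan = sorted(p for p, digest in current.items() if previous.get(p) != digest)
    removed = sorted(set(previous) - set(current))
    return to_rescan, removed
```

Storing the previous fingerprint in the agent's memory folder lets the next shift start from the delta instead of the whole estate.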

These are not single-turn tasks. They are shift-length loops with tooling, evidence, and handoffs. That is where durability pays off.

What is still brittle

Long-horizon autonomy does not remove the hard parts. Today’s systems still struggle with:

- Tool flakiness. Agents can over-trust logs or miss silent failures. Guard with idempotent commands, structured outputs, and retries.

- Prompt injection. When agents browse or read untrusted content, hidden instructions can subvert goals. Sanitize inputs, constrain selectors, and apply allow-lists for domains.

- Drift over time. Summaries can compress away critical details. Require evidence links in memory entries and pin key artifacts at checkpoints.

- Non-determinism. Multi-hour runs can diverge. Lock versions, pin seeds where possible, and compare diffs at each checkpoint.

- Environment churn. Browsers update, package mirrors lag, and test data moves. Use reproducible containers and hermetic build steps.

- Cost discipline. Long shifts can run up compute bills. Enforce budgets per run and per tool, and prefer batch evaluation to chat loops.
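Per-run and per-tool budgets are straightforward to enforce if every tool call is charged an estimated cost before it runs. The sketch below is a hypothetical tracker, not part of any SDK; the units are whatever you bill in.

```python
from collections import defaultdict


class BudgetExceeded(RuntimeError):
    pass


class Budget:
    """Hypothetical spend tracker: one cap for the whole run, plus optional
    caps for individual tools (e.g. a tighter cap on browsing)."""

    def __init__(self, run_cap: float, tool_caps: dict = None):
        self.run_cap = run_cap
        self.tool_caps = tool_caps or {}
        self.spent_total = 0.0
        self.spent_by_tool = defaultdict(float)

    def charge(self, tool: str, cost: float) -> None:
        # Check both caps before recording, so a rejected call is never booked.
        if self.spent_total + cost > self.run_cap:
            raise BudgetExceeded(f"run cap {self.run_cap} would be exceeded by {tool}")
        cap = self.tool_caps.get(tool)
        if cap is not None and self.spent_by_tool[tool] + cost > cap:
            raise BudgetExceeded(f"{tool} cap {cap} would be exceeded")
        self.spent_total += cost
        self.spent_by_tool[tool] += cost
```

Raising an exception, rather than logging a warning, means an over-budget run stops at a clean point where a human can decide whether to extend it.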

Durability reduces babysitting. It does not remove engineering.

Guardrails that keep agents honest

Durable agents call for runtime controls that mirror good operations:

- Checkpoints with branch-and-rollback. Store code, plan, and evidence. Make rollbacks cheap and frequent.

- Sandboxed computer use. Run in locked containers with read-only mounts by default. Escalate to write access only when a test passes.

- Permissions and step limits. Require approvals for actions that move money, change infrastructure, or touch customer data. Cap total steps and wall time.

- Contracts for tools. Every tool returns structured results and a status. Agents must treat non-zero statuses as hard failures.

- Test-gated progress. No merge without green tests. No deploy without health checks. No spreadsheet write without schema validation.

- Memory hygiene. Write short, referenced notes. Link to logs and commits. Prune aggressively. Prevent memory from turning into a junk drawer.

- Observability. Log every tool call with inputs, outputs, timestamps, and cost. Aggregate per run so humans can audit quickly. Deeper guidance on telemetry is in our view of the agent observability control plane.

- Kill switch. Every run is preemptible by policy and by operator.
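Several of these controls can live in one choke point that every tool call passes through. The sketch below combines a tool contract (structured result with a status), approval gates for risky tools, step and wall-time caps, an audit log, and a kill switch. `GuardedRuntime`, `ToolResult`, and the `approve` callback are hypothetical names for illustration, not the Claude Agent SDK's API.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolResult:
    status: int   # 0 means success; anything else is a failure
    output: str


class ToolFailure(RuntimeError): ...
class ApprovalRequired(RuntimeError): ...
class RunStopped(RuntimeError): ...


class GuardedRuntime:
    """Hypothetical wrapper enforcing guardrails on every tool call."""

    def __init__(self, max_steps: int, max_seconds: float, risky_tools: set,
                 approve: Callable[[str, dict], bool]):
        self.max_steps = max_steps
        self.deadline = time.monotonic() + max_seconds
        self.risky_tools = risky_tools
        self.approve = approve   # e.g. asks a human reviewer; True means approved
        self.steps = 0
        self.killed = False
        self.log = []            # (tool name, args, status) for auditing

    def kill(self) -> None:
        # Operator or policy preemption: every later call is refused.
        self.killed = True

    def call(self, name: str, tool: Callable[..., ToolResult], **args) -> str:
        if self.killed:
            raise RunStopped("operator kill switch")
        if self.steps >= self.max_steps or time.monotonic() > self.deadline:
            raise RunStopped("step or wall-time cap reached")
        if name in self.risky_tools and not self.approve(name, args):
            raise ApprovalRequired(f"{name} needs human sign-off")
        self.steps += 1
        result = tool(**args)
        self.log.append((name, args, result.status))
        if result.status != 0:
            # Contract: a non-zero status is a hard failure, never "probably fine".
            raise ToolFailure(f"{name} exited {result.status}: {result.output}")
        return result.output
```

Putting the checks in one place keeps the agent from bypassing them: a tool the runtime does not wrap is a tool the agent does not get.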

If you already run a software delivery pipeline, you already run most of these controls. Durable agents fit where you have discipline.

How Claude compares to Gemini 2.5 and open-weights

Google’s Gemini 2.5 era focuses on computer use and control surfaces. At Google I/O, the team emphasized Project Mariner for computer use, thought summaries that expose model thinking, and thinking budgets that cap how much the model reasons before acting. Those ingredients help agents remain debuggable and cost controlled, and bring the platform closer to enterprise expectations. See Google’s official overview for details on the new computer use capabilities and developer controls in the 2.5 family: the Google Gemini 2.5 update.

On durability specifically, Anthropic planted a clear flag by stating an observed 30-hour focus on complex multi-step tasks with Sonnet 4.5. Google’s framing emphasizes transparency and budgets more than long continuous runs. In practice, both approaches can converge. Gemini’s thought summaries and budgets echo the checkpointing and context editing patterns. Anthropic’s memory tool echoes the need to persist knowledge outside the context window.

Open-weights are also moving fast. Community agents built on modern open models are improving at computer use, and academic groups have started to report stronger results on realistic environments. The gap is narrowing for targeted tasks, especially when teams invest in data, environment scaffolds, and test-time compute. Enterprises that prefer open-weights for control and cost can adopt the same durability patterns: strict tool contracts, checkpoints, memory files, and outcome-based evaluation.

Pricing and why outcome beats tokens

If an agent works for 30 hours, charging by token is the wrong mental model. Durable agents should be paid like contractors, not like printers. That means outcome-based pricing where possible.

- For code work, pay per merged pull request that passes tests and code review, with a bonus for zero rollbacks in seven days.

- For compliance, pay per control verified with attached evidence and per remediation completed.

- For research, pay per decision memo accepted by the leadership team with linked sources and reproducibility notes.

Outcome pricing does two useful things. First, it motivates vendors and internal teams to optimize for reliability, not verbosity. Second, it clarifies what evidence the agent must produce to count as done. When tokens are the meter, everyone loses focus. When outcomes are the meter, agents become accountable.
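The code-work contract above can be written down as a payout rule, which forces the "what counts as done" question into the open. This is a minimal sketch; the field names and the example rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    merged: bool
    tests_passed: bool
    review_approved: bool
    rollbacks_within_7_days: int


def payout(prs, per_pr: float, zero_rollback_bonus: float) -> float:
    """Pay only for merged pull requests that passed tests and code review,
    plus a bonus for each one that saw no rollback in the following seven days."""
    total = 0.0
    for pr in prs:
        if pr.merged and pr.tests_passed and pr.review_approved:
            total += per_pr
            if pr.rollbacks_within_7_days == 0:
                total += zero_rollback_bonus
    return total


# Example with made-up rates: 100 per accepted PR, 25 bonus per clean week.
prs = [
    PullRequest(True, True, True, 0),   # accepted, no rollback: 125
    PullRequest(True, True, True, 1),   # accepted, rolled back: 100
    PullRequest(False, True, True, 0),  # never merged: 0
]
print(payout(prs, per_pr=100.0, zero_rollback_bonus=25.0))  # 225.0
```

Note that token spend does not appear anywhere in the function; it shows up only as the vendor's or team's cost of earning the payout.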

The 90-day durable agent playbook

Use the next quarter to turn interest into results.

Week 1 to 2: Frame the bet

- Pick two high-signal use cases from refactors, compliance runs, research builds, data quality sweeps, or support triage.

- Define success in one sentence each. Example: “Merge a refactor that replaces our legacy auth middleware with zero regressions.”

- Map systems and permissions. Write down which repos, tools, environments, and secrets are needed, and which are forbidden.

Week 3 to 4: Build the guardrails

- Provision a sandbox. Containers, ephemeral credentials, test data, and a read-only mirror of production.

- Implement checkpoints. Save plan, diffs, test results, and memory snapshots every N steps.

- Wire permissions. Set step limits, wall-time caps, and approval hooks for risky actions.

- Instrument everything. Log tool calls, cost, and status. Create a one-page run report template for humans.
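A one-page run report can be generated straight from the tool-call log. The sketch below assumes each logged call records its tool name, status, cost, and start and end timestamps; `ToolCall` and `run_report` are hypothetical names, and the layout is only one possible template.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class ToolCall:
    tool: str
    status: int     # 0 = success
    cost: float     # in your billing unit
    started: float  # epoch seconds
    ended: float


def run_report(run_id: str, calls: list) -> str:
    """Aggregate one run's tool-call log into a short plain-text report."""
    failures = [c for c in calls if c.status != 0]
    by_tool = Counter(c.tool for c in calls)
    wall = (max(c.ended for c in calls) - min(c.started for c in calls)) if calls else 0.0
    lines = [
        f"Run {run_id}",
        f"Tool calls: {len(calls)} ({len(failures)} failed)",
        f"Total cost: {sum(c.cost for c in calls):.2f}",
        f"Wall time: {wall / 3600:.1f} h",
        "Calls by tool: " + ", ".join(f"{t}={n}" for t, n in by_tool.most_common()),
        "Failed tools: " + (", ".join(sorted({c.tool for c in failures})) or "none"),
    ]
    return "\n".join(lines)
```

A reviewer who reads only this summary can tell in seconds whether the run was cheap, long, or failure-heavy, and knows which tool logs to open next.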

Week 5 to 8: Run shift-length pilots

- Start with two four-hour shifts, then move to full 8- to 12-hour shifts, then to 24 hours. Measure completion rate, defect rate, mean time to recovery after an error, and budget adherence.

- Enforce test-gated progress. No exceptions. Require evidence links in memory entries.

- Rotate one human lead reviewer who can pause, approve, or terminate runs. Capture their feedback after each shift.
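The four pilot metrics can be computed from a simple per-shift record. In this sketch, "defect rate" is assumed to mean defects found afterward per accepted outcome, and recovery time is measured from an error to the next green checkpoint; both are assumptions to adapt to your own definitions. Cost per accepted outcome is included because it feeds the model comparison later in the quarter.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Shift:
    completed: bool          # met its one-sentence success definition
    defects: int             # regressions or rejections found afterward
    outcomes: int            # accepted outcomes, e.g. merged pull requests
    recovery_minutes: list   # minutes from each error to the next green checkpoint
    cost: float
    budget: float


def pilot_metrics(shifts: list) -> dict:
    """Summarize a pilot; assumes at least one shift was run."""
    total_outcomes = sum(s.outcomes for s in shifts)
    recoveries = [m for s in shifts for m in s.recovery_minutes]
    return {
        "completion_rate": sum(s.completed for s in shifts) / len(shifts),
        "defect_rate": (sum(s.defects for s in shifts) / total_outcomes
                        if total_outcomes else float("nan")),
        "mttr_minutes": mean(recoveries) if recoveries else None,  # None: no errors
        "budget_adherence": sum(s.cost <= s.budget for s in shifts) / len(shifts),
        "cost_per_outcome": (sum(s.cost for s in shifts) / total_outcomes
                             if total_outcomes else float("inf")),
    }
```

Keeping the record this small makes it realistic for the lead reviewer to fill in after every shift.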

Week 9 to 10: Compare models and stacks

- Run the same jobs with a Claude Sonnet 4.5 agent configured with checkpoints and memory, and with a Gemini 2.5 agent configured with thought summaries and thinking budgets. Keep the sandbox identical.

- For open-weights, test a focused task in a smaller environment where you can reproduce results. Use the same controls.

Week 11 to 12: Decide and scale

- Choose the stack that delivered accepted outcomes with the lowest defect rate and cost per outcome, and the cleanest handoffs.

- Move to outcome-based pricing internally or with vendors. Lock the playbook into your software development life cycle or compliance calendar.

- Publish a brief inside your company describing the guardrails, the metrics, and the intake process for new durable agent jobs.

Enterprise implications

- Talent mix shifts. Durable agents can absorb a large share of the grunt work, so human specialists spend more time on architecture, verification, and exception handling. Upskill reviewers on agent observability and recovery.

- Governance becomes runtime. The old model of quarterly policy reviews is too slow. Durable agents move governance into the run itself with approvals, checks, and memory hygiene rules.

- Security posture changes. Agents are now semiautonomous users. Treat them like accounts with least privilege, session limits, and audit trails.

- Platform teams as force multipliers. Many companies already built the pipelines durable agents need: continuous integration, testing, feature flags, and container orchestration. The lift is wiring the agent to those rails and enforcing rules from the start.

Why pilot now

Durability compounds. The first refactor agent leaves a memory of pitfalls that helps the second refactor. The first compliance run codifies a control checklist that auto-updates. The first research build seeds a knowledge base that accelerates the next prototype. Waiting six months means giving up a half year of compounding improvements in your agent’s memory and your team’s operating discipline.

The risk is not that durable agents fail. It is that they succeed without guardrails and create invisible debt. The solution is to pilot now with tight runtime controls and outcome contracts. The prize is significant: a team that does more deep work with less drift and less rework.

The bottom line

Claude Sonnet 4.5’s 30-hour staying power, paired with Claude Code 2.0 and the Claude Agent SDK, marks the arrival of durable agents you can put on a shift. Google’s Gemini 2.5 shows a parallel path with strong computer use and developer controls. Open-weights are close behind on focused jobs. The practical move is simple. Start small, wire the guardrails, and pay for outcomes. The companies that learn to run shift-length agents in Q4 will set the pace in 2026, not because they chased a demo, but because they built a new way to work that keeps going when the clock keeps ticking.