Sora 2’s invite app ignites the first AI video network

OpenAI’s Sora 2 lands with an invite-only, TikTok-style feed where every clip is born synthetic. Verified cameos, remix permissions, and native attribution signal a new playbook for creators and brands as Meta’s Vibes enters the race.

The first AI-native social video network has arrived

Two launches landed at once, and together they shift the center of gravity in video. On September 30, 2025, OpenAI released Sora 2, a video model with synchronized sound, and paired it with a new invite-only social app where every clip is generated. As OpenAI describes it, you can create, remix the work of others, and drop yourself into scenes using verified cameos, all inside a swipeable feed built for creation rather than passive viewing. See OpenAI's Sora 2 announcement for details.

Calling Sora a TikTok-style app is helpful shorthand, but it misses the point. TikTok and Instagram are networks where people upload real-world footage and then edit it with built-in tools. Sora flips the direction of travel: the tool is the network. Scenes are simulated, voices are synthesized, and the social graph is organized around remixing. The core unit is not a clip you filmed; it is a generation you authored that others can legally, ethically, and technically fork.

Key takeaways

- AI-native feed with verified cameos and remix-first design

- Consent as a product feature through permissions that travel with media

- Two-speed race between OpenAI’s creation-first model quality and Meta’s distribution

What changes for creators: a remix economy with permissions

Think of Sora as an operating system for remixes. A creator publishes a 12-second vignette of a neon train floating above a river at dusk. Another user forks it, keeps the camera move, swaps the train for a koi fish made of stained glass, and tags the original author. A third uses the cameo feature to place their verified likeness in the train window. Each step respects the permissions set by the people involved.

For creators, the upside is compounding reach. Remixes seed new audiences that still point back to the source. If the platform adds native attribution and payout plumbing, that chain becomes monetizable. Imagine a fashion designer who releases a style prompt and allows noncommercial remixes but offers a commercial license at a fixed price. Every fork carries the license terms. The designer sees which branches go viral, then collaborates with remixers who prove they can move culture.
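
One way to picture license terms that travel with every fork is a remix tree in which each child copies its parent's terms and views roll up per branch, so the designer can see which branch went viral. This is a minimal sketch under stated assumptions: `License`, `Clip`, `may_use`, and `top_branch` are hypothetical names, not a Sora or Vibes API, and a license is reduced to two fields (noncommercial remixing allowed, optional fixed commercial price).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class License:
    """Hypothetical license terms attached to a published prompt or clip."""
    noncommercial_remix: bool = True
    commercial_price_usd: float | None = None  # None: no commercial license offered


@dataclass(eq=False)
class Clip:
    clip_id: str
    author: str
    license: License
    parent: Clip | None = field(default=None, repr=False)
    views: int = 0
    children: list[Clip] = field(default_factory=list, repr=False)

    def fork(self, clip_id: str, author: str) -> Clip:
        # The remix inherits the parent's terms unchanged, so they travel with every branch.
        child = Clip(clip_id, author, self.license, parent=self)
        self.children.append(child)
        return child


def may_use(clip: Clip, commercial: bool, paid: bool = False) -> bool:
    if not commercial:
        return clip.license.noncommercial_remix
    # Commercial use requires that a license is offered and has been purchased.
    return clip.license.commercial_price_usd is not None and paid


def branch_views(clip: Clip) -> int:
    # Views of this clip plus every remix descended from it.
    return clip.views + sum(branch_views(child) for child in clip.children)


def top_branch(root: Clip) -> Clip | None:
    # The direct remix whose whole subtree has drawn the most views.
    return max(root.children, key=branch_views, default=None)


style = Clip("style-001", "designer", License(commercial_price_usd=500.0))
fan_a = style.fork("remix-a", "fan_a")
fan_a.views = 1_200
fan_b = style.fork("remix-b", "fan_b")
fan_b.views = 300
fan_c = fan_b.fork("remix-c", "fan_c")
fan_c.views = 5_000
print(top_branch(style).clip_id)  # remix-b: 300 + 5,000 views beats 1,200
```

Copying the terms by reference, rather than letting each remixer restate them, is what keeps a third-generation fork bound by the designer's original offer.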

For working video professionals, Sora can be a fast previsualization engine and a marketing channel. An indie filmmaker can block scenes, test lighting, and explore color palettes without booking a stage. A motion designer can publish a template sequence that others remix into micro-ads while retaining control over which brand categories can touch the work. The cameo model makes that control legible. If you approve your likeness only for educational clips and charity campaigns, the platform can enforce it.
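
A rule like "educational clips and charity campaigns only" is enforceable because it reduces to a check the platform can run before it renders a cameo. The sketch below assumes a hypothetical `CameoPolicy` record with an allow-list of contexts, an optional allow-list of users, and a revocation flag; it illustrates the idea and is not Sora's actual data model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CameoPolicy:
    """Hypothetical per-owner cameo settings; not an actual Sora data model."""
    owner: str
    allowed_contexts: frozenset[str]
    allowed_users: frozenset[str] | None = None  # None: any verified user may ask
    revoked: bool = False


def check_cameo(policy: CameoPolicy, requester: str, context: str) -> tuple[bool, str]:
    """Decide whether `requester` may place the owner's likeness in a clip of type `context`."""
    if policy.revoked:
        return False, "owner revoked cameo access"
    if (
        policy.allowed_users is not None
        and requester != policy.owner
        and requester not in policy.allowed_users
    ):
        return False, f"{requester} is not on the owner's allow-list"
    if context not in policy.allowed_contexts:
        return False, f"context '{context}' is not approved by the owner"
    return True, "ok"


policy = CameoPolicy("alex", frozenset({"educational", "charity"}))
print(check_cameo(policy, "sam", "educational"))  # (True, 'ok')
print(check_cameo(policy, "sam", "gambling_promo"))  # rejected: context not approved
```

Returning a reason string alongside the decision is a design choice that lets the app tell a creator why a cameo was refused instead of failing silently.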

The new rails: identity permissions and copyright opt-outs

If social video was built on follows, likes, and shares, AI-native video will be built on a different set of rails: permissioned identity and selective copyright participation.

- Identity permissions. Sora’s cameo feature requires a one-time verification recording. Once you register your face and voice, you decide who can use them and in what contexts. Think of those permissions as system calls rather than fine print: they are checked every time someone tries to use your likeness. If you revoke access, pending and published generations that include your cameo could be masked or queued for review. You can see any video that uses your cameo, including drafts created by others, and remove it.

- Copyright participation. Generative systems will pressure-test the boundaries of fair use and derivative works. The practical path forward is opt-in and opt-out machinery that travels with the media. Creators need a way to say: this clip can be remixed for fun, but not for political ads; this character can appear in educational videos, but not in gambling promotions; this song can be used for up to 15 seconds, with automatic revenue share if the video crosses a view threshold. In the near term, this can ride on familiar content credentials and structured manifests that tie identity and license to each asset.
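
The example rules in the copyright bullet above (remix for fun but not for political ads, no gambling promotions, up to 15 seconds of a song with revenue share past a view threshold) can be written as a small machine-readable rule plus an evaluator. This is a sketch only: the field names, the 10 percent share, and the 100,000-view threshold are illustrative assumptions, not part of any content-credentials standard.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ReuseRule:
    """Hypothetical reuse terms attached to one asset (a clip, character, or song)."""
    blocked_uses: frozenset[str] = frozenset()
    max_seconds: float | None = None  # None: no duration cap
    revenue_share: float = 0.0  # fraction of revenue owed to the rights holder
    share_after_views: int | None = None  # None: share applies from the first view


def is_permitted(rule: ReuseRule, use: str, seconds: float) -> bool:
    if use in rule.blocked_uses:
        return False
    return rule.max_seconds is None or seconds <= rule.max_seconds


def amount_owed(rule: ReuseRule, views: int, revenue: float) -> float:
    threshold = rule.share_after_views or 0
    if views < threshold:
        return 0.0
    return revenue * rule.revenue_share


song = ReuseRule(
    blocked_uses=frozenset({"political_ad", "gambling_promo"}),
    max_seconds=15,
    revenue_share=0.10,
    share_after_views=100_000,
)
print(is_permitted(song, "fun_remix", 12))  # True
print(is_permitted(song, "fun_remix", 20))  # False: longer than 15 seconds
print(is_permitted(song, "political_ad", 5))  # False: blocked use
```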

This is why verified cameos matter. They turn consent from trust-and-takedown into enforce-at-the-source. Brands will demand the same capability for mascots, trademarks, and signature styles. Regulators will expect it for public figures and election integrity. The rails do not need to be perfect to be transformative. They need to be standard, legible, and difficult to route around. For adjacent context on governed agent ecosystems, see how enterprises approach governed agent operations and a common language for agents.

Distribution and monetization: OpenAI versus Meta

OpenAI says the Sora app is rolling out in the United States and Canada, starts invite-only, and is not optimized for time spent in the feed. The company plans generous free generation limits, with paid options if demand exceeds compute. That positions Sora as a creation-first network whose primary monetization is generation capacity and licensed reuse.

Meta, meanwhile, has launched Vibes, a short-form feed of AI-generated videos inside the Meta AI app and on meta.ai, with built-in remixing and cross-posting to Instagram and Facebook Stories and Reels. See Meta's Vibes announcement.

This sets up a distribution-versus-quality race. Meta has unmatched distribution and habit formation. If Vibes content can be frictionlessly cross-posted into Instagram and Facebook, it inherits audience on day one. OpenAI has model quality and a clean slate to design consent from the ground up. If Sora’s default is verified likeness control and remixable licensing, it could attract creators and brands who want policy and product design aligned. For how monetization may evolve as agents meet payments, explore agentic commerce and payments.

Monetization will likely reflect these differences. Meta’s path runs through ads, creator funds, and commerce integrations. OpenAI’s path runs through compute fees, premium model tiers, and marketplace-style licensing where cameo owners and asset creators earn from authorized reuse. Both will need durable attribution so that viral forks still credit and pay the origin.
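
Durable attribution can be as simple as every remix recording its parent, so any viral fork can be walked back to its origin and paid out along the chain. The sketch below uses a purely hypothetical split, not how OpenAI or Meta pay creators: the origin takes a fixed 20 percent, intermediate ancestors share 10 percent, and the fork's author keeps the rest. Amounts are integer cents so the shares always sum to the total.

```python
from __future__ import annotations


def lineage(parents: dict[str, str | None], clip_id: str) -> list[str]:
    """Return [fork, parent, ..., origin] by following recorded parent links."""
    chain = [clip_id]
    while (parent := parents.get(chain[-1])) is not None:
        if parent in chain:
            raise ValueError(f"cycle in remix lineage at {parent}")
        chain.append(parent)
    return chain


def split_revenue(
    chain: list[str],
    authors: dict[str, str],
    cents: int,
    origin_pct: int = 20,
    ancestors_pct: int = 10,
) -> dict[str, int]:
    """Hypothetical split: origin gets origin_pct, middle ancestors share ancestors_pct."""
    payouts: dict[str, int] = {}

    def pay(clip: str, amount: int) -> None:
        # One person may author several clips in the chain, so payouts accumulate.
        payouts[authors[clip]] = payouts.get(authors[clip], 0) + amount

    if len(chain) == 1:  # an original clip keeps everything
        pay(chain[0], cents)
        return payouts
    fork, middle, origin = chain[0], chain[1:-1], chain[-1]
    origin_cut = cents * origin_pct // 100
    per_ancestor = cents * ancestors_pct // 100 // len(middle) if middle else 0
    pay(origin, origin_cut)
    for clip in middle:
        pay(clip, per_ancestor)
    # Integer-division leftovers go to the fork's author, so nothing is lost.
    pay(fork, cents - origin_cut - per_ancestor * len(middle))
    return payouts


parents = {"train": None, "koi": "train", "koi-night": "koi", "koi-cameo": "koi-night"}
authors = {"train": "ana", "koi": "ben", "koi-night": "cy", "koi-cameo": "dee"}
chain = lineage(parents, "koi-cameo")
print(chain)  # ['koi-cameo', 'koi-night', 'koi', 'train']
print(split_revenue(chain, authors, 10_000))
```

The percentages are placeholders; the point is that once parent links are recorded at creation time, any payout policy a platform chooses can be applied mechanically to even a deeply nested fork.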

What to build next: the acceleration stack

The shift from tools to AI-native networks will be accelerated by new layers and utilities. Here are concrete build opportunities that compound the value of Sora, Vibes, and whatever comes next.

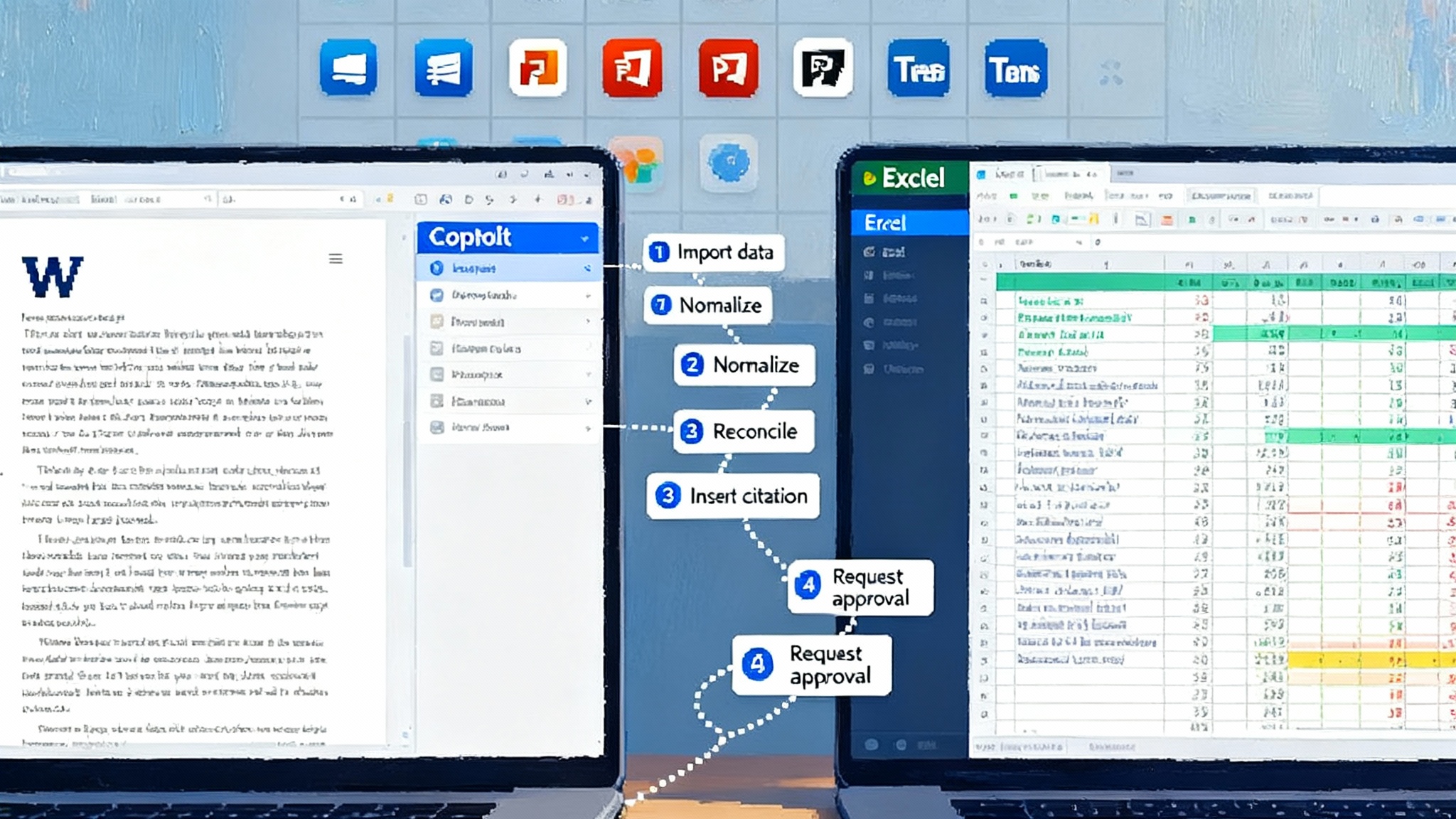

- Rights-aware editors

  - What: Editors that load a Sora or Vibes project, parse embedded identity and license manifests, and prevent users from exporting a noncompliant cut.

  - Why: Most violations are not malicious. They happen because rights status is unclear at the moment of creation. An editor that treats permissions like color profiles keeps projects safe by default.

  - How: Read content credentials, cameo policies, and license terms into a session graph. On export, run a fast policy check and generate a compliance report that travels with the file.

- Provenance and licensing layers

  - What: A shared service that stamps generated videos and audio with tamper-evident provenance plus machine-readable licenses. When someone remixes, the layer writes a new entry that points back to the original.

  - Why: Networks thrive on trust and credit. Without provenance, remix culture turns into plagiarism. Without licensing, brands will not participate.

  - How: Combine cryptographic signatures with resilient, privacy-preserving identifiers. Offer a simple registry for creators and companies to publish reuse rules. Provide a free verifier for audiences and moderators.

- Safety filters that understand scenes, not just words

  - What: Real-time classifiers that watch generated frames and sound for policy hotspots like impersonation without consent, unsafe stunts aimed at children, or political persuasion that violates election rules.

  - Why: Text-only moderation is brittle. AI video needs scene-level understanding. This is not only about blocking; it is about routing borderline cases to human review with the right context.

  - How: Multimodal models that track entities, actions, and relationships across time. Confidence scores feed a policy engine that can blur, mute, or hold a publish request.

- On-device video agents

  - What: Lightweight agents on phones, tablets, and laptops that help storyboard, rewrite prompts, adjust timing, and handle rights checks locally.

  - Why: Not every iteration should hit the cloud. On-device agents reduce latency, improve privacy, and keep sensitive prompts and assets offline until publish.

  - How: Distill large models into efficient runtimes. Cache policy manifests and cameo embeddings locally in secure enclaves. Offer a one-tap sync for publish that uploads only what is needed.

- Brand-safe cameo marketplaces

  - What: Curated directories where public figures and brands set clear cameo rules, prices, and campaign windows.

  - Why: This turns "deepfake" from a scary word into licensed collaboration and creates new income streams for micro-celebrities and niche experts.

  - How: Verified onboarding, KYC for payouts, standardized contracts, and automatic enforcement via the identity rails above.
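
The core mechanic of the provenance and licensing layer (stamp each asset with a signed record, and have every remix record point back to its source) fits in a few lines of standard-library Python. This sketch makes two loud simplifications: it signs with a shared-secret HMAC where a real system would use public-key signatures and certificates, as the C2PA content-credentials specification does, and it keeps the registry in a plain dict. The record fields and the `stamp` and `verify` functions are hypothetical.

```python
from __future__ import annotations

import hashlib
import hmac
import json

# Assumption: a shared demo key. Production provenance would use asymmetric keys.
DEMO_KEY = b"demo-signing-key"
FIELDS = ("asset_sha256", "creator", "license", "parent")


def _canonical(body: dict) -> bytes:
    # Stable byte encoding so the same record always hashes and signs identically.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def stamp(asset: bytes, creator: str, license_terms: str,
          parent: dict | None = None, key: bytes = DEMO_KEY) -> dict:
    """Create a provenance record; a remix passes its source record as `parent`."""
    body = {
        "asset_sha256": hashlib.sha256(asset).hexdigest(),
        "creator": creator,
        "license": license_terms,
        "parent": parent["entry_id"] if parent else None,
    }
    payload = _canonical(body)
    return {
        **body,
        "entry_id": hashlib.sha256(payload).hexdigest(),
        "signature": hmac.new(key, payload, hashlib.sha256).hexdigest(),
    }


def verify(entry: dict, asset: bytes, registry: dict[str, dict],
           key: bytes = DEMO_KEY) -> bool:
    """Check the asset bytes, the record's integrity, and that its parent is registered."""
    payload = _canonical({name: entry[name] for name in FIELDS})
    if hashlib.sha256(asset).hexdigest() != entry["asset_sha256"]:
        return False  # the video or audio was altered after stamping
    if hashlib.sha256(payload).hexdigest() != entry["entry_id"]:
        return False  # record fields no longer match the entry_id
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, entry["signature"]):
        return False  # not signed with the expected key
    return entry["parent"] is None or entry["parent"] in registry


original = b"<neon train clip bytes>"
root = stamp(original, "ana", "remix:noncommercial")
registry = {root["entry_id"]: root}
remix = b"<stained-glass koi clip bytes>"
child = stamp(remix, "ben", "remix:noncommercial", parent=root)
registry[child["entry_id"]] = child
print(verify(child, remix, registry))  # True
```

Note that a bare hash of the file will not survive re-encoding or cropping; that is why the policy section below also calls for watermarks and credentials designed to survive reposts.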

How policy can keep up without freezing the future

Regulators and platforms have a narrow path. The goal is not to ban synthetic media. The goal is to make it legible, consented, and traceable. Three practical levers move policy and product in tandem.

- Consent by default. Platforms should require verified permission for any use of a real person’s likeness and voice, with special protections for minors. Sora’s cameo model is a template to build on.

- Labeling that survives reposts. Provenance watermarks and content credentials should be inserted at export and at publish. They need to survive compression, cropping, and re-encoding. Labels should explain what was generated and what was captured, in plain language.

- Escalation lanes for civic risk. During elections or emergencies, platforms need a faster track for reviewing and removing harmful synthetic content. This requires resourcing human moderation teams with tools that can read the same provenance and identity manifests as the generation systems.
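
The escalation lane and the scene-level safety filters from the build list meet in a policy engine that turns classifier confidence into actions. A minimal sketch, assuming hypothetical hotspot labels and threshold values: scores at or above an automatic threshold trigger the label's remedy (blur, mute, or hold), scores in a middle band are held for human review, and a civic-risk mode lowers both thresholds during elections or emergencies.

```python
from __future__ import annotations

from enum import Enum


class Action(Enum):
    PUBLISH = "publish"
    BLUR = "blur"
    MUTE = "mute"
    HOLD_FOR_REVIEW = "hold_for_review"


# Hypothetical labels a multimodal classifier might emit, each with a default remedy.
REMEDY = {
    "face_impersonation": Action.BLUR,
    "voice_impersonation": Action.MUTE,
    "unsafe_stunt_for_children": Action.HOLD_FOR_REVIEW,
    "election_persuasion": Action.HOLD_FOR_REVIEW,
}

# Illustrative thresholds: (send to human review at, apply remedy automatically at).
NORMAL = (0.6, 0.9)
CIVIC_RISK = (0.3, 0.7)  # stricter lane for elections and emergencies


def decide(scores: dict[str, float], civic_risk_mode: bool = False) -> set[Action]:
    review_at, auto_at = CIVIC_RISK if civic_risk_mode else NORMAL
    actions: set[Action] = set()
    for label, score in scores.items():
        if score >= auto_at:
            # Unknown labels fall back to a hold rather than silently publishing.
            actions.add(REMEDY.get(label, Action.HOLD_FOR_REVIEW))
        elif score >= review_at:
            actions.add(Action.HOLD_FOR_REVIEW)
    return actions or {Action.PUBLISH}


print(decide({"election_persuasion": 0.5}))  # normal lane: published
print(decide({"election_persuasion": 0.5}, civic_risk_mode=True))  # held for review
```

Switching lanes by changing thresholds, rather than swapping models, keeps the same classifiers and provenance reads in play while giving human moderators an earlier look during high-risk periods.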

A 90-day playbook for organizations

If you run a creative team, studio, or brand, you do not need to wait. Here is a concrete plan for the next quarter.

- Define a likeness policy and approval flow for faces and voices you may use.

- Establish a remix license that is readable, enforceable, and friendly to fans.

- Instrument provenance by adding content credentials to every export.

- Pilot with a small cohort. Track remix rate and consent conflicts weekly.

- Build your cameo kit for spokespeople and characters with preset permissions.

- Prepare brand safety failsafes and a rapid retraction protocol.
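
For the pilot step, the two weekly numbers can come straight from a plain event log. This sketch assumes a hypothetical log of `publish`, `remix`, and `consent_conflict` events tagged by week, and defines remix rate as remix events per publish event in the same week; whatever definition a team picks, keeping it fixed across weeks matters more than the exact formula.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    week: int
    kind: str  # assumed kinds: "publish", "remix", "consent_conflict"


def weekly_report(events: list[Event]) -> dict[int, dict[str, float | int | None]]:
    counts: dict[int, Counter[str]] = {}
    for event in events:
        counts.setdefault(event.week, Counter())[event.kind] += 1
    report = {}
    for week, c in sorted(counts.items()):
        published = c["publish"]
        report[week] = {
            # None rather than a misleading 0 when nothing was published that week.
            "remix_rate": c["remix"] / published if published else None,
            "consent_conflicts": c["consent_conflict"],
        }
    return report


log = [Event(1, "publish")] * 4 + [Event(1, "remix")] * 2 + [Event(1, "consent_conflict")]
print(weekly_report(log))  # {1: {'remix_rate': 0.5, 'consent_conflicts': 1}}
```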

The product race we are actually in

It is tempting to frame this as OpenAI versus Meta. The real race is between architectures. One treats generation as a toy on top of existing social networks. The other treats social as a feedback loop for generation where identity, permission, and provenance are first-class citizens. The winner will be the network that creators and brands trust with their face, their voice, and their livelihood.

OpenAI’s Sora 2 and its invite-only app make a strong opening argument by turning consent into a feature, not a terms-of-service paragraph. Meta’s Vibes makes a strong distribution play by meeting people where they already are. Both moves will accelerate the shift from tools to AI-native networks. The opportunity for everyone else is to supply the rails and utilities that let culture remix itself without losing authorship.

The breakthrough is simple. We are moving from filming our world to simulating it. Whoever helps people bring themselves into that simulation, with the right to say yes or no and the confidence that credit will follow, will define the next decade of video.