UiPath turns RPA into agents with OpenAI, Snowflake, NVIDIA

On September 30, 2025, UiPath announced partnerships with OpenAI, Snowflake Cortex, and NVIDIA that reposition RPA as an enterprise agent platform. This breakdown explains what changed and why it matters, and lays out a 90-day plan to ship your first production agent.

Breaking: the day automation grew up

On September 30, 2025, UiPath moved robotic process automation from running scripts to orchestrating agents. Three announcements anchored the shift. First, UiPath and OpenAI said they will deliver a connector so frontier models can reason over business context and then act inside governed workflows, as outlined in the UiPath and OpenAI collaboration. Second, UiPath and Snowflake connected the agent platform to Snowflake Cortex so insights grounded in enterprise data can be turned into actions without leaving the data cloud. Third, UiPath and NVIDIA aligned on NIM microservices and Nemotron models for sensitive, high-trust scenarios, detailed in the NVIDIA NIM and Nemotron press release. Together, these moves lay out a clear blueprint for building production agents that are safe, auditable, and fast.

This is more than a set of integrations. It is a statement that the automation platform is becoming the hub where intelligence, data, and execution meet. UiPath provides the orchestration, identity, and runtime controls. OpenAI provides reasoning and tools for computer use. Snowflake Cortex provides grounded answers over governed data. NVIDIA NIM provides a portable, enterprise-ready way to run model capabilities as microservices when you need tight control over environments, latency, or privacy. For more on emerging interoperability standards, see OSI arrives for agent interoperability.

The new stack: from insight to action

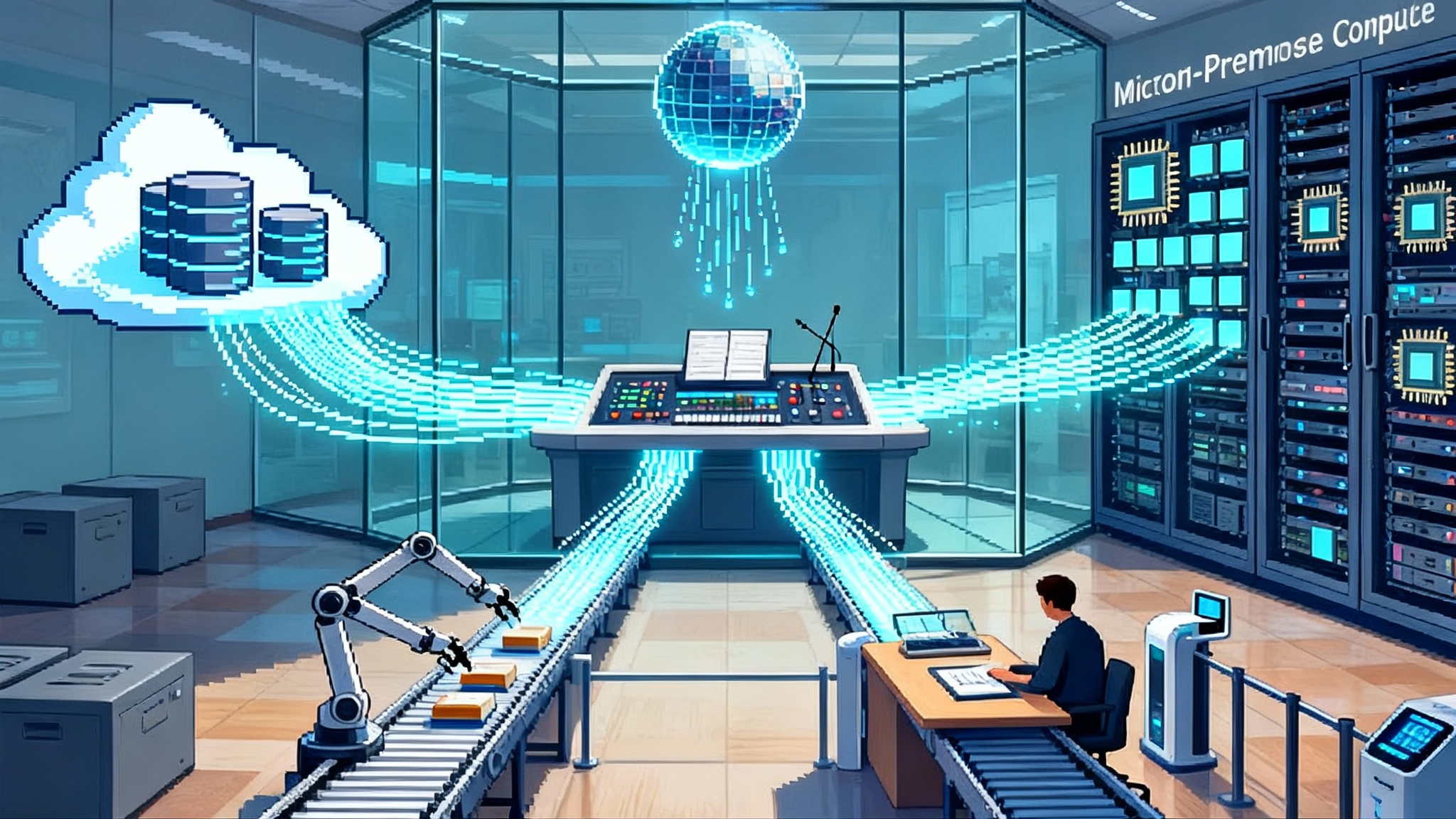

Think of the emerging agent stack as a relay team passing a baton from question to decision to verified action. Each runner is specialized, and the handoffs are where programs used to drop the baton. Those handoffs are now designed.

- Data grounding: Cortex Agents in Snowflake retrieve and compose trusted answers across structured and unstructured data using high-accuracy retrieval and search inside Snowflake’s security boundary. This reduces hallucinations and keeps lineage and permissions intact.

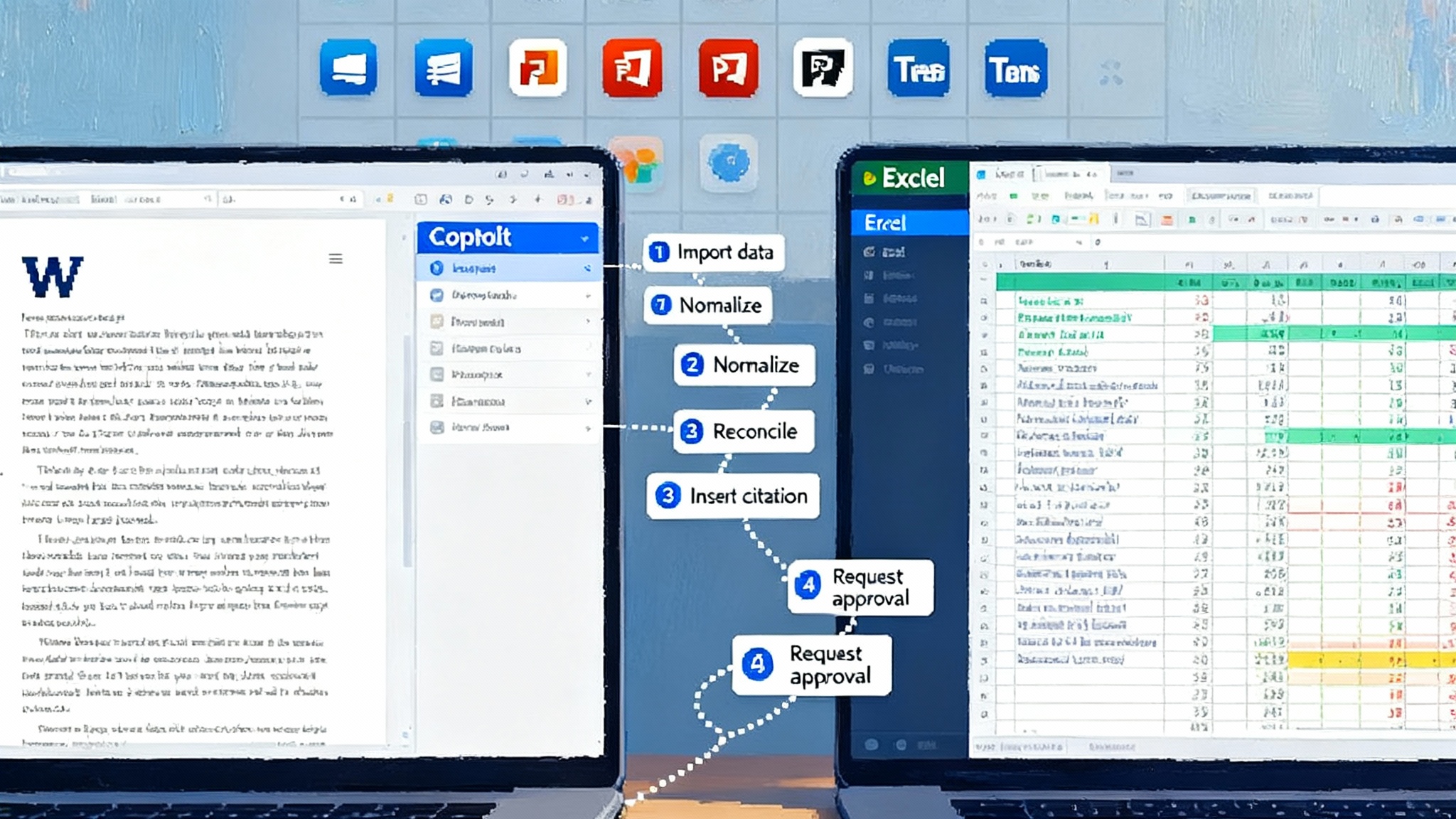

- Orchestration: UiPath Maestro models the end-to-end process, sequences agents and robots, manages long-running work, handles exceptions, and records every decision and action in an audit trail. It is where business rules, approvals, and human-in-the-loop steps live.

- Identity and governance: The platform enforces role- and attribute-based access control, data minimization, and policy controls so an agent can act only where it is allowed to act. When a workflow crosses systems, the orchestrator maintains both the chain of identity and the chain of custody.

- Model microservices: With NVIDIA NIM you can package capabilities like document understanding, vision, or domain-tuned language models as networked services. That lets you deploy in regulated, on-premises, or air-gapped environments while keeping performance predictable.
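The identity and governance layer can be pictured as a default-deny check that runs before every tool call. The sketch below is a minimal illustration under stated assumptions: `Principal`, `Rule`, and `is_allowed` are hypothetical names, not UiPath or Snowflake APIs, and a real deployment would enforce this in the platform's policy engine rather than in application code.

```python
from dataclasses import dataclass, field


@dataclass
class Principal:
    """The identity an agent acts as, such as a service account (hypothetical model)."""
    name: str
    roles: set[str]
    attributes: dict[str, str] = field(default_factory=dict)


@dataclass
class Rule:
    """Grants one action to one role, optionally only when attributes match."""
    action: str
    required_role: str
    # Attribute keys whose values must be equal on the principal and the resource,
    # e.g. ("region",) limits an agent to resources in its own region.
    match_attributes: tuple[str, ...] = ()


def is_allowed(principal: Principal, action: str,
               resource: dict[str, str], rules: list[Rule]) -> bool:
    """Default deny: the action is allowed only if some rule explicitly grants it."""
    for rule in rules:
        if rule.action != action or rule.required_role not in principal.roles:
            continue
        if all(
            principal.attributes.get(key) is not None
            and principal.attributes.get(key) == resource.get(key)
            for key in rule.match_attributes
        ):
            return True
    return False


# Hypothetical policy for a claims agent.
RULES = [
    Rule(action="read_claim", required_role="claims_agent"),
    Rule(action="update_claim", required_role="claims_agent", match_attributes=("region",)),
]

agent = Principal("svc-claims-agent", {"claims_agent"}, {"region": "EU"})
print(is_allowed(agent, "update_claim", {"region": "EU"}, RULES))  # True
print(is_allowed(agent, "update_claim", {"region": "US"}, RULES))  # False
print(is_allowed(agent, "delete_claim", {"region": "EU"}, RULES))  # False: no rule grants it
```

Default deny is the design choice that matters here: an action the agent has never been granted stays blocked until someone writes a rule for it.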

Put simply: data grounding gives the agent the facts. Orchestration gives it a plan. Identity and governance give it permission. Microservices give it skills. The result is an insight-to-action loop you can audit and scale.

What is fundamentally different from chatbots

Chat interfaces answer. Production agents deliver outcomes.

- State and memory that matter: Chatbots keep conversational context. Agents keep process context. Maestro tracks work items, prerequisites, deadlines, and exception states so the agent knows where it is in a multi-day workflow and what must happen next.

- Tool use with guardrails: A chatbot can suggest how to file a claim. An agent can file the claim, fetch policy data, cross-check eligibility, request missing documents, route to a human for judgment, and post results to the core system. Every external call, credential, and write is policy-controlled and logged.

- Grounded answers by design: Instead of pasting data into a prompt, the data agent queries Snowflake through Cortex so answers inherit your governance, lineage, and row-level permissions. The output is not just plausible. It is tied to sources.

- Portable skills: With NIM, model functions are served like any other enterprise service, which means you can place them near your data, scale them, and validate them like you validate any critical microservice.
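The difference between conversational context and process context can be made concrete with a small state machine. This is a sketch, not how Maestro stores work items: the state names and the `TRANSITIONS` table are hypothetical, chosen to mirror a claim that can wait days for documents.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional

# Hypothetical claim lifecycle: each state lists the states it may move to next.
TRANSITIONS = {
    "received": {"awaiting_documents", "under_review"},
    "awaiting_documents": {"under_review", "escalated"},
    "under_review": {"approved", "denied", "escalated"},
    "escalated": {"under_review"},
    "approved": set(),
    "denied": set(),
}


@dataclass
class WorkItem:
    item_id: str
    state: str = "received"
    due: Optional[datetime] = None
    history: list[tuple[str, str]] = field(default_factory=list)

    def next_steps(self) -> list[str]:
        """What may legally happen next, given where the item is now."""
        return sorted(TRANSITIONS[self.state])

    def advance(self, new_state: str) -> None:
        """Move to a new state, refusing transitions the process does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"illegal transition {self.state} -> {new_state}")
        self.history.append((self.state, new_state))
        self.state = new_state

    def is_overdue(self, now: datetime) -> bool:
        """True once a deadline is set and has passed."""
        return self.due is not None and now > self.due


item = WorkItem("CLM-1001", due=datetime(2025, 10, 3))
item.advance("awaiting_documents")
print(item.next_steps())                       # ['escalated', 'under_review']
print(item.is_overdue(datetime(2025, 10, 4)))  # True: time to escalate
```

Because the state survives between steps, the agent does not need to reconstruct "where am I?" from a chat transcript; it reads it from the work item.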

If chatbots are smart assistants, these agents are trained colleagues who can check the policy, use the right credentials, and finish the job. If you are moving from demos to governance, compare with governed workloads with Azure Agent Ops.

A concrete example: the claims agent that closes the loop

Imagine a health insurer with rising fraud alerts and rising costs. Today a human investigator triages alerts, reads medical notes, emails providers, and updates two legacy systems. The new agentic stack changes that.

- A Snowflake Cortex Agent retrieves the claim, member history, provider patterns, and similar cases. It produces a grounded summary and risk score.

- UiPath Maestro receives that summary and evaluates business rules. If the risk exceeds a threshold, Maestro launches a path that combines a document review task for a human and a data collection task for a robot.

- An OpenAI model evaluates free-text notes for inconsistencies and proposes next actions, such as requesting a preauthorization letter. The agent posts a templated request, tracks the due date, and pauses the case until a response arrives.

- For scanned documents or medical codes, a NIM-hosted model performs vision and entity extraction in the company’s private environment, with outputs versioned like any other service.

- Maestro compiles the trail: who saw what, which model produced which output, and why the agent chose the final disposition. That record feeds both audit and continuous improvement.
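The routing step in this example can be expressed as a first-hit decision table, the same shape Decision Model and Notation uses for business rules, with every decision appended to an audit log that names the model behind the input. A minimal sketch: the thresholds, field names, path names, and model identifier are hypothetical placeholders, not values from UiPath or any insurer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Decision:
    path: str
    reason: str


# First-hit decision table: rows are checked top to bottom and the first match wins.
# Thresholds and path names are hypothetical.
DECISION_TABLE: list[tuple[Callable[[dict], bool], str, str]] = [
    (lambda c: c["risk_score"] >= 0.8, "human_review_and_robot_collection", "risk_score >= 0.8"),
    (lambda c: c["missing_documents"], "request_documents_and_pause", "documents missing"),
    (lambda c: True, "standard_processing", "default row"),
]


def route(case: dict, audit_log: list[dict]) -> Decision:
    """Pick a path for a case and record why, including which model scored it."""
    for condition, path, reason in DECISION_TABLE:
        if condition(case):
            audit_log.append({
                "case_id": case["case_id"],
                "scored_by": case["scored_by"],  # model/version that produced risk_score
                "risk_score": case["risk_score"],
                "path": path,
                "reason": reason,
            })
            return Decision(path, reason)
    raise RuntimeError("decision table has no default row")


audit: list[dict] = []
case = {"case_id": "CLM-1001", "risk_score": 0.91, "missing_documents": True,
        "scored_by": "cortex-summary-v3"}  # hypothetical model identifier
print(route(case, audit).path)  # human_review_and_robot_collection
```

Keeping the rules in a table rather than inside a prompt means a compliance reviewer can read them, and each audit entry answers "why this path" without replaying the model.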

The outcome is not a better chat. It is a faster, cheaper, more accountable claims decision that meets evidence and compliance standards.

The 90-day build roadmap for your first high-trust agent

The goal is to ship one data-aware agent inside an existing workflow with measurable impact, not to build a platform from scratch. Work in three 30-day sprints.

Days 0 to 30: choose one workflow and harden the foundations

- Select a target process with real pain and clear owners. Good first bets are invoice exception handling, claim intake triage, collections outreach, and pricing approvals. Define one outcome metric, one quality metric, and one safety metric: for example, average handle time, first-pass accuracy, and policy violation rate.

- Map the minimum viable journey in Maestro. Model the steps and handoffs using the existing systems and approvals. Define the human decision points you will keep. Reduce scope until you can draw the entire flow on a single page.

- Ground the data. Create a Snowflake Cortex Agent that can answer the five questions your operators ask most often, strictly using the tables they already trust. Verify that row-level permissions and masking are correct for every role.

- Connect identity. Use service accounts and least-privilege roles for every tool call. Decide which actions the agent is allowed to take without a human and which require an approval step.

- Establish evaluation harnesses. For reasoning tasks, collect 50 to 100 real examples with correct answers and policy notes. For data tasks, define query timeouts, result sizes, and error handling. Set acceptance thresholds before you write code.
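Setting acceptance thresholds before writing code is easier to enforce when the harness itself refuses to pass below them. A minimal sketch, assuming each example is an `(input, expected)` pair and that you supply the agent step and a policy check as plain functions; `Thresholds` and `evaluate` are hypothetical names, and the numbers are placeholders for the targets your process owners agree on.

```python
from dataclasses import dataclass
from typing import Any, Callable, Sequence


@dataclass(frozen=True)
class Thresholds:
    """Acceptance bar agreed with process owners before building (placeholder values)."""
    min_accuracy: float
    max_violation_rate: float


def evaluate(examples: Sequence[tuple[Any, Any]],
             predict: Callable[[Any], Any],
             violates_policy: Callable[[Any], bool],
             thresholds: Thresholds) -> dict:
    """Run the agent step on labeled real examples and compare against the bar."""
    if not examples:
        raise ValueError("evaluation set is empty")
    correct = violations = 0
    for inp, expected in examples:
        output = predict(inp)
        correct += output == expected          # counts toward first-pass accuracy
        violations += violates_policy(output)  # counts toward policy violation rate
    accuracy = correct / len(examples)
    violation_rate = violations / len(examples)
    passed = (accuracy >= thresholds.min_accuracy
              and violation_rate <= thresholds.max_violation_rate)
    return {"accuracy": accuracy, "violation_rate": violation_rate, "passed": passed}


def always_approve(invoice_id):
    """A deliberately naive agent step, used only to show a failing run."""
    return "approve"


bar = Thresholds(min_accuracy=0.9, max_violation_rate=0.01)
examples = [("INV-1", "approve"), ("INV-2", "hold"), ("INV-3", "approve"), ("INV-4", "hold")]
print(evaluate(examples, always_approve, lambda out: False, bar)["passed"])  # False: accuracy is 0.5
```

In practice the example list would hold the 50 to 100 real cases collected in this sprint, and the same function can run in CI so a regression fails the build instead of reaching users.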

Deliverable at day 30: a modeled workflow in Maestro, a working Cortex Agent answering real questions on governed data, identity and roles set, and a test set with target thresholds.

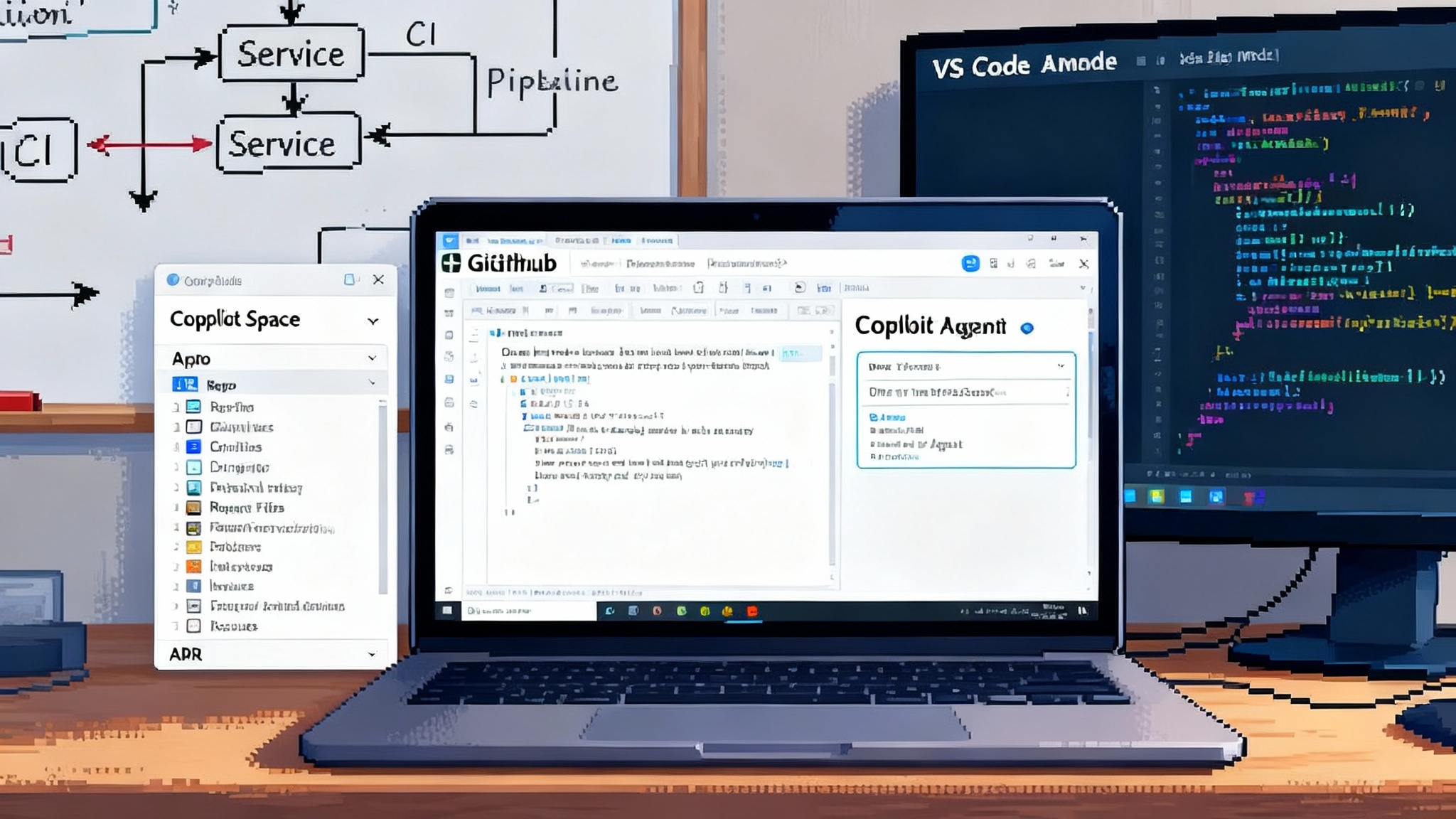

Days 31 to 60: build the agent and ship a controlled pilot

- Add reasoning. Use the OpenAI connector to propose next actions and draft content that a human will approve in the first pilot. Start with narrow prompts tied to your process steps and decision tables, not general chat.

- Add skills as microservices. If the workflow needs document classification, vision, or domain-tuned text models, host them behind NIM so you can control versioning, throughput, and placement. Treat them like any internal service with contracts and monitoring.

- Instrument everything. Log every prompt, tool call, and decision with correlation identifiers. Sample 10 to 20 percent of cases for human review. Add outcome labels so you can compare agent versus baseline.

- Run the pilot with real users on real cases. Limit scope by region, product line, or time window. Maintain a rollback plan and a parallel manual path.
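Two of the instrumentation habits in this sprint, correlation identifiers and sampling a fixed share of cases for human review, fit in a few lines. This sketch assumes structured JSON logs through Python's standard `logging` module; `new_correlation_id`, `log_event`, and `sampled_for_review` are hypothetical helpers. Hashing the case ID, rather than drawing a random number, keeps the sample stable: a case that is retried later is still in or out of review.

```python
import hashlib
import json
import logging
import uuid

logger = logging.getLogger("agent")


def new_correlation_id() -> str:
    """One ID per case run, attached to every prompt, tool call, and decision."""
    return str(uuid.uuid4())


def log_event(correlation_id: str, kind: str, **details) -> dict:
    """Emit one structured log line; the correlation ID ties the steps of a case together."""
    record = {"correlation_id": correlation_id, "kind": kind, **details}
    logger.info(json.dumps(record, sort_keys=True))
    return record


def sampled_for_review(case_id: str, rate: float = 0.15) -> bool:
    """Deterministically select roughly `rate` of cases for human review.

    The first 8 bytes of a SHA-256 digest give a near-uniform integer in [0, 2**64);
    the case is sampled when that integer falls below rate * 2**64.
    """
    digest = hashlib.sha256(case_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") < rate * 2**64


# Example: log a tool call and flag the case for review if it lands in the sample.
logging.basicConfig(level=logging.INFO)
cid = new_correlation_id()
log_event(cid, "tool_call", tool="fetch_policy", case_id="CLM-1001")
if sampled_for_review("CLM-1001"):
    log_event(cid, "queued_for_review", case_id="CLM-1001")
```

The 15 percent default sits inside the 10 to 20 percent range suggested above; tune it per process.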

Deliverable at day 60: a working agent in a controlled pilot with monitoring and human-in-the-loop review, plus a baseline report of speed, quality, and safety.

Days 61 to 90: reach production and scale safely

- Remove the training wheels where evidence supports it. Convert the most reliable steps from human approval to post action review. Keep approvals for expensive, irreversible, or high risk steps.

- Build failure modes. Define what happens if the agent is uncertain, a tool times out, or a policy check fails. Add self-healing paths such as retry with backoff, alternate tools, or escalation to a queue.

- Close the governance loop. Implement model and prompt versioning, policy checks on every deploy, and automated evals that run nightly. Fill out the audit packet your compliance team will review: data sources, permissions, model versions, and test results.

- Prove value with a clean before-and-after comparison. Publish the pilot metrics against the targets you set at day 0 and decide the next two processes to onboard.
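Several of the failure modes above (a tool timeout, a failed policy check, a primary tool that stays down) can share one wrapper. A minimal sketch under stated assumptions: tools are plain callables that raise a hypothetical `TransientError` for retryable faults and a hypothetical `PolicyCheckFailed` when policy rejects the action, and `escalate` stands in for whatever pushes the case to a human queue. Policy failures are never retried or routed to an alternate tool, because retrying will not make a disallowed action allowed.

```python
import random
import time
from typing import Any, Callable, Sequence


class TransientError(Exception):
    """A failure worth retrying, such as a timeout (hypothetical convention)."""


class PolicyCheckFailed(Exception):
    """The action was rejected by policy; retrying will not help."""


def call_with_fallback(tools: Sequence[Callable[[Any], Any]],
                       request: Any,
                       escalate: Callable[[Any], Any],
                       max_attempts: int = 3,
                       base_delay: float = 0.5,
                       sleep: Callable[[float], None] = time.sleep) -> Any:
    """Try each tool in order with exponential backoff; escalate if all fail."""
    for tool in tools:
        # Retry this tool; if it exhausts its attempts, move on to the next (alternate) tool.
        for attempt in range(max_attempts):
            try:
                return tool(request)
            except PolicyCheckFailed:
                return escalate(request)  # never retry or route around a policy denial
            except TransientError:
                if attempt < max_attempts - 1:
                    delay = base_delay * 2 ** attempt
                    sleep(delay + random.uniform(0, delay / 2))  # backoff with jitter
    return escalate(request)


def primary_lookup(case_id):
    raise TransientError("primary policy service timed out")


def backup_lookup(case_id):
    return f"policy for {case_id} (from backup)"


result = call_with_fallback([primary_lookup, backup_lookup], "CLM-1001",
                            escalate=lambda case_id: f"{case_id} queued for a human",
                            sleep=lambda seconds: None)  # skip real waiting in the demo
print(result)  # policy for CLM-1001 (from backup)
```

The injectable `sleep` parameter is there so tests and nightly evals can exercise the retry path without waiting.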

Deliverable at day 90: a production agent with a maintenance plan, documented controls, and a roadmap for the next two use cases.

What to ask your vendors now

- How does the agent prove the origin of every fact it uses and every action it takes, and where can an auditor view that trail in one place?

- If we must run models in a private or air-gapped environment, what is the supported path to deploy model capabilities as microservices, and how are they versioned and monitored?

- Which parts of the orchestration layer are declarative, and which are code? Can business rules be expressed in Decision Model and Notation (DMN) and reviewed by non-engineers?

- How are agent actions scoped by identity, and how are those permissions enforced across systems where the agent does not own the identity provider?

- What standard evaluation sets are available for our use case, and how do we add our own tests to block a deploy when quality drifts?

Implementation pitfalls to avoid

- Starting with a greenfield use case. Pick a workflow you already measure and that already has owners. You will need their help to label data, set thresholds, and make decisions about when the agent is allowed to act.

- Overbuilding your prompts. For production, short prompts that reference system state and decision tables beat long narrative prompts. Make the agent read the process, not guess it.

- Skipping the rollback plan. Long-running processes will fail in surprising ways. Always have a manual queue and a controlled switch that pauses new work while letting in-flight work finish.

- Letting pilots run forever. The point of the pilot is to collect evidence. Decide up front what success looks like and when you cut scope, expand, or stop.
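The pause switch from the rollback advice can be sketched as a small gate in front of the agent: when paused, new cases go to the manual queue, while cases already in flight are allowed to finish. The class and method names are hypothetical; in production this flag would live in the orchestrator or a shared store, not in one process's memory.

```python
import threading


class IntakeSwitch:
    """Pause new agent work without killing cases that are already running."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._paused = False
        self._in_flight: set[str] = set()
        self.manual_queue: list[str] = []

    def pause(self) -> None:
        with self._lock:
            self._paused = True

    def resume(self) -> None:
        with self._lock:
            self._paused = False

    def admit(self, case_id: str) -> bool:
        """Return True if the agent may start this case; otherwise queue it for humans."""
        with self._lock:
            if self._paused:
                self.manual_queue.append(case_id)
                return False
            self._in_flight.add(case_id)
            return True

    def finish(self, case_id: str) -> None:
        """Mark an in-flight case as done."""
        with self._lock:
            self._in_flight.discard(case_id)

    def drained(self) -> bool:
        """True once no agent-owned case is still running."""
        with self._lock:
            return not self._in_flight


switch = IntakeSwitch()
switch.admit("CLM-1")   # True: the agent starts the case
switch.pause()
switch.admit("CLM-2")   # False: routed to the manual queue
switch.finish("CLM-1")
print(switch.drained(), switch.manual_queue)  # True ['CLM-2']
```

Separating "stop intake" from "stop everything" is the point: pausing never strands a half-finished case, and `drained()` tells operators when it is safe to roll back.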

The industry shift behind the headlines

It would be easy to see the September 30 news as a marketing wave. The deeper story is that enterprise automation platforms are maturing into agent hubs. Orchestrators like Maestro sit at the center, not as dashboards, but as the runtime that unifies models, data, people, and robots under one set of controls. Data clouds like Snowflake provide the governed memory. Model platforms like OpenAI and NIM provide the skills in a portable form. The baton passes cleanly now because the handoffs are designed, typed, and governed. For security use cases, see how enterprises are adopting AI agents in the SOC.

A smart finish

The winners in this next phase will not be the teams with the most clever prompts. They will be the teams that ship working agents into existing workflows with clear guardrails and measurable outcomes. If September 30 was the day automation grew up, the next 90 days are when your company proves it. Start small. Design the handoffs. Measure everything. Then let the agents run.