K2 Think and the small but mighty turn in reasoning AI

MBZUAI’s K2 Think signals a shift to smaller, faster reasoning systems. With long chain-of-thought, verifiable RL, and plan-first prompting, a 32B model can rival giants while staying deployable.

The small but mighty turn

Something shifted on September 9, 2025. The Mohamed bin Zayed University of Artificial Intelligence released K2 Think, a 32B-parameter reasoning system that aims for parity with today’s heavyweight models while being small enough to move quickly and deploy widely. The team framed it as a system, not just a checkpoint, and the message landed because it challenges the long-standing assumption that only massive models can reason well. The CNBC report on K2 Think captured the core claim plainly: comparable reasoning at a fraction of the size.

This is not only about competitive benchmarks. It is about a recipe that makes long chain-of-thought practical at production speeds, with a training and inference stack that enterprises can actually own. K2 Think’s open weights and hardware versatility push against the idea that the frontier must be synonymous with hyperscale GPU clusters.

What MBZUAI shipped on September 9

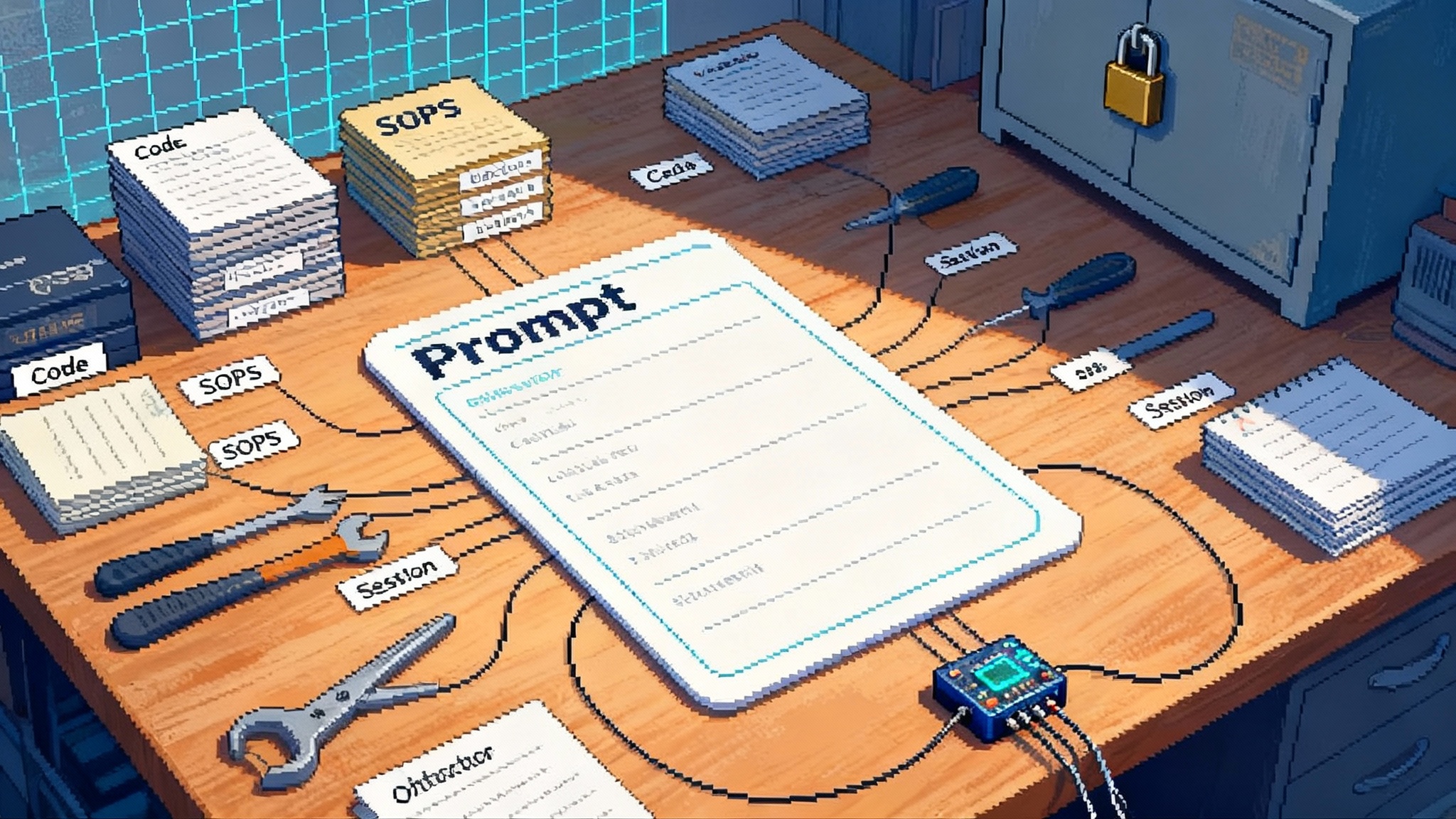

K2 Think is a 32B-parameter system built on an open backbone and post-trained to reason across math, code, and science. The team emphasizes three pillars:

- Long chain-of-thought supervision to teach explicit intermediate reasoning.

- Reinforcement learning with verifiable rewards so the model can optimize against tasks that have checkable outcomes.

- Agentic planning that asks the model to draft a concise plan before it starts to think in detail.

Around those pillars sit test-time strategies such as best-of-N sampling with selection by verifiers, along with generation optimizations to keep latency predictable. MBZUAI also positions K2 Think as fully open in practice, with code, weights, and deployment scaffolding available. See MBZUAI’s launch note for the institutional framing.

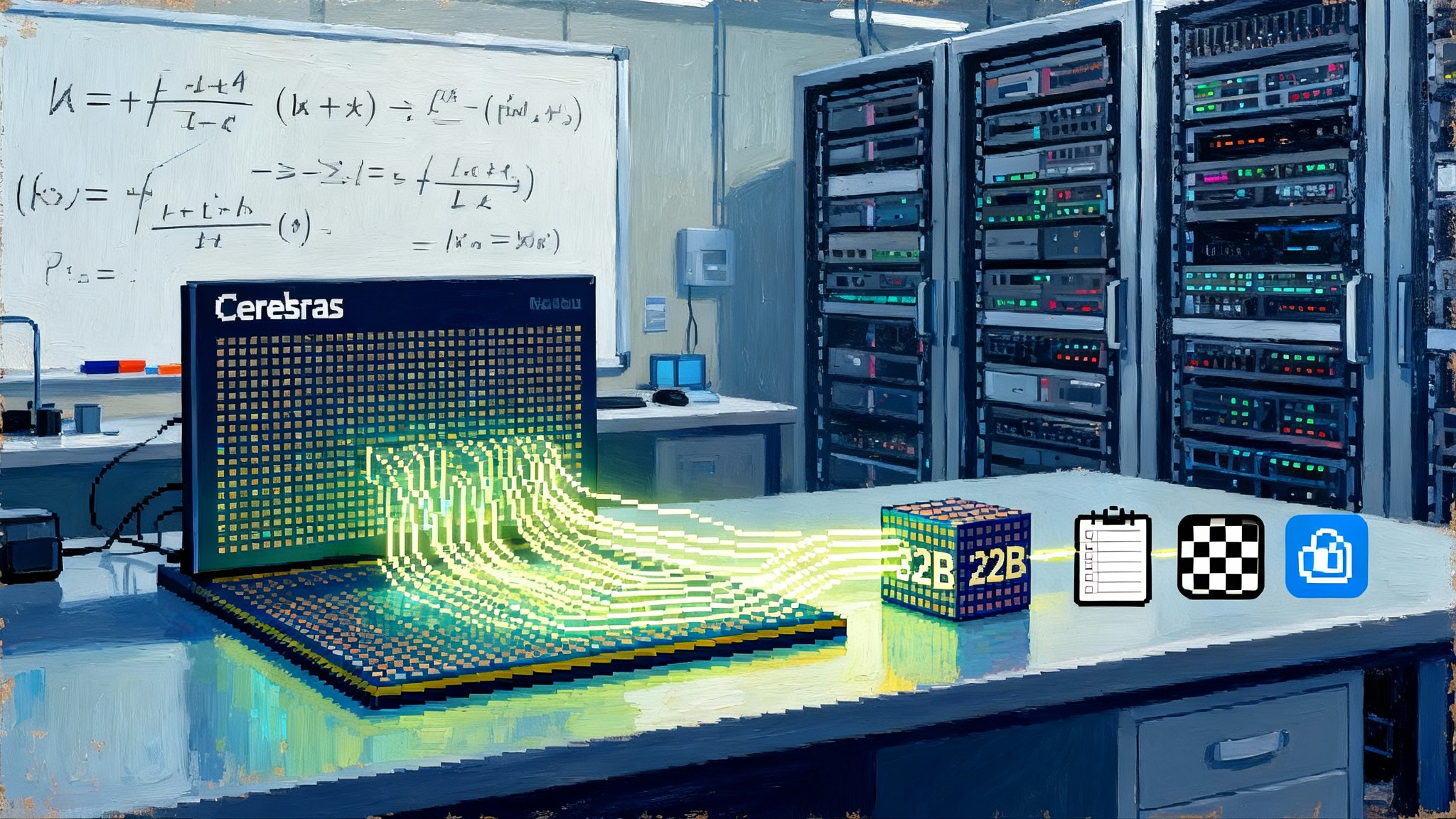

Two other details matter. First, the team says K2 Think was built on top of a Qwen 2.5 class base model, then adapted with long-form reasoning supervision. Second, the system was run and tested on Cerebras wafer scale hardware in addition to more common stacks. That combination is what unlocks the speed profile that makes long reasoning chains usable in real applications.

Why 32B can punch above its weight

Reasoning gains are now coming as much from post training and inference policy as from raw scale. K2 Think’s recipe leans into that reality.

- Long CoT supervision does not just improve accuracy. It regularizes the structure of answers so the model externalizes intermediate steps, which in turn makes verification and selection work better.

- RL with verifiable rewards targets domains where you can write a checker: math with answer checks, code with execution and assertions, and science questions with symbolic or numerical verifiers. Optimizing directly against those checks can reduce the noise and drift seen in preference-only pipelines.

- Agentic planning is a small but powerful change. A compact plan first can shorten the downstream chain and reduce search. K2 Think treats plan tokens as a budgeted stage, not a free-for-all.

- Test-time scaling via best-of-N is no longer a luxury. When your per-sample latency and token counts are under control, running several diverse attempts and selecting the best becomes a normal part of the stack rather than a special mode.

Put those together and a 32B model can land where people once assumed only 100B to 600B class systems could. The benefit is not just better scores. It is that the same quality arrives with lower variance, fewer runaway responses, and a predictable wall clock.
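To make the verifier idea concrete, here is a minimal sketch of a checkable reward and best-of-N selection for math-style answers. It is not K2 Think's actual implementation: the `ANSWER:` marker convention and the function names `extract_final_answer`, `math_reward`, and `best_of_n` are assumptions made for illustration.

```python
from fractions import Fraction
from typing import Callable, Optional, Sequence


def extract_final_answer(completion: str) -> Optional[str]:
    """Return the text after the last 'ANSWER:' marker (an assumed convention)."""
    marker = "ANSWER:"
    idx = completion.rfind(marker)
    if idx == -1:
        return None
    return completion[idx + len(marker):].strip()


def math_reward(completion: str, expected: str) -> float:
    """Binary verifiable reward: 1.0 only if the final answer equals `expected`."""
    answer = extract_final_answer(completion)
    if answer is None:
        return 0.0
    try:
        # Fraction compares "0.5" and "1/2" as the same exact number.
        return 1.0 if Fraction(answer) == Fraction(expected) else 0.0
    except (ValueError, ZeroDivisionError):
        return 0.0  # unparseable answers earn no reward


def best_of_n(candidates: Sequence[str], score: Callable[[str], float]) -> str:
    """Return the highest-scoring candidate; ties go to the earliest one."""
    if not candidates:
        raise ValueError("need at least one candidate")
    return max(candidates, key=score)


candidates = ["I think it is small. ANSWER: 0.4", "Half of one. ANSWER: 0.5", "no marker"]
winner = best_of_n(candidates, lambda c: math_reward(c, "1/2"))
```

The same `score` function can serve as a training reward and as a test-time selector, which is one reason checkable domains are attractive for this recipe.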

Speed as a first class design goal

Reasoning is only useful if you can afford to run it. Many teams discovered this the hard way when early long chain models produced great demos and poor dashboards. K2 Think’s design accepts that constraint.

- Planning before thinking sets a token budget from the start.

- Verifiers and selectors let a small N of short attempts match or beat the quality of a single long chain.

- Hardware choices matter. Cerebras’ wafer scale engines can produce high output rates that make multi attempt search feel instant to an end user. The result is a system that can run long chain reasoning in real time for many interactive tasks.

That last point is what turns a research recipe into a product toolkit. If your chain is short and your output speed is high, the felt latency of a multi step agent loop collapses. It becomes viable to allocate more of your budget to breadth and verification rather than to a single monologue.
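The arithmetic behind that claim is simple enough to sketch. The numbers below are made up for illustration and are not measurements of K2 Think, Cerebras, or any GPU stack; the sketch also assumes each parallel attempt gets the full per-stream output rate.

```python
from typing import Sequence


def chain_seconds(output_tokens: int, tokens_per_second: float,
                  first_token_seconds: float = 0.0) -> float:
    """Wall clock for one chain: time to first token plus decode time."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return first_token_seconds + output_tokens / tokens_per_second


def parallel_attempts_seconds(attempt_tokens: Sequence[int],
                              tokens_per_second: float) -> float:
    """Wall clock for attempts decoded in parallel: the slowest attempt dominates."""
    return max(chain_seconds(t, tokens_per_second) for t in attempt_tokens)


# Hypothetical inputs: one 8,000-token monologue at 100 tokens/s versus
# three short attempts at 2,000 tokens/s each.
single_long_chain = chain_seconds(8000, tokens_per_second=100.0)            # 80.0 s
three_short_chains = parallel_attempts_seconds([1500, 2000, 1800], 2000.0)  # 1.0 s
```

Under these assumed inputs, breadth costs far less wall clock than depth, which is the trade the paragraph above describes.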

K2 Think, DeepSeek R1, and the frontier

A brief, practical comparison helps situate K2 Think.

Size and complexity

- K2 Think: 32B dense backbone with a post training and test time stack.

- DeepSeek R1: a behemoth by comparison. CNBC cites a 671B parameter footprint across components. This class of system is powerful, but its inference is inherently heavier.

- Frontier closed reasoners: configurations vary, often with larger dense or mixture-of-experts stacks that buy raw capability at the price of higher inference costs.

Training emphasis

- K2 Think: long CoT supervision, RL with verifiable rewards, and plan first agentic prompting. The focus is on verifiable domains and structure.

- DeepSeek R1: extensive reinforcement learning and test time scaling, plus heavy use of synthetic reasoning traces. It catalyzed the field’s focus on long chain training earlier this year.

- Frontier closed models: a blend of supervised traces, preference alignment, and proprietary RL. Details are sparse, but these models increasingly expose thinking modes within rate limits.

Inference and latency

- K2 Think: designed for short chains, parallel attempts, and verifier selection on hardware that can push very high token throughput.

- DeepSeek R1 and frontier peers: impressive accuracy, but often with longer chains and more expensive search. Many deployments reserve long thinking for a small subset of requests.

Openness and deployability

- K2 Think: open weights and reproducible scaffolding. Suitable for on premises, sovereign, or regulated environments where data residency and vendor diversity matter.

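- DeepSeek R1 and most frontier systems: DeepSeek R1’s weights are openly released, but a model of that size is expensive to host yourself, and closed frontier systems are reachable only through hosted APIs. Easier to try, harder to own.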

The takeaway is not that one is strictly better. It is that K2 Think marks a turning point in the cost structure of competitive reasoning. When a tuned 32B model can stand next to giants on hard tests, the strategy map for builders changes.

Agent economics: planning and inference budgets

Most teams now manage two tight budgets: the planning budget and the inference budget.

- Planning budget: how many agentic steps you can afford before users lose patience or costs spiral. Plan-first prompting with strict token caps gives you a knob to allocate thinking time. You can ask for a sparse plan in a few dozen tokens, run a bounded number of attempts, and let a verifier pick the winner.

- Inference budget: how many tokens and how much wall clock you allow for each leg of an agent loop. If your model outputs quickly and your chains are short, you can spend the same budget on breadth rather than depth. Best-of-N with verifiers becomes viable at scale.

K2 Think’s design rewards teams that treat these as first class product settings. It encourages a shift away from single long chain calls toward short planned chains, parallel exploration, and automated selection. That shift can reduce tail latency, lower variance, and create a smoother user experience.
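A minimal sketch of those two budgets as explicit settings follows. The `generate(prompt, max_tokens)` callable is a hypothetical stand-in for whatever inference client you use, and the default caps in `Budget` are placeholders, not values recommended by the K2 Think team.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical client signature: (prompt, max_tokens) -> generated text.
Generate = Callable[[str, int], str]


@dataclass(frozen=True)
class Budget:
    plan_tokens: int = 64        # planning budget: cap on the plan itself
    attempt_tokens: int = 1024   # inference budget: cap per attempt
    attempts: int = 3            # bounded number of attempts


def plan_then_think(question: str, generate: Generate,
                    verify: Callable[[str], bool],
                    budget: Budget = Budget()) -> Optional[str]:
    """Draft a capped plan, then run bounded attempts until one verifies."""
    plan = generate(f"Write a short bullet plan for solving:\n{question}",
                    budget.plan_tokens)
    prompt = (f"Question:\n{question}\n\nPlan:\n{plan}\n\n"
              "Follow the plan and give the final answer.")
    for _ in range(budget.attempts):
        answer = generate(prompt, budget.attempt_tokens)
        if verify(answer):
            return answer
    return None  # budget exhausted without a verified answer
```

The attempts run sequentially here so the loop can stop early; on high-throughput hardware the same attempts could be issued in parallel and handed to a selector instead.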

Sovereign and regulated deployments

Open weights change who gets to build advanced reasoning locally. K2 Think arrives with a clear path to sovereign use cases where sensitive data cannot leave a private boundary. Financial services, healthcare, critical infrastructure, and public sector workloads benefit from models that run on hardware they control, with auditability and reproducibility.

Running on Cerebras adds another dimension. It broadens the hardware menu beyond Nvidia GPUs and hyperscaler offerings. For some buyers, that is about cost. For others, it is about supply chain diversity, locality, and energy profiles. The point is choice. With a performant 32B model and a hardware option that excels at high throughput generation, a wider set of operators can meet regulatory and operational constraints without ceding capability.

Implications for enterprise architecture

A small but strong reasoner touches many layers of the stack.

- Model layer: a 32B checkpoint with long CoT habits will likely sit next to a compact 7B to 14B general model. Use the reasoner for targeted tools and flows rather than as the default chat endpoint. For adjacent context, see “the enterprise agent stack goes mainstream.”

- Orchestration: adopt plan then think prompts as templates. Wrap best of N and verifier selection in first class functions. Treat verifiers as versioned services with dashboards, not as one off scripts.

- Retrieval and tools: keep documents and tools close to the model to reduce round trips. Shorter chains amplify the cost of latency elsewhere, so index, cache, and colocate thoughtfully. Practices from “retrieval-first agents are rising” apply here.

- Hardware and placement: where possible, colocate the reasoner with data and tools. If you use Cerebras for high throughput reasoning, front it with a router that can fall back to a GPU path if needed, and measure end to end time to first useful token, not just tokens per second.

- Governance: open weights make it easier to audit training data boundaries, but they do not absolve risk. Track where verifiers fail, log selection rationales, and apply rate limits to long chains even when they feel cheap. Lessons from FedRAMP High model failover are instructive.
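The router-with-fallback idea from the hardware bullet can be sketched as follows. The `Backend` type is a hypothetical streaming client rather than a real Cerebras or GPU SDK, and time to first chunk is used as a rough proxy for time to first useful token.

```python
import time
from typing import Callable, Iterator, Optional, Sequence, Tuple

# Hypothetical streaming client: prompt -> iterator of text chunks.
Backend = Callable[[str], Iterator[str]]


def route_with_fallback(prompt: str,
                        backends: Sequence[Tuple[str, Backend]]
                        ) -> Tuple[str, str, float]:
    """Try backends in order; return (backend_name, text, seconds_to_first_chunk)."""
    last_error: Optional[Exception] = None
    for name, backend in backends:
        start = time.perf_counter()
        try:
            stream = backend(prompt)
            first = next(stream)  # an empty stream counts as a failure
            seconds_to_first = time.perf_counter() - start
            return name, first + "".join(stream), seconds_to_first
        except Exception as exc:  # any failure moves on to the next backend
            last_error = exc
    raise RuntimeError("all backends failed") from last_error
```

Logging the returned backend name alongside the timing gives you the end-to-end view the bullet asks for, rather than a tokens-per-second figure in isolation.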

How to pilot K2 Think in the next 30 days

If you are an applied team looking to validate the small but mighty approach, a simple plan works.

- Pick two tasks where answers can be checked. Math with tests, code with execution, or structured analysis with canonical outputs. Wire up verifiers first.

- Prototype the plan then think prompt. Limit the plan to a fixed short budget. Ask for the plan as bullet points, then have the model execute it in the next message.

- Run best of three with selection by a checker. Measure accuracy, tokens, and wall clock. The goal is lower variance and predictable latency rather than a single heroic chain.

- Compare to your incumbent. If you use a large frontier reasoner, hold quality constant and measure the budget you save. If you use a smaller model, hold cost constant and measure how much quality improves with planning and verifiers.

- Decide your placement. If you already have access to wafer scale inference, test K2 Think on that path for interactive workloads. Otherwise, confirm that your GPU cluster can maintain the output rate needed to keep chains short.

By the end of 30 days you should know whether a 32B reasoner can replace a larger endpoint in specific flows, or serve as a complementary specialist that lowers unit cost.
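A pilot harness for the steps above can be small. In this sketch `generate` is a hypothetical single-argument client, each task carries its own checker, and whitespace-split word counts stand in for real token counts, which is a crude proxy you would replace with your tokenizer.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Task = Tuple[str, Callable[[str], bool]]  # (prompt, checker)


@dataclass
class PilotResult:
    accuracy: float      # share of tasks where any attempt passed the checker
    mean_tokens: float   # approximate tokens spent per task across all attempts
    mean_seconds: float  # wall clock per task


def run_pilot(tasks: Sequence[Task], generate: Callable[[str], str],
              n: int = 3) -> PilotResult:
    """Run best-of-n on each task and report accuracy, tokens, and wall clock."""
    if not tasks:
        raise ValueError("need at least one task")
    solved = 0
    tokens: List[int] = []
    seconds: List[float] = []
    for prompt, check in tasks:
        start = time.perf_counter()
        attempts = [generate(prompt) for _ in range(n)]
        seconds.append(time.perf_counter() - start)
        tokens.append(sum(len(a.split()) for a in attempts))
        solved += any(check(a) for a in attempts)
    count = len(tasks)
    return PilotResult(solved / count, sum(tokens) / count, sum(seconds) / count)
```

Running the same harness against your incumbent model gives the like-for-like comparison the pilot calls for.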

What to watch next

- Independent, standardized tests where pass rates and attempt budgets are both reported. Accuracy alone is not enough unless you see the cost to reach it.

- Verifier libraries that are robust, language agnostic, and easy to extend. The easier it is to write a checker, the broader RL with verifiable rewards can reach.

- Better plan formats. Shorter, clearer plans reduce downstream chains. Expect templates and tool aware formats to become shared assets.

- Latency budgets that move from rules of thumb to service level objectives. Expect teams to publish time to first token and 95th percentile end to end times for agent loops.
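Two of these reporting ideas are easy to compute yourself. The sketch below uses the widely used unbiased pass@k estimator and a nearest-rank percentile; neither comes from the K2 Think release, and the function names are illustrative.

```python
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Chance that at least one of k samples passes, given c passes in n samples."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # 1 - C(n - c, k) / C(n, k); comb returns 0 when k > n - c.
    return 1.0 - comb(n - c, k) / comb(n, k)


def percentile_nearest_rank(values: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile, e.g. pct=95 for a p95 latency figure."""
    if not values or not 1 <= pct <= 100:
        raise ValueError("need values and 1 <= pct <= 100")
    ordered = sorted(values)
    rank = (pct * len(ordered) + 99) // 100  # ceil(pct * n / 100) in integers
    return ordered[rank - 1]
```

Reporting `pass_at_k` next to the attempt budget `k`, and a p95 next to the mean, shows the cost of reaching a given accuracy rather than the accuracy alone.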

The bottom line

K2 Think is a clear signal that reasoning is entering a small but mighty phase. The model is not small in absolute terms, but it is compact relative to the giants it aims to match. The key is a sober recipe. Teach the model to think out loud with long chains. Optimize where you can check the answer. Plan before you think. Run several short attempts and pick the best. Do it all fast enough that users never notice the machinery.

For enterprises, that combination reshapes the economics of agents and opens the door to deployments that were difficult under closed, hyperscale assumptions. Whether you adopt K2 Think outright or emulate its design, the pattern is now on the table. The next wave of reasoning systems will not only be smarter. They will be right-sized to run.