OpenAI × Databricks: The Enterprise Agent Stack Goes Mainstream

OpenAI’s frontier models now meet Databricks’ lakehouse controls, turning agent pilots into production programs. See how identity, retrieval-to-action, observability, and cost governance align on the data plane, plus build patterns, risk tactics, and a practical 30-60-90 rollout.

Why this partnership matters now

The first wave of enterprise AI agents proved two things at once. They can create real leverage across knowledge work, and they can also run headfirst into governance, reliability, and cost walls. A direct bridge between frontier models and the Databricks data plane changes that: the rails enterprises already trust for security, lineage, and spend finally meet the reasoning power that makes agents useful. Instead of hauling data out to a black box or rebuilding controls from scratch, companies can keep data, policy, and telemetry where they live today while unlocking more capable planning and tool use.

This is not a small shift. It moves the center of gravity for agents from demo environments to the lakehouse and the operational systems that surround it. CIOs get a path to production that maps to existing controls. Builders get permission to plug in richer tools. Finance gets the dials it needs. And security teams get to say yes without holding their breath.

The enterprise agent stack on the lakehouse

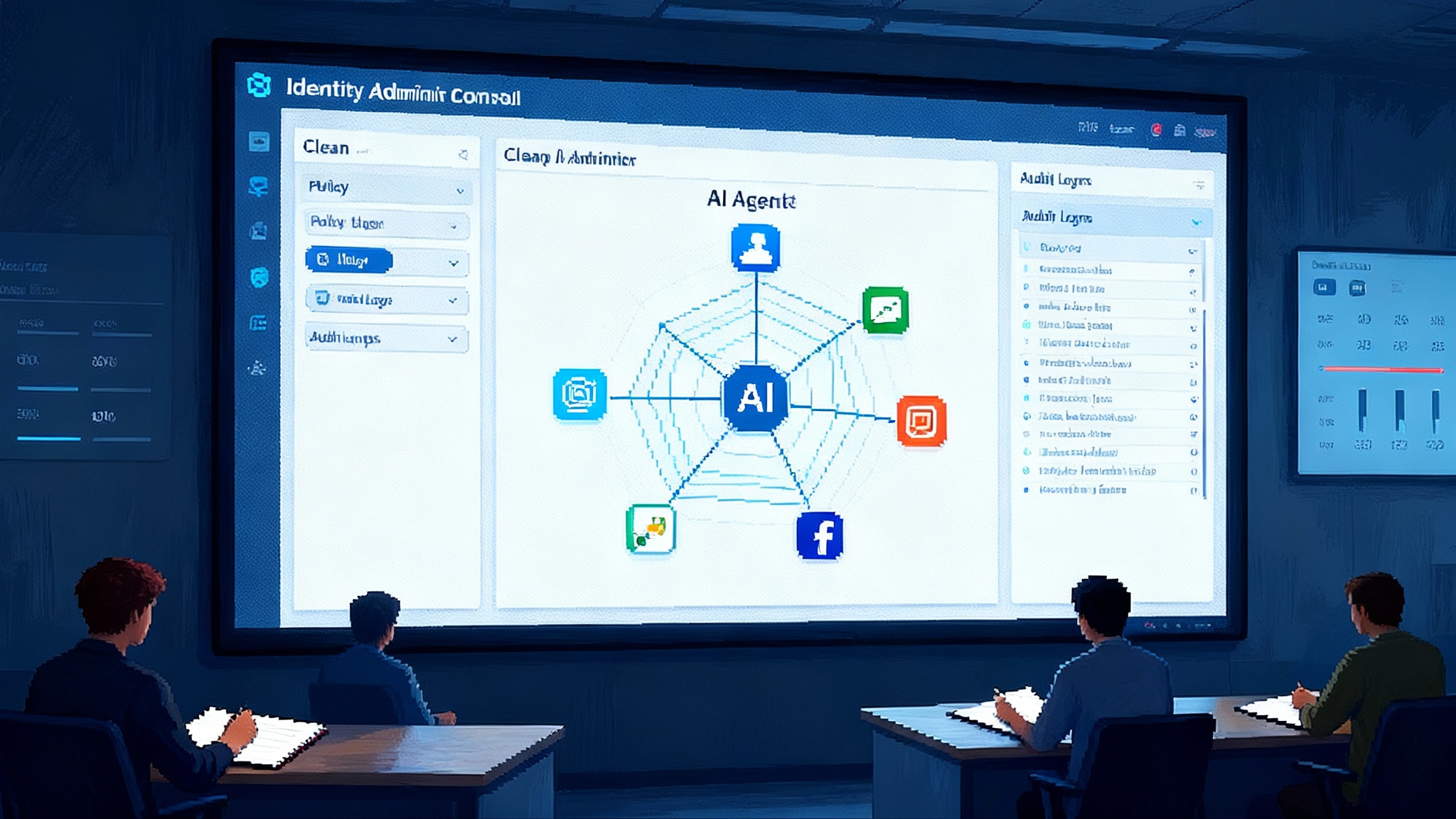

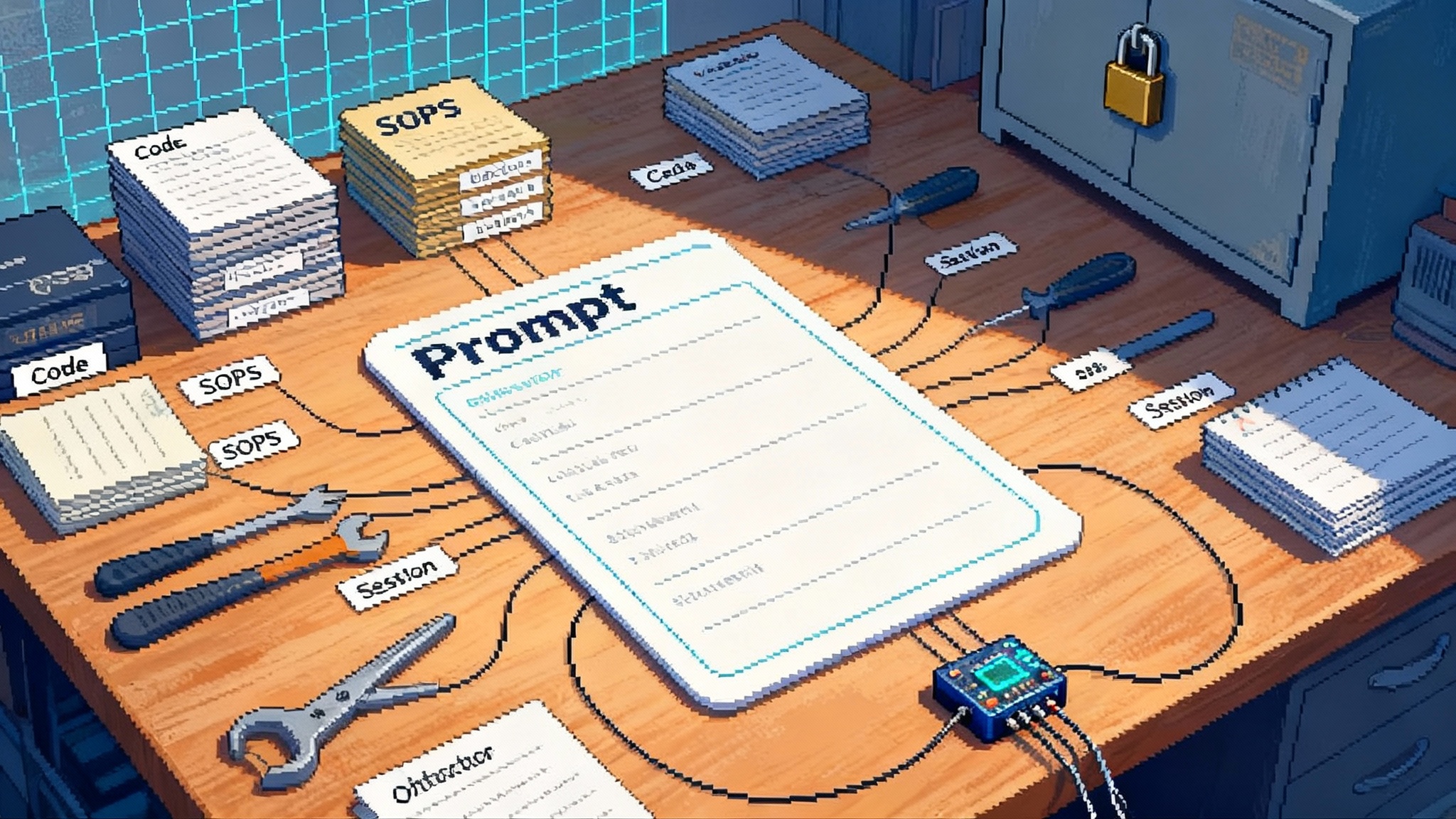

Think of the modern agent stack in four layers that sit on top of the lakehouse and transact with operational systems:

- Identity, access, and governance

  - Service principals for agents, not shared user tokens

  - Role- and attribute-based access mapped to tables, views, and functions

  - Data masking, row filters, and column lineage that propagate into agent calls

  - Secrets and connection configs managed centrally

- Retrieval-to-action pipeline

  - Retrieval routes the agent to the right context: vector stores, SQL, or feature tables

  - Planning composes tools: read, reason, write

  - Guarded execution with reversible writes, human-in-the-loop checkpoints, and transactional boundaries

- Observability and evaluation

  - Traces for prompts, tool calls, intermediate steps, and outputs

  - Quality and risk metrics that are queryable like any other table

  - Continuous evaluation that replays traffic and compares models or prompts

- Cost and performance controls

  - Budgets, rate limits, and quotas per user and per agent

  - Caching and response reuse across teams

  - Hybrid inference policies that match model to task, latency, and sensitivity

With a tight OpenAI link into the Databricks data plane, each layer gets stronger because it is anchored in the controls you already use for analytics and machine learning. The lakehouse is not just the place where your data sits. It becomes the execution ledger for agent behavior.

Secure data access that security can sign off

Enterprises do not run agents on hope and prayer. They run them on scoped identities and least-privilege policies. Treat identity as the control plane and bind every agent to explicit roles. The practical move is to mint a service principal per agent, grant it Unity Catalog-style permissions, and route all external tool connections through managed secrets. That yields three immediate wins:

- Deterministic access: every query, retrieval, or write can be traced back to a role and a policy. No ghost privileges from copied API keys.

- Enforceable masking: sensitive columns can be tokenized or hashed in the retrieval layer, preventing overexposure during context assembly.

- Auditable lineage: reads and writes show up in lineage graphs so you know which agent touched which tables before a human hits approve.
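Enforceable masking can be as simple as replacing sensitive values with a keyed hash before anything is assembled into a prompt. The Python sketch below illustrates the idea and is not a Databricks API. It assumes two things:

- The sensitive column names come from catalog tags. They are hard-coded here as a hypothetical `SENSITIVE_COLUMNS` set.
- The key would be loaded from a managed secret.

```python
import hashlib
import hmac

# Hypothetical: in practice these labels would come from catalog tags.
SENSITIVE_COLUMNS = {"email", "ssn"}


def tokenize(value: str, key: bytes) -> str:
    """Replace a value with a stable keyed hash so joins still work but raw data never reaches the prompt."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def mask_row(row: dict, key: bytes) -> dict:
    """Return a copy of the row with sensitive, non-null columns tokenized before context assembly."""
    return {
        col: tokenize(str(val), key) if col in SENSITIVE_COLUMNS and val is not None else val
        for col, val in row.items()
    }


if __name__ == "__main__":
    key = b"demo-key"  # hypothetical; load from a managed secret in practice
    row = {"customer_id": 42, "email": "ana@example.com", "region": "EU"}
    print(mask_row(row, key))
```

A keyed hash (HMAC) is used rather than a plain hash for two reasons. Tokens stay stable, so joins still work. Without the key, nobody can recover values by hashing a dictionary of common emails or IDs.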

Security teams also get a simple review artifact: a policy pack that lists which tables, views, vector indexes, and external tools the agent may call. That artifact can go through the same change control as a data product.
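A policy pack can be a small, deny-by-default data structure that reviewers read and the runtime enforces. This is a hypothetical Python sketch. The `PolicyPack` class, its fields, and the resource names are illustrative assumptions. In practice the same content might live in a versioned YAML or JSON file reviewed alongside catalog grants.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PolicyPack:
    """Hypothetical review artifact: everything one agent's service principal may call."""

    agent: str
    version: str
    tables: frozenset = field(default_factory=frozenset)
    vector_indexes: frozenset = field(default_factory=frozenset)
    tools: frozenset = field(default_factory=frozenset)

    def allows(self, kind: str, name: str) -> bool:
        """Deny by default: anything not explicitly listed is refused."""
        allowed = {
            "table": self.tables,
            "vector_index": self.vector_indexes,
            "tool": self.tools,
        }.get(kind, frozenset())
        return name in allowed


if __name__ == "__main__":
    pack = PolicyPack(
        agent="contract-analysis",
        version="v3",
        tables=frozenset({"legal.contracts_redacted"}),
        vector_indexes=frozenset({"legal.contract_chunks_idx"}),
        tools=frozenset({"create_ticket"}),
    )
    print(pack.allows("table", "legal.contracts_redacted"))  # True
    print(pack.allows("table", "legal.contracts_raw"))  # False: not in the pack
```

Because the pack is frozen and carries a version, any change produces a new artifact. That artifact can go through the same review as any other data product change.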

Retrieval to action without leaving the guardrails

Most enterprise wins happen when an agent does two things in one flow: retrieve authoritative context and then take a bounded action. The pattern looks like this:

- Intent router: a small, fast model routes requests to skills such as analytics Q&A, contract analysis, or ticket triage.

- Context assembly: the skill uses a hybrid retrieval plan that can stitch together vector search over documents, SQL over curated views, and feature lookups. Each step runs with scoped credentials.

- Planner: the frontier model builds a plan with explicit tool calls. Plans are validated against a schema and a policy checker before anything executes.

- Executor: tools run in a secure compute context. Writes go through staged tables or APIs with compensating transactions, so rollbacks are possible.

- Confirmation gates: high-risk actions pause for human confirmation with a compact summary and a diff of the proposed changes.
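The planner and confirmation-gate steps can be sketched as a single validation pass that runs before any tool executes. In this minimal Python sketch, the tool names, required arguments, and risk levels in `TOOLS` are hypothetical. A production checker would more likely pair a JSON Schema validator with your own policy engine.

```python
from dataclasses import dataclass

# Hypothetical tool registry: name -> (required argument names, risk level).
TOOLS = {
    "sql_read": ({"query"}, "low"),
    "stage_update": ({"table", "rows"}, "medium"),
    "post_to_erp": ({"payload"}, "high"),
}


@dataclass
class Verdict:
    ok: bool
    errors: list
    needs_confirmation: list  # indexes of steps that must pause for a human


def validate_plan(plan: list, allowed_tools: set) -> Verdict:
    """Check every step's shape and policy before anything executes."""
    errors, gated = [], []
    for i, step in enumerate(plan):
        tool, args = step.get("tool"), step.get("args")
        if tool not in TOOLS:
            errors.append(f"step {i}: unknown tool {tool!r}")
            continue
        if tool not in allowed_tools:
            errors.append(f"step {i}: tool {tool!r} not allowed for this agent")
            continue
        if not isinstance(args, dict):
            errors.append(f"step {i}: args must be an object")
            continue
        required, risk = TOOLS[tool]
        missing = required - set(args)
        if missing:
            errors.append(f"step {i}: missing args {sorted(missing)}")
        if risk == "high":
            gated.append(i)  # valid but risky: pause for human confirmation
    return Verdict(ok=not errors, errors=errors, needs_confirmation=gated)
```

The steps listed in `needs_confirmation` are where the agent would pause and show a human the summary and diff.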

Because the agent is operating inside the lakehouse guardrails, you can add a reversal path. If an action drifts outside the policy envelope, the system cancels or reverts it and captures a trace for evaluation.
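One way to build the reversal path resembles the saga pattern. Each write is paired with a compensating action, and a failure or policy breach unwinds the completed steps in reverse order. The Python sketch below is a minimal illustration under that assumption. The in-memory `log` list stands in for the trace you would persist, and real compensations would call your staging tables or APIs.

```python
from typing import Callable, List, Tuple

# Each step pairs a forward action with its compensating (undo) action.
Step = Tuple[str, Callable[[], object], Callable[[], object]]


def run_with_compensation(steps: List[Step], log: list) -> bool:
    """Execute steps in order; if one fails, undo completed steps in reverse order."""
    done: List[Step] = []
    for name, do, undo in steps:
        try:
            do()
            done.append((name, do, undo))
            log.append(f"ok {name}")
        except Exception as exc:  # tool error or policy violation raised by the step
            log.append(f"failed {name}: {exc}")
            for prev_name, _, prev_undo in reversed(done):
                prev_undo()
                log.append(f"reverted {prev_name}")
            return False
    return True
```

A production executor must also handle a compensation that itself fails, typically by flagging the request for human follow-up.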

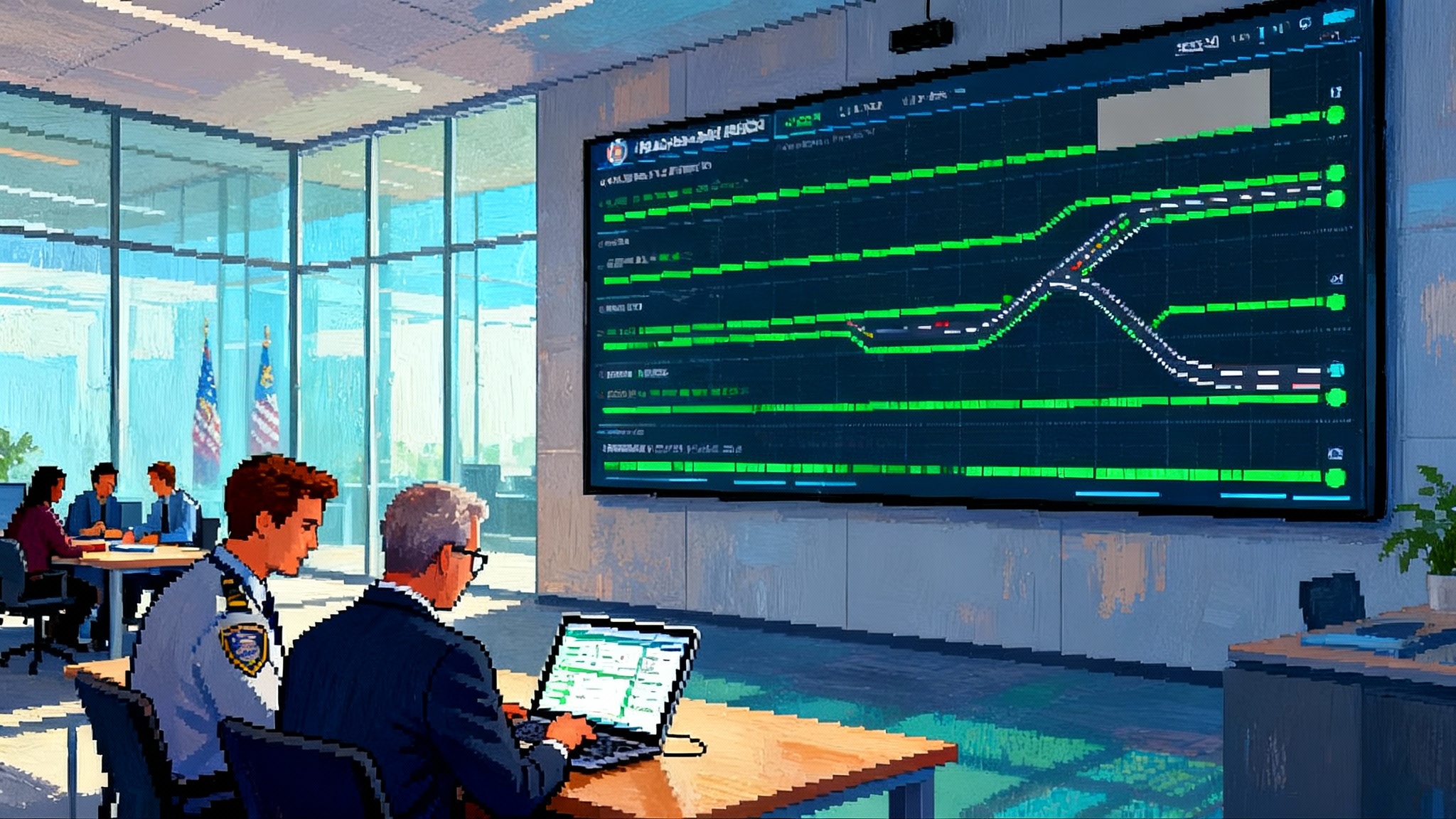

Observability that answers hard questions

Production agents live or die by the ability to answer simple questions fast: what happened, why, and how much did it cost. The observability model for agents on Databricks should capture five classes of telemetry as first-class tables:

- Requests: user, skill, inputs, and derived risk signals

- Traces: prompts, tool calls, intermediate thoughts, and model outputs with token counts

- Retrievals: which indexes, tables, and documents were consulted and why they were chosen

- Actions: every write with success or failure codes and references to staging records

- Costs: model time, tokens, tool time, and downstream service charges

Point all of this at the same catalog where the rest of your analytics lives. Now you can answer audit questions directly. How many times did the contract analysis agent look at unredacted PII last week? Which prompts generated the most escalations? Where did prompt injection attempts originate? Observability becomes analysis, not art.
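In a lakehouse these would be governed tables queried with SQL. To keep the example self-contained, the Python sketch below uses the standard library's `sqlite3` as a stand-in. The `retrievals` table, its columns, and the sample rows are hypothetical.

```python
import sqlite3
from datetime import datetime, timedelta, timezone

conn = sqlite3.connect(":memory:")
conn.execute(
    """CREATE TABLE retrievals (
           request_id TEXT, agent TEXT, source TEXT,
           contains_pii INTEGER, masked INTEGER, ts TEXT)"""
)

# Sample telemetry rows; timestamps are ISO 8601 strings in UTC, so they compare lexically.
now = datetime.now(timezone.utc)
rows = [
    ("r1", "contract_analysis", "legal.contracts", 1, 0, (now - timedelta(days=2)).isoformat()),
    ("r2", "contract_analysis", "legal.contracts", 1, 1, (now - timedelta(days=3)).isoformat()),
    ("r3", "contract_analysis", "legal.contracts", 1, 0, (now - timedelta(days=20)).isoformat()),
    ("r4", "ticket_triage", "support.tickets", 1, 0, (now - timedelta(days=1)).isoformat()),
]
conn.executemany("INSERT INTO retrievals VALUES (?, ?, ?, ?, ?, ?)", rows)

# Audit question: how often did the contract analysis agent read unmasked PII in the last 7 days?
since = (now - timedelta(days=7)).isoformat()
(count,) = conn.execute(
    """SELECT COUNT(*) FROM retrievals
       WHERE agent = ? AND contains_pii = 1 AND masked = 0 AND ts >= ?""",
    ("contract_analysis", since),
).fetchone()
print(count)  # → 1
```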

Cost controls that actually bite

Enterprises never sign blank checks for inference. Practical controls sit at three layers:

- Policy layer: enforce per-user and per-agent budgets, concurrency limits, and max tokens. Set per-skill latency SLOs so that cost and experience become explicit trade-offs.

- Planning layer: prefer tools that narrow the problem before asking a large model to write a long answer. Summarize, filter, and retrieve before generating. Use small models for routing and classification.

- Serving layer: add caching, shared answer stores, and distillation. Cache final answers to common questions. Distill long multi-step planners into shorter flows once they are stable. Switch off streaming for low-value use cases.
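The policy-layer budgets can be enforced by a guard that runs before every model call. This is a hypothetical Python sketch with some simplifications:

- `TokenBudget` and `BudgetExceeded` are illustrative names.
- The counters live in memory.
- It charges an estimated token count up front, because the true count is known only after the call.

```python
from collections import defaultdict


class BudgetExceeded(Exception):
    """Raised before a model call that would break a budget or cap."""


class TokenBudget:
    """Hypothetical policy-layer guard: per-user and per-agent token budgets plus a per-call cap."""

    def __init__(self, per_user: int, per_agent: int, max_tokens_per_call: int):
        self.per_user = per_user
        self.per_agent = per_agent
        self.max_tokens_per_call = max_tokens_per_call
        self.used_by_user = defaultdict(int)
        self.used_by_agent = defaultdict(int)

    def charge(self, user: str, agent: str, tokens: int) -> None:
        """Reserve tokens for a call, or raise before the model is ever invoked."""
        if tokens > self.max_tokens_per_call:
            raise BudgetExceeded(f"call requests {tokens} tokens, cap is {self.max_tokens_per_call}")
        if self.used_by_user[user] + tokens > self.per_user:
            raise BudgetExceeded(f"user {user} budget exhausted")
        if self.used_by_agent[agent] + tokens > self.per_agent:
            raise BudgetExceeded(f"agent {agent} budget exhausted")
        # Only record usage once every check has passed.
        self.used_by_user[user] += tokens
        self.used_by_agent[agent] += tokens
```

A real deployment would also need to:

- persist the counters;
- reset them per billing window;
- reconcile estimates against actual usage recorded in the cost tables.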

Tie these controls to finance through a shared dashboard. For transaction-heavy or revenue-touching flows, add a trust layer for AI agent commerce so that authorization and settlement are centralized and auditable. Cost volatility drops when the organization sees exactly how behavior maps to spend.

Concrete build patterns for CIOs and teams

Below are patterns that have proven both effective and acceptable to risk owners. They do not require heroics, and they align with lakehouse controls.

- Agent evaluation in the loop

  - Golden set: build a versioned dataset of real conversations and tasks with correct answers and allowed actions. Mask sensitive content where needed, but keep enough context to test policies.

  - Offline replay: every change to prompts, tools, or models triggers a replay across the golden set with metrics such as task success, policy violations, hallucination rate, and escalation rate.

  - Counterfactual tests: inject synthetic edge cases such as prompt injection, toxic input, and ambiguous policy boundaries. Score not just correctness but safety margins.

  - Shadow deploy: route 5 percent of real traffic to a new plan in parallel and compare outcomes before promotion.

- Guardrails that fail safe, not just fail closed

  - Input validation: reject or redact inputs that contain PII, secrets, or high-risk patterns before they ever hit a model.

  - Plan validation: require tool call plans to pass a schema and a policy check. If a plan fails, the agent offers a safe alternative such as a read-only report or a human escalation.

  - Output validation: verify that generated SQL, code, or API calls compile and conform to allowlists. Add an automatic EXPLAIN step for generated SQL to detect full table scans or cross joins.

  - Human approval ladders: map risk levels to approval flows so that high-impact changes require explicit consent.

- Lineage that closes the loop

  - Data lineage: every retrieval and write stamps a lineage ID that ties back to the request. This ID attaches to the data artifacts created or modified by the agent.

  - Model lineage: track prompts, versions, and system instructions through a registry. Include tool versions and policy bundle versions.

  - Decision lineage: for regulated processes, store the chain of thought that matters for compliance as structured rationales, not free-form monologues. Keep sensitive internal reasoning private while recording the minimal sufficient explanation.

- Hybrid inference that matches task to trust

  - Private by default: run routing, classification, and retrieval assembly on smaller models that can live in your VPC. They are fast, cheap, and good enough for control tasks.

  - Frontier when it counts: call frontier models for planning, complex generation, and sparse reasoning when the task benefits. Strictly control which data crosses the boundary, and prefer abstracted features or summaries over raw records.

  - Policy-aware routing: create rules that route based on sensitivity labels, geography, or customer segment. For example, do not send raw EU personal data to external services, only derived signals.

  - Fallbacks: set deterministic fallbacks for outages or quota exhaustion. Failure states should be safe and legible to end users.
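The hybrid inference rules can start as a small, deterministic routing function. In this Python sketch, the model tier names, sensitivity labels, and `Request` fields are hypothetical. In practice the labels would come from catalog tags. Returning a reason with each decision is one way to keep fallbacks legible to users and auditable in traces.

```python
from dataclasses import dataclass

# Hypothetical model tiers; names are placeholders, not real endpoints.
PRIVATE_SMALL = "private-small"
FRONTIER = "frontier-external"

# Control tasks always stay on the private tier.
CONTROL_TASKS = {"route", "classify", "retrieval_assembly"}


@dataclass
class Request:
    task: str  # e.g. "route", "plan", "generate"
    sensitivity: str  # catalog label, e.g. "public", "internal", "restricted"
    region: str  # residency of the records involved
    raw_personal_data: bool  # True if the payload carries raw personal records


def choose_model(req: Request, frontier_available: bool = True) -> tuple:
    """Private by default; frontier only when the task benefits and policy allows."""
    if req.task in CONTROL_TASKS:
        return PRIVATE_SMALL, "control task"
    if req.sensitivity == "restricted":
        return PRIVATE_SMALL, "restricted data stays private"
    if req.region == "EU" and req.raw_personal_data:
        return PRIVATE_SMALL, "raw EU personal data stays private"
    if not frontier_available:
        # Deterministic fallback on outage or quota exhaustion, flagged for the user.
        return PRIVATE_SMALL, "frontier unavailable: degraded answer"
    return FRONTIER, "task benefits from frontier model"
```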

What changes competitively

Three markets feel this immediately: data clouds, foundation model platforms, and enterprise application ecosystems.

- Snowflake: Snowflake has invested heavily in bringing models to the data and encouraging native apps that run close to governed data. A tighter OpenAI path into the lakehouse raises the bar on unified lineage, retrieval, and action inside analytics platforms. The battleground will be who makes retrieval to action simplest without sacrificing governance. Expect acceleration in vector search quality, native evaluation tooling, and policy aware app frameworks.

- AWS Bedrock: Bedrock already integrates widely across AWS services, knowledge bases, and guardrails. The pressure will be around lakehouse neutrality and ease of tapping controlled analytics assets in complex enterprises. Expect deeper patterns that blur the line between knowledge bases and enterprise feature stores, plus stronger cost and quota governance as standard features.

- Anthropic: Anthropic will emphasize reliability, harmlessness, and tool use correctness, and deepen partnerships that embed Claude into existing enterprise control planes. Expect a focus on high assurance patterns for regulated industries, with strong red teaming artifacts out of the box.

In short, the center of competition shifts from isolated model quality to end-to-end managed agent stacks. Whoever can deliver secure retrieval to action with a clear cost and reliability story will win executive trust.

Near term opportunities to seize

CIOs do not need to wait for the perfect platform to harden. The partnership creates immediate openings where the control surface is already strong and the risk is manageable:

- Finance close copilots: agents that reconcile transactions, flag anomalies, and draft variance explanations using governed ledgers and forecast models.

- Sales ops copilots: quote desk helpers that validate configurations, assemble just-in-time legal language, and write back clean records to CRM with human checkpoints.

- Customer support deflection: build retrieval-first support agents that answer complex product questions from knowledge bases and turn solved tickets into new training data.

- Procurement agents: policy-guided RFQ summarization, supplier risk checks, and auto-generated redlines that route to legal only when material changes appear.

- DevX copilots: engineering knowledge agents that retrieve from design docs, runbook wikis, and code search to propose safe remediations, gated by code owners.

- Governance workflows: agents that monitor policy drift, flag over privileged service accounts, and suggest safe remediations with diffs.

- Data quality bots: agents that watch freshness, null rates, and distribution changes in critical tables, open tickets with suggested fixes, and track resolution.

Pick one or two workflows per domain where success looks like measurable cycle time reduction and lower escalations, not flashy demos.

A 30-60-90 day plan

Day 0 to 30: establish the rails

- Create service principals for agents and map them to minimal roles

- Stand up an evaluation harness with a golden set and offline replay

- Define a policy bundle that covers retrieval sources, tool allowlists, and approval ladders

- Turn on tracing and cost tables and agree on a spend budget with finance
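The first-month evaluation harness can start small: replay a golden set through a candidate agent and aggregate a few of the metrics the golden-set pattern calls for. In this Python sketch:

- `GoldenCase`, `AgentResult`, and `replay` are hypothetical names.
- Exact-match scoring is a deliberately crude placeholder for whatever grader fits your tasks.
- Hallucination rate is left out, because it usually needs a model-based or human grader.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class GoldenCase:
    prompt: str
    expected_answer: str
    allowed_actions: frozenset


@dataclass
class AgentResult:
    answer: str
    actions: List[str]
    escalated: bool


def replay(cases: List[GoldenCase], agent: Callable[[str], AgentResult]) -> Dict[str, float]:
    """Run every golden case through a candidate agent and aggregate simple rates."""
    success = violations = escalations = 0
    for case in cases:
        result = agent(case.prompt)
        if result.answer.strip() == case.expected_answer.strip():
            success += 1
        if any(action not in case.allowed_actions for action in result.actions):
            violations += 1
        if result.escalated:
            escalations += 1
    n = max(len(cases), 1)  # avoid division by zero on an empty set
    return {
        "task_success": success / n,
        "policy_violation_rate": violations / n,
        "escalation_rate": escalations / n,
    }
```

Running `replay` for both the current and the candidate configuration gives two metric dictionaries that can be compared before promotion.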

Day 31 to 60: ship one retrieval-to-action agent

- Choose a workflow with reversible writes and clear success metrics

- Build the intent router, context assembly, plan validator, and executor in the lakehouse

- Pilot with 20 to 50 users and iterate weekly using replay results

- Add caching and finalize your fallback plan
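Caching final answers needs careful scoping, because an answer built from one user's permissions should not be served to another. This hypothetical Python sketch keys each entry by skill, an access scope, and a normalized question, and it expires entries after a TTL. The scope could be, for example, the caller's role plus the policy bundle version. `AnswerCache` and its parameters are illustrative, not a library API.

```python
import time
from typing import Callable, Dict, Optional, Tuple


def normalize(question: str) -> str:
    """Cheap normalization so trivially different phrasings share a cache entry."""
    return " ".join(question.lower().split())


class AnswerCache:
    """Cache final answers per (skill, access scope, normalized question) with a TTL."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._store: Dict[Tuple[str, str, str], Tuple[float, str]] = {}

    def get(self, skill: str, scope: str, question: str) -> Optional[str]:
        key = (skill, scope, normalize(question))
        hit = self._store.get(key)
        if hit is None:
            return None
        stored_at, answer = hit
        if self.clock() - stored_at > self.ttl:
            del self._store[key]  # expired: force a fresh, policy-checked answer
            return None
        return answer

    def put(self, skill: str, scope: str, question: str, answer: str) -> None:
        self._store[(skill, scope, normalize(question))] = (self.clock(), answer)
```

Including the policy bundle version in the scope means a policy change automatically stops old answers from being served.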

Day 61 to 90: scale responsibly

- Expand to a second skill in a different domain to test generality

- Introduce hybrid inference routing policies tied to sensitivity labels

- Tighten cost controls and distill stable plans into cheaper paths

- Prepare a security and compliance review package with lineage, policy, and evaluation artifacts

Risks and how to handle them

- Prompt injection and data exfiltration: treat all retrieved content as untrusted. Sanitize it, constrain tools, and validate plans. For high-risk skills, require human confirmation for any action that writes outside a quarantine zone.

- Governance drift: keep policy bundles in version control and require approvals for changes. Watch for silent permission creep through periodic access reviews.

- Overreliance on a single model: hedge with hybrid inference and keep alternate plans warm. Store traces and prompts in a portable format.

- Cost blowouts: set budgets on day one, monitor token use and tool time per skill, and push for smaller models in routing and summarization.
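At its core, the periodic access review for governance drift is a set difference: privileges actually granted, minus privileges the version-controlled policy bundle declares. In this Python sketch the privilege strings and principal names are hypothetical. In practice, `granted` would come from your platform's grant listings rather than a hard-coded dict.

```python
from typing import Dict, Set


def permission_drift(
    declared: Dict[str, Set[str]], granted: Dict[str, Set[str]]
) -> Dict[str, Set[str]]:
    """Return, per principal, privileges held beyond what its policy bundle declares."""
    drift: Dict[str, Set[str]] = {}
    for principal, grants in granted.items():
        extra = grants - declared.get(principal, set())
        if extra:
            drift[principal] = extra
    return drift


if __name__ == "__main__":
    declared = {"sp-contract-agent": {"SELECT:legal.contracts_redacted"}}
    granted = {
        "sp-contract-agent": {"SELECT:legal.contracts_redacted", "MODIFY:legal.contracts"},
        "sp-unreviewed-agent": {"SELECT:hr.salaries"},
    }
    print(permission_drift(declared, granted))
```

A principal missing from the bundle entirely shows up with all of its grants, which is usually the most urgent finding.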

What good looks like in six months

A mature enterprise agent program will have fewer moving parts than it started with. The platform cuts noise by codifying guardrails, logging, and cost controls once and then reusing them across dozens of skills. Developers spend time on business logic, not bespoke security. Security sees the same audit trails it trusts in analytics. Finance sees predictable trends, not spiky bills. Most importantly, users trust the agents because they are precise, reversible, and polite about asking for help when uncertain.

The bottom line

Agents go mainstream in the enterprise when they can read, reason, and write without leaving the governance perimeter or blowing up the budget. A deeper connection between frontier models and the Databricks data plane closes that loop. It gives CIOs a credible path to scale and builders a clear playbook. Start with one retrieval-to-action workflow, measure everything, and let the rails do the heavy lifting. The payoff is not just better answers. It is better operations.