Azure Agent Ops Goes GA: From Demos to Governed Workloads

Azure AI Foundry’s Agent Service is now generally available. It adds bring-your-own thread storage, run-level observability, Fabric integration, MCP tools, and Deep Research so enterprises can move from flashy demos to governed, auditable workloads. Here is what shipped, why it matters, and how to launch in 90 days.

The moment enterprise agents have been waiting for

The past two years have been a parade of agent demos. They booked travel, wrote emails, and even chained tools together on stage. Then most of them stalled in security reviews. What changed in May 2025 is not a new trick. It is a production layer. Azure AI Foundry’s Agent Service reached general availability and shipped the operational scaffolding that security, risk, and platform teams have been asking for: bring-your-own thread storage for compliant memory, run-level observability through Azure Monitor, native hooks for Microsoft Fabric data tooling, Model Context Protocol tool support, and the Deep Research capability for multi-step investigations. For context on cross-vendor standards, see our take on a common language for enterprise agents.

If you run a regulated workload, those nouns unlock verbs. You can route memory into your tenant’s storage with retention and eDiscovery. You can watch agent runs like you watch microservices. You can govern tools and data like any other Azure workload. The practical outcome is simple. Agents move from prototypes to governed software. For lessons from a highly regulated deployment, review these bank‑grade pilot insights.

What actually shipped and why it matters

Here is the shift in plain language, with examples you can map to familiar systems:

-

BYO thread storage: Treat agent memory like application state, not a black box. Store message histories and tool results in your own data store. That can be an Azure Storage account, a Microsoft Fabric Lakehouse, or a database already covered by your retention and legal hold policies. You choose the encryption at rest, the region, the lifecycle rules, and the access via managed identities. The benefit is auditability and data minimization by default.

-

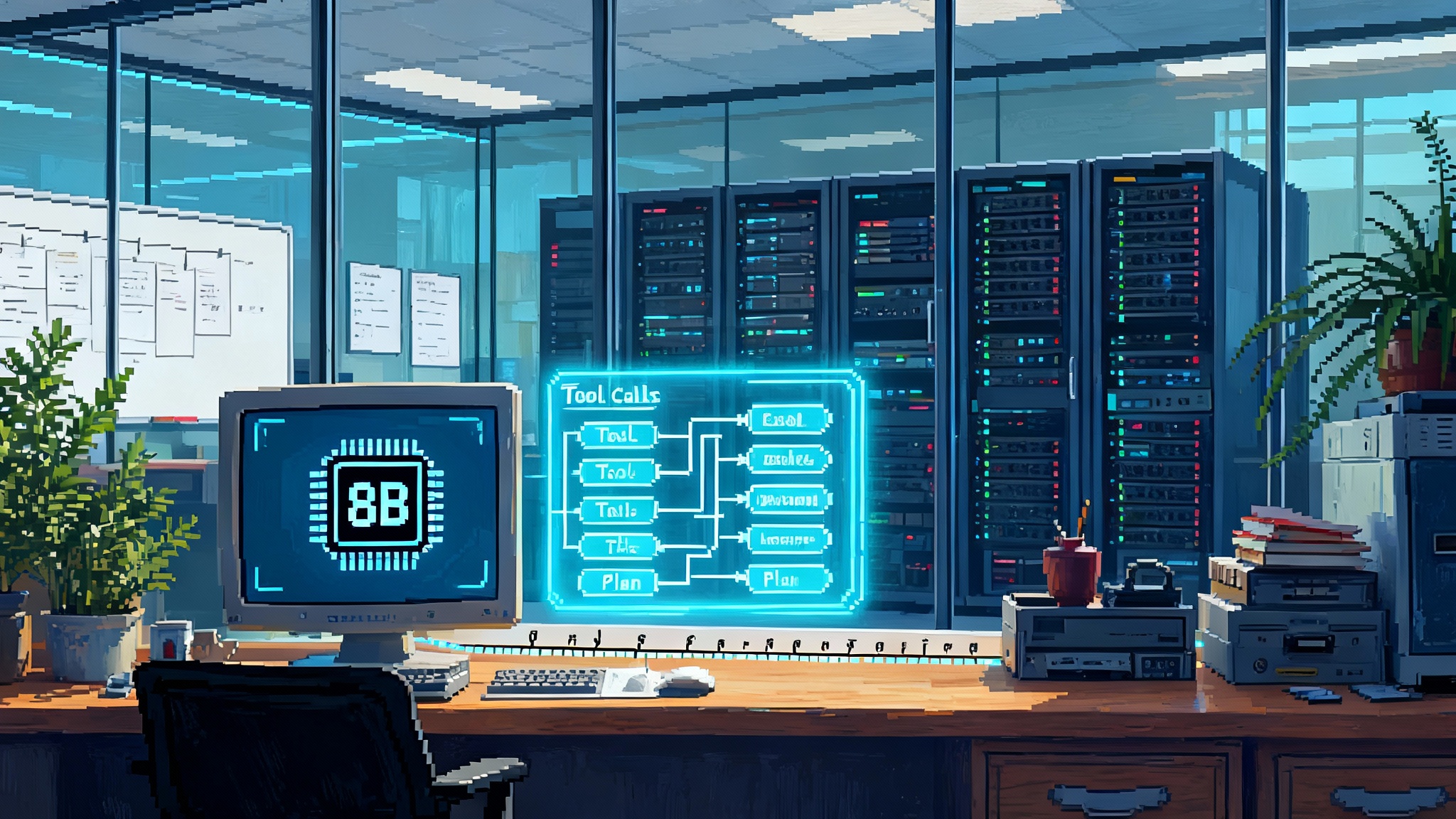

Run-level observability: The service emits structured telemetry per run. Think of it as application performance monitoring for autonomous workflows. A single run can include prompt construction, tool calls, retrieval steps, function outputs, and model responses. Each step carries a correlation identifier, a duration, a token count, a latency, and a success flag. You can query runs in Azure Monitor, build Kusto queries, and trigger alerts when tool success dips or tail latency spikes.

-

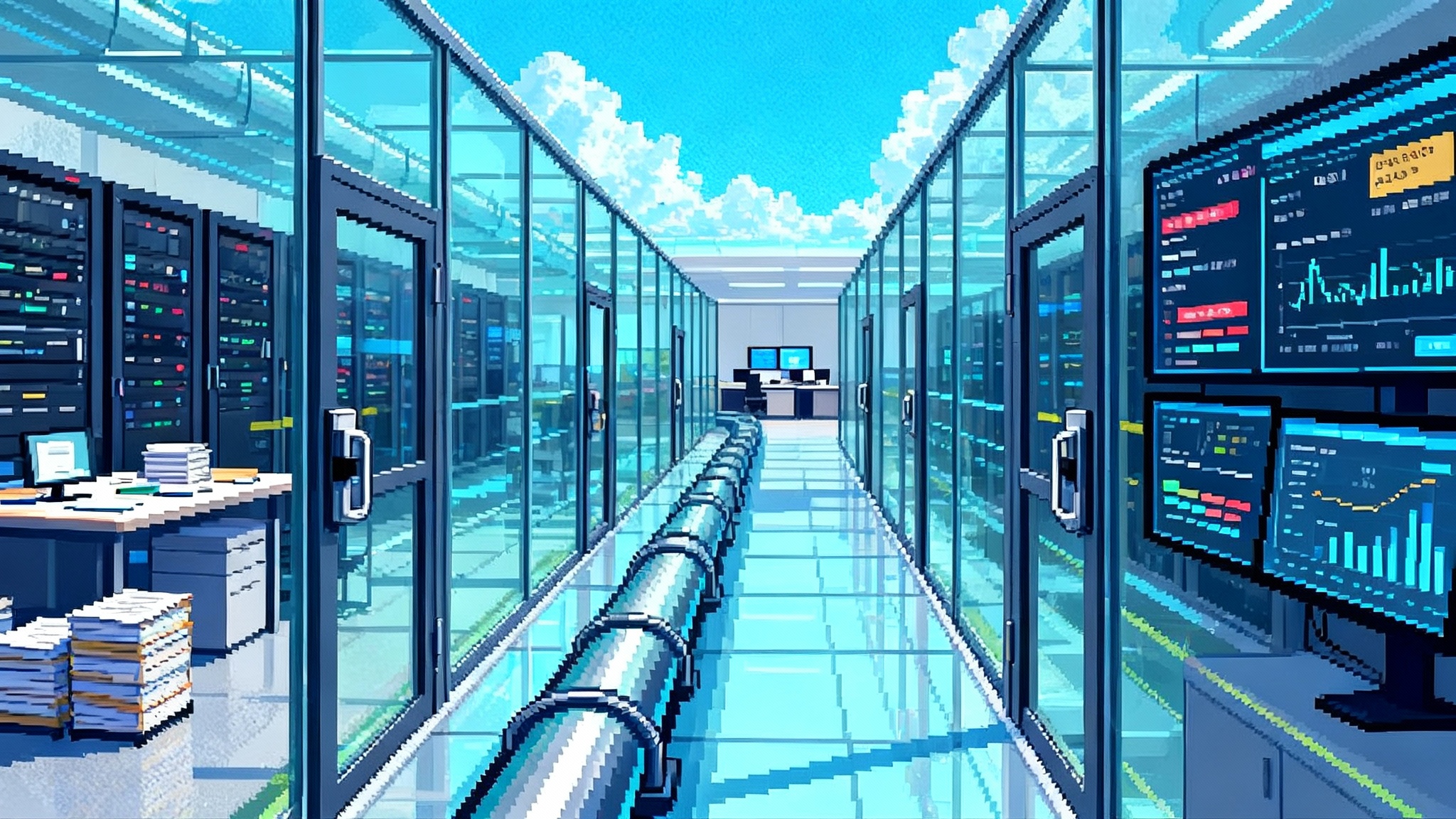

Microsoft Fabric integration: Agents rarely work alone. They fetch data, synthesize reports, and feed analytics. With Fabric, you can land agent outputs in a Lakehouse, orchestrate cleaning jobs in Data Factory, and visualize run health in Power BI. The tight loop between agent actions and data engineering is what makes this feel like a first-class workload.

-

MCP tool support: Model Context Protocol gives you a standard way to describe tools, resources, and their security. With MCP, you can register a tool one time, describe inputs and outputs, and centrally control access. If your risk team has asked for a catalog of approved tools and a policy for who can call them, this is how you implement it.

-

Deep Research: This capability lets agents plan, browse, cite, and revise across multiple hops. It looks like a careful analyst doing desk research, not a single prompt. Deep Research combines tool calling, scratchpad planning, and iterative verification. When connected to your internal search or data hub, it becomes a repeatable research assistant with logs you can review.

Together, these pieces transform an agent from a clever prompt into a service with memory, metrics, governance, and repeatability. That is the difference between a demo and a deployment.
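To make the per-step telemetry concrete, here is a minimal sketch of how step records could be rolled up into per-tool success rate and P95 latency. The `StepRecord` fields and the `summarize_by_tool` helper are illustrative assumptions, not the service's actual schema; in practice this aggregation would more likely be a Kusto query over your Log Analytics workspace.

```python
from collections import defaultdict
from dataclasses import dataclass
from math import ceil


@dataclass(frozen=True)
class StepRecord:
    """One step of an agent run. Field names are hypothetical, not the service schema."""
    run_id: str          # correlation identifier shared by every step in a run
    tool: str            # tool or stage that produced this step
    duration_ms: float   # wall-clock time for the step
    tokens: int          # tokens consumed by the step
    success: bool        # whether the step completed without error


def p95(values):
    """Nearest-rank 95th percentile of a non-empty list."""
    ordered = sorted(values)
    rank = ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def summarize_by_tool(steps):
    """Group steps by tool and report call count, success rate, P95 latency, and tokens."""
    grouped = defaultdict(list)
    for step in steps:
        grouped[step.tool].append(step)
    summary = {}
    for tool, records in grouped.items():
        summary[tool] = {
            "calls": len(records),
            "success_rate": sum(r.success for r in records) / len(records),
            "p95_ms": p95([r.duration_ms for r in records]),
            "tokens": sum(r.tokens for r in records),
        }
    return summary


# Made-up sample data for illustration only.
steps = [
    StepRecord("run-1", "search", 120.0, 300, True),
    StepRecord("run-1", "search", 480.0, 280, False),
    StepRecord("run-2", "search", 150.0, 310, True),
    StepRecord("run-2", "ticketing", 90.0, 0, True),
]
report = summarize_by_tool(steps)
```

The same shape works as the input to an alert rule: compare `success_rate` and `p95_ms` per tool against whatever thresholds your team agrees on.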

Where Azure’s approach fits against NVIDIA NIM/NeMo and Salesforce Agentforce

Three strategies for enterprise agents have emerged.

- Azure AI Foundry Agent Service: Cloud-native agent runtime with first-party integrations for identity, networking, storage, and monitoring. Strong fit if you already standardize on Azure Policy, Private Link, and managed identities. Designed to be model-agnostic, including small language models and large language models hosted on Azure. If your agent surface area includes productivity suites, explore how Copilot’s new modes in Office enable agents in daily tools.

- NVIDIA NIM and NeMo: Optimized inference and model tooling for those who own the hardware path. NIM provides containerized microservices for inference with performance features like TensorRT accelerations. NeMo covers model development, guardrails, and retrieval. Great fit if you want consistent serving across on premises and cloud with control over GPUs and latency.

- Salesforce Agentforce: Agents inside the Salesforce platform. Deeply integrated with Customer Relationship Management objects, Data Cloud, Einstein trust controls, and Flow automation. Strong fit when the center of gravity is sales, service, and marketing operations and you want agents that live next to your records and automations.

A quick side-by-side to anchor the differences:

| Dimension | Azure AI Foundry Agent Service | NVIDIA NIM/NeMo | Salesforce Agentforce |
|---|---|---|---|
| Primary scope | Agent runtime plus ops on Azure | High-performance inference and model tooling | Platform-native agents for CRM and operations |
| Deployment surface | Azure-managed service with VNet, Private Link, managed identity | Containerized microservices on any Kubernetes or cloud, on premises friendly | Inside Salesforce with Data Cloud and Flow |
| Tooling model | MCP tool registry, managed connectors, Fabric data access | Microservices and SDKs, NeMo Guardrails, retrieval and safety components | Native Salesforce tools, Actions, and Flow steps |
| Memory strategy | BYO thread storage into your tenant’s storage or Fabric | Application-defined storage next to services | CRM object memory and Data Cloud context |
| Observability | Run-level telemetry into Azure Monitor, Kusto queries, alerts | Metrics from NIM services, Grafana and Prometheus ready | Salesforce monitoring and audit logs |
| Governance | Azure Policy, role-based access control, network isolation, data residency | Governance you construct around clusters and services | Salesforce trust controls, Data Cloud governance |
| Model flexibility | Broad marketplace, Azure-hosted small and large models | Optimized serving for many open and proprietary models | Salesforce-curated models and connectors |
| Best for | Enterprises standardized on Azure looking for governed agent ops | Engineering teams needing performance and portability across GPU stacks | Teams who live in Salesforce and want agents in the flow of CRM work |

None is universally better. The right choice depends on where your data sits, how you deploy software, and who must sign off on the risk review.

A reference architecture that survives risk review

Most rejections from security teams come from four questions: Where is memory stored, who can read it, how is it deleted, and how do we prove what happened? The GA release answers those questions with patterns you can adopt immediately.

- Memory as a first-class system: Create a dedicated thread store in your subscription. Use customer-managed keys. Define retention in days, not forever. Add a process to purge memory tied to subject requests. Treat the store like you treat databases that hold user data.

- Observability with run context: Forward run logs to a Log Analytics workspace. Tag runs with tenant, environment, and use case. Build a workbook that shows tool success rate, average cost per task, P95 latency by tool, and refusal rates. Alert on sustained drops or spikes.

- Network and identity by default: Put the service behind Private Link. Bind it to a managed identity with the minimum set of roles to read data, call APIs, and write logs. Lock down public network access.

- Data access via Fabric: Land outputs and citations in a Fabric Lakehouse. Use lake-first patterns for downstream analysis. If the agent generates structured outputs, store validation results next to the payload.

- Tool governance with MCP: Register tools centrally. For each tool, define required scopes, input schema, output schema, and logging behavior. Create an approval workflow for new tools with automated schema linting and security checks.

- Deep Research with explicit guardrails: Set limits on crawl depth, time spent, maximum tool calls per run, and domains allowed. Add a post-processing step that validates citations and flags broken links or ungrounded claims.

These are not nice-to-haves. They turn a clever agent into an auditable service.
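The memory pattern above comes down to two deletion paths: a time-based purge and a purge tied to a subject request. The sketch below shows both against an in-memory list. The `Thread` shape and both function names are hypothetical; a real thread store would apply the same rules through its own lifecycle policies or delete APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Thread:
    """Hypothetical thread record; a real store would hold messages and tool results too."""
    thread_id: str
    subject_id: str          # the person or account the thread belongs to
    last_activity: datetime


def purge_expired(threads, now, retention_days):
    """Return (kept, purged_ids) after dropping threads older than the retention window."""
    cutoff = now - timedelta(days=retention_days)
    kept = [t for t in threads if t.last_activity >= cutoff]
    purged = [t.thread_id for t in threads if t.last_activity < cutoff]
    return kept, purged


def purge_subject(threads, subject_id):
    """Return (kept, purged_ids) after removing every thread tied to one subject request."""
    kept = [t for t in threads if t.subject_id != subject_id]
    purged = [t.thread_id for t in threads if t.subject_id == subject_id]
    return kept, purged


# Made-up sample data for illustration only.
now = datetime(2025, 6, 30, tzinfo=timezone.utc)
store = [
    Thread("t1", "alice", now - timedelta(days=45)),
    Thread("t2", "bob", now - timedelta(days=3)),
    Thread("t3", "alice", now - timedelta(days=10)),
]
store, expired = purge_expired(store, now, retention_days=30)
store, erased = purge_subject(store, "alice")
```

Returning the purged identifiers gives you something to write to an audit log, which is what lets you prove the deletion happened.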

The 30-60-90 day blueprint to ship a secure, observable, multi-tool agent

You can get from a blank repo to production without heroics. The secret is to sequence the right problems.

Days 0-30: Prove value inside guardrails

Goal: a working vertical slice that a risk partner can review.

- Pick one painful process with measurable value. Good candidates: summarizing weekly risk reports, generating customer-ready briefs from cases, or drafting procurement responses.

- Define a single-thread memory plan. Create a thread store in your subscription. Turn on encryption with your keys. Define a 30-day retention and set up a deletion job.

- Choose a model with latency and cost targets. For research and drafting, start with a strong model and add a small language model for classification and validation. Capture token budgets per step.

- Wire up 3 to 5 tools via MCP. Examples: internal search, a knowledge base, a ticketing system, and a calendar service. Register each tool with input and output schemas. Deny wildcard scopes.

- Add Deep Research for one high-value query. Keep hop count and time-box conservative. Require citations and write them back to your Lakehouse.

- Instrument everything. Emit a run identifier, step-level logs, token counts, costs, and error types. Create a quick dashboard with tool success and P95 latency.

- Run a tabletop threat model. Identify sensitive data paths. Verify that memory does not leak to external tools. Add a refusal policy for forbidden data classes.

- Deliver a demo to risk and platform teams. Show where memory is stored, how it is purged, how identity is scoped, and how alerts fire on anomalies.

Exit criteria: One use case in a staging environment, with run telemetry, tool governance, and a documented memory plan.
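As one way to picture the "register each tool with schemas, deny wildcard scopes" step, here is a small registry sketch. The spec fields (`name`, `owner`, `scopes`, `input_schema`, `output_schema`) and the scope strings are assumptions made for illustration. They are not the MCP wire format or an Azure API; the point is only that registration can fail closed before a tool is ever callable.

```python
class ToolRegistrationError(ValueError):
    """Raised when a tool spec fails a registration check."""


REQUIRED_FIELDS = ("name", "owner", "scopes", "input_schema", "output_schema")


def validate_tool(spec):
    """Check a hypothetical tool spec: required fields present, no wildcard scopes."""
    for key in REQUIRED_FIELDS:
        if not spec.get(key):
            raise ToolRegistrationError(f"missing or empty field: {key}")
    for scope in spec["scopes"]:
        if "*" in scope:
            raise ToolRegistrationError(f"wildcard scope not allowed: {scope}")
    return spec


class ToolRegistry:
    """In-memory catalog of approved tools, keyed by name."""

    def __init__(self):
        self._tools = {}

    def register(self, spec):
        validate_tool(spec)
        if spec["name"] in self._tools:
            raise ToolRegistrationError(f"tool already registered: {spec['name']}")
        self._tools[spec["name"]] = spec

    def names(self):
        return sorted(self._tools)


registry = ToolRegistry()
registry.register({
    "name": "internal_search",
    "owner": "search-platform-team",            # illustrative owner
    "scopes": ["search.read"],                  # illustrative scope string
    "input_schema": {"query": "string"},
    "output_schema": {"results": "array"},
})
```

A spec with a scope such as `tickets.*` would raise `ToolRegistrationError` here, which is the behavior you want an approval workflow to enforce automatically.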

Days 31-60: Harden and scale to a pilot cohort

Goal: a pilot with real users and meaningful load.

- Build evaluation datasets. Capture 100 to 300 real prompts and expected outcomes. Label failure modes: hallucination, tool error, refusal, privacy risk, quality gap.

- Add guardrails and structured outputs. Use JSON schemas for tool calls and final responses. Validate outputs before they leave the service. Reject and retry when schemas fail.

- Introduce policy as code. Use Azure Policy to enforce network isolation, disable public endpoints, and require customer-managed keys for the thread store.

- Add canary and rollback. Roll out agent changes behind a feature flag. Keep one last known good snapshot. Build a one-click rollback.

- Monitor SLOs that matter. Track tool success rate, groundedness score for research answers, P90 and P99 latency by step, cost per task, and incident count per week. Alert on SLO violations.

- Expand the tool registry. Add a change log for each tool, owners, escalation contacts, and data classifications. Require security review for tools that touch sensitive systems.

- Pilot with 25 to 100 users. Train them to annotate bad answers in a structured form. Use the feedback to drive weekly updates to retrieval indices and prompt templates.

Exit criteria: Pilot in production with SLOs, rollback, policy baselines, and weekly evaluation reports.
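The "validate, reject, and retry" guardrail from this phase can be sketched as a small loop. This is an illustration under stated assumptions: `schema_errors` is a deliberately tiny stand-in for a real JSON Schema validator, and `generate` is a hypothetical callable standing in for the model call, which receives the previous validation errors as feedback.

```python
import json


def schema_errors(payload, schema):
    """Tiny stand-in for a JSON Schema validator: checks required keys and Python types."""
    errors = []
    for key, expected_type in schema.items():
        if key not in payload:
            errors.append(f"missing key: {key}")
        elif not isinstance(payload[key], expected_type):
            errors.append(f"wrong type for {key}")
    return errors


def generate_validated(generate, schema, max_attempts=3):
    """Call generate(feedback) until its JSON output passes the schema or attempts run out."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        raw = generate(feedback)
        try:
            payload = json.loads(raw)
        except json.JSONDecodeError as exc:
            feedback = [f"invalid JSON: {exc.msg}"]
            continue
        if not isinstance(payload, dict):
            feedback = ["top-level value must be an object"]
            continue
        feedback = schema_errors(payload, schema)
        if not feedback:
            return payload, attempt
    raise ValueError(f"output failed validation after {max_attempts} attempts: {feedback}")


# Hypothetical schema and canned responses that simulate a model improving on retry.
BRIEF_SCHEMA = {"title": str, "summary": str, "citations": list}
_responses = iter([
    "not json",
    '{"title": "Q3 risk brief", "summary": "Draft."}',
    '{"title": "Q3 risk brief", "summary": "Two open findings.", "citations": ["doc-17"]}',
])


def fake_model(feedback):
    return next(_responses)


brief, attempts = generate_validated(fake_model, BRIEF_SCHEMA)
```

Capping attempts matters as much as the validation itself: an uncapped retry loop turns a schema failure into a cost and latency incident.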

Days 61-90: Industrialize

Goal: make the agent boring in the best way.

- Stand up a central agent platform team. Assign clear ownership for runtime, tools, memory, and observability. Define a pager rotation and an escalation tree.

- Create a gold path. Publish a template repo with recommended model selection, MCP tool boilerplate, memory interface, policy checks, and integration tests. Require new teams to start here.

- Scale memory and storage. Partition the thread store by tenant or business unit. Add soft deletes and immutable logs for audit trails. Document retention by data class.

- Optimize cost. Cache intermediate results in the thread store. Route simple classification to a small model. Add a budget per run and cut off long chains.

- Automate compliance. Build a weekly report that lists runs, tools used, data touched, and policy exceptions. Pipe the report to your risk team.

- Design for failure. Run chaos drills: tool outage, stale credentials, network restriction changes. Verify the agent fails closed and produces a helpful fallback.

- Plan for multi-agent patterns. When you need handoffs, define a shared contract for messages, context objects, and control signals. Keep run identifiers consistent across agents for traceability.

Exit criteria: Production readiness with ownership, on-call, compliance reporting, cost controls, and a reusable path for new agents.
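For the "budget per run, cut off long chains" item, a per-run budget object is one simple approach. The sketch below is a hypothetical design, not a service feature: `RunBudget` tracks tool calls and spend, and `run_chain` stops as soon as the next step would exceed either limit. The same pattern applies to the Deep Research caps on steps and time.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when the next step would push a run past its budget."""


@dataclass
class RunBudget:
    max_tool_calls: int
    max_cost_usd: float
    tool_calls: int = 0
    cost_usd: float = 0.0

    def charge(self, cost_usd):
        """Record one tool call; raise instead if it would exceed either limit."""
        if self.tool_calls + 1 > self.max_tool_calls:
            raise BudgetExceeded("tool call limit reached")
        if self.cost_usd + cost_usd > self.max_cost_usd:
            raise BudgetExceeded("cost ceiling reached")
        self.tool_calls += 1
        self.cost_usd += cost_usd


def run_chain(step_costs, budget):
    """Charge steps in order; return (completed_steps, stop_reason)."""
    completed = 0
    for cost in step_costs:
        try:
            budget.charge(cost)
        except BudgetExceeded as exc:
            return completed, str(exc)
        completed += 1
    return completed, "finished"


# Made-up costs: four steps against a three-call limit, then a tight cost ceiling.
by_calls = run_chain([0.01, 0.01, 0.01, 0.01], RunBudget(max_tool_calls=3, max_cost_usd=1.0))
by_cost = run_chain([0.02, 0.02, 0.02], RunBudget(max_tool_calls=10, max_cost_usd=0.05))
```

Checking the budget before each step, not after, is the design choice that makes the ceiling hard: the run never spends past the limit and then reports it.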

Practical metrics and thresholds you can defend

Defining Service Level Objectives for agents is new territory, but you can borrow from software reliability.

- Tool success rate: Keep it above 98 percent during business hours. Treat retries as failures for tracking purposes.

- Latency by step: Set P95 under 1.5 seconds for simple tools and under 5 seconds for retrieval plus synthesis. Investigate any run with a step over 10 seconds.

- Groundedness for Deep Research: Require that final answers contain citations for claims that are not common knowledge. Flag and review any answer with fewer than two citations for nontrivial claims.

- Cost per task: Cap by use case. For an internal brief, aim for under a few cents per run at pilot scale. For high-value research, allow higher budgets but enforce a hard ceiling per run.

- Refusal accuracy: Agents should refuse unsafe requests and proceed on safe ones. Target over 95 percent correct refusals on your evaluation set.

- Incident rate: Fewer than 1 user-visible incident per week once you hit steady state.

These numbers are starting points. The important part is that you measure, alert, and adjust.
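Two of these thresholds are easy to get subtly wrong, so a short sketch may help. It assumes hypothetical record shapes (`ok`, `attempts`, `refused`, `should_refuse`). It counts any retried call as a failure, as suggested above, and it reads refusal accuracy as the share of evaluation cases where the agent refused exactly when it should have.

```python
def tool_success_rate(calls):
    """Share of calls that succeeded on the first attempt; retried calls count as failures."""
    first_try = sum(1 for c in calls if c["ok"] and c["attempts"] == 1)
    return first_try / len(calls)


def refusal_accuracy(cases):
    """Share of cases where the agent's refuse/proceed decision matched the label."""
    correct = sum(1 for c in cases if c["refused"] == c["should_refuse"])
    return correct / len(cases)


# Starting-point floors taken from the list above; tune them for your workload.
SLO_TARGETS = {"tool_success_rate": 0.98, "refusal_accuracy": 0.95}


def slo_breaches(measured, targets=SLO_TARGETS):
    """Return the names of metrics that fall below their floor, sorted for stable output."""
    return sorted(name for name, floor in targets.items() if measured[name] < floor)


# Made-up sample: 48 clean successes, 1 success after a retry, 1 outright failure.
calls = (
    [{"ok": True, "attempts": 1}] * 48
    + [{"ok": True, "attempts": 2}]
    + [{"ok": False, "attempts": 3}]
)
# Made-up evaluation set: 39 correct decisions and 1 missed refusal.
cases = (
    [{"refused": True, "should_refuse": True}] * 19
    + [{"refused": False, "should_refuse": False}] * 20
    + [{"refused": False, "should_refuse": True}]
)
measured = {
    "tool_success_rate": tool_success_rate(calls),
    "refusal_accuracy": refusal_accuracy(cases),
}
breaches = slo_breaches(measured)
```

In this sample the raw success rate would be 98 percent, but counting the retried call as a failure drops it to 96 percent and trips the alert, which is the stricter reading the threshold intends.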

How to choose between Azure, NVIDIA, and Salesforce

Use a decision tree rather than a feature checklist.

- Where does your data live and who owns the network? If your data and policies are anchored in Azure, the shortest path is the Azure Agent Service. If you split across data centers and clouds and care most about consistent GPU performance, NIM plus NeMo gives you portability. If your most important workflows live in Salesforce records and automations, Agentforce will feel native and reduce integration work.

- Who signs the risk review? If your security and compliance teams already manage Azure Policy, Key Vault, and Private Link, you get a head start with Azure. If they are comfortable with containerized stacks and on premises controls, NIM fits that mental model. If your governance center is Salesforce’s trust controls, Agentforce aligns with existing guardrails.

- How much do you need to customize the runtime? If you want to tune serving stacks and squeeze latency, NIM gives you knobs. If you want to build apps fast on a governed cloud and plug into Fabric, Azure is attractive. If your priority is agents that act inside CRM workflows without plumbing, Agentforce is best.

There is no wrong answer as long as you pick the path that matches your center of gravity.

Common traps and how to avoid them

- Unbounded memory: Without retention and deletion, thread stores become risk magnets. Set retention early and automate purges.

- Tool sprawl without owners: Every tool needs a steward. Track inputs, outputs, scopes, and break-glass contacts in a registry.

- Latency death by a thousand hops: Deep Research is powerful, but you must cap time and steps. Add budgets and fail fast.

- Overfitting to a single model: Keep your contracts model-agnostic. Use schemas and adapters so you can swap models without rewriting tools.

- Missing human fallbacks: When confidence or groundedness drops, route to a human reviewer. Capture the correction and turn it into training data.

The bottom line

The hardest part of enterprise agents was never creativity. It was control. With Azure AI Foundry’s Agent Service now generally available, you get the mundane levers that matter in production: a memory you own, metrics you can trust, tools you can govern, and a research loop that can justify its claims. That is what turns a viral demo into a dependable system. If you start with a narrow use case, instrument from day one, and treat tools as first-class citizens, you can reach a pilot in 60 days and a stable service in 90. The breakthrough is not a trick prompt. It is finally having an operations layer designed for agents.