Microsoft's Security Store makes AI agents the new SOC

Microsoft’s new Security Store shifts security from point tools to autonomous workflows. With build-your-own and partner agents spanning Defender, Sentinel, Entra, and Purview, the SOC becomes a policy-governed marketplace of AI operations.

Breaking: the SOC just got a store

Microsoft has turned a corner on enterprise security. With the debut of its Security Store, the company is not simply listing apps. It is packaging autonomous security workflows as products. The pitch is straightforward: security teams can buy or build agents that triage alerts, remediate vulnerabilities, optimize access, and help run investigations. The announcement landed on September 30, 2025, and the early coverage points to a bigger shift in how cybersecurity gets done. See the Security Store launch coverage.

This is not a cosmetic storefront. Partners such as Darktrace, Illumio, Netskope, Performanta, and Tanium appear on day one. Their offerings slot into Microsoft Defender, Sentinel, Entra, and Purview. The big idea is that customers can assemble an operating model by composing agents, not just licensing another console. If the classic security stack was a toolbox, the new stack is a roster of specialists you can hire for shifts.

From tools to autonomous workflows

The old procurement cycle was a maze. Buyers compared feature matrices, ran pilots, then spent months integrating and writing runbooks. The Security Store collapses those steps by packaging the runbook itself as an agent. A phishing triage agent, for example, does not only label messages. It reads mail flow signals, checks user reports, clusters similar incidents, drafts user notifications, and learns from analyst feedback. A vulnerability remediation agent does not just open tickets. It maps exposure to exploitability, coordinates with Intune for patch windows, and tracks remediation to closure.

This changes the buying question. Instead of asking whether a tool has a feature, buyers ask whether a workflow completes a job end to end. That is why the store matters. It treats outcomes as the product. The middle layer of scripts and glue code becomes the responsibility of the agent vendor. For the standards angle, see our take on the OSI language for enterprise agents.

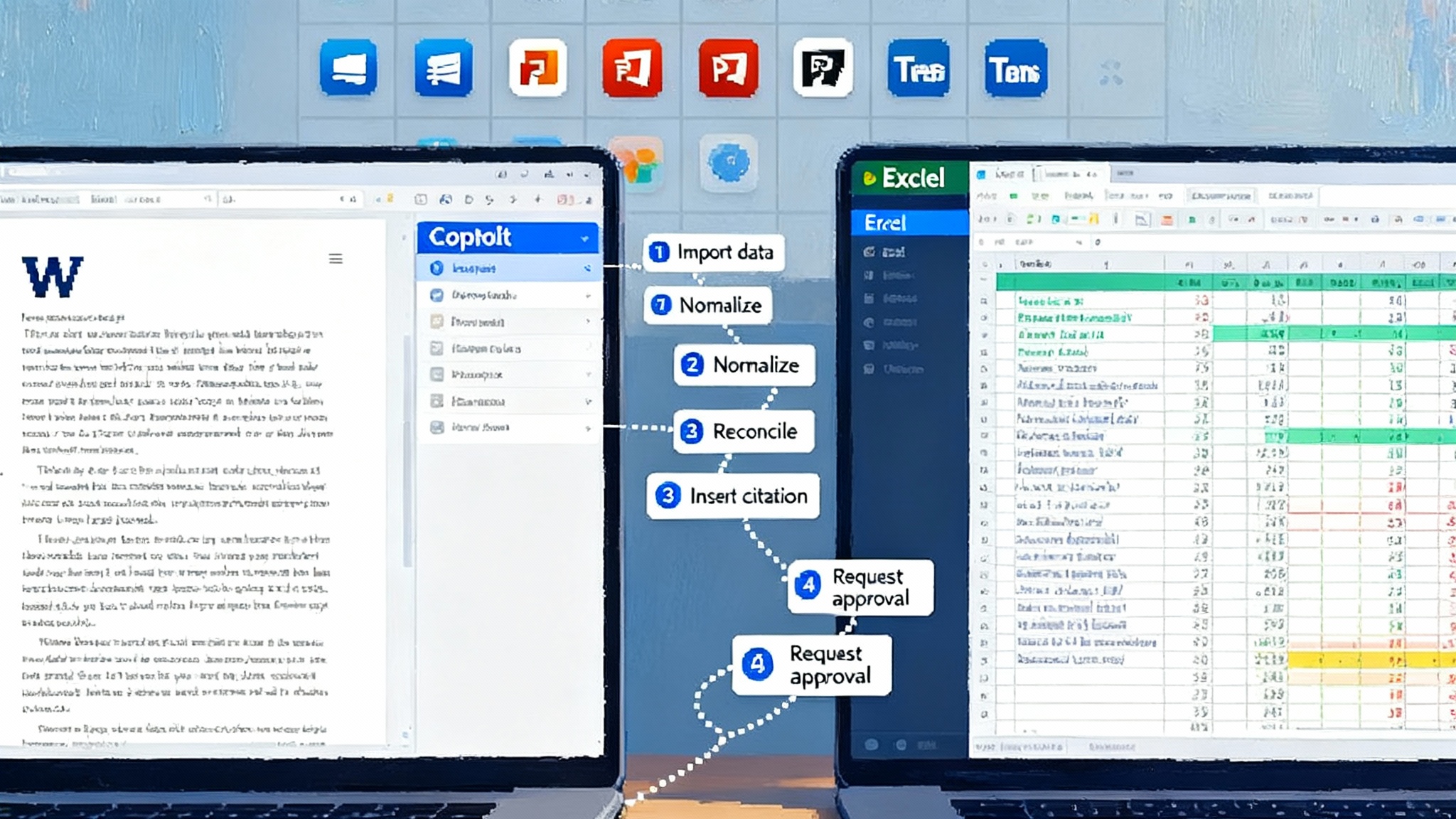

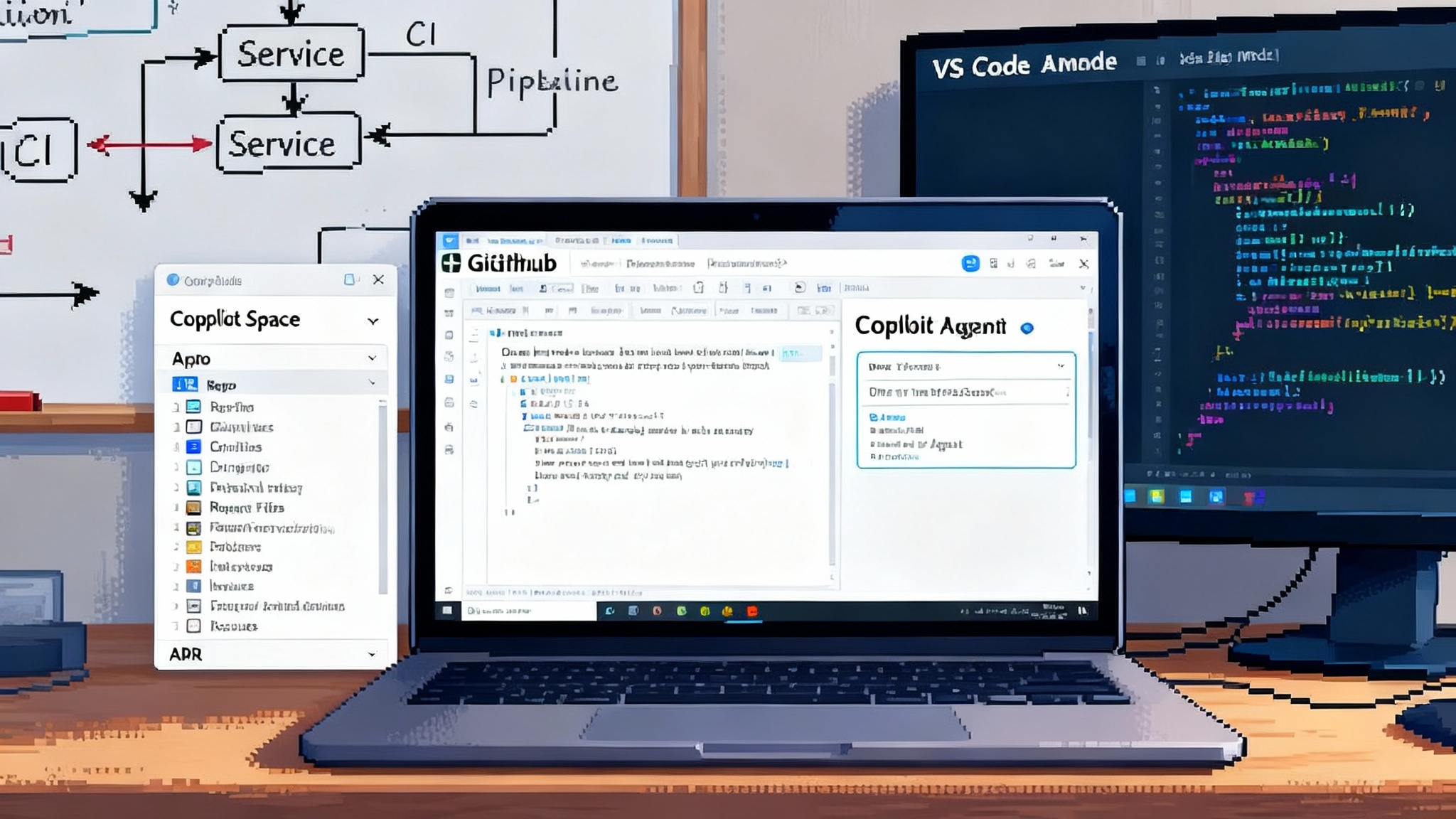

Build your own, then publish

Microsoft is also widening the do-it-yourself path. Security teams can author agents tailored to their environment. The pattern looks like this: describe the job in natural language, connect data sources, specify allowed actions, and publish to the store or to your private catalog. A small team can now stand up a data loss prevention triage agent for a specific business unit, a conditional access hygiene agent for newly onboarded applications, or an alert summarizer that speaks in the vocabulary of the incident response team. For implementation guidance, start with our Azure Agent Ops governance playbook.

The practical upside is speed. Organizations can create a minimum viable agent to solve a narrow problem in a week, learn from telemetry, and iterate. Instead of requesting headcount for another analyst shift, security leaders can put a specialized agent on the roster and let humans supervise the edge cases.

Agent identity becomes real identity

All of that autonomy raises a hard question: who is the agent in the eyes of your directory, your auditors, and your regulators? Microsoft’s answer is to treat agents as first-class identities. The company introduced Microsoft Entra Agent ID earlier this year, which assigns identities to agents and brings them under the same access provisioning, conditional access, and lifecycle policies as human accounts. Read the Entra Agent ID announcement.

Why this matters: identity is the new change control. If an agent can read user mailboxes, export logs, or disable a risky session, you need to know exactly which account holds those rights, how it was authenticated, and who approved them. Treating agents like service principals with auditable histories gives security and compliance teams the hooks they need. Purview extends that control plane to data, so you can apply sensitivity labels, track eDiscovery events, and audit what content a given agent touched.

Think of each agent as a contractor who receives a badge, a budget of actions, and a time-boxed engagement. The badge is the Entra identity, the budget is a scoped permission set and usage limits, and the engagement is a lifecycle workflow that prunes or rotates credentials when the contract ends.
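To make the contractor analogy concrete, here is a minimal sketch of a badge, budget, and engagement in one record. Everything in it is hypothetical: the `AgentEngagement` class, its field names, and the action names are illustrative and do not reflect any Entra API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class AgentEngagement:
    """Hypothetical record pairing an agent identity with a scoped, expiring grant."""
    agent_id: str                 # the "badge": an identity issued by the directory
    allowed_actions: frozenset    # the scoped permission set
    action_budget: int            # the usage limit for this engagement
    expires_at: datetime          # the end of the time-boxed engagement
    actions_used: int = 0

    def authorize(self, action: str, now: datetime) -> bool:
        """Return True and spend one unit of budget if the action is permitted."""
        if now >= self.expires_at:
            return False          # engagement over: credentials should be pruned
        if action not in self.allowed_actions:
            return False          # outside the scoped permission set
        if self.actions_used >= self.action_budget:
            return False          # budget exhausted
        self.actions_used += 1
        return True


# Illustrative values only.
start = datetime(2025, 10, 1, tzinfo=timezone.utc)
engagement = AgentEngagement(
    agent_id="phishing-triage-01",
    allowed_actions=frozenset({"read_alert", "quarantine_message"}),
    action_budget=2,
    expires_at=start + timedelta(hours=8),
)
```

Each call to `engagement.authorize(action, now)` checks expiry first, then scope, then budget, so an expired badge fails closed regardless of what it was once allowed to do.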

The multicloud model mix is here

The Security Store is not just about Microsoft’s own models. Enterprise buyers already expect agents to run on the best model for a task. That could be an OpenAI model in Azure for reasoning over long traces, a small in-house model for sensitive content inspection, or a vendor model hosted elsewhere for a niche detection. The modern agent needs to be model agnostic and boundary aware, selecting a model based on policy, data residency, and cost. For the broader stack view, see how the enterprise agent stack goes mainstream.

A practical example: a data loss prevention agent classifies documents with a tenant-hosted small model, then asks a larger remote model to generate a remediation plan only when the content is already redacted or synthesized. Another example: an incident summarization agent runs on a cost-efficient model most of the time, but automatically escalates to a higher accuracy model when confidence falls below a threshold.

This is how the multicloud reality shows up in the security operations center. The agent is the orchestration layer, and the security decision is to define which models are eligible for which data under which conditions.
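The eligibility decision can be sketched in a few lines. This is an illustration under stated assumptions, not a description of how any store agent routes requests: the `ModelOption` type, the model names, the costs, and the 0.8 threshold are all invented, and "most expensive" stands in for "higher accuracy".

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    in_tenant: bool        # True if the model runs inside the tenant boundary
    cost_per_call: float   # illustrative relative cost, not a real price


def eligible_models(models, data_is_sensitive):
    """Sensitive data may only go to in-tenant models; other data may go anywhere."""
    return [m for m in models if m.in_tenant or not data_is_sensitive]


def choose_model(models, data_is_sensitive, confidence=None, threshold=0.8):
    """Pick the cheapest eligible model, or the most expensive eligible one
    (a stand-in for "higher accuracy") when a prior result fell below threshold."""
    candidates = sorted(eligible_models(models, data_is_sensitive),
                        key=lambda m: m.cost_per_call)
    if not candidates:
        raise ValueError("no model is eligible for this data under policy")
    escalate = confidence is not None and confidence < threshold
    return candidates[-1] if escalate else candidates[0]


# Hypothetical catalog for illustration.
MODELS = [
    ModelOption("tenant-small", in_tenant=True, cost_per_call=1.0),
    ModelOption("remote-large", in_tenant=False, cost_per_call=10.0),
]
```

Note that escalation never overrides the boundary rule: a low-confidence result on sensitive data still stays on the in-tenant model, because eligibility is filtered before cost or confidence is considered.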

Governance moves from meetings to code

As agents grow in number, the governance overhead will crush teams unless it becomes policy as code. Expect the following patterns to become standard:

- Human-in-the-loop gates defined in policy. For actions such as disabling a risky session for an executive, policy can require a human approval step, log the approver, and impose a cooling-off period before the agent retries.

- Data boundary rules expressed as attachable policies. For example, allow this agent to read labels and metadata from Purview, but prohibit exporting raw content outside the tenant. Where access is granted, make it just-in-time and expire it after four hours.

- Identity hygiene checks enforced on every run. Before an agent touches anything, it must attest that its token was minted by Entra, that multifactor authentication guarantees are in place for its operator, and that no privileged role activation is in effect without justification.

When governance becomes code, change management becomes versioned, reviewable, and testable.
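As a rough illustration of what "reviewable and testable" could mean, the sketch below encodes two of the patterns above, the approval gate and the data boundary rule, as a plain function. The `ActionRequest` type, the action name `disable_session`, and the three verdict strings are assumptions made for this example, not part of any Microsoft policy language.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ActionRequest:
    agent_id: str
    action: str
    target_is_executive: bool = False
    exports_raw_content: bool = False
    approver: Optional[str] = None   # set once a human has approved the request


def evaluate(request: ActionRequest) -> str:
    """Return "allow", "deny", or "needs_approval" for a requested action."""
    # Data boundary rule: raw content never leaves the tenant.
    if request.exports_raw_content:
        return "deny"
    # Human-in-the-loop gate: high-impact actions need a named approver.
    if request.action == "disable_session" and request.target_is_executive:
        return "allow" if request.approver else "needs_approval"
    return "allow"
```

Because the policy is an ordinary function, a change to it can go through code review and ship with unit tests, for example asserting that `evaluate(ActionRequest("a1", "disable_session", target_is_executive=True))` returns `"needs_approval"` until an approver is recorded.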

Telemetry and attestation will decide trust

Security leaders will soon demand the equivalent of a flight recorder for every agent action. The right pattern looks like this:

- Immutable event streams. Every agent step emits signed events with a correlation identifier, so you can reconstruct what it knew and why it acted.

- Run attestations. Vendors publish cryptographic attestations that bind agent version, configuration, and model identities to a run. Your SIEM should be able to verify these at ingest.

- Outcome analytics. Beyond precision and recall, the store will need to show real reductions in mean time to detect, mean time to remediate, and change failure rates when agents propose fixes.

The implication is simple. Without standard telemetry schemas and verifiable attestations, regulated buyers will stall at proof of concept. The first marketplace that ships solid evidence and easy exports will win large, skeptical customers.
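One way to picture signed, correlated events is a hash-linked log in which each event's signature also covers the previous signature. The sketch below uses an HMAC with a shared key from the Python standard library purely to keep the example short; a real vendor attestation would more plausibly use asymmetric signatures, and the event fields shown here are invented rather than taken from any published schema.

```python
import hashlib
import hmac
import json


def sign_event(key: bytes, correlation_id: str, step: str, prev_sig: str = "") -> dict:
    """Build an event whose signature covers its content and the previous signature."""
    body = {"correlation_id": correlation_id, "step": step, "prev_sig": prev_sig}
    payload = json.dumps(body, sort_keys=True).encode()
    body["sig"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return body


def verify_chain(key: bytes, events: list) -> bool:
    """Check every signature and that each event links to the one before it."""
    prev_sig = ""
    for event in events:
        body = {k: v for k, v in event.items() if k != "sig"}
        payload = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, event.get("sig", "")):
            return False          # content was altered or signed with another key
        if event.get("prev_sig") != prev_sig:
            return False          # an event was dropped, reordered, or inserted
        prev_sig = event["sig"]
    return True


# Demo key and steps are placeholders.
KEY = b"demo-key-not-for-production"
first = sign_event(KEY, "run-42", "fetched alert")
second = sign_event(KEY, "run-42", "quarantined message", prev_sig=first["sig"])
```

A verifier at ingest would call `verify_chain(KEY, [first, second])` and reject the run if any step was edited or removed after the fact.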

Pricing will shift to usage credits

Licensing seats for an autonomous worker never made sense. Expect pricing to move decisively to usage credits and metered actions. If you budget for credits, you can let agents scale up during an incident and scale down when quiet. That aligns spend with risk.

The actions to take now:

- Define guardrails. Set per-agent and per-team monthly credit caps and auto-off triggers. For incident spikes, define a manual override with financial approval.

- Track value. Tie credit burn to outcome metrics. If the phishing agent consumes 10 percent of your credits but eliminates 80 percent of your false positives, that is a win. If not, start tuning prompts, model choices, and policies.

- Plan for chargeback. Finance teams will want to allocate agent costs to the business units that benefit. Build a tagging scheme on day one.
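The three actions above can be prototyped before any vendor billing data exists. The following is a minimal sketch with made-up names and numbers: `CreditLedger`, the agent name, and the cap of 100 credits are assumptions for illustration, and nothing here reflects actual Security Store pricing.

```python
from collections import defaultdict


class CreditLedger:
    """Hypothetical ledger that enforces per-agent monthly caps and tags spend."""

    def __init__(self, caps):
        self.caps = dict(caps)              # agent name -> monthly credit cap
        self.spent = defaultdict(float)     # agent name -> credits used
        self.by_tag = defaultdict(float)    # business-unit tag -> credits used
        self.override = set()               # agents with an approved override

    def charge(self, agent, credits, tag):
        """Record spend; refuse it if it would exceed the cap without an override."""
        over_cap = self.spent[agent] + credits > self.caps.get(agent, 0)
        if over_cap and agent not in self.override:
            return False                    # the auto-off trigger
        self.spent[agent] += credits
        self.by_tag[tag] += credits
        return True

    def cost_per_outcome(self, agent, outcomes):
        """Credits burned per outcome, e.g. per false positive eliminated."""
        return self.spent[agent] / outcomes if outcomes else float("inf")


# Illustrative cap only.
ledger = CreditLedger({"phishing-triage": 100})
```

Adding an agent to `ledger.override` models the manual override with financial approval, and `ledger.by_tag` gives finance the per-business-unit totals needed for chargeback.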

Interoperability will be the new lock-in battle

The Security Store will not exist in a vacuum. Customers already buy from cloud marketplaces and run models across providers. Two questions now matter:

- Can agents from one store run in another tenant without painful rework? This is not just packaging. It involves identity federation for agents, portable policy artifacts, and compatible telemetry.

- Can a vendor ship a single agent that meets store criteria across ecosystems? That implies common expectations for attestation, update channels, and vulnerability disclosure.

If these questions are not answered in the next year, buyers will see cross-cloud projects drag and will hesitate to standardize on agents.

What to watch next, and why it matters

- Policy as code for agents. The winners will expose policies as versioned artifacts, testable in preproduction, and enforceable at runtime. Look for native support to gate high-risk actions, expire permissions, and snap back configurations after a task completes.

- Telemetry and attestation standards. Expect a convergence around signed run manifests, event correlation identifiers, and open schemas that can land in your SIEM with minimal parsing. The vendor that makes these exports easy will become the default choice for regulated industries.

- Pricing via usage credits. Credits fit the bursty nature of incidents and red teaming. They also force vendors to optimize model calls and caching. If an agent burns too many credits, customers will switch.

- Interoperability across rival stores. A practical test is whether your incident response agent can run in a subsidiary that lives on another cloud without a rewrite. If not, the vendor should supply a compatible package and a policy translation tool.

A near-term ripple into healthcare and finance

Security is not the only domain about to be industrialized by agents. The pattern of identity, policy as code, telemetry, and credits translates cleanly to regulated industries.

- Healthcare. Imagine an incident response agent for protected health information that can only operate on de-identified content unless a clinician approves a break-glass event. Every step is logged with an attestation. Audit can replay the run, and policy can ensure the agent never leaves the hospital network boundary.

- Finance. Consider an insider risk agent that monitors sensitive deal rooms. It applies Purview labels, correlates with identity anomalies in Entra, and drafts a suspicious activity report for human review. Credits cap its run rate. Every export includes an attestation that spells out which model saw what data and under what policy.

The same machinery will show up in contact centers, insurance claims, supply chain, and fraud operations. The industry playbook will be to buy portable workflows, not just tools, then wrap them with governance you can prove.

A punch list for CISOs and SOC leaders

- Start an agent inventory. Create an agent bill of materials that includes identity, permissions, data sources, model options, and the owning team.

- Establish an approval board. Treat risky actions like changes. Require policy reviews, test plans, rollback steps, and attestation checks before agents gain production rights.

- Build a sandbox. Stand up a representative test environment with synthetic data and attack simulations. Make it part of the release process.

- Wire telemetry early. Insist on signed events, consistent correlation identifiers, and an export that lands cleanly in your SIEM.

- Pilot usage credits. Set caps, create dashboards, and run a quarterly optimization review focused on cost per outcome.

- Plan for multicloud. Decide which models are eligible for which data. Write the policy now so agents can choose later without a meeting.

The bottom line

The Security Store is more than a new entry point to Microsoft’s security portfolio. It is a forcing function that moves cybersecurity from tools to outcomes, and from meetings to code. Agents will be new coworkers that need badges, budgets, and supervision. The organizations that learn to hire, audit, and pay these digital specialists with discipline will close cases faster and sleep better. Those that treat agents as toys will find themselves cleaning up after them. The store puts both futures within reach. The choice comes down to policy, telemetry, and the courage to measure results.