GPT-5.1’s Adaptive Reasoning Resets the Agent Cost Curve

OpenAI released GPT-5.1 on November 12, 2025 with Instant and Thinking modes, a true no-reasoning setting, 24-hour prompt caching, and built-in apply_patch and shell tools. Here is how these features shift the cost and latency math for long-horizon agents and the 2026 products they unlock.

The news, and why it matters

On November 12, 2025, OpenAI pushed GPT-5.1 to developers and set a new baseline for practical agents. The release is not only a performance bump. It reshapes how models spend time and money on reasoning, how context gets reused, and how code and systems are edited by default. OpenAI shipped two usage styles (Instant and Thinking), a new no-reasoning mode, 24-hour prompt caching, and two first-party tools (apply_patch and shell). Together these features move the frontier between what is fast and cheap and what is slow and careful, and they do it without demanding new orchestration stacks. The shortest summary: GPT-5.1 gives you a dial for how much the model thinks and a cache for what it already knows, then hands you wrenches to change code and run commands in the same loop. See OpenAI’s formal description in the GPT-5.1 for developers announcement.

Adaptive reasoning, decoupled

Think of model reasoning like a car’s hybrid drive. On the highway you cruise on the efficient engine. In the city you tap the battery. GPT-5.1’s adaptive reasoning does this automatically. Easy tasks stay on the efficient path. Hard tasks recruit more deliberate thinking. Developers do not need to guess in advance whether a request will be trivial or gnarly.

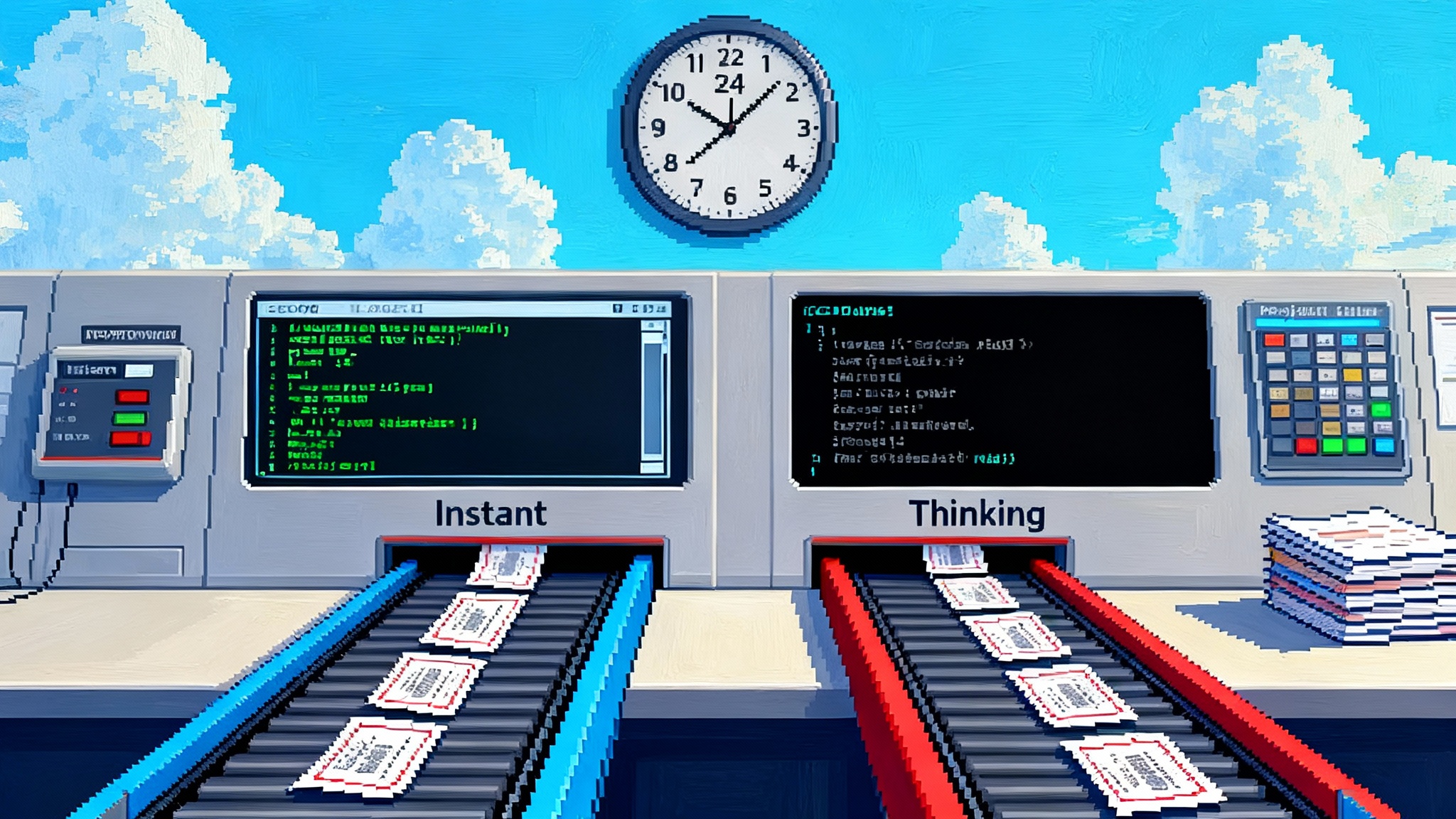

Two user-facing styles make that behavior legible:

- Instant: answers quickly and favors responsiveness. Use it as your highway mode for simple retrieval, formatting, or routine tool calls.

- Thinking: spends more time on knotty problems. It can plan, reflect, and check its own steps before answering.

There is also a lower gear: no reasoning. Instead of allocating an internal thought budget, the model responds directly. This is not dumbing the model down. It is preventing unnecessary inner monologue when a task is so simple that thinking overhead dominates latency and cost. OpenAI exposes this as one value of the “reasoning effort” control: none is the default, and higher levels are available when reliability trumps speed.

This matters because most real agents do not perform hard reasoning every second. They alternate between bursts of planning and long stretches of routine steps. A checkout bot that validates a shipping address does not need the same depth of thinking as the part that negotiates a shipping exception with a carrier. Being able to turn thinking on and off, or let the model autoswitch, reduces spend and wait time without reducing quality where it matters.
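The routing decision does not need to be clever to pay off. The Python sketch below keeps none as the default and escalates to medium only on a trigger, using the checkout example above. Everything here is a hypothetical stand-in for the signals your own agent has: the keyword list, the 0.7 threshold, and the upstream confidence score.

```python
import re

# Hypothetical trigger vocabulary; replace with the terms your policies use.
POLICY_TRIGGER = re.compile(
    r"\b(refund|chargeback|exception|override|dispute)\b", re.IGNORECASE
)


def choose_effort(message: str, confidence: float, threshold: float = 0.7) -> str:
    """Pick a reasoning effort for one agent step.

    'none' is the default. Escalate to 'medium' when a policy keyword appears
    or when an upstream confidence score (assumed to exist) is below threshold.
    """
    if POLICY_TRIGGER.search(message):
        return "medium"   # policy-sensitive step: think it through
    if confidence < threshold:
        return "medium"   # the previous step was unsure of itself
    return "none"         # routine step: answer directly


print(choose_effort("Please validate this shipping address", 0.95))        # none
print(choose_effort("Carrier refused delivery, need an exception", 0.95))  # medium
```

Because the gate is ordinary code, it can be unit-tested and logged like any other business rule.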

Caching that fits how agents actually work

Agents carry a lot of context: goals, environment, constraints, partial plans, and state. Re-sending this on every request is both slow and costly. With GPT-5.1, OpenAI extended prompt caching to a 24-hour retention window, and cached tokens are priced at a steep discount compared to uncached tokens. That aligns model economics with how real work happens: a procurement assistant running over a day, a coding session that stretches across commits, or a research thread that pauses overnight can all reuse the same backbone context without re-paying for it.

A simple mental model: treat the cache as a day-long session memory that you update sparingly. You pay full fare once to load the project brief, team guidelines, and key data schemas. After that, every follow-up reuses those tokens at a much lower cost while also cutting latency, because the model does not have to reprocess the same context. For multi-turn, plan-execute loops the savings grow with every turn, because the plan scaffolding persists.
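Here is a minimal sketch of that session memory. It assumes prefix-based caching, meaning cached tokens are only reused when the start of the prompt is identical. The request fields are modeled on OpenAI’s Responses API, but the names reasoning.effort and prompt_cache_retention, and the model string, are assumptions to verify against the current API reference. A real request would also pass structured messages rather than one concatenated string.

```python
from dataclasses import dataclass

# Effort values follow the article (none through high); the exact strings are
# an assumption to check against the current API reference.
EFFORT_LEVELS = ("none", "low", "medium", "high")


@dataclass(frozen=True)
class SessionContext:
    """Day-long background: loaded once, then reused from the cache."""
    brief: str
    guidelines: str
    schemas: str

    def prefix(self) -> str:
        # Fixed order and separators keep this text byte-identical across calls,
        # which is what prefix-based prompt caching needs to get a hit.
        return "\n\n".join((self.brief, self.guidelines, self.schemas))


def build_request(ctx: SessionContext, step: str, effort: str = "none") -> dict:
    """Stable cached prefix first, new per-step tokens last.

    Field names are assumptions modeled on OpenAI's Responses API.
    """
    if effort not in EFFORT_LEVELS:
        raise ValueError(f"unknown reasoning effort: {effort!r}")
    return {
        "model": "gpt-5.1",
        "input": ctx.prefix() + "\n\n" + step,
        "reasoning": {"effort": effort},
        "prompt_cache_retention": "24h",
    }


def common_prefix_len(a: str, b: str) -> int:
    """Number of leading characters two prompts share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


ctx = SessionContext("Brief: migrate billing to v2.", "Guidelines: small PRs.",
                     "Schemas: invoices(id, amount, status)")
first = build_request(ctx, "Step 1: list affected modules.")
later = build_request(ctx, "Step 7: reconcile the failing test.", effort="medium")
print(common_prefix_len(first["input"], later["input"]) >= len(ctx.prefix()))  # True
```

The design choice that matters is ordering. Anything that changes per step goes after the stable block, so every request begins with the same bytes.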

Built-in tools that close the loop

The new apply_patch tool lets the model propose diffs instead of prose. It emits structured patches for file creates, updates, and deletes. The shell tool lets the model propose terminal commands; your integration executes them and reports the output back to the model. Together they shorten the distance between a decision and an action and align with how the browser becomes the agent runtime in modern stacks.

Why that matters: a long-horizon agent is not a talker; it is a doer in small, reversible steps. With diffs and commands as first-class outputs, you can run a tight loop:

- Ask the model to plan a small change.

- Apply the patch in a sandbox repo.

- Run tests or linters with shell.

- Feed results back to the model.

- Repeat until the acceptance criteria pass.

This turns the model from a code suggestion engine into a system that can progress work safely with clear checkpoints. It also makes observability easier. You can log patches and commands as artifacts and audit the entire chain without parsing paragraphs of natural language. For governance and safety, see patterns from the agent trust stack.
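The plan, patch, check, and feed-back loop above can be sketched as a bounded driver. This is not OpenAI’s harness. The callables propose_patch, apply_in_sandbox, and run_checks are hypothetical, and you would wire them to the model, a sandbox repo, and the shell tool. max_cycles is an arbitrary cap that hands the task to a human when it runs out.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoopResult:
    status: str                                   # "accepted" or "escalated"
    cycles: int                                   # patch-check cycles used
    transcript: list[str] = field(default_factory=list)


def run_patch_loop(
    propose_patch: Callable[[str], str],          # hypothetical: feedback -> patch
    apply_in_sandbox: Callable[[str], None],      # hypothetical: sandbox repo
    run_checks: Callable[[], tuple[bool, str]],   # hypothetical: tests/linters via shell
    max_cycles: int = 5,
) -> LoopResult:
    """Plan, patch, check, and feed back until checks pass or the budget runs out."""
    feedback = "Initial request: make the smallest change that satisfies the spec."
    transcript: list[str] = []
    for cycle in range(1, max_cycles + 1):
        patch = propose_patch(feedback)   # ask the model for a small change
        apply_in_sandbox(patch)           # apply it somewhere disposable
        ok, output = run_checks()         # run tests or linters
        transcript.append(f"cycle {cycle}: {'pass' if ok else 'fail'}")
        if ok:
            return LoopResult("accepted", cycle, transcript)
        feedback = output                 # feed results back on the next turn
    return LoopResult("escalated", max_cycles, transcript)  # hand off to a human


# Toy wiring: checks pass once two patches have been applied.
applied: list[str] = []
result = run_patch_loop(
    propose_patch=lambda fb: f"patch #{len(applied) + 1}",
    apply_in_sandbox=applied.append,
    run_checks=lambda: (len(applied) >= 2, f"{len(applied)} patch(es) applied"),
)
print(result.status, result.cycles)  # accepted 2
```

The function returns a status instead of raising. That keeps escalation an ordinary outcome that dashboards can count.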

The cost and latency frontier just moved

Before GPT-5.1, you faced a rigid tradeoff: either run a fast model and bolt reasoning patterns on top, or run a heavy reasoning model for everything and swallow the cost. With Instant and Thinking modes plus the no-reasoning setting, you can shape a workload like a waveform: spikes of deep thought where it is valuable, flat stretches of quick work where it is not.

Pair that with 24-hour caching and your steady-state costs drop again. The model spends money on new information and new steps, not on rereading the same background. For long-running automation, that is the difference between something that demos well and something that carries a weekly ticket queue at a sane budget.

Here is a concrete customer support scenario that shows how the math shifts:

- Day 1 9:00 a.m.: Load a 10,000-token knowledge base summary and policy book into the cache. Pay full price once.

- All day: Each of 300 tickets reuses that context at cached rates, sending only per-ticket details and tool output at full price.

- Reasoning budget: Most tickets use no reasoning, but the 12 percent with policy exceptions escalate to medium reasoning automatically.

In this design, latency is driven by the minority of hard tickets while the majority stay snappy. Cost is driven by new facts, not background. The agent stops feeling like a luxury concierge and starts behaving like a dependable teammate.
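The scenario’s arithmetic is easy to check. The sketch below uses the article’s numbers: a 10,000-token context, 300 tickets, and 12 percent escalation. It adds placeholder values for everything the scenario does not specify, namely per-ticket delta, answer length, reasoning tokens per escalation, and per-token prices. It also assumes the cache entry survives the whole day and that reasoning tokens are billed as output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prices:
    """Illustrative USD prices per million tokens (placeholders, not a quote)."""
    input: float
    cached_input: float
    output: float


def day_cost(p: Prices, context_tokens: int, tickets: int, delta_tokens: int,
             output_tokens: int, escalation_rate: float, reasoning_tokens: int,
             cached: bool) -> float:
    """Estimated cost of one day of tickets that share a static context.

    Cached: the context is primed once at full price, then every ticket reads it
    at the cached rate (assumes the cache entry survives the whole day).
    Uncached: every ticket re-sends the context at full price.
    Reasoning tokens are billed as output and only accrue on escalated tickets.
    """
    if cached:
        context = context_tokens * p.input + tickets * context_tokens * p.cached_input
    else:
        context = tickets * context_tokens * p.input
    deltas = tickets * delta_tokens * p.input        # per-ticket details and tool output
    outputs = tickets * output_tokens * p.output     # visible answers
    escalated = round(tickets * escalation_rate)     # tickets that get medium reasoning
    reasoning = escalated * reasoning_tokens * p.output
    return (context + deltas + outputs + reasoning) / 1_000_000


prices = Prices(input=1.25, cached_input=0.125, output=10.0)   # placeholders
args = dict(context_tokens=10_000, tickets=300, delta_tokens=800,
            output_tokens=400, escalation_rate=0.12, reasoning_tokens=2_000)
print(f"cached:   ${day_cost(prices, cached=True, **args):.4f}")
print(f"uncached: ${day_cost(prices, cached=False, **args):.4f}")
```

With these placeholders the cached day comes to about $2.61, against $5.97 uncached. The entire difference is the background context that is no longer re-sent at full price.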

What this enables for 2026 products

- Maintenance engineers with a real autopilot: Roadmaps to retire tech debt depend on small, incremental edits. apply_patch gives you a reliable stream of diffs, and shell runs tests, formatters, and static checks. By spring 2026, expect serious adoption in developer tools that perform day-long refactors by stitching together many small, reversible steps, not single heroic jumps.

- Finance and procurement agents with line-item accountability: Caching keeps the chart of accounts, vendor terms, and approval matrix in short-term memory. No reasoning drives latency out of invoice triage. Medium reasoning kicks in only when a mismatch appears. All actions are logged as commands or document diffs.

- Research and editorial copilots that do not wander: Use Instant to skim and gather, Thinking to draft a structure and verify facts, then Instant again to format. The plan and source list sit in cache across the day so that rewrites do not incur a new cost wall.

- Sales engineering that survives hand-offs: The model keeps a cached backlog of constraints and customer context. New emails and call notes arrive as deltas. When the team sleeps, the agent finishes a safe set of tasks, leaving diffs, command logs, and a change summary that a human can accept in the morning.

- Test automation that writes itself: Give the model a spec and seed fixtures once. From there, it iterates with apply_patch and shell to generate tests, run them, review failures, and retry. The loop is bounded by a thinking budget and a command allowlist to guard safety.

Design patterns to adopt now

- Separate plan tokens from step tokens. Put plan scaffolding and constraints into the cache and refresh it only when governance changes. Let step-by-step execution be the only part that pays full freight.

- Gate thinking with triggers. For instance, only enable medium reasoning if the model’s confidence drops below a threshold or when a regex detects a policy keyword. Keep none as the default for speed.

- Use patch and command sandboxes. Apply patches in a temporary branch or disposable filesystem. Execute shell commands inside a jailed container with an allowlist and rate limits. Record artifacts so that every action is inspectable and reversible.

- Pre-compile affordances. Provide a catalog of capabilities such as running unit tests, formatting code, applying migrations, or calling a payment gateway. The more the agent expresses work as discrete tools rather than freeform language, the more predictable your costs and latency become.

- Budget by loop, not by task. Set a maximum number of patch-command cycles and a ceiling on cumulative tool output per incident. If the loop hits the ceiling, escalate to a human. This keeps long-horizon tasks from turning into long-tail burn. For cost levers at the model layer, note how open-weight reasoning takes over.
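The sandbox pattern from the list above can be prototyped without any infrastructure. The sketch below is an in-memory stand-in for a disposable filesystem. FileOp is a hypothetical, simplified operation type: it carries whole-file contents, not the diff format the apply_patch tool actually emits. Each batch applies atomically, the log is append-only, and undo restores the previous snapshot.

```python
from dataclasses import dataclass
from typing import Literal, Optional


@dataclass(frozen=True)
class FileOp:
    """Hypothetical simplified patch operation (whole-file contents, not a diff)."""
    kind: Literal["create", "update", "delete"]
    path: str
    content: Optional[str] = None


class Sandbox:
    """In-memory file tree with atomic batches, an audit log, and undo."""

    def __init__(self, files: dict[str, str]):
        self.files = dict(files)
        self.log: list[str] = []                   # append-only audit trail
        self._snapshots: list[dict[str, str]] = []  # previous states for undo

    def apply(self, ops: list[FileOp]) -> None:
        staged = dict(self.files)  # work on a copy so a bad op changes nothing
        for op in ops:
            exists = op.path in staged
            if op.kind == "create" and exists:
                raise ValueError(f"create: {op.path} already exists")
            if op.kind in ("update", "delete") and not exists:
                raise ValueError(f"{op.kind}: {op.path} does not exist")
            if op.kind == "delete":
                del staged[op.path]
            else:
                staged[op.path] = op.content or ""
        self._snapshots.append(self.files)  # commit only after every op succeeded
        self.files = staged
        self.log.extend(f"{op.kind} {op.path}" for op in ops)

    def undo(self) -> None:
        if not self._snapshots:
            raise RuntimeError("nothing to undo")
        self.files = self._snapshots.pop()
        self.log.append("undo")


sb = Sandbox({"a.py": "x = 1\n"})
sb.apply([FileOp("update", "a.py", "x = 2\n"), FileOp("create", "b.py", "y = 3\n")])
sb.undo()
print(sb.files, sb.log)
```

Keeping undo out of the log’s deletion path is deliberate. Reverting a change is itself an action worth auditing.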

How it stacks up to Google and Amazon

Google has been moving in a similar direction, exposing explicit controls on how much models think. In May, Google described thinking budgets for Gemini 2.5 that let developers cap or disable internal reasoning to balance speed and quality. That is philosophically close to GPT-5.1’s no-reasoning control and makes cross-vendor orchestration saner. See Google’s own write-up on Gemini 2.5 thinking budgets.

Gemini Enterprise is also leaning into governance and workplace integration. Google is positioning it as the front door for company data and tools, with early adopters like hospitals and retailers using it to ground agents in existing systems. Practically, Gemini’s advantage is the depth of native ties to Workspace and Vertex AI. If you live in Sheets, Docs, and BigQuery, Gemini Enterprise may minimize glue code for approvals, audit logging, and admin controls.

Amazon’s Nova Act, meanwhile, is focusing on agent development inside the integrated development environment. The emphasis is on natural language scripting, precise flows, and a tight test loop in a browser or device simulator. For teams building agents that manipulate complex web apps or consoles, Nova Act’s end-to-end workflow in the editor is appealing. If your operational world is already in AWS, the path to deploy these agents with per-service permissions and CloudTrail visibility can be more direct.

The upshot is that all three stacks are converging on the same triangle of controls: 1) knobs for thinking time, 2) strong integration surfaces for tools and data, and 3) clearer windows into what the model just did and why. GPT-5.1’s contribution is to make those controls first-class inside the model rather than only in the orchestration layer, while also bundling the most common code-and-system tools into the default kit.

Pitfalls to avoid

- Treating the cache as a trash bin. Do not dump entire data lakes into the cache. Curate summaries, schemas, and policies. Keep it small and stable, and rotate in a fresh snapshot when rules change.

- Overusing Thinking. Long inner monologues feel smart but can slow the system and raise bills. If a task has deterministic rules, encode them as tools or validators. Let the model call those fast instead of pondering.

- Running shell with broad permissions. The power of a command line comes with risk. Use a narrow allowlist, a locked filesystem, and visible dry runs before execution. Keep secrets out of the model’s view.

- Ignoring measurement. Track time-to-first-token, tokens per successful action, patches per acceptance, and human escalation rate. These are the north-star metrics for agent productivity.

A clear playbook for teams

- For CTOs: draw a two-tier agent budget. Baseline runs with no reasoning and cached context; a smaller premium pool funds medium or high reasoning on qualified triggers. Enforce the split in code and in dashboards.

- For product managers: scope features around loops, not monoliths. Frame user value as the agent completing one safe loop end to end. Add loops over releases. This reduces risk and exposes value earlier.

- For engineering leaders: standardize patches and commands as artifacts. Store them alongside pull requests and observability logs. Require human signoff for scope-changing patches.

- For security: build a command policy for shell and a diff policy for apply_patch. Simple rules such as no network access, no package installs, and no file deletes without a matching create nearby will catch most risks.

- For finance: model cost at the incident level. Include cache priming, average loop count, and exception rate. This makes budgets legible to non-technical stakeholders and keeps surprises low.
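A first cut at the security policies can be a pair of pure functions. Everything here is an illustrative assumption: the allowlist, the blocked programs, and the reading of "a matching create nearby" as "the same patch creates a file with the same name." String checks like these complement a jailed container with networking disabled; they do not replace it.

```python
import posixpath
import shlex

# Hypothetical policy tables; tune them to your own repository and runners.
ALLOWED_PROGRAMS = {"pytest", "ruff", "ls", "cat", "git"}
READ_ONLY_GIT = {"status", "diff", "log"}
NETWORK_PROGRAMS = {"curl", "wget", "ssh", "scp", "nc"}
INSTALL_PROGRAMS = {"pip", "npm", "apt", "apt-get", "brew"}
SHELL_OPERATORS = set(";&|`$<>")


def check_command(cmd: str) -> tuple[bool, str]:
    """Command policy for shell: one allowlisted program, no chaining or redirects."""
    if any(ch in SHELL_OPERATORS for ch in cmd):
        return False, "shell operators are not allowed"
    try:
        argv = shlex.split(cmd)
    except ValueError as exc:  # e.g. unbalanced quotes
        return False, f"unparseable command: {exc}"
    if not argv:
        return False, "empty command"
    prog = argv[0]
    if prog in NETWORK_PROGRAMS:
        return False, "no network"
    if prog in INSTALL_PROGRAMS:
        return False, "no package installs"
    if prog not in ALLOWED_PROGRAMS:
        return False, f"{prog} is not on the allowlist"
    if prog == "git" and (len(argv) < 2 or argv[1] not in READ_ONLY_GIT):
        return False, "only read-only git subcommands"
    return True, "ok"


def check_patch(ops: list[tuple[str, str]]) -> tuple[bool, str]:
    """Diff policy for apply_patch; ops are (kind, path) pairs."""
    created = {posixpath.basename(path) for kind, path in ops if kind == "create"}
    for kind, path in ops:
        if kind not in {"create", "update", "delete"}:
            return False, f"unknown operation {kind!r}"
        if kind == "delete" and posixpath.basename(path) not in created:
            return False, f"delete of {path} has no matching create"
    return True, "ok"


print(check_command("pytest -q tests/"))           # (True, 'ok')
print(check_command("curl https://example.com"))   # (False, 'no network')
print(check_patch([("delete", "src/old.py"), ("create", "lib/old.py")]))  # (True, 'ok')
```

Returning a reason alongside the verdict lets the agent see why a command was refused, and lets the refusal be logged as an artifact.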

The bigger meaning

For years, the gap between agent demos and agent production was not intelligence; it was economics and ergonomics. Models either thought too much about the easy parts or forgot everything between steps. GPT-5.1 cuts that waste with three simple ideas. Only think when needed. Do not reread what you already know. Act through diffs and commands you can audit. The rest is engineering discipline.

If you use these controls well, 2026 can be the year your agents stop being a sidecar and start handling whole flows. Start small, wire in the guardrails, and teach the system to spend time and tokens only where they buy real quality. The stack is finally giving you the knobs to do it.