Qwen3-Next and Max Flip the Cost Curve for Long-Context Agents

Alibaba’s late September release of Qwen3-Next and the trillion-parameter Qwen3-Max brings sparse activation, multi-token prediction, and 128K to 256K context windows that reduce latency and cost for tool-using agents running on commodity GPUs.

The September launch that bent the curve

Alibaba’s late-September drop of Qwen3-Next and Qwen3-Max did not just add two more model names to an already crowded shelf. It rewired how builders can think about cost, context, and capability. The bet is simple. If a model can read more, remember more, and plan across many documents while waking up only the parts of the network that matter, then agentic systems move from boutique experiments to everyday infrastructure. For teams formalizing governance and ops, our take on Azure Agent Ops goes GA pairs neatly with this shift.

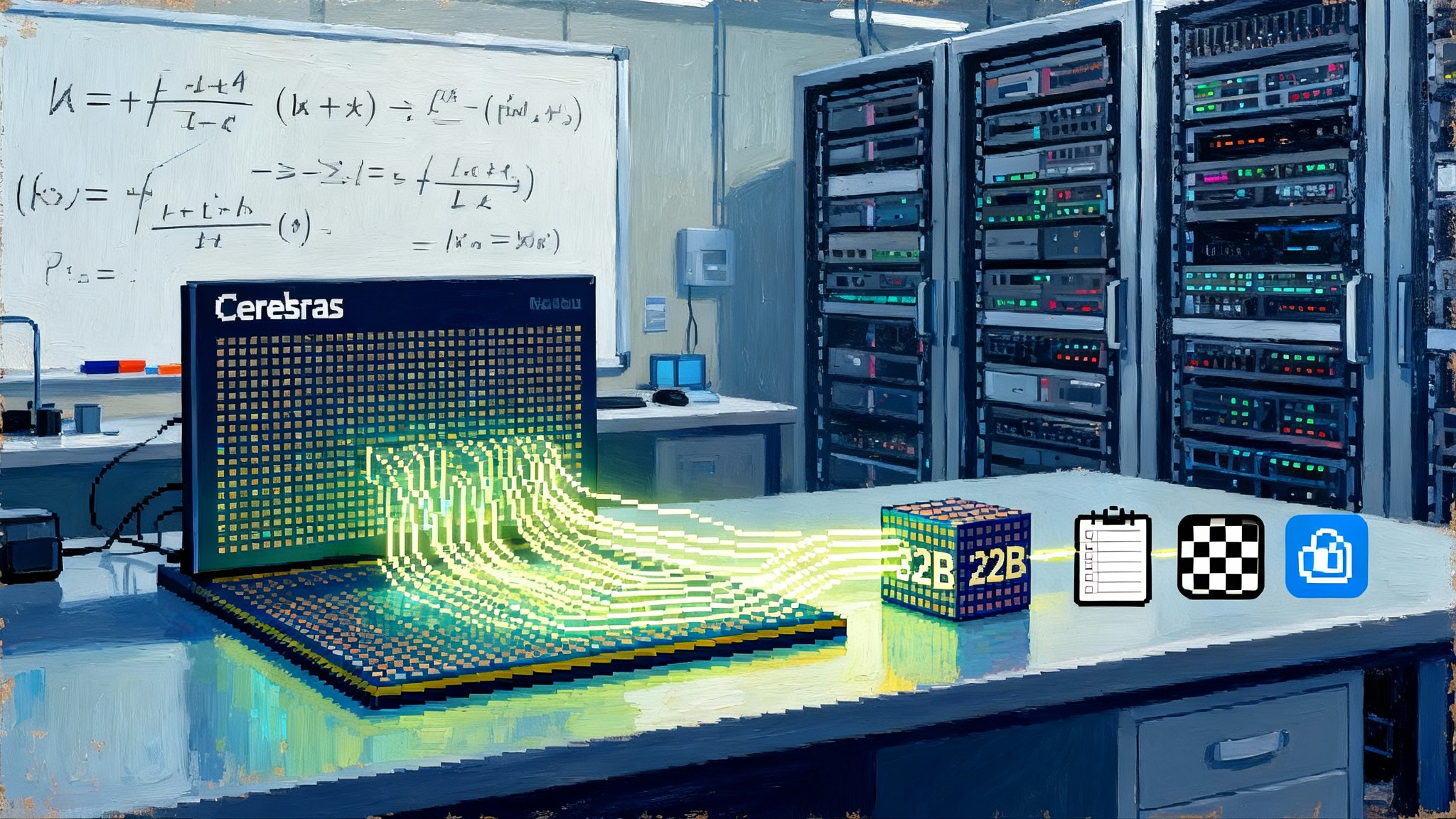

NVIDIA’s engineering write-up underscored why this matters for practitioners. It previewed Qwen3-Next’s hybrid mixture-of-experts design and showed how the model maps cleanly onto current accelerators and software stacks, with parallelism that actually pays off in wall-clock terms. See NVIDIA’s explanation of the hybrid experts and parallel processing in its post, NVIDIA on Qwen3-Next hybrid MoE.

This piece unpacks what is new, why it flips the cost and performance curve for agents, what it means for U.S. builders, and how to start building now without waiting for another hardware cycle.

What is actually different

Three ingredients make this release feel like a turning point rather than another leaderboard entry.

- Hybrid mixture of experts that behaves like a dense model when it must, and a sparse model when it can

- Multi-token prediction so the model can advance the generation frontier several steps at a time

- Context windows at 128K to 256K tokens and beyond, so agents keep working memory alive across long tasks

Each ingredient was known in isolation. The difference here is the integration and the engineering details that make the parts add up.

Sparse activation in plain language

Qwen3-Next uses sparse activation. The A3B suffix in its model name refers to roughly 3 billion parameters active per token, a small fraction of the total. Think of the model as a city of many small teams, each expert trained to handle certain patterns. At inference time you do not hire the entire city. A router quickly picks a few teams most likely to add value for the current token, and only those teams clock in. That is sparse activation.

Why it matters:

- Because only a subset of experts run per token, you get the representational power of a big model without paying the full compute bill every step

- As sequence length grows, keeping many experts idle most of the time reduces both latency and power draw

- Where a serving stack exposes routing controls, routing can be set conservatively for safety-critical steps and aggressively for routine steps, which suits agent loops

The hybrid part adds stability. Certain layers remain dense to preserve a reliable backbone, while expert layers provide bursts of specialization. This avoids the worst failure mode of pure mixture-of-experts models, where routing errors cascade.
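The routing step described above can be sketched in a few lines. This is a toy illustration of top-k expert selection, not Qwen3-Next's actual router: the expert functions, the router scores, and the choice of `k=2` are all assumptions made for the example, and a real router works on hidden vectors with learned weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_scores)),
                    key=lambda i: router_scores[i], reverse=True)
    top = ranked[:k]
    # Renormalize over the selected experts only.
    weights = softmax([router_scores[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    selected = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]


# Hypothetical experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
router_scores = [0.1, 2.0, -1.0, 1.0]  # made-up scores for one token

output, active = moe_layer(3.0, experts, router_scores, k=2)
print(active)  # indices of the experts that actually ran
```

Only two of the four expert functions are called for this token. The other two cost nothing, which is the saving the section describes.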

Multi-token prediction and why agents care

Multi-token prediction changes how the decoder works. Instead of predicting one token, committing it, and moving on, the model is trained to predict several upcoming tokens in one shot and to reuse intermediate computations. For agents this is not just about raw tokens per second. It shortens the time spent generating between tool calls.

Consider a coding agent that emits a function signature, a docstring, and a few lines of boilerplate. Those are predictable patterns. Multi-token prediction lets the model stamp them out quickly and reserve expensive attention for the unusual parts, like weaving together two obscure library calls. The net effect is that the agent spends less time waiting on the obvious and more time reasoning about the hard parts.
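A rough way to reason about the gain is to count decoding steps. The sketch below assumes each step commits a fixed average number of tokens. That average is a hypothetical input you would measure on your own prompts, not a published figure for Qwen3-Next.

```python
import math


def decoding_steps(output_tokens: int, accepted_per_step: float) -> int:
    """Sequential decoding steps needed when each step commits
    `accepted_per_step` tokens on average (1.0 means plain one-token decoding)."""
    if accepted_per_step < 1.0:
        raise ValueError("each step commits at least one token")
    return math.ceil(output_tokens / accepted_per_step)


# Hypothetical workload: a 600-token block of boilerplate code.
baseline = decoding_steps(600, 1.0)
with_mtp = decoding_steps(600, 2.5)  # assumed average, measure your own
print(baseline, with_mtp)
```

Predictable text such as boilerplate should raise the average tokens committed per step, while unusual passages pull it back toward one.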

Long contexts as durable working memory

Context windows at 128K to 256K tokens feel like moving from a notepad to a newsroom archive. With that much room, an agent can keep its scratchpad, the last few tool responses, the relevant policy pages, and several documents from the user all in the live window. This reduces the fragile dance of retrieve and summarize, then retrieve again, where each pass compounds errors.

Long context does not make retrieval unnecessary. It changes the cadence. You fetch fewer times, you chunk less aggressively, and you can keep intermediate plans in plain text rather than compressed bullet points that lose nuance. When you do retrieve, the model can scan richer passages and maintain cross references without losing track of earlier steps.

Why this flips cost and performance for tool-heavy agents

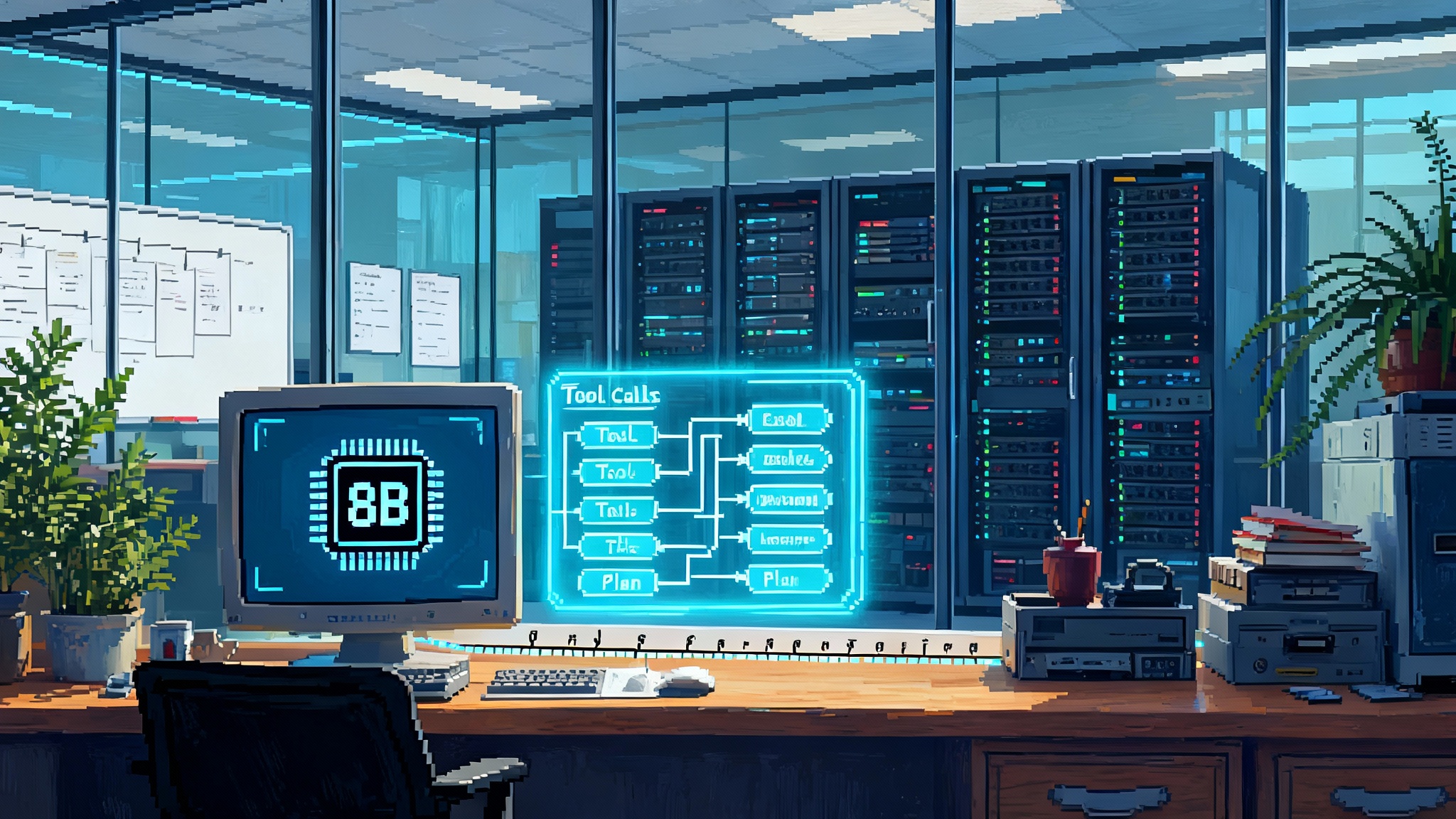

The core loop of a production agent looks like this: read a task, plan, call tools and functions, evaluate results, write, and repeat. The slow parts are tool round trips and planning over multiple artifacts. Qwen3-Next attacks both.

- Sparse activation lowers the per-token cost so you can afford to keep more context alive for longer

- Multi-token prediction reduces the number of decoding steps, which shortens how long you wait for the model between tool calls

- Hybrid experts plus dense layers keep quality stable across styles of work, which lowers the need for repeated attempts

When you combine these, the dollars per completed task come down, not just dollars per million tokens. That is the metric that matters to operators.
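The difference between the two metrics is easy to show with arithmetic. The sketch below assumes failed attempts are retried independently until one succeeds, so the expected number of attempts is one divided by the success rate. Every price and rate in it is a made-up illustration.

```python
def cost_per_completed_task(tokens_per_attempt: int,
                            price_per_million_tokens: float,
                            tool_cost_per_attempt: float,
                            success_rate: float) -> float:
    """Expected dollars per successfully completed task."""
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    model_cost = tokens_per_attempt / 1_000_000 * price_per_million_tokens
    cost_per_attempt = model_cost + tool_cost_per_attempt
    # Independent retries: expected attempts per success = 1 / success_rate.
    return cost_per_attempt / success_rate


# Hypothetical comparison: model B is cheaper per token but fails more often.
model_a = cost_per_completed_task(200_000, 0.50, 0.02, success_rate=0.8)
model_b = cost_per_completed_task(200_000, 0.30, 0.02, success_rate=0.4)
print(f"A: ${model_a:.3f} per task, B: ${model_b:.3f} per task")
```

With these invented numbers the model with the lower token price ends up more expensive per completed task, because it needs more attempts.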

What fits on commodity graphics cards now

Many teams do not have a rack of top-end accelerators. They have a few workstation cards or older data center cards. Sparse activation shrinks the compute done per token, but every expert's weights still have to be stored somewhere, so memory remains the constraint. With quantization and partial offload to host memory, Qwen3-Next can run useful contexts on a single modern consumer card, while a multi-GPU node can orchestrate agents that read entire document folders.
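A quick back-of-envelope check shows why quantization and offload come up together. The sketch assumes 80 billion total parameters, read from the Qwen3-Next-80B-A3B model name, and a hypothetical 24 GB card. It ignores quantization metadata, activations, and the key-value cache, so real footprints will be larger.

```python
def weight_footprint_gb(total_params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).
    Ignores quantization scales, activations, and the key-value cache."""
    return total_params_billion * bits_per_weight / 8


TOTAL_PARAMS_B = 80.0  # assumption taken from the model name
CARD_MEMORY_GB = 24.0  # hypothetical consumer card

for bits in (16, 8, 4):
    size = weight_footprint_gb(TOTAL_PARAMS_B, bits)
    if size <= CARD_MEMORY_GB:
        placement = "fits on one card"
    else:
        placement = "needs offload or more cards"
    print(f"{bits}-bit weights: {size:.0f} GB, {placement}")
```

Under these assumptions even 4-bit weights exceed a single 24 GB card, which is why partial offload or a second card is part of the picture.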

You can build the following on a handful of commodity graphics cards today:

- A customer support agent that reads a 70K-token knowledge base excerpt, the last five tickets, and current policy text, then chooses from eight tools to resolve issues without escalation

- A financial research agent that keeps a live plan and a running index of ten filings, switching between extraction and reasoning without flushing its memory every step

- A compliance assistant that watches a stream of meeting transcripts and drafts action registers while maintaining references into a 120K-token policy binder

Implications for U.S. builders

Two access paths stand out: NVIDIA NIM and open weights.

The NVIDIA NIM path

NVIDIA Inference Microservices, often shortened to NVIDIA NIM, provides containerized endpoints with optimized runtimes, scheduling, and observability. If you want a quick integration path, standing up Qwen3-Next through NIM gets you:

- A consistent network surface with health checks and scaling already wired

- Kernels and attention implementations that are tuned for your drivers and cards

- A clean way to mix multiple model endpoints behind one gateway

This matters for U.S. shops that need predictable latencies and supportable deployment footprints. You can route traffic to a NIM cluster for production while keeping a separate open weights cluster for experimentation.

The open weights path

Open weights let you tailor the model to your domain and your privacy posture. You can fine-tune on your proprietary data, control logging, and audit the entire inference path. For many regulated teams this is the only viable choice. For patterns that favor open stacks, see how we frame Cognitive Kernel-Pro for open agents.

A practical hybrid approach is common. Use a high-capacity service for the rare hard questions and an open-weights Qwen3-Next for the routine ones. Route by difficulty and sensitivity. Keep a log of triggers so you can retrain your smaller model to handle more of tomorrow’s traffic.

For documentation, model cards, and releases, Alibaba’s code presence is a good hub, such as the QwenLM GitHub repository. It is where you will find example tokenizers, quantization recipes, and serving hints that match the latest releases.

Risks and constraints

- Licensing: Read the license and usage clauses closely. Some weights ship with research terms or with commercial carve-outs that require attribution or limit certain uses. If you plan to resell, get a human lawyer to review your stack

- Geopolitics: Supply chains and export controls can change the availability or terms of software and models with Chinese origins. Have a substitution plan for critical components and be ready to pin versions

- Infrastructure: Long contexts produce heavy key-value caches. Measure memory headroom at the start of your project. Decide in advance where to trade context length for batch size
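The trade between context length and batch size in the infrastructure point can be estimated before any hardware is bought. The sketch uses the standard sizing formula for attention layers that cache keys and values. The layer count, head count, head dimension, and memory budget are hypothetical placeholders, not Qwen3-Next's published configuration, so substitute the values from the model's config file.

```python
def kv_cache_bytes(seq_len, batch_size, kv_layers, kv_heads, head_dim,
                   bytes_per_value=2):
    """Key-value cache size for layers that store full keys and values.
    The leading 2 accounts for one key tensor plus one value tensor."""
    return (2 * seq_len * batch_size * kv_layers * kv_heads * head_dim
            * bytes_per_value)


def max_batch_size(memory_budget_bytes, seq_len, **shape):
    """Largest batch whose cache fits in the budget at this context length."""
    per_sequence = kv_cache_bytes(seq_len, 1, **shape)
    return memory_budget_bytes // per_sequence


GIB = 1024 ** 3
# Hypothetical shape: 32 caching layers, 8 KV heads of dimension 128, fp16.
shape = dict(kv_layers=32, kv_heads=8, head_dim=128, bytes_per_value=2)

per_sequence = kv_cache_bytes(128 * 1024, 1, **shape)
print(per_sequence / GIB)                             # GiB for one 128K sequence
print(max_batch_size(48 * GIB, 128 * 1024, **shape))  # batch at 128K context
print(max_batch_size(48 * GIB, 64 * 1024, **shape))   # batch at 64K context
```

Halving the context doubles the batch that fits in the same budget.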

How the parts enable durable memory and multi document planning

Durable memory is a fancy phrase for not losing your place. With 128K to 256K tokens available, an agent can keep the following in memory for the entire task rather than juggling it in and out:

- The original user brief, uncompressed

- The current multi-step plan, with rationale and alternatives

- The latest tool results, pasted in verbatim so chain of custody is clear

- The key passages from multiple documents, with inline citations that survive copy and paste

Tool-heavy workflows benefit because fewer external requests are wasted. A calendar tool call from step two still sits in the context at step nine, next to the email draft that the agent is shaping. The model does not need to re-summarize every time it switches tools, which cuts both cost and error.

Multi-document planning becomes straightforward. The agent can carry a map of where facts live. Instead of writing a brittle one-line summary for each source, it keeps a structured outline with quotes and page references, then weaves content from that outline. If a claim is challenged, the evidence is still present.
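One possible shape for such an outline is sketched below. The class names, fields, and sample entries are hypothetical, chosen only to show claims carrying their quotes and page references with them.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    source: str  # document name
    page: int    # page the quote was taken from
    quote: str   # verbatim passage


@dataclass
class OutlineEntry:
    claim: str
    evidence: list = field(default_factory=list)


def render_outline(entries):
    """Render the outline as plain text that can sit in the context window."""
    lines = []
    for number, entry in enumerate(entries, start=1):
        lines.append(f"{number}. {entry.claim}")
        for ev in entry.evidence:
            lines.append(f'   - "{ev.quote}" ({ev.source}, p. {ev.page})')
    return "\n".join(lines)


def unsupported_claims(entries):
    """Claims with no evidence attached, worth checking before drafting."""
    return [entry.claim for entry in entries if not entry.evidence]


# Placeholder content for illustration only.
outline = [
    OutlineEntry("Revenue grew year over year",
                 [Evidence("filing_a.pdf", 12,
                           "revenue increased compared with the prior year")]),
    OutlineEntry("Margins narrowed"),
]
print(render_outline(outline))
print(unsupported_claims(outline))
```

Because the rendered outline is plain text, it can stay in the prompt across steps, and the unsupported-claims check gives the agent a cheap signal about where to read next.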

A pragmatic build guide

You can stand up a serious agent with Qwen3-Next or Qwen3-Max in a single sprint if you control scope. Here is a recipe.

- Choose your path

  - If you need the fastest way to production with observability and on-card tuning, start with NIM-hosted Qwen3-Next

  - If you need full control or offline inference, pull the open weights and serve them in your cluster

- Pick a context target

  - For most agents, 64K to 128K tokens is the sweet spot. It keeps memory costs sane and still covers many documents

  - Only move to 256K and beyond when you have a measured failure that long context solves

- Set quantization and precision early

  - Decide on 4-bit or 8-bit weight formats up front. Test for your task. Tool calling often tolerates more quantization than creative writing

  - Prefer kernels that keep attention math in higher precision even if weights are quantized. It preserves recall on long contexts

- Build a simple memory layout

  - Reserve a fixed segment of your prompt for a durable scratchpad that persists across tool calls

  - Keep tool results in raw form with short headers so they are easy to scan and easy to trim if you run low on space

  - Append a running plan as the last section before the task instruction so the model always conditions on the plan

- Wire tools with a firm contract

  - Define schema-rich tool signatures. Use structured outputs such as JSON so the agent can validate before acting

  - Add retries and guardrails around tools that can mutate state, such as ticket updates or code pushes

- Log for learning, not for storage

  - Sample full traces with inputs, tool responses, and model outputs. Use them to train small rewrites. Avoid keeping everything. Logs grow fast at long contexts

- Evaluate on agent outcomes

  - Measure tasks completed per dollar and per kilowatt-hour. Inspect failure trees. If the agent fails, is it planning, retrieval, or tool drift?

- Tune the router and the plan

  - If your serving stack exposes expert routing controls, experiment with more conservative routing for safety-sensitive steps and more aggressive routing for templated steps

  - Adjust the agent plan template until it stabilizes. Stability shows up as fewer plan edits mid-run

- Start with a small tools palette

  - Each additional tool increases branching and failure modes. Begin with three. Add more once you have telemetry and tests

- Budget for the key-value cache

  - Long contexts are memory heavy. Size your cards for the worst case. Consider cache offload to host memory if your serving stack supports it, but measure the latency tax first
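The memory layout step in the recipe above can be sketched as a small prompt builder. The section headers, the whitespace-based token counter, and the function names are hypothetical. In practice you would count tokens with the model's own tokenizer.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: counts whitespace-separated words."""
    return len(text.split())


def build_prompt(scratchpad, tool_results, plan, task, budget):
    """Assemble the prompt: scratchpad, raw tool results, plan, then task.

    tool_results is a list of (header, body) pairs, oldest first. When space
    runs low, the oldest results are dropped first.
    """
    scratch_block = f"## SCRATCHPAD\n{scratchpad}"
    plan_block = f"## PLAN\n{plan}"
    task_block = f"## TASK\n{task}"
    remaining = budget - sum(count_tokens(block) for block in
                             (scratch_block, plan_block, task_block))

    kept = []
    for header, body in reversed(tool_results):  # walk newest to oldest
        block = f"## TOOL RESULT: {header}\n{body}"
        cost = count_tokens(block)
        if cost > remaining:
            break  # everything older is dropped as well
        kept.append(block)
        remaining -= cost
    kept.reverse()  # restore chronological order

    # The plan sits last before the task instruction.
    return "\n\n".join([scratch_block, *kept, plan_block, task_block])


prompt = build_prompt(
    scratchpad="notes",
    tool_results=[("calendar", "a b c"), ("email", "d e f")],
    plan="step one",
    task="reply",
    budget=20,
)
print(prompt)
```

With the deliberately tiny budget in the example, only the newest tool result survives, and the plan still lands directly before the task instruction.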

Early benchmarks and operational metrics to watch

Raw leaderboard scores tell only part of the story. For agent systems the following signals are more revealing.

- Tokens per second at real prompts: Measure throughput on your actual task prompts, not on lorem ipsum. Multi-token prediction will show bigger gains on structured outputs than on poetry

- Effective experts per token: If your stack exposes it, track how many experts are active on average. The right range balances quality and cost

- Tool call round-trip time: End-to-end time from an agent deciding to call a tool to getting the result back into the model. Multi-token prediction shortens the time spent generating the call itself, which should show up here

- Retrieval precision at long context: With 100K tokens in play, check whether the model quotes the right passages. Use a synthetic needle in a haystack plus real-world documents

- Multi-document reasoning tasks: Watch performance on suites that require planning across many sources. Flaky reasoning at 4K tokens becomes obvious at 128K tokens

- Agent stability: Count mid-run plan rewrites and backtracks. Stable plans correlate with lower cost to completion

- Cost to completion: Dollars per resolved ticket or per validated analysis. This is the number to report to your leadership team

For a gut check, run a weekly regression on a small harness of tasks that match your business. Add one tough long-context case, one heavy tool case, and one case that blends both. Plot tokens per second, tool round trips, and success rate together. You want tool round trips to go down and the other two to go up.
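A weekly check of this kind can be a few lines of code. The sketch treats higher tokens per second and success rate as better and fewer tool round trips as better. The metric names, the 5 percent tolerance, and the sample numbers are assumptions for illustration.

```python
# Direction in which each metric should move week over week.
EXPECTED_DIRECTION = {
    "tokens_per_second": "up",
    "tool_round_trips": "down",
    "success_rate": "up",
}


def find_regressions(previous, current, tolerance=0.05):
    """Return metrics that moved the wrong way by more than `tolerance`
    (relative change) between two weekly runs."""
    regressions = []
    for metric, direction in EXPECTED_DIRECTION.items():
        old, new = previous[metric], current[metric]
        if old == 0:
            continue  # relative change is undefined, skip this metric
        change = (new - old) / old
        if direction == "up" and change < -tolerance:
            regressions.append(metric)
        elif direction == "down" and change > tolerance:
            regressions.append(metric)
    return regressions


# Made-up numbers from two consecutive weekly runs.
last_week = {"tokens_per_second": 100.0, "tool_round_trips": 6.0, "success_rate": 0.80}
this_week = {"tokens_per_second": 104.0, "tool_round_trips": 7.0, "success_rate": 0.79}

flagged = find_regressions(last_week, this_week)
print(flagged)
```

Here only the round-trip count is flagged. The small dip in success rate stays inside the tolerance, which keeps the report from firing on noise.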

What about Qwen3-Max

Qwen3-Max is the capacity play. It is a trillion-parameter-class model meant for the hardest reasoning and most nuanced writing. On its own it may be too heavy for small teams to host. Paired with Qwen3-Next it makes more sense. Route rare, high-difficulty queries to Max. Keep the common cases on Next. The combination delivers the user experience of a cutting-edge assistant while keeping the unit economics in check.

In practice, you can start with a small gateway rule set. If the agent fails twice on Next or detects a rare domain tag, it escalates to Max. Log the escalations and periodically fine-tune Next on those cases to reduce future escalations.
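The gateway rule described above fits in a short function. The model labels, the tag set, and the log format are hypothetical, and a real gateway would also need authentication, timeouts, and persistence for the log.

```python
RARE_DOMAIN_TAGS = {"tax-law", "clinical"}  # hypothetical tags
MAX_FAILURES_ON_NEXT = 2

escalation_log = []  # in-memory stand-in for a real log store


def choose_model(failures_on_next, domain_tags):
    """Return (model_label, reason) for the next attempt at a task."""
    if failures_on_next >= MAX_FAILURES_ON_NEXT:
        return "qwen3-max", "failed_twice_on_next"
    rare = sorted(RARE_DOMAIN_TAGS & set(domain_tags))
    if rare:
        return "qwen3-max", f"rare_domain:{rare[0]}"
    return "qwen3-next", "default"


def route(task_id, failures_on_next, domain_tags):
    """Pick a model and record every escalation for later fine-tuning."""
    model, reason = choose_model(failures_on_next, domain_tags)
    if model == "qwen3-max":
        escalation_log.append({"task_id": task_id, "reason": reason})
    return model


print(route("t1", 0, ["billing"]))  # common case stays on Next
print(route("t2", 2, ["billing"]))  # two failures escalate to Max
print(route("t3", 0, ["clinical"]))  # rare domain tag escalates to Max
print(escalation_log)
```

The escalation log doubles as the fine-tuning set. Each entry records why Next was bypassed, so you can group cases by reason before training.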

The bigger picture for the U.S. stack

A few implications flow from the intersection of hybrid experts, multi-token prediction, and long context.

- Developer experience improves because you can use plain language plans instead of brittle prompt acrobatics. A shared interface like OSI as a common language for agents helps teams align plans, tools, and reviews

- Data governance improves because more of the evidence sits in the same context as the claim, which simplifies audits

- Hardware utilization improves because sparse activation lets you pay for capacity only when a token actually needs it

There are also strategic choices to make.

- Have a plan if licensing terms tighten or if versions change their usage clauses. Pin model revisions and keep a tested fallback

- Track geopolitics. If your procurement team needs to certify origins or if export rules change, know in advance which models you can switch to

- Budget for energy. Long-context work can be steady rather than spiky. Monitor watt-hours per token and watt-hours per task. Your finance team will thank you

The bottom line

Qwen3-Next and Qwen3-Max mark a new baseline for agent builders. You get the strength of a large network when it matters, the frugality of sparse activation most of the time, the speed of multi-token prediction, and a working memory that finally feels roomy. You can run serious agents on modest hardware, and you can escalate to the largest model only when the problem truly demands it. That is how the cost curve bends.

Start small. Pick one painful workflow. Give the agent enough context to think. Limit your tools. Measure outcomes, not vibes. Add capacity where your logs tell you it pays. In a few sprints you will have an assistant that reads widely, remembers what matters, and works at the pace of your team rather than the other way around.