Agent Memory Wars: The New Data Layer For Autonomy

Q4 2025 turns agent memory into the new battleground. Platforms now ship project and team memory, vector-native storage, and consent policies that lock in context. Here is the emerging stack, the tradeoffs, and a portable blueprint to ship.

Breaking: memory just became the moat

Until this year, “memory” sounded like a nice-to-have for agents. Store a few facts. Remember preferences. Speed up a workflow. In Q4 2025 the stakes changed. Major labs and clouds are rolling out project memory, team memory, vector-native storage, and enterprise policies that snap context to identity. Memory shifted from feature to platform control point. The teams that recognize it as a data layer will ship faster and avoid lock-in. The teams that treat it as a checkbox will pay for it twice.

This piece maps the emerging memory stack, quantifies the cost and latency tradeoffs, and gives you a vendor-portable blueprint you can stand up now. Think of it as a field guide for the quarter when memory became the new runtime.

From feature to data layer

Traditional applications had a clear divide between state you keep and state you compute. Agents blur that line. Every message, file, event, and decision becomes potential fuel for the next action. That fuel lives across scopes: the person, the project, the team, and the organization.

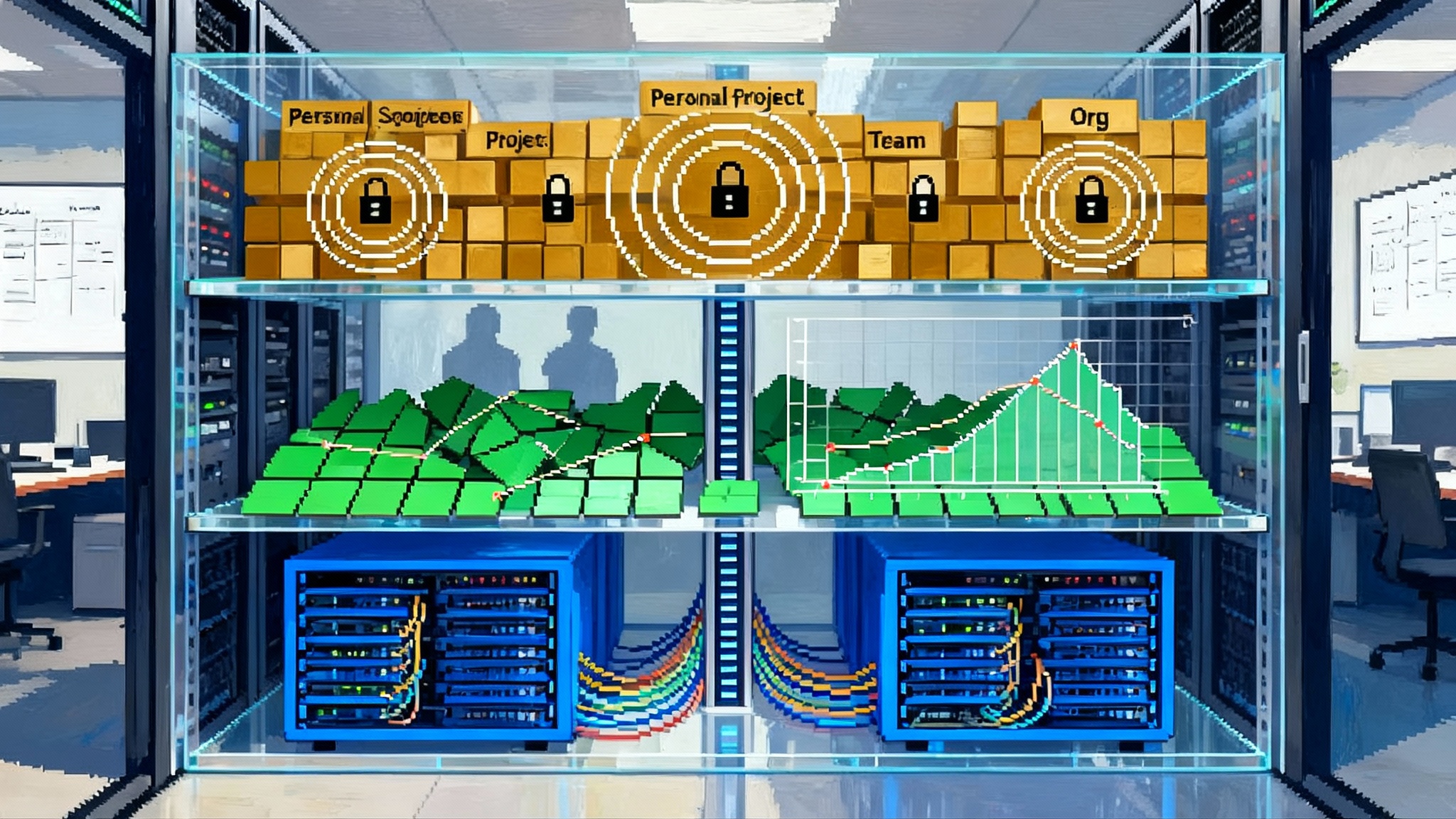

In practice, the new memory layer has four pillars:

- Scoped memory: Personal, session, project, team, and org scopes with explicit boundaries and sharing rules.

- Vector-native storage: Fast approximate search over embeddings tied to real objects and events. Many teams standardize on the open source pgvector extension for Postgres.

- Knowledge bases: Curated corpora, policies, and connectors that shape retrieval and keep answers grounded rather than speculative.

- Retention and consent controls: Time-boxed memory, human-in-the-loop approvals, provenance, and export paths that keep data portable.

Why this matters now: labs and clouds are turning these pillars into productized knobs that default to their ecosystem. If your agents rely directly on a vendor’s proprietary memory API, your data gravity just doubled. Your context and your behavior both get harder to move.

For a related lens on production readiness, see how the agent observability control plane raises the bar for measurement and safety.

The emerging memory stack

You can draw the new stack as seven layers, each with a clear job to do.

- Identity and scope registry

- What it does: Defines who the agent is acting for and which scopes are in play. Personal data lives in a different bucket than project data. Team memory travels only when policy allows.

- Practical tip: Treat scopes as first-class keys on every record. You will want to filter, export, and delete by scope without writing ad hoc migrations.

- Event and object capture

- What it does: Normalizes messages, files, tickets, logs, calendar entries, code diffs, and tool calls into a small set of object types. Attach provenance: source, timestamp, and signer.

- Practical tip: Capture before you embed. Keep the raw text or binary in object storage and record the pointer. Re-embedding becomes a batch job rather than a data-loss event.

- Vector object store

- What it does: Indexes embeddings for retrieval. Do not store vectors in a vacuum. Each vector should point to a real object and carry metadata like scope, sensitivity, and retention.

- Practical tip: Start with a relational store plus vectors for most workloads. Postgres with pgvector scales far enough for many teams and keeps joins simple. Managed vector services make sense when you need large collections with strict latency budgets.

- Knowledge base and graph overlays

- What it does: Curates what the agent should know and how facts relate. Adds synonyms, entity links, and policy tags. Connects to external systems of record so answers age gracefully.

- Practical tip: Keep your knowledge base writable by both humans and agents, but require review for cross-scope promotions. Promotion is where leaks happen.

- Retrieval and routing

- What it does: Turns a task and a user turn into targeted fetches. Blends short-term chat context with long-term memory, then routes to specialized tools as needed.

- Practical tip: Use query plans. Simple tasks can use a single dense vector search. Complex tasks benefit from a sequence: keyword filter, vector search, rerank, then scope-aware dedup.

- Policy and consent engine

- What it does: Enforces who can read or write which facts, for how long, and for what purpose. Marks certain scopes as non-trainable and requires opt-in to widen sharing.

- Practical tip: Keep policy decisions explainable and logged. If a memory fetch is blocked, record the rule that fired so developers and auditors can reproduce it.

For teams standardizing governance, the patterns in Azure Agent Ops for governance map cleanly onto this layer.
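As an illustration of the "log the rule that fired" tip, here is a minimal sketch of an explainable decision function. Everything in it is hypothetical: the `Request` and `Decision` shapes, the two sample rules, and the in-memory `AUDIT_LOG` are assumptions for illustration, and scope membership is deliberately simplified.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Request:
    action: str                 # "read", "write", "promote", or "export"
    subject: str                # user or service principal
    subject_scopes: frozenset   # scopes the subject may act in (simplified)
    record_scope: str           # scope of the record being touched
    purpose: str                # declared purpose, e.g. "triage" or "training"


@dataclass(frozen=True)
class Decision:
    allowed: bool
    rule: str                   # name of the rule that fired
    explanation: str            # human-readable reason, kept for audits


def deny_training_on_personal(req: Request) -> Optional[Decision]:
    # Hypothetical rule: personal scope is marked non-trainable.
    if req.purpose == "training" and req.record_scope == "personal":
        return Decision(False, "deny_training_on_personal",
                        "personal scope is non-trainable")
    return None


def allow_within_scope(req: Request) -> Optional[Decision]:
    # Hypothetical rule: subjects may act on records in scopes they hold.
    if req.record_scope in req.subject_scopes:
        return Decision(True, "allow_within_scope",
                        f"subject holds scope '{req.record_scope}'")
    return None


Rule = Callable[[Request], Optional[Decision]]
RULES: List[Rule] = [deny_training_on_personal, allow_within_scope]
AUDIT_LOG: List[Tuple[Request, Decision]] = []


def decide(req: Request, rules: Optional[List[Rule]] = None) -> Decision:
    """Return the first matching rule's decision; deny when nothing matches."""
    decision = Decision(False, "default_deny", "no rule matched")
    for rule in (RULES if rules is None else rules):
        result = rule(req)
        if result is not None:
            decision = result
            break
    AUDIT_LOG.append((req, decision))  # every decision is logged with its rule
    return decision
```

Because each rule is a small named function, a blocked fetch can be replayed from the log entry alone, which is the property auditors tend to ask for.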

- Summarization and compression

- What it does: Reduces long histories into durable nuggets. Turns meetings into action items. Collapses tickets into incident summaries. Produces context that fits in prompt windows without repeating the full history.

- Practical tip: Summaries are lossy on purpose. Keep links back to the original objects so the agent can drill down when needed. This is especially crucial for long-haul AI agents that run over extended sessions.
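The multi-step sequence suggested for the retrieval and routing layer (keyword filter, vector search, rerank, scope-aware dedup) can be sketched roughly as follows. This is a toy under stated assumptions: the `Chunk` type, the `SCOPE_PRIORITY` ordering, and the brute-force cosine scan are all hypothetical stand-ins, and a real system would use an approximate index rather than scanning every candidate.

```python
import math
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set


@dataclass
class Chunk:
    object_ref: str          # pointer to the source object
    scope: str               # personal, session, project, team, or org
    text: str
    embedding: List[float]


# Assumed ordering from narrowest to broadest scope (illustrative only).
SCOPE_PRIORITY = {"personal": 0, "session": 1, "project": 2, "team": 3, "org": 4}


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def run_plan(chunks: List[Chunk], query_vec: List[float], keyword: str,
             allowed_scopes: Set[str], k: int = 3,
             rerank: Optional[Callable[[Chunk], float]] = None) -> List[Chunk]:
    # 1. Cheap filter: allowed scopes plus a keyword match.
    candidates = [c for c in chunks
                  if c.scope in allowed_scopes and keyword.lower() in c.text.lower()]
    # 2. Vector search: exact cosine here, standing in for an ANN index.
    ranked = sorted(candidates, key=lambda c: cosine(query_vec, c.embedding),
                    reverse=True)[: k * 2]
    # 3. Optional rerank: any scoring function, e.g. a cross-encoder call.
    if rerank is not None:
        ranked = sorted(ranked, key=rerank, reverse=True)
    # 4. Scope-aware dedup: one hit per object, preferring the narrowest scope.
    best: Dict[str, Chunk] = {}
    for c in ranked:
        kept = best.get(c.object_ref)
        if kept is None or SCOPE_PRIORITY[c.scope] < SCOPE_PRIORITY[kept.scope]:
            best[c.object_ref] = c
    return list(best.values())[:k]
```

Simple tasks can skip steps 1 and 3 entirely; the point of writing the plan down is that each step can be measured and removed on its own.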

What changed in Q4 2025

- Project and team memory rolled out as first-class concepts. Scopes are no longer a custom table that your team hand-wires. They show up in the vendor’s model tokens, access controls, and analytics.

- Vector-native storage landed in default stacks. You can create a table with an embedding column and expect cloud-grade performance with approximate search and hybrid filters.

- Enterprise controls entered the baseline. Retention windows, export jobs, and consent prompts now come with the platform. They make governance easier and portability harder if you adopt them without an abstraction.

For product leaders this means you will be pushed to adopt a one-click memory service that feels magical in month one and is painful to unwind in month six. The safest move is to define your own memory interface, then implement adapters for vendor memory, not the other way around.

Cost and latency: the numbers that bite

Let’s make the tradeoffs concrete with simple, vendor-agnostic math. Substitute your provider’s prices.

Embeddings

- Unit: dollars per million tokens embedded.

- Planning assumption: 0.10 dollars per million tokens, which is enough for an order-of-magnitude estimate.

- Knobs that matter: chunk size, overlap, re-embedding cadence, and compression.

Vector storage

- Unit: dollars per million vectors per month plus compute for queries.

- Practical assumption: a mid-sized collection of 5 million vectors will incur low four figures per month across storage and query compute if self-hosted, and mid four figures if fully managed with strict latency targets.

- Knobs that matter: index type, dimensionality, and filter selectivity. Higher-dimensional vectors can improve recall, and extra replicas improve throughput and availability, but both raise cost.

Query latency

- Dense vector search with approximate nearest neighbor: 5 to 50 milliseconds at the index under moderate load; 50 to 200 milliseconds end-to-end with network and aggregation.

- Hybrid search that blends keyword and vectors: add 20 to 80 milliseconds depending on your reranker.

- Rerankers provide quality lift at the cost of another model call. Use them sparingly for long contexts or safety-critical tasks.

A quick sizing example

- Corpus: 200 thousand documents, 2,000 tokens average, chunked to 300 tokens with 30 percent overlap.

- Tokens to embed: about 520 million (400 million raw tokens, inflated by the 30 percent overlap).

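- Embedding cost estimate at 0.10 dollars per million tokens: about 52 dollars for a full ingest. Each quarterly re-embed costs the same again unless you first cut the tokens you embed, for example by deduplicating or summarizing. Lower dimensionality typically reduces storage and query cost, not embedding cost.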

- Storage: 2 million vectors at 1 kilobyte each is roughly 2 gigabytes plus index overhead. Budget 10 to 30 gigabytes effective after indexing and replicas.

- Queries: If each user runs one session a week with 6 queries and you have 50 thousand weekly active users, plan for 300 thousand queries per week. At 100 milliseconds per query end-to-end, memory adds about 0.6 seconds of latency per session, or roughly 30 thousand seconds (over 8 hours) of aggregate wait across all users each week. That is a real tax, so cache aggressively.
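For readers who want to substitute their own numbers, the embedding and storage arithmetic can be reproduced with a few lines. The sketch assumes an average of 2,000 tokens per document, which is the figure that yields the 520 million total, and treats overlap as a flat 30 percent inflation; the function name and its parameters are hypothetical.

```python
def size_corpus(docs, avg_tokens, chunk_tokens, overlap,
                price_per_million_tokens, bytes_per_vector):
    """Order-of-magnitude sizing for an embedding ingest."""
    raw_tokens = docs * avg_tokens
    # Flat inflation, matching the estimate in the text. A strict sliding
    # window with stride chunk_tokens * (1 - overlap) would inflate by
    # roughly 1 / (1 - overlap) instead, so treat this as a lower bound.
    embedded_tokens = raw_tokens * (1 + overlap)
    vectors = embedded_tokens / chunk_tokens
    return {
        "raw_tokens": raw_tokens,
        "embedded_tokens": embedded_tokens,
        "embedding_cost_usd": embedded_tokens / 1_000_000 * price_per_million_tokens,
        "vectors": vectors,
        "raw_vector_gb": vectors * bytes_per_vector / 1e9,
    }


# Inputs from the sizing example; 1,000 bytes approximates "1 kilobyte each".
estimate = size_corpus(
    docs=200_000,
    avg_tokens=2_000,
    chunk_tokens=300,
    overlap=0.30,
    price_per_million_tokens=0.10,
    bytes_per_vector=1_000,
)
```

With these inputs the vector count comes out near 1.7 million, which the example rounds up to 2 million before adding index overhead and replicas.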

Where teams overspend

- Over-chunking, which can inflate embedding volume by 2x or more for marginal recall gains.

- Re-embedding on every edit rather than batching daily.

- Running every fetch through a reranker when a cheaper filter would do.

A vendor-portable blueprint you can ship now

The goal: enjoy the convenience of vendor memory while keeping your data layer neutral. Build a thin portability shell with a stable schema and swappable adapters.

- Define the MemoryRecord

Create a single record type that all components understand:

- record_id: stable unique identifier

- owner_scope: personal, session, project, team, org

- subject: user or service principal

- object_type: message, file, event, task, code, tool_call

- object_ref: pointer to object storage or a database row

- text_fields: preprocessed text for embedding and display

- embedding: vector

- metadata: JSON for tags like sensitivity, product area, environment

- retention_policy: days_to_live, deletion_reason, legal_hold flag

- consent_state: collected, denied, pending, revoked

- provenance: source system, timestamp, signer, checksum

This schema forces every memory write to declare scope, retention, and provenance up front. You can now enforce policy without guessing.
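One way to express this record is a typed dataclass. The field names follow the list above; the nested `RetentionPolicy` and `Provenance` types, the `is_expired` helper, and the choice to let a legal hold block expiry are assumptions added for illustration, not part of any vendor schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, List, Literal, Optional

Scope = Literal["personal", "session", "project", "team", "org"]
ObjectType = Literal["message", "file", "event", "task", "code", "tool_call"]
ConsentState = Literal["collected", "denied", "pending", "revoked"]


@dataclass
class RetentionPolicy:
    days_to_live: int
    deletion_reason: Optional[str] = None
    legal_hold: bool = False


@dataclass
class Provenance:
    source_system: str
    timestamp: datetime
    signer: str
    checksum: str


@dataclass
class MemoryRecord:
    record_id: str                      # stable unique identifier
    owner_scope: Scope
    subject: str                        # user or service principal
    object_type: ObjectType
    object_ref: str                     # pointer to object storage or a row
    text_fields: Dict[str, str]         # preprocessed text for embedding/display
    retention_policy: RetentionPolicy
    consent_state: ConsentState
    provenance: Provenance
    embedding: Optional[List[float]] = None   # filled in after capture
    metadata: Dict[str, Any] = field(default_factory=dict)

    def is_expired(self, now: datetime) -> bool:
        """Assumed rule: a legal hold blocks expiry; otherwise TTL from capture."""
        if self.retention_policy.legal_hold:
            return False
        ttl = timedelta(days=self.retention_policy.days_to_live)
        return now >= self.provenance.timestamp + ttl
```

Making `retention_policy`, `consent_state`, and `provenance` required arguments means a write that omits them fails at construction time rather than surfacing later in an audit.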

- Stand up storage with a default and an escape hatch

- Default: Postgres with pgvector for vectors, a relational table for records, and object storage for blobs. It keeps joins simple, reduces operational sprawl, and lets you use standard migrations. Your vectors sit next to your business data, which simplifies auditing.

- Escape hatch: a pluggable index interface for managed vector services when you cross a scale or latency threshold. Keep the adapter narrow: upsert, delete, query, and stats.

- Add a knowledge base with promotion workflows

- Split your memory into two zones: raw capture and promoted knowledge. Raw capture is noisy but rich. Promoted knowledge is reviewed, curated, and ready for broad reuse. Queue promotions behind a lightweight approval flow.

- Provide a graph overlay for entities and relationships. Use aliases, merges, and edge weights so the agent can reason about “the big customer that merged last quarter” without memorizing a string literal.

- Build scope-aware retrieval plans

- Start every request by resolving scope and purpose. Draft a query plan before the first fetch.

- Plan examples:

- Personal Q and A: search personal and project scope with time decay and no cross-team fetches.

- Team triage: search team scope first, then org-wide knowledge base, then call tools.

- Safety-critical change: only promoted knowledge and verified sources; no raw capture.

- Put a policy engine in the middle

- Keep a separate decision service that answers: can_read, can_write, can_promote, can_export. Log the rule that fired. Return explanations on denials.

- Use human-readable policy definitions. Short, testable rules beat opaque code.

- Make retention a product surface

- Show users where their memories live, how long they live, and why. Provide a one-click delete by scope and subject. Offer a download archive.

- Adopt renewable retention. Expire personal and session memory quickly by default. Require explicit renewal for team and org scopes. No silent forever.

- Instrument everything

- Log memory hits, misses, and fallbacks. Track how often memory improved quality and how often it confused the agent.

- Set a budget for tokens added by memory per task. Watch it like a cost control metric, not a footnote. Instrumentation pairs well with an agent observability control plane to close the loop between memory and outcomes.
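To make the storage escape hatch from this blueprint concrete, the narrow adapter (upsert, delete, query, stats) might look like the sketch below. The `VectorIndex` protocol and the brute-force `InMemoryIndex` are hypothetical; a real adapter would wrap pgvector or a managed service behind the same four methods.

```python
import math
from typing import Dict, List, Protocol, Set, Tuple


class VectorIndex(Protocol):
    """The four operations every index adapter is assumed to expose."""

    def upsert(self, record_id: str, embedding: List[float], metadata: dict) -> None: ...
    def delete(self, record_id: str) -> None: ...
    def query(self, embedding: List[float], k: int,
              scopes: Set[str]) -> List[Tuple[str, float]]: ...
    def stats(self) -> dict: ...


def _cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class InMemoryIndex:
    """Brute-force stand-in, useful for tests and rebuild drills."""

    def __init__(self) -> None:
        self._rows: Dict[str, Tuple[List[float], dict]] = {}

    def upsert(self, record_id: str, embedding: List[float], metadata: dict) -> None:
        self._rows[record_id] = (embedding, metadata)

    def delete(self, record_id: str) -> None:
        self._rows.pop(record_id, None)   # deleting a missing id is a no-op

    def query(self, embedding: List[float], k: int,
              scopes: Set[str]) -> List[Tuple[str, float]]:
        hits = [(rid, _cosine(embedding, vec))
                for rid, (vec, meta) in self._rows.items()
                if meta.get("owner_scope") in scopes]   # scope filter first
        hits.sort(key=lambda h: h[1], reverse=True)
        return hits[:k]

    def stats(self) -> dict:
        return {"count": len(self._rows)}
```

Keeping the surface this small is what makes a vendor switch a configuration change: any backend that satisfies the protocol can be swapped in without touching retrieval code.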

Interop with vendor features without marrying them

Vendors now offer attractive memory buttons. Use them, but keep your center of gravity. A pragmatic pattern looks like this:

- Mirror writes: when your agent saves a memory, write to your MemoryRecord and optionally to the vendor memory for convenience features like sticky chat context.

- Keep truth in one place: your MemoryRecord is the system of record. Vendor memory is a cache that can be rebuilt from exports.

- Export nightly: produce a scope-partitioned archive to your cloud storage. One zip per scope. Include schemas and hashes for integrity checks.

- Rebuild drills: practice restoring index state from exports on a fresh cluster every month. Treat it like a disaster recovery run.
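The nightly export can be prototyped with the standard library alone. The sketch below writes one zip per scope with the records, the schema, and a manifest of SHA-256 hashes; the file names inside the archive and the dict-shaped records are assumptions made for illustration.

```python
import hashlib
import io
import json
import zipfile
from collections import defaultdict
from typing import Dict, List


def export_by_scope(records: List[dict], schema: dict) -> Dict[str, bytes]:
    """Return {scope: zip_bytes}, one archive per owner_scope."""
    grouped = defaultdict(list)
    for rec in records:
        grouped[rec["owner_scope"]].append(rec)

    archives = {}
    schema_bytes = json.dumps(schema, sort_keys=True).encode("utf-8")
    for scope, recs in grouped.items():
        payload = "\n".join(json.dumps(r, sort_keys=True) for r in recs).encode("utf-8")
        manifest = {
            "scope": scope,
            "record_count": len(recs),
            "sha256": {
                "records.jsonl": hashlib.sha256(payload).hexdigest(),
                "schema.json": hashlib.sha256(schema_bytes).hexdigest(),
            },
        }
        buf = io.BytesIO()
        with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
            zf.writestr("records.jsonl", payload)
            zf.writestr("schema.json", schema_bytes)
            zf.writestr("manifest.json", json.dumps(manifest, sort_keys=True))
        archives[scope] = buf.getvalue()
    return archives


def verify_archive(zip_bytes: bytes) -> bool:
    """Recompute each file's hash and compare it with the manifest."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as zf:
        manifest = json.loads(zf.read("manifest.json"))
        return all(hashlib.sha256(zf.read(name)).hexdigest() == digest
                   for name, digest in manifest["sha256"].items())
```

A rebuild drill would then start by running `verify_archive` on each file before loading anything into the fresh cluster.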

Two opinions about technology choices

- You will go farther with a good relational backbone plus vectors than with a vector-only stack for most products. Joins, transactions, and migrations are the difference between a demo and a system. The pgvector ecosystem is mature and keeps options open.

- Managed knowledge bases are great for change detection and connectors. When you need tight control of promotion and policy, run your own curation path and use managed services as sources and sinks. For a concrete reference, review how Amazon Bedrock knowledge bases integrate data and retrieval before you design yours.

Product patterns that win

- Memory as preference, not prophecy: let users declare preferences that the agent can cite and edit. Do not guess.

- Memory with receipts: every time memory influences a decision, surface a short citation with a link to the source object and the policy that allowed it.

- Memory budgets: treat memory as a scarce resource like battery on mobile. Spend it where it changes outcomes.

- Memory promotion rituals: run weekly reviews where the team curates raw capture into promoted knowledge. Measure time-to-promotion.

Antipatterns to avoid

- Shadow memory: saving side-channel notes outside your retention and consent path. This creates policy debt that shows up during audits.

- Unbounded stickiness: letting chat history leak across projects because it helps. It will help until it mixes confidential details across clients.

- Single-index heroics: assuming one global vector index solves everything. Different scopes and latencies demand multiple indexes and caches.

- Reranker everywhere: adding a reranker to every query without measuring lift. It often adds cost and latency while hiding poor chunking.

A 30-day plan for teams

Week 1

- Define scopes and adopt the MemoryRecord schema. Ship a small ingestion service that writes raw capture with provenance and retention.

- Stand up Postgres with pgvector and build adapters for at least one managed vector service. Keep the interface narrow and tested.

Week 2

- Implement a basic policy engine with can_read and can_write. Start logging denials with explanations.

- Build a retrieval router with two query plans: personal Q and A and team triage. Add a simple cache.

Week 3

- Add summarization jobs for meetings and incidents. Promote summaries to knowledge with a human approval step.

- Expose memory visibility to users: show what is stored by scope and offer delete-by-scope controls.

Week 4

- Run a migration drill: export everything by scope, rebuild the index on a fresh cluster, and rehearse a vendor switch by pointing the adapter to your alternate index.

- Set budgets: max tokens from memory per task, max reranker calls per session, and a target P95 retrieval latency.

At the end of 30 days you will have a thin portability shell, a policy backbone, and a measured memory budget. You can now adopt vendor features without losing the keys to your own context.
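The Week 4 budgets are easy to check mechanically once usage is logged. The following sketch assumes a nearest-rank P95 and hypothetical key names for the usage and limit dictionaries; the threshold values in any real deployment are yours to pick.

```python
from typing import Dict, List


def p95(samples_ms: List[float]) -> float:
    """Nearest-rank 95th percentile, computed with integer arithmetic."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    rank = (95 * len(ordered) + 99) // 100   # ceil(0.95 * n) without floats
    return ordered[rank - 1]


def check_budgets(usage: Dict, limits: Dict) -> List[str]:
    """Return the names of any budgets that were exceeded."""
    violations = []
    if usage["memory_tokens"] > limits["max_memory_tokens_per_task"]:
        violations.append("memory_tokens")
    if usage["reranker_calls"] > limits["max_reranker_calls_per_session"]:
        violations.append("reranker_calls")
    if p95(usage["retrieval_latencies_ms"]) > limits["target_p95_retrieval_ms"]:
        violations.append("p95_retrieval_latency")
    return violations
```

Running a check like this in continuous integration or on a nightly job turns the budgets from a slide into an alert.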

What to watch next

- Identity-aware prompts: model runtimes that personalize prompts based on scope and policy without sending raw memory on every turn.

- Continuous re-embedding: cheaper, faster embeddings that allow near real-time updates without batch jobs.

- Memory provenance standards: signatures on summaries and citations that make cross-vendor trust portable.

- AI-native caches: shared caches that understand retrieval semantics and reduce repeat queries across teams.

The bottom line

Memory is not a checkbox. It is the data layer that will decide which agents feel trustworthy, fast, and portable. The battleground is not just who stores vectors faster. It is who controls the relationship between scope, knowledge, and policy. Teams that define a portable memory interface, keep one source of truth, and practice export and rebuild from day one will ship faster now and negotiate better later. That is how you win the memory wars without giving up the map.