Facewear Rising: Smart Glasses Become Agent-Native Platform

Meta’s Ray-Ban Display and Neural Band hit the market, and Reuters reports Apple is accelerating AI-first glasses. Together they signal the first agent-native consumer platform. Here is what changes, who wins, and what to build now.

Breaking news, and why it matters

In mid-September, Meta pulled the future a little closer. The company introduced Ray-Ban Display smart glasses with an in-lens screen and a companion wrist sensor called the Neural Band, which reads muscle activity to turn tiny finger motions into commands. The product went on sale in the United States on September 30 for $799, a price that covers both the glasses and the band. See Meta's announcement: Ray-Ban Display and Neural Band.

One day later, the story expanded. On October 1, Reuters reported that Apple paused work on a lighter Vision Pro headset to accelerate work on smart glasses that lean into voice and artificial intelligence. The report suggests a two-stage plan, starting with a model that pairs with the iPhone, followed by a version with its own display. See the coverage: Reuters reports Apple pivot.

Taken together, these moves mark the moment smart glasses become the first agent-native consumer platform. Not accessories. Not novelty cameras. A platform designed for software that sees what you see, remembers what matters, and takes action for you while you stay heads-up in the world.

From assistants to agents, now on your face

Assistants answer prompts. Agents do jobs. The difference is autonomy and loop closure. An agent does not just respond. It observes, plans, acts, and learns from the result. That loop needs eyes, memory, and hands.

Glasses supply the eyes and ears through cameras and microphones. A neural wristband or subtle voice input supplies the hands. A glanceable lens display supplies the feedback your brain needs to stay oriented without dropping into a phone. Put those together and you have the first consumer device that can run the full loop in everyday life.

Think of an agent like a competent stagehand that moves with you. On a phone, that stagehand keeps tugging you behind the curtain. With glasses, it finally works in the wings, quietly updating the set while you stay on stage.

The real-world agent loop: see, remember, act

Here is how the loop translates to daily life.

- See: The glasses capture context with the camera and hear ambient cues like a boarding call. The agent recognizes the object in your hand, the sign you are reading, or the food on your stove. It knows this because you let it see what you see during the task, not because it scraped your inbox two hours ago.

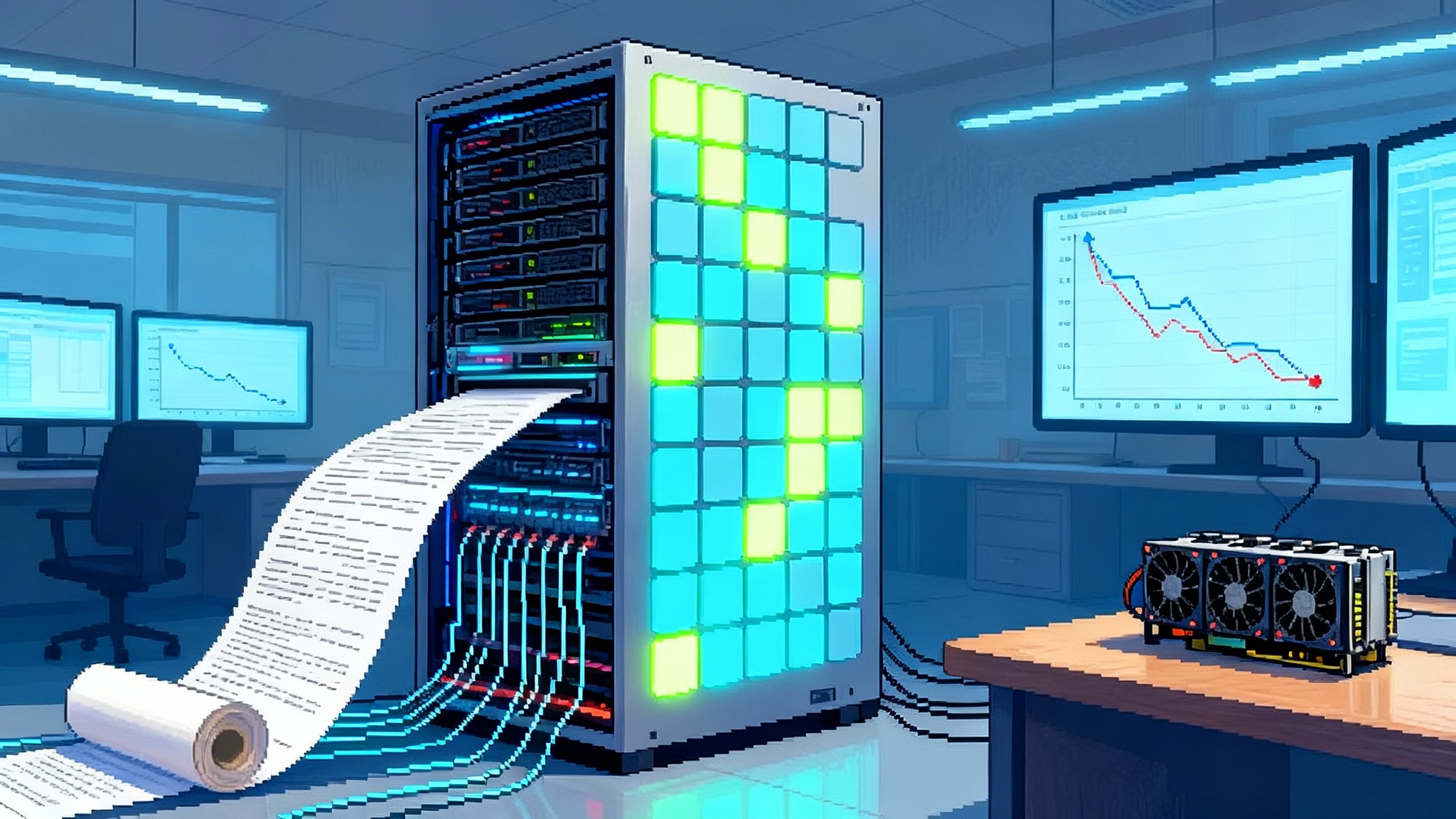

- Remember: The agent keeps task memory and personal preferences. That can live on device, in the cloud, or split between the two. Memory is not a diary of your life. It is a working set that helps the agent finish the job you gave it and get better at your routine.

- Act: The agent sends the message, starts the timer, logs the expense, checks in for the flight, or buys the part. It asks for confirmation on your lens when the action is sensitive, and it requires a silent thumb-and-finger approval for anything with real consequences.
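The see, remember, act loop above can be sketched in a few lines. This is a minimal illustration, not any vendor's API: `Action`, `TaskMemory`, `run_step`, and the `plan` and `confirm` callbacks are hypothetical names introduced here, and the planner is assumed to be supplied by the skill author.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    name: str
    sensitive: bool = False  # True for anything with real consequences


@dataclass
class TaskMemory:
    """Working set for the current task, not a diary of the wearer's life."""
    observations: List[str] = field(default_factory=list)
    completed: List[str] = field(default_factory=list)


def run_step(
    observation: str,
    memory: TaskMemory,
    plan: Callable[[str, TaskMemory], Action],
    confirm: Callable[[Action], bool],
) -> str:
    """Run one pass of the loop: see, remember, then act (with approval if needed)."""
    memory.observations.append(observation)      # see + remember
    action = plan(observation, memory)           # decide what to do next
    if action.sensitive and not confirm(action):
        return "declined"                        # no approval, no side effect
    memory.completed.append(action.name)         # act, then record the result
    return "done"
```

In this sketch, `confirm` stands in for the lens prompt plus the wristband approval: a planner that returns `Action("buy_part", sensitive=True)` only completes when `confirm` returns `True`, while a low-stakes `Action("start_timer")` runs without asking.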

Concrete examples make it real:

- Kitchen: You say, “let’s cook that saved tofu recipe.” The agent recognizes the pan and the ingredients on the counter. It shows three steps on the lens, listens for the sizzle, flashes a lower-the-heat alert if the oil smokes, and logs substitutions so it can adapt next time.

- Commuting: You walk toward the wrong train platform. The lens flashes a small arrow and the band buzzes twice. No map juggling, no missed train.

- Work: You look at a whiteboard. The agent captures a clean copy, labels it with the project name it heard in the meeting, and drops it into the right folder. A pinch gesture shares it to the team with your standard caption.

- Parenting: You point at a museum placard. The lens shows kid-level facts and a quick scavenger challenge. The agent notices the attention span fading and shortens the activity.

In each case, the agent does not replace your presence. It replaces the drop into your phone that breaks it.

Input, output, and feedback: EMG on the wrist, a display in the lens

Eyes need clear outputs and hands need low-friction inputs. The current wave of glasses adds both.

- Neural wristbands using surface electromyography pick up the faint electrical signals your muscles produce when your brain tells your fingers to move. The band reads a micro pinch, a scroll motion, a rotate gesture, and even silent text entry with practice. The biggest win is social. You can approve, undo, or select without speaking. That matters on a quiet train or a noisy street, and it sidesteps the awkwardness of barking commands in public.

- Lens displays deliver information where your vision already is. They are small and glanceable, not a floating desktop. Done right, they cut cognitive load. You see a discreet message chip, a short navigation cue, or a timer tick. You make eye contact with your life, not your screen.

Compared to a watch, the display sits where your attention naturally lands, so it avoids arm lifts for every micro interaction. Compared to a phone, the wristband avoids the tug-of-war between eyes and hands that pulls you out of the moment. The combination is a human factors upgrade, not just a gadget trick.

Why Apple and Meta now converge

Meta is building from wearables outward. Apple is collapsing a head-worn computer into everyday frames. The strategies differ, but the destination is the same: hands-free agents that live at eye level.

The pivot matters because Apple is one of the few companies with both a massive developer base and best-in-class custom silicon for low power on-device inference. If Apple brings its chip and privacy muscle to glasses while Meta brings scale in social graph, messaging, and price points, developers will see a durable market. That is what creates a platform era, not a single hit device. For a sense of how agent runtimes are stretching, see our take on long-haul AI agents.

The race also puts pressure on the pieces around the glasses. Phones and watches become compute and sensor satellites. AirPods and similar audio devices become private audio channels and spatial anchors. Homes and cars become agent habitats. This is not a single-product story. It is a network effect across your personal hardware cluster.

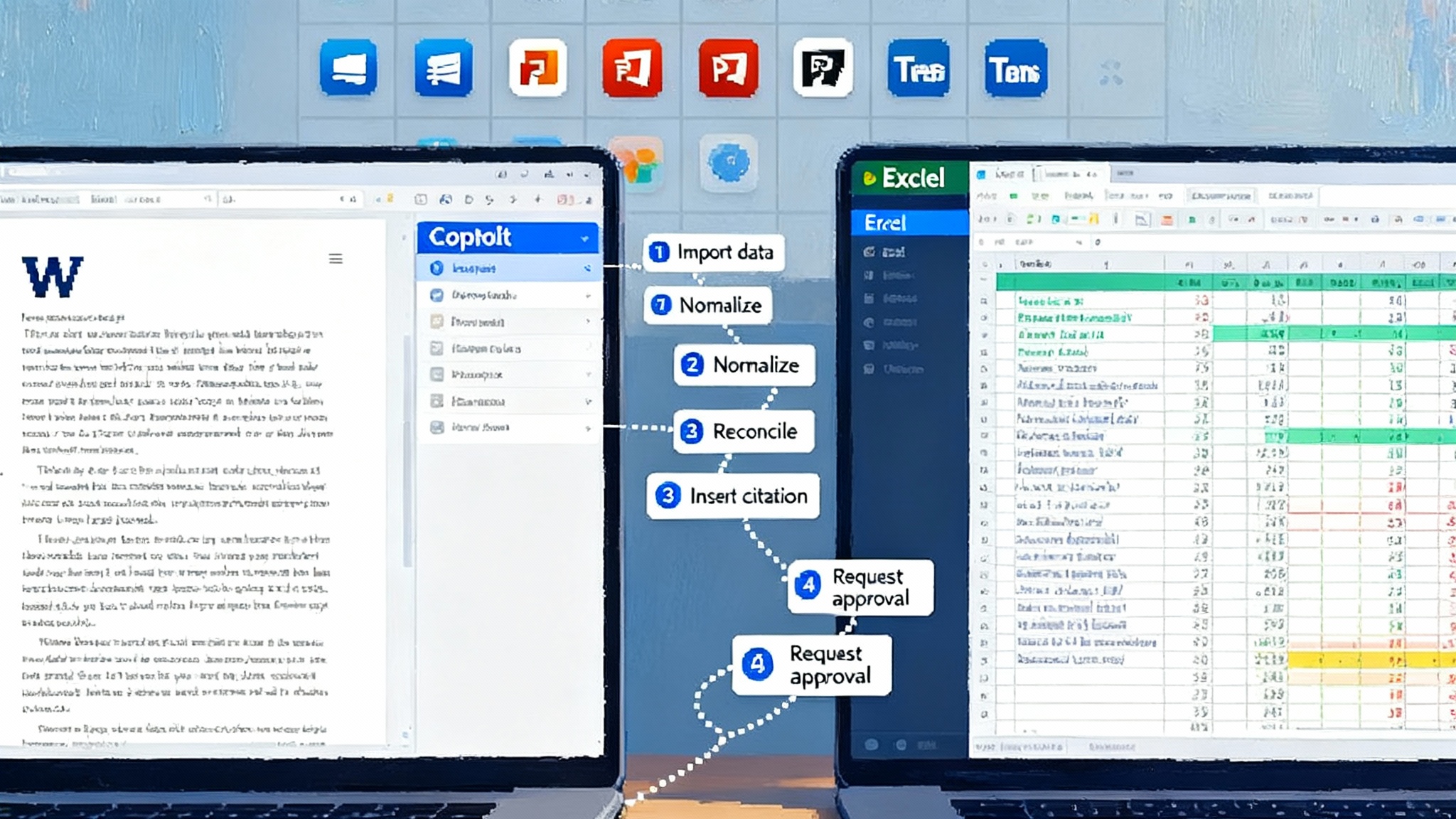

The coming skills economy for agent glasses

Agent-native apps will look less like icons and more like skills you can summon by name, context, or gesture. Expect three tiers.

- Companion skills that attach to existing services. The grocery app becomes an agent that recognizes your pantry, reduces food waste, and auto-builds the list as you cook. The language app becomes an agent that captions your conversations and practices vocabulary with objects you touch.

- Vertical agents for work. Field technicians get an agent that recognizes parts and checks procedures. Retail staff get an agent that matches shoppers to inventory on the shelf in front of them. Healthcare teams get an agent that documents encounters and prompts for consent when the lens detects a sensitive setting.

- New native categories. Memory agents that learn your home and your habits. Personal safety agents that detect hazards in a workshop. Creative agents that do live visual remix while you perform.

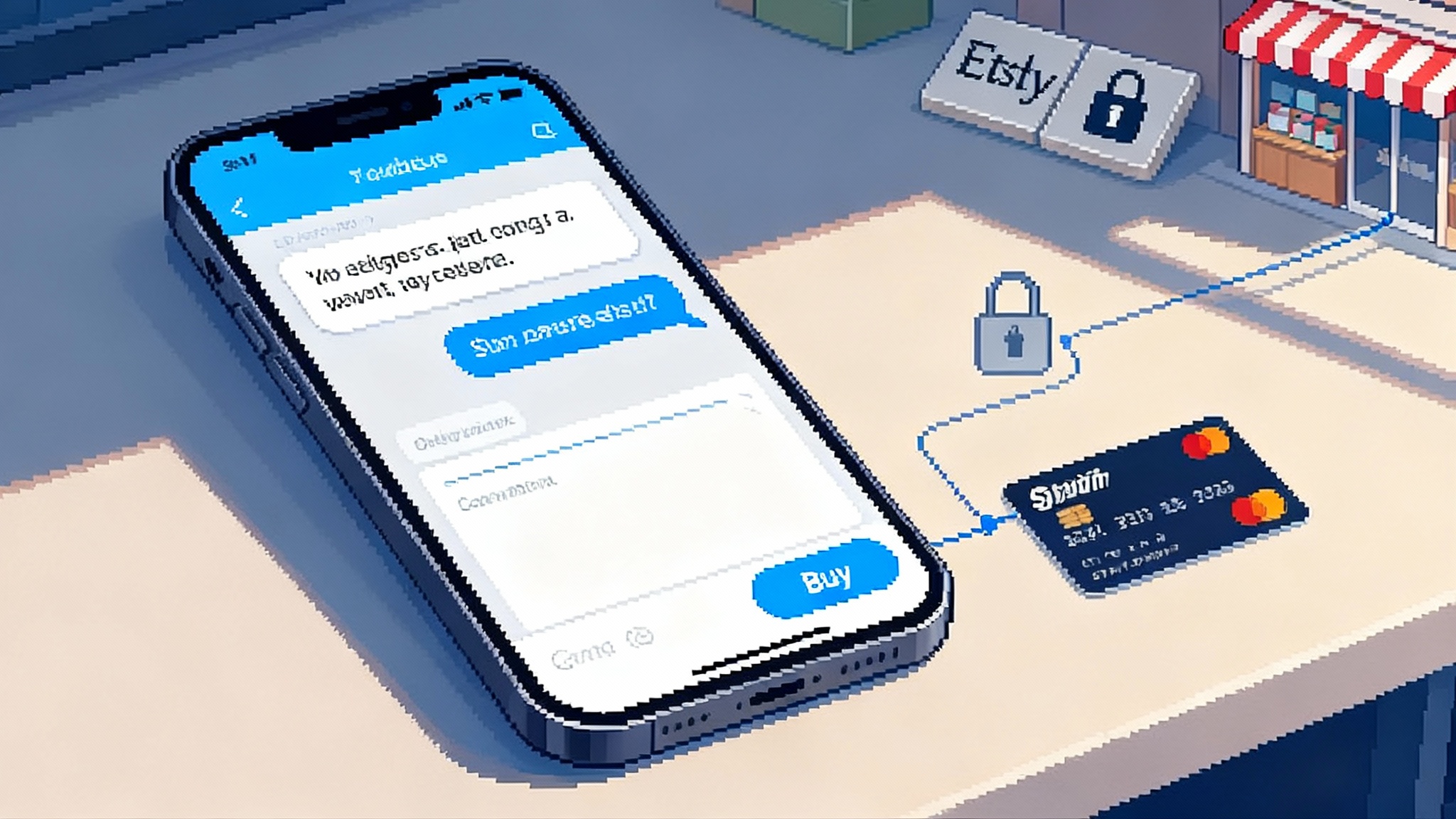

Monetization will follow function, not icons:

- Subscription for ongoing skills like translation, coaching, or professional checklists.

- Per-use fees for high-value actions such as notarization, medical transcription, or travel rebooking.

- Transaction and affiliate fees for commerce that the agent completes on your behalf.

- Action credits that cover compute cost for heavier on-device or cloud inference.

Expect platform rules that look like app store meets automation. Users approve sensitive scopes such as camera memory, location, and payments. High-risk actions require glance-to-confirm or a specific gesture. Logs are sealed and auditable. Skills compete on reliability, not animations. For governance patterns emerging on the enterprise side, see Azure Agent Ops Goes GA.
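One way to read "sealed and auditable" is a tamper-evident log in which each entry commits to the one before it. The sketch below assumes that interpretation; the field names and the `append_entry` and `verify` helpers are hypothetical, and a real platform would likely add signatures and off-device storage on top.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: Dict) -> str:
    """Hash a log body deterministically (sorted keys, UTF-8)."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: List[Dict], skill: str, action: str, approved: bool) -> Dict:
    """Append an entry whose hash covers its content and the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    body = {"skill": skill, "action": action, "approved": approved, "prev": prev_hash}
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: List[Dict]) -> bool:
    """Return True only if no entry was edited, removed, or reordered."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: entry[k] for k in ("skill", "action", "approved", "prev")}
        if entry["prev"] != prev_hash or _digest(body) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True
```

Changing any field of an earlier entry makes `verify` fail, which gives reviewers a cheap integrity check before they look at what a skill actually did.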

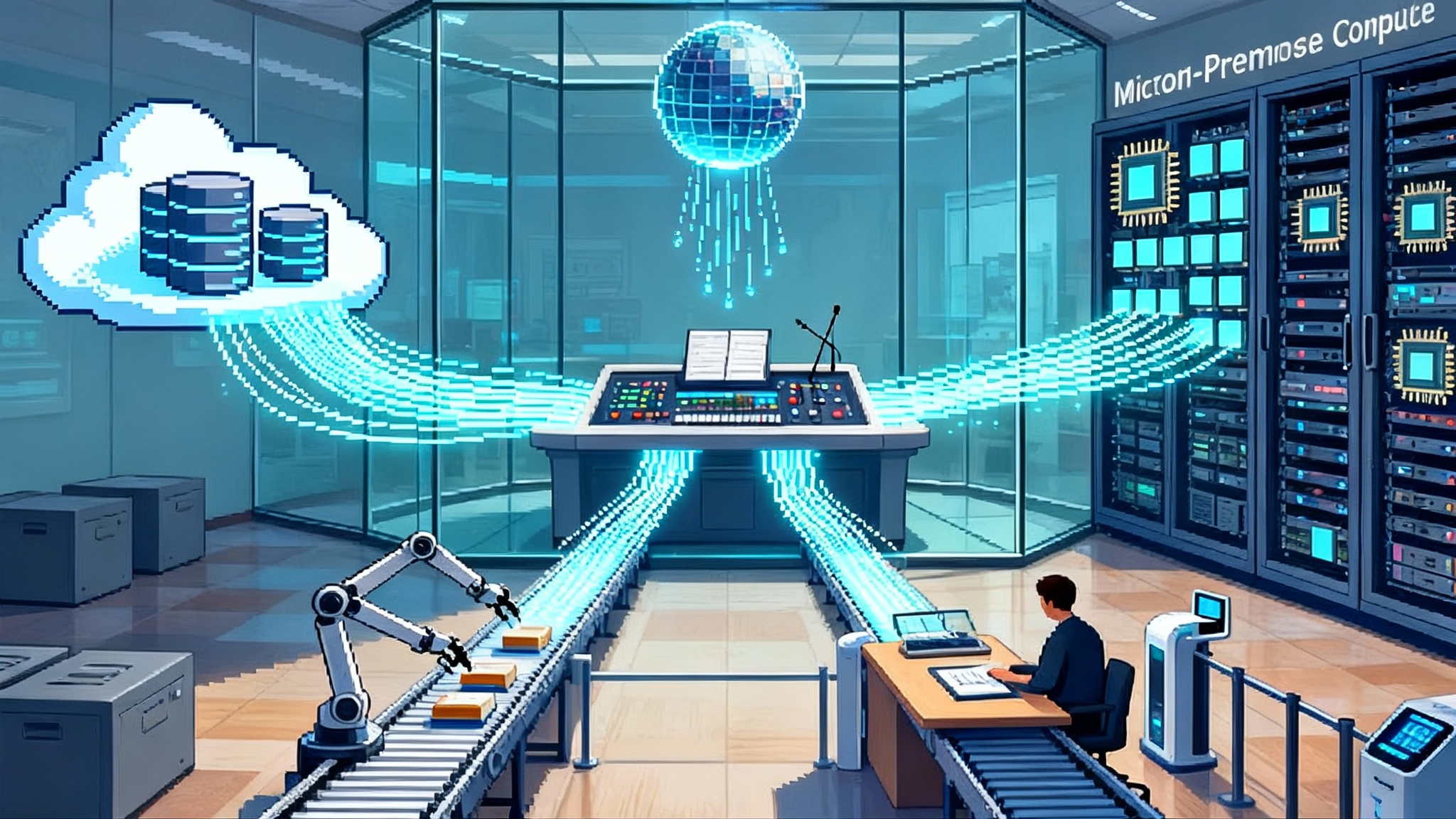

On-device inference vs cloud: privacy and performance, not a slogan

Smart glasses will mix on-device and cloud inference for the next several years. The trade-offs are specific.

- On device: lower latency, better privacy, and reliability on a flaky network. Perfect for wake words, hot commands, simple classifiers, basic translation, and safety checks. Battery is the constraint. Every extra milliwatt has a face comfort cost.

- In the cloud: larger models, longer context, and access to private or enterprise data. Perfect for planning, multi-step tasks, complex vision, and knowledge work. Privacy becomes a contract problem, not only a technology one.

A good default looks like this: process sensitive frames immediately on device, then discard them. Send abstracted features or redacted text to the cloud when a richer model is needed. Ask for permission when the agent wants to retain memory across days. Give users a single “show me what you kept” control that is simple enough for anyone to use. If you need a common language for policies and capabilities, revisit OSI for enterprise agents.
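That default can be written down as a small routing policy. The following is a sketch under stated assumptions: the task labels in `ON_DEVICE_TASKS` are taken from the on-device list above, while `Request`, `Decision`, and `decide` are hypothetical names, not part of any shipping SDK.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    ON_DEVICE = "on_device"
    CLOUD_REDACTED = "cloud_redacted"  # only abstracted features or redacted text leave


# Task labels mirror the on-device list in the text; the identifiers are illustrative.
ON_DEVICE_TASKS = {
    "wake_word", "hot_command", "simple_classifier", "basic_translation", "safety_check",
}


@dataclass
class Request:
    task: str
    contains_sensitive_frames: bool
    retain_across_days: bool = False


@dataclass
class Decision:
    route: Route
    discard_raw_frames: bool          # sensitive frames are processed locally, then dropped
    needs_retention_permission: bool  # ask before keeping memory across days


def decide(req: Request) -> Decision:
    """Pick where inference runs and which privacy steps the request triggers."""
    route = Route.ON_DEVICE if req.task in ON_DEVICE_TASKS else Route.CLOUD_REDACTED
    return Decision(
        route=route,
        discard_raw_frames=req.contains_sensitive_frames,
        needs_retention_permission=req.retain_across_days,
    )
```

Keeping the policy in one pure function makes it easy to test and to surface in a "show me what you kept" view, since every decision is an explicit, inspectable value.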

For bystanders, make the rules visible. A bright capture indicator when the camera records. An audible cue for live calls. A built-in mode that automatically blurs faces and private screens in the background unless the wearer is at home or has consent. Social norms will harden fast. The platform that handles this well will win trust early.

The headwinds are real, but solvable

Skeptics point to battery life, heat, and fashion. They are right that comfort wins markets. That is why the near-term lens displays are glanceable, not full overlays, and why the wristband offloads input from the frames. The daily wear target is six to nine hours with charging breaks, not twenty-four. That is acceptable if the experiences deliver consistent value in small bites.

Demos can fail. Networks can hiccup. Early owners will find the edges. The fix is not to delay until perfect. It is to ship narrow, reliable loops where the agent can be trusted, and to give developers the tools to build more of them. If a task cannot be done reliably hands-free, it should not be shipped on glasses yet.

Regulators will ask hard questions. The platform that leans into consent, transparency, and clear user controls will not just comply. It will create differentiation. Privacy is not only a risk. It is also a design opportunity that reduces clutter and focuses the agent on what you actually want.

Why 2026 could be the breakout year

Several forces converge next year.

- Installed base: 2025 sales seed a visible community of daily wearers. Word of mouth matters more than ads for facewear.

- Platform maturity: expect expanded glasses-specific software development kits, better neural band training flows, and lighter on-device models. Developers prefer stability and a clear path to paid distribution.

- Ecosystem catalysts: Apple’s move concentrates developer attention. Meta’s expansion into more regions brings scale. Carriers will bundle glasses with data plans, which matters for adoption.

- Use case clarity: early hits will emerge. Translation, navigation, capture, coaching, and quick-reply messaging are the likely leaders. Once two or three become daily habits, a store of agent skills makes sense.

That is when an agent app store appears on glasses. Not a grid of icons. A directory of skills that you can invoke by name, context, or gesture. Discovery will be contextual, not top charts. Billing will be tied to actions, not downloads. Reviews will measure reliability and privacy behavior. The store will feel more like a marketplace of helpful workers than a catalog of software.

What to build now

If you are a founder, product lead, or developer, there is a clear path.

- Pick a job that benefits from hands-free, eyes-up interaction. Cooking, fixing, teaching, training, guiding, inspecting, and coaching all qualify.

- Start with a small loop you can make reliable. Define a single success metric. For example, minutes saved per task, errors reduced, or completed steps per hour.

- Design for silent confirmation. A micro pinch for approve, a rotate for undo, a two-finger press for escalate to phone. Avoid long dictation flows.

- Split compute with intent. Keep an on-device classifier that recognizes the start and stop of your task and protects private frames. Push planning and knowledge to the cloud with clear permission prompts.

- Offer a free skill tier with hard limits and a paid plan that guarantees fast response and better memory. Add per-action credits for expensive tasks.

- Build enterprise pilots where the return on investment is obvious. Field service, retail operations, logistics, and hospitality can pay for reliability.
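The silent-confirmation item in the list above maps naturally onto a small gesture table. This is a minimal sketch: the gesture identifiers and the `handle_gesture` function are hypothetical, and it assumes the wristband layer has already classified the raw signal into a named gesture.

```python
from typing import Dict, Optional

# Gestures follow the text: pinch to approve, rotate to undo, two-finger press to escalate.
GESTURE_TO_INTENT: Dict[str, str] = {
    "micro_pinch": "approve",
    "rotate": "undo",
    "two_finger_press": "escalate_to_phone",
}


def handle_gesture(gesture: str, pending_action: Optional[str]) -> str:
    """Resolve a classified gesture against the action currently awaiting a decision."""
    intent = GESTURE_TO_INTENT.get(gesture)
    if intent is None:
        return "ignored"  # unknown gestures never approve anything
    if intent == "escalate_to_phone":
        return "escalated"
    if pending_action is None:
        return "ignored"  # nothing to approve or undo
    if intent == "approve":
        return f"approved:{pending_action}"
    return f"undone:{pending_action}"
```

The design choice worth noting is the failure mode: anything the table does not recognize is ignored rather than treated as approval, so a misread gesture costs a retry and not an unwanted purchase.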

If you are a brand, think service, not ads. A travel agent that rebooks you while you are walking to a gate earns loyalty. A grocery agent that helps you waste less food earns trust. Agents that interrupt will get uninstalled.

The shape of the platform

Agent-native glasses will not replace phones overnight. They will absorb the moments where phones are weakest. Quick capture. Subtle control. In-context guidance. Over time, they will pull more tasks up to eye level where attention is scarce and presence is precious.

Meta’s launch puts the hardware and input rails on the table. Apple’s shift points the developer spotlight at facewear. The rest is up to the software builders who decide what the agent learns first and how it behaves in public.

The next platform will not be defined by pixels per degree or model parameters alone. It will be defined by how well agents help people do real things without leaving the moment. That is the bar. Meet it, and 2026 will be the year facewear gets its app store.