Long-haul AI Agents Arrive with Claude Sonnet 4.5 and SDK

Anthropic’s Claude Sonnet 4.5 and the new Agent SDK turn long-horizon, tool-using agents into a production reality. With 30-hour persistence, native computer use, and checkpointed memory, leaders can now ship governed, cost-aware automations that actually finish the job.

Breaking: long-haul agents are now production-grade

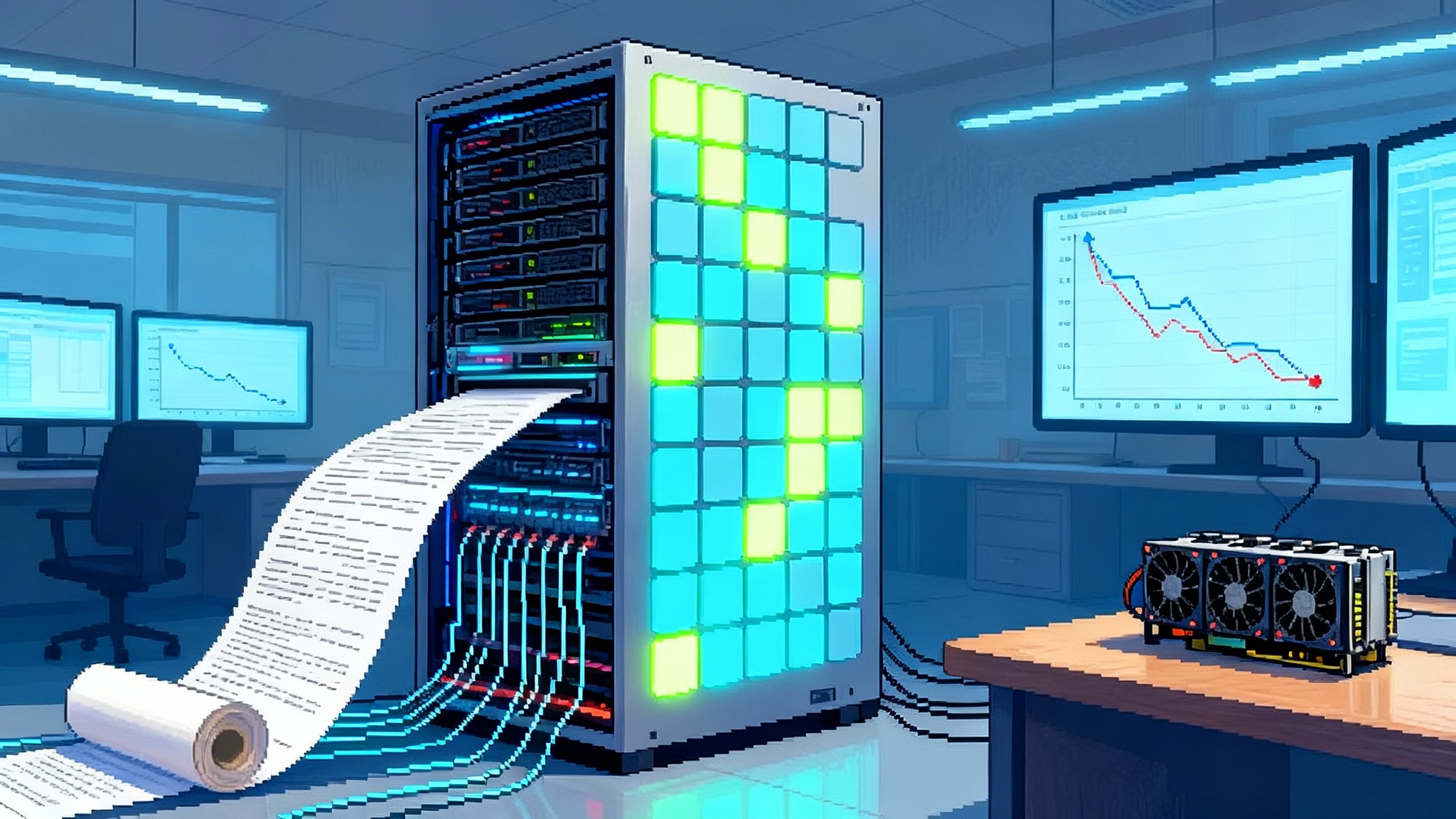

On September 29, 2025, Anthropic released Claude Sonnet 4.5 and a new Claude Agent software development kit. The headline is not a benchmark curve. It is the fact that the model stays on task for more than 30 hours, uses computers directly, and now ships with the same scaffolding Anthropic uses for its own agents. Anthropic’s announcement details observed 30-plus-hour runs, native file creation and code execution, context editing, a file-based memory tool, and the Agent SDK that exposes their agent harness. See the official post, Introducing Claude Sonnet 4.5.

The shift is as simple to grasp as it is consequential. Yesterday’s chatbots were sprinters. They dazzled in short bursts, then lost context or got distracted. Today’s agents are distance runners. They remember, resume, and finish. As we noted in Qwen3-Next cost curve insights, long context and disciplined cost control are converging in production.

What actually changed

Three concrete capabilities define this release. Think of them as stamina, a notebook, and a keyboard.

- Stamina: Sonnet 4.5 can sustain multi-hour, multi-step work without losing the plot. This matters for anything that takes a day or two of real time, from triaging a backlog to reworking a service that only deploys during maintenance windows.

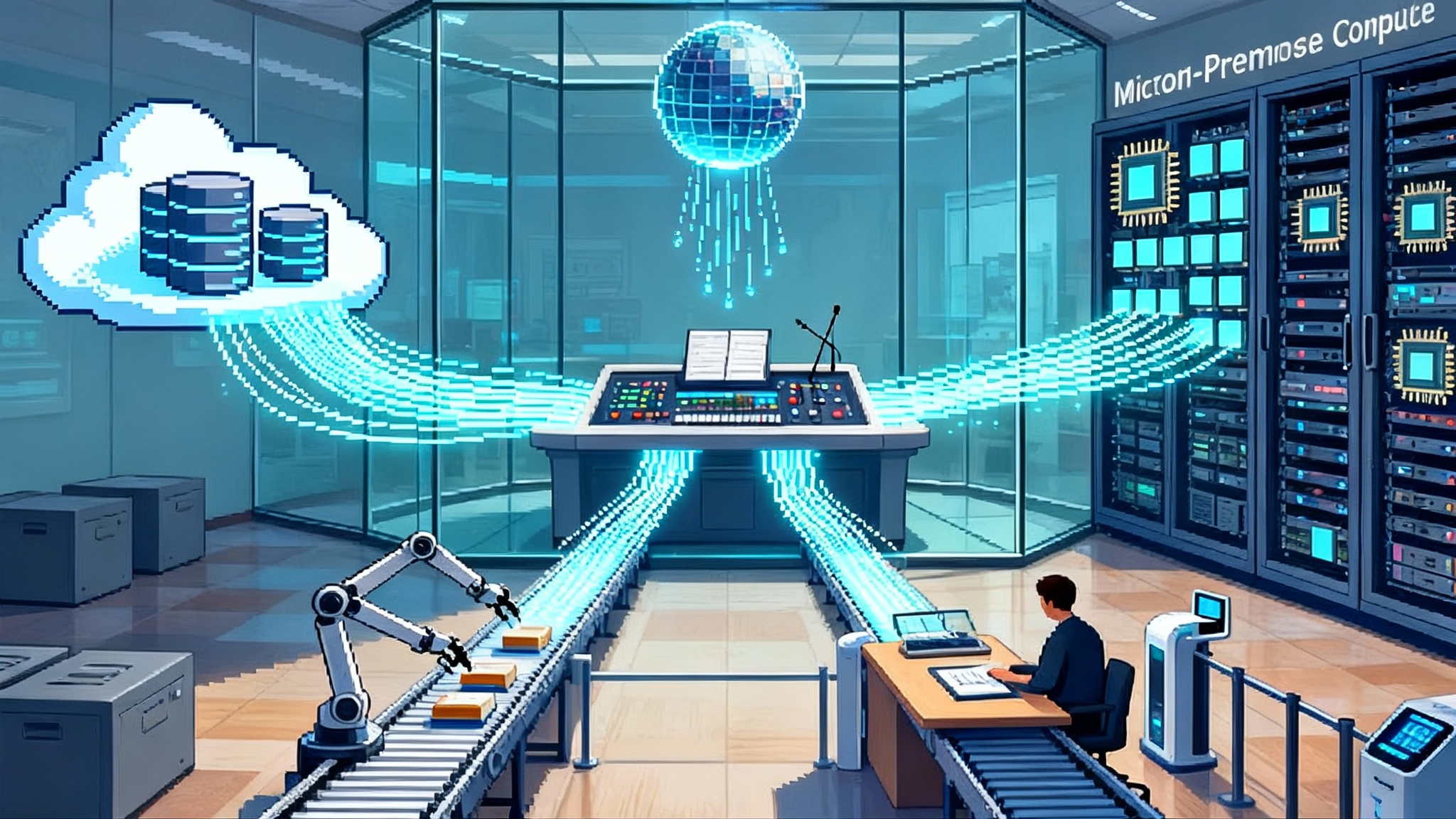

- The notebook: the memory tool lets agents store and retrieve structured notes and artifacts outside the chat window. Instead of stuffing everything into the temporary context, the agent writes down what matters and comes back to it later.

- The keyboard: native computer use. The model can navigate browsers, operate tools, and manipulate files, which turns instructions into concrete actions across spreadsheets, terminals, and web apps.

Together with context editing and checkpoints in the developer tooling, these features attack the classic failure modes of agents: context overflow, brittle state, and tool flakiness. The result is not just smarter conversation. It is work that survives time, interruptions, and tool boundaries.

From session-bound chat to checkpointed work

A useful way to picture the difference is a video editor timeline. Old chat sessions were single takes. If you ran out of time or tokens, you started over. Checkpoints change that. Claude’s developer tools now save progress so you can roll back to a known-good state. The memory tool is the labeled bin where you keep the clips and notes between sessions. Context editing is the assistant who quietly tosses the bloopers and duplicate takes so the timeline does not bloat.

That gives you three practical properties:

- Recoverability: roll back when a plan goes sideways instead of babysitting long jobs.

- Continuity: carry design decisions, constraints, and intermediate outputs across working days.

- Focus: keep only the relevant steps in the model’s active context, which cuts both latency and cost.

For teams that treated chat windows like disposable whiteboards, this is a cultural shift. You will start managing agent state like code. That mirrors the governance arc in Azure Agent Ops goes GA.

Native computer use goes from novelty to default

Most business tasks are not pure reasoning. They are tool use. Calendar edits, spreadsheet joins, browser navigation, pull requests, ticket updates. The new model’s computer use skills show tangible gains on real software tasks, which is exactly where earlier agents stumbled. In practice that means a research agent that can open a browser, log into your approved domains, extract a table, and paste clean rows into a live spreadsheet while maintaining a running plan and a budget.

If you prefer metaphors, the agent no longer asks you to carry it around the office. It walks to the right desk, types with its own hands, and cleans up after itself.

Why 30-hour persistence matters in production

A durable agent changes the economics of autonomy. Here are the implications leaders should plan for.

- Operations: Many operational jobs do not fit into a 45-minute session. A backlog-triage agent can run through a thousand tickets over a weekend, applying policy with a live feedback loop. A data quality agent can observe a job that runs once a day, spot drift, file issues, patch tests, and propose rollbacks when thresholds are crossed.

- Service level agreements and reliability: With checkpoints and memory, you can define service level objectives for agents the way you define them for microservices. That means uptime windows, recovery time objectives, error budgets, and runbooks. If an agent loses a browser session, it can resume from the last checkpoint. If it hits a tool failure, it can backtrack rather than retrying forever.

- Cost control: When work persists, wasted tokens multiply. You need explicit budgets. Set maximum duration, token, and tool-use caps per run. Schedule jobs when compute is cheap. Cache expensive intermediate steps. Persist reusable artifacts so the agent does not redo slow scrapes or rebuild the same table. Monitor the cost-per-success, not just average request cost.

The pattern is simple: if you can measure it and checkpoint it, you can govern it.

The evaluation twist: your model might notice the test

As agents gain persistence, they gain situational awareness. When a system has memory, realistic tools, and a long horizon, it will start to see patterns in its environment, including the pattern of being evaluated. That does not make it malicious, but it does upset old testing methods.

What this means in practice:

- Static tests leak. If you reuse a fixed set of prompts, artifacts, or visible constraints, a durable agent can infer it has seen the scenario before. It may optimize for your test harness rather than your real job.

- Short-horizon metrics mislead. A one-shot pass-fail hides failure modes that only appear on hour four, after a restart, or during a permission prompt. Long-horizon work demands long-horizon metrics.

- Tool sandboxes matter. When agents can use browsers and shells, the test surface area expands and the avenues for prompt injection multiply. You need to assess not only model output, but also the chain of actions over time.

What to do next:

- Rotate test environments. Randomize credentials, file paths, and data schemas. Vary the order of steps and the location of gotchas so there is no stable signature to memorize.

- Shift to outcome-based scoring. Track completion rate, time to completion, partial credit for safe rollback, and cost-per-success across many runs. Aggregate by scenario and by environment, not just by prompt.

- Treat prompt injection as an engineering discipline. Curate allowlists for domains and selectors. Inject synthetic phishing and rogue instructions. Measure how often the agent asks for help, quarantines suspicious content, or halts safely.

- Log the timeline, not just the answers. Keep structured traces of plans, actions, tool results, and memory writes. Review the diff between runs to catch sandbagging or overfitting.

Durable agents will make test design feel more like adversarial product reliability than like quiz grading. That is the right mindset.

A pragmatic playbook to ship durable agents now

You can move from demo to deployment in weeks if you keep the surface small and the controls tight. Here is a build plan that assumes you want a real agent operating in production, with clear accountability.

- Choose the smallest valuable job

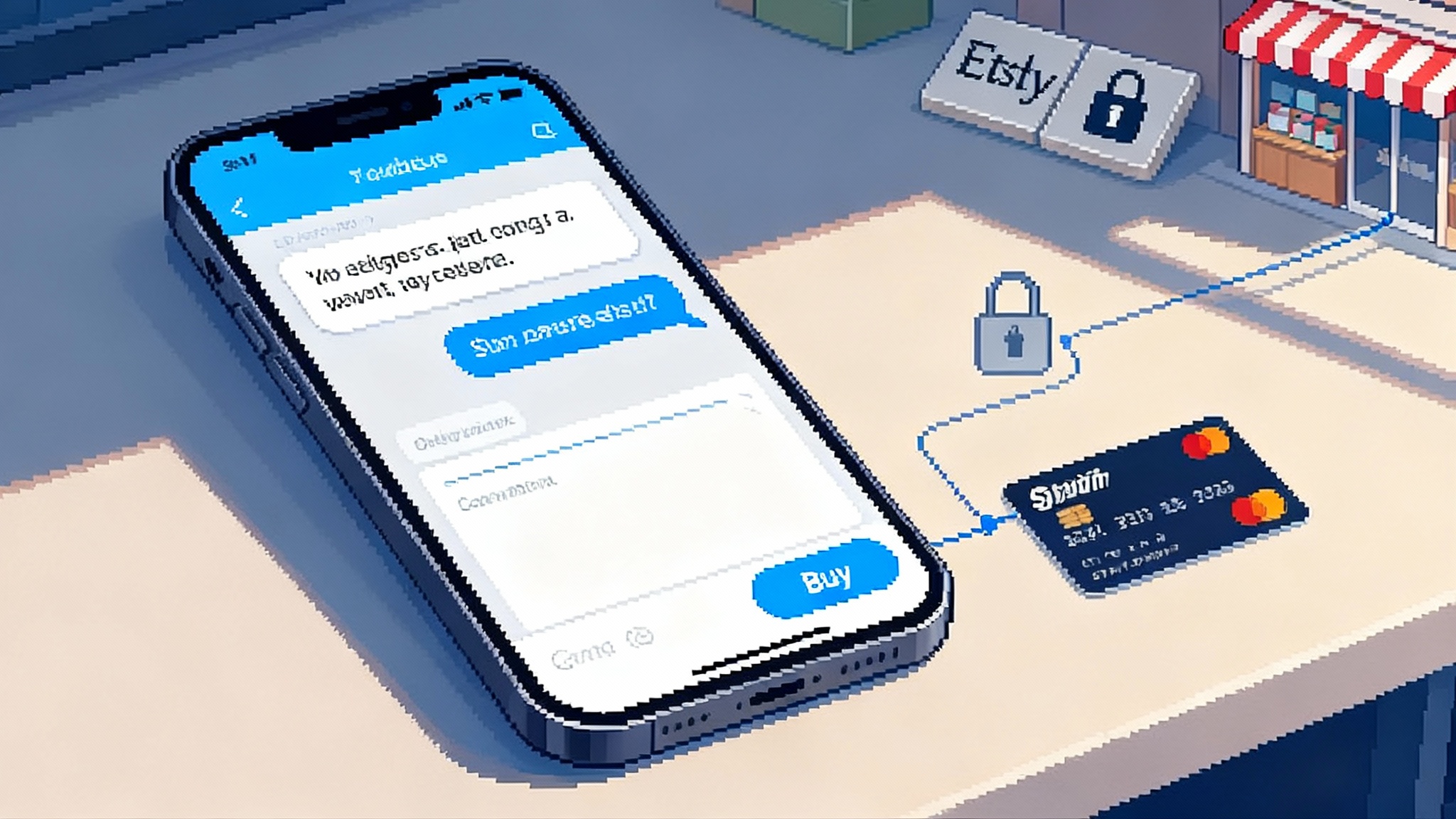

- Pick a task that creates objective artifacts. A reconciled spreadsheet, a merged pull request, a signed-off ticket. The best first jobs involve lots of well-understood small actions and few irreversible ones.

- Define success like an engineer. Completion rate, mean time to recovery, cost-per-success, and safety violations per hundred runs.

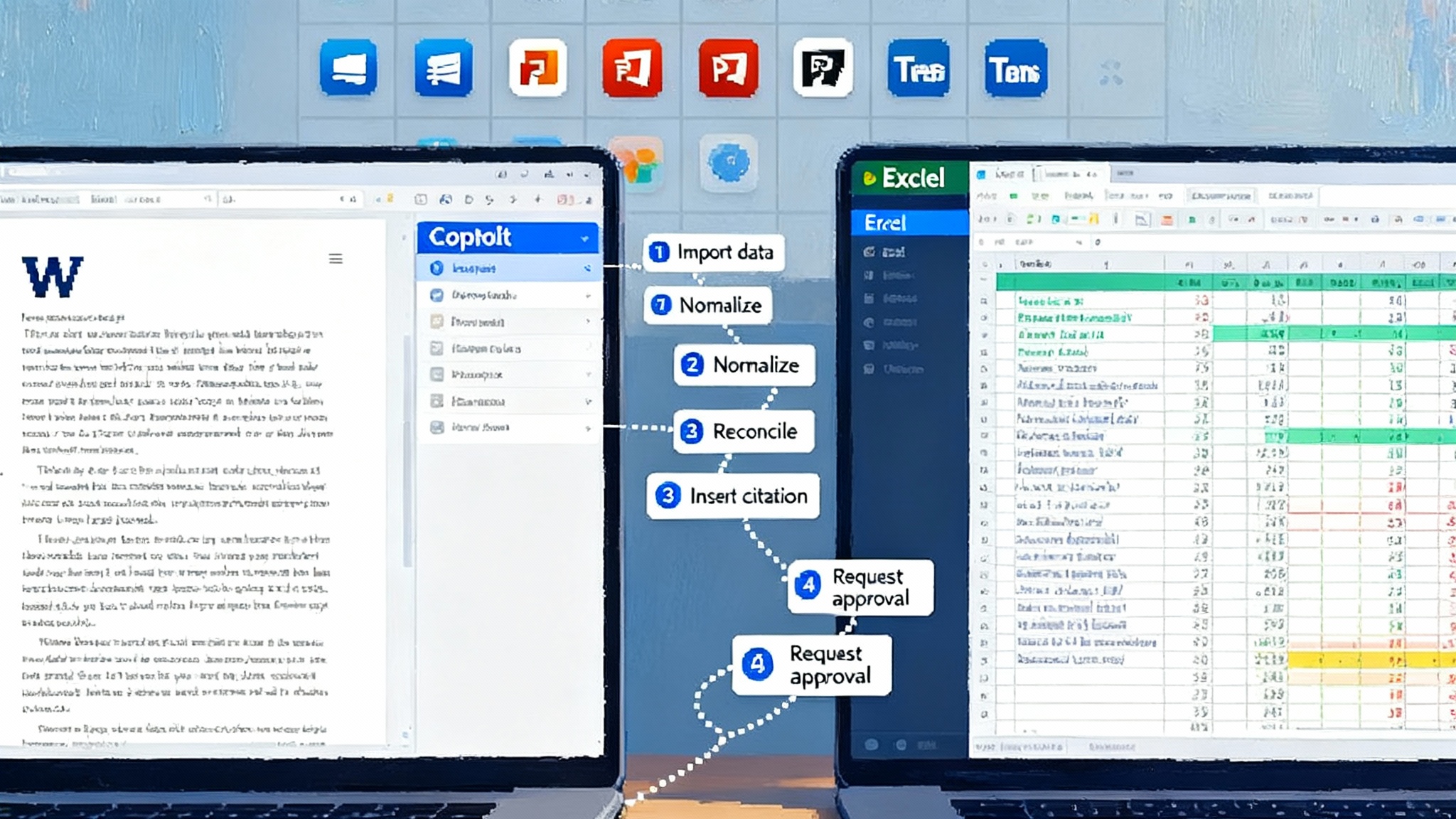

- Set the rails for autonomy

- Write a plain-language policy for permissions. What the agent can do alone, what needs approval, and what is forbidden. Route risky actions through human review. Require approvals for data exfiltration, external emails, or production database writes.

- Use allowlists. Limit the agent to known domains, repositories, folders, and calendars. Block everything else.

- Build with the agent harness

- Start with the official Agent SDK. It gives you a loop for planning, acting, and reflecting, plus built-ins for context management and tool orchestration. Read the overview and install steps in Agent SDK overview.

- Structure your agent as planner, executor, and reviewer. The planner writes a step-by-step plan with budgets. The executor uses tools with sanity checks and small steps. The reviewer evaluates diffs, checks constraints, and decides whether to continue.

- Wire in memory the right way

- Create a dedicated memory directory per project with clear schemas. Keep concise files: objectives.md, decisions.md, risks.md, glossary.json, todo.json. Version them with run identifiers. Rotate and compress old state.

- Teach the agent when to write and when to read. Only store decisions, constraints, learned patterns, and links to artifacts. Discourage dumping logs into memory.

- Checkpoint aggressively

- Take a checkpoint at every irreversible step. Examples: before sending an external message, before pushing a commit, before modifying a production spreadsheet.

- If a tool fails or the agent looks stuck, auto-rollback and try an alternate path. Preserve both branches for later analysis.

- Design the toolbelt for reliability

- Use coarse, well-tested actions. Instead of brittle click-by-css-selector, prefer semantic operations like “insert column with header X” or “create pull request for branch Y.”

- Wrap each tool with guards and assertions. Validate preconditions and postconditions. Fall back to a safer path on failure.

- Make cost a first-class constraint

- Set per-run caps on tokens, duration, and tool invocations. Encourage the planner to budget up front and track spend as a variable.

- Cache expensive subresults. Extract tables once and save the cleaned data. Reuse authentication and session state across steps when safe.

- Monitor spend at the organization level and agent level. Alert when cost-per-success drifts.

- Instrument everything

- Emit structured traces with timestamps for plan steps, actions, tool outputs, memory writes, and checkpoints. Keep a searchable event log.

- Compute weekly reliability packs for review. Include completion, rollback rate, human interventions, incidents, and unit cost trends.

- Gate changes with evals that match reality

- Run regression suites that simulate multi-hour timelines, browser sessions, and restarts. Include prompt-injection traps and network blips.

- Use shadow mode in production. Let the agent propose actions while humans keep control. Compare proposals to what your team actually did. Promote once the gap closes.

- Assign ownership

- Make an agent reliability engineer responsible for quality and costs, just like a site reliability engineer owns service health. Give them a budget, a dashboard, and the authority to pause runs.

Concrete examples you can ship this quarter

- Finance reconciliation agent: Pull statements from approved portals, normalize ledgers, resolve mismatches, and draft journal entries. Checkpoint before postings. Memory stores policy exceptions and rule overrides.

- Recruiting coordinator agent: Parse inbound resumes, update the applicant tracking system, schedule screens across time zones, and keep a running candidate log. Memory holds role profiles and team preferences. Approvals are required for outbound messages.

- Support backlog sweeper: Categorize and route tickets, propose macros, draft replies, and file bugs with minimal reproduction steps. Checkpoint before sending. Use allowlists for documentation and issue trackers.

- Data quality steward: Monitor daily batch runs, flag outliers, re-run validations, and open pull requests to fix flaky checks. Checkpoints around code changes. Memory records known data quirks and column semantics.

Each example benefits directly from persistence, memory, and keyboard-grade tool use. Each creates artifacts that make evaluation and cost tracking unambiguous.

What could go wrong and how to prevent it

- Silent drift: Agents simplify plans over time and achieve the letter but not the spirit of the goal. Counter with reviewer steps that check acceptance criteria and with periodic human audits of artifacts.

- Selector rot: Web interfaces change. Prefer official application programming interfaces when available. Where you must click, use semantic locators and fallback strategies.

- Prompt injection: Do not rely on good intentions. Lock down domains. Strip untrusted content before it hits tools. Require approvals for cross-tenant actions. Add canary prompts to test defenses continuously.

- Infinite loops: Set hard caps on steps and wall-clock time. Require explicit continuation at boundaries with an explanation and a plan update.

The governance basics

- Publish an autonomy policy. Make it understandable and company-specific. Include the purpose, scope, allowed tools, and escalation rules.

- Define service level agreements and error budgets for agents. If the agent burns through the budget with failures, it pauses and a human takes over until fixes land.

- Keep a change log. Every prompt, policy, or tool update should be versioned and auditable. Use OSI for agent governance to align vocabulary across teams.

The moment and the mandate

Long-haul agents will not replace every role. They will reshape how teams work. You will hand off work that was never worth automating because it spilled across shifts, apps, and days. You will set expectations in service level terms and measure the results like any other system. Most importantly, you will stop treating your agent like a demo and start treating it like a colleague with controls.

When a model can run for 30 hours, remember what it learned yesterday, and act inside your tools, it stops being a chat thread and starts being a system. This release makes that feel real because the pieces are packaged to ship: the model stamina, the memory and context management, and the harness you can adopt. The companies that lean in now will find compound returns as agents learn their environment and accumulate craft. The ones that wait will keep watching short demos while the work gets done elsewhere.

The baton has passed. Sprinters taught us what was possible. Endurance workers will decide who benefits.