Compute-Agnostic Reasoning: DeepSeek Shock and Split Stacks

China-native backends just landed as U.S. standards scrutiny intensified. Cheap, open reasoning models now run on domestic NPUs and Nvidia, cracking the CUDA moat, accelerating model sovereignty, and reshaping inference costs. Here is the split-stack playbook.

The week the backends broke loose

In the span of a few days at the end of September and the start of October 2025, two signals arrived that tell the same story. First, China-native backends and plugins shipped that make it practical to run state-of-the-art reasoning models on domestic accelerators such as Huawei Ascend, Cambricon, and Hygon, not just on Nvidia. Second, U.S. standards bodies turned up the spotlight on Chinese models, especially DeepSeek’s line of low-cost reasoning systems. The net effect is a visible split in the stack. Models are getting compute-agnostic, backends are multiplying, and procurement is turning into a portfolio game.

If you are a builder, this split is a gift. It allows you to reduce vendor risk, shop for the best price and throughput, and keep shipping even when a single chip family gets squeezed. It also demands real engineering discipline, because diversity without guardrails can inflate reliability debt. This article ties the headlines to the architecture, and closes with a playbook you can apply this quarter.

From the DeepSeek shock to practical sovereignty

The reference point for today’s moment is the DeepSeek shock earlier this year. DeepSeek’s R1 line of reasoning models arrived fast and cheap, and the market reacted. Coverage noted how DeepSeek R1 shocked markets by delivering competitive reasoning at a fraction of expected cost. Whether or not you deploy DeepSeek, the shock was not only about a single model’s scores. It was proof that reasoning models could be compressed, distilled, and tuned to run almost anywhere, from workstation cards to data center accelerators, without five years of software plumbing.

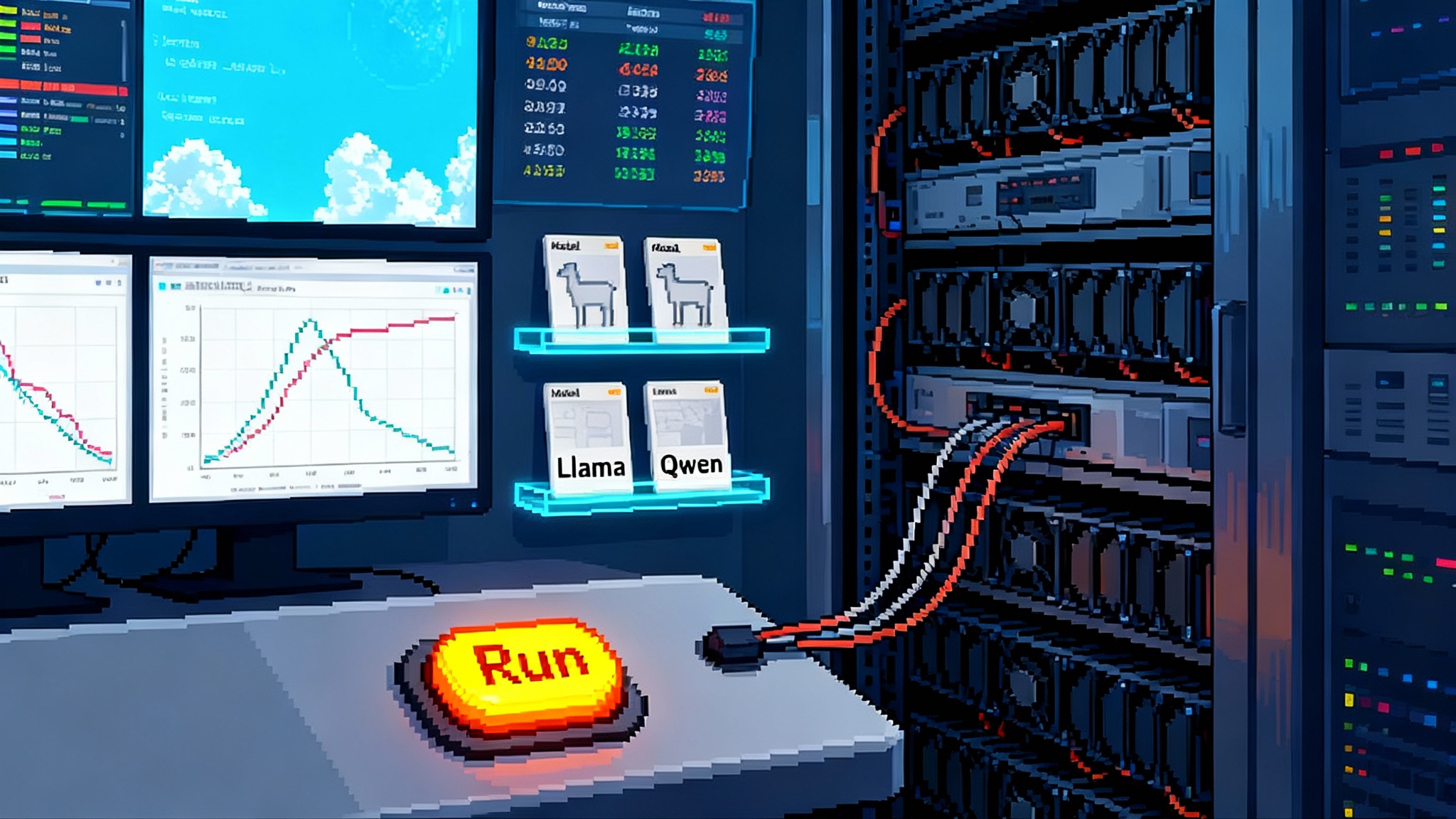

In late September, that idea moved from concept to practice on China-native hardware. Model repos and inference engines shipped first-class support for domestic backends. You could point the same checkpoint at Ascend with CANN, at Cambricon with its MLU stack, or at Hygon with its DCU toolkits, and get usable throughput with modest code changes. If you build on vLLM’s new hardware plugin architecture, swapping backends starts to resemble swapping a database driver. The CUDA moat is not gone, but it is cracked where it matters to builders: in the last mile of running models to serve users.

Why compute-agnostic matters for reasoning models

Reasoning models are not just bigger language models. They are different in how they spend time and memory. They plan, tool-call, and verify, which means they pause and resume, they fetch external data, and they generate long chains of intermediate thoughts. That changes the economics:

- Long context means attention dominates memory traffic, so optimized attention kernels and paging strategies are worth more than raw teraFLOPS.

- Tool use and verification favor backends with fast context switches and efficient host-device synchronization.

- Sparse and mixture-of-experts topologies move the bottleneck to routing and communication, so interconnect and kernel launch overheads matter. See how Qwen3 Next flips inference economics for a concrete example of sparsity shifting costs.
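
The first point above can be made concrete with back-of-envelope arithmetic. The sketch below estimates key-value cache size for one sequence in a transformer with standard per-token KV caching. The layer count, KV head count, head dimension, and cache precision are illustrative assumptions, not figures for any model named in this article.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes of key-value cache held for one sequence.

    The leading factor of 2 covers keys and values, which are stored for
    every layer, every KV head, and every token in the context.
    """
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


# Hypothetical model shape (an assumption for illustration only):
# 32 layers, 8 KV heads, head dimension 128, 16-bit cache values.
per_token = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=1)
full_context = kv_cache_bytes(layers=32, kv_heads=8, head_dim=128, seq_len=131_072)

print(per_token // 1024, "KiB per token")                # 128 KiB per token
print(full_context // 1024**3, "GiB at 131,072 tokens")  # 16 GiB at 131,072 tokens
```

Each decoded token has to read that cache again, so at long context the cost is set by memory bandwidth and paging rather than by peak arithmetic throughput.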

Compute-agnostic support, when it is real, lets you place each workload where the backend’s tradeoffs match the model’s quirks. You might keep a large mixture-of-experts model on Nvidia for multi-node all-to-all, while placing long-context retrieval runs on Ascend boxes that shine at memory bandwidth for attention. The point is not ideology. It is fit for purpose.

The CUDA moat shows cracks, not collapse

For years, CUDA was more than a driver. It was the center of gravity for kernels, graph execution, profiling, and the entire tooling ecosystem. The new picture looks different for three reasons.

- Pluggable inference engines. Modern servers such as vLLM expose a clean interface for backends. That turns hardware choice into a runtime option rather than a rebuild. It also forces competing backends to implement the same scheduler and attention primitives, which is a quiet but powerful form of standardization.

- Kernel portability. Compiler projects and kernel languages have matured to the point where the same high-level description can target multiple backends with minimal edits. Triton-like programming patterns, tile-based kernels, and template libraries let vendors compete on implementation while keeping model code stable.

- Vendor incentives. China-native vendors are now shipping complete stacks, from driver to model zoo, because domestic demand requires sovereignty. The more complete the stack, the less you depend on CUDA. The more models run well, the more developers stick around, and the better the tools get.

CUDA remains very strong in areas that reward decade-long polish, such as multi-node collectives, low-variance latency under heavy load, and mature profilers. What changed is that you can now choose an alternative without rewriting your application, and for reasoning workloads those alternatives can win on price per useful token.

Standards scrutiny arrives right on time

On September 30, 2025, the National Institute of Standards and Technology’s Center for AI Standards and Innovation published its first comparative evaluation that squarely targeted DeepSeek models. The report’s language was clinical, but the message was plain. It found performance and security gaps versus leading U.S. models and highlighted elevated risk of hijacking and jailbreaks for agentic use cases. Read the summary in the official CAISI evaluation of DeepSeek. Whatever your posture on geopolitics, two practical takeaways follow for engineering teams:

- If you are U.S.-based or serve regulated customers, assume increased scrutiny on where models are trained and where inference runs. Keep an inventory of which endpoints and vendors are in your path.

- If you deploy Chinese-origin models or run on Chinese hardware outside the U.S., invest in adversarial and hijack testing that goes beyond generic jailbreak prompts. Treat agent hijacking as a first-class reliability metric.

The standards lens and the backend split are converging. You will not be asked whether you support a single blessed provider. You will be asked to demonstrate security outcomes and operational controls across a mix of providers. Expect more focus on both as the moat shifts to identity and policy compliance becomes a feature.

Inference economics just changed shape

When a model runs well on more than one backend, you can arbitrage four variables instead of two.

- Price. Per-hour or per-month cost varies widely by vendor and region. Domestic accelerators often trade headline FLOPS for better availability and lower contract pricing.

- Throughput. Reasoning workloads have a substantial prefill phase, then a long decode stream. Schedulers that separate prefill from decode and exploit speculative decoding can change the game. Backends that implement these well can close the gap with stronger hardware that lacks modern scheduling.

- Memory footprint. Sparsity and experts help, but attention is still memory heavy. If a backend offers larger on-device memory or more efficient paging, total cost of serving drops.

- Stability. For agent workloads, variance matters as much as mean latency. If a backend delivers smoother tails under mixed loads, you can provision less headroom.

The economic optimization is no longer a single curve. It is a frontier. Each workload lands on a different point. That is good news if you have the routing and monitoring to place it there.
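
One way to read "frontier" here is as a Pareto frontier: the set of backends that no other backend beats on every axis at once. The sketch below uses two of the four variables, cost and tail latency, and the backend names and numbers are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class BackendPoint:
    name: str
    usd_per_million_tokens: float  # lower is better
    p99_latency_ms: float          # lower is better


def dominates(a: BackendPoint, b: BackendPoint) -> bool:
    """a dominates b if it is no worse on both axes and strictly better on one."""
    no_worse = (a.usd_per_million_tokens <= b.usd_per_million_tokens
                and a.p99_latency_ms <= b.p99_latency_ms)
    strictly_better = (a.usd_per_million_tokens < b.usd_per_million_tokens
                       or a.p99_latency_ms < b.p99_latency_ms)
    return no_worse and strictly_better


def pareto_frontier(points: List[BackendPoint]) -> List[BackendPoint]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical measurements, not benchmark results.
points = [
    BackendPoint("backend-a", 0.90, 800.0),   # fast, pricier
    BackendPoint("backend-b", 0.60, 1400.0),  # cheap, slower
    BackendPoint("backend-c", 1.10, 1500.0),  # worse than both on both axes
]
frontier = pareto_frontier(points)
print([p.name for p in frontier])  # ['backend-a', 'backend-b']
```

A latency-sensitive agent route would pick backend-a and a batch route would pick backend-b. Only backend-c is never the right answer.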

A builder’s playbook for split stacks

The rest of this piece is a checklist you can use to build once and run across Nvidia and domestic NPUs without a forked codebase or a thicket of bespoke scripts.

1) Design for multi-backend from day one

- Choose a pluggable inference server. Adopt an engine that already supports multiple hardware plugins and has an open scheduler. Keep your business logic and routing outside the engine.

- Package models like shipping containers. Freeze weights and tokenizer in a single artifact, and keep backend-specific kernels as separate images. Use consistent tags that encode quantization, rope or position encoding, and context length. Treat it like a versioned API contract.

- Avoid backend-specific Python hooks. If you must write a custom op, wrap it behind a capability flag and provide a slow path. Never let a single op lock you to one vendor.

Deliverable for your team: a minimal repo with two Dockerfiles, one targeting CUDA, one targeting the alternative backend, both running the same engine and model card.
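
The capability-flag advice in the third bullet can be sketched as a small registry that returns an accelerated op only when the backend advertises it, and a portable slow path otherwise. Every name here (`register_fast_path`, `resolve_softmax`, the `fused_softmax` flag) is hypothetical and not part of any real engine’s API.

```python
import math
from typing import Callable, Dict, List, Set

Op = Callable[[List[float]], List[float]]


def softmax_reference(xs: List[float]) -> List[float]:
    """Portable slow path: plain Python, runs on any backend."""
    peak = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical registry: capability flag -> accelerated implementation.
_FAST_PATHS: Dict[str, Op] = {}


def register_fast_path(capability: str, op: Op) -> None:
    _FAST_PATHS[capability] = op


def resolve_softmax(backend_capabilities: Set[str]) -> Op:
    """Return a fast path the backend advertises, else the slow path."""
    for capability, op in _FAST_PATHS.items():
        if capability in backend_capabilities:
            return op
    return softmax_reference


def fused_softmax_stub(xs: List[float]) -> List[float]:
    """Stand-in for a vendor kernel; a real one would call into the backend."""
    return softmax_reference(xs)


register_fast_path("fused_softmax", fused_softmax_stub)

fast = resolve_softmax({"fused_softmax", "paged_attention"})
slow = resolve_softmax({"paged_attention"})
print(fast.__name__, slow.__name__)  # fused_softmax_stub softmax_reference
```

Because the slow path always exists, a backend that lacks the flag still serves correct results, just more slowly.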

2) Hedge cost and performance as policy, not panic

- Build a capacity portfolio. Split your serving into a base layer and a burst layer. Put the base on the cheapest predictable capacity you can lock in for months. Put the burst on whatever the spot or on-demand market gives you across backends.

- Route by workload shape. Identify three traffic classes: long-context retrieval, tool-heavy agents, and short chat. Put each class on the backend that wins its benchmark inside your environment. Publish that routing rule as code so it can be audited.

- Use quantization with discipline. Standardize on two quantization formats only, for example 8-bit weight-only for conservative deployments and 4-bit for aggressive ones. Test accuracy and hijack rates per format and per backend. Keep the matrix small so you can retest after upgrades.

- Prefill once, reuse many times. If your engine supports separate prefill and decode, cache the prefill on the device that will do the decode. If backends differ, cache at the edge of your router and forward the cached state to the chosen backend.

Deliverable: a weekly capacity review that shows tokens per dollar and tokens per watt by backend, with a change log when a routing rule flips.
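
The two review metrics reduce to simple ratios. The sketch below assumes "tokens per watt" means tokens per watt-hour of average draw, which is one reasonable reading rather than a definition from the text. The sample numbers are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WeeklySample:
    backend: str
    tokens: int          # tokens served in the measurement window
    hours: float         # length of the window
    usd_per_hour: float  # blended cost of the capacity
    avg_watts: float     # average power draw over the window


def tokens_per_dollar(s: WeeklySample) -> float:
    return s.tokens / (s.hours * s.usd_per_hour)


def tokens_per_watt_hour(s: WeeklySample) -> float:
    # Assumption: energy efficiency is expressed per watt-hour consumed.
    return s.tokens / (s.avg_watts * s.hours)


# Hypothetical one-hour samples, not measurements.
samples = [
    WeeklySample("backend-a", 3_600_000, 1.0, 2.0, 500.0),
    WeeklySample("backend-b", 2_400_000, 1.0, 1.0, 400.0),
]

for s in samples:
    print(s.backend, tokens_per_dollar(s), tokens_per_watt_hour(s))
# backend-a 1800000.0 7200.0
# backend-b 2400000.0 6000.0

cheapest = max(samples, key=tokens_per_dollar).backend
print(cheapest)  # backend-b
```

In this made-up data the two metrics disagree, which is the case a change log should capture when a routing rule flips.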

3) Revamp evals for agent reliability

Benchmarks that rank static model responses do not catch the failure modes that matter for agents. You need four new tests in continuous integration.

- Hijack rate. Measure how often an agent follows malicious side instructions when juggling tools and memory. Use a fixed harness that injects instructions into web pages, documents, and tool outputs. Track hijack rate by backend and quantization.

- Tool-call correctness. Evaluate whether the agent chooses the right tool and provides the right arguments under time pressure. Replay the same traces across backends. Differences in kernel scheduling and sampling can change tool choice, so this is a real compute-agnostic test.

- Long-horizon stability. Run tasks that require 50 to 200 steps, such as multi-hop research or a code refactor. Record divergence points. If a backend shows more drift, limit it to shorter tasks.

- Shadow alignment checks. Create a red team harness that pairs your model with a control model known to refuse risky actions. When the control refuses but your agent proceeds, log it as a policy gap.

Treat these like unit tests. If a backend upgrade increases hijack rate even as it improves speed, roll it back or confine it to low risk routes. The CAISI focus on agent security is a preview of what large customers will ask you to demonstrate, not an abstract warning.
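
Treating hijack rate like a unit test might look like the gate below. It is a minimal sketch: the trial records would come from your own injection harness, and the tuple layout, function names, and tolerance parameter are all assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str]            # (backend, quantization)
Trial = Tuple[str, str, bool]    # (backend, quantization, was_hijacked)


def hijack_rates(trials: Iterable[Trial]) -> Dict[Key, float]:
    """Fraction of trials in which the agent followed an injected instruction."""
    counts = defaultdict(lambda: [0, 0])  # key -> [hijacked, total]
    for backend, quant, hijacked in trials:
        cell = counts[(backend, quant)]
        cell[0] += int(hijacked)
        cell[1] += 1
    return {key: hijacked / total for key, (hijacked, total) in counts.items()}


def upgrade_allowed(baseline: Dict[Key, float], candidate: Dict[Key, float],
                    tolerance: float = 0.0) -> bool:
    """Reject the upgrade if any baseline cell got worse or went untested."""
    # A cell missing from the candidate run counts as a 100 percent hijack rate.
    return all(candidate.get(key, 1.0) <= rate + tolerance
               for key, rate in baseline.items())


# Hypothetical runs of 20 trials each on one backend and format.
before = hijack_rates([("backend-a", "w8", False)] * 19 + [("backend-a", "w8", True)])
after = hijack_rates([("backend-a", "w8", False)] * 17 + [("backend-a", "w8", True)] * 3)

print(before[("backend-a", "w8")], after[("backend-a", "w8")])  # 0.05 0.15
print(upgrade_allowed(before, after))  # False
```

Counting an untested cell as a failure keeps a faster build from passing just because part of the matrix was skipped.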

4) Build a router that does not care who made the chip

- Describe routes by capability, not brand. Instead of hardcoding vendor names, define capabilities such as max context, estimated throughput, expected tail latency, supported quantizations, and available tools. Match workloads to capabilities.

- Keep observability consistent. Expose a common telemetry schema across backends: per request latency, tokens per second split into prefill and decode, sampling parameters, hijack detector outcomes, and tool-call success.

- Bake in graceful degradation. If the preferred backend for a route is unavailable, fail over to a slower but safe alternative and announce the downgrade to the calling service. Avoid hard failures that bubble up to the user.

Deliverable: a simple rule engine with a readable configuration file and a dashboard that shows which routes map to which backends in real time.
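
A capability-based rule engine can start very small. In this sketch, routes state hard requirements (context length, quantization) and a soft latency target, and the router reports when it had to degrade. The field names, pool names, and numbers are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


@dataclass(frozen=True)
class Backend:
    name: str
    max_context: int
    p99_latency_ms: float
    quantizations: FrozenSet[str]
    healthy: bool = True


@dataclass(frozen=True)
class Route:
    min_context: int
    max_p99_latency_ms: float
    quantization: str


def pick_backend(route: Route, backends: List[Backend]) -> Tuple[Backend, bool]:
    """Return (backend, degraded); degraded=True means the latency target was missed."""
    usable = [
        b for b in backends
        if b.healthy
        and b.max_context >= route.min_context
        and route.quantization in b.quantizations
    ]
    if not usable:
        raise LookupError("no healthy backend satisfies the hard requirements")
    on_target = [b for b in usable if b.p99_latency_ms <= route.max_p99_latency_ms]
    pool = on_target or usable  # fall back to slower but valid backends
    return min(pool, key=lambda b: b.p99_latency_ms), not on_target


fleet = [
    Backend("pool-1", 131_072, 900.0, frozenset({"w8", "w4"}), healthy=False),
    Backend("pool-2", 131_072, 1600.0, frozenset({"w8"})),
    Backend("pool-3", 32_768, 700.0, frozenset({"w8", "w4"})),
]
route = Route(min_context=65_536, max_p99_latency_ms=1000.0, quantization="w8")

backend, degraded = pick_backend(route, fleet)
print(backend.name, degraded)  # pool-2 True
```

The route never names a vendor. With the preferred pool unhealthy, the request lands on a slower pool and the `degraded` flag lets the caller announce the downgrade.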

5) Operate under divergent rules without getting stuck

- Keep a vendor and jurisdiction register. Track where your endpoints run, who operates them, and which laws apply. If you are U.S.-based, assume that models and hardware from certain jurisdictions will face scrutiny for government and critical infrastructure customers.

- Separate data planes. If you must run in multiple jurisdictions, keep user data residency rules in code, not policy docs. Encrypt, tokenize, or stub tool outputs that cross borders.

- Have a swap plan. If an export control change or procurement rule blocks a backend, you should be able to move 80 percent of your traffic to another within 72 hours. That means image parity, pre-warmed autoscaling groups, and tested data migration scripts.

Deliverable: a quarterly failover drill that moves a real production route to a secondary backend and back, with metrics and a postmortem.
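
Keeping residency rules "in code, not policy docs" can be as plain as a register plus a deny-by-default lookup. The endpoint names and the policy table below are placeholders to show the shape, not a statement of what any law requires.

```python
from typing import Dict, FrozenSet, List

# Hypothetical register: endpoint name -> jurisdiction where it runs.
ENDPOINT_JURISDICTION: Dict[str, str] = {
    "pool-us-1": "US",
    "pool-eu-1": "EU",
    "pool-cn-1": "CN",
}

# Hypothetical policy: user region -> jurisdictions allowed to process its data.
RESIDENCY_POLICY: Dict[str, FrozenSet[str]] = {
    "US": frozenset({"US"}),
    "EU": frozenset({"EU"}),
    "CN": frozenset({"CN"}),
}


def allowed_endpoints(user_region: str) -> List[str]:
    """Endpoints that may serve this user; unknown regions get none."""
    allowed = RESIDENCY_POLICY.get(user_region, frozenset())
    return sorted(
        name for name, jurisdiction in ENDPOINT_JURISDICTION.items()
        if jurisdiction in allowed
    )


print(allowed_endpoints("EU"))  # ['pool-eu-1']
print(allowed_endpoints("BR"))  # []
```

Because the router consults this function before picking a backend, a policy change becomes a reviewed commit instead of an edit to a document nobody executes.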

What not to do

- Do not tie your roadmap to a single kernel trick. If your performance depends on one vendor’s proprietary attention kernel, you will pay for it later when you need to move.

- Do not collect fifteen quantization formats. Every format you add multiplies your testing load and your incident surface.

- Do not optimize only the average. Reasoning agents suffer when tail latency flares. Demand p95 and p99 targets from every backend and enforce them in your router.

- Do not assume policy is someone else’s problem. If your sales team lands a regulated customer, your architecture must already answer data flow and model origin questions.
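
The tail-latency point in the list above is easy to check mechanically. This sketch uses the nearest-rank percentile definition, which is one of several in common use, and invented latency samples.

```python
from typing import Sequence


def percentile(samples: Sequence[float], p: int) -> float:
    """Nearest-rank percentile for an integer p between 1 and 100."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 1 <= p <= 100:
        raise ValueError("p must be between 1 and 100")
    ordered = sorted(samples)
    rank = (p * len(ordered) + 99) // 100  # ceil(p * n / 100) in integer math
    return ordered[rank - 1]


def meets_tail_targets(samples: Sequence[float],
                       p95_ms: float, p99_ms: float) -> bool:
    return percentile(samples, 95) <= p95_ms and percentile(samples, 99) <= p99_ms


# Hypothetical window: 97 fast requests and 3 slow ones.
latencies_ms = [100.0] * 97 + [4000.0] * 3
mean_ms = sum(latencies_ms) / len(latencies_ms)

print(mean_ms)                                      # 217.0
print(percentile(latencies_ms, 95))                 # 100.0
print(percentile(latencies_ms, 99))                 # 4000.0
print(meets_tail_targets(latencies_ms, 500, 1000))  # False
```

The mean and even the p95 look healthy here, yet three requests in a hundred take four seconds, which is enough to stall a multi-step agent.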

A concrete reference architecture

Here is a simple starting stack you can build in a week with a small team.

- Inference engine. One engine with a hardware plugin system. Enable CUDA on Nvidia nodes and the corresponding plugin on domestic NPU nodes.

- Router. A lightweight service that reads route definitions from a versioned repository. Each route declares model, context, quantization, and capability targets, not vendors. For enterprise rollouts, watch how agent control planes go mainstream.

- Model packaging. A single model registry that stores safetensors weights and tokenizer files. Every release comes with a manifest that lists supported backends and known caveats.

- Observability. A shared telemetry pipeline that exports per request metrics and agent reliability scores to the same dashboard, regardless of backend.

- Security. A sidecar that inspects tool outputs and user prompts for hijack patterns before they reach the model, and that logs all tool invocations with hashes for audit.

- Tests. A nightly run of the four reliability evals plus throughput and tail latency, on every backend you support, with alerts when a route needs to flip.

Where this goes next

As more reasoning models use sparsity, mixture-of-experts, and longer contexts, the useful unit of comparison will shift from model size to tokens per dollar at a fixed reliability target. Backends will compete on scheduler quality and memory behavior as much as on raw speed. Standards work will force everyone to publish more about hijack resilience and agent containment. The two external factors you cannot control, geopolitics and supply chains, will keep shifting beneath your feet, which is exactly why compute-agnostic design is the rational default.

The CUDA era taught a generation how to deploy at scale. The split-stack era will teach a generation how to deploy across scales and across vendors without losing velocity or trust. Treat hardware as a capability set, treat models as containers, treat reliability as a product feature, and your stack will ride the split instead of being torn by it.

The close

This week’s backends and last week’s standards note describe the same future from both ends. The hardware side says you can run the best ideas on more chips. The policy side says you must prove you are doing it safely. Builders who respond with a clean router, a small quantization matrix, and agent-centric evaluations will not have to pick a side to win. They will pick a stack that adapts.