Copilot Now Generally Available: IDEs Become Agent Control Rooms

GitHub Copilot’s coding agent is now generally available. It drafts pull requests, runs in secure ephemeral workspaces on Actions, and brings enterprise guardrails across Visual Studio Code and JetBrains. Here is how that reshapes the software development life cycle (SDLC) and what to do next.

Breaking: Copilot’s coding agent is now open to the world

GitHub has flipped a big switch. The Copilot coding agent is no longer a future promise or a gated preview. As of September 25, 2025, the agent is generally available for all paid Copilot subscribers, with administrators able to turn it on for organizations. The agent can open a draft pull request, work in the background in its own development environment, and request your review when it is finished. GitHub sums it up in one line: give the agent a task and it ships a draft for you to edit. That is not autocomplete. That is a teammate. For the plain-language version, see GitHub’s announcement, “Copilot coding agent is now generally available.”

If the last decade turned code editors into smart word processors, general availability turns the integrated development environment into a control room. You set the objective. The agent spins up a secure workspace, explores the repository, writes code, runs checks, and reports back with a draft pull request. You stay in the loop, and you stay in control. This mirrors a broader market shift as agent control planes go mainstream.

From autocomplete to autonomous teammate

The shift is not subtle. Autocomplete is helpful when your hands are on the keyboard. An autonomous teammate is useful even when you are in a meeting. The Copilot coding agent is asynchronous. That means you can delegate a task, close your laptop, and come back to a draft pull request with commits, tests, and a summary of what changed. It works inside GitHub’s control layer rather than only inside the editor, so it sees the issues, pull request discussions, and repository conventions that govern how your team ships software.

The right mental model here is not a predictive text box. It is a junior developer who reads an issue, follows the style guide, and asks for review when done. That junior developer is faster than a person at rote work, tireless at test scaffolding, and able to re-run checks without consuming human attention. This evolution tracks with how the standard runtime patterns in LangChain and LangGraph 1.0 have normalized agent loops.

How it actually works under the hood

When you assign a task, the agent starts a secure, fully customizable development environment that runs on GitHub Actions. Think of it as an ephemeral virtual machine that only exists to do the work you just asked for. It clones the repository, gathers context, and begins to implement changes. You can watch it push commits to a draft pull request and read the session log as a running narrative of decisions. When it finishes, it tags you for review.

This approach brings the workflows developers already trust into the agent’s loop. Actions provide the compute. Branch protections and required reviews keep human approval at the center. Internet access is controlled, so the agent cannot wander beyond the policies your security team has set. The pull request is the gate, not a backdoor. GitHub’s Build 2025 press materials are explicit about this design: it is an enterprise-ready agent built around GitHub’s control layer, powered by Actions, with human approval before any continuous integration or deployment runs. For the bigger picture across editors and the roadmap, see “GitHub introduces an enterprise-ready coding agent.”

What changes for the software development life cycle

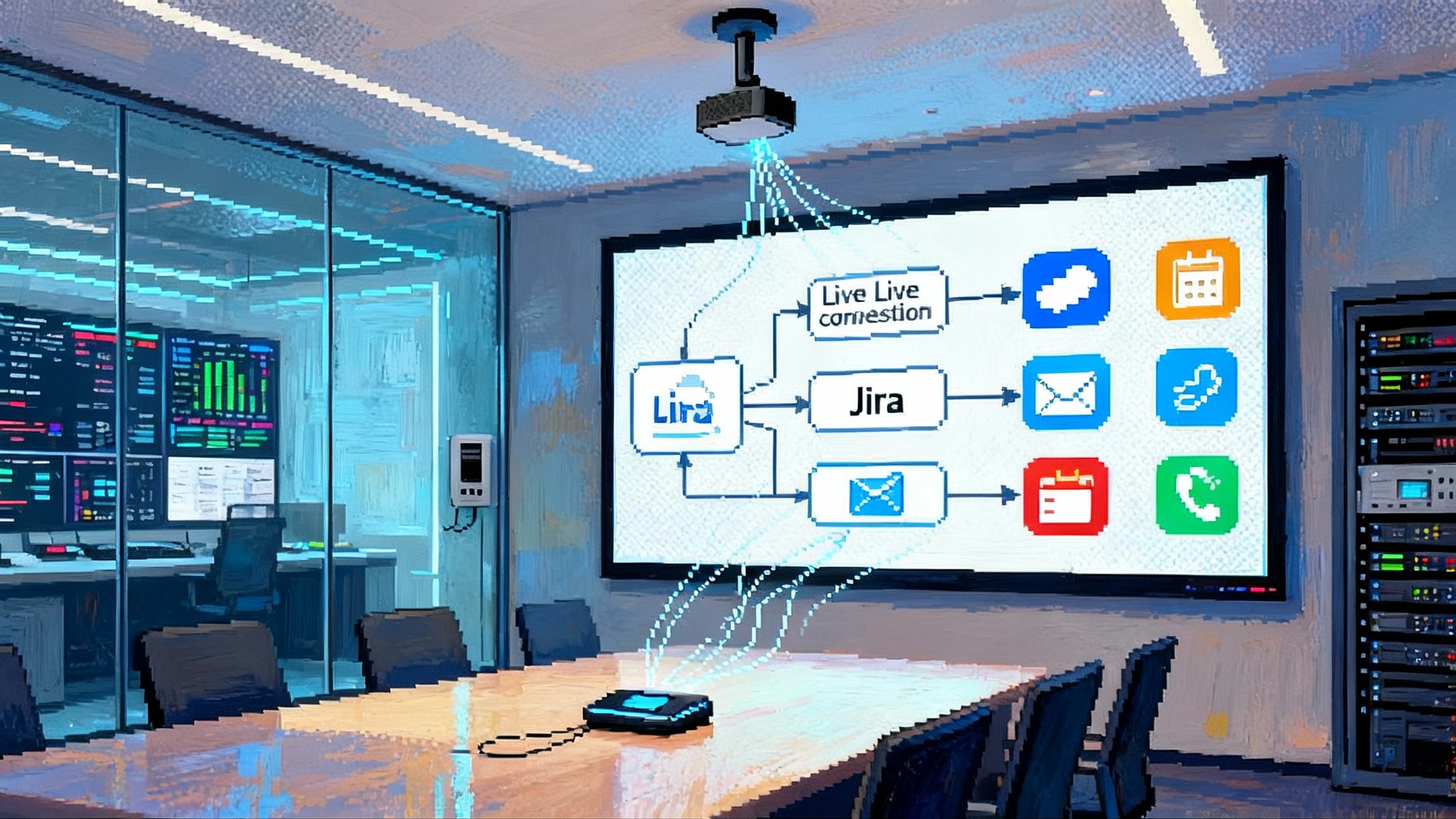

Software development life cycle is a mouthful, so teams shorten it to SDLC. It is the chain from plan to production. The agent slots into several links of that chain.

- Plan: You can assign an issue to the agent with a clear acceptance criterion. The agent reads related issues and past pull requests to pick up intent and house rules.

- Code: It edits multiple files, wires imports, and updates configuration. The agent is designed for low-to-medium complexity tasks in well-tested codebases. It is not a magician. It is good at well-bounded work.

- Test: It adds or extends tests and runs the suite as part of its loop. Failing tests keep the draft pull request blocked, just as they would for a human developer.

- Review: The agent opens a draft pull request and summarizes what changed. You review diffs, leave comments, or ask the agent to make adjustments.

- Release: Branch protections and required approvals still apply. Your deployment pipeline remains the gate. The difference is that the pipeline gets a steady supply of ready-to-review changes.

The biggest impact is not any single step. It is the reduction of idle time between steps. Delegation removes context switching. When a human returns to the task, the draft is already waiting.

PR drafting as the new heartbeat

Pull requests are the heartbeat of modern teams. The agent leans into that. Draft pull requests become the default coordination object for human-plus-agent work.

- You can ask the agent to implement a feature flag and wire it to a configuration file. It will push a draft pull request that adds the flag, updates the docs, and introduces tests that prove the flag toggles behavior.

- You can hand it a bug report with a failing test case. It will reproduce the failure, propose a fix, and leave breadcrumbs in the session log about why it chose one approach over another.

- During review, you can request changes with a normal comment. The agent uses the comment as a to-do list, updates the code, and pushes another commit. There is no special syntax to learn.

When this works well, code review becomes a conversation between a human and a responsible automation that can iterate quickly inside clean boundaries.

Secure virtual machine workspaces as default hygiene

For years the security team’s dream has been simple. Perform untrusted work in a locked room, keep the logs, and keep the keys. The agent does exactly that by default. Each session runs inside a controlled, ephemeral environment powered by Actions. The repository gets cloned into that workspace, secrets are managed by your existing policies, and outbound network access is controlled. When the session ends, the environment goes away.

This is not a magic security shield. It is good hygiene that reduces blast radius and makes compliance easier to demonstrate. It also means you do not have to wire a new compute provider into your stack to try agents. You are using the platform you already use for builds and tests.

Enterprise guardrails that matter in practice

Guardrails can be vague. Here they are concrete.

- Admin controls: For Copilot Business and Copilot Enterprise, administrators explicitly enable the coding agent in organizational policies. No silent rollouts.

- Change control: All changes flow through draft pull requests into your normal review process. Required reviewers and status checks still apply. No bypasses.

- Least privilege: Controlled internet access and repository-scoped permissions keep the agent inside the fence you set. If your policy blocks external package downloads during agent sessions, the agent is blocked too.

- Audit trail: The pull request history and session logs show what the agent did, when, and why. That is the difference between a toy and something you can take to a change advisory board.

Combined, these guardrails let security teams say yes without writing a custom framework. They also highlight an important point. The best way to control agent behavior is to improve the repository. Better tests, clear contributing guidelines, and strict branch protections define a smaller, safer playground for any automation.
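The change-control rule above can be reasoned about as a simple gate. What follows is a minimal sketch of that reasoning, not GitHub’s implementation: the `PullRequest` class, the `merge_blockers` function, and the check name `tests` are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Simplified, hypothetical view of a pull request for gate checks."""
    is_draft: bool
    human_approvals: int
    # Check name -> latest conclusion, e.g. "success", "failure", "pending".
    status_checks: dict = field(default_factory=dict)


def merge_blockers(pr, required_approvals=1, required_checks=("tests",)):
    """Return the reasons a pull request cannot merge; an empty list means it can."""
    blockers = []
    if pr.is_draft:
        blockers.append("still a draft")
    if pr.human_approvals < required_approvals:
        missing = required_approvals - pr.human_approvals
        blockers.append(f"needs {missing} more human approval(s)")
    for check in required_checks:
        # A required check that never reported counts as "missing", not as a pass.
        conclusion = pr.status_checks.get(check, "missing")
        if conclusion != "success":
            blockers.append(f"required check '{check}' is {conclusion}")
    return blockers
```

The point of the model is that an agent-authored draft with green tests still returns two blockers (draft state and missing approval), which is the same answer a human-authored draft would get.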

What it means for Visual Studio Code and JetBrains ecosystems

In Visual Studio Code, there is now a Delegate to coding agent button and agent controls inside Copilot Chat. That turns the editor into a mission panel. You can send tasks to the agent, track progress, and pull down the draft branch to continue locally. That workflow removes the friction between chat, code, and pull request.

In the JetBrains world, agent mode is rolling out to IntelliJ IDEA, PyCharm, and other editors in the family. The significance is not just feature parity. It is the ecosystem shift. Plugin authors who once targeted completion and refactoring now have an incentive to expose agent-friendly actions. A test generator that used to write a single file can become an agent-aware assistant that opens a draft pull request with tests and fixtures wired across modules. This aligns with how Microsoft Agent Framework unifies enterprise agent surfaces.

The result for teams is choice. If your developers split across Visual Studio Code and JetBrains, you do not have to standardize on one editor to benefit from agentic workflows. The center of gravity is the repository and the pull request, not the editor brand.

Why every repository gets an intern in the next 12 months

The phrase is catchy for a reason. The economics make sense. The agent consumes Actions minutes and Copilot requests you are likely already buying. The work it does is the exact work teams often avoid on busy days: writing tests, fixing small bugs, clarifying error messages, and updating docs.

Over the next year, the baseline expectation will be that every repository is ready for an intern like this. Ready means: tests run green on a clean checkout, contributors know how to run the project locally, and the style guide is machine-readable in editor configuration files. If your repository is not ready, the intern is less useful. If it is ready, you get a steady stream of small, compounding improvements.
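Part of that readiness definition can be checked mechanically. The sketch below looks only for files that commonly signal readiness; the paths in `READINESS_CHECKS` are widespread conventions assumed here for illustration, not requirements published by GitHub, and the script does not verify that tests actually pass on a clean checkout.

```python
from pathlib import Path

# Hypothetical checklist: each item passes if any one of its candidate paths exists.
READINESS_CHECKS = {
    "contributing guide": (
        "CONTRIBUTING.md",
        "docs/CONTRIBUTING.md",
        ".github/CONTRIBUTING.md",
    ),
    "editor configuration": (".editorconfig",),
    "CI workflows": (".github/workflows",),
}


def check_readiness(repo_root):
    """Map each checklist item to True if a matching file or directory exists."""
    root = Path(repo_root)
    return {
        item: any((root / candidate).exists() for candidate in candidates)
        for item, candidates in READINESS_CHECKS.items()
    }


def missing_items(repo_root):
    """List the checklist items that are absent, in checklist order."""
    return [item for item, present in check_readiness(repo_root).items() if not present]
```

Calling `missing_items(".")` from a repository root returns the items still to add; an empty list means the file-level signals are in place, and the remaining question is whether the suite is green.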

There is another reason this becomes table stakes. Platforms compete to keep developer attention. Repositories that reliably respond to small chores with agent-driven pull requests will feel alive. That feeling is sticky. Contributors will return to codebases where the smallest change gets shepherded forward without a human having to babysit.

A playbook you can start this week

You do not need a task force. Start with one repository and one well-defined goal. Then scale.

1) Prepare the repo

- Make tests fast. Aim for a suite that runs in under 10 minutes. If it takes too long, the feedback loop becomes sludge.

- Write a CONTRIBUTING.md with acceptance criteria examples. Agents read these. Humans appreciate them too.

- Turn on branch protections and required status checks. You want gates before production.

2) Define good work for the agent

- Backlog grooming: File issues for tasks a junior developer could finish in a day. Link to related code and past pull requests.

- Coverage uplift: Ask the agent to bring a low-coverage module up to a target percentage. Provide a seed test to anchor the approach.

- Docs drift: Assign it the chore of updating code snippets in README files after interfaces change.
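Before assigning an issue, a quick lint can catch the gaps that make for weak agent work. This is a rough sketch under stated assumptions: `lint_issue` is a hypothetical helper, and its two heuristics (the phrase “acceptance criteria” and at least one `#123`-style reference or URL) are illustrative rules rather than anything the agent itself requires.

```python
import re


def lint_issue(body):
    """Return a list of problems that make an issue a poor fit for delegation."""
    problems = []
    # Heuristic 1: the issue should state acceptance criteria somewhere.
    if not re.search(r"acceptance criteria", body, re.IGNORECASE):
        problems.append("no acceptance criteria stated")
    # Heuristic 2: the issue should point at related work via "#123" or a URL.
    if not re.search(r"#\d+|https?://\S+", body):
        problems.append("no link to related code, issues, or pull requests")
    return problems
```

For example, `lint_issue("Fix the login bug")` reports both problems, while an issue body with an “Acceptance criteria” section and a reference such as `#42` returns an empty list.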

3) Measure results

- Cycle time: Track time from issue to merged pull request for agent-assigned work versus human-only work.

- Review depth: Count how often reviewers request changes on agent pull requests and why. Look for recurring gaps.

- Escapes: Monitor defects that escape to production after agent contributions. Your goal is zero, and your tolerance should be very low.
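The first two metrics above are straightforward to compute once you export pull request records. The sketch below assumes a hypothetical record shape (`author_kind`, `issue_opened`, `pr_merged`, `changes_requested`) that you would fill from your own tracker; the `SAMPLE` rows are made up for illustration and are not measurements.

```python
from datetime import datetime
from statistics import median


def cycle_time_hours(issue_opened, pr_merged):
    """Hours between two ISO 8601 timestamps (issue opened to PR merged)."""
    delta = datetime.fromisoformat(pr_merged) - datetime.fromisoformat(issue_opened)
    return delta.total_seconds() / 3600


def summarize(records, author_kind):
    """Median cycle time and change-request rate for one kind of author."""
    rows = [r for r in records if r["author_kind"] == author_kind]
    if not rows:
        return None
    hours = [cycle_time_hours(r["issue_opened"], r["pr_merged"]) for r in rows]
    changes = sum(1 for r in rows if r["changes_requested"])
    return {
        "merged_prs": len(rows),
        "median_cycle_hours": median(hours),
        "change_request_rate": changes / len(rows),
    }


# Made-up sample rows, for illustration only.
SAMPLE = [
    {"author_kind": "agent", "issue_opened": "2025-10-01T09:00:00",
     "pr_merged": "2025-10-01T15:00:00", "changes_requested": True},
    {"author_kind": "agent", "issue_opened": "2025-10-02T09:00:00",
     "pr_merged": "2025-10-02T19:00:00", "changes_requested": False},
    {"author_kind": "human", "issue_opened": "2025-10-01T09:00:00",
     "pr_merged": "2025-10-02T15:00:00", "changes_requested": False},
]
```

Comparing `summarize(SAMPLE, "agent")` with `summarize(SAMPLE, "human")` gives the side-by-side view. The median is used rather than the mean so that one stalled pull request does not dominate the comparison.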

4) Tighten guardrails

- Limit internet access where possible and prefer vendored dependencies for agent sessions.

- Keep secrets out of repositories and keep token scopes minimal.

- Require human approval before any continuous integration or continuous deployment job involving agent changes.
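When you draft an outbound allowlist for agent sessions, it helps to be precise about what “allowed” means. The sketch below shows one way to reason about host matching; it is not how GitHub enforces network policy, and `ALLOWED_HOSTS` and `is_allowed` are hypothetical names with example hosts chosen only for illustration.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist; replace with the hosts your policy actually permits.
ALLOWED_HOSTS = {"github.com", "pypi.org"}


def is_allowed(url, allowed=ALLOWED_HOSTS):
    """True if the URL's host is an allowed host or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    # Match on whole labels so "pypi.org.evil.example" does not pass as "pypi.org".
    return any(host == entry or host.endswith("." + entry) for entry in allowed)
```

Matching on the parsed hostname rather than on a substring of the URL is the design choice that matters here: a substring check would accept any URL that merely contains an allowed name.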

5) Share the pattern

- Write an internal guide with examples of good prompts and good issues.

- Encourage pairing sessions where a senior developer and the agent work on the same pull request. This helps calibrate trust boundaries.

How it reshapes team roles

Leads gain more leverage by moving from doer to router. They identify work that pays back with low risk. Individual contributors who have been too valuable to spare for tests can focus on architecture and cross-cutting concerns. Senior engineers should not fear replacement. They should fear stagnation. The teams that win will be the ones that can specify problems clearly and review with speed and rigor.

Product managers benefit as well. The backlog becomes more fluid because small items can be moved forward without negotiating for scarce developer hours. That said, the product role becomes more accountable for writing crisp acceptance criteria. Vague tickets make bad agent work. Specific tickets make great agent work.

Failure modes and how to avoid them

- Shallow fixes that mask deeper issues: Keep an eye on repeated edits around the same module. If the agent keeps touching the same area, schedule a refactor carried out by a human.

- Blind trust in green builds: Do not confuse a passing build with a good change. Read the diffs. Teach reviewers to look for incomplete error handling and brittle test assertions.

- Quiet policy creep: Document your agent policies. Changes to internet access or runner environments should go through the same review as any security-sensitive change.

- Actions budget overruns: Treat agent sessions as a cost line item. Start with a per-repository budget. If the agent is doing work not worth the minutes, adjust your issue triage.
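Two of these failure modes, repeated edits to the same module and budget overruns, can be watched with very little code. The sketch below is one way to do it under assumptions of our own: `hotspots` treats the top-level directory of each changed path as the module, and `over_budget` takes per-repository minute totals that you would export yourself. Both function names and the thresholds are hypothetical.

```python
from collections import Counter, defaultdict


def hotspots(agent_prs, threshold=3):
    """Top-level paths touched by at least `threshold` agent pull requests.

    `agent_prs` is a list of pull requests, each given as a list of changed file paths.
    """
    touches = Counter()
    for changed_files in agent_prs:
        # Count each module once per pull request, however many files changed in it.
        modules = {path.split("/", 1)[0] for path in changed_files}
        touches.update(modules)
    return sorted(module for module, count in touches.items() if count >= threshold)


def over_budget(sessions, budgets):
    """Minutes over budget per repository, for repositories that exceeded it.

    `sessions` is a list of (repository, minutes) pairs; `budgets` maps
    repository -> allowed minutes for the same period.
    """
    used = defaultdict(float)
    for repo, minutes in sessions:
        used[repo] += minutes
    return {
        repo: used[repo] - budgets[repo]
        for repo in sorted(used)
        if repo in budgets and used[repo] > budgets[repo]
    }
```

A module that shows up in `hotspots` is a candidate for the human-led refactor described above, and a repository that shows up in `over_budget` is a prompt to revisit which issues get delegated.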

What about other tools

Agents are not new. Cursor and Windsurf showed what it looks like when you push autonomy closer to the editor. GitHub’s approach is different because the control surface is the repository itself. That means the same rules that keep your organization compliant can keep the agent on a short leash. The posture here is pragmatic. Use the agent where it makes sense, within the rules you already enforce. If another tool does a specific job better in your stack, use it there. The point is not a brand. The point is the workflow.

The shape of the next year

Expect three waves.

- Wave one: Every team with Copilot turns on the agent for a subset of repositories and uses it for tests, small bug fixes, and documentation. Leaders publish internal case studies that measure cycle time and quality.

- Wave two: Editors evolve. Visual Studio Code and JetBrains plugins expose richer agent controls. Teams wire custom repository instructions and integrate external tools through standard interfaces so the agent can perform more complex work.

- Wave three: Governance matures. Security teams become comfortable with agent sessions because the evidence and approval flows are first class. Procurement gets a stable picture of Actions usage. The agent becomes table stakes.

If you are reading this, you already know how the story ends. Repositories that are easy to build, test, and review will bend this capability to their benefit. Repositories that are not will be forced to improve or watch competitors ship faster.

The conclusion: Ship the drafts, keep the judgment

General availability is not hype. It is a contract. GitHub is saying the agent is ready to live inside your production workflow with controls that match how you already ship software. The new habit is simple. Delegate, review, and iterate. Or in the phrase you will hear repeated in standups all year: every repo gets an intern. The teams that take it seriously will use the intern well. The teams that ignore it will still feel its presence when their neighbors start merging drafts while they write the first line of code.