Gemini 2.5 Browser Agents Break the API Bottleneck

Google’s Gemini 2.5 Computer Use preview turns agents into first‑class web users. With visual reasoning and 13 native browser actions, software can now navigate, type, click, and complete tasks across sites without brittle plugins or custom APIs.

The breakthrough: browser‑native agents are out of the lab

On October 7, 2025, Google previewed Gemini 2.5 Computer Use, a specialized model that lets agents operate a real browser the way people do. Instead of waiting for someone to build a custom integration or an application programming interface, the model reads the screen, reasons about what it sees, and takes actions step by step until the task is done. According to Google’s announcement, developers can try the preview through Google AI Studio and Vertex AI and watch demos of the agent navigating sites in real time. The simple headline hides a major shift: the web itself becomes the integration surface. See Google’s Gemini 2.5 Computer Use preview.

If you have spent years cobbling together plugins, connectors, and brittle test scripts, this is a clear turn in the road. The model’s visual understanding means it can perceive what a human sees: fields, buttons, menus, scroll regions, and dialogs. Its action primitives give it hands and feet in the page. Together they allow an agent to carry out multi‑step work on almost any website.

Why 13 actions matter

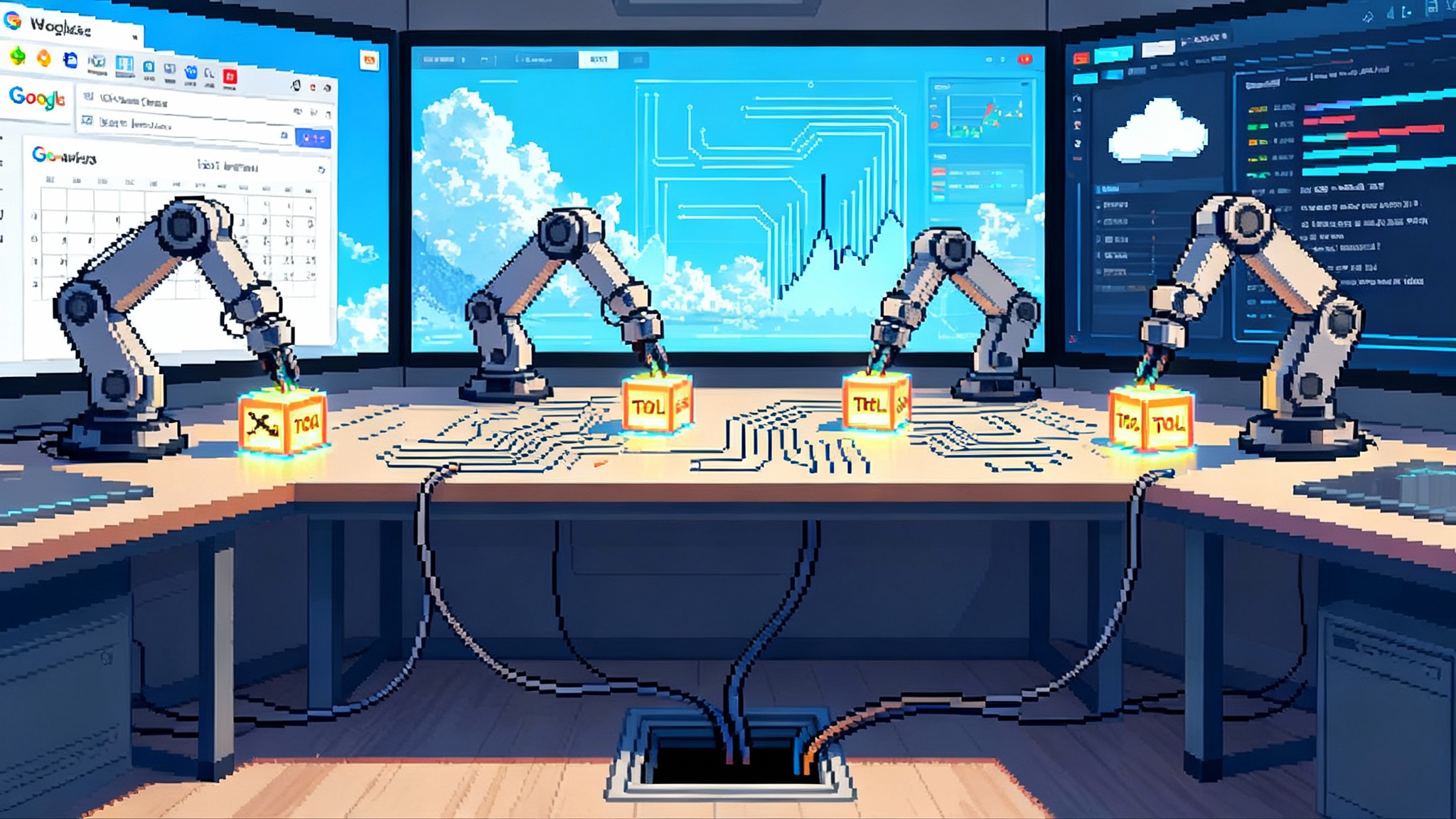

Think of the browser as a city. You can ride the subway, walk, take a bus, use a bike, or hail a car. Each choice gets you from one place to another, and you can mix them to complete a trip. Gemini 2.5 Computer Use provides a small set of travel modes for the web that combine into nearly any user journey. These are the action primitives the model can call, the equivalent of assembly instructions for browsing:

- open_web_browser

- wait_5_seconds

- go_back

- go_forward

- search

- navigate

- click_at

- hover_at

- type_text_at

- key_combination

- scroll_document

- scroll_at

- drag_and_drop

You do not need a special plugin to click a button or a bespoke connector to type into a field. With these thirteen, plus visual reasoning, the agent can search, log in, filter, add to cart, paginate, and submit. That is why this release feels different from yet another tool wrapper. It is a minimal alphabet that can spell almost any interaction. See the Computer Use actions and agent loop documentation.
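Because the vocabulary is closed, a proposed or scripted sequence of calls can be checked mechanically before anything runs. The sketch below encodes the thirteen names as a schema and validates a short search journey. The action names come from the list above; the argument names (`url`, `x`, `y`, `text`, `keys`, `direction`, `destination_x`, `destination_y`), the placeholder URL, and the coordinates are assumptions for illustration, so check them against the official function declarations.

```python
from dataclasses import dataclass, field
from typing import Any

# The 13 action names come from the article; the argument names are
# assumptions for illustration and should be checked against the
# function declarations in the official Computer Use documentation.
ACTION_ARGS: dict[str, frozenset[str]] = {
    "open_web_browser": frozenset(),
    "wait_5_seconds": frozenset(),
    "go_back": frozenset(),
    "go_forward": frozenset(),
    "search": frozenset(),
    "navigate": frozenset({"url"}),
    "click_at": frozenset({"x", "y"}),
    "hover_at": frozenset({"x", "y"}),
    "type_text_at": frozenset({"x", "y", "text"}),
    "key_combination": frozenset({"keys"}),
    "scroll_document": frozenset({"direction"}),
    "scroll_at": frozenset({"x", "y", "direction"}),
    "drag_and_drop": frozenset({"x", "y", "destination_x", "destination_y"}),
}


@dataclass
class ActionCall:
    name: str
    args: dict[str, Any] = field(default_factory=dict)


def validate(call: ActionCall) -> list[str]:
    """Return the problems with one call; an empty list means it is well formed."""
    if call.name not in ACTION_ARGS:
        return [f"unknown action: {call.name}"]
    missing = ACTION_ARGS[call.name] - set(call.args)
    return [f"{call.name}: missing argument {arg}" for arg in sorted(missing)]


# "Search the catalog and open a result", spelled with four primitives.
# The URL and coordinates are placeholders.
journey = [
    ActionCall("open_web_browser"),
    ActionCall("navigate", {"url": "https://shop.example.com"}),
    ActionCall("type_text_at", {"x": 500, "y": 80, "text": "running shoes"}),
    ActionCall("click_at", {"x": 312, "y": 440}),
]
problems = [p for call in journey for p in validate(call)]
print(problems or "journey is well formed")
```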

What changed under the hood

Underneath, the agent runs in a loop. It receives a screenshot and recent action history, decides on the next function call, and returns that proposed action to your execution environment. Your code performs the action, captures a fresh screenshot, and sends it back. The loop repeats until the task is finished or a safety rule intervenes. Two details matter for production:

- The model is optimized for browsers first. Mobile control shows promise, while desktop operating system control is explicitly not the focus of this preview.

- High‑risk steps can be gated with confirmation. The model can flag actions that require a human “continue” before execution, and developers can add custom policies in system instructions.

These mechanics make the system predictable to operate. The model proposes; your runtime decides. That clear contract is what allows builders to add monitoring, tests, and guardrails without fighting the model’s internals.
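The propose, decide, execute, observe contract fits in a few lines without any SDK. In this minimal sketch, `propose`, `execute`, and `confirm` are hypothetical callables standing in for the model call, your browser executor, and a human approval step, and the `Proposal` shape (including its `needs_confirmation` flag) is an assumption rather than the Gemini API’s response format. The step cap plays the role of a per‑job budget.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Proposal:
    """One step proposed by the model (hypothetical shape, not the API's)."""
    action: Optional[str]              # None: the model reports the task is done
    args: dict = field(default_factory=dict)
    needs_confirmation: bool = False   # stand-in for the per-step safety signal


@dataclass
class Observation:
    screenshot: bytes                  # fresh capture after the last action
    url: str


def run_agent(
    propose: Callable[[str, Observation, list], Proposal],
    execute: Callable[[str, dict], Observation],
    confirm: Callable[[Proposal], bool],
    goal: str,
    first: Observation,
    max_steps: int = 20,
) -> tuple[str, list]:
    """Drive the propose -> decide -> execute -> observe loop.

    Returns a status ("done", "denied", or "budget_exhausted") and the
    list of (action, args) pairs that were actually executed.
    """
    history: list[tuple[str, dict]] = []
    obs = first
    for _ in range(max_steps):
        step = propose(goal, obs, history)       # the model proposes...
        if step.action is None:
            return "done", history
        if step.needs_confirmation and not confirm(step):
            return "denied", history             # ...the runtime decides
        obs = execute(step.action, step.args)    # act, then capture new state
        history.append((step.action, step.args))
    return "budget_exhausted", history


# Usage with a scripted stand-in for the model:
script = iter([
    Proposal("navigate", {"url": "https://example.com"}),
    Proposal("click_at", {"x": 120, "y": 340}),
    Proposal(None),
])
status, steps = run_agent(
    propose=lambda goal, obs, hist: next(script),
    execute=lambda name, args: Observation(b"", "https://example.com"),
    confirm=lambda proposal: False,
    goal="open example.com and follow the first link",
    first=Observation(b"", "about:blank"),
)
print(status, [name for name, _ in steps])  # done ['navigate', 'click_at']
```

Keeping the model behind a plain `propose` function is the design point: tests can swap in a scripted model, as above, and production can wrap the real one with logging and validation without touching the loop.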

Beyond Robotic Process Automation and the plugin era

Robotic Process Automation, known as RPA, proved that repeating what users do with a mouse and keyboard has real business value. Yet traditional RPA relies on brittle selectors, coordinates, and application‑specific adapters. Small changes to the Document Object Model or a new cookie banner can break a flow. Teams spend as much time maintaining scripts as they do shipping them.

Plugin ecosystems solved a different problem. They replaced user interface steps with tool calls. If a partner built a plugin, the model could invoke structured functions. This is great when the tools you need exist and their schemas express everything you must do. In practice, teams hit coverage gaps. Many sites never build plugins. The flows you actually need often cross interfaces that no single developer has wired up.

Gemini 2.5 Computer Use shifts the burden. Rather than asking every website to publish a perfect application programming interface or a custom plugin, it lets the agent read the page and act on what it sees. The web becomes the operating system. Companies like UiPath and Automation Anywhere can still matter here, not as brittle recorders but as orchestration layers that schedule jobs, isolate sessions, distribute credentials, and collect logs. The job changes from writing one‑off connectors to supervising a capable browser pilot. For deeper context on trust boundaries, see our internal guide to the credential broker layer for browser agents.

What you can ship this quarter

Here are workflows you can start building with the preview today, without waiting for partner integrations.

Quality assurance and user interface testing

- What to do: Point the agent at your staging site with a suite of journeys: account creation, password reset, search and filter, add to cart, checkout, subscription change, and profile update. Seed test data ahead of time.

- Why it works now: The action set is expressive enough for modern sites, and the model sees the same bugs your users do, including layout shifts, off‑screen elements, and disabled buttons. You can require confirmation only before executing destructive steps such as canceling an order.

- How to build: Use Playwright as the execution layer. Implement the 13 predefined actions. Add a thin translator that converts the model’s normalized coordinates into pixels for your viewport. Capture a screenshot and URL after each action and feed it back. Persist run artifacts: screenshots, action logs, error messages, and timing. Gate the final submit or destructive actions with human sign‑off.

- What to measure: Success rate per flow, median actions per step, and flakiness over time by browser release. Track why failures happen: model perception, layout changes, or network errors. Use these fields to trigger a re‑run or open a regression ticket with a screenshot attached.

Small and medium‑sized business back‑office operations

- What to do: Automate the entry of web leads into your customer relationship management (CRM) system, invoice creation in accounting software, and reconciliation across bank feeds. Build flows that copy shipping updates from carrier portals into your helpdesk, or that log product listings across marketplaces.

- Why it works now: Many of these properties lack reliable application programming interfaces, or they lock key features behind higher‑tier plans. A browser agent can log in, navigate to the right page, and complete the form with the same controls a staff member would use.

- How to build: Create a catalog of tasks as prompts with structured inputs, for example customer name, email, invoice amount, order identifier. Store customer‑specific site locations in a configuration file. Use a scheduler to run agents in isolated browser profiles. Provide a supervisor view for approvals on any payment or policy‑sensitive step.

- What to measure: Hours saved compared to manual entry, first‑attempt success rate, and review queue size. For finance actions, record confirmation timestamps and reviewer identity.

Growth experiments and checkout flows

- What to do: Use agents to replicate a prospective customer browsing your site from organic search through checkout. Vary the entry query, category page, and device viewport. Capture where the agent hesitates, backtracks, or misclicks.

- Why it works now: The actions cover search, click, scroll, and type across everything from a marketing site to a payment screen. You can model drop‑off the same way you model traffic funnels, then fix what caused the agent to get stuck.

- How to build: For each experiment, define the goal and constraints, for example complete checkout in under forty seconds without using a coupon. Give the agent a fixed budget of steps. Compare the agent’s path and success against real customer telemetry. Auto‑file issues when the agent hits an error state or exceeds the budget.

- What to measure: Time to complete, steps taken, and conversion by variant. Pair this with heatmaps from the agent’s clicks and scrolls to surface surprising blockers. For more on this approach, see how the browser as an agent runtime changes experimentation velocity.
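The “What to measure” items above reduce to a few aggregates over stored run records. A minimal sketch, assuming a hypothetical `RunRecord` that your harness persists after each run; the failure labels mirror the categories named above (perception, layout, network) but are otherwise illustrative.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class RunRecord:
    """One persisted agent run (hypothetical fields written by your harness)."""
    flow: str                             # e.g. "checkout", "password_reset"
    succeeded: bool
    actions: int                          # executed actions in the run
    seconds: float                        # wall-clock time to finish or fail
    failure_reason: Optional[str] = None  # e.g. "perception", "layout", "network"


def summarize(runs: list[RunRecord]) -> dict[str, dict]:
    """Per-flow success rate, medians, and a tally of failure causes."""
    by_flow: dict[str, list[RunRecord]] = defaultdict(list)
    for run in runs:
        by_flow[run.flow].append(run)
    report = {}
    for flow, rs in by_flow.items():
        report[flow] = {
            "runs": len(rs),
            "success_rate": sum(r.succeeded for r in rs) / len(rs),
            "median_actions": median([r.actions for r in rs]),
            "median_seconds": median([r.seconds for r in rs]),
            "failures": Counter(r.failure_reason for r in rs if not r.succeeded),
        }
    return report


runs = [
    RunRecord("checkout", True, 12, 31.0),
    RunRecord("checkout", False, 20, 40.5, "layout"),
    RunRecord("checkout", True, 14, 28.0),
    RunRecord("password_reset", True, 7, 12.5),
]
report = summarize(runs)
print(report["checkout"]["median_actions"])  # 14
```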

The production controls to put in place next quarter

The preview is powerful, and it belongs in a secure envelope before you scale. Four controls will keep you moving fast while staying within guardrails.

Session isolation

- Why: You never want one customer’s cookies, tokens, or autofill data to leak into another’s session. Isolation also reduces the blast radius of prompt injection.

- How: Run each agent in a sandboxed environment, for example a clean container with a fresh browser profile. Disable extensions, set a fixed viewport, and block third‑party storage by default. Tag every artifact with a session identifier.

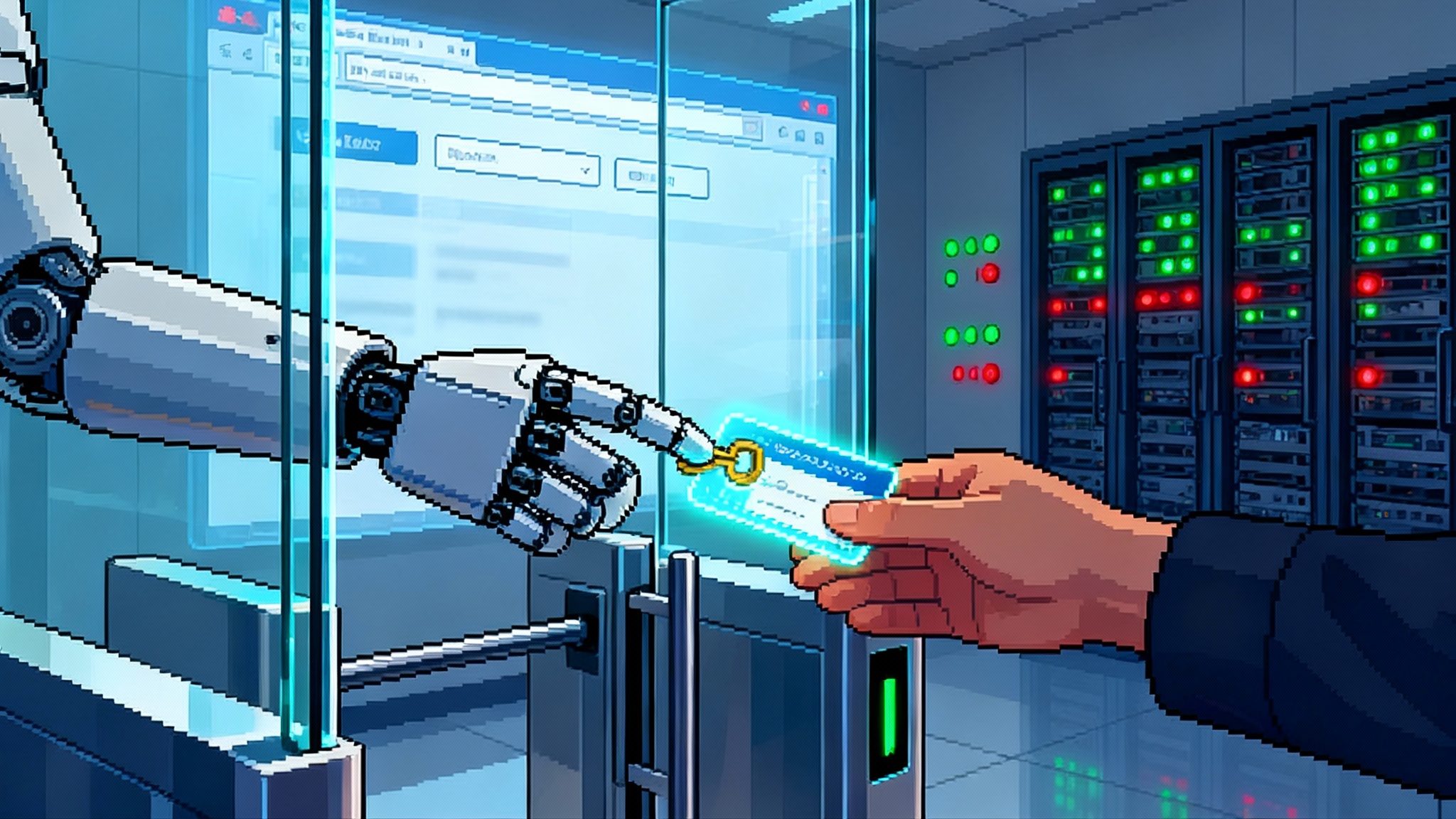

Credential vaulting

- Why: Agents will log in to real services. Secrets in code, screenshots, or logs are an incident waiting to happen.

- How: Store credentials in a managed vault. Pass short‑lived tokens to the agent process at runtime. Use role accounts instead of personal accounts. Scope permissions to the minimum needed for the specific task. Rotate secrets on a calendar and after any suspected leak.

Audit trails and reproducibility

- Why: You must be able to explain what happened, especially for money‑moving or customer‑visible actions.

- How: Log every model response and every executed action, including arguments, timings, pixel coordinates, and page titles. Save screenshots before and after each action. Keep the environment details, such as browser version and viewport. Build a replay tool that steps through the run so an engineer or analyst can watch what the agent did.

Policy guardrails

- Why: Real sites contain traps, scams, and legal commitments. You need rules the agent cannot silently bypass.

- How: Use the model’s safety signal to require confirmation on consequential steps, and add your own system instruction with a clear allowlist and denylist. For example, allow adding items to a cart, typing into search bars, and opening menus. Require confirmation for purchases, email sends, or data exports. Deny accepting terms of service or solving a human verification challenge. If the agent flags a high‑risk action, force a pause and prompt an approver in your supervisor dashboard. Document every policy decision in your audit log.

The official docs and blog outline built‑in safety training and a per‑step safety service that classifies actions requiring confirmation. In production, treat that signal like a circuit breaker. If the model suggests a risky step, the breaker flips and your code must ask a person to proceed. The benefit is not theoretical; it is a clear seam where you can inject governance without throttling the agent’s overall speed.
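The allow, confirm, and deny lists from the Policy guardrails control, combined with the model’s safety signal, fit in one small decision function. This sketch assumes you have already parsed the model’s per‑step safety decision into a boolean (`model_requires_confirmation`) and mapped each proposed action to an intent label; both the labels and the parsing are hypothetical here, so follow the official docs for the actual response fields.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    CONFIRM = "confirm"
    DENY = "deny"


# Hypothetical intent labels taken from the allowlist/denylist examples above.
# Mapping a proposed click or keystroke to an intent is your own logic.
POLICY: dict[str, Verdict] = {
    "add_to_cart": Verdict.ALLOW,
    "type_in_search": Verdict.ALLOW,
    "open_menu": Verdict.ALLOW,
    "purchase": Verdict.CONFIRM,
    "send_email": Verdict.CONFIRM,
    "export_data": Verdict.CONFIRM,
    "accept_terms_of_service": Verdict.DENY,
    "solve_human_verification": Verdict.DENY,
}


def decide(intent: str, model_requires_confirmation: bool) -> Verdict:
    """Combine the local policy with the model's per-step safety signal.

    The model's flag acts like a circuit breaker: it can escalate ALLOW to
    CONFIRM, but it never relaxes CONFIRM or DENY. Unknown intents fail
    closed to CONFIRM so a person sees anything the policy did not anticipate.
    """
    verdict = POLICY.get(intent, Verdict.CONFIRM)
    if verdict is Verdict.ALLOW and model_requires_confirmation:
        return Verdict.CONFIRM
    return verdict


print(decide("add_to_cart", model_requires_confirmation=True).value)  # confirm
```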

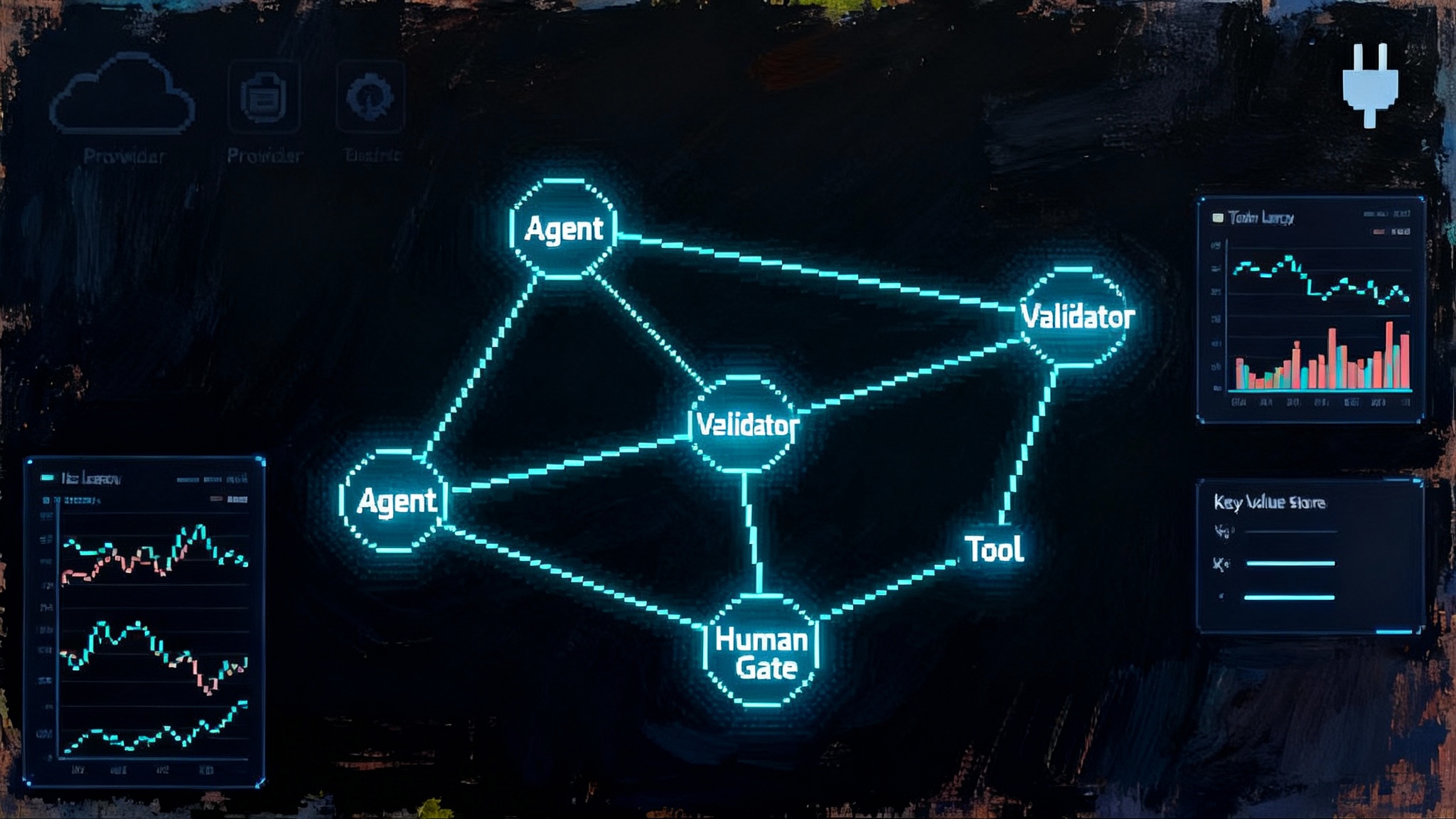

Architecture blueprint

Here is a pragmatic architecture that teams are already implementing:

- Control plane: A service that manages jobs, assigns them to isolated worker pods, enforces per‑job budgets, and writes action logs to a tamper‑evident store.

- Worker pod: A container image with a hardened browser, a Playwright runtime, and your action executor. It receives a prompt, a single set of credentials pulled at start, and a budget of steps.

- Model gateway: A thin client that calls the Gemini 2.5 Computer Use model and validates that the only functions returned are from your allowed set. It also interprets the safety decision field and asks for human confirmation when needed.

- Supervisor interface: A real‑time view of the current screen, pending confirmation requests, and the action log. Approvers can allow, deny, or terminate a run.

- Evidence store: Screenshots, video capture if needed, action logs, and configuration. Make it searchable by order identifier, session identifier, and time range.
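“Tamper‑evident” can be as simple as a hash chain: each record stores the hash of the one before it, so editing, reordering, or deleting an earlier entry breaks verification. A minimal standard‑library sketch with illustrative field names; it cannot detect truncation of the newest records on its own, so a real store would also copy the latest hash somewhere the workers cannot write (an assumption about your deployment, not a feature of a specific product).

```python
import hashlib
import json
import time

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, entry: dict) -> str:
    # Canonical JSON so the same entry always hashes the same way.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


class ActionLog:
    """Append-only action log in which each record commits to its predecessor."""

    FIELDS = ("session_id", "action", "args", "ts")

    def __init__(self) -> None:
        self.records: list[dict] = []

    def append(self, session_id: str, action: str, args: dict) -> dict:
        prev = self.records[-1]["hash"] if self.records else GENESIS
        entry = {
            "session_id": session_id,
            "action": action,
            "args": json.loads(json.dumps(args)),  # deep copy; must be JSON-safe
            "ts": time.time(),
        }
        record = {**entry, "prev": prev, "hash": _digest(prev, entry)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute the chain; edits, reordering, or gaps fail verification."""
        prev = GENESIS
        for rec in self.records:
            entry = {k: rec[k] for k in self.FIELDS}
            if rec["prev"] != prev or rec["hash"] != _digest(prev, entry):
                return False
            prev = rec["hash"]
        return True


log = ActionLog()
log.append("s-42", "navigate", {"url": "https://example.com"})
log.append("s-42", "click_at", {"x": 120, "y": 340})
print(log.verify())  # True
log.records[0]["args"]["url"] = "https://attacker.example"
print(log.verify())  # False
```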

Vendors are already circling this stack. Browserbase offers hosted execution environments. Autotab and other agent companies are layering custom flows and tooling on top. Internally at Google, related models have powered Project Mariner, the Firebase Testing Agent, and agentic features in AI Mode in Search, which hints at how this technique behaves at scale. For a landscape view, read our Gemini Enterprise vs AgentKit comparison.

How this changes the calculus for builders

- Integration cost moves from building to operating: You will spend less time waiting for partners and more time tuning prompts, policies, and budgets.

- Product managers can test flows before engineering commits: With a small library of tasks, teams can simulate end‑to‑end journeys and measure success before pushing code.

- Security teams get a defined control layer: By centralizing session isolation, credential handling, and confirmation policies, you reduce the chance of one‑off agents going rogue.

- Tool vendors that embrace browser‑native agents will win: The value shifts to observability, safety, and performance at the edge of the browser, not the number of plugins you publish.

A 90‑day plan to get value fast

Week 1–2

- Pick two high‑value flows that are painful to maintain with test scripts or do not have stable application programming interfaces. Draft the success criteria and what must be confirmed by a human.

- Create a minimal executor with the 13 actions. Hardcode an allowlist and enforce confirmation for anything financial, communicative, or legally binding.
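A minimal executor, sketched under assumptions: the model’s `x`/`y` values are taken to be on a 0 to 999 grid that must be scaled to the viewport (check the official docs for the exact convention), `search` is assumed to open a search engine home page, scroll distance is a fixed pixel amount, and argument names such as `press_enter` and `destination_x` are illustrative. The dispatch table doubles as the hardcoded allowlist: any name outside it raises instead of running. `page` is anything exposing the subset of Playwright’s sync `Page` API used here (`goto`, `go_back`, `go_forward`, `wait_for_timeout`, `evaluate`, `mouse`, `keyboard`).

```python
from typing import Any, Callable

GRID = 1000       # assumption: model coordinates arrive on a 0-999 grid
SCROLL_PX = 600   # assumption: fixed scroll distance in pixels
SEARCH_HOME = "https://www.google.com"  # assumption: where `search` starts


def px(value: float, extent: int) -> int:
    """Scale one normalized coordinate to a pixel offset in the viewport."""
    return int(value / GRID * extent)


def make_executor(page: Any, width: int, height: int) -> Callable[[str, dict], None]:
    """Build a dispatcher that replays model actions on a Playwright-style page."""

    def xy(a: dict) -> tuple[int, int]:
        return px(a["x"], width), px(a["y"], height)

    def delta(direction: str) -> tuple[int, int]:
        return {"up": (0, -SCROLL_PX), "down": (0, SCROLL_PX),
                "left": (-SCROLL_PX, 0), "right": (SCROLL_PX, 0)}[direction]

    def type_text_at(a: dict) -> None:
        page.mouse.click(*xy(a))          # focus the field first
        page.keyboard.type(a["text"])
        if a.get("press_enter"):
            page.keyboard.press("Enter")

    def scroll_at(a: dict) -> None:
        page.mouse.move(*xy(a))           # wheel events target the element under the cursor
        page.mouse.wheel(*delta(a["direction"]))

    def drag_and_drop(a: dict) -> None:
        page.mouse.move(*xy(a))
        page.mouse.down()
        page.mouse.move(px(a["destination_x"], width), px(a["destination_y"], height))
        page.mouse.up()

    # The dispatch table is also the allowlist: nothing outside it can run.
    handlers: dict[str, Callable[[dict], Any]] = {
        "open_web_browser": lambda a: None,   # the harness already opened one
        "wait_5_seconds": lambda a: page.wait_for_timeout(5000),
        "go_back": lambda a: page.go_back(),
        "go_forward": lambda a: page.go_forward(),
        "search": lambda a: page.goto(SEARCH_HOME),
        "navigate": lambda a: page.goto(a["url"]),
        "click_at": lambda a: page.mouse.click(*xy(a)),
        "hover_at": lambda a: page.mouse.move(*xy(a)),
        "type_text_at": type_text_at,
        # Key names may need normalizing to Playwright's form, e.g. "Control+A".
        "key_combination": lambda a: page.keyboard.press(a["keys"]),
        "scroll_document": lambda a: page.evaluate(
            "([dx, dy]) => window.scrollBy(dx, dy)", list(delta(a["direction"]))),
        "scroll_at": scroll_at,
        "drag_and_drop": drag_and_drop,
    }

    def execute(name: str, args: dict) -> None:
        if name not in handlers:
            raise PermissionError(f"action not in allowlist: {name}")
        handlers[name](args)

    return execute
```

With Playwright itself, `page` would come from something like `browser.new_page(viewport={"width": 1440, "height": 900})`, with the same width and height passed to `make_executor` so that scaled coordinates land where the model intended. Confirmation for financial, communicative, or legally binding steps belongs in the loop that calls the executor, not in the executor itself.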

Week 3–4

- Run the flows on staging and record every action and screenshot. Identify where the agent gets stuck or requests confirmation too often. Tighten prompts and add micro‑instructions, for example “scroll the results pane before concluding there are no more results.”

- Wire the supervisor interface and add single sign‑on for approvers.

Week 5–8

- Expand to three production flows with clear rollback plans. For each, set a time budget and a maximum number of retries.

- Put credential vaulting, session isolation, and audit logging through a tabletop exercise. Rotate credentials and ensure the system continues to operate.
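The time budget and retry cap can be enforced outside the agent loop, one attempt per full run of the flow. A minimal sketch, assuming `attempt` is a hypothetical callable that runs the flow once with the remaining seconds and reports success; the injectable `clock` exists only to make the function testable.

```python
import time
from typing import Callable


def run_with_budget(
    attempt: Callable[[float], bool],
    time_budget_s: float,
    max_retries: int,
    clock: Callable[[], float] = time.monotonic,
) -> tuple[bool, int]:
    """Retry a flow until it succeeds, retries run out, or time is spent.

    `attempt` receives the seconds remaining so it can stop early.
    Total attempts are at most max_retries + 1 (the first try plus retries).
    Returns (succeeded, attempts_made).
    """
    deadline = clock() + time_budget_s
    attempts = 0
    while attempts <= max_retries:
        remaining = deadline - clock()
        if remaining <= 0:
            break                      # time budget exhausted
        attempts += 1
        if attempt(remaining):
            return True, attempts
    return False, attempts


outcomes = iter([False, False, True])
ok, tries = run_with_budget(lambda remaining: next(outcomes), time_budget_s=120, max_retries=3)
print(ok, tries)  # True 3
```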

Week 9–12

- Measure net hours saved and defect catch rate against your baseline. Bring in security to review logs and policies. Present a go‑forward plan with a unit cost per run and a capacity model for the next quarter.

The bottom line

The web was built for people, not for programs. Gemini 2.5 Computer Use flips that constraint into an advantage by letting agents work like people, with eyes and a small set of hands. The 13 browser actions are not a gimmick. They are a compact alphabet that lets you compose real work on the unstructured surface where business actually happens. RPA and plugins will not disappear; they become part of the orchestration. The teams that win will be the ones that stand up safe, observable, browser‑native agents and let them iterate. The bottleneck was waiting for integrations. Now the browser is the integration.