Meta’s Ray-Ban Display turns AI agents into a hands-free OS

Meta’s new Ray-Ban Display glasses and Neural Band turn on‑lens cards and silent EMG gestures into a complete loop for ambient computing. Here’s what shipped, why it matters, and how builders can design for glance-and-gesture first.

The moment the screen moved to your face

For years, smart glasses were microphones with a camera. Useful, but not a platform. That changed at Connect 2025 when Meta put a display in the lens and paired it with a neural-controlled wristband. Together, on-lens responses and subtle finger gestures turn the glasses into something new: an always-available operating system for agents. The headline is not the hardware by itself. It is the new posture of computing. Eyes up, hands free, information when you want it and gone when you do.

If you only remember one thing, remember this: agent-driven computing now works in motion, in public, without a phone in your hand. That unlocks workflows that could never survive the friction of pulling a slab of glass from your pocket.

What Meta actually shipped

Meta announced Ray-Ban Display, its first consumer glasses with a built-in, full-color in-lens screen, bundled with a Meta Neural Band that reads tiny electrical signals in your wrist to recognize finger motions. The system supports glanceable messaging, live captions and translation, a camera viewfinder with zoom, and turn-by-turn pedestrian navigation that appears directly in your field of view. It also exposes music controls and quick access to Meta’s assistant. Pricing starts at $799 in the United States. Meta describes the feature set in its Connect launch post, Meta Ray-Ban Display and Neural Band, which confirms that the Neural Band is included and that navigation launches as a beta in a limited set of cities.

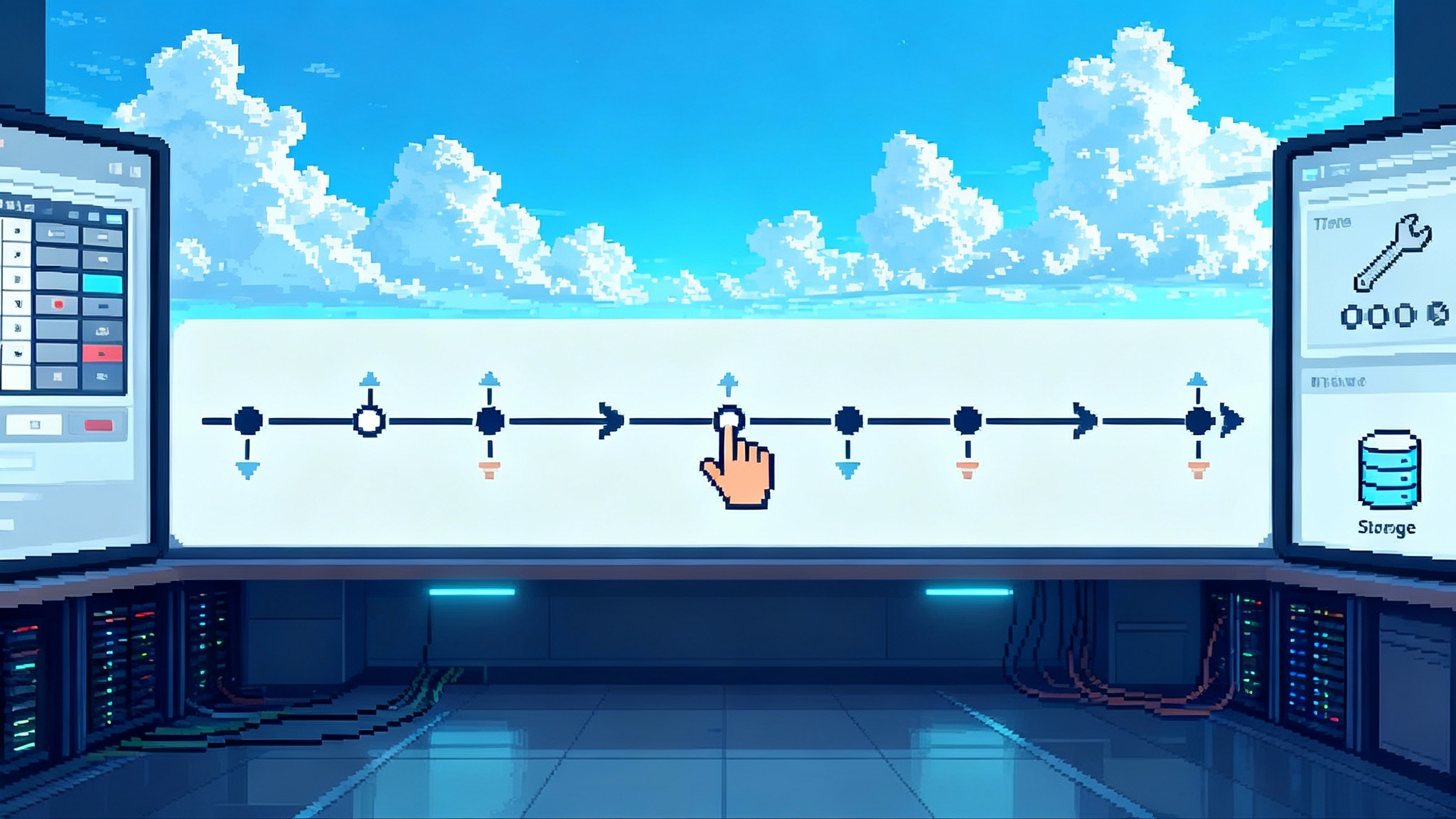

The new baseline interactions are simple but powerful:

- Glance to receive a short response or step-by-step card without blocking your view.

- Pinch, flick, or rotate your fingers to confirm, scroll, or adjust volume, all through the EMG band.

- Speak to the assistant when you want richer output or to set up tasks that will complete while you walk.

None of these are brand new in isolation. Together, they form a complete loop where seeing, deciding, and acting happen in a single flow.

Why on-lens plus EMG matters

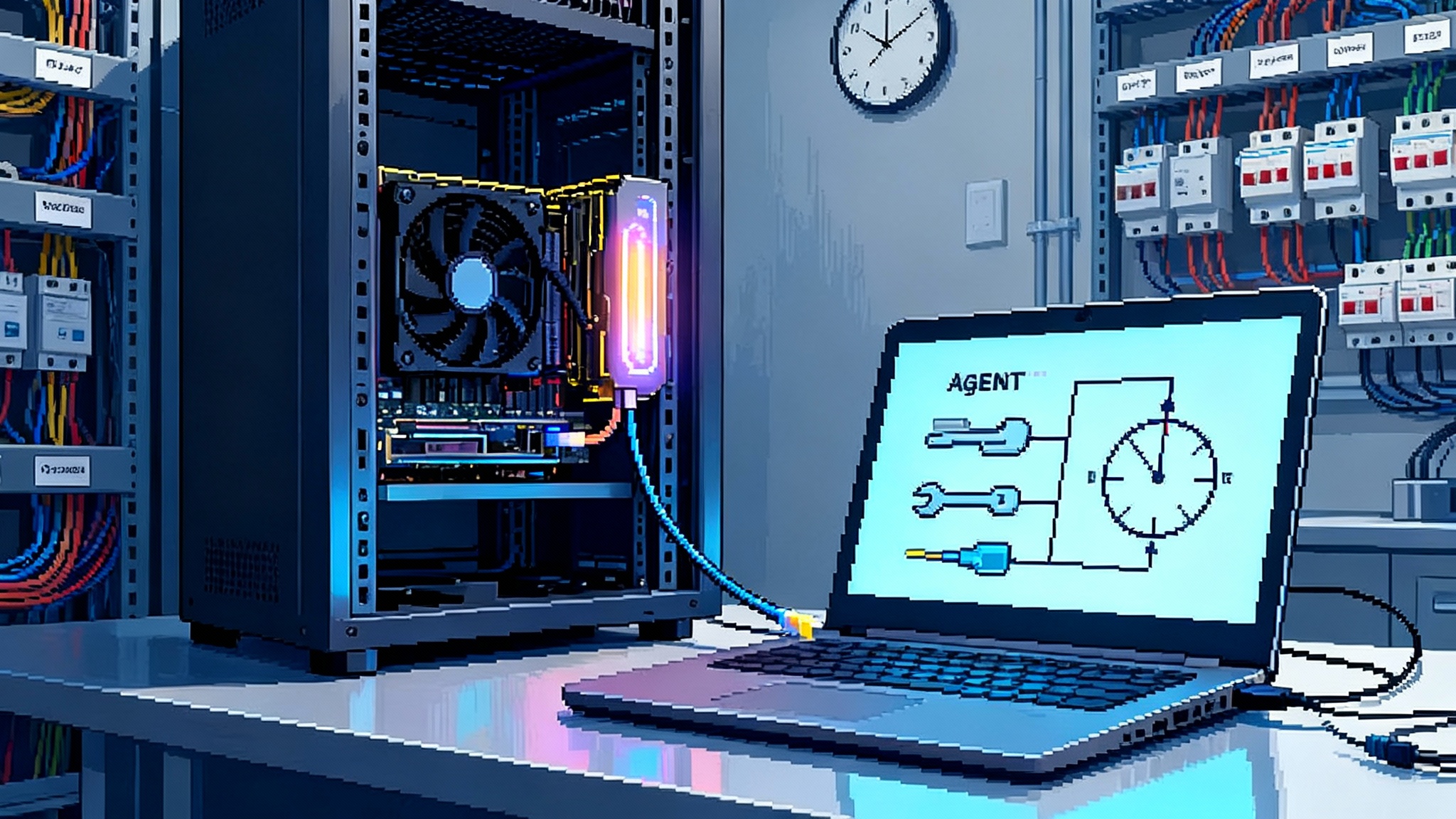

Think of the glasses as a whisper-quiet status bar for the real world. A heads-up card appears when the next turn is approaching, or when someone replies to your message. You acknowledge with a micro-gesture. You keep walking. There is no unlock, no app grid, no shoulder-surfable screen. This is not about replacing your phone; it is about removing the reasons you kept reaching for it.

The EMG band is the other half of the breakthrough. Voice is great until the room is noisy, the topic is private, or speaking aloud is impolite. Touch is great until your hands are full. EMG fills the gap with invisible input. That single capability creates a reliable fallback so you can complete the loop anywhere. The agent can ask a yes or no question on the lens. You pinch for yes. The task continues. This is the difference between a demo and a daily driver.

The first truly ambient agent workflows

Four workflows now feel native:

- Navigation. The lens shows your next step, the wrist confirms or snoozes a detour suggestion, and the assistant quietly re-routes. You never stop to look down, which means you keep social context and situational awareness.

- Translation. Live captions and inline translation appear as you converse. You can raise or lower caption font size with a dial-like wrist twist. The display reduces the cognitive load of holding translations in your head.

- Messaging. A short WhatsApp or Messenger reply appears on-lens. Pinch once to send a templated response, long pinch to dictate one sentence, and carry on. The target is not writing a novel. It is staying present without going dark.

- Assistive features. For hearing support, captions help in loud places. For motor constraints, EMG gestures replace taps. For attention support, the lens can show a single instruction at a time while you complete a multi-step task.

Each of these flows shares a structure: a small visual card when needed, a private micro-gesture to act, and an assistant that handles the rest.
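The shared structure can be sketched in a few lines of Python. This is an illustration only: Meta has not published a gesture or card API, so `Gesture`, `Card`, and `handle_gesture` are hypothetical names, and the gesture set simply mirrors the messaging example above.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Callable, Dict


class Gesture(Enum):
    """Hypothetical gesture names, not Meta's actual event vocabulary."""
    PINCH = auto()
    LONG_PINCH = auto()
    FLICK = auto()


@dataclass(frozen=True)
class Card:
    """A glanceable card: one short line plus what each gesture triggers."""
    text: str
    handlers: Dict[Gesture, Callable[[], str]]


def handle_gesture(card: Card, gesture: Gesture) -> str:
    """Run the handler bound to the gesture; unbound gestures are ignored."""
    handler = card.handlers.get(gesture)
    if handler is None:
        return "ignored"
    return handler()


# Messaging flow: pinch sends a template, long pinch starts dictation.
reply_card = Card(
    text="New message: Running 5 min late",
    handlers={
        Gesture.PINCH: lambda: "sent: No problem, see you soon",
        Gesture.LONG_PINCH: lambda: "dictation started",
    },
)
```

Binding handlers to the card, rather than to a global gesture map, keeps each card self-describing: the same pinch can mean "send" on one card and "confirm detour" on another.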

The emerging developer surface

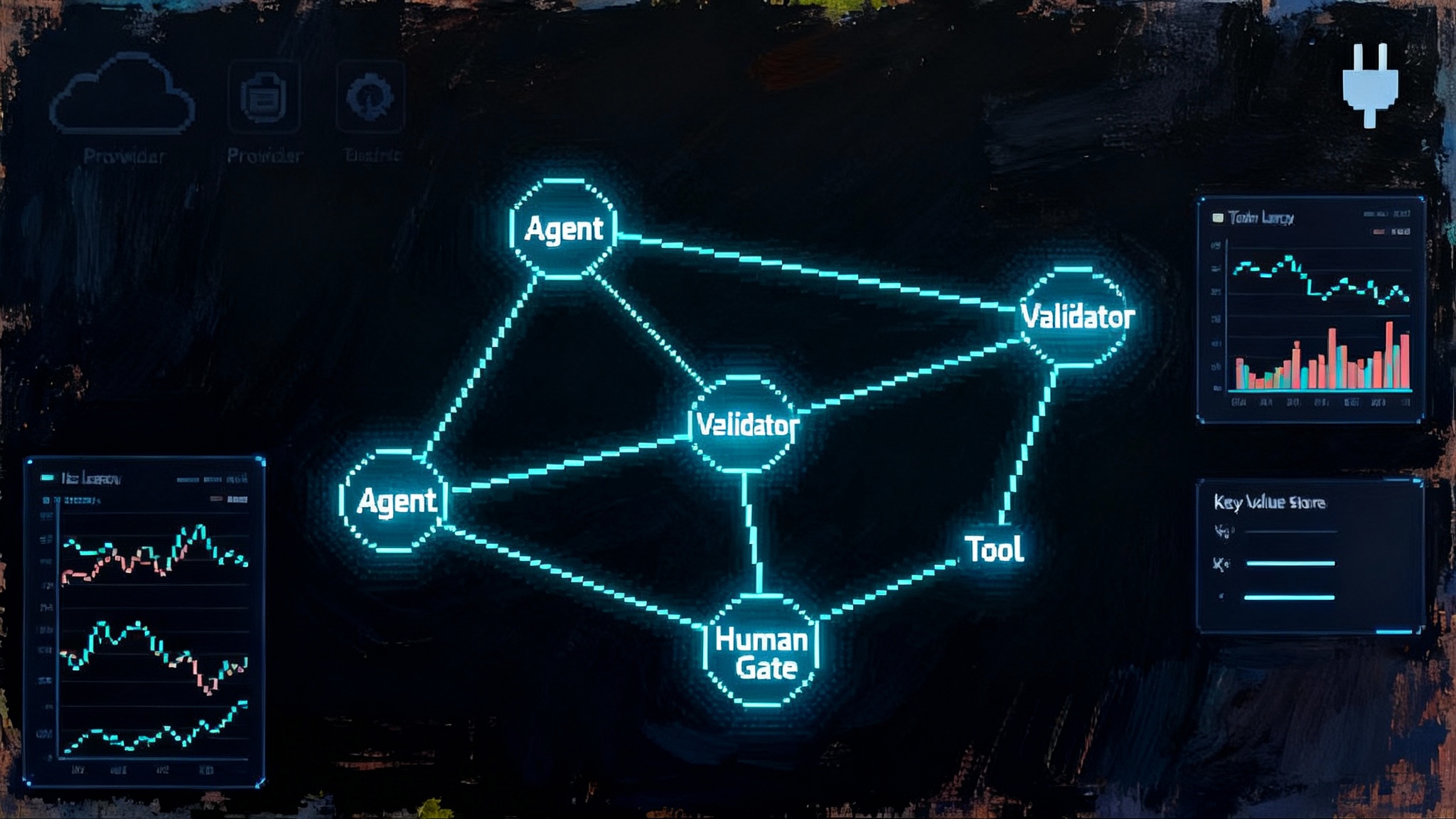

Meta did not drop a full glasses App Store on day one. The pattern is familiar from the early watch era: start with first-party capabilities, then open surfaces in phases. Based on what Meta disclosed and what it already offers to developers across its apps, expect three layers to matter over the next year:

- Agent Actions. Developers register actions that the assistant can call, with clear input and output schemas. Think of an action like “create a pickup order at store X” or “book a desk for 2 hours.” The response renders as a glanceable card. This likely arrives first because it builds on existing AI agent tooling and messaging integrations. For runtime patterns, see how the LangChain and LangGraph runtime standard guides action composition and state.

- Companion integrations. Your iOS or Android app supplies sensor data, handles authentication, and receives events from the glasses for fulfillment. A delivery app, for example, could receive a “leave at door” confirmation triggered by a pinch when the on-lens card asks for it. This layer bridges existing mobile stacks into the new glance-and-gesture loop.

- On-lens UI primitives. Meta will eventually need a small, predictable set of components that fit a safety-critical field of view: prompt, confirmation, step list, progress, and alert. Developers will not build free-form interfaces; they will compose these primitives and subscribe to system contexts like position, heading, and proximity to place marks.

For now, plan your product as an agent that calls actions, uses mobile for heavy lifting, and returns a minimal, high-signal card to the lens. When fuller on-lens APIs arrive, you will already have the right architecture.
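One way to prototype that architecture today is a small action registry that validates inputs and always returns a two-line card. The sketch below assumes nothing about Meta's eventual SDK: `AgentAction`, `LensCard`, `register`, and `call_action` are hypothetical names, and the schema check is deliberately minimal.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class LensCard:
    """Hypothetical card shape: one line of state, one line of next step."""
    state: str
    next_step: str


@dataclass(frozen=True)
class AgentAction:
    name: str
    input_schema: Dict[str, type]  # required field name -> expected type
    run: Callable[[Dict[str, Any]], LensCard]


REGISTRY: Dict[str, AgentAction] = {}


def register(action: AgentAction) -> None:
    """Make an action callable by name."""
    REGISTRY[action.name] = action


def call_action(name: str, args: Dict[str, Any]) -> LensCard:
    """Validate args against the action's schema, then run it."""
    action = REGISTRY[name]
    for field_name, expected in action.input_schema.items():
        if field_name not in args:
            raise ValueError(f"missing field: {field_name}")
        if not isinstance(args[field_name], expected):
            raise TypeError(f"{field_name} must be {expected.__name__}")
    return action.run(args)


def book_desk(args: Dict[str, Any]) -> LensCard:
    # Heavy lifting (auth, booking backend) would live in the companion app.
    return LensCard(
        state=f"Desk booked for {args['hours']} h",
        next_step="Pinch to add to calendar",
    )


register(AgentAction("book_desk", {"hours": int}, book_desk))
```

Calling `call_action("book_desk", {"hours": 2})` returns a card whose `state` is `"Desk booked for 2 h"`. Forcing every action to return the same small card type is the point: it keeps the output compatible with whatever fixed primitives the lens ends up exposing.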

Likely monetization rails

Meta already runs one of the largest performance advertising and commerce networks in the world. The question is not if it will monetize agents on the face, but how. Expect these rails to come online quickly and align with what we covered in Meta's ad-fueled AI chats:

- Click-to-Message for the face. Paid conversations that start in Instagram or Facebook can be initiated by an on-lens suggestion when you walk into a participating store. The conversation moves to WhatsApp or Messenger with your permission.

- Catalog and order actions. Merchants expose inventory and fulfillment actions to agents. You pinch to confirm a reorder of your standard coffee or a pickup for a pharmacy refill. The receipt stays in your messages. The glass shows only the state change.

- Paid placement in agent suggestions. When the assistant proposes options for lunch nearby, sponsored entries will appear, clearly marked, ranked by relevance and willingness to pay. Trust is the constraint. The system must bias for usefulness first, with money as a tie-breaker.

- Meta Pay and saved credentials. Expect simple, presence-based checkout for low-risk purchases, with a second factor via the wristband or phone for higher risk. The agent can complete the order while you keep moving.

The near-term revenue will not come from on-lens display ads. It will come from the same funnels that already convert, now triggered at the moment and place of intent.
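The "usefulness first, money as a tie-breaker" rule for sponsored suggestions can be made concrete with a sort key. This is a sketch under stated assumptions, not a description of how Meta ranks anything: the `relevance` score, the `bid` field, and the idea of rounding relevance into tiers are all illustrative choices.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Suggestion:
    name: str
    relevance: float  # 0.0 to 1.0, from a hypothetical relevance model
    bid: float = 0.0  # sponsor bid; 0.0 means an organic result

    @property
    def sponsored(self) -> bool:
        """Sponsored entries must be clearly marked on the card."""
        return self.bid > 0


def rank(suggestions: List[Suggestion], places: int = 1) -> List[Suggestion]:
    """Order by relevance tier; a bid only breaks ties inside a tier."""
    def key(s: Suggestion):
        # Rounding groups near-equal relevance scores into one tier.
        return (-round(s.relevance, places), -s.bid, s.name)
    return sorted(suggestions, key=key)


options = [
    Suggestion("Cafe A", relevance=0.92),
    Suggestion("Cafe B", relevance=0.88, bid=1.5),
    Suggestion("Cafe C", relevance=0.61, bid=9.0),
]
ranked = [s.name for s in rank(options)]
```

Here Cafe B outranks Cafe A because both land in the same relevance tier and B has a bid, while Cafe C stays last despite the largest bid. The tier width is the trust dial: the coarser the rounding, the more influence money has.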

Privacy and credential patterns that make sense on your face

Putting a display in everyday eyewear resets expectations. The platform will need to normalize a few patterns quickly. A design like a credential broker layer for agents helps enforce them:

- Presence unlock. If the band and glasses are in proximity to your phone, lightweight actions can complete without prompts. If the band is absent, the system should ask for a second factor before ordering or sending sensitive messages.

- Visual discretion. On-lens cards should default to terse text with no sender names in public contexts, expand only on a hold gesture, and auto-clear on head movement. This minimizes unintended disclosure.

- Camera gates. When an action requests the camera, the system should show a short, edge-only banner in the lens and light the external privacy LED. Developers receive a limited stream or a processed result depending on the permission.

- Short memory by default. Agent context on-device should clear on time or place boundaries. For example, the translation memory for a conversation should expire when you leave the venue. Longer retention should require explicit consent and show up in a unified privacy log.

None of this is optional if face-first computing is to become normal in public spaces. Make privacy the default and utility the reward for opting in.
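Two of these patterns, presence unlock and visual discretion, reduce to small policy functions. The sketch below is one possible reading of them, not a platform API: `Presence`, `Risk`, `needs_second_factor`, and `render_card` are hypothetical names, and the 40-character limit is an arbitrary placeholder.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass(frozen=True)
class Presence:
    band_nearby: bool
    phone_nearby: bool


def needs_second_factor(presence: Presence, risk: Risk) -> bool:
    """Low-risk actions skip prompts only when band and phone are both near."""
    if risk is Risk.HIGH:
        return True  # assumption: higher-risk actions always re-confirm
    return not (presence.band_nearby and presence.phone_nearby)


def render_card(sender: str, body: str, public: bool,
                expanded: bool = False, max_len: int = 40) -> str:
    """Hide the sender in public unless the user holds to expand."""
    text = body if (public and not expanded) else f"{sender}: {body}"
    if len(text) <= max_len:
        return text
    return text[: max_len - 3] + "..."  # keep the card terse
```

For example, `render_card("Sam", "Running late", public=True)` yields `"Running late"`, and the same call with `expanded=True` yields `"Sam: Running late"`. Keeping these rules in pure functions makes them easy to audit and to test without any hardware.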

How Meta’s approach stacks up

- Apple. Apple’s likely strategy is deep iPhone integration, polished glanceables, and private defaults, with payments through Apple Pay and identity through Face ID or passkeys. Reporting indicates Apple is prioritizing smart glasses development, including a path to models with and without displays, which would shift its wearables line from wrist to face. See the competitive framing in Apple is reportedly prioritizing smart glasses. If Apple ships within the next couple of years, the contest will be ecosystem versus ecosystem: iMessage and Apple Pay on one side, WhatsApp and Instagram Shops on the other.

- Google. Google brings Android, Gemini, and a willingness to license to many hardware partners. Expect it to seed “Android for glasses” across Samsung and others, focusing on Assistant actions and mapping. The playbook is distribution and developer reach rather than a single hero device.

- Amazon. Amazon’s Echo Frames show the audio-first path and a commerce engine that could become a real-time reorder assistant. If Amazon leans into a simple, screenless model for price checks, shopping lists, and home control, it can own a reliable slice of ambient tasks.

Meta’s edge today is that it showed up with a lens you can buy and a control method you can use without talking. Its risk is platform trust. If on-lens suggestions ever feel ad-first, progress stalls.

The next 12 months: what changes in daily life

- Notifications shrink. You will only see what needs a decision and only when it is relevant to your place and motion. Phones become inboxes. Glasses become decision bars.

- Commerce moves to the curb. Pickup confirmations, table-ready alerts, and reorder prompts jump to your lens at the moment you arrive. You will pinch three times a day to complete small, low-risk purchases.

- Maps become choreography. Directions will feel like a series of quiet cues rather than a map you stare at. Tourist flow in popular districts will get smoother because fewer people stop mid-sidewalk to consult their phones.

- Work goes hands free in the field. Facilities, logistics, and healthcare settings will pilot glanceable checklists with wrist confirmations and automatic audit trails. Agents will pre-fill steps based on location and equipment identity.

- Accessibility spreads to everyone. Live captions and translation will be normalized as polite and practical. People without hearing challenges will use captions at concerts or in transit hubs. This will become the de facto quiet mode.

What to build now

- Pick two high-frequency intents. For example, “reorder the usual” and “check in for pickup.” Wrap them as agent actions with predictable inputs and outputs. Keep the on-lens card to one line of state and one line of next step.

- Design for pinch-first, voice-second. Assume the user is in a place where speaking is awkward. The happy path must complete with a single pinch and a single glance.

- Make responses time and place aware. If an action cannot be completed within 10 seconds while the user is moving, hand it off to the phone and send a complete card back to the lens when ready.

- Build a memory policy. Decide what your agent remembers, for how long, and how the user clears it. Show the memory at a glance when asked. If you cannot explain it in one sentence, it is too complex.

- Instrument for trust. Log every on-lens suggestion and whether the user accepted or dismissed it. Use that to reduce interruptions. Fewer, more accurate cards will beat more cards every time.
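The hand-off rule and the trust instrumentation from this list can be sketched together. Treat the numbers as placeholders: the 10-second budget comes from the guideline above, while the acceptance threshold, the sample minimum, and the names `route` and `SuggestionLog` are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List

LENS_BUDGET_SECONDS = 10.0  # from the guideline above; tune per product


def route(estimated_seconds: float, moving: bool) -> str:
    """Return "phone" when a moving user would wait past the lens budget."""
    if moving and estimated_seconds > LENS_BUDGET_SECONDS:
        return "phone"
    return "lens"


@dataclass
class SuggestionLog:
    """Records accept/dismiss outcomes per suggestion kind."""
    outcomes: Dict[str, List[bool]] = field(default_factory=dict)

    def record(self, kind: str, accepted: bool) -> None:
        self.outcomes.setdefault(kind, []).append(accepted)

    def should_show(self, kind: str, min_rate: float = 0.3,
                    min_samples: int = 5) -> bool:
        """Keep showing a kind until evidence says users dismiss it."""
        history = self.outcomes.get(kind, [])
        if len(history) < min_samples:
            return True  # not enough data to suppress yet
        return sum(history) / len(history) >= min_rate


log = SuggestionLog()
for _ in range(5):
    log.record("reorder", accepted=False)
```

After five straight dismissals, `log.should_show("reorder")` returns `False`, so the agent stops interrupting with that card, while a kind it has never shown still gets its first chance.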

A note on safety and etiquette

Face-first devices change the social contract. Developers and product teams should adopt etiquette rules that the system enforces by default: no autoplay media on-lens, no sender names in public, and an obvious LED when the camera is engaged. Social norms will adapt if the technology behaves politely.

The big picture

The significance of Connect 2025 is not that Meta packed a tiny screen into a Wayfarer. It is that the company shipped the minimum complete loop for ambient computing: glanceable output, silent input, and an agent that does work on your behalf. A year from now, we will not talk about “wearing AI.” We will talk about how often we did not pull our phones out. That is the bar. The winners will be the platforms and builders who respect attention, design for movement, and treat a single pinch as sacred.

The Rubicon has been crossed. The next platform is on your face. Build for the glance, and let everything else fall away.