The Agent Is the New Desktop: ChatGPT’s Work Takeover

OpenAI turned ChatGPT into a computer-using agent in July and released a preview of its Apps SDK in October, letting third-party apps run inside the chat. Together they point to a new default UI for work and a very different near-term automation playbook.

What changed in July and October

On July 17, 2025, OpenAI introduced ChatGPT agent as a built-in, general-purpose assistant that can think, browse, click, type, fill forms, and produce files on its own virtual computer. In other words, ChatGPT now uses a computer the way a person does. The company calls this a unified agentic system, and it includes a visual browser, a text browser, a terminal, and access to connectors for accounts like email and calendars. OpenAI’s release also spelled out a key control: before doing anything consequential, the agent must ask you for permission. That one detail may be the most important reason this model can move from demos to daily work.

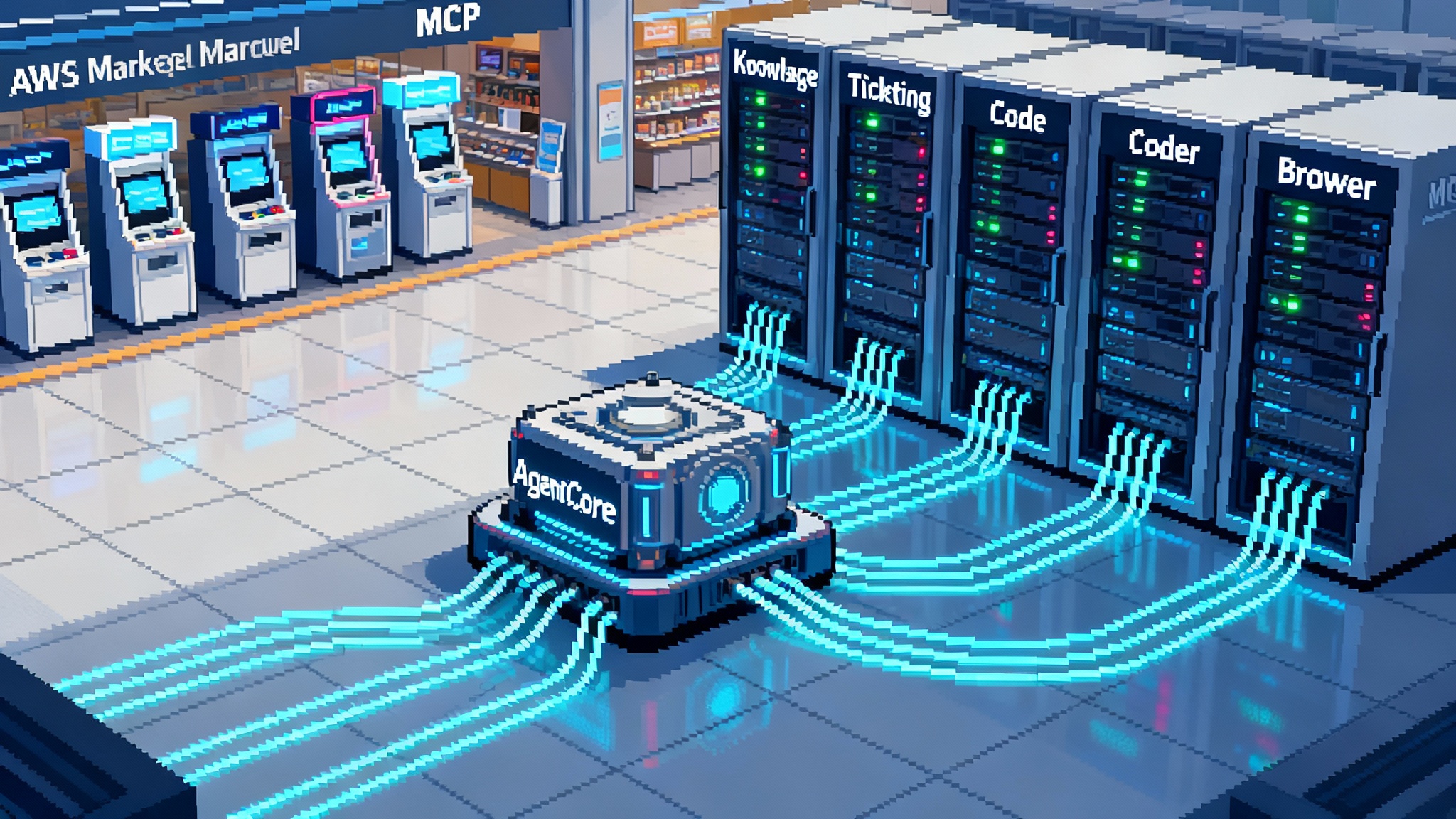

On October 6, 2025, OpenAI followed with apps in ChatGPT and a preview of its Apps Software Development Kit (SDK). The SDK lets developers build small applications that live inside ChatGPT with their own interactive interfaces, such as maps, lists, or buttons. Early partners include Booking.com, Canva, Coursera, Expedia, Figma, Spotify, and Zillow. The Apps SDK builds on the Model Context Protocol (MCP) and is positioned as an open standard, with an app directory, a review process for submissions, and monetization details promised later. Together, the agent and apps make ChatGPT feel less like a chatbot and more like a new kind of operating environment.

The default UI for work is shifting to a computer-using agent

For decades, the default user interface for work has been a person at a browser, moving data between tabs, pasting into spreadsheets, and downloading reports. API integrations and robotic process automation (RPA) tried to remove the human, but both hit steep adoption cliffs: APIs depend on vendors exposing them, and RPA scripts tend to break when a screen changes. As we argued when exploring how browser agents can break the API bottleneck, the near-term pattern is different: an agent that uses a cloud computer to operate the same graphical interfaces your team already uses.

Think of it like hiring a capable generalist who knows the web, not a brittle script. The agent can log in to a supplier portal that never offered an API, download a comma-separated values (CSV) export, clean it in a spreadsheet, and write a status summary. If a page layout changes, the agent can adapt, because it perceives and reasons about what it sees on the screen. It is closer to a junior operations analyst than to a workflow rule.

Why this matters now:

- Meets teams where they are. Most small and mid-sized businesses live in software that was never built for deep integration. They rely on vendor portals, legacy back ends, custom fields, and a tapestry of browser extensions. A computer-using agent fits this reality, which means faster time to value.

- Humans stay in the loop. The permission model and on-screen narration let managers supervise. You can require approval before a purchase, watch what the agent is doing, or take the mouse and keyboard back at any moment.

- Stateful cloud desktop. The agent's cloud virtual machine keeps state for the length of a task, so the agent can work through long, multi-step jobs without losing context, download files, open them in the right tool, and return structured artifacts like editable slides and spreadsheets.

Why GUI control beats APIs for the next wave of automation

API automation is still great when the data is clean and the endpoints are stable. Many small firms do not live in that world. Here is what the graphical approach unlocks immediately:

- No integration backlog. The agent can operate the same web forms and tables that humans use. That removes months of vendor negotiations and avoids fragile, one-off scripts.

- Mixed toolchains. In one task, the agent can read an invoice in a portal, reconcile it in a spreadsheet, check last month’s expenses, and update a task tracker. That mixed stack mirrors how people actually work.

- Training by demonstration. You can show the agent a one-off example inside the environment, or let it narrate its plan, then refine. There is less need to encode every branch in advance.

Concrete examples for a 10- to 100-person business:

- Payables close. The agent logs in to two supplier portals, downloads statements, reconciles line items in a spreadsheet based on purchase order numbers, flags exceptions, and drafts emails for human approval.

- Lead list cleanup. It opens a CRM export, visits company sites to find missing industry tags, updates the sheet, and imports a clean file back into the CRM. If the CRM lacks a reliable import, the agent can enter the data through the interface.

- Ecommerce catalog refresh. It scrapes vendor pages for updated prices, writes product descriptions to your style guide, resizes images, and schedules a publication checklist.

- Recruiting loop. It filters inbound resumes from email, collects missing details through a form, books screening calls, and updates the tracker, all while asking permission before sending anything external.
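The payables example turns on one concrete step: matching statement lines to your own records by purchase order number. The Python below is a minimal sketch of that matching logic, assuming both sides have already been exported into simple records. The `Line` type, the `reconcile` function, and the sample PO numbers are hypothetical illustrations, not part of ChatGPT or any supplier portal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Line:
    """One line item from a supplier statement or internal books (hypothetical shape)."""
    po_number: str     # purchase order number, used as the matching key
    amount_cents: int  # integer cents avoid floating-point rounding when comparing totals


def totals_by_po(lines: list[Line]) -> dict[str, int]:
    """Sum amounts per PO number, since one PO can span several line items."""
    totals: dict[str, int] = {}
    for line in lines:
        totals[line.po_number] = totals.get(line.po_number, 0) + line.amount_cents
    return totals


def reconcile(statement: list[Line], ledger: list[Line]) -> dict[str, list[str]]:
    """Return exception buckets for a human to review before any email is sent."""
    stmt, books = totals_by_po(statement), totals_by_po(ledger)
    return {
        "missing_in_ledger": sorted(stmt.keys() - books.keys()),
        "missing_on_statement": sorted(books.keys() - stmt.keys()),
        "amount_mismatch": sorted(
            po for po in stmt.keys() & books.keys() if stmt[po] != books[po]
        ),
    }


# Hypothetical sample data; amounts are in cents.
statement = [Line("PO-1001", 12500), Line("PO-1002", 8000), Line("PO-1004", 4000)]
ledger = [Line("PO-1001", 12500), Line("PO-1002", 7500), Line("PO-1003", 6000)]
print(reconcile(statement, ledger))
# {'missing_in_ledger': ['PO-1004'], 'missing_on_statement': ['PO-1003'], 'amount_mismatch': ['PO-1002']}
```

Only the exception buckets need a person's attention; everything that matches can flow straight into the status summary.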

If you are a founder used to wrangling automation platforms, this is the difference between building a fragile bridge across a canyon and hiring a hiker who can traverse the terrain as it is.

The ceiling comes from permissions and usage limits

Two design choices from OpenAI define how the agent works in real organizations.

- Explicit consent. ChatGPT must ask before it buys anything, sends an email, or touches sensitive data. Some critical tasks, such as sending email, also run in a watch mode that requires a human to stay present and supervise. That keeps legal and brand risk in check and lays the foundation for a clean approval culture. It also means you should design tasks with clear checkpoints where a manager clicks approve.

- Privacy and session controls. In takeover mode you handle logins yourself, cookies persist according to each site's own policy, and one click in settings clears browsing data and signs the agent out of active sessions. That limits long-tail exposure. In practice, teams will designate a few accounts for agent use to simplify audits, often mediated by a credential broker layer for agents.

There are also practical usage limits. At launch, OpenAI stated that Pro users receive 400 agent messages per month and other paid plans receive 40, with credit-based options to extend. Treat those quotas the way you treat cloud compute budgets, and build runbooks around them. For example, do not burn through a month of agent messages by sending a one-sentence request fifty times. Instead, write a checklist prompt that batches steps like login, export, reconcile, and summary into one run.
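The batching advice can be made concrete with a small helper that folds several steps into one numbered checklist prompt, plus the arithmetic for how many runs a monthly allotment covers. This is a Python sketch under two stated assumptions: each separately submitted request costs one agent message, and `QUOTA = 40` is a placeholder to replace with your plan's actual number. The helper names are hypothetical.

```python
def checklist_prompt(task: str, steps: list[str]) -> str:
    """Fold several steps into one numbered runbook so they go out as a single request."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"Task: {task}\nComplete these steps in order, in one run:\n{numbered}"


def runs_per_month(monthly_quota: int, messages_per_run: int) -> int:
    """Whole runs that fit in a monthly allotment (assumes one message per request)."""
    return monthly_quota // messages_per_run


steps = [
    "Log in to the supplier portal",
    "Export last month's statement as CSV",
    "Reconcile it against the ledger by PO number",
    "Write a one-paragraph summary of exceptions",
]
print(checklist_prompt("Monthly supplier close", steps))

QUOTA = 40  # placeholder: replace with your plan's monthly agent-message allotment
print(runs_per_month(QUOTA, messages_per_run=len(steps)))  # one request per step: 10
print(runs_per_month(QUOTA, messages_per_run=1))           # one batched checklist: 40
```

Under these assumptions, batching four steps into one request quadruples the number of complete runs the same quota supports.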

What this implies for deployments:

- Start with high-value, low-frequency tasks that fit within quotas. Monthly reporting beats minute-by-minute back office updates.

- Add budget guards. Set a max number of actions per task, require approval before any purchase, and stop the run if an upstream site looks wrong.

- Pool quotas across a team plan before you buy more capacity. Put agents behind shared accounts when appropriate, with a named owner in finance or operations.
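The three guards in this list can be expressed as one policy object that a runbook consults before each action. ChatGPT agent does not expose a hook like this; the Python below is a hypothetical sketch of the rules you would spell out in your prompt or enforce in your own tooling around the agent. `StopRun`, `RunGuard`, and the sample page titles are illustrative names.

```python
class StopRun(Exception):
    """Signals that the run should halt and hand control back to a person."""


class RunGuard:
    """Hypothetical per-task guard: action cap, purchase approval, page sanity check."""

    def __init__(self, max_actions: int, expected_titles: dict[str, str]):
        self.max_actions = max_actions
        self.expected_titles = expected_titles  # step name -> text the page title should contain
        self.actions_taken = 0

    def allow(self, step: str, page_title: str, is_purchase: bool = False,
              approved: bool = False) -> None:
        """Raise StopRun if the next action breaks a rule; otherwise count it."""
        if self.actions_taken >= self.max_actions:
            raise StopRun(f"action budget of {self.max_actions} used up")
        if is_purchase and not approved:
            raise StopRun(f"step {step!r} is a purchase and needs human approval")
        expected = self.expected_titles.get(step)
        if expected is not None and expected not in page_title:
            raise StopRun(f"step {step!r} landed on unexpected page {page_title!r}")
        self.actions_taken += 1


guard = RunGuard(max_actions=40, expected_titles={"export": "Statements"})
guard.allow("export", "Acme Portal | Statements")  # passes and counts as one action
try:
    guard.allow("export", "Acme Portal | Sign in")  # session expired or layout changed
except StopRun as reason:
    print("Stopped:", reason)
```

Blocked actions are not counted against the budget, so a run that stops early still reports how much real work it did.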

The agent app store is taking shape

With the Apps SDK preview, third-party services can appear inside a ChatGPT conversation. You can call an app by name, or ChatGPT can suggest one when relevant. Early partners offer a hint of what will thrive: travel, design, courses, productivity, maps, and real estate. Because apps are built on a standard that aims to travel beyond ChatGPT, it is reasonable to expect a cross-tool ecosystem where one app can run in more than one agent environment. As agent platform wars intensify, expect sharper differentiation on governance, cost, and distribution.

If you remember the early days of mobile platforms, the winning apps were not just novel, they were useful in workflows people already had. Expect the same here. The best early apps will attach to the agent’s strengths: operating a browser, composing structured files, and summarizing context.

What to watch over the next 6 to 12 months:

- Discovery and quality bars. A visible directory and a review process will reward good design and reliable execution. Expect a simple ranking signal that favors completion rate, user approvals, and low error counts.

- Pricing models. Two patterns are likely. First, a subscription for access to premium features inside the app surface. Second, pay per action for high-value tasks, for example a small fee each time an agent books a flight that meets your constraints.

- Vertical breakouts. Real estate search will pair with the agent’s ability to summarize neighborhoods. Expense tools will hand the agent reconciliation checklists. Learning platforms will let the agent pause a course and create a practice plan.

- Compliance controls. Enterprise versions will bring team permissions, logs, and data residency guarantees. That opens the door for regulated industries to try narrow tasks with human approvals.

A one month playbook to pilot agents at work

- Week 1. Pick one process where the browser is the bottleneck. Choose something costly and predictable, like reconciling supplier statements. Document the happy path and the few common exceptions.

- Week 2. Create a runbook prompt. Spell out the pages to visit, the files to export, where to store them, and what to name outputs. Add explicit stop points. Example: after downloading statements, pause and ask me to confirm account names. Add a budget limit, for example no more than 40 actions.

- Week 3. Run three supervised trials. Use the on-screen narration to see where the agent gets confused, then fix your instructions. If a portal requires two-factor authentication, decide who will be present and when. Create a shared account if appropriate and add audit notes.

- Week 4. Measure time saved and error rate. If the agent is already beating your manual baseline, put the task on a schedule. If not, strip the scope to the substeps it does well and let a human finish. Bots do not need to win every step to be worth it.
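Week 4's decision rule can be written down so the pilot ends with a number rather than an impression. The Python sketch below assumes you log, for each run, the minutes a person spent (including supervision) and the errors found on review; the `Trial` record, the decision labels, and the sample figures are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Trial:
    minutes: float  # human minutes spent on the run, including supervision
    errors: int     # mistakes found when a person reviewed the output


def week4_decision(agent: list[Trial], manual: list[Trial]) -> tuple[str, float]:
    """Return ('schedule' or 'narrow scope', average minutes saved per run)."""
    saved = mean(t.minutes for t in manual) - mean(t.minutes for t in agent)
    no_worse = mean(t.errors for t in agent) <= mean(t.errors for t in manual)
    verdict = "schedule" if saved > 0 and no_worse else "narrow scope"
    return verdict, saved


# Hypothetical figures: three supervised agent trials vs. two manual baseline runs.
agent_runs = [Trial(25, 1), Trial(20, 0), Trial(18, 0)]
manual_runs = [Trial(90, 1), Trial(85, 0)]
print(week4_decision(agent_runs, manual_runs))  # ('schedule', 66.5)
```

Requiring the error rate to be no worse, not just the time to be lower, keeps a fast but sloppy agent from being scheduled.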

Tools to prepare in parallel:

- A dedicated storage location for outputs with clear naming conventions

- Single sign-on or a password manager the team is comfortable using during takeover mode

- A rotating calendar of supervisors who can approve agent checkpoints and clear two-factor authentication prompts
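For the first item, a naming convention is easiest to keep when it is generated rather than remembered. A minimal Python sketch, assuming a hypothetical `period_process_artifact.ext` pattern; adapt the pattern to whatever your team already uses.

```python
import re
from datetime import date


def output_name(process: str, period: date, artifact: str, ext: str) -> str:
    """Predictable name such as '2025-09_supplier-close_reconciliation.xlsx'."""
    def slug(text: str) -> str:
        # Lowercase, then collapse anything that is not a letter or digit into single hyphens.
        return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return f"{period:%Y-%m}_{slug(process)}_{slug(artifact)}.{ext.lstrip('.')}"


print(output_name("Supplier Close", date(2025, 9, 30), "Reconciliation", "xlsx"))
# 2025-09_supplier-close_reconciliation.xlsx
```

Putting the period first makes files sort chronologically in any storage browser.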

What could go wrong and how to mitigate it

- Prompt injection. A malicious site can try to push hidden instructions into the agent’s plan. The model ships with defenses, but you should still limit which connectors are enabled by default and require approvals before any action that leaves your domain.

- Silent drift. Over long tasks, agents can wander. Keep steps short and opinionated, add progress summaries, and stop the run if a page does not match an expected title or element.

- Misaligned incentives. If you pay per action or per message, bloated runs get expensive. Favor batch operations and strict finish conditions over chatty back-and-forth.

- Over-automation. Resist the urge to automate processes that change weekly. Use the agent to do the rote pieces, then hand off to people for judgment calls.
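The prompt-injection mitigation, approving any action that leaves your domain, depends on deciding reliably what "leaves your domain" means. A naive substring check would treat `example.com.attacker.net` as trusted. The Python sketch below compares hostnames exactly or by subdomain suffix; `TRUSTED_DOMAINS` and `example.com` are placeholders for your own domains, and `needs_approval` is a hypothetical policy check, not a ChatGPT setting.

```python
from urllib.parse import urlsplit

TRUSTED_DOMAINS = {"example.com"}  # placeholder: replace with your organization's domains


def needs_approval(url: str, trusted: set[str] = TRUSTED_DOMAINS) -> bool:
    """True when the target host is outside the trusted domains (or has no host at all)."""
    host = (urlsplit(url).hostname or "").lower()
    inside = any(host == d or host.endswith("." + d) for d in trusted)
    return not inside


for target in [
    "https://portal.example.com/invoices",    # subdomain of a trusted domain
    "https://example.com.attacker.net/form",  # lookalike host
    "mailto:vendor@supplier.test",            # outbound email has no hostname
]:
    print(target, "->", "ask a human" if needs_approval(target) else "ok")
```

Treating a missing hostname as untrusted errs on the side of asking, which is the safer default for anything outbound.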

The deeper shift

The web browser is becoming a programmable surface that an assistant can operate safely enough for daily work. That does not replace APIs. It extends them. For small and mid-size teams, that means useful automation without waiting on vendors. For developers, it signals a new distribution channel. For managers, it changes how you think about process design. You will still write procedures, but now the consumer is a tool that can execute them.

The path here is not magic. It is many boring, valuable steps: permission prompts, usage budgets, and small task wins. Add the Apps SDK and you have a path for third-party tools to meet workers where they are, inside the chat, at the moment of need. If the last decade was about moving work onto the browser, the next year will be about letting an agent drive that browser from a cloud desktop, with you riding shotgun.

The bottom line

The pattern to bet on is simple. Give an agent a clear runbook. Let it operate the interfaces you already use. Keep approvals tight. Use monthly quotas like a budget. Add apps when they shorten steps that humans repeat. In six to twelve months, the default way to get routine digital work done will be to ask the agent to do it, watch the plan unfold on its virtual screen, and approve the moments that matter. That is not science fiction. It is the new desktop, and it is already on.