AWS AgentCore and MCP are unifying the enterprise AI stack

AWS just turned MCP from a developer curiosity into production plumbing. With the Knowledge MCP server now GA and AgentCore in preview, enterprises finally get a unified way to run dependable AI agents with real governance, observability, and portability.

The breakthrough: AWS turns MCP into production-grade plumbing

On October 1, 2025, AWS made the AWS Knowledge Model Context Protocol server generally available. The service gives agents a reliable way to pull current AWS guidance, What’s New posts, and Well-Architected best practices through a standard protocol, rather than through ad hoc scraping or brittle connectors. It is free to use, publicly accessible, and designed to feed agent workflows with vetted context so they answer with confidence and act correctly. That single step moves Model Context Protocol from a developer curiosity to enterprise infrastructure. AWS Knowledge MCP Server GA announcement.

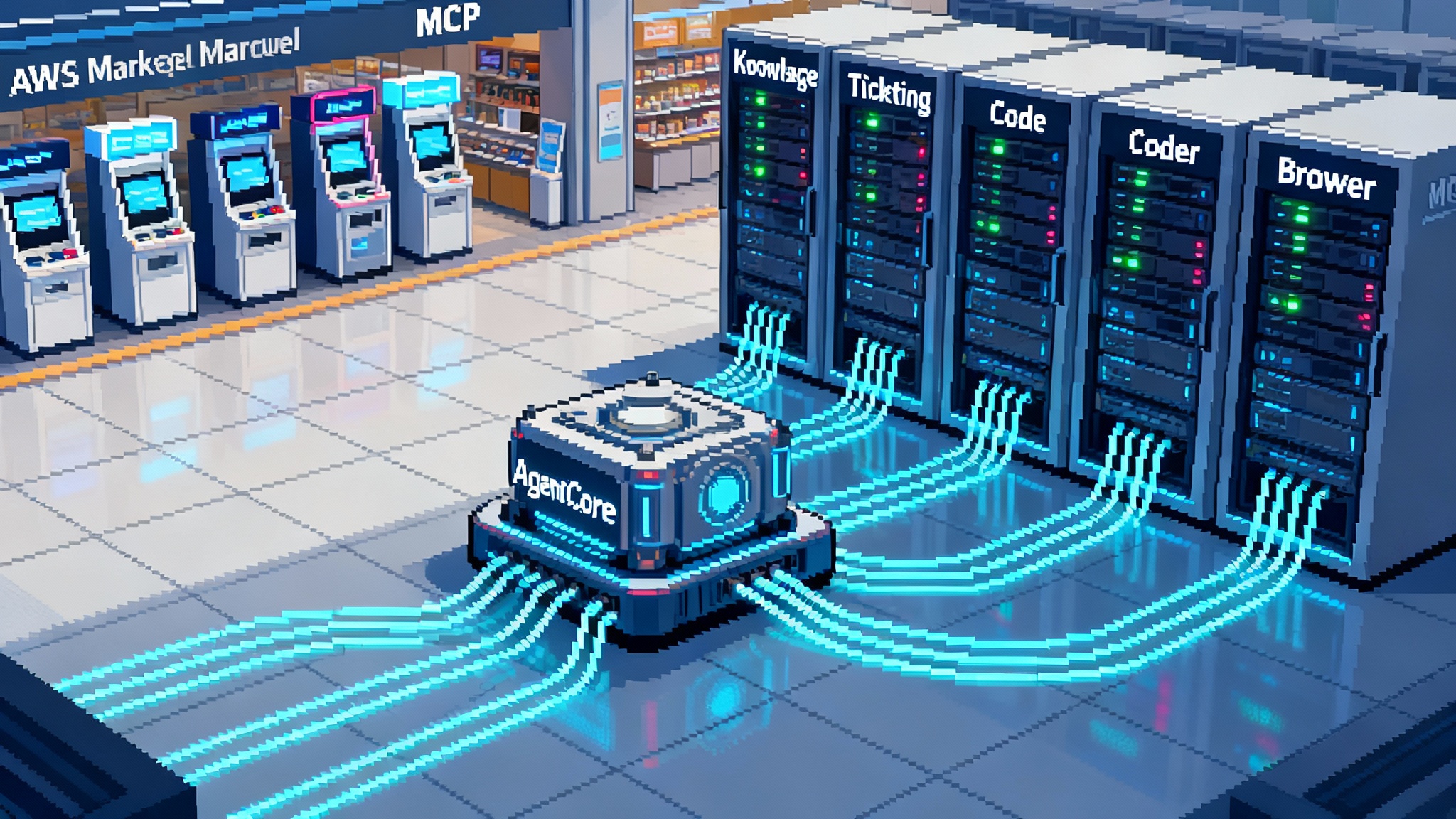

This GA capped a run of summer releases that matter to any team trying to move agents beyond pilots. On July 16, AWS introduced a new AI Agents and Tools category in AWS Marketplace, so buyers can procure agent apps and MCP-compatible tools through the channel they already trust. The same day, AWS previewed Amazon Bedrock AgentCore, a runtime and set of services that operate production agents with the controls enterprises expect.

The pattern is clear. MCP is becoming the fabric that lets agents talk to tools. AgentCore is the scaffolding that runs those agents at scale. The Marketplace is the storefront that fills their tool belts.

Why MCP is the missing interoperability layer

Model Context Protocol is a standard for how agents and tools exchange capabilities and context. Instead of building fragile one-off integrations, MCP defines a simple contract: tools describe what they can do and the schema they accept; agents discover those tools and call them predictably. In practical terms, that means a security review once, a data contract once, and a smaller blast radius when vendors change implementations.

Think of it like USB for software agents. Before USB, every device needed a custom cable, driver, and installer. After USB, you plugged in a keyboard or camera and it just worked. Enterprises have been living in a pre-USB world for agents, where every tool is an integration project. MCP moves the ecosystem closer to plug and play. For a parallel push outside AWS, see how Microsoft's agent framework unification is shaping a similar stack.

AWS’s embrace matters because critical enterprise backends live on AWS. There are MCP servers for major AWS services, and the Knowledge MCP server now serves as the canonical source of AWS patterns and limits. When your agent can query real service quotas or best practices through MCP rather than hallucinating them, you reduce time wasted on retries and misconfigurations and you lower operational risk.

What AgentCore adds on top of MCP

Where MCP standardizes the interface, AgentCore focuses on operations. In preview, Amazon Bedrock AgentCore is the runtime that makes agent workloads dependable: scaling, concurrency controls, policy enforcement, and safety rails. You can run the model you prefer, compose open source frameworks, and still keep enterprise-grade controls without building yet another custom orchestrator. Amazon Bedrock AgentCore preview.

AgentCore’s value shows up in three places:

- Control plane: consistent policies for who can run which tools, with auditability. This aligns with how security teams think about identity and permissions.

- Data plane: deterministic routing to tools, retries with backoff that respect rate limits, and circuit breakers when tools degrade.

- Observability: native hooks for tracing and evaluation, so teams can surface slow tool calls, detect loop risks, and measure outcome quality.

Put simply, MCP tells you how to plug tools into agents. AgentCore tells you how to run those agents without waking the on-call.

How this actually cuts integration cost and lock-in

The biggest costs in agent projects are not model inference or even tool licenses. They are integration and governance.

- Integration creep: Every new tool takes weeks to wire up, secure, and test. With MCP, tools declare capabilities and schemas. Your agent runtime reads a contract, not a one-off software development kit. If your team adds five tools per quarter, standardization can mean weeks back on the calendar and a six-figure reduction in integration engineering over a year.

- Vendor swap friction: With MCP, capability contracts are portable. If a vendor sunsets a product or pricing changes, you do not rewrite the agent. You swap the MCP server behind the same interface. That lowers switching costs and gives procurement leverage.

- Compliance reviews: MCP’s explicit function schemas and tool manifests shrink the surface area that auditors need to understand. That shortens review cycles because you can point to consistent contracts and execution logs.

A simple back-of-the-envelope: suppose your team maintains 12 bespoke tool connectors that each take 80 engineer hours per quarter to keep current with authentication tweaks, API drift, and edge cases. That is 960 hours per quarter. Consolidating behind MCP can cut this by half with a shared contract and common auth patterns. Even at a conservative fully loaded rate, that is material savings.

Lock-in is subtler. AgentCore is comfortable with models inside and outside Bedrock, and MCP is an open protocol. If you keep your tool layer MCP-first, your prompts and policies in version control, and your evaluation harness independent of a single model provider, you maintain the option to change models without ripping out the tool plane. That is practical portability.

Governance patterns to adopt now

Enterprises can move fast and stay in control with a few patterns that work well with AgentCore and MCP.

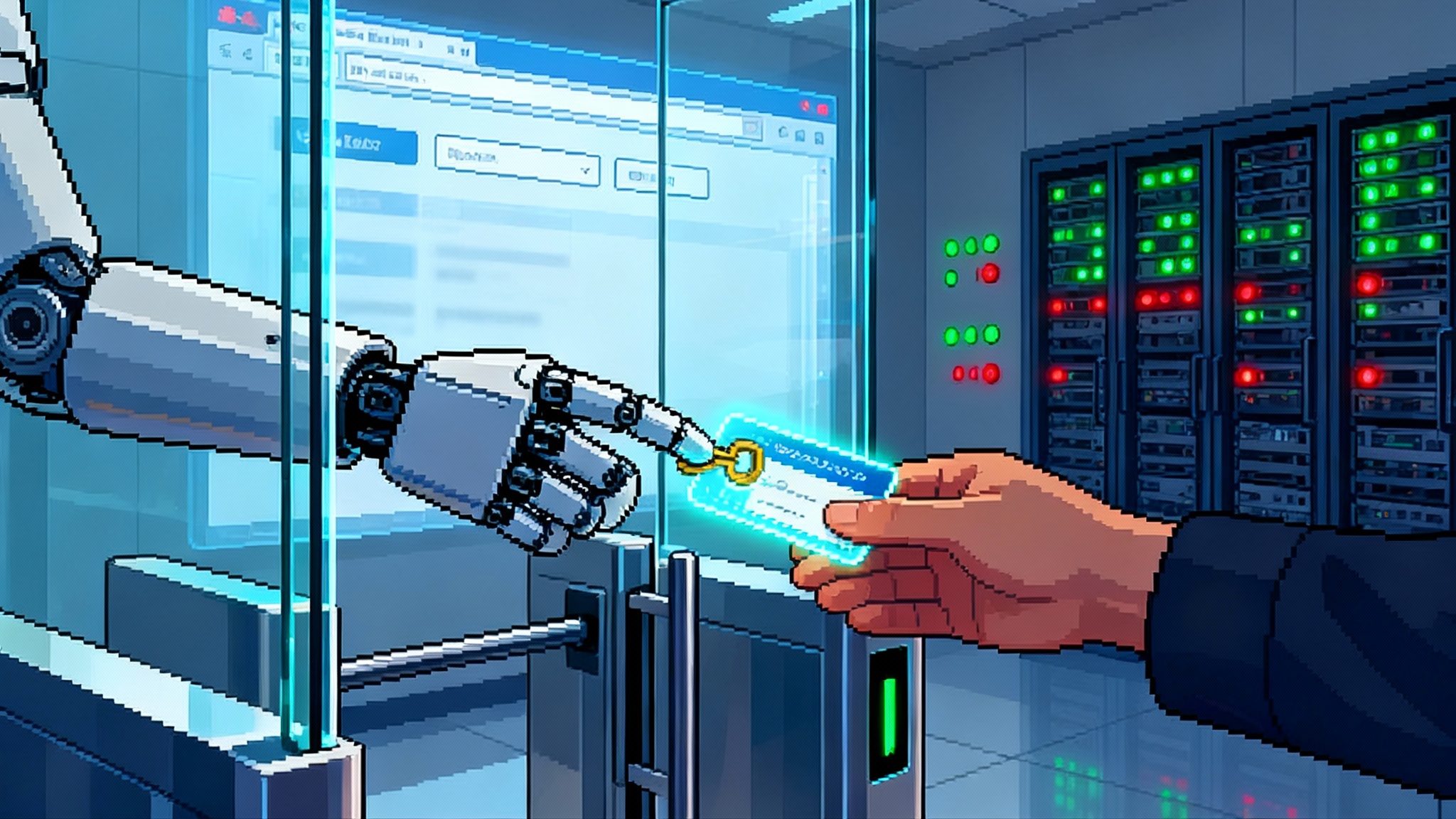

Identity: one identity plane, scoped credentials

- Centralize authorization in your existing identity provider. Use short-lived credentials for each agent session. No persistent keys embedded in tool configs.

- Scope permissions to the minimum tool functions the agent needs. MCP makes this easier because each tool advertises its functions. Bind policies at the function level.

- Support human-in-the-loop escalation. When an agent attempts an action above its baseline, require a just-in-time token from a human approver. Log both the approval context and the original agent intent.

Why it matters: production incidents often start with privilege bleed. Tight scopes and short-lived credentials limit lateral movement and make rollbacks instant. For deeper background on identity isolation in the browser, read our guide to the credential broker layer for browser agents.

Observability: traces first, metrics second

- Trace every tool call with a stable request identifier that flows from the user, through the agent plan, to the tool invocation and back. Include the prompt hash and tool schema version in the trace.

- Emit first-class metrics for tool error codes, retries, and cold-start latencies. In practice, p95 latency by tool and by schema version tells you where to optimize.

- Store the agent’s plan and deltas for each step. Plans let reviewers reconstruct what the agent believed would happen, not just what it did.

- Create a deterministic replay harness. Replaying a failing interaction with the same inputs, tool states, and model seed lets you debug without poking production systems.

Why it matters: without traces and replays you cannot separate model issues from tool or policy errors. Teams guess, and incidents drag on.

Browser and code sandboxes: tight, testable, and ephemeral

Agents often need a browser to navigate documentation or a code runner to transform data. Treat both as untrusted workloads:

- Browser: headless browser isolated in a container with a deny-by-default egress policy. Allowlist domains the agent is permitted to read. Strip cookies and storage between navigations. Enforce a download quarantine.

- Code: run code in micro virtual machines or hardened containers with no host mounts. Ephemeral volumes, capped CPU and memory, and strict network egress. Preinstall only whitelisted libraries. Persist artifacts to a signed object store with time-boxed read permissions.

- Both: record console output and snapshots for root-cause analysis, but redact secrets at the sink. Sandboxes should fail closed when resource caps hit.

Why it matters: browsers and code runners are the easiest path from a helpful agent to a data exfiltration incident. Guard rails need to be structural, not advisory. If your team is leaning into browser-native agents, see how the browser becomes an agent runtime.

A reference architecture you can copy

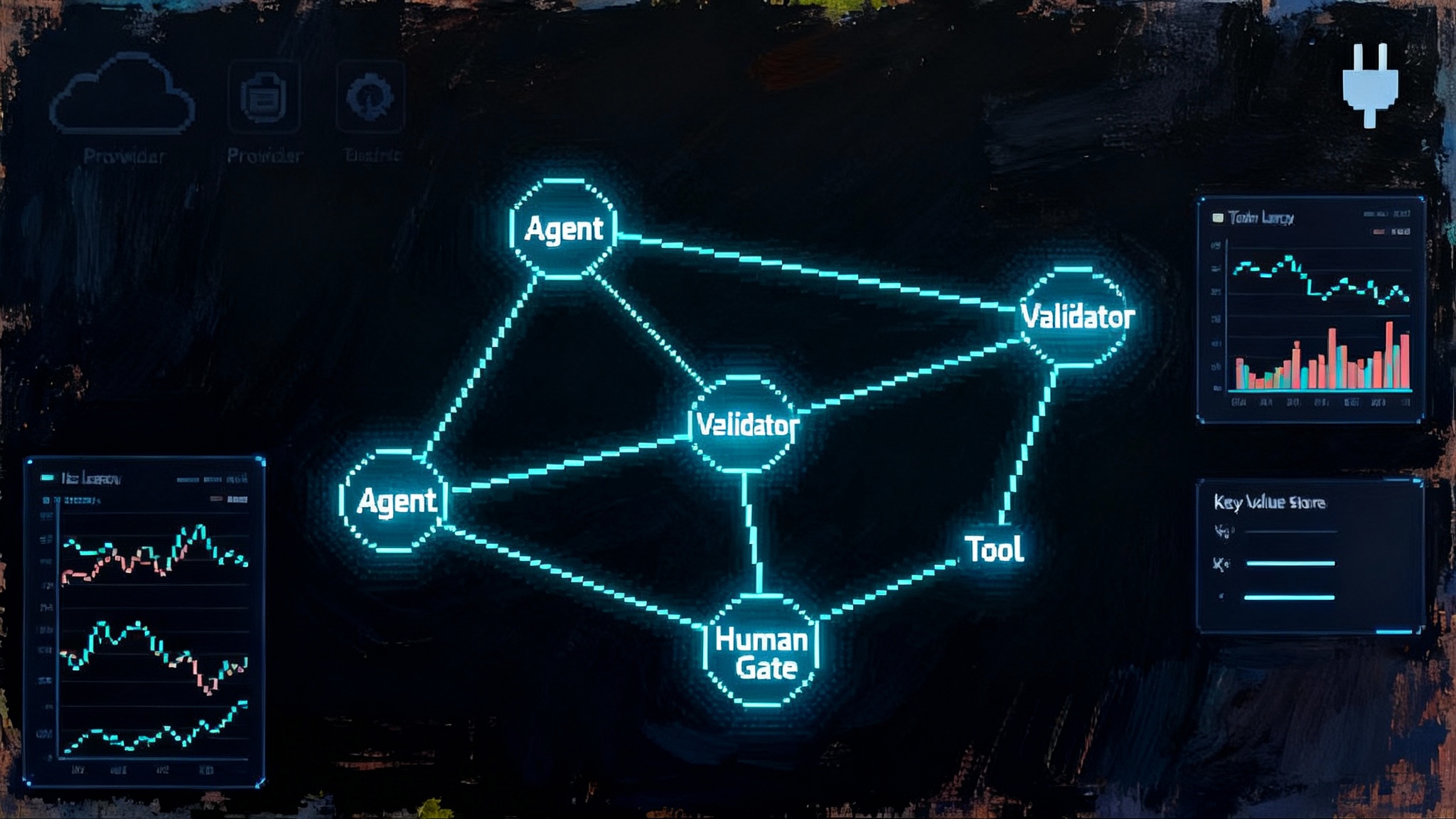

Picture a simple but production-ready flow:

- Ingress: users interact through a chat surface or a workflow trigger. Requests carry a user identity and policy tags.

- Agent runtime: AgentCore plans the task and calls tools via MCP servers. Policies gate tool usage at each step.

- Tool plane: a mix of Marketplace tools and internal services, all exposed through MCP so capabilities are explicit. Examples include a ticketing system, a knowledge source, and an automation runner.

- Knowledge: the AWS Knowledge MCP server provides authoritative guidance on service limits, best practices, and regional availability. This cuts hallucinations and failed calls.

- Sandbox: a browser or code runner executes in an isolated environment with network and filesystem controls.

- Observability: traces and metrics stream to your logging and monitoring stacks. A replay service stores inputs and tool responses.

- Guardrails: a policy layer checks content and action thresholds; a human review step handles sensitive operations.

This layout scales from a single agent to a fleet. It also sets obvious boundaries for security reviews.

The 90-day playbook to pilot and ship on AWS

The fastest teams do not start by wiring everything. They pick one painful workflow, run it to ground, and then generalize. Here is a practical plan that assumes you want a production deployment at day 90.

Days 0 to 30: pick your wedge and stand up the basics

- Choose a workflow with clear business value and limited blast radius. Examples: environment provisioning for internal developers, knowledge search that cites sources, or customer-support summarization for internal use.

- Stand up AgentCore preview in a development environment. Configure one model to start, and commit prompts, policies, and tool manifests to version control.

- Connect two MCP servers: the AWS Knowledge MCP server for grounded guidance, and one operational tool relevant to your workflow. Keep scope tight.

- Implement identity with short-lived credentials per session and least-privilege policies bound to tool functions.

- Build tracing from day one. Emit a stable request identifier and trace plan steps, tool calls, and model invocations. Do not defer this to week eight.

- Construct a minimal sandbox for any browsing or code execution. Default deny for egress. Document the allowlist.

- Define success metrics. For example, first-contact resolution rate, average handling time, percent of steps executed autonomously, and p95 tool latency.

- Run a tabletop risk review. List failure modes, escalation paths, and rollback procedures.

Deliverable: a working development deployment that demonstrates value on a single workflow and produces traces and metrics you can show to security and operations.

Days 31 to 60: expand coverage and prove controls

- Add two more tools through MCP. Favor tools from the AWS Marketplace AI Agents and Tools category so procurement and billing stay simple.

- Introduce human-in-the-loop approvals for sensitive actions. Capture approver identity in the trace.

- Build a deterministic replay harness. Use it to reproduce the top five failures from the first month and fix them.

- Establish service level objectives. Example: p95 end-to-end latency under five seconds, tool call success rate above 99 percent, and an autonomous completion rate target that increases weekly.

- Run a red-team exercise. Attempt prompt injection, cross-tool data leakage, and sandbox escapes. Patch the policies and sandboxes accordingly.

- Model cost and load envelopes. Set concurrency limits and backoff strategies. Create circuit breakers that degrade gracefully.

Deliverable: a controlled beta with a small set of real users, clear SLOs, documented risks, and a replayed test suite that catches regressions.

Days 61 to 90: harden and launch to production

- Productionize identity. Integrate with your identity provider, enforce per-tool scopes, and roll keys on a schedule. Add alerting for unusual privilege escalations.

- Expand observability. Add dashboards for p95 latencies by tool and by schema version, retry rates, and approval rates. Track the top five tool errors and assign owners.

- Harden the sandbox. Cap CPU and memory, enforce stricter egress, and add artifact signing and retention policies.

- Write runbooks. For each top error, document symptoms, probable causes, and steps to remediate. Include rollback checklists.

- Define change management. Any new tool function or prompt change goes through a pull request with automated checks and a replay run.

- Pilot a second use case that reuses the same tool plane. The goal is to prove that the architecture scales by reconfiguration, not by reintegration.

- Execute launch gates with security, compliance, and operations. Agree on success criteria for the first 30 days of production.

Deliverable: a production deployment with auditable controls, a second use case in progress, and a backlog prioritized by impact and risk.

What to buy and what to build

- Buy: the tool plane where the market is crowded and the problem is not your differentiator. Discovery through the Marketplace simplifies procurement and renewals. MCP support should be a must-have on any vendor shortlist.

- Build: the prompts, policies, and plans that encode your institutional know-how. That is where advantage accumulates. Keep this logic portable by treating the model as a dependency, not the center of your system.

- Customize: evaluation harnesses and replay systems. Most teams need bespoke checks that reflect their risk and domain.

Risk checklist you can copy into your tracker

- Data leakage across tools: verify that tools that should not share context never receive the same session token. Add an automated policy test.

- Prompt injection through browsing: sanitize page content, strip scripts, and tag untrusted input. Block cross-origin fetches from the sandbox.

- Over-permissioned tools: implement a linter that flags any tool manifest whose scope exceeds the functions used by current plans.

- Unbounded retries: set per-tool retry budgets and surface them in dashboards. Retries should count against SLOs.

- Supply chain drift: lock tool schemas to versions. New versions require a pull request and a replay run before promotion.

What to watch next

Expect more first-party MCP servers for AWS services and deeper Marketplace catalogs that label MCP compatibility and deployment paths. Also expect richer AgentCore policy and observability features, because that is where enterprises spend time today. The more that becomes out of the box, the fewer custom patches your teams will need.

The bottom line

The pieces that held agents back from production were always the same: connectors that broke under change, governance bolted on after the fact, and evaluation tools that arrived only at the end. AWS’s July to October 2025 releases point to a different pattern. MCP becomes the lingua franca for tools. AgentCore turns agent operations into a first-class platform. The Marketplace gives you a supply chain that security and procurement already understand. If you adopt MCP-first tools, a single agent runtime, and a simple set of governance patterns, you can move from proof of concept to production in a quarter and stay portable enough to make deliberate choices later. The stack is settling. Teams that learn to use it now will ship faster and with fewer regrets.