From Demos to Deployments: Claude 4.5 and the Agent SDK

Anthropic’s late September launch of Claude Sonnet 4.5 and a production Agent SDK marks a real turn for agentic coding and computer use. Long-horizon reliability, checkpoints, and parallel tools now let teams ship, not just demo.

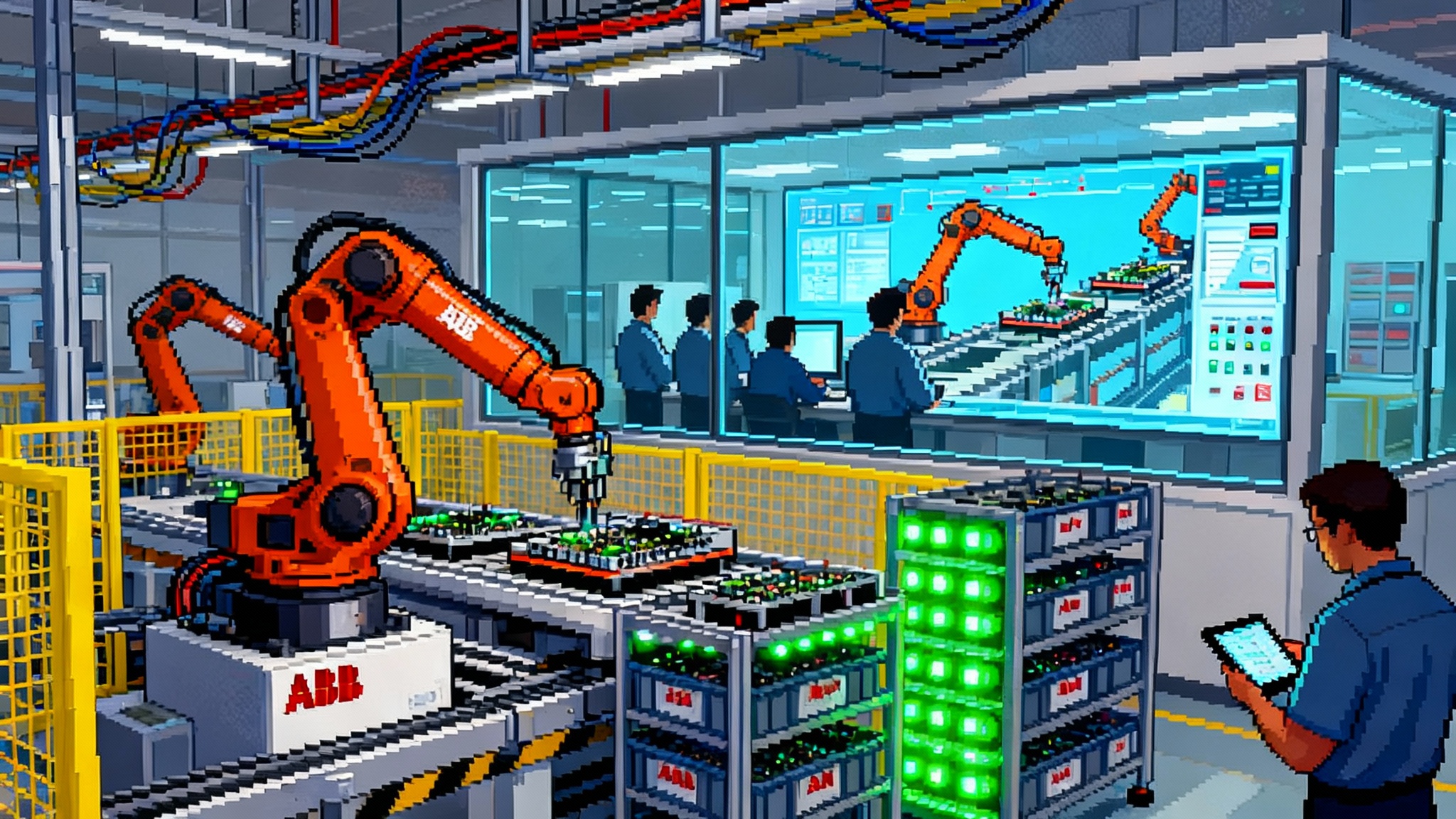

The week agents got real

In late September 2025, Anthropic shipped something that felt different. The company announced Claude Sonnet 4.5 and, crucially, released the Claude Agent SDK as a production software development kit. In Anthropic’s own words, the model leads on real computer-use benchmarks and can maintain focus on complex, multi-step tasks for more than 30 hours. The release also highlights checkpoints in Claude Code, memory and context editing features, and a permissioned path for running tools. Put plainly, the flashy agent demos we watched all year are crossing into deployable systems. The official announcement, Anthropic’s Sonnet 4.5 release, also calls out parallel tool execution and presents the Agent SDK as the same infrastructure that powers Anthropic’s own products, not a separate experimental stack.

This is not a model-only story. It is the pairing of a frontier model with a harness that bakes in durability, permissions, and session control. That combination changes what engineering teams can attempt with agents that operate browsers, terminals, and files for hours instead of minutes. For context on how browser agents are evolving, see how Gemini 2.5 browser agents rethink the bottlenecks at the UI layer.

What changed under the hood

Three capabilities matter most for anyone attempting production agents that touch live systems.

1) Long-horizon reliability

Agents do not fail only because they are bad at code. They fail because they forget, drift, or get stuck during long tasks, like database migrations that span tens of thousands of records or refactors across large repositories. Sonnet 4.5 arrives with two practical supports. First, Anthropic is encouraging extended thinking budgets that preserve coherence over many steps. Second, the platform adds memory and context editing so agents can prune, reframe, or reload what matters without losing the thread. The result is not magic. It is a reduction in error accumulation across time. Think of it like marathon pacing for models: fewer surges, fewer stalls, steadier progress.
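Anthropic’s memory and context editing features live inside its own platform, and the sketch below does not use them. It shows only the underlying idea under simple assumptions. Some context (the plan, hard constraints) is pinned and never dropped. Older tool output is pruned first when the transcript exceeds a token budget. The `ContextItem` type, the `prune_context` helper, and the four-characters-per-token estimate are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    text: str
    pinned: bool = False  # e.g. the task plan or hard constraints that must never be dropped


def estimate_tokens(text: str) -> int:
    # Crude assumption: roughly four characters per token. Use a real tokenizer in practice.
    return max(1, len(text) // 4)


def prune_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Keep all pinned items, then as many of the newest unpinned items as fit in the budget."""
    pinned = [item for item in items if item.pinned]
    used = sum(estimate_tokens(item.text) for item in pinned)
    keep = {id(item) for item in pinned}
    # Walk unpinned items from newest to oldest and stop at the first one that would overflow.
    for item in reversed([i for i in items if not i.pinned]):
        cost = estimate_tokens(item.text)
        if used + cost > budget:
            break
        keep.add(id(item))
        used += cost
    # Preserve the original order so the pruned transcript still reads chronologically.
    return [item for item in items if id(item) in keep]
```

The sketch stops at the first item that overflows, so the retained history is always a contiguous recent window plus the pinned items. That contiguity is what keeps the agent from losing the thread.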

Concrete example: an internal tools agent that must read a legacy monolith, extract a set of API endpoints, generate request validators, update tests, and open a pull request. In previous generations, the agent would often wander off after the second or third subtask. With a durable session and explicit memory control, it can checkpoint its plan, complete one phase, and move cleanly to the next.
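The “checkpoint the plan, finish a phase, move on” loop can be sketched as a small resumable runner. The state file records completed phases after each one, so a restarted worker skips straight to the first unfinished phase. The phase names mirror the example above. The `run_phases` function and the JSON state format are hypothetical and are not part of any Anthropic API.

```python
import json
from pathlib import Path
from typing import Callable

PHASES = ["read_monolith", "extract_endpoints", "generate_validators",
          "update_tests", "open_pull_request"]


def run_phases(state_file: Path, handlers: dict[str, Callable[[], None]]) -> list[str]:
    """Run each phase at most once across restarts; return the phases run in this call."""
    state = json.loads(state_file.read_text()) if state_file.exists() else {"done": []}
    ran = []
    for phase in PHASES:
        if phase in state["done"]:
            continue  # completed in an earlier run, so skip it on resume
        handlers[phase]()  # an exception here leaves the checkpoint at the last finished phase
        state["done"].append(phase)
        state_file.write_text(json.dumps(state))  # checkpoint after every phase
        ran.append(phase)
    return ran
```

If `update_tests` crashes, the next call resumes at `update_tests` rather than re-reading the monolith.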

2) Checkpointing that actually helps recovery

Checkpoints are not new as a concept. What is new is having them inside the same harness as the agent, with native awareness of files, diffs, and tool history. In Claude Code, checkpoints serve as save points that let you roll back quickly to a known good state. They behave more like version control than a debug log. In an agent run, that means you can let the model explore several file edits, then force a return to the last green state if tests fail. The key is speed and scope. The checkpoint lives next to the agent’s tools rather than in an external snapshot, so rollback takes seconds, not minutes, and covers exactly the files the model edited. One caveat: Claude Code’s checkpoints track edits made through its own file-editing tools, not side effects of arbitrary shell commands, so keep Git in the loop for anything a bash step touches.
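Claude Code’s checkpoint internals are not described here. The sketch below is a deliberately naive stand-in that shows the “save, experiment, restore the last green state” contract. It takes a full copy of a working directory per checkpoint, which is only reasonable for small sandboxes. The `Checkpointer` class is hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


class Checkpointer:
    """Full-copy snapshots of a working directory (hypothetical helper, small sandboxes only)."""

    def __init__(self, workdir: Path):
        self.workdir = workdir
        self.snapshots: list[Path] = []

    def save(self) -> int:
        # Snapshot directories are left on disk; a real system would prune or use Git instead.
        snap_root = Path(tempfile.mkdtemp(prefix="ckpt-"))
        target = snap_root / "tree"  # copytree requires a target that does not exist yet
        shutil.copytree(self.workdir, target)
        self.snapshots.append(target)
        return len(self.snapshots) - 1

    def restore(self, index: int) -> None:
        # Replace the working tree wholesale, which also removes files created after the save.
        shutil.rmtree(self.workdir)
        shutil.copytree(self.snapshots[index], self.workdir)
```

In a run loop, call `save()` after tests pass and `restore(green)` when they fail.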

3) Parallel tool execution

Agents typically wait their turn. Run a command, wait. Run another, wait. Parallel tool execution changes the cadence. Instead of serial steps, the agent can run multiple shell commands at once, or stage several fetch-and-parse operations in the browser while it compiles in the background. That shortens wall clock time and increases the chance that long jobs finish within a human workday. The tradeoff is classic concurrency. You must handle race conditions and write to isolated paths. With the SDK’s permissions and working-directory controls, the system can keep those parallel tasks from stepping on each other.
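The concurrency tradeoff above comes down to giving each parallel task its own write path and bounding how many run at once. The sketch below does this with the standard library only. It is not how the Agent SDK schedules parallel tool calls. The `run_isolated` and `run_parallel` helpers are hypothetical.

```python
import subprocess
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def run_isolated(name: str, cmd: list[str], root: Path, timeout: float = 60.0) -> tuple[str, int, str]:
    """Run one command in its own subdirectory so parallel tasks never share a write path."""
    workdir = root / name
    workdir.mkdir(parents=True, exist_ok=False)  # a name collision fails loudly instead of sharing files
    # subprocess.run raises TimeoutExpired if the command exceeds its per-call timeout.
    proc = subprocess.run(cmd, cwd=workdir, capture_output=True, text=True, timeout=timeout)
    return name, proc.returncode, proc.stdout


def run_parallel(tasks: dict[str, list[str]], max_workers: int = 4) -> dict[str, tuple[int, str]]:
    """Fan tasks out across a bounded pool; max_workers caps concurrent subprocesses."""
    root = Path(tempfile.mkdtemp(prefix="agent-par-"))
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(run_isolated, name, cmd, root) for name, cmd in tasks.items()]
        results = [f.result() for f in futures]
    return {name: (code, out) for name, code, out in results}
```

Two tasks that both write `out.txt` do not collide, because each writes inside its own subdirectory.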

Put these together and the picture is clear. An agent can plan for hours, checkpoint progress, and use parallel tools to compress waiting time. The output is not a demo clip. It is a merged pull request, a populated spreadsheet, or a hardened configuration.

From demo stacks to deployable systems

A year ago, the default architecture for a real computer use agent looked like a scrapbook of parts. You might wire up a headless browser, a stack of wrappers for tool calls, a custom memory store, a permission dialog built with webhooks, and a scheduler that tried to keep the whole contraption alive. It worked, sometimes. But every piece had a different failure mode. Browser selectors broke. Memory overflowed. The retry logic masked real errors. And half your time went into glue code rather than user value.

Anthropic’s approach is different in two ways:

- It is SDK-centric. The same harness that powers Claude Code comes to you as a package. That brings session management, tool permissioning, file scopes, context compaction, and error handling into one place. You still need to configure it, but you do not have to reinvent it.

- It is product-backed. The company is shipping and running agents for its own users. That tends to harden edges. Bugs get found the hard way. Guardrails exist because a real system needed them.

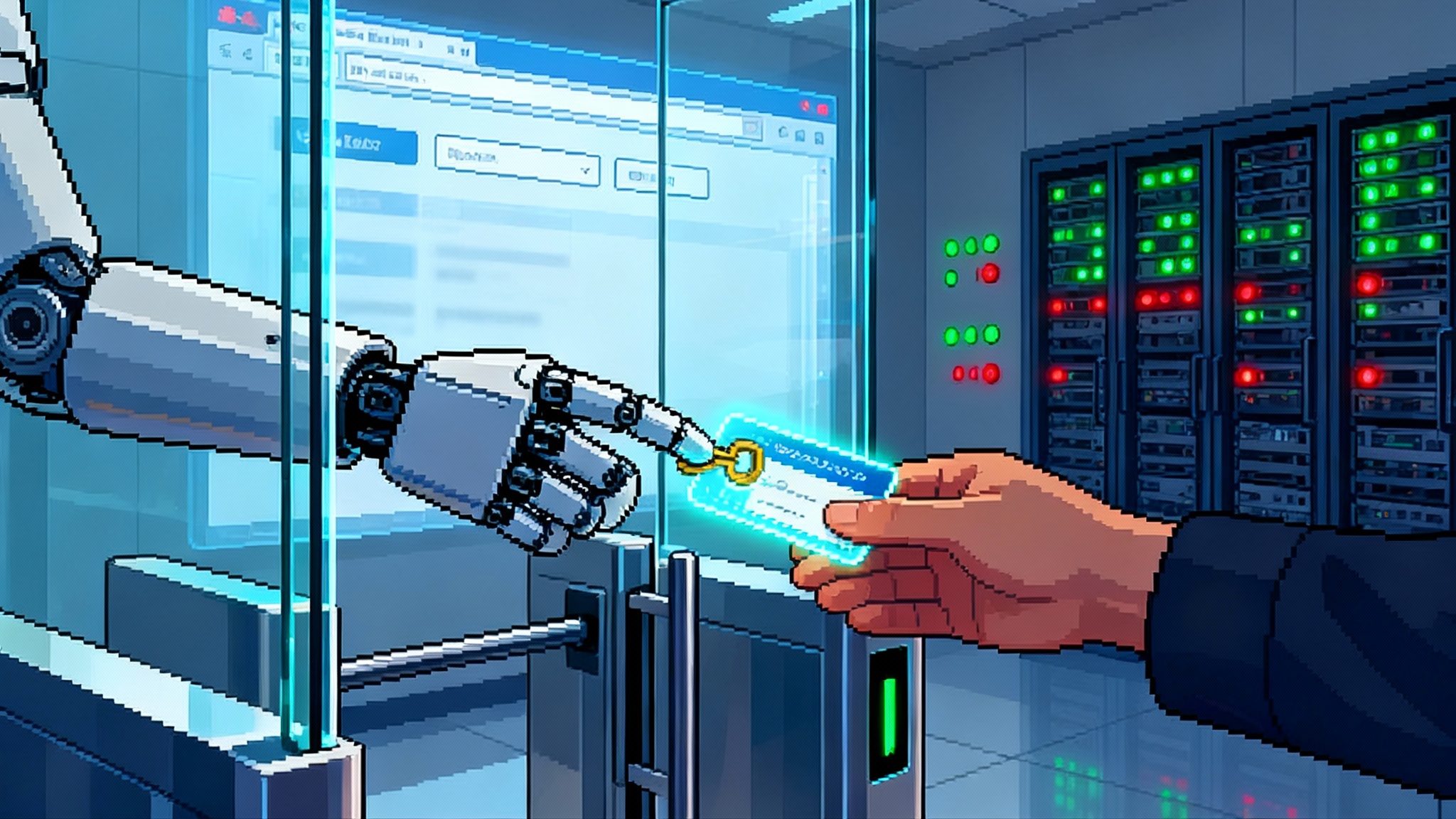

The point is not that other frameworks cannot do this. It is that, with Sonnet 4.5 and the Agent SDK, a coherent default exists. You can get to a minimal viable agent without building a framework first. If you are designing the safety plane, the credential broker layer is a useful mental model for controlling secrets and permissions.

A practical build path your team can ship

If your mandate is to produce something useful in a quarter, here is a concrete path that leans on the new pieces.

1) Choose a tractable, bounded job

- Target tasks with durable artifacts and clear pass or fail signals. Examples: refactor a library and pass tests, reconcile revenue in a spreadsheet with traceable diffs, triage bug reports into labeled issues with proposed fixes.

- Avoid open-ended browsing as a first milestone. Keep the surface area small so that you can observe and improve the loop.

2) Define the agent contract

- Inputs: repository path, branch, data file locations, credentials to a sandbox, time budget.

- Outputs: pull request with tests, spreadsheet with change log, report with machine-readable findings.

- Allowed tools: bash, file read and write within a working directory, browser with domain allowlist, test runner, code formatter.
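Writing the contract down as data makes it reviewable and checkable before a run starts. The sketch below is one way to encode it. `AgentContract` and its field names are hypothetical, not an SDK type. Sandbox credentials are left out on purpose, since they belong to the secret-handling path rather than the contract object.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class AgentContract:
    """Hypothetical contract record; field names are illustrative, not an Agent SDK type."""
    repo_path: Path
    branch: str
    time_budget_minutes: int
    outputs: tuple[str, ...] = ("pull_request", "test_report")
    allowed_tools: frozenset[str] = frozenset(
        {"bash", "read", "write", "browser", "test_runner", "formatter"})
    allowed_domains: frozenset[str] = frozenset()

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means the contract is usable."""
        problems = []
        if self.time_budget_minutes <= 0:
            problems.append("time budget must be positive")
        if "browser" in self.allowed_tools and not self.allowed_domains:
            problems.append("browser enabled without a domain allowlist")
        if not self.outputs:
            problems.append("contract must name at least one output artifact")
        return problems
```

Refusing to start when `validate()` returns anything keeps “browser with no allowlist” from slipping into production by default.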

3) Turn on guardrails by default

- Use a strict permission mode that requires the agent to ask for elevation before high-impact actions such as deleting files, pushing branches, or making network requests outside the allowlist.

- Set a working directory and a scratch directory. Block writes outside those paths.

- Enforce timeouts per tool call and a total session budget. When a run exhausts its budget, resume it in a fresh run from the last checkpoint instead of starting over.

- Mask and rotate secrets. Do not pass long-lived tokens into the session. Route secrets through a short-lived proxy and log every use.
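The path, domain, and elevation rules above can be enforced by a small policy object that every tool call passes through. This is a sketch under assumptions. The `Guardrails` class, the `PermissionDenied` exception, and the action names in `HIGH_IMPACT` are hypothetical, and the Agent SDK’s own permission modes are configured separately. Timeouts and secret handling are out of scope here.

```python
from pathlib import Path
from urllib.parse import urlparse

HIGH_IMPACT = {"delete_file", "git_push"}  # hypothetical action names that need elevation


class PermissionDenied(Exception):
    pass


class Guardrails:
    def __init__(self, workdir: Path, scratch: Path, allowed_domains: set[str]):
        self.roots = [workdir.resolve(), scratch.resolve()]
        self.allowed_domains = allowed_domains

    def check_write(self, path: Path) -> None:
        # resolve() collapses "../" so escapes are caught; is_relative_to needs Python 3.9+.
        target = path.resolve()
        if not any(target.is_relative_to(root) for root in self.roots):
            raise PermissionDenied(f"write outside sandbox: {target}")

    def check_network(self, url: str) -> None:
        host = urlparse(url).hostname or ""
        if host not in self.allowed_domains:
            raise PermissionDenied(f"domain not on allowlist: {host}")

    def check_action(self, action: str, elevated: bool) -> None:
        if action in HIGH_IMPACT and not elevated:
            raise PermissionDenied(f"{action} requires explicit elevation")
```

Exact host matching is the strict choice. Add subdomain rules only deliberately.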

4) Put a human in the loop in the right spots

- Require explicit approval on three events: crossing a checkpoint, escalating permissions, and publishing artifacts. Create a one-click diff view for each. The core rule: never ask a human to read a wall of text. Ask them to confirm a specific change.

- When the agent proposes a plan, have it produce a checklist. The reviewer checks boxes and adds notes. The model revises the plan. This reduces ambiguity without burying the operator.
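An approval gate for the three events can enforce the “confirm a specific change, not a wall of text” rule mechanically. In this sketch, the `ask` callback stands in for whatever UI shows the one-click diff. The `Event`, `ApprovalRequest`, and `gate` names and the 120-character summary limit are hypothetical choices.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Event(Enum):
    CROSS_CHECKPOINT = "cross_checkpoint"
    ESCALATE_PERMISSION = "escalate_permission"
    PUBLISH_ARTIFACT = "publish_artifact"


@dataclass
class ApprovalRequest:
    event: Event
    summary: str  # one short line, e.g. "push branch agent/validators"
    diff: str     # the specific change the reviewer is asked to confirm


def gate(request: ApprovalRequest, ask: Callable[[ApprovalRequest], bool], audit_log: list) -> bool:
    """Ask a human to confirm one specific change and record the decision."""
    # Refuse to send a wall of text: require a short summary and a concrete diff.
    if len(request.summary) > 120 or not request.diff.strip():
        raise ValueError("approval needs a one-line summary and a non-empty diff")
    approved = bool(ask(request))
    audit_log.append((request.event.value, request.summary, approved))
    return approved
```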

5) Use live-fire evaluations, not just offline scores

- Replay real tasks from your logs. Seed the agent with the true inputs from last week’s incidents or last month’s reconciliation backlog. Measure end-to-end success and wall time.

- Add chaos tests. Change a file path, flip a feature flag, rename a column. Agents that tolerate small shifts are the ones that survive in production.

- Red team for prompt injection and tool abuse. Paste in a poisoned readme or a malicious web page and verify the agent refuses exfiltration, code execution, or policy violations.
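A chaos test can be as simple as replaying recorded tasks through a set of small perturbations and reporting the pass rate. In the sketch below, the task shape, the two perturbations, and the `agent` callable (which returns whether the run succeeded) are all hypothetical placeholders for your own replay harness.

```python
import random
from typing import Callable

Task = dict  # illustrative task input, e.g. {"columns": [...], "path": "..."}


def rename_column(task: Task, rng: random.Random) -> Task:
    cols = list(task["columns"])
    i = rng.randrange(len(cols))
    cols[i] = cols[i] + "_v2"
    return {**task, "columns": cols}


def move_path(task: Task, rng: random.Random) -> Task:
    # rng is unused here but kept so every perturbation shares one signature.
    return {**task, "path": "moved/" + task["path"]}


PERTURBATIONS = [rename_column, move_path]


def chaos_success_rate(agent: Callable[[Task], bool], tasks: list, seed: int = 0) -> float:
    """Replay each recorded task once per perturbation and report the pass rate."""
    rng = random.Random(seed)  # seeded so a failing perturbation can be replayed exactly
    trials = passed = 0
    for task in tasks:
        for perturb in PERTURBATIONS:
            trials += 1
            passed += bool(agent(perturb(task, rng)))
    return passed / trials if trials else 0.0
```

An agent that hard-codes the file path scores 0.5 here: it survives the renamed column but not the moved file.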

6) Instrument the economics from day one

- Budget tokens per session and per phase. Sonnet 4.5 keeps pricing parity with the prior Sonnet tier ($3 per million input tokens and $15 per million output tokens). Cap the thinking budget and require a checkpoint before raising it.

- Track the cost of repeats. A failed step that re-runs five times can dominate your bill. Use checkpoints to restart from the last green state instead of replaying the whole job.

- Exploit parallel tools to reduce wall time, then profile token usage to make sure you did not just create expensive contention.
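A little arithmetic shows why repeats dominate the bill and what restarting from a checkpoint saves. The phase costs below are made-up illustrative numbers in cents. The model also assumes a failed attempt costs as much as a successful one.

```python
def total_cost_cents(phase_costs: list[int], failing_phase: int, retries: int, from_checkpoint: bool) -> int:
    """Spend for a job whose `failing_phase` fails `retries` times before it succeeds.

    With a checkpoint, each retry reruns only the failing phase.
    Without one, each retry replays every phase up to and including the failing one.
    """
    base = sum(phase_costs)  # the eventual successful pass through every phase
    if from_checkpoint:
        per_retry = phase_costs[failing_phase]
    else:
        per_retry = sum(phase_costs[: failing_phase + 1])
    return base + retries * per_retry


# Hypothetical numbers: four phases costing 40, 40, 40 and 80 cents; the last one fails five times.
phases = [40, 40, 40, 80]
print(total_cost_cents(phases, 3, 5, from_checkpoint=True))   # 600 cents
print(total_cost_cents(phases, 3, 5, from_checkpoint=False))  # 1200 cents
```

With these numbers, replaying the whole job doubles the spend compared with restarting from the last green state.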

7) Land the build with the SDK

- Start with a minimal configuration of tools and permissions. Add only what your target task needs. Keep a short list of allowed domains and commands.

- Use the SDK’s sessions to persist context across long runs. Resume from a checkpoint when a worker restarts or a deployment rolls.

- When you are ready to code, start scaffolding from the Claude Agent SDK overview in Anthropic’s documentation.

Why the UI layer is finally viable

The phrase “real computer use” often gets reduced to cursor-moving demos. The reality is more serious. An agent that navigates a browser or application window must deal with brittle selectors, inconsistent page load behavior, and pop-ups that change every week. Two shifts make this layer more dependable now:

- Better supervision signals. Anthropic calls out strong results on OSWorld, a benchmark that evaluates real computer tasks, where Sonnet 4.5 leads at 61.4%. Benchmarks are not production, but they encode skills like finding the right button, following multi-page flows, and recovering when an unexpected dialog appears. That maps to the work many teams need. For a parallel view of runtime choices, see why Comet turns the browser into an agent runtime.

- Harness-level permissions. Running a browser as root on a developer laptop is a good way to have a bad time. The Agent SDK’s permissioning, working directories, and domain allowlists shrink the blast radius. Your agent can fill a spreadsheet in a sandbox while your production environment stays untouched.

A design tip: teach your agent to explain its UI assumptions. When it clicks a button, have it log the text label it expected and the selector it used. When the UI shifts, the diff tells you what broke and whether you can add a fallback rule.
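The design tip above amounts to a structured click log. Each record pairs the label the agent expected with the selector it used and the label it actually found, so UI drift shows up as a filterable diff. The record fields and helper names are hypothetical. `observed_label` would come from whatever browser automation layer you use.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class ClickRecord:
    step: str
    expected_label: str            # the text the agent believed it was clicking
    selector: str                  # the selector it actually used
    observed_label: Optional[str]  # text found at click time, or None if nothing matched


def log_click(log: list, step: str, expected_label: str, selector: str,
              observed_label: Optional[str]) -> None:
    log.append(asdict(ClickRecord(step, expected_label, selector, observed_label)))


def drift_report(log: list) -> list:
    """Clicks where the UI no longer matched the agent's assumption."""
    return [r for r in log if r["observed_label"] != r["expected_label"]]
```

A record where a cookie banner answered instead of the confirm button points you straight at the fallback rule to add.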

Patterns to watch in the next 3 to 6 months

- Memory as a product surface. Project-scoped memory files and team-level instructions will become first-class artifacts, reviewed like code. Expect a memory review step in pull requests alongside tests and lint.

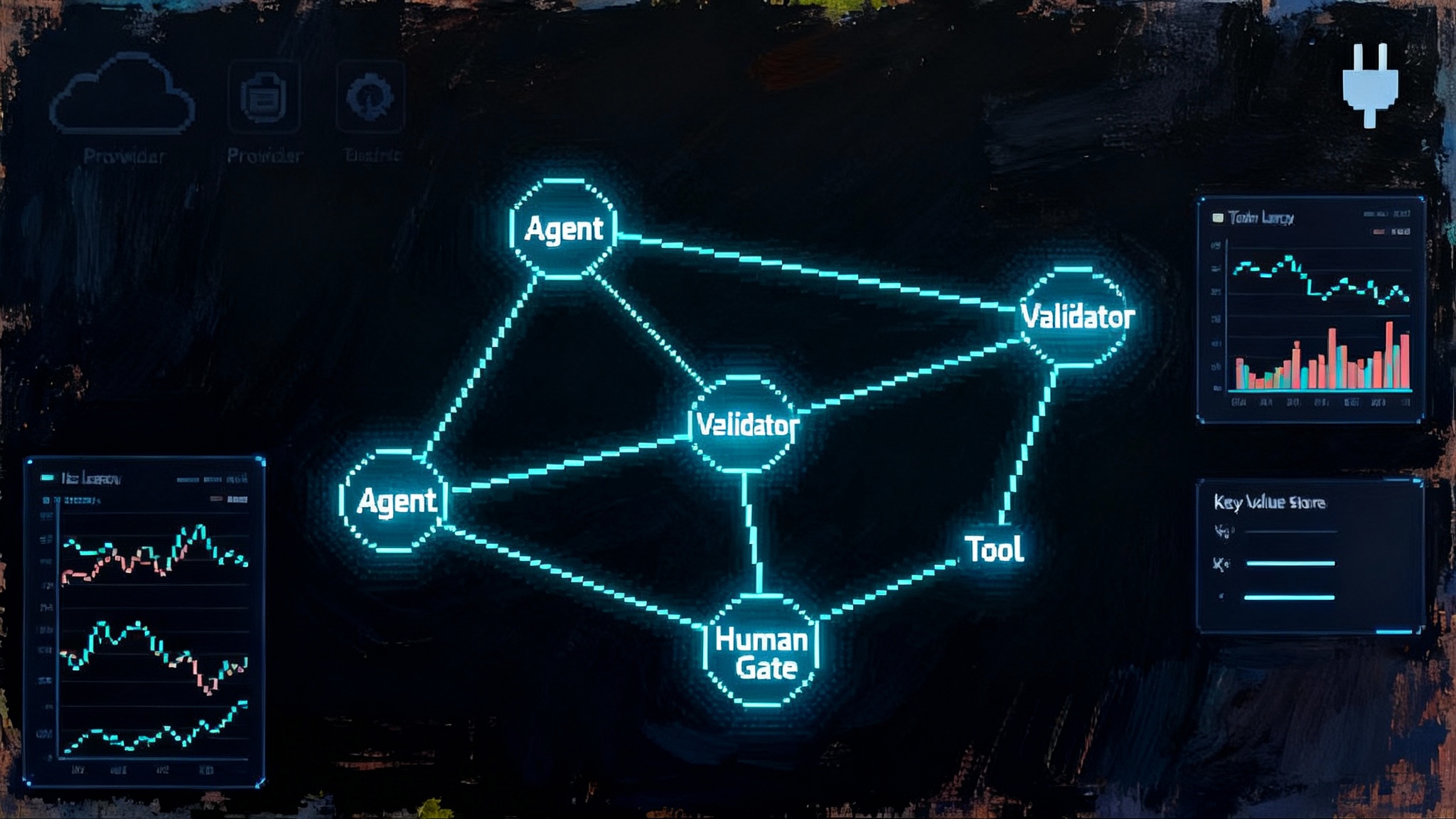

- Subagents with explicit charters. Instead of one mega-agent, teams will compose specialists that hand off via checklists. A test agent writes and runs tests. A refactor agent changes code. A docs agent updates guides. The orchestrator watches checkpoints and owns the ship-or-roll-back decision.

- Parallelism by design, not accident. Agents will schedule speculative steps the way modern processors predict branches. Run three approaches to a flaky test in parallel, then keep the winner and kill the rest. The harness will make that safe by isolating writes and gating merges.

- Live-fire evals replace static leaderboards. Golden paths will include adversarial inputs, injected pop-ups, and slow networks. Teams will publish operational reliability numbers like success rate under chaos and cost per successful run, not only benchmark scores.

- Guardrails become contracts. Permission prompts will be templated, logged, and audited. Security teams will define per-tool policies in code and block changes without review. Approvals will be short, structured, and attached to artifacts.

- Browser and desktop agents join the build pipeline. Expect agents that cut release notes from merged diffs, fill compliance spreadsheets from telemetry, and validate pricing pages end to end after every deploy. Less sizzle, more chores done correctly.
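The “run three approaches to a flaky test, keep the winner” pattern from the list above can be sketched with a thread pool. The sketch keeps the first attempt that passes, which is a simplification: a real harness might wait for all attempts and pick the best. Python threads cannot be killed, so only attempts still queued are actually cancelled. That limitation is why speculative attempts should write to isolated paths. The `first_passing` helper and the attempt names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Optional


def first_passing(attempts: dict[str, Callable[[], bool]], max_workers: int = 3) -> Optional[str]:
    """Run competing fixes concurrently and return the name of the first one that passes."""
    pool = ThreadPoolExecutor(max_workers=max_workers)
    futures = {pool.submit(fn): name for name, fn in attempts.items()}
    winner = None
    try:
        for fut in as_completed(futures):
            # An attempt that raised counts as a loss, not a crash of the whole run.
            if fut.exception() is None and fut.result():
                winner = futures[fut]
                break
    finally:
        # Cancel attempts still queued (Python 3.9+). Threads already running cannot be
        # killed; they finish in the background, so their writes must be isolated.
        pool.shutdown(wait=False, cancel_futures=True)
    return winner
```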

The economics of long-running agents

The price tag is visible, and the hidden costs are now more manageable.

- Token spend. With pricing parity to prior Sonnet, you can set a per-task budget and enforce it with checkpoints. The point of checkpointing is to keep repeats small. Restart the last step, not the whole journey.

- Wall time and compute. Parallel tools shrink the clock but can increase transient resource use. That is fine if you contain it. Run in an isolated workspace, cap concurrent subprocesses, and throttle network calls to avoid getting rate limited by external services.

- Human review time. The biggest savings often show up here. If the agent produces clean diffs, crisp checklists, and artifacts that slot into your existing tools, a reviewer can approve in minutes. That is the difference between a toy that needs constant babysitting and a colleague that tees up good work.

- Failure handling. Every minute you do not spend manually unwinding a broken run is value. Checkpoints, resumable sessions, and clear logs move you from detective work to surgical fixes. That speed shows up in the next sprint’s capacity.

A practical budgeting pattern: set a per-ticket budget in dollars, a maximum session length in minutes, and a retry limit. When any limit triggers, force a human decision at a checkpoint. That keeps costs predictable while giving the agent room to do useful work.
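The three-limit pattern fits in a small guard object that the run loop consults after every step. This is a sketch. `RunBudget` and its fields are hypothetical. Dollar figures would come from your own token accounting, and the optional `now` argument exists only so the clock can be injected in tests.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RunBudget:
    max_dollars: float
    max_minutes: float
    max_retries: int
    spent_dollars: float = 0.0
    retries: int = 0
    started: float = field(default_factory=time.monotonic)

    def record(self, dollars: float, retried: bool = False) -> None:
        self.spent_dollars += dollars
        self.retries += int(retried)

    def tripped(self, now: Optional[float] = None) -> Optional[str]:
        """Return the first limit exceeded, or None while the run may continue."""
        current = time.monotonic() if now is None else now
        elapsed_minutes = (current - self.started) / 60
        if self.spent_dollars >= self.max_dollars:
            return "dollar budget"
        if elapsed_minutes >= self.max_minutes:
            return "session length"
        if self.retries > self.max_retries:
            return "retry limit"
        return None
```

When `tripped()` returns a reason, pause at the last checkpoint and show that reason to the human who decides whether to continue.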

What to build first

- Backlog burners. Give the agent labeled, repetitive work with clear pass or fail outcomes. Examples: migrate internal spreadsheets to a standardized schema, add missing tests to the top fifty untested modules, or normalize product metadata.

- Release hygiene. Ask the agent to read merged diffs, update changelogs, and validate the staging site with a browser run. Require approval before it touches public pages.

- Data reconciliation. Point it at invoices and bank exports, then have it produce a reconciled spreadsheet with a clear audit trail. Gate any system writes behind a human signer.

- Support triage. Let the agent tag tickets, propose fixes, and draft responses. Keep it inside your sandbox until a human approves the send.

Avoid starting with tasks that have blurry objectives or legal exposure. Do not begin with production database writes, open web browsing with no domain limits, or anything that creates financial obligations. Get the scaffolding right first.

The take-home for teams

The meaning of Anthropic’s late September releases is not that one model beat another on a score. It is that a model with strong coding and computer-use skills now ships with a harness mature enough for production work. Long-horizon reliability reduces drift. Checkpointing makes recovery cheap. Parallel tools compress wall time without giving up control. And the Agent SDK gives you a tested foundation instead of a pile of glue.

Build like this: pick a bounded job, define a contract, turn on guardrails, keep a human in the loop where it matters, and measure real-world outcomes with live-fire evals. If that sounds like the rest of modern software, that is the point. Agents just joined the real world. The next few months will belong to teams that treat them like products, not props.

For deeper comparisons across enterprise stacks, see how AWS AgentCore and MCP are shaping platform choices alongside Anthropic’s move.