GitHub’s Copilot Agent Goes GA, PR-Native Coding Arrives

GitHub’s Copilot coding agent is now generally available with pull-request-native automation, enterprise policies, and Actions-powered sandboxes. Use this 30‑day rollout plan to deploy it safely and see what it means for 2026.

Breaking: repo‑native autonomous coding hits production

On September 25, 2025, GitHub moved its Copilot coding agent into general availability for paid Copilot plans. You can now delegate a task to an autonomous agent that lives inside your repository, works in the background in its own isolated environment, and reports back through a draft pull request. It reads issues, follows your repository rules, and responds to your comments on the pull request like a diligent teammate. See GitHub’s official announcement, “Copilot coding agent is now generally available.”

If you are tracking the broader shift to software run by agents, our analysis of the agent as the new desktop sets the stage for what is coming next.

Repo native, explained in human terms

Think of the agent as an embedded teammate who never leaves GitHub. It is not a bot that copies code to a mystery cloud. It works where your developers already work and plays by the same rules.

Repo native means the agent:

- Reads from your repository the same way a developer would. It uses the code, issues, pull requests, labels, and project boards you already maintain.

- Enters the codebase through a draft pull request. No silent pushes to main, no shadow branches. Every change goes through the same review gates your team set up.

- Obeys repository controls such as branch protections, required status checks, code owners, and rulesets. If a human could not merge without green checks, the agent cannot either.

- Uses organization policy to determine where it is allowed, who can delegate to it, and which capabilities it has. That is how you keep experiments from becoming production surprises.

The net effect is that the agent is a first-class participant in your software factory. It shows up in the very artifacts your developers trust and know how to review.

How pull request native automation actually works

Here is a step-by-step example. You assign the agent an issue titled “Add pagination to the search endpoint.”

- The agent launches a clean development environment for the repository, then clones code into that environment.

- It scans the codebase, the issue thread, and any repository instructions you have provided. If you maintain a CONTRIBUTING file or a docs folder with architecture notes, the agent uses them as context.

- It creates a draft pull request with a branch and an initial plan. The plan lists intended changes, tests to update, and commands it plans to run.

- Inside its environment the agent runs builds and tests. If a linter or formatter fails, it fixes and reruns until checks pass locally.

- It pushes commits to the draft pull request as it makes progress and documents what changed. That gives reviewers a narrative to follow.

- When ready, the agent requests review. If you leave review comments such as “Use cursor based pagination, not offset,” the agent reads those comments and updates the branch.

- The agent cannot merge unless your rules allow it. A human can control the final gate while the agent does the heavy lifting.

That is the core loop. Plan, change, run, document, request review, iterate. The important part is that the loop is visible. The pull request captures the plan and the code, and your existing checks enforce quality.
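The final gate in that loop can be sketched as a small check. This is a minimal illustration, not GitHub's implementation: `PullRequest`, `MergeRules`, and `merge_blockers` are hypothetical names, and the fields are a simplified stand-in for branch protection settings.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical, simplified view of a pull request's review state."""
    is_draft: bool
    check_results: dict[str, str]   # check name -> "success", "failure", or "pending"
    human_approvals: int = 0
    code_owner_approved: bool = False


@dataclass
class MergeRules:
    """Hypothetical stand-in for branch protection settings."""
    required_checks: list[str] = field(default_factory=list)
    min_human_approvals: int = 1
    require_code_owner: bool = True


def merge_blockers(pr: PullRequest, rules: MergeRules) -> list[str]:
    """Return the reasons a merge is blocked; an empty list means it may proceed."""
    blockers = []
    if pr.is_draft:
        blockers.append("pull request is still a draft")
    for name in rules.required_checks:
        # A missing or non-green required check blocks the merge.
        if pr.check_results.get(name) != "success":
            blockers.append(f"required check not green: {name}")
    if pr.human_approvals < rules.min_human_approvals:
        blockers.append("not enough human approvals")
    if rules.require_code_owner and not pr.code_owner_approved:
        blockers.append("code owner approval missing")
    return blockers
```

The same function applies whether the author is a person or the agent, which is the point: the gate depends on the state of the pull request, not on who wrote the commits.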

Why Actions powered sandboxes make this safe

Behind the scenes, the agent runs inside an environment that GitHub instantiates with GitHub Actions. Think of this as a disposable workshop. It is created on demand, furnished with the minimum tools and permissions required, and torn down when the job is complete. For details on limits like branch protections and who can approve, see how Copilot respects branch protections.

Safety levers you control:

- Ephemeral runners. Each run gets a fresh runner, which reduces the risk of cross-task contamination.

- Least-privilege permissions. Restrict the default token to read-only or to specific scopes. Allow creating branches and opening pull requests, but not force pushes or secret writes.

- Network policy and isolation. Control whether the sandbox can call external services. Lock down outbound traffic for sensitive repositories and allow egress only to approved hosts.

- Time to live limits. Jobs and environments expire, which reduces the window for misuse if credentials are exposed.

- Audit trails. Every job, step, and log is captured in the repository, so security teams can reconstruct who did what, when, and where.

If you already trust GitHub Actions to build, test, and release your software, then you already trust the core machinery that powers this agent.
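One way to reason about the least-privilege lever is to compare what a job requests against a ceiling you have agreed on. The sketch below is illustrative only: the scope names mirror GitHub Actions `permissions` keys, but `MAX_ALLOWED` and `excess_permissions` are assumptions made for this example, not a GitHub API.

```python
# Ordering of access levels, weakest first.
LEVELS = {"none": 0, "read": 1, "write": 2}

# Assumed ceiling for a pilot repository. The levels chosen here are
# illustrative; adjust them to your own policy.
MAX_ALLOWED = {
    "contents": "write",        # push commits to the agent's own branch
    "pull-requests": "write",   # open and update draft pull requests
    "issues": "read",           # read the issue thread for context
}


def excess_permissions(requested: dict[str, str]) -> dict[str, str]:
    """Return the requested scopes that exceed the allowed ceiling."""
    excess = {}
    for scope, level in requested.items():
        # Scopes missing from the ceiling default to no access at all.
        ceiling = MAX_ALLOWED.get(scope, "none")
        if LEVELS[level] > LEVELS[ceiling]:
            excess[scope] = level
    return excess
```

For example, `excess_permissions({"issues": "write"})` flags the `issues` scope because the ceiling above grants read access only. Defaulting unknown scopes to `none` keeps the check fail-closed.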

Admin policies are the safety rails

For organizations on Copilot Business and Copilot Enterprise, administrators decide whether the agent exists at all. Then there are finer-grained switches that matter just as much:

- Repository allowlists. Turn the agent on only for pilot repositories at first. You can enable by team or by repository path.

- Role based delegation. Allow only maintainers to delegate tasks in production repositories while letting all developers test in sandboxes.

- Capability scope. Permit the agent to open draft pull requests but require a human to upgrade to ready for review. Disallow pushes that bypass status checks.

- Model and data policy. Choose which language models are allowed, where prompts can be stored, and whether model logs can include proprietary code snippets.

- Cost and quota controls. Set daily or weekly limits on agent task hours per repository.

- Audit and export. Export logs to your security information and event management system and link agent actions to your identity provider.

These knobs make it possible to deploy the agent in a regulated environment without inventing new processes.

The 30 day rollout playbook

The fastest way to learn is to run a small but real experiment. Here is a practical plan that fits in 30 days.

Days 1 to 3: Frame the pilot

- Choose two pilot repositories. Prefer a service with good tests and a documentation site. Avoid your most critical production system on day one.

- Define success. Pick three measurable goals such as 20 percent reduction in average pull request cycle time for documentation changes, 10 percent increase in test coverage, and 30 percent of accepted agent pull requests requiring no revision by a human.

- Baseline your metrics. Capture current pull request cycle time, review time, failure rate of checks, and test coverage.

- Draft a risk register. List realistic failure modes such as over-permissioned tokens, flaky tests hiding regressions, or the agent touching files outside the intended path.
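Percentage goals like the ones above are easy to misread, so it helps to pin down the arithmetic before the pilot starts. The sketch below assumes every target is a relative change against the baseline; if your team means percentage points of coverage instead, compare the raw difference. `percent_change` and `goal_met` are hypothetical helper names.

```python
def percent_change(baseline: float, current: float) -> float:
    """Relative change from baseline, in percent (negative means a decrease)."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (current - baseline) / baseline * 100.0


def goal_met(baseline: float, current: float, target_pct: float) -> bool:
    """True when the observed change reaches the target.

    A negative target (for example -20) means "reduce by at least 20 percent";
    a positive target means "increase by at least that much".
    """
    change = percent_change(baseline, current)
    if target_pct < 0:
        return change <= target_pct
    return change >= target_pct
```

With a baseline median cycle time of 10 hours and a pilot median of 7.5 hours, `percent_change(10, 7.5)` is -25.0, so `goal_met(10, 7.5, -20)` returns `True`.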

Days 4 to 7: Put guardrails in place

- Policies. Enable the coding agent at the organization level but restrict it to the two pilot repositories. Allow only maintainers to delegate tasks.

- Permissions. Set the default token for the agent to read repository and write pull requests only. Disable repository administration and secret write permissions.

- Branch protections. Require status checks to pass before merge. Add code owners for critical paths. Require at least one human reviewer.

- Runner hygiene. Use ephemeral hosted runners by default. If you must use self hosted, isolate them on a network segment and rotate machine images weekly.

- PR templates. Add a template with checkboxes for scope, tests, and documentation. Include a short section where the agent will paste its plan.

- Labels. Create labels like agent suggested, agent updated, and agent needs input so you can filter and measure later.

Days 8 to 14: Start with low risk tasks

- Backlog grooming. Add an agentable label to issues that fit well, such as adding a missing unit test, updating a dependency with a known safe version range, or writing a README section.

- Daily cadence. Ask a maintainer to assign three issues per day to the agent.

- Reviews. Hold a 15-minute daily review focusing on two questions: Did the agent follow repository conventions? Did the agent overreach its scope?

- Measure as you go. Track acceptance rate, number of comments required before approval, and whether the agent’s plan matched the outcome.

Days 15 to 23: Increase complexity carefully

- Expand the task set to include small bug fixes and refactors that touch two or three files.

- Introduce guarded external calls. If a task requires calling a known service, allowlist only that host in the runner network policy.

- Add test gates. Require new or updated tests for any change that touches code paths that lack coverage.

- Stress the review flow. Ask the agent to respond to reviewer suggestions and verify it can iterate without creating new branches or noisy commits.
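The guarded external call rule comes down to an exact-match host decision. The sketch below models that decision in plain Python. It is not how the runner enforces network policy, and `ALLOWED_HOSTS` with its example host is a placeholder chosen for illustration.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist for one pilot task; replace with your approved hosts.
ALLOWED_HOSTS = {"api.internal.example.com"}


def egress_allowed(url: str, allowed_hosts: set[str] = ALLOWED_HOSTS) -> bool:
    """Allow only HTTPS requests whose host exactly matches an approved entry."""
    parts = urlsplit(url)
    # `hostname` is already lowercased and excludes any port or credentials.
    host = parts.hostname or ""
    return parts.scheme == "https" and host in allowed_hosts
```

Matching on the parsed hostname rather than on a substring of the URL matters: a request to `https://api.internal.example.com.evil.test/` contains the approved name but is correctly rejected.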

Days 24 to 30: Evaluate and decide to scale

- Compare metrics to baseline. Look at lead time for the pilot repositories versus the previous month. Check change failure rate and review throughput.

- Document failure cases and fixes. For example, if the agent touched a generated file, add a path filter to block that directory for agent tasks.

- Write the runbook. Include who can delegate, what to delegate, how to recover from failed jobs, and how to escalate security concerns.

- Present to leadership. Recommend one of three outcomes: keep the pilot running, expand to five more repositories, or pause and fix guardrails.

This plan keeps risk low, builds shared understanding, and produces data you can defend in a design review.
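A path filter of the kind mentioned in the evaluation step can be prototyped as a check over the files a pull request changed. This is a sketch under assumptions: the `ALLOWED` and `BLOCKED` patterns are invented for a hypothetical pilot repository, and the check would run in your own CI rather than as a built-in agent setting.

```python
from fnmatch import fnmatchcase

# Hypothetical policy for a pilot repository.
ALLOWED = ["src/*", "docs/*", "tests/*"]
BLOCKED = ["src/generated/*", "*.lock"]


def out_of_scope(changed_files: list[str]) -> list[str]:
    """Return changed paths that are blocked or fall outside the allowed globs."""
    violations = []
    for path in changed_files:
        # fnmatch's `*` also matches `/`, so "src/*" covers nested directories.
        blocked = any(fnmatchcase(path, pat) for pat in BLOCKED)
        allowed = any(fnmatchcase(path, pat) for pat in ALLOWED)
        if blocked or not allowed:
            violations.append(path)
    return violations
```

Blocked patterns win over allowed ones, so a generated file under `src/` is still reported even though `src/*` is in scope.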

The metrics that matter

Do not drown in dashboards. Focus on a small handful of signals that connect to software delivery and safety. For a deeper view on measurement and dependability, see our look at enterprise agent reliability benchmarks.

- Pull request cycle time. Median time from draft open to merge for agent initiated pull requests, broken down by task type.

- Review effort. Average number of review comments and the number of commits after the first human comment.

- Acceptance rate. Percentage of agent pull requests merged without human edits to the code.

- Change failure rate. Percentage of agent pull requests that trigger a rollback, hotfix, or follow up bug within seven days.

- Coverage delta. Net change in test coverage for files the agent touched.

- Policy hits. Count of actions blocked by branch protections, token scope, or path filters.

- Cost per merged change. Aggregate agent runtime minutes and model usage divided by the number of merges.
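Several of these signals can be computed from a simple export of pull request records. The sketch below is a starting point under stated assumptions: `AgentPR` and its fields are hypothetical, change failure rate is measured over merged pull requests, and cost counts runtime minutes only because model usage is billed differently across plans.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class AgentPR:
    """Hypothetical record exported for each agent-initiated pull request."""
    opened_at: datetime
    merged_at: Optional[datetime]   # None if still open or closed without merging
    human_code_edits: int           # commits authored by humans on the branch
    caused_failure: bool            # rollback, hotfix, or follow-up bug within 7 days
    runtime_minutes: float


def summarize(prs: list[AgentPR]) -> dict[str, float]:
    """Compute pilot metrics over a batch of agent pull requests."""
    merged = [p for p in prs if p.merged_at is not None]
    if not merged:
        raise ValueError("no merged pull requests to summarize")
    hours = [(p.merged_at - p.opened_at).total_seconds() / 3600 for p in merged]
    clean = sum(1 for p in merged if p.human_code_edits == 0)
    failures = sum(1 for p in merged if p.caused_failure)
    return {
        "median_cycle_hours": median(hours),
        # Share of all agent pull requests merged with no human code edits.
        "acceptance_rate_pct": 100 * clean / len(prs),
        "change_failure_rate_pct": 100 * failures / len(merged),
        # Runtime spent on every attempt, divided by the merges it produced.
        "runtime_minutes_per_merge": sum(p.runtime_minutes for p in prs) / len(merged),
    }
```

Dividing total runtime by merges rather than by attempts keeps abandoned pull requests visible in the cost figure instead of hiding them.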

Common pitfalls and how to avoid them

- Over-permissioned tokens. Start with the smallest scope that lets the agent open pull requests and push to its own branches. Only expand if you observe a real need.

- Flaky tests as blindfolds. The agent will believe your checks. Fix the flakiest tests in pilot repositories before you delegate anything nontrivial.

- Unbounded tasks. Write issues with clear acceptance criteria. Use labels to restrict scope, for example only files under src or only docs.

- Reviewer overload. Set a daily cap on agent initiated pull requests per repository. Pair the agent with the most relevant code owners by default.

- Silent environment drift. Version your build toolchain in the repository and pin Actions to specific versions so the agent sees the same world as your humans.
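Pinning can be checked mechanically. The sketch below scans workflow text for `uses:` references and reports any that are not pinned to a full 40-character commit SHA. Treating a full SHA as the bar is an assumption that is stricter than pinning to a version tag, and the scan is a plain text match rather than a YAML parser, so container image references and quoted values would need extra handling.

```python
import re

# Matches `uses: owner/repo[/path]@ref` lines in workflow YAML.
# Local actions such as `uses: ./path` have no `@ref` and are skipped.
USES_RE = re.compile(r"^\s*(?:-\s*)?uses:\s*([^\s@#]+)@([^\s#]+)", re.MULTILINE)
FULL_SHA_RE = re.compile(r"^[0-9a-f]{40}$")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return `action@ref` entries whose ref is not a full commit SHA."""
    found = []
    for action, ref in USES_RE.findall(workflow_text):
        if not FULL_SHA_RE.match(ref):
            found.append(f"{action}@{ref}")
    return found
```

Run over each file in `.github/workflows`, a non-empty result is a reasonable signal to fail a pilot repository's hygiene check before the agent is enabled.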

How repo embedded agents will reshape development in 2026

The biggest shift will not be in how code is typed. It will be in how work moves through the system.

- Backlog management becomes proactive. Teams will pre label agentable issues, and agents will clear them as background tasks. This turns a pile of small chores into a steady flow of micro changes.

- Pull requests get smaller and more frequent. The agent prefers surgical changes that pass checks quickly. That nudges teams toward incremental architecture and merge trains that bundle safe changes downstream.

- Reviews focus on intent, not syntax. Humans will review whether the change aligns with architectural decisions and product goals, while the agent handles style, formatting, and obvious fixes.

- Test coverage and documentation improve by default. Over time, codebases will accrete guardrails that make all contributors more effective.

- Release trains get steadier. With a constant cadence of small, passing pull requests, release managers can automate promotion with high confidence.

- New roles emerge. Policy engineers will tune organization level settings and write templates. Prompt librarians will curate repository instructions that teach the agent how the team works.

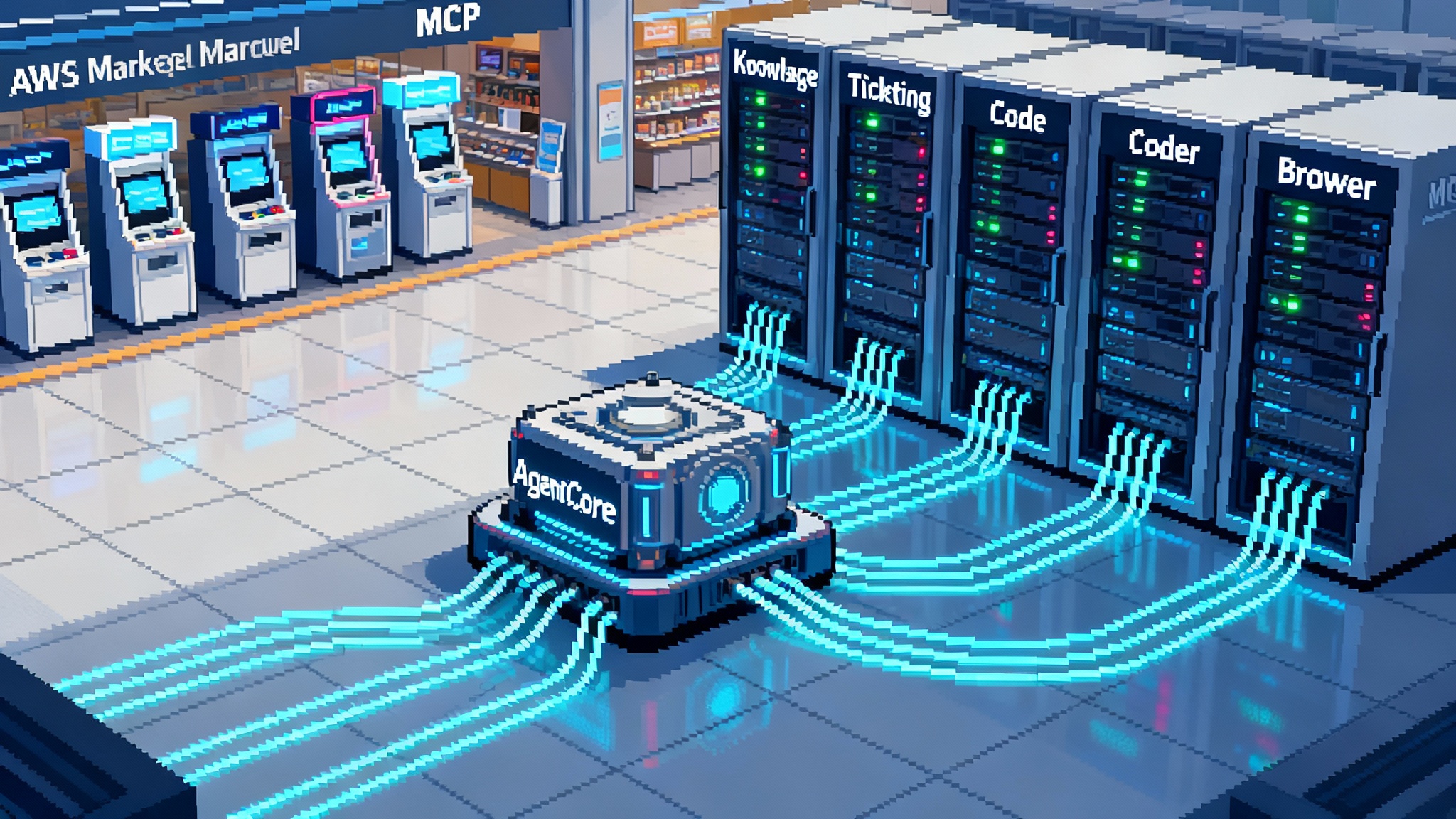

- Multi-repository campaigns become practical. Need to bump a dependency across twenty services, or apply a safe codemod pattern? Agents can open coordinated pull requests in minutes, with humans reviewing the plan rather than hand-editing every file. For how this connects to standards and orchestration, see work on unifying the enterprise AI stack.

This is not speculative science fiction. It is the natural consequence of putting an autonomous worker at the center of the pull request loop and constraining it with the same rules the rest of your system uses.

What to do next

Pick two repositories with good tests and a clear contribution guide. Turn on the agent behind strict policies, run the 30 day playbook, and measure outcomes. Keep the scope narrow and the feedback loop tight. Write down what works and what fails, and iterate on the guardrails before you scale.

The path to safe adoption is straightforward. Start small, enforce policies, keep everything in the pull request, and let data guide the rollout. The result is a software factory where humans set the direction, agents move the work forward, and your delivery pipeline gets faster and calmer at the same time.

In other words, repo-native is not just a feature label. It is an operating model. The organizations that learn that lesson in 2025 will look very different by the end of 2026, and they will ship like it.