Reasoning Shock: Test-time Compute Is Rewriting Agents

2025 flipped agent design from brittle scaffolds to models that think, budget, and verify at inference time. Here is how test-time compute, open distillations, and edge acceleration are reshaping costs, architecture, and what you can ship in 90 days.

The shock that landed in 2025

A year ago, agent design meant careful prompt trees, brittle tool wiring, and a lot of glue code to shepherd models through multi-step tasks. 2025 flipped that script. The big story is not just faster models. The story is that models now think at inference time, budget their own reasoning, and verify their own work. That shift started visibly with DeepSeek R1’s open weights early in the year, then accelerated through spring with an upgraded R1 on Hugging Face in May and a wave of reasoning-centric releases from OpenAI, xAI, and Microsoft. On the hardware side, acceleration reached the desktop. Consumer RTX PCs can now run agent components locally, which makes a stable edge tier practical for the first time.

OpenAI set the expectation on April 16 with o3 and o4-mini. These models are trained to spend more time reasoning before they answer, can think with images, and can use the full tool set without bespoke scaffolds. The result is fewer brittle handoffs and more end-to-end reliability for users who just want an answer in the right format. For the official details, see OpenAI’s product note, “OpenAI introduces o3 and o4-mini.” For how this lands in real products, see our take on OpenAI’s agent platform moment.

xAI pushed the same idea in February with Grok-3 and its Think and Big Brain modes. The pitch was simple. Give the model more test-time compute on hard questions and it will correct itself, explore alternatives, and deliver a better result. Microsoft came at the problem from a different flank. The Phi-4 family focused on small, efficient reasoning models that run on device, and Windows added a Mu-powered Settings agent that translates plain-language requests into precise settings changes. Meanwhile, Nvidia shipped tooling that makes local agent services straightforward on consumer hardware.

Put these moves together and a new architecture emerges. Models are spending their budget at the moment you need the answer, not during training or through elaborate orchestration. That is the shock. It does not just make benchmarks jump. It changes how you design products.

What test-time compute really means

Test-time compute is the budget a model is allowed to use while it is answering your query. Picture a chess clock. Each request starts with a pool of time and steps. The model can decide to think longer, branch on alternatives, call a tool, or ask itself to try again. The difference from 2023-era agents is agency. We used to force steps from the outside. Now the model has learned when to spend more of its budget and how to check itself before it speaks. This shift pairs with the “Gemini 2.5 rewrites agents” theme, which treats the runtime as the real API surface.

Two capabilities make this concrete:

- Inference time reasoning. The model writes and iterates through its own internal notes before it replies. You do not see the notes, but you benefit from them when the answer is precise and complete.

- Self verification. Before emitting a final answer, the model runs internal checks. It may rederive a math step, simulate code execution, or compare two candidate paths and select the one that is consistent with the question.

This makes old school scaffolds look overbuilt. Many multi tool flows existed to compensate for weak internal reasoning. As models internalize the decision to think longer and the habit of checking their work, the scaffolds become simpler. You still want tools, but you do not want a maze of brittle rules.
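The chess clock picture and the two capabilities above can be made concrete with a toy loop. This is only an illustrative sketch: in a real reasoning model the drafting and checking happen inside the model, and the names here (`Budget`, `answer_with_verification`, `propose`, `verify`) are hypothetical stand-ins rather than any vendor's API.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Budget:
    """Hypothetical per-request allowance, like the chess clock above."""
    max_steps: int
    steps_used: int = 0

    def spend(self) -> bool:
        """Consume one step; return False once the pool is empty."""
        if self.steps_used >= self.max_steps:
            return False
        self.steps_used += 1
        return True


def answer_with_verification(
    propose: Callable[[int], int],
    verify: Callable[[int], bool],
    budget: Budget,
) -> Optional[int]:
    """Draft candidates until one passes the check or the budget runs out."""
    attempt = 0
    while budget.spend():
        candidate = propose(attempt)
        if verify(candidate):
            return candidate
        attempt += 1
    return None  # budget exhausted without a verified answer


# Toy stand-ins: search for the square root of 49 by guessing upward from 5.
result = answer_with_verification(
    propose=lambda attempt: 5 + attempt,
    verify=lambda x: x * x == 49,
    budget=Budget(max_steps=4),
)
```

With a budget of four steps the loop settles on 7 after three drafts. With a budget of two it returns `None`, which is the signal a caller would use to escalate or ask for more budget.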

2025 milestones that changed the defaults

- DeepSeek R1 led the open weight surge, then shipped a May upgrade on Hugging Face. The signal was not only performance. It was portability. Teams could fork and fine tune, then distill for the edge.

- OpenAI released o3 and o4-mini on April 16 with full tool access and visual reasoning. The key phrase was think with images. A model that can crop, zoom, and rotate a diagram as part of its thinking is meaningfully different from one that only captions it.

- xAI launched Grok-3 in February with Think and Big Brain modes, which operationalize the idea that hard tasks deserve more time and compute than easy ones.

- Microsoft broadened the on-device story with Phi-4 variants and a Windows Settings agent powered by a Mu model that runs locally. That agent turns a messy natural-language request like “make my laptop stop sleeping during this presentation” into the exact settings change, and does it fast enough to feel native.

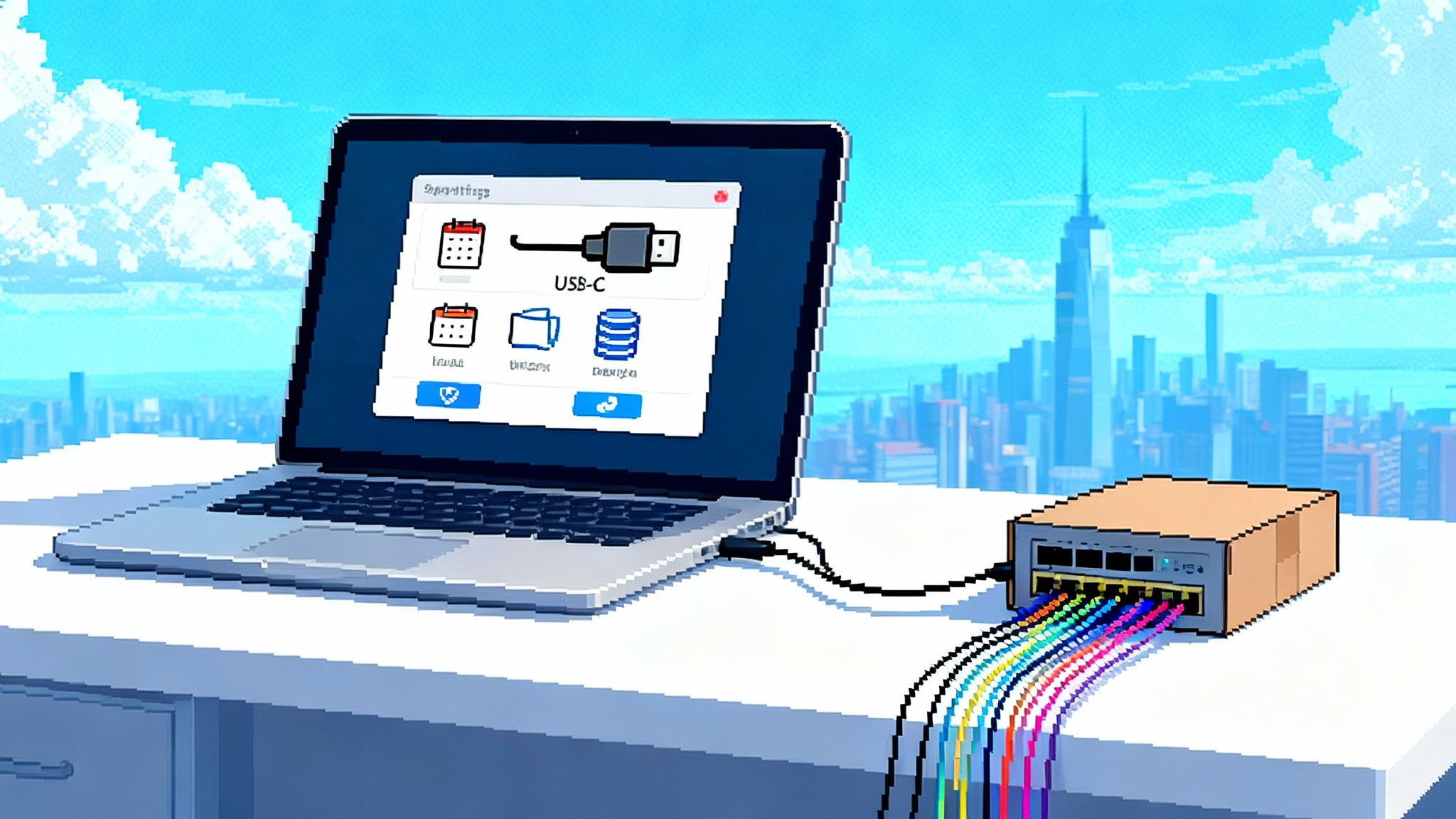

- Nvidia’s NIM microservices made it practical to run agent building blocks on RTX PCs with Blackwell class hardware. Developers can compose local language, vision, and speech components as if they were cloud services, but without the network round trips. See the announcement, NVIDIA NIM microservices for RTX PCs.

The combined effect is a race to allocate thinking at the right moment. Not more scaffolding. Smarter inference.

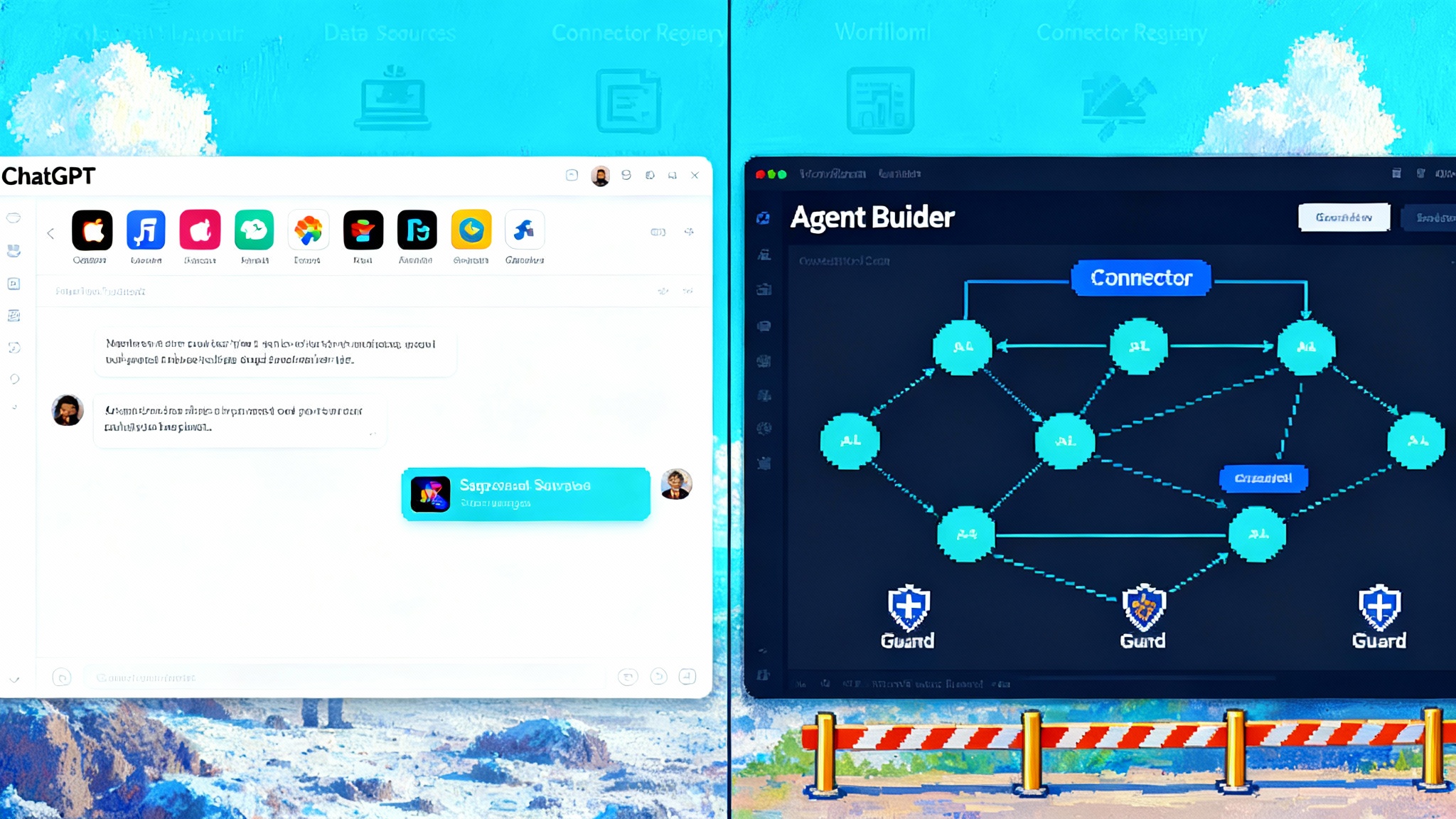

Why brittle tool scaffolds are giving way to learned control

Think of a traditional agent as a factory line with many chutes. If the output looks like math, send it to the calculator. If it mentions a calendar, call a scheduling tool. Each decision is a hand built rule. When the input is messy, rules collide and downstream tools get conflicting instructions.

A reasoning centric agent flips that. The model learns a policy for when to think longer, when to call a tool, and how to structure its output so downstream steps are simple. In practice, that means:

- Fewer forced tool calls. The model often uses internal checking to avoid unnecessary external calls.

- Cleaner outputs. Because the model is trained to plan before it speaks, responses are far more likely to match the schema you asked for, so validators can stay thin rather than elaborate.

- Better long tail behavior. When the task is unusual, the model can spend a larger reasoning budget rather than falling through a weak rule.

You still keep a minimal scaffold to enforce business rules, to provide reliable tool adapters, and to audit decisions. But the heart of problem solving shifts inside the model.

Cost collapses through open and distilled variants

Open weights changed the pace of iteration. With R1 style reasoning in the open and a stream of distilled models, teams can put a strong reasoner in places where a frontier closed model would be too costly or governed by usage terms that block deployment. Distillation compresses the behavior of a large model into a smaller one. You give up some peak scores, but you gain lower latency, lower power, and more predictable costs.

What this looks like in practice:

- Tiered stacks. Use a top tier model for hard or high risk tasks, and a distilled or small model for routine ones.

- On device fallbacks. When the network is slow or offline, a Phi-4 class model can handle local triage. If confidence is low, queue the task for a cloud pass.

- Targeted fine tunes. Because the weights are accessible, you can fine tune for your domain and keep the improvements private.

The trade is no longer accuracy versus price as a single lever. It is accuracy versus price versus where the computation happens. Routing by these three levers is the new optimization game.
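A minimal sketch of routing on those three levers might look like the following. The tier names, cost units, and quality scores are invented placeholders, not measurements of any real model, and `pick_tier` is a hypothetical helper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    relative_cost: float  # illustrative units, not real prices
    quality: int          # 1 = basic, 3 = strongest reasoning
    runs_locally: bool


# Hypothetical tiers; every number here is an assumption for illustration.
TIERS = [
    Tier("on_device_small", relative_cost=0.0, quality=1, runs_locally=True),
    Tier("distilled_open", relative_cost=1.0, quality=2, runs_locally=False),
    Tier("frontier_reasoner", relative_cost=10.0, quality=3, runs_locally=False),
]


def pick_tier(required_quality: int, online: bool) -> Tier:
    """Return the cheapest reachable tier that meets the quality bar."""
    reachable = [t for t in TIERS if online or t.runs_locally]
    good_enough = [t for t in reachable if t.quality >= required_quality]
    if good_enough:
        return min(good_enough, key=lambda t: t.relative_cost)
    # Nothing reachable meets the bar: use the best reachable tier for triage.
    return max(reachable, key=lambda t: t.quality)
```

When the network is down, a hard task still lands on the local tier, which matches the on-device fallback described above. The caller would then queue the task for a cloud pass.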

Edge agents stop feeling fragile

Edge agents used to be demo ware. They were quick, but unreliable. Now they are viable parts of production systems because they can reason enough to avoid simple errors and they can verify themselves on the device. Two forces drive this:

- Model efficiency. Small language models in the Phi-4 style and purpose-tuned models like Mu can run on the Neural Processing Unit (NPU) in modern laptops. That makes them fast and power efficient.

- Local microservices. With NIM-style packaging, you can drop speech, vision, and language services onto an RTX PC and call them like cloud endpoints. With no network round trips and no cross-region variability, latency becomes far more predictable.

When a support technician opens their laptop at a client site, the agent that triages logs and suggests fixes does not need to dial a datacenter. It can parse logs locally, summarize errors, and propose next actions, then escalate only when it hits a confidence threshold.
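The escalate-on-low-confidence pattern in that scenario can be sketched as below. A lookup table stands in for the local model, and the error codes, fixes, and threshold are all made up for illustration.

```python
import re

# Hypothetical fix table standing in for what a local model would suggest.
KNOWN_FIXES = {
    "E101": "Restart the sync service",
    "E205": "Clear the local cache",
}
ESCALATION_THRESHOLD = 0.6  # assumed value; tune against your own evals


def triage_logs(lines: list[str]) -> dict:
    """Summarize errors on the device and decide whether to escalate."""
    codes = []
    for line in lines:
        if "ERROR" not in line:
            continue
        match = re.search(r"E\d{3}", line)
        codes.append(match.group(0) if match else "UNKNOWN")
    known = [c for c in codes if c in KNOWN_FIXES]
    # Confidence here is simply the share of errors with a known fix.
    confidence = len(known) / len(codes) if codes else 1.0
    return {
        "error_count": len(codes),
        "suggestions": sorted({KNOWN_FIXES[c] for c in known}),
        "confidence": confidence,
        "escalate": confidence < ESCALATION_THRESHOLD,
    }
```

Only the cases that fall below the threshold would leave the laptop. Everything else is handled without a call to the datacenter.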

For the integration layer that keeps tools consistent across vendors, see how the MCP interop layer for agents is emerging as a common contract.

A practical 90 day build plan

You can ship a reasoning first agent in three months. The steps below assume a hybrid cloud and edge setup, and they bake in test time compute as a controllable budget.

Days 1 to 30: scope, baselines, and reason budgets

- Pick two workflows with measurable outcomes. Example: internal IT settings changes and vendor invoice triage.

- Define success metrics. For IT, measure task success rate, user edits per task, and time to completion. For invoices, measure percentage auto approved with zero errors.

- Choose three models. One frontier reasoner for the hardest cases. One cost efficient model for the bulk. One on device small model for offline and fast paths.

- Implement a reason budget controller. Start with three levels: short, standard, and deep. Short caps internal thinking and disables self verification. Standard allows one verification step. Deep allows multiple passes and more tool calls.

- Build an evaluator harness. For each task, create gold cases and edge cases. Log the chosen reason budget, the tools used, the latency, and whether the answer passed checks.

- Establish a schema contract for outputs. Use structured outputs and validators. Fewer surprises mean less scaffolding later.
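One possible shape for the reason budget controller and the evaluator log from the steps above is sketched here. The three levels follow the plan. The token caps, tool call limits, and field names are assumptions that you would map onto whatever controls your model provider exposes.

```python
from dataclasses import dataclass, asdict
from enum import Enum


class ReasonLevel(Enum):
    SHORT = "short"
    STANDARD = "standard"
    DEEP = "deep"


@dataclass(frozen=True)
class ReasonBudget:
    max_thinking_tokens: int  # assumed cap, not a real provider parameter
    verification_passes: int
    max_tool_calls: int


# Illustrative numbers; only the short/standard/deep shape comes from the plan.
BUDGETS = {
    ReasonLevel.SHORT: ReasonBudget(512, verification_passes=0, max_tool_calls=1),
    ReasonLevel.STANDARD: ReasonBudget(4096, verification_passes=1, max_tool_calls=3),
    ReasonLevel.DEEP: ReasonBudget(16384, verification_passes=3, max_tool_calls=8),
}


@dataclass
class EvalRecord:
    """One row in the evaluator harness log."""
    case_id: str
    level: str
    tools_used: list
    latency_ms: float
    passed: bool


def log_case(case_id, level, tools_used, latency_ms, passed) -> dict:
    """Build a plain dict that can be written to JSON lines or a table."""
    record = EvalRecord(case_id, level.value, list(tools_used), latency_ms, passed)
    return asdict(record)
```

Keeping the budget table in one place makes it easy to compare pass rates per level once the harness has logged a few hundred cases.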

Days 31 to 60: hybrid routing and edge enablement

- Add a difficulty classifier. Predict whether a task is easy, medium, or hard using features like input length, domain tags, and historical error rates. Map the class to a default reason budget.

- Implement hybrid routing. Easy tasks route to the cost efficient model or the on device model. Hard tasks route to the frontier model with deep reasoning.

- Deploy an edge service pack. Ship on device models for text and speech and pack adapters that mimic your cloud endpoints. Cache prompts and retrieved facts locally.

- Add confidence scoring. Combine self verification results, tool agreement checks, and known constraints to compute a confidence score per output.

- Close the loop with humans in the hardest 10 percent. When confidence is low, ask for a short confirmation rather than returning a guess.
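The classifier, budget mapping, and confidence score from this phase can start as plain rules before you train anything. In the sketch below every threshold and weight is an assumption chosen for readability, and the function names are hypothetical.

```python
def classify_difficulty(input_length: int, domain_error_rate: float) -> str:
    """Rule-based stand-in for a learned difficulty classifier."""
    if input_length > 4000 or domain_error_rate > 0.20:
        return "hard"
    if input_length > 1000 or domain_error_rate > 0.05:
        return "medium"
    return "easy"


# Map each difficulty class to a default reason budget.
DEFAULT_BUDGET = {"easy": "short", "medium": "standard", "hard": "deep"}


def confidence_score(self_check_passed: bool, tools_agree: bool,
                     constraints_ok: bool) -> float:
    """Blend three signals with assumed weights of 4, 3, and 3 out of 10."""
    points = 4 * self_check_passed + 3 * tools_agree + 3 * constraints_ok
    return points / 10


def needs_human(confidence: float, threshold: float = 0.7) -> bool:
    """Ask for a short confirmation instead of returning a guess."""
    return confidence < threshold
```

For example, a long invoice in a domain with a 25 percent historical error rate is classed as hard and gets the deep budget. An answer whose self check failed scores 0.6 and is routed to a person.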

Days 61 to 90: safety guardrails tuned for long form thinking

- Add multi pass safety. Run a separate safety model that reviews the draft response for harmful content, data leakage, and policy compliance. For flagged cases, trigger a constrained rewrite with a higher reason budget.

- Protect against infinite loops. Enforce a hard cap on internal steps per request. If the cap is reached, summarize the partial work and offer a fallback plan.

- Instrument tool use. Require every tool call to log inputs, outputs, and timing. Block tools that return inconsistent formats and auto escalate to a human when critical tools fail.

- Red team the self verification. Feed adversarial prompts that try to confuse the checker. Adjust the verifier to demand explicit evidence for the highest risk tasks.

- Freeze and document your flow. At the end of the 90 days, publish your routing rules, reason budgets, and guardrails so they can be audited and improved.
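Two of these guardrails, the hard step cap and the instrumented tool call, are small enough to sketch directly. The cap value and the helper names (`run_with_step_cap`, `logged_call`, `TOOL_LOG`) are assumptions for illustration.

```python
import time
from typing import Callable

MAX_STEPS = 8  # assumed hard cap per request
TOOL_LOG: list[dict] = []


def run_with_step_cap(step: Callable[[list], bool],
                      max_steps: int = MAX_STEPS) -> dict:
    """Call `step` until it reports completion or the cap is reached."""
    notes: list = []
    for used in range(1, max_steps + 1):
        if step(notes):
            return {"status": "done", "steps": used, "notes": notes}
    # Cap reached: hand back the partial work so a fallback can summarize it.
    return {"status": "cap_reached", "steps": max_steps, "notes": notes}


def logged_call(name: str, tool: Callable, **kwargs):
    """Run a tool and record its inputs, output, and timing."""
    start = time.perf_counter()
    output = tool(**kwargs)
    TOOL_LOG.append({
        "tool": name,
        "inputs": kwargs,
        "output": output,
        "seconds": time.perf_counter() - start,
    })
    return output
```

A step function that never returns `True` stops after `max_steps` iterations with `status` set to `cap_reached`, which is the point where the agent would summarize partial work and offer a fallback plan.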

Concrete design patterns you can adopt now

- Reason budget as a product knob. Expose an advanced toggle that lets power users choose quick or thorough. Record where thorough mode pays off and consider making it the default for those cases.

- Self consistent answers. For quantitative tasks, run two independent solution paths and return the answer only if they agree within a tolerance.

- Evidence gated actions. Before an agent executes a real world change, require a verified citation or an internal check against a source of record. If verification fails, return a plan plus a request for approval.

- Elastic memory. Cache intermediate computations and retrieved snippets keyed by task. If a similar task arrives, avoid thinking from scratch.
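The self-consistent answers pattern is the easiest of these to prototype. The sketch below assumes each solution path is a zero-argument function returning a number. The compound interest example and the tolerance are illustrative choices.

```python
import math
from typing import Callable, Optional


def self_consistent(path_a: Callable[[], float],
                    path_b: Callable[[], float],
                    rel_tol: float = 1e-6) -> Optional[float]:
    """Return the answer only if two independent paths agree."""
    a, b = path_a(), path_b()
    return a if math.isclose(a, b, rel_tol=rel_tol) else None


# Two independent routes to the same quantity: 1000 at 5 percent for 3 years.
def compound_closed_form() -> float:
    return 1000 * (1.05 ** 3)


def compound_iterative() -> float:
    balance = 1000.0
    for _ in range(3):
        balance *= 1.05
    return balance


agreed = self_consistent(compound_closed_form, compound_iterative)
```

When the paths disagree the function returns `None`, which a caller can treat as a cue to spend a deeper reason budget or ask for review.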

What this means for costs and architecture

Cost used to be a straight line between request count and model price. With test time compute and open distillations, you can move along three axes.

- Spend more time for fewer errors when it matters. A single deep pass can replace three failed shallow attempts.

- Swap in an open distilled model for the common path. You keep latency low and costs predictable.

- Push fast checks to the edge. Local models turn what used to be cloud calls into low-latency local operations with predictable timing.

The architecture that falls out of this is simple. A thin policy layer routes requests. A small set of reliable tools handles the world. The models do the thinking.
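The first axis above, spending more per attempt to fail less often, is worth checking with arithmetic before you commit to it. The sketch below assumes independent retries until success, so the expected number of attempts is one divided by the success rate. The costs and rates are invented for illustration.

```python
def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend to get one good answer, assuming independent retries."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Illustrative numbers only: a cheap shallow pass that usually fails
# versus a deep pass that costs three times as much but rarely fails.
shallow = expected_cost_per_success(cost_per_attempt=1.0, success_rate=0.25)
deep = expected_cost_per_success(cost_per_attempt=3.0, success_rate=0.9)
```

Under these assumed numbers the deep pass is cheaper per successful answer even though each call costs more. With a higher shallow success rate the comparison flips, which is why the harness should measure success rates per budget level rather than guess them.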

What to watch next

- Distillation quality. How close can small models get to the reasoning stability of larger ones after task-specific fine-tuning?

- Visual reasoning in the loop. As more tasks involve images, whiteboards, and screenshots, expect higher accuracy from models that treat visual input as first class evidence.

- Operating system agents. The Mu-powered Settings agent in Windows shows what a native agent feels like when latency and accuracy cross a threshold. Expect more narrow, high-quality agents embedded directly in the operating system.

- Hardware aware budgets. As NPUs and GPUs improve, the controller that dials reason budgets can incorporate live compute availability.

The bottom line

The 2025 wave did not make old agents slightly better. It swapped the center of gravity. Inference time reasoning and self verification are replacing elaborate scaffolds. Open and distilled variants are collapsing costs. Edge acceleration is finally good enough to matter. The next ninety days are not about inventing a new stack. They are about turning your agent into a good thinker with a budget, a short, reliable tool list, and a seat on the devices your customers already use.