Figure 03 and the Moment Humanoid Agents Enter the Home

Figure’s third-generation humanoid pairs a Helix vision-language-action brain with a safety-first, home-ready body and a factory plan built to scale. If fleet learning and BotQ deliver, the agentic appliance era may arrive sooner than expected.

Breaking: A home robot that looks built for real life

On October 9, 2025, Figure introduced its third-generation humanoid as a product designed first for the home, not the lab. The claim is bold, but the details are unusually practical: a gentler body, safer battery, wireless charging, hands meant for everyday objects, and a software stack that reasons about what it sees and hears. If you want a single page to understand the company’s intent, start with Figure’s own announcement of Figure 03.

What stands out is not a single cinematic demo. It is the combination of an always-learning brain with a chassis built for kitchens, bedrooms, and tight hallways. That is new. Most humanoid programs show bursts of skill under perfect lighting and careful staging, then go quiet. Figure 03 looks like an attempt to make the unglamorous parts work every day, at scale, and at a price that can fall.

The Helix stack in plain English

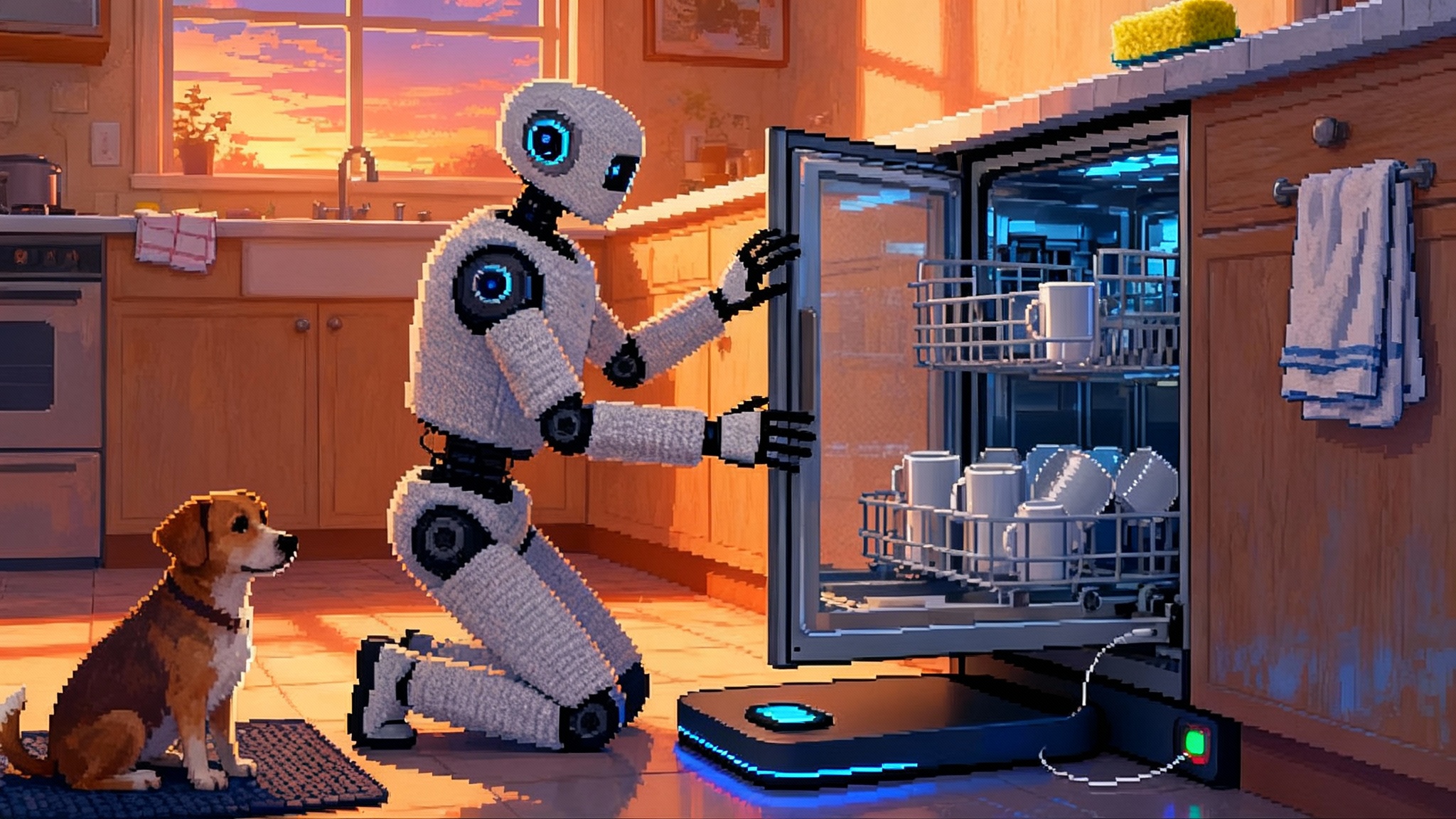

Helix is a vision-language-action system that does three jobs at once. First, it sees and remembers context: where the mug is, how full the sink looks, whether the dishwasher door is open. Second, it understands what you say, including instructions that combine steps, such as “load the top rack with cups, then wipe the counter.” Third, it translates that understanding into the actual movements of arms, hands, neck, and torso.

A useful way to picture Helix is as two teammates inside the robot. One teammate plans and reasons about the scene and the goal. The other teammate handles the fine motor work of gripping, turning, pushing, and placing with the right amount of force and at the right speed. The planner considers tradeoffs and sequences. The mover keeps the cup upright and the sponge flat without crushing anything. The two exchange information constantly, which is why Figure talks about an end-to-end loop. When the loop is tight, the robot looks composed rather than jittery.
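To make the two-teammate picture concrete, here is a minimal sketch of a slow planner and a fast mover sharing one loop. It is an illustration under stated assumptions, not Figure's implementation: the names `Subgoal`, `plan`, `move`, and `run`, the one-dimensional "position," and the tick rates are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Subgoal:
    """Hypothetical message the planner hands to the mover."""
    name: str
    target: float     # desired gripper position, arbitrary units
    max_step: float   # largest change the mover may make per tick


def plan(instruction_steps, step_index):
    """Slow teammate: turn the current instruction step into a subgoal."""
    name, target = instruction_steps[step_index]
    return Subgoal(name=name, target=target, max_step=0.2)


def move(position, subgoal):
    """Fast teammate: take one small, bounded step toward the target."""
    error = subgoal.target - position
    step = max(-subgoal.max_step, min(subgoal.max_step, error))
    return position + step


def run(instruction_steps, ticks=40, plan_every=5, tolerance=1e-6):
    """Run the mover every tick and the planner every `plan_every` ticks."""
    position, step_index, log = 0.0, 0, []
    last_index = len(instruction_steps) - 1
    subgoal = plan(instruction_steps, step_index)
    for tick in range(ticks):
        if tick % plan_every == 0:
            # The planner checks progress and advances only once the mover arrives.
            arrived = abs(subgoal.target - position) <= tolerance
            if arrived and step_index < last_index:
                step_index += 1
            subgoal = plan(instruction_steps, step_index)
        position = move(position, subgoal)
        log.append((tick, subgoal.name, round(position, 3)))
    return log


# Two-step instruction: reach for the cup, then place it on the rack.
steps = [("reach_cup", 1.0), ("place_on_rack", 0.4)]
log = run(steps)
```

The point of the split is that the mover never waits on the planner. It keeps making small, bounded corrections every tick, while the planner revisits the goal less often and swaps the subgoal only when the previous one is done.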

This architecture matters in a home because tasks are varied and distractors are everywhere. Folding a towel, untangling a phone cable, or loading bowls face down are all different problems, but they rhyme. A generalist model that keeps learning across them is the only realistic path away from task-specific scripts. For a broader look at agent platforms evolving toward this model, see how teams describe OpenAI’s agent platform moment.

Hardware that tries to be a good guest

The Figure 03 hardware reads like a list of smaller, thoughtful choices that add up to houseworthiness.

- Softer exterior and textiles. The robot wears coverings and multi-density materials that reduce the risk of scuffs, pinches, or sharp edges. If you brush past it in a hallway, it should feel like bumping a backpack, not a toolbox.

- Hands for daily objects. Cameras in the palms and tactile sensors in the fingers are there to spot handles, seams, and slip, then adjust grip. Think about picking up a plastic cup versus a ceramic mug versus a dog toy; the geometry and compliance differ, and the robot must adapt mid-squeeze.

- Voice and audio tuned for rooms. If the microphone array and speech processing are good, you should not have to shout over a dishwasher or a fan. More important, the robot should confirm its plan aloud. Verbalized intent is a safety feature, not a gimmick: “I will close the oven and then carry the tray to the counter.”

- Battery and thermal safety. A home robot should err on the side of boring in how it charges, cools, and shuts down. Figure calls out battery safety work explicitly. In practice that should mean conservative charge rates, temperature monitoring, and a charging posture that keeps the center of mass stable.

- Wireless charging and a dock that invites good behavior. You do not want to see cables draped across your floor. A recessed charging pad, a low gait for docking, and a gentle park routine are the right ergonomics.

Each of these design decisions is about friction. The less you have to think about where the robot stands, how it charges, and whether its hands are safe around your glassware, the faster it becomes a trustworthy appliance instead of a weekend novelty.

An always-learning fleet, explained by a kitchen

Imagine a fleet of Figure 03 units working in real homes. Every day they encounter slightly different dishwashers, faucets, and drawer rails. One family leaves spoons face up; another insists on face down. One countertop hides crumbs in a mottled pattern; another gleams and reflects lights.

A generalist humanoid needs to turn this messy variety into skill. The way to do that is a loop that looks like this:

- Capture. While it works, the robot records short windows of what it sees, hears, and does, along with whether the result was successful.

- Sync. When the robot docks to recharge, it securely syncs new examples. High bandwidth at the dock turns minutes of housework into learnable data without clogging your home network.

- Train. A centralized training job finds patterns across thousands of households. It learns that certain dishwasher brands prefer bowls at a slight angle, that some drawer rails stick unless you pull straight, that wiping a stone counter needs a figure eight to avoid streaks.

- Update. The robot downloads a new policy and immediately performs better on day two, especially on failure cases it saw on day one.

The loop is not magic. It is logistics. You need guardrails, which we will cover below. But if you can shrink the time from experience to improvement, the robot gets better while you sleep. That is the only way a true generalist reaches competence in the long tail of household variation.
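The capture, sync, train, update loop can be sketched in a few lines. This is a toy under loud assumptions: the "policy" is just a table of preferred strategies per task, "training" picks the strategy with the best success rate across the fleet, and every name here (`Episode`, `Robot`, `train`) is hypothetical rather than anything Figure has described.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Episode:
    """One short recorded window: what was tried and whether it worked."""
    task: str
    strategy: str
    success: bool


@dataclass
class Robot:
    policy: dict                     # task -> strategy to use
    policy_version: int = 0
    buffer: list = field(default_factory=list)

    def capture(self, task, strategy, success):
        """Capture: record the outcome locally while working."""
        self.buffer.append(Episode(task, strategy, success))

    def sync(self):
        """Sync: hand over buffered episodes at the dock and clear the buffer."""
        uploaded, self.buffer = self.buffer, []
        return uploaded

    def update(self, policy, version):
        """Update: adopt a newer policy and ignore stale ones."""
        if version > self.policy_version:
            self.policy, self.policy_version = dict(policy), version


def train(episodes, min_trials=2):
    """Train: per task, keep the strategy with the best fleet-wide success rate."""
    stats = defaultdict(lambda: [0, 0])   # (task, strategy) -> [wins, trials]
    for ep in episodes:
        stats[(ep.task, ep.strategy)][0] += ep.success
        stats[(ep.task, ep.strategy)][1] += 1
    best = {}
    for (task, strategy), (wins, trials) in stats.items():
        if trials < min_trials:
            continue                      # too little evidence to act on
        rate = wins / trials
        if task not in best or rate > best[task][1]:
            best[task] = (strategy, rate)
    return {task: strategy for task, (strategy, _) in best.items()}


# Day one: three homes all struggle with a sticky drawer rail.
fleet = [Robot(policy={"open_drawer": "pull_at_angle"}) for _ in range(3)]
for robot in fleet:
    robot.capture("open_drawer", "pull_at_angle", False)
    robot.capture("open_drawer", "pull_straight", True)

episodes = [ep for robot in fleet for ep in robot.sync()]
new_policy = train(episodes)
for robot in fleet:
    robot.update(new_policy, version=1)
```

A real system would train a neural policy rather than a lookup table, but the logistics are the same: the shorter the path from a failed attempt in one home to an updated policy in every home, the faster the fleet improves.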

Manufacturing is the real unlock

Robots do not become normal because of a single viral video. They become normal when a factory makes them with predictable quality, when suppliers quote firm prices, and when yields are stable enough to plan a calendar. Figure’s pitch is that it rethought the robot for mass production and built a line to match. The company says its BotQ facility opened with an initial capacity designed to produce thousands of humanoids per year and to scale from there. If you want the official manufacturing message, read Figure’s post on BotQ and high-volume humanoid manufacturing.

Capacity alone is not what collapses cost. It is the nitty-gritty of manufacturability:

- Part count and fasteners. Every reduction in unique parts and screws cuts handling time and error. If Figure 03 halves the number of hand-assembly steps versus its predecessor, line hours drop quickly.

- Wiring and harnessing. Routing cables in a humanoid can soak up hours and introduce faults. Switching to modular harnesses with keyed connectors and color coding can take that from artisan work to repeatable assembly.

- Test time. Flashing software and running end-of-line checks is often the hidden cost. A well-designed test jig that can exercise joints, sensors, and safety interlocks in minutes will save as much money as a cheaper motor.

- Soft goods at scale. Textiles and foam introduce their own complexity. Preformed parts, indexed clips, and automated inspection for tears or misfit are the difference between a crisp finish and a rework nightmare.

Put these together and you get a real cost curve. In year one, the bill of materials plus low yields and long cycle times keep price high. In year two, suppliers offer better terms, yields climb, and the team learns which assemblies deserve redesign. In year three, the line looks boring. Boring is the goal. Boring means affordable.
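A rough way to see why yield and cycle time matter as much as part prices is to fold them into one number. The sketch below assumes a deliberately simple model in which failed units are scrapped, so cost per shippable unit is materials plus labor divided by first-pass yield. Every input is a placeholder chosen for illustration, not a figure from Figure or its suppliers.

```python
def unit_cost(bom, labor_hours, labor_rate, first_pass_yield):
    """Cost per shippable unit when failed units are scrapped (a simplification)."""
    if not 0 < first_pass_yield <= 1:
        raise ValueError("first_pass_yield must be in (0, 1]")
    return (bom + labor_hours * labor_rate) / first_pass_yield


# Placeholder inputs per year, NOT real Figure numbers.
years = {
    1: dict(bom=50_000, labor_hours=40, labor_rate=50, first_pass_yield=0.70),
    2: dict(bom=40_000, labor_hours=25, labor_rate=50, first_pass_yield=0.85),
    3: dict(bom=32_000, labor_hours=15, labor_rate=50, first_pass_yield=0.95),
}

costs = {year: round(unit_cost(**inputs)) for year, inputs in years.items()}
for year, cost in costs.items():
    print(f"year {year}: about ${cost:,} per shippable unit")
```

With these made-up inputs, the year-one penalty from low yield alone is larger than the entire labor bill, which is why boring, repeatable assembly is worth so much.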

From gadgets to agentic appliances

Today’s home robots mostly do one thing. Robot vacuums clean floors. A roving camera patrols while you are away. These are gadgets. A generalist humanoid turns into something else: an agentic appliance. It is not a single-purpose tool; it is a helper that can switch roles.

- Morning reset. Load the dishwasher, wipe the table, start a laundry cycle, and take the trash out to the bin.

- Evening assist. Reheat dinner, set the table, fetch a charger from the bedroom, and remind you to take your medication.

- Weekend projects. Carry boxes during a closet purge, hold a board while you drill, or bring paint rollers and a tray while you tape the wall.

The psychology of an agentic appliance is important. You do not expect it to be a butler. You expect it to chip away at chores and be careful around your stuff. If it asks for guidance politely and improves week to week, you forgive the occasional fumble. That is how microwaves, dishwashers, and thermostats won their place. Reliability first, then breadth.

Why Figure 03 is a credible first mover

Three ingredients make this moment different from earlier home robot announcements:

- A brain that can grow. Helix is designed to be updated often and to benefit from cross-household learning. That is the only way to handle the fractal differences in homes.

- Hardware that fits the setting. Soft coverings, safer charge behavior, good audio, and hand sensing are not nice-to-haves. They are the minimum to be a guest in a human space.

- A factory plan with numbers. Talking about units per year is how you move from research to product. That anchors hiring, supplier contracts, and service logistics.

Competitors are not asleep. Tesla’s Optimus program, Agility Robotics with Digit, Unitree, Apptronik, and Sanctuary AI each push different tradeoffs. A fast, low-cost biped matters. So does a safe, dexterous partner that can be left alone in a kitchen. The race will be decided less by one spectacular demo and more by who can close the loop from data to improvement to manufacturing without drama.

Near-term limits you should expect

- Speed and noise. Early home units will move deliberately. That is by design for safety and perception accuracy. Expect quiet whirs, pauses to re-evaluate, and occasional retries.

- Dexterity gaps. Grating cheese and folding a fitted sheet are high-dexterity tasks that may remain frustrating for a while. You will likely see gradual competence: unloading dishwashers before loading them well, wiping counters before scrubbing pans.

- Battery life. Multi-hour jobs will require planned dock breaks. The robot should schedule around your habits, but it will not run all day.

- Lighting and clutter. Cameras and depth systems still struggle with glare, mirrors, and chaotic piles. Expect the robot to ask for help to move obstacles or to turn lights on.

- Cost. Even with BotQ, early pricing will be premium. The path down requires volume, standardized options, and longer service intervals.

These limits do not negate usefulness. They set expectations. If the robot can reliably close ten common loops in a day, and each would have taken you about six minutes, that is already an hour of life back.

Safety and privacy guardrails that matter

Homes are intimate spaces. The right guardrails are concrete, testable, and easy to control. For a complementary view on local processing, see this discussion of on-device agents and privacy.

- On-device first. Process as much perception and language as possible locally. Cloud learning can be opt in, with clear controls and informative dashboards.

- No-go zones and schedules. Mark rooms, shelves, or drawers as off limits. Set quiet hours and no-movement windows. Confirm with spoken summaries.

- Visible intent. Before it acts, the robot should say what it is about to do and light an indicator that shows its mode: listening, moving, charging, or waiting.

- Force limits and tool locks. Cap pinch force around pets and children. Require explicit confirmation for hazardous actions like turning on an oven or handling knives, and limit those to supervised modes.

- Event recording with consent. Short clips are stored only when failures happen, with easy review and delete options. Household members can see and erase what the robot learned that day.

- Third-party audits and standards. Independent testers should probe for mechanical pinch points, safe fallback when sensors are obstructed, and resilience to network outages. That is how mainstream appliances are evaluated.

If Figure and its peers get these right, they will turn safety from a fear into a feature families point to when explaining why they brought a humanoid home.
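"Concrete and testable" can be taken literally. Several of the guardrails above reduce to a check that runs before any action, as in this minimal sketch. The zone names, the 20 newton cap, the quiet-hours window, and the `Guardrails` and `check_action` names are all hypothetical placeholders, not a published Figure interface or a safety standard.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Guardrails:
    no_go_zones: frozenset = frozenset({"nursery", "medicine_cabinet"})
    quiet_start: time = time(22, 0)
    quiet_end: time = time(7, 0)
    max_grip_newtons: float = 20.0    # placeholder cap, not a published limit
    hazardous: frozenset = frozenset({"turn_on_oven", "handle_knife"})

    def in_quiet_hours(self, now):
        """Handle windows that wrap past midnight, such as 22:00 to 07:00."""
        if self.quiet_start <= self.quiet_end:
            return self.quiet_start <= now < self.quiet_end
        return now >= self.quiet_start or now < self.quiet_end


def check_action(rules, action, zone, now, grip_newtons=0.0,
                 supervised=False, confirmed=False):
    """Return (allowed, message). The first failing rule wins."""
    if zone in rules.no_go_zones:
        return False, f"{zone} is a no-go zone"
    if rules.in_quiet_hours(now):
        return False, "quiet hours are active"
    if grip_newtons > rules.max_grip_newtons:
        return False, "requested grip force exceeds the cap"
    if action in rules.hazardous and not (supervised and confirmed):
        return False, "hazardous action needs supervision and confirmation"
    # Allowed: the message doubles as the spoken statement of intent.
    return True, f"I will {action.replace('_', ' ')} in the {zone}"


rules = Guardrails()
print(check_action(rules, "wipe_counter", "kitchen", time(10, 0)))
print(check_action(rules, "turn_on_oven", "kitchen", time(10, 0)))
```

Writing the rules as data rather than burying them in behavior is what makes them auditable: a household can read them, and an independent tester can enumerate the cases.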

How this changes the platform wars

The smartphone era had operating systems, app stores, and a few chip suppliers. Embodied AI will have its own equivalents.

- The foundation model. Whoever can maintain a fleet-trained generalist that learns quickly without catastrophic forgetting will set the bar.

- The embodiment layer. Hands, sensors, and balance systems that are both capable and manufacturable will decide cost and reliability. Hand design may become the new camera race.

- The skill supply chain. Instead of apps, we will see shareable skills: “load my Bosch top rack,” “fold bath towels like this,” “wipe granite without streaks.” A vetted library of skills, with provenance and user ratings, will form around the leading platforms.

- The silicon and acceleration story. Training and inference costs are a real constraint. Winners will juggle on-robot compute, dock-side acceleration, and cloud training where it makes economic and privacy sense. For the interoperability angle, compare this to the emerging MCP interop layer for agents.

Figure’s bet is vertical. Helix ties directly to Figure 03 hardware, and BotQ builds the muscle to ship and service the same thing many times. Others will push horizontal stacks that partner across multiple robot bodies. Both strategies can work, but only if the real world cooperates.

What to do now, depending on who you are

- Households. If you are curious, identify three chores you most dislike and measure how much time they take weekly. That gives you a personal break-even. When pilots open, look for safety features you understand, a clear privacy policy, and local support.

- Startups. Build skills that are hard to learn from scratch but safe to share, such as repetitive kitchen resets or laundry workflows. Design for data feedback, not one-off demos. Offer clear ways to evaluate improvement week to week. For context on agent rollouts, study this survey of OpenAI’s agent platform moment.

- Appliance makers. Design fixtures and interfaces that are robot friendly. Handles that are easily seen and grasped, drawers that tolerate imperfect angles, and status lights that a camera can read are all simple wins.

- Policymakers. Focus on certification regimes and clear rules for incident reporting in homes. Encourage independent test labs to publish pass-fail criteria for common hazards. Prioritize privacy defaults for sync and sharing.
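For households, the personal break-even mentioned above is simple arithmetic once you have timed your chores. The sketch below is one way to run it. The chore minutes, the hourly value of your time, the share of work the robot actually takes over, and especially the robot price are placeholders you would replace with your own numbers; no price here comes from Figure.

```python
def breakeven_months(robot_cost, weekly_chore_minutes, value_per_hour,
                     share_automated=1.0):
    """Months until the value of time saved equals the purchase price."""
    weekly_hours = sum(weekly_chore_minutes.values()) / 60
    monthly_hours_saved = weekly_hours * share_automated * 52 / 12
    monthly_value = monthly_hours_saved * value_per_hour
    if monthly_value <= 0:
        return float("inf")   # nothing saved, so it never pays back
    return robot_cost / monthly_value


# Three most-disliked chores, in minutes per week (placeholder values).
chores = {"dishes": 140, "laundry": 120, "counters": 40}

months = breakeven_months(
    robot_cost=20_000,        # hypothetical price, not an announced one
    weekly_chore_minutes=chores,
    value_per_hour=25,        # what an hour of your time is worth to you
    share_automated=0.6,      # assume the robot handles 60% of the work
)
print(f"break-even after roughly {months:.0f} months")
```

The model ignores service costs, electricity, and resale value, so treat the result as a first-pass screen rather than a purchase decision.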

The bottom line

Figure 03 is not the first humanoid to clean a counter, but it may be the first to combine a generalist brain, a home-suitable body, and a factory that sounds serious. If fleet learning and BotQ keep pace, a new category will emerge: agentic appliances. They will not feel like sci-fi characters. They will feel like reliable help that gets a little better each week. That is how revolutions begin quietly, in the ordinary moments between dinner and dishes.