Copilot Actions: Windows Turns Into the Agent OS

Microsoft just moved agents into the desktop. Copilot Actions bring permissioned, task‑completing agents to Windows itself, redefining consent, reliability, and distribution for developers and enterprises.

The day agents moved into the operating system

On October 16, 2025, Microsoft took a clear step toward agent‑first computing by introducing Copilot Actions on Windows. These are permissioned agents that can carry out real tasks from the desktop, like making a reservation, purchasing an item, or operating a third‑party app, with guardrails you can see and control. The news shifts the conversation from chat products to the computer itself. Microsoft is not just bolting a bot onto Windows. It is threading agent capabilities through the operating system so they feel like a native faculty of the PC rather than a website with a text box. Microsoft’s press materials for the October Windows updates frame this turn plainly: new OS‑level capabilities for Copilot, experimental agentic features, and a distribution path through Windows Update, not a browser tab. See the company’s summary in its Windows October 2025 newsroom overview for what shipped today and where it is headed next, including visuals of Copilot Actions and voice wake on Windows.

What makes today different is not that an AI can click on your behalf. We have seen prototypes do this in browsers for a year. The upgrade is the location. Moving agents into the operating system changes the model for identity, consent, capability, and distribution. That is why this is news. It is like the difference between a valet standing outside the building and a concierge with a badge to use the elevators, the mailroom, and the phones. The job is similar. The access is not.

What exactly changed

From Microsoft’s description and demos, Copilot Actions on Windows are:

- OS‑level: You can invoke them from the desktop, and they can coordinate with applications without always going through a browser.

- Permissioned: Each action requests what it needs. Users approve the scope, and Windows enforces those boundaries. Think of it as least‑privilege for your computer life.

- Task‑oriented: Book dinner, send a gift, adjust settings, organize files, or launch a sequence across multiple apps. The goal is to complete outcomes, not draft paragraphs.

- Governed by policy: Enterprises get admin controls and auditing so information technology teams can decide which users can run which actions, with logging and revocation.

If this sounds familiar, it should. Back in April, Microsoft rolled out early Copilot Actions on the web with launch partners across travel, gifting, and marketplaces and promised expansion to Windows. That earlier release named partners and described how Copilot would act on a user’s behalf for purchases and bookings across sites. It set the stage for the operating‑system turn we are seeing now. For background and the list of early commerce partners, read Microsoft’s April post introducing Copilot Actions and Vision.

Why native OS integration will define agent experience

Developers have spent the last two years wiring bots to apps using skills, software development kits, and plugins. Those patterns are useful, and they will continue. But agents that live in the operating system change the center of gravity in four ways that builders should internalize.

- Consent you can see. When an agent requests to buy an item, move money, or touch files, the operating system can present a first‑party permission dialog with consistent language, provenance, and logging. That feels more like a seatbelt than a pop‑up on a web page. Users learn to read the signals, just as they learned to read Bluetooth or location prompts on mobile. For policy context, see our look at California chatbot consent rules.

- Context without copy and paste. Agents in Windows can see enough of your screen state and file system context to help without the awkward steps of uploading screenshots or granting blanket browser permissions. That cuts friction and reduces the need to over‑share data.

- Reliability and recovery. If an action fails halfway through, the operating system can roll it back or surface a structured error you can act on. That is better than a headless browser script crashing mid‑checkout and leaving your cart in limbo.

- Distribution at Windows scale. A feature shipped via Windows Update reaches hundreds of millions of machines with a standard policy surface for information technology. That makes a real marketplace possible. Skills that required users to discover a plugin catalog in a chat app never got that advantage.

In short, app‑centric approaches made agents visitors. OS‑native turns them into residents.

The new consent contract

For agent computing to work at human scale, consent must be legible. Windows can set a high bar here. A good pattern is a three‑step contract:

- Declare: The agent states what it will do in plain text, such as “Reserve a table at Bistro 7 at 7 pm for 4 using your OpenTable account.”

- Delimit: The operating system shows a permission card with the minimal scopes required, like “Network access to OpenTable,” “Calendar read for conflicts,” and “Write to Calendar after booking.”

- Document: The operating system produces a receipt for every action with a unique identifier and, where the target service supports it, a record that lets the action be reversed.

When this is standard, users develop intuition. They learn what a normal receipt looks like. They spot odd scopes. They know where to revoke. That is the difference between agents that feel magical and agents that feel sketchy.
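
To make the three steps concrete, here is a minimal sketch of how a request, its scopes, and its receipt might be modeled. Every name in it (`Scope`, `ActionRequest`, `Receipt`, `execute`) is hypothetical and chosen for illustration. It is not a Windows or Copilot API, and the real permission surface may look quite different.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
import uuid


@dataclass(frozen=True)
class Scope:
    """One permission the action needs (hypothetical shape)."""
    resource: str      # e.g. "calendar" or "network"
    access: str        # e.g. "read", "write", "connect"
    target: str = ""   # optional narrowing, e.g. a named domain


@dataclass
class ActionRequest:
    declaration: str                 # Declare: plain-text statement of intent
    scopes: list[Scope]              # Delimit: minimal scopes shown on the card
    approved: set[Scope] = field(default_factory=set)

    def missing_scopes(self) -> list[Scope]:
        """Scopes the action needs that the user has not approved yet."""
        return [s for s in self.scopes if s not in self.approved]


@dataclass(frozen=True)
class Receipt:
    receipt_id: str                  # unique identifier for this action
    declaration: str
    scopes_used: tuple[Scope, ...]
    issued_at: str                   # ISO 8601 timestamp in UTC
    reversible: bool                 # whether the target service supports undo


def execute(request: ActionRequest, reversible: bool) -> Receipt:
    """Document: refuse to run without full approval, then emit a receipt."""
    missing = request.missing_scopes()
    if missing:
        raise PermissionError(f"unapproved scopes: {missing}")
    # The real work (booking, purchase, and so on) would happen here.
    return Receipt(
        receipt_id=str(uuid.uuid4()),
        declaration=request.declaration,
        scopes_used=tuple(request.scopes),
        issued_at=datetime.now(timezone.utc).isoformat(),
        reversible=reversible,
    )
```

The design choice worth noting is that `execute` fails closed: if even one declared scope is unapproved, nothing runs and no receipt is issued.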

Builder’s playbook for the new Windows hooks

You do not need to boil the ocean to ship value on Copilot Actions. Start with one end‑to‑end capability and wire it cleanly. Here is a practical playbook.

- Model the outcome, not the steps. Write a one‑sentence capability statement. Example: “Place a repeat coffee order from a preferred cafe within 30 seconds and add the pickup time to the calendar.” Use this to bound what your agent can and cannot do.

- Design your permission surfaces. Enumerate the exact scopes your action needs on Windows. Typical scopes include screen reading in a specific app, file read in a specific folder, network access to a named domain, calendar write, and clipboard write. Map each to a Windows permission prompt and ensure your agent can run in least‑privilege mode.

- Build your connector plan. Decide how the agent will touch third‑party services. If there is a public application programming interface, use it. If there is not, choose a resilient automation method such as app automation frameworks or documented system interfaces. Avoid brittle screen scraping for anything that touches money or health data.

- Implement structured confirmations. Before a purchase or booking, present a structured summary with key fields in consistent order: merchant, item, quantity, price, time, account used, and cancellation policy. Let the user edit fields inline without leaving the flow.

- Add receipts and budgets. After every action, write a receipt to a local ledger file and to the Windows Action Log with a nonce and signature. Support monthly budgets and spend alerts per agent. If your action can spend money, it must also track what it spends. For more on payment safety, study trusted agentic checkout patterns.

- Build recovery, not just retries. If the agent fails after a payment authorization but before confirmation, trigger an automatic reconcile flow that checks the merchant and either completes the booking or reverses the hold. Expose this state in the receipt.

- Provide a simulation mode. Let users and information technology teams run a dry run where every external call is replaced with a no‑op and a trace. This is how enterprises will approve your agent.

- Embrace Windows rings. Ship first to Windows Insider channels, add telemetry guards, and define a fast rollback. Treat your action like a kernel feature, not a chat plugin.

- Prepare for enterprise policy. Implement controls for tenant allow lists, data residency, and audit retrieval. If your agent handles sensitive data, support customer‑managed keys and data retention windows.

- Think like a product manager, not a prompt engineer. Set success metrics that matter to users: success rate per task type, median time to completion, average number of prompts per task, and percent of actions reversed. Publish these in your release notes.
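
The receipts and budgets step in the playbook above can be sketched with the Python standard library alone. This is an illustration under assumptions: the receipt fields, the `MonthlyBudget` class, the 80 percent alert threshold, and the JSON Lines ledger format are all hypothetical, and the code only writes a local file. It does not call any Windows logging interface.

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass, field


def sign_receipt(fields: dict, key: bytes) -> dict:
    """Return a copy of the receipt with a nonce and an HMAC-SHA256 signature."""
    body = dict(fields, nonce=secrets.token_hex(16))
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    body["signature"] = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return body


def verify_receipt(receipt: dict, key: bytes) -> bool:
    """Recompute the signature over everything except the signature itself."""
    body = {k: v for k, v in receipt.items() if k != "signature"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, receipt.get("signature", ""))


def append_to_ledger(path: str, receipt: dict) -> None:
    """Append one receipt per line (JSON Lines) to a local ledger file."""
    with open(path, "a", encoding="utf-8") as ledger:
        ledger.write(json.dumps(receipt, sort_keys=True) + "\n")


@dataclass
class MonthlyBudget:
    limit_cents: int
    alert_ratio: float = 0.8                          # assumed alert threshold
    spent_cents: dict = field(default_factory=dict)   # "YYYY-MM" -> cents

    def charge(self, month: str, amount_cents: int) -> str:
        """Record a spend. Returns "ok" or "alert"; raises if over the limit."""
        total = self.spent_cents.get(month, 0) + amount_cents
        if total > self.limit_cents:
            raise ValueError("monthly budget exceeded")
        self.spent_cents[month] = total
        return "alert" if total >= self.limit_cents * self.alert_ratio else "ok"
```

In use, an action would call `budget.charge(...)` before it spends, then `sign_receipt(...)` and `append_to_ledger(...)` afterward. Amounts are kept in integer cents to avoid floating-point drift in the running total.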

A contrast with skills and software development kits

Skills and software development kits made it easy to bolt a bot onto your app. You expose functions, you write tool descriptions, and the model calls them. That remains useful for depth within a single product. But several chronic issues held the pattern back for general computing tasks.

- Fragmented consent models made it hard for users to know what they had granted. Every plugin invented its own prompt.

- Distribution lived inside chat apps, which are not where people manage calendars, purchases, or system settings.

- Context was limited. A bot that lives in a browser tab cannot safely reach into the file system or coordinate two native applications without hacks.

OS‑level integration answers these. It does not make skills obsolete. It makes them part of a larger system with shared identity, permissions, and policy. In other words, Windows becomes the registry, and your skill becomes a well‑behaved entry. For portability across ecosystems, watch the emerging interop layer for agents.

Where this goes next: PCs and console experiences

Expect the agent surface to expand beyond laptops. Microsoft is already testing voice wake and game‑context helpers. The same permissioned model can apply to living room scenarios where an agent helps you manage downloads, parental controls, or shared purchases on devices that run Microsoft software. The path is clear even if the timeline is cautious. When the operating system can standardize consent and logging, the agent experiences that feel too risky on a console today become feasible tomorrow.

For the broader market, this will pressure rivals to move from app‑first bots to OS‑resident agents. Apple’s App Intents, Google’s App Actions, and third‑party agent platforms will need stronger operating system hooks for money, files, and cross‑app coordination, or they will feel confined to demos.

Risks you should plan for now

- Agent overreach. The quickest way to lose trust is for an agent to silently escalate from “book a table” to “modify calendar permissions” without a clearly scoped prompt. Keep scopes narrow and visible.

- Dark patterns. Purchase flows should not default to stored cards or hidden add‑ons. Require an explicit confirmation on the final price, taxes, and cancellation windows. Disable impulse‑buy flows by default in enterprise contexts.

- Prompt spoofing. If an agent reads the screen, malicious content can present itself as a system dialog. Windows must brand system prompts clearly, and developers should check origin signals against an allowlist before taking high‑risk actions.

- Supply chain abuse. Agents will rely on connectors and automation scripts. Sign everything. Publish hashes. Rotate keys. Treat your action manifest like a shipping label that customs will inspect.

- Shadow automation. Employees will script their own automations on top of your agent. Provide a supported extension point rather than forcing people to hack. That is how you avoid brittle and insecure sidecars.
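
For the supply chain item above, "publish hashes" can be as simple as checking every connector and script against a published digest before loading it. The sketch below assumes a hypothetical layout in which artifacts are held as bytes keyed by name and the publisher ships a name-to-SHA-256 map. It covers only the hash check. Signing and key rotation would sit on top of it.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def verify_artifacts(artifacts: dict[str, bytes], published: dict[str, str]) -> list[str]:
    """Return the names of artifacts that are unlisted or do not match their published hash."""
    failures = []
    for name, data in artifacts.items():
        expected = published.get(name)
        # An artifact with no published hash is treated as a failure, not skipped.
        if expected is None or not hmac.compare_digest(sha256_hex(data), expected):
            failures.append(name)
    return failures
```

An empty list means every artifact matched. Anything else should stop the action from loading.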

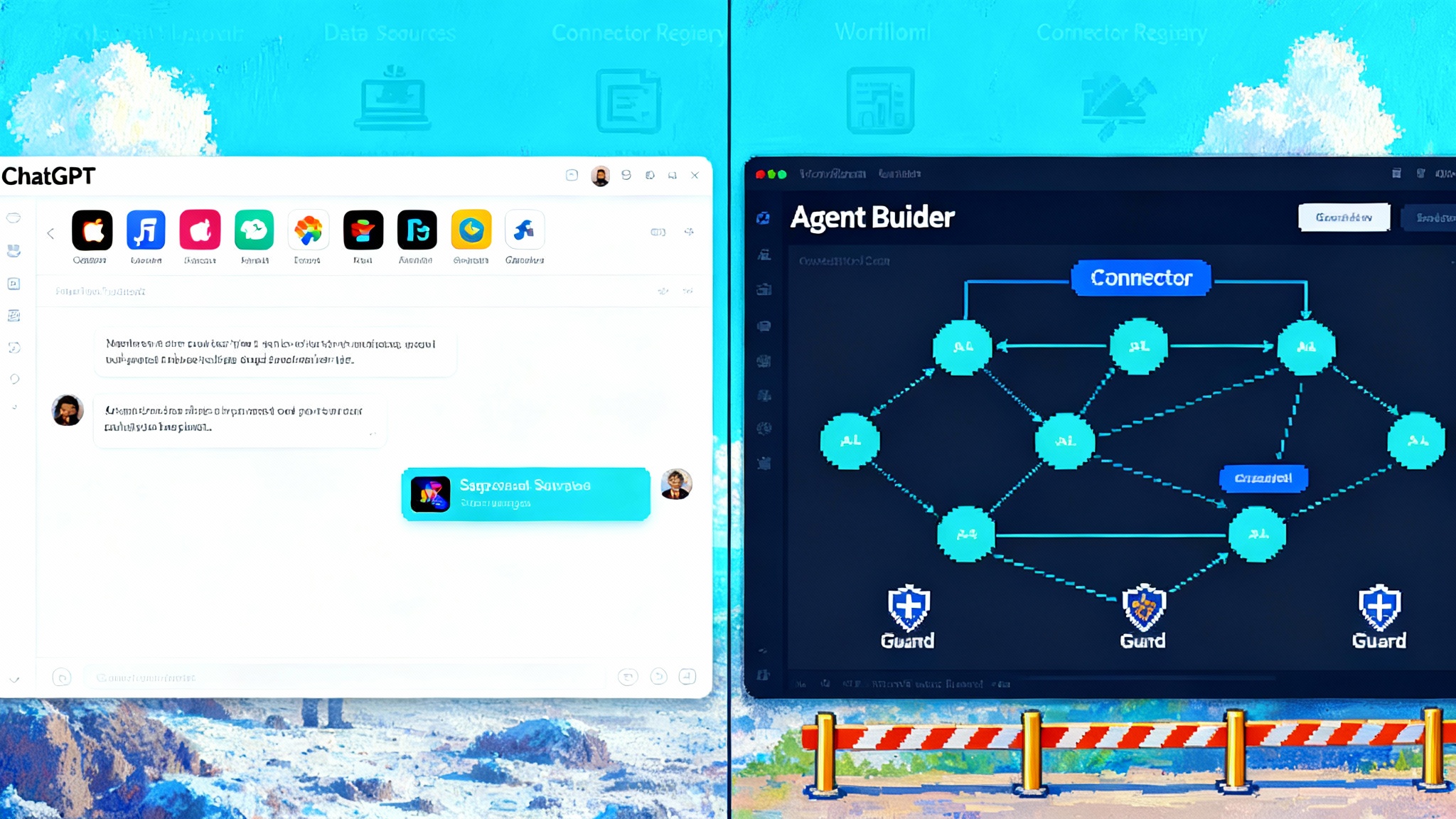

The rise of guardian agents

As soon as agents can buy and book, we will need agents that supervise other agents. Call them guardians. They do not draft emails or browse. They watch. Their job is to enforce policy, validate receipts, and flag anomalies.

- Policy guard: Checks every permission request against user or enterprise policy. If a finance agent asks to read a personal folder, the guardian blocks it.

- Payment guard: Verifies merchant identity, compares price to historical ranges, and blocks outliers unless the user types a one‑time passcode.

- Data guard: Scans outputs for sensitive data exfiltration and redacts or quarantines if necessary. Logs the event with a human‑readable reason.

- Behavior guard: Learns the normal cadence of actions and surfaces anomalies such as sudden bursts of bookings or repetitive retries.

Technically, a guardian agent is a policy engine with memory and a user interface. Practically, it is a seatbelt and a dashboard. It should ship with Windows, but independent vendors will compete to offer stronger analytics and compliance features. If you are building Copilot Actions, plan to integrate with a guardian now. Expose your receipts and permissions in a schema the guardian can parse. Publish webhooks for action start, action end, and reversal.
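
As a rough illustration of the "policy engine" framing, here is a minimal sketch of two of the guards described above. The class names, the allow-list shape, and the 25 percent price margin are assumptions made for this example. A shipping guardian would also need memory, logging, and a user interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PermissionRequest:
    agent: str       # hypothetical agent identifier, e.g. "finance-agent"
    resource: str    # e.g. "folder:finance"
    access: str      # e.g. "read"


class PolicyGuard:
    """Allows a request only if policy explicitly lists it for that agent."""

    def __init__(self, allowed: dict[str, set[tuple[str, str]]]):
        self.allowed = allowed

    def check(self, req: PermissionRequest) -> bool:
        return (req.resource, req.access) in self.allowed.get(req.agent, set())


class PaymentGuard:
    """Flags prices outside the historical range, widened by a margin."""

    def __init__(self, history: list[float], margin: float = 0.25):
        self.history = history
        self.margin = margin

    def needs_passcode(self, price: float) -> bool:
        if not self.history:
            return True  # no history yet, so always ask the user
        low = min(self.history) * (1 - self.margin)
        high = max(self.history) * (1 + self.margin)
        return not (low <= price <= high)
```

Both guards default to the cautious answer: an agent missing from the policy gets nothing, and a merchant with no price history always triggers the passcode.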

What to do next if you build software for Windows

- Pick one outcome to automate end to end. Ship it with receipts, budgets, and recovery.

- Define your permission schema and get it reviewed by a security engineer. Fewer scopes win.

- Add a simulation mode. Your sales team will use it to clear enterprise pilots.

- Integrate with a guardian policy layer. Start with payment and data guards.

- Measure what matters: success rate, time to completion, reversals, and user satisfaction. Publish a changelog that shows you are improving these, not just adding features.

- Test on Insider rings. Use flighting to stage rollouts and ensure a one‑click rollback.

- Write a plain language statement of what your agent cannot do. Make it easy to find.
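
The measurement item in the checklist above is easy to compute once each action leaves a record. The sketch below assumes a hypothetical `ActionRecord` shape. In practice the fields would come from your receipts or telemetry, and user satisfaction would need a separate survey signal that is not modeled here.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class ActionRecord:
    task_type: str       # e.g. "booking" or "purchase"
    succeeded: bool
    seconds: float       # time to completion
    prompts: int         # prompts the user had to answer
    was_reversed: bool   # whether the action was later undone


def summarize(records: list[ActionRecord]) -> dict:
    """Compute success rate per task type, median time, average prompts, and percent reversed."""
    if not records:
        raise ValueError("no records to summarize")
    by_type = defaultdict(list)
    for record in records:
        by_type[record.task_type].append(record)
    total = len(records)
    return {
        "success_rate_by_type": {
            task: sum(r.succeeded for r in group) / len(group)
            for task, group in by_type.items()
        },
        "median_seconds": median(r.seconds for r in records),
        "avg_prompts": sum(r.prompts for r in records) / total,
        "percent_reversed": 100 * sum(r.was_reversed for r in records) / total,
    }
```

Publishing the output of something like `summarize` with each release is one way to show that the numbers are moving, not just the feature list.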

The bottom line

Microsoft’s move today reframes agents as part of the computer, not a service you visit. That accelerates adoption because identity, consent, and distribution flow through the operating system where users already make trust decisions. It also raises the bar for builders. The winners will act like systems engineers, not just prompt whisperers. They will design minimal scopes, durable recovery, and receipts that make sense. They will integrate with guardians that say no when the agent should not proceed. The next wave of personal computing will not be a chorus of chat windows. It will be an operating system that can act on your behalf, with your permission, and that remembers what it did. That is a future worth building, and it starts with the careful work you do in the next release.