Alexa+ Turns Your Home Into a Consumer Agent Platform

Amazon’s late September 2025 devices event and the Alexa+ rollout marked a turn from chat to action. With AI-native Echo hardware, Prime-bundled pricing, and new agent SDKs, Alexa+ aims to make the smart home programmable and outcome-driven.

The moment Alexa stopped talking and started doing

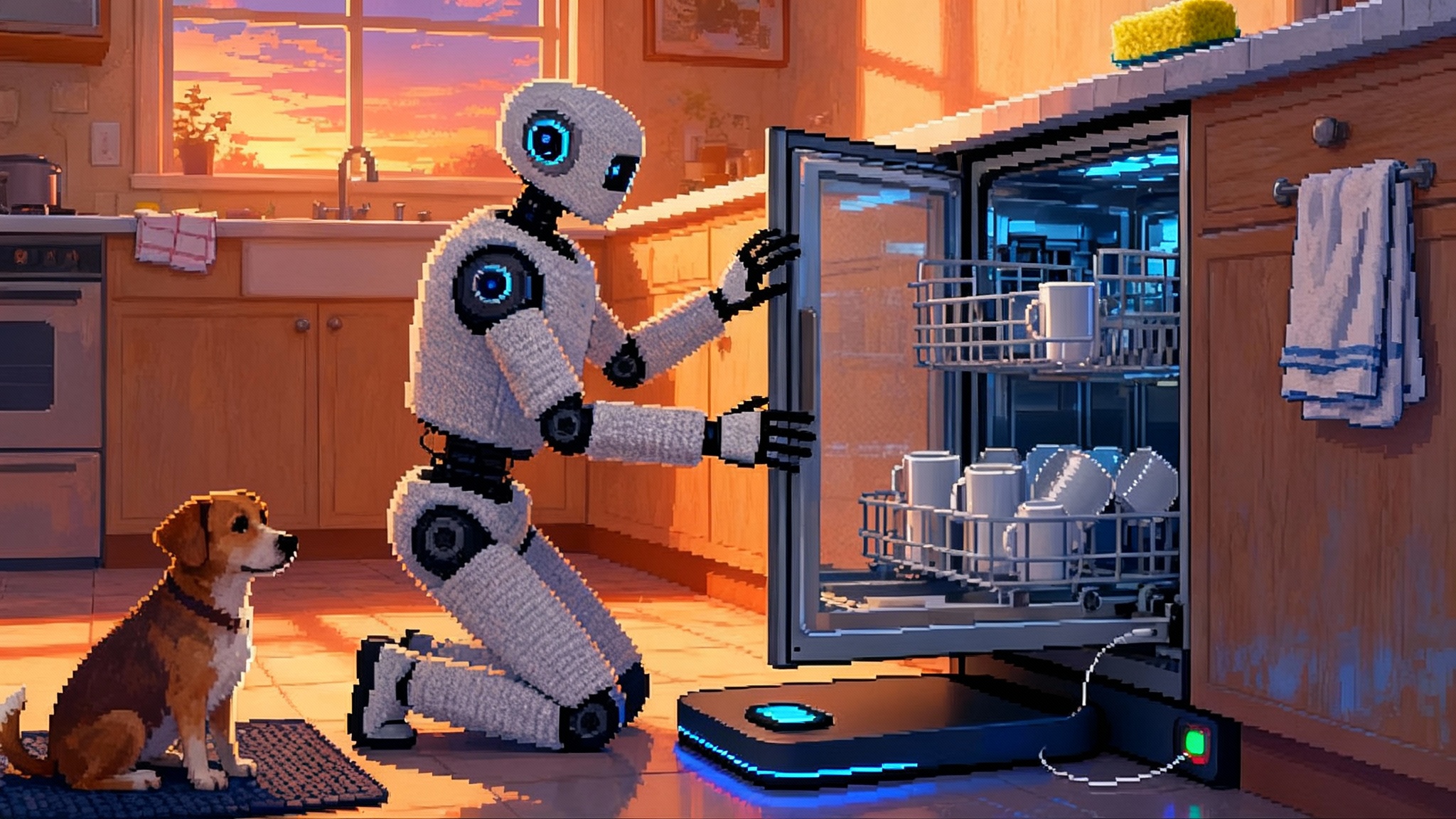

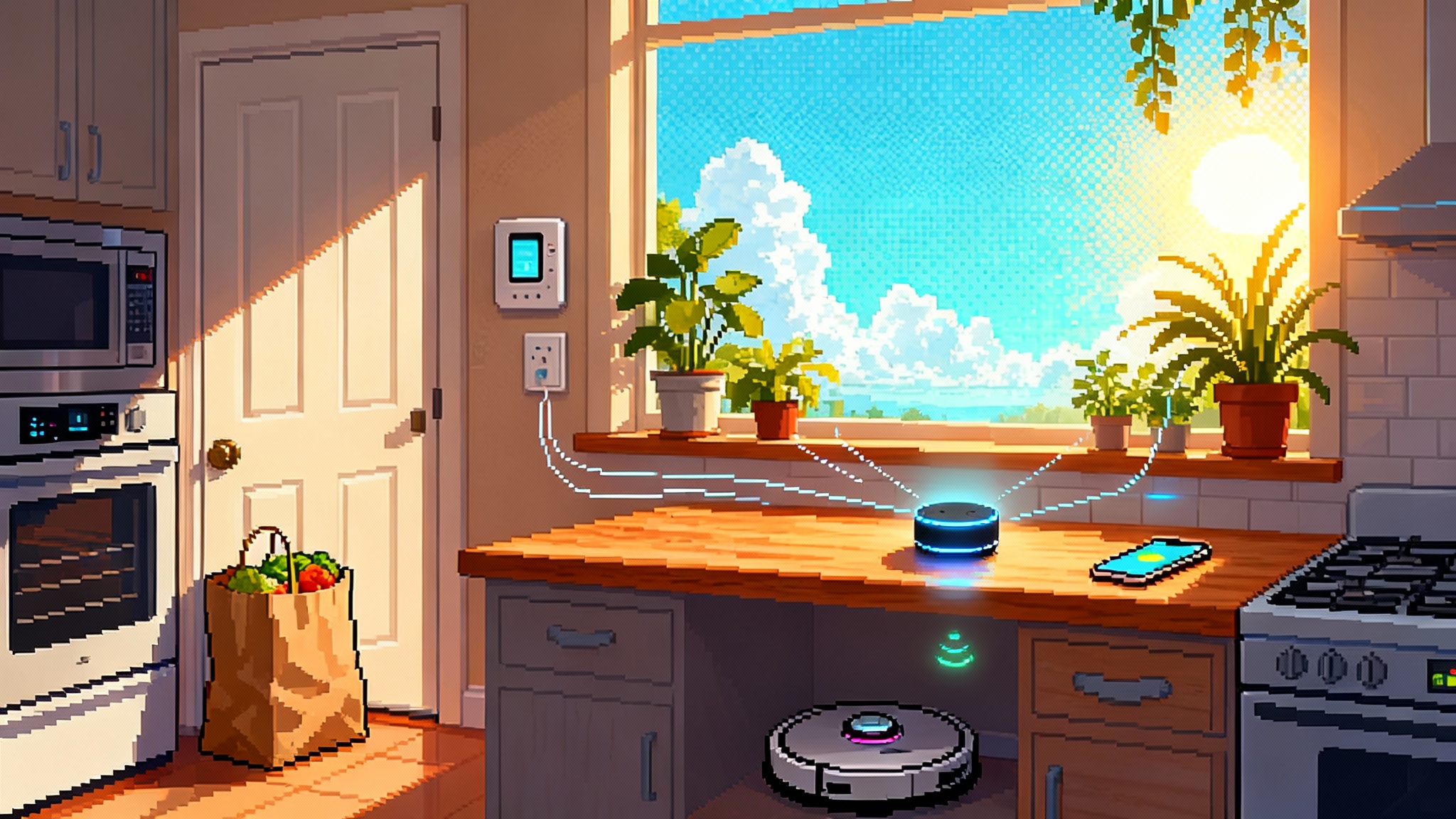

At Amazon’s late September 2025 devices event, and in the months that followed as Alexa+ rolled out to more households, something subtle but important changed. Alexa stopped auditioning as a conversational novelty and started acting like a true household operator. The company framed Alexa+ not as a better chatbot but as a consumer agent that can perceive, decide, and execute across the messy realities of a home. The difference shows up in outcomes. Instead of answering a question about dinner, Alexa+ actually places the order, stacks a coupon, chooses an arrival time that avoids the school pick-up window, and makes sure the door locks behind the courier.

That shift rides on three reinforcing pillars: AI-native Echo hardware that can run rich models locally, Prime-bundled pricing that turns ambient intelligence into a default utility, and developer tools that let third parties build agents that work with Alexa rather than compete with it. As we have seen with Windows as the agent OS, the platform turn is about action, not conversation. The result is a platform play aimed squarely at owning the home agent layer over the next 12 months.

AI-native Echo hardware is about sensing, context, and autonomy

The newest Echo devices were designed to be more than microphones with speakers. They are small edge computers with neural accelerators, better far-field arrays, and a sensor suite that understands the environment. That matters because agency requires more than language. It requires perception and a memory of state. A family’s weeknight rhythm. The way the thermostat and the oven fight each other. The teenager who always forgets to lock the back door at 10 pm.

AI-native here means three practical capabilities:

- On-device inference for the common, time-sensitive tasks. If the lights flicker or a smoke alarm chirps, the device should react without waiting for a cloud round trip.

- A household state model that fuses inputs from cameras, Wi-Fi presence, energy meters, and connected appliances into events the agent can act on.

- Secure handoffs to the cloud for planning tasks that need broader knowledge, commerce, or coordination with outside services.

The strategic effect is latency you can feel, reliability you can trust during internet hiccups, and a foundation for routines that adapt without constant user programming. If the kitchen gets unusually hot at 5:40 pm, Alexa+ can infer cooking is underway and delay the robot vacuum. If the doorbell rings while the toddler is napping, it can lower chimes and send a text update instead of a blare.
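The kitchen example can be read as a small inference rule over household state. The sketch below is only an illustration of that idea: the names `KitchenReading`, `cooking_likely`, and `next_vacuum_start`, the dinner window, the 4 °C rise, and the 90-minute delay are all assumptions made up for this example, not anything Amazon has published.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta


@dataclass(frozen=True)
class KitchenReading:
    taken_at: datetime
    temperature_c: float


# Hypothetical tuning values, chosen only for illustration.
DINNER_WINDOW = (time(16, 30), time(20, 0))
HEAT_RISE_C = 4.0
VACUUM_DELAY = timedelta(minutes=90)


def cooking_likely(reading: KitchenReading, baseline_c: float) -> bool:
    """Guess that cooking is underway: a warm kitchen during the dinner window."""
    start, end = DINNER_WINDOW
    in_window = start <= reading.taken_at.time() <= end
    return in_window and reading.temperature_c - baseline_c >= HEAT_RISE_C


def next_vacuum_start(
    scheduled: datetime, reading: KitchenReading, baseline_c: float
) -> datetime:
    """Push the vacuum run back when cooking is likely, otherwise keep the schedule."""
    if cooking_likely(reading, baseline_c):
        return max(scheduled, reading.taken_at + VACUUM_DELAY)
    return scheduled
```

A real system would presumably fuse more signals than one temperature reading, but the shape is the same: a sensed event becomes an inferred state, and the inferred state changes a plan.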

Prime turns ambient intelligence into a utility

Bundling Alexa+ capabilities with Prime reframes value for households. Instead of deciding whether to subscribe to one more app, many homes will encounter Alexa+ as a built-in capability of a membership they already use for shopping, media, and delivery.

That bundling unlocks two new behaviors:

- It normalizes the idea that the home agent can transact. If you already trust Prime for purchases, extending that trust to reordering detergent at a price threshold is a small step.

- It encourages cross-subsidy that lowers the friction for third-party device makers and services. If the agent drives incremental commerce or engagement, Amazon can justify subsidizing features like higher-frequency on-device updates or integrated returns without line-item charges to the user.

For the household, the practical impact is predictability. You can set monthly spending caps, approval rules, and notification preferences. Imagine a rule: only pantry staples under 30 dollars can be reordered automatically; anything else requires a quick confirmation on your phone. The agent follows the rule, provides receipts, and explains choices if you ask.
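To make the rule concrete, here is a minimal sketch of how such a policy might be evaluated. Everything in it is hypothetical: `SpendingPolicy`, `PurchaseRequest`, the `Decision` values, and the category name `pantry_staples` are invented for illustration and do not describe how Alexa+ stores or enforces rules.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum


class Decision(Enum):
    AUTO_APPROVE = "auto_approve"
    NEEDS_CONFIRMATION = "needs_confirmation"
    BLOCKED = "blocked"


@dataclass(frozen=True)
class PurchaseRequest:
    item: str
    category: str
    price: Decimal


@dataclass
class SpendingPolicy:
    auto_categories: frozenset   # categories eligible for automatic reorder
    auto_limit: Decimal          # price must be strictly under this amount
    monthly_cap: Decimal         # hard ceiling on total spend per month
    spent_this_month: Decimal = Decimal("0")

    def evaluate(self, request: PurchaseRequest) -> Decision:
        # The monthly cap wins over everything else.
        if self.spent_this_month + request.price > self.monthly_cap:
            return Decision.BLOCKED
        # Only listed categories under the limit skip confirmation.
        if request.category in self.auto_categories and request.price < self.auto_limit:
            return Decision.AUTO_APPROVE
        return Decision.NEEDS_CONFIRMATION
```

With `auto_categories=frozenset({"pantry_staples"})` and `auto_limit=Decimal("30")`, a 12.99 detergent reorder is approved automatically, while a 25.00 pair of headphones falls through to a phone confirmation.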

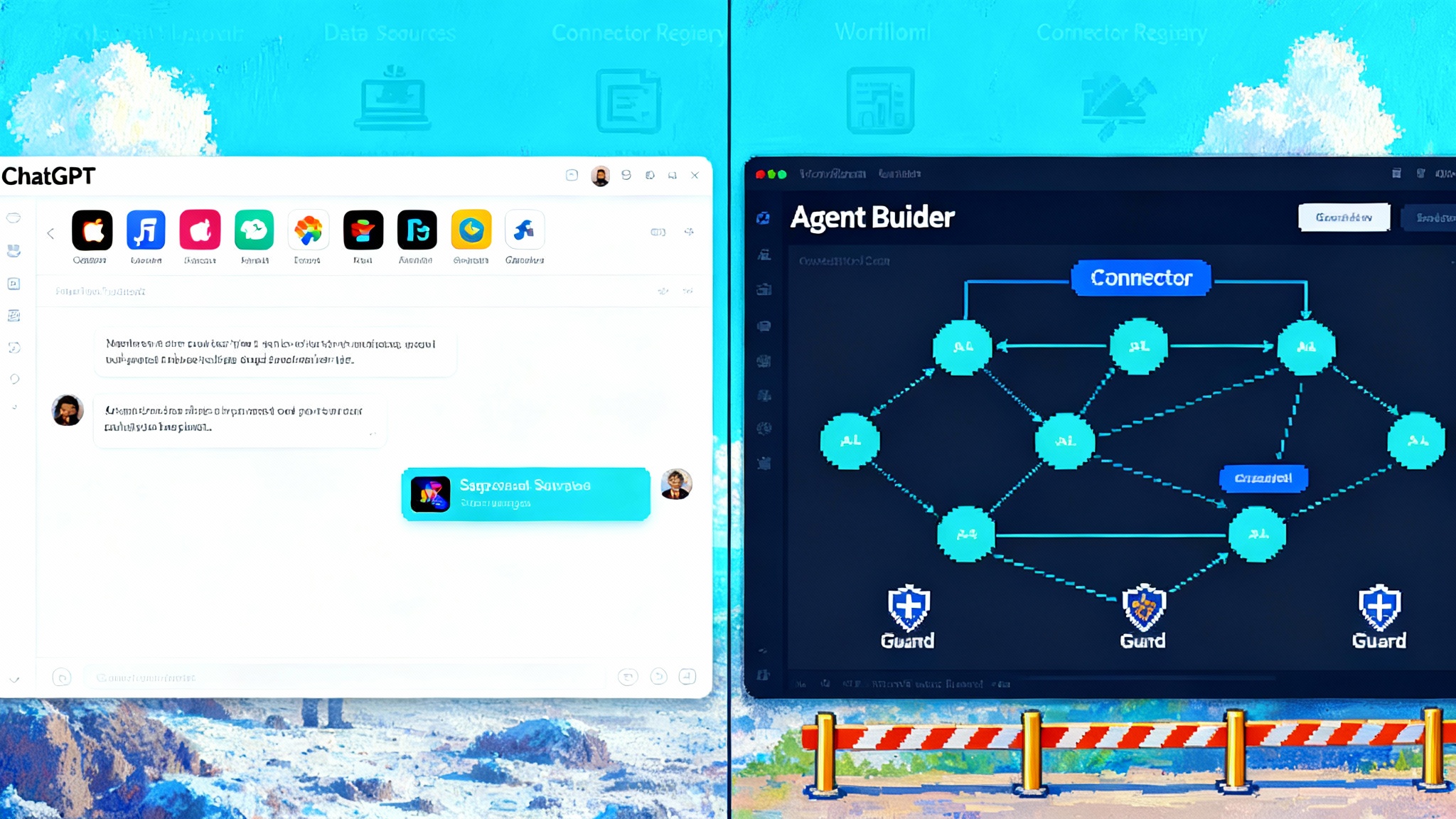

From skills to agents: what the new SDKs actually change

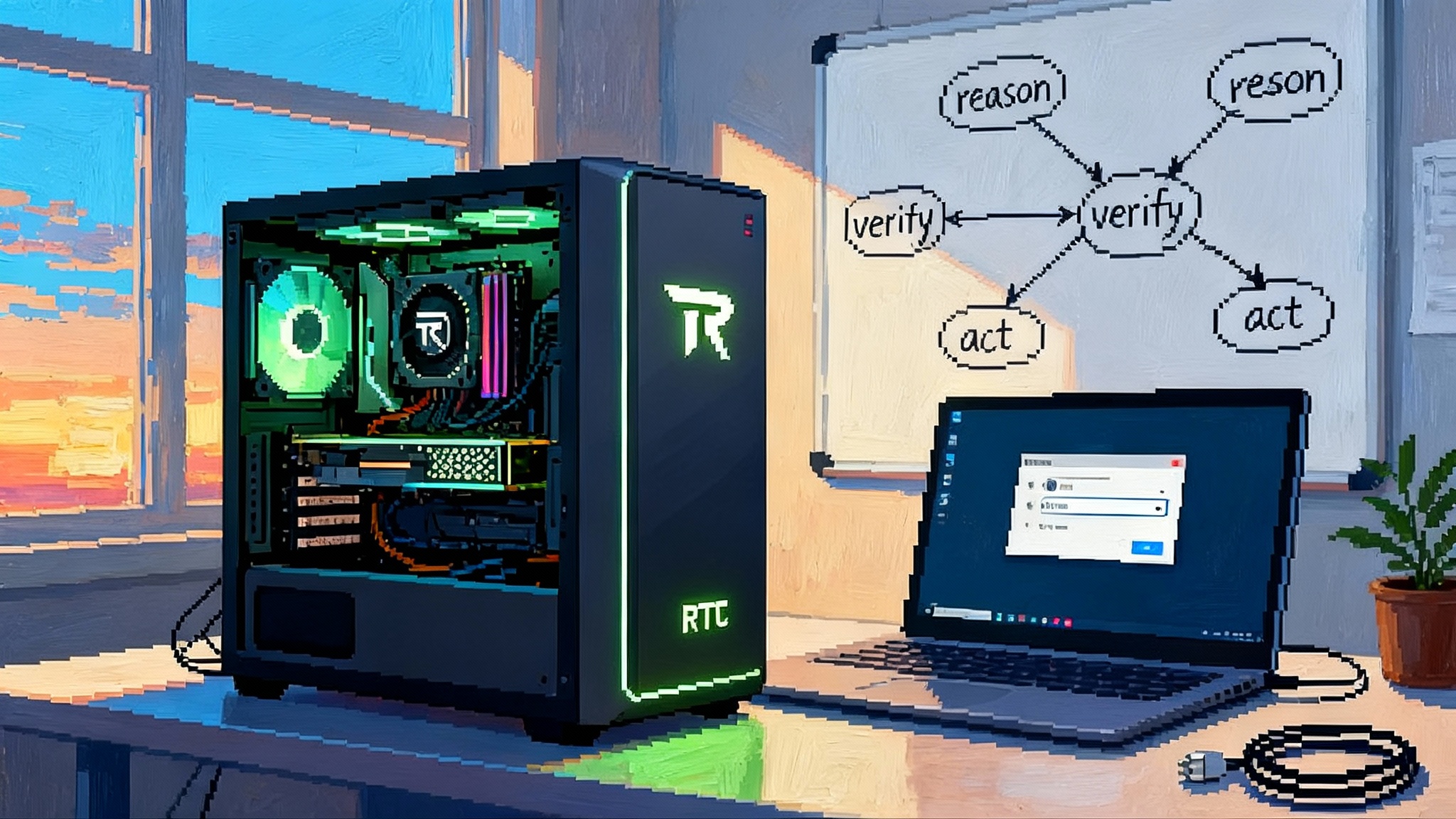

Alexa’s original developer ecosystem was built around skills that listened for an intent and returned a response. The new toolchain is about agents that collaborate on tasks and share a home context. Three changes stand out for developers:

- Capability manifests replace free-form intent forests. You declare what your agent can do, the resources it needs, and the safety boundaries. Alexa+ reconciles capabilities across installed agents and the devices in the home.

- Event-first design replaces request-response only. Your agent can subscribe to events like occupancy changes, energy price shifts, or package arrivals, then propose a plan. Alexa+ arbitrates conflicts, asks for consent when required, and logs what happened.

- Secure commerce and service primitives are built in. Identity, payment tokens, scheduled deliveries, returns, and support escalation can be invoked through standard calls with clear user prompts and receipts.
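The first two changes are easier to picture with a toy model. The sketch below is not the Alexa+ SDK: `CapabilityManifest`, `HomeHub`, `Proposal`, and the event and action names are hypothetical stand-ins, used only to show a declared capability set, an event subscription, and an arbiter that rejects undeclared actions and logs what happened.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Optional

# A handler receives (event_type, payload) and returns (action, reason) or None.
Handler = Callable[[str, dict], Optional[tuple]]


@dataclass(frozen=True)
class CapabilityManifest:
    agent_id: str
    actions: frozenset            # everything the agent is allowed to propose
    subscribes_to: frozenset      # event types the agent wants to hear about
    consent_required: frozenset = frozenset()  # actions that need user consent


@dataclass(frozen=True)
class Proposal:
    agent_id: str
    action: str
    reason: str
    needs_consent: bool


class HomeHub:
    """Toy arbiter: routes events, checks proposals against manifests, keeps a log."""

    def __init__(self) -> None:
        self._agents: list = []
        self.log: list = []

    def register(self, manifest: CapabilityManifest, handler: Handler) -> None:
        self._agents.append((manifest, handler))

    def publish(self, event_type: str, payload: dict) -> list:
        proposals = []
        for manifest, handler in self._agents:
            if event_type not in manifest.subscribes_to:
                continue
            result = handler(event_type, payload)
            if result is None:
                continue
            action, reason = result
            if action not in manifest.actions:
                # An agent may only propose what its manifest declares.
                self.log.append(f"rejected {manifest.agent_id}: {action} is not declared")
                continue
            needs_consent = action in manifest.consent_required
            proposals.append(Proposal(manifest.agent_id, action, reason, needs_consent))
            self.log.append(f"proposed {manifest.agent_id}: {action}")
        return proposals


# Example agent: delays the washer when energy gets expensive (made-up threshold).
LAUNDRY_MANIFEST = CapabilityManifest(
    agent_id="laundry-agent",
    actions=frozenset({"washer.delay_start"}),
    subscribes_to=frozenset({"energy.price_changed"}),
)


def laundry_handler(event_type: str, payload: dict) -> Optional[tuple]:
    if payload.get("cents_per_kwh", 0) > 30:
        return ("washer.delay_start", "energy price is above 30 cents per kWh")
    return None
```

Registering `LAUNDRY_MANIFEST` with `laundry_handler` and publishing an `energy.price_changed` event at 42 cents yields one proposal; publishing an event the agent never subscribed to yields none. Keeping the manifest narrow is what makes the arbiter's job predictable.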

For developers who previously built a voice skill that answered questions, this is a different sport. You are building a co-worker that shows up in a shared workplace called the home, not a kiosk that waits to be asked. The best agents will be domain experts that play nicely with others, for example an energy optimization agent that coordinates with a laundry agent and a cooking agent to avoid peak pricing while hitting your desired schedule. This mirrors the move to governed building blocks for agents.

What “from chat to action” looks like day to day

It is easiest to see the shift through concrete scenes:

- A parent says, “Set up our autumn schedule.” Alexa+ builds a weekly plan from the family calendar, suggests moving laundry runs to off-peak energy hours, orders lunch supplies for Monday delivery, and sets an alert for the one night the garage door is often left open.

- You ask, “Prep for a movie night.” The agent dims lights to a known pattern, starts the projector warmup, checks the pantry for popcorn, adds it to the next grocery order if low, and offers a parental control setting if the kids are still in the living room.

- You say, “Stop wasting hot water.” The agent reads from your water heater, watches for long shower patterns, and proposes a two-week experiment with a new temperature schedule, reporting savings at the end.

Each scene blends perception, planning, and execution. There is less conversation and more doing.

Guardrails and privacy that matter in a house, not a lab

A home agent that transacts must be safe by default and controllable by design. The important guardrails are practical and visible:

- Profile-aware permissions. Household members get roles. The main account can approve categories of purchases and device control. Kids can turn on lights but cannot buy anything. Guests can unlock the smart lock only when someone with a high-trust profile is at home.

- Interruptibility. You can veto any action in plain language, at any moment. If Alexa+ is about to run the dryer at midnight and you say stop, it stops and explains the plan it was following.

- Clear consent rituals for sensitive actions. Unlocking doors, granting camera access, or booking services triggers a brief confirmation sequence on a trusted device. These flows are short, consistent, and logged.

- Data boundaries you can see and adjust. You can choose on-device retention time for recent audio and video inference, opt in to cloud learning for specific skills, and export or delete your household context.
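The profile-aware rules above amount to a small, default-deny permission check. The sketch below is one way to express them; the `Role` names, the action strings, and the choice to treat both owners and adults as high-trust are assumptions for illustration, not a description of Alexa+ internals.

```python
from enum import Enum


class Role(Enum):
    OWNER = "owner"
    ADULT = "adult"
    CHILD = "child"
    GUEST = "guest"


# Assumption: owners and other adults count as high-trust profiles.
HIGH_TRUST = frozenset({Role.OWNER, Role.ADULT})


def is_allowed(role: Role, action: str, roles_at_home) -> bool:
    """Return True if `role` may perform `action` given who is currently home."""
    if action == "lights.on":
        return True  # everyone, including kids and guests
    if action == "purchase":
        return role in HIGH_TRUST  # kids (and, by assumption, guests) cannot buy
    if action == "lock.unlock":
        if role in HIGH_TRUST:
            return True
        if role is Role.GUEST:
            # Guests may unlock only while a high-trust profile is present.
            return any(r in HIGH_TRUST for r in roles_at_home)
        return False
    return False  # default deny for anything not listed
```

The final `return False` is the important design choice: an action nobody has thought about yet is refused rather than allowed.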

A guardrail that rarely gets discussed is transparent negotiation. If two agents disagree, for example your solar optimization agent wants to run the dishwasher at noon but the sleep agent wants quiet time for a newborn, the system should show the conflict, the rules involved, and the final decision. That lets you refine the preferences instead of guessing what went wrong.
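A sketch of what a transparent ruling could look like for the dishwasher example follows. The types `RunRequest`, `QuietWindow`, and `Ruling`, the numeric priorities, and the "defer to the end of the quiet window" policy are all hypothetical; the point is only that the output carries the conflict, the rules involved, and the final decision in a form a person can read. For simplicity it assumes the quiet window does not cross midnight.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRequest:
    agent: str
    appliance: str
    hour: int        # requested start hour, 0-23
    rule: str        # the user preference this request serves
    priority: int


@dataclass(frozen=True)
class QuietWindow:
    agent: str
    start_hour: int  # inclusive
    end_hour: int    # exclusive; assumed not to wrap past midnight
    rule: str
    priority: int


@dataclass(frozen=True)
class Ruling:
    conflict: bool
    rules: tuple
    scheduled_hour: int
    summary: str


def negotiate(request: RunRequest, quiet: QuietWindow) -> Ruling:
    """Resolve one noisy-appliance request against one quiet window."""
    overlaps = quiet.start_hour <= request.hour < quiet.end_hour
    if not overlaps:
        summary = f"No conflict: {request.appliance} runs at {request.hour}:00."
        return Ruling(False, (request.rule,), request.hour, summary)
    if quiet.priority >= request.priority:
        hour = quiet.end_hour
        summary = (
            f"{quiet.agent} outranks {request.agent}: "
            f"{request.appliance} moved from {request.hour}:00 to {hour}:00."
        )
    else:
        hour = request.hour
        summary = (
            f"{request.agent} outranks {quiet.agent}: "
            f"{request.appliance} runs at {hour}:00 during quiet time."
        )
    return Ruling(True, (request.rule, quiet.rule), hour, summary)
```

Because the ruling names both rules, a user who dislikes the outcome knows exactly which preference to change.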

The platform risk for Amazon, and the opportunity for everyone else

Turning Alexa into a platform for household agency creates a classic platform risk. If Amazon captures the default context and distribution, it can become the preferred funnel for commerce and services that pass through the home. That is attractive to Amazon and to developers who benefit from reach, but it also raises questions for rivals and regulators.

- For rivals: The defense is differentiation on hardware, privacy models, or specialized agents that deliver outstanding outcomes in narrow domains. For example, a health agent that integrates with certified medical devices and insurance plans may live comfortably alongside Alexa+ while owning a valuable slice of the home.

- For regulators: The questions will center on bundling, default placement, and data use across retail, media, and devices. The more Amazon shows clean separations, user choice, and exportable data, the smoother the runway.

Interoperability pressure will rise. Expect alignment around shared protocols similar to how the agent interop layer is emerging in other parts of the agent ecosystem.

A 90-day plan for developers

If you build software or connected devices for the home, here is a concrete playbook for the next quarter:

- Map the house to a graph. List rooms, devices, people, schedules, and constraints. Translate product features to graph nodes and edges, for example a washer node that depends on water temperature and peak energy price edges.

- Define atomic actions. Break capabilities into the smallest reliable steps. StartWash, PauseWash, DelayStartUntilOffPeak. Agents compose from these atoms.

- Publish a capability manifest. Declare what your agent can do and what it needs. Keep the scope narrow and precise so arbitration is predictable.

- Subscribe to the right events. Presence changes, energy price updates, door lock states, pantry levels. Tune carefully to avoid spammy behavior.

- Design transparent proposals. When your agent wants to act, have it share a short, readable plan. Users accept plans they understand.

- Build test households. Simulate a week of events with synthetic data. Watch for conflicts between agents and fix the handoffs.

- Instrument outcomes, not clicks. Measure kilowatt hours saved, minutes of routine tasks eliminated, on-time deliveries, and avoided false alarms. Report those metrics to users.

- Ship a rollback. Every autonomous plan needs a one-sentence undo. Make it reliable and fast.
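Several of these steps (atomic actions, test households, and rollback) fit together in a few lines. The sketch below runs against an in-memory dictionary standing in for a simulated household; `Plan`, `pause_wash`, `delay_start_until_off_peak`, and the state layout are hypothetical names for illustration. Undoing actions on real appliances would need device-specific logic, so treat the snapshot-based rollback as a test-harness pattern rather than a production design.

```python
import copy
from typing import Callable, List

Step = Callable[[dict], None]


def pause_wash(home: dict) -> None:
    """Atomic action: pause the washer."""
    home["washer"]["status"] = "paused"


def delay_start_until_off_peak(home: dict) -> None:
    """Atomic action: schedule the washer for the cheapest known hour."""
    prices = home["price_by_hour"]
    home["washer"]["start_hour"] = min(prices, key=prices.get)
    home["washer"]["status"] = "scheduled"


class Plan:
    """A named sequence of atomic actions with a snapshot-based undo."""

    def __init__(self, summary: str, steps: List[Step]) -> None:
        self.summary = summary  # the short, readable plan shown to the user
        self.steps = steps
        self._snapshot = None

    def run(self, home: dict) -> None:
        self._snapshot = copy.deepcopy(home)
        try:
            for step in self.steps:
                step(home)
        except Exception:
            # A half-applied plan is worse than no plan: restore and re-raise.
            self.rollback(home)
            raise

    def rollback(self, home: dict) -> bool:
        """Restore the state from before run(); returns False if nothing to undo."""
        if self._snapshot is None:
            return False
        home.clear()
        home.update(self._snapshot)
        self._snapshot = None
        return True
```

In a simulated week of events, a harness could run each plan, assert on the resulting state, then call `rollback` and assert that the household is back where it started.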

Follow that plan and your agent will feel like a resident expert, not a talkative guest.

How this changes the home over the next 12 months

Expect three waves between October 2025 and October 2026:

- Wave one: Autonomy for the obvious. Lights, locks, thermostats, and cameras become more dependable and less naggy. The agent takes on repetitive chores like pantry reordering and waste collection scheduling with minimal fuss.

- Wave two: Coordination across categories. Entertainment, energy, and security stop fighting. The movie night routine does not break the HVAC plan. Lawn sprinklers respect delivery windows.

- Wave three: Services inside the agent. Repairs, returns, groceries, pet care, and cleanings get orchestrated end to end with trusted providers. The agent prefers services with real-time status and guarantees because those let it promise outcomes to you.

Competitors will not sit still. Expect Google to push its own agent integrated with Android devices in the home, with a privacy model that emphasizes on-device control. Apple will likely lean on tight hardware security and a curated service layer. Samsung and major appliance makers will pursue vertical agents that excel inside their ecosystems and connect outward where it makes sense. The winner is not the one with the smartest conversation. It is the one that can take responsibility for results and show the receipts.

For households: how to get the most from Alexa+

If you want value in week one, try these steps:

- Set your household roles. Give each person clear permissions. Turn off purchase categories that you never want automated.

- Create two budget rules. One for small consumables with auto-reorder, another for larger items that always require approval.

- Pick one chore to automate end to end. Trash day reminders with missed pickup detection, or laundry scheduling based on energy prices. Watch how the agent explains itself.

- Add one safety ritual. A nightly lock check with a spoken summary. Trust grows from small, reliable rituals.

- Review the log every Sunday. Ask the agent why it made a choice you disagree with. Update the rule or the profile and see if it learns.

These steps make the agent genuinely helpful and keep you in control.

The hard problems still on the table

Three challenges will decide whether Alexa+ becomes the default home agent or just a promising upgrade:

- Conflict resolution that feels fair. As more agents arrive, arbitration will decide whether users feel respected or steamrolled. Transparent negotiation and easy appeals are essential.

- Supply chain realities. An agent that promises delivery and misses repeatedly will lose trust fast. Integrations need real-time availability and fallback options.

- Upfront configuration without overwhelm. Many households will not tune twenty settings. Defaults must be good, and the first ten interactions should teach the system your preferences without a setup marathon.

None of these is impossible. They require patient product design and discipline about what the agent should never do without asking.

The Relay verdict

Alexa+ marks a genuine turn from talk to action. The hardware can perceive and react, the pricing makes the capability ambient, and the developer stack invites specialists to build agents that cooperate rather than shout. If Amazon keeps the guardrails visible and arbitration fair, the next year will be about services and devices proving they can deliver outcomes under an agent’s direction.

The prize is not a smarter speaker. It is a home that feels like it runs itself and tells you why. That is the real platform: a place where context, trust, and commerce meet in the flow of daily life. If Alexa+ keeps executing, the question a year from now will not be whether the home has an agent. It will be whether anyone can match the cadence of one that already lives there.