Microsoft’s Unified Agent Framework Exits the Lab for Work

Microsoft has folded AutoGen and Semantic Kernel into a single, open-source Agent Framework with typed workflows, built-in observability, human approval gates, and cross-runtime interop. Here is what changed and how to ship with it this week.

The quiet merger that changes the center of gravity

On October 9, 2025, Microsoft published documentation that confirms what the rumor mill had been saying: AutoGen and Semantic Kernel now live under a single open-source roof called Microsoft Agent Framework. The company is not simply renaming libraries. It is standardizing how agents are built, observed, governed, and shipped across .NET and Python. For anyone who has tried to turn a demo into a dependable service, that is the headline. See the formal scope and first-wave docs in the Microsoft Agent Framework overview.

Why this matters now

If 2023 and 2024 were the years of agentic demos, 2025 is shaping up to be the year those demos meet enterprise change controls. The new framework brings three shifts that solve the pain points teams have been hitting for 18 months:

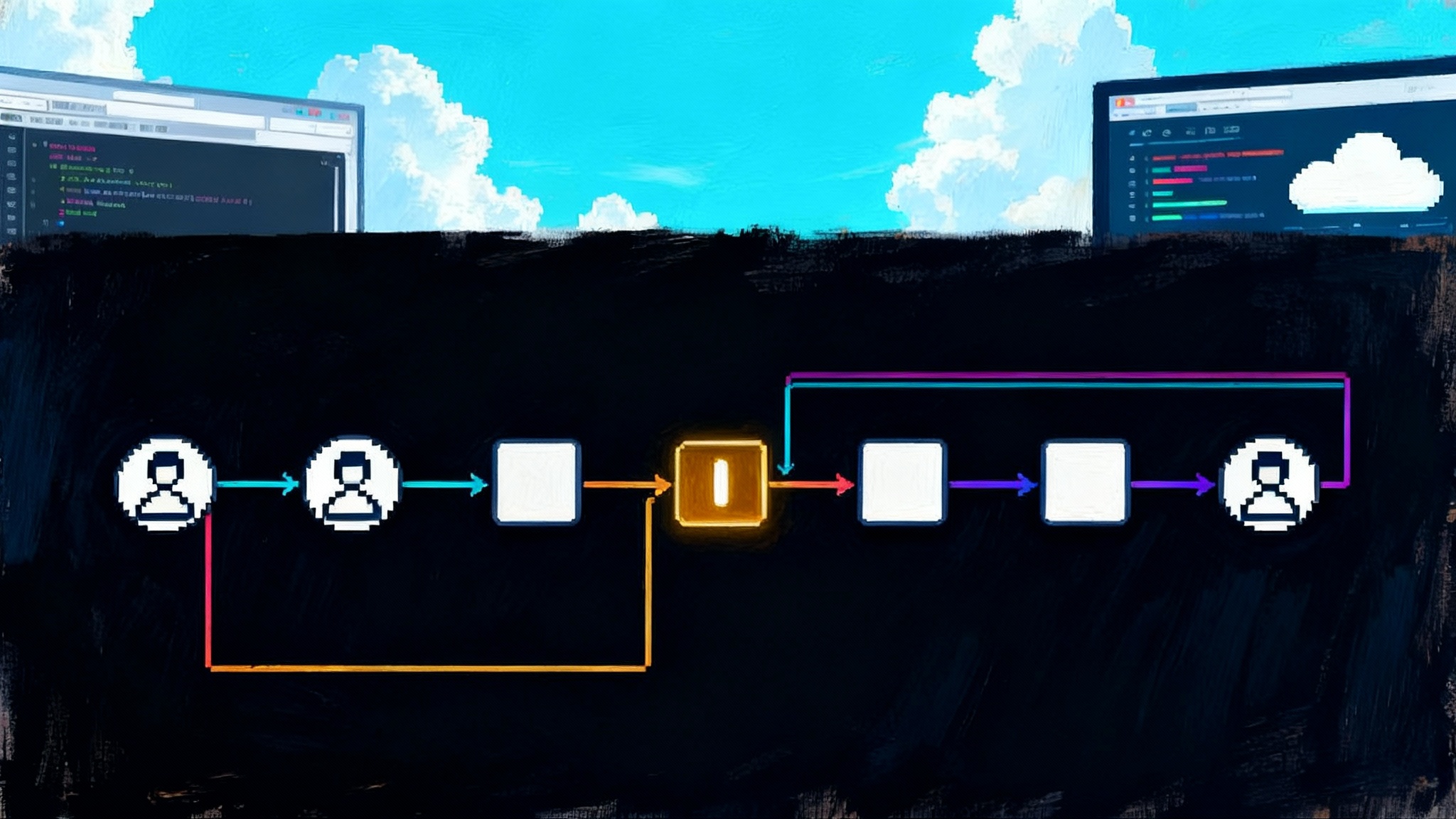

- Multi-agent as a graph, not a group chat: Wire agents and deterministic functions into a typed workflow with explicit edges, conditional routing, concurrency, and checkpointing. Instead of a room where bots talk past each other, you get a flow diagram you can reason about and test.

- Observability and approvals built in: Light up OpenTelemetry traces on day zero and insert human-approval gates where real risk lives, such as money movement, access control, or code deployment. For a governance parallel, see our look at Claude Skills and safe agent patterns.

- Interop that crosses runtimes: Model Context Protocol (MCP) for tools, Agent2Agent (A2A) for cross-system messaging, and OpenAPI-first integration for existing services. Microsoft calls out these standards in its documentation on OpenAPI, A2A, and MCP support. For background on MCP in production, read our MCP enterprise deep dive.

Each of these pieces existed somewhere before. What changed is that one vendor with reach across Windows, Azure, GitHub, and Microsoft 365 is betting on a single path and shipping tooling that respects the way enterprises already build software. For the Windows angle, see our piece on how Windows is turning into the agent OS, with the desktop as an agent surface.

What developers can do today

You do not need to wait for a conference keynote or a new private preview. With the current toolchain you can:

- Install the Agent Framework packages for Python or .NET and create a basic agent in a few lines. The agent abstraction is consistent across providers, so you can switch between Azure OpenAI, OpenAI, or other chat clients without rewiring the whole app.

- Use the Azure AI Foundry extension for Visual Studio Code to design agents in a YAML-backed editor, generate starter projects, run locally, trace behavior, and deploy with one click to Azure AI Foundry Agent Service. The extension mirrors what many teams already do with Infrastructure as Code and makes agent definitions first-class artifacts in your repo.

- Turn on observability with a single environment variable or helper call. The framework emits semantic spans, token usage, and tool invocation metrics, and it can export to Azure Monitor or any OpenTelemetry collector you already use.

- Add approval checkpoints to sensitive tools. When the agent wants to call a function you marked as requiring approval, the run pauses and returns a structured request that your client app can present to a human. Approve or reject, then resume the run. No brittle, ad hoc prompts required.

In other words, this is not a research build. The primitives you expect from production services are here in the first release.
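The provider-agnostic point is easier to see in code. The sketch below is plain Python, not the Agent Framework API: `ChatClient`, `FakeAzureClient`, `FakeOpenAIClient`, and `Agent` are hypothetical names, and the fake clients make no network calls. It only shows the design idea, which is that agent logic depends on a narrow client interface rather than on a vendor SDK.

```python
from dataclasses import dataclass
from typing import Protocol


class ChatClient(Protocol):
    """Hypothetical provider interface: messages in, reply text out."""

    def complete(self, messages: list[dict[str, str]]) -> str: ...


class FakeAzureClient:
    """Stand-in for an Azure OpenAI-backed client (no network calls)."""

    def complete(self, messages: list[dict[str, str]]) -> str:
        return "azure: " + messages[-1]["content"]


class FakeOpenAIClient:
    """Stand-in for an OpenAI-backed client (no network calls)."""

    def complete(self, messages: list[dict[str, str]]) -> str:
        return "openai: " + messages[-1]["content"]


@dataclass
class Agent:
    """Agent logic that only knows about the ChatClient protocol."""

    client: ChatClient
    instructions: str

    def run(self, user_text: str) -> str:
        messages = [
            {"role": "system", "content": self.instructions},
            {"role": "user", "content": user_text},
        ]
        return self.client.complete(messages)


# Swapping providers changes one constructor argument; the agent code is untouched.
for client in (FakeAzureClient(), FakeOpenAIClient()):
    print(Agent(client, "Be brief.").run("hello"))
```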

Graph-based multi-agent workflows in plain English

Think of the new workflow system like a highway with exits and traffic lights, not like a group text. You define:

- Executors: nodes that do work. They can be agents, pure functions, or nested sub-workflows.

- Edges: typed connections between executors. Conditions send messages down the right lane. Fan-out and fan-in patterns are explicit.

- Checkpoints: the workflow can persist its progress and resume later, which is critical for long-running tasks or when you need a human to review something before continuing.

- Request and response gates: any executor can return a structured request for input rather than a final answer.
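To make executors, conditional edges, and request gates concrete, here is a deliberately tiny graph runner in plain Python. It is a sketch of the concept, not the framework's workflow builder: `Workflow`, `Edge`, and `RequestInfo` are hypothetical names, "typed" is expressed only through type hints, and fan-out, fan-in, and concurrency are left out so the routing logic stays readable.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class RequestInfo:
    """Returned instead of a final answer when an executor needs outside input."""
    executor: str
    prompt: str
    payload: Any


@dataclass
class Edge:
    source: str
    target: str
    condition: Callable[[Any], bool] = lambda _msg: True  # unconditional by default


@dataclass
class Workflow:
    executors: dict[str, Callable[[Any], Any]]
    edges: list[Edge] = field(default_factory=list)

    def run(self, start: str, message: Any) -> Any:
        node = start
        while True:
            result = self.executors[node](message)
            if isinstance(result, RequestInfo):
                return result  # pause: the caller decides how to collect input
            targets = [e.target for e in self.edges
                       if e.source == node and e.condition(result)]
            if not targets:
                return result  # no outgoing edge matched, so this is the final output
            node, message = targets[0], result  # follow the first matching edge


wf = Workflow(
    executors={
        "classify": lambda text: {"text": text, "urgent": "outage" in text},
        "escalate": lambda m: RequestInfo("escalate", "Page on-call?", m),
        "queue": lambda m: f"queued: {m['text']}",
    },
    edges=[
        Edge("classify", "escalate", lambda m: m["urgent"]),
        Edge("classify", "queue", lambda m: not m["urgent"]),
    ],
)
print(wf.run("classify", "printer jammed"))
print(wf.run("classify", "site outage"))
```

Because routing lives in the edge list rather than in a prompt, each branch can be unit-tested by feeding the start node a message and checking where it lands.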

A concrete example: a procurement workflow for a retailer

- An intake function validates a request and emits a typed message.

- A policy agent checks the request against company rules and emits a score and rationale.

- If the spend exceeds a threshold, an approval gate returns a request payload for a manager to approve in the internal portal.

- On approval, an integration executor calls the enterprise resource planning system to create a supplier record via OpenAPI.

- A second agent writes a summary to the company’s knowledge system and notifies the requester.

Every hop, decision, and pause is observable and repeatable. You can replay a flow from a checkpoint in staging, tweak a policy, and prove that the change behaves as expected.
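The pause-and-resume part of that procurement flow can be sketched with a checkpoint that is just JSON. Everything here is hypothetical: the `APPROVAL_THRESHOLD` value, the `Checkpoint` fields, and the string returned in place of the real ERP call. The point is that a saved checkpoint can be resumed once in production or replayed many times in staging.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

APPROVAL_THRESHOLD = 10_000  # hypothetical spend limit, in dollars


@dataclass
class Checkpoint:
    """Everything needed to resume the run later, in JSON-friendly fields."""
    request_id: str
    amount: int
    next_step: str  # name of the step to run on resume


def intake(request_id: str, amount: int) -> Checkpoint:
    """Validate the request and decide whether a manager must approve it."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    step = "await_approval" if amount > APPROVAL_THRESHOLD else "create_supplier"
    return Checkpoint(request_id, amount, step)


def save(cp: Checkpoint) -> str:
    return json.dumps(asdict(cp))


def resume(saved: str, approved: Optional[bool] = None) -> str:
    cp = Checkpoint(**json.loads(saved))
    if cp.next_step == "await_approval":
        if approved is None:
            return "paused: waiting for manager"
        if not approved:
            return f"{cp.request_id}: rejected"
    # Stand-in for the ERP call that the real flow would make through an OpenAPI tool.
    return f"{cp.request_id}: supplier record created for ${cp.amount}"


small = save(intake("PR-1", 500))
big = save(intake("PR-2", 25_000))
print(resume(small))
print(resume(big))
print(resume(big, approved=True))
```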

Observability you can turn on before lunch

Most agent stacks treat telemetry as an afterthought. When a tool errors or a model hallucinates, you get a messy console log. Here, telemetry is a first-class citizen:

- Standardized spans: chat calls, tool executions, and agent invocations emit consistent traces. Set a flag to include prompts or function arguments in dev, and disable them in prod to stay compliant.

- Metrics you actually use: duration histograms for tool calls, token usage counters, and failure rates per executor. You can put budgets and alerts on these immediately.

- Workflow visibility: the framework emits workflow-level spans, so you can follow a request across agents, functions, and gates the way you trace a microservice request across pods.

If you already run OpenTelemetry and Azure Monitor, this plugs in with minimal ceremony. If you are early in that journey, the console exporter gives you a low-friction start.
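The framework emits OpenTelemetry spans itself. The stdlib-only sketch below does not use OpenTelemetry; it only illustrates the shape of the data described above: one record per call with a duration and a status, plus a switch that strips prompts and arguments outside development. `CAPTURE_SENSITIVE`, `SENSITIVE_KEYS`, and `span` are hypothetical names.

```python
import time
from contextlib import contextmanager

CAPTURE_SENSITIVE = False  # hypothetical flag: True in dev, False in prod
SENSITIVE_KEYS = {"prompt", "arguments"}
SPANS: list[dict] = []


@contextmanager
def span(name: str, **attributes):
    """Record one span with duration and status; drop sensitive attributes unless enabled."""
    if not CAPTURE_SENSITIVE:
        attributes = {k: v for k, v in attributes.items() if k not in SENSITIVE_KEYS}
    start = time.perf_counter()
    status = "ok"
    try:
        yield attributes
    except Exception:
        status = "error"
        raise
    finally:
        SPANS.append({
            "name": name,
            "attributes": attributes,
            "status": status,
            "duration_ms": (time.perf_counter() - start) * 1000,
        })


with span("tool.lookup_invoice", prompt="find invoice 42", tokens=37):
    pass  # the tool call would run here

print(SPANS[-1]["attributes"])
```

In a real deployment you would configure the OpenTelemetry SDK and an exporter instead; the redaction decision is the part worth agreeing on with security early.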

Human approval gates where it counts

The framework treats human-in-the-loop patterns as architecture, not a prompt trick. You mark a function as requiring approval. When the agent reaches that function, the run returns an approval request with the function name, arguments, and any context the approver needs. Your app decides how to present that to a user. A yes or no becomes a structured response the agent consumes to continue or change course.

That design gives security and compliance teams what they want: an explicit control point, with an audit trail, that sits in the execution plan rather than inside a paragraph of model instructions.
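A minimal sketch of that control point, assuming a decorator that marks a tool and a runner that returns a structured request instead of executing it. `requires_approval`, `ApprovalRequest`, `call_tool`, `AUDIT_LOG`, and `release_payment` are hypothetical names, not the framework's API; the framework has its own way of marking tools and resuming runs.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class ApprovalRequest:
    function: str
    arguments: dict[str, Any]
    reason: str


AUDIT_LOG: list[dict] = []  # every human decision lands here


def requires_approval(reason: str):
    """Hypothetical decorator: mark a tool so the runner pauses before calling it."""
    def wrap(fn: Callable) -> Callable:
        fn.approval_reason = reason
        return fn
    return wrap


@requires_approval("moves money")
def release_payment(invoice_id: str, amount: float) -> str:
    return f"paid {amount:.2f} on {invoice_id}"


def call_tool(fn: Callable, arguments: dict[str, Any], approved: Optional[bool] = None):
    reason = getattr(fn, "approval_reason", None)
    if reason is None:
        return fn(**arguments)  # ungated tool: run immediately
    if approved is None:
        return ApprovalRequest(fn.__name__, arguments, reason)  # pause for a human
    AUDIT_LOG.append({"function": fn.__name__, "arguments": arguments, "approved": approved})
    if not approved:
        return f"{fn.__name__} rejected by approver"
    return fn(**arguments)


args = {"invoice_id": "INV-7", "amount": 120.5}
request = call_tool(release_payment, args)
print(request)
print(call_tool(release_payment, request.arguments, approved=True))
```

The gate lives in code that a reviewer can read and a test can exercise, which is the property the paragraph above attributes to the framework's design.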

Interoperability that crosses the boundary

Three pieces make interop believable here:

- Model Context Protocol connects your agent to remote tools without bespoke glue. For why MCP matters across vendors, see our MCP enterprise deep dive.

- Agent2Agent (A2A) brings remote agents into your app with a single abstraction. You can point at a compliant agent endpoint, resolve its agent card, and treat it like a local component in your flow. The doc set covers discovery by well-known URL and direct configuration for tighter integrations.

- OpenAPI-first integrations let you turn existing service contracts into callable tools the agent understands. This is important in brownfield enterprises where systems of record already have well-documented Swagger or OpenAPI specs. The framework’s primitives map neatly to those contracts, which means fewer one-off adapters.

Put together, this is how you get portable agents. They can call tools via MCP, talk to other agents via A2A, and call your services via OpenAPI, without forcing every team to adopt a single language or runtime.
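To show why OpenAPI contracts map well to tools, the sketch below walks the standard `paths` object of an OpenAPI document and produces one tool descriptor per operation. The descriptor shape and the `erp_spec` document are hypothetical, and request bodies and schemas are ignored for brevity; the framework's own OpenAPI integration does this with far more fidelity.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def tools_from_openapi(spec: dict) -> list[dict]:
    """Turn each OpenAPI operation into a tool name, description, and parameter list."""
    tools = []
    for path, operations in spec.get("paths", {}).items():
        for method, op in operations.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters" or "summary"
            tools.append({
                "name": op.get("operationId", f"{method}_{path}"),
                "description": op.get("summary", ""),
                "method": method.upper(),
                "path": path,
                "parameters": [p["name"] for p in op.get("parameters", [])],
            })
    return tools


# Hypothetical ERP contract, trimmed to two operations.
erp_spec = {
    "openapi": "3.0.3",
    "paths": {
        "/suppliers": {
            "post": {"operationId": "createSupplier", "summary": "Create a supplier record"},
        },
        "/suppliers/{id}": {
            "get": {
                "operationId": "getSupplier",
                "summary": "Fetch one supplier",
                "parameters": [{"name": "id", "in": "path", "required": True}],
            },
        },
    },
}

for tool in tools_from_openapi(erp_spec):
    print(tool["name"], tool["method"], tool["path"], tool["parameters"])
```

The `operationId` and `summary` fields that teams already maintain become the tool name and description the model sees, which is why well-documented specs pay off here.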

A pragmatic migration path

You do not need a flag day. Pick the path that fits where you are.

Start from Semantic Kernel

- Replace the Kernel-centric agent setup with the new ChatClient plus Agent pattern. In .NET, create a chat client from Azure OpenAI or OpenAI, then call CreateAIAgent with instructions and tools. In Python, the shape is similar.

- Register tools directly. You no longer need to wrap every function in a plugin before an agent can use it. Keep your function signatures simple and typed.

- Let the agent create threads for you. The framework abstracts differences between providers that have hosted conversation state and those that expect you to pass history.

- Move orchestration to workflows when your single agent grows tentacles. Start with a simple sequential workflow, then split into concurrent and conditional flows as your use case demands.
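Why keep signatures simple and typed? Because a tool description can be derived from them directly. The framework does its own introspection; this stdlib sketch only illustrates the general idea. `tool_schema`, `JSON_TYPES`, and `get_order_status` are hypothetical, and the mapping deliberately covers only four primitive types, so a parameter typed as something richer fails loudly with a `KeyError`.

```python
import inspect
from typing import get_type_hints

JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_schema(fn) -> dict:
    """Build a JSON-Schema-style tool description from a typed Python function."""
    hints = get_type_hints(fn)
    props, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        props[name] = {"type": JSON_TYPES[hints[name]]}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default means the model must supply it
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": props, "required": required},
    }


def get_order_status(order_id: str, include_history: bool = False) -> str:
    """Look up the current status of an order."""
    return "shipped"


print(tool_schema(get_order_status))
```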

Start from AutoGen

- Swap Teams and event broadcast patterns for typed workflows. Think data flow, not chat room. Nodes are agents or pure functions, edges carry typed messages.

- Expect multi-turn by default. The new ChatAgent keeps going until it can produce a final answer or hits your tool iteration cap.

- Port tools function by function. The basic function tool pattern maps well. Add approval gates where a human should be in control and let the request and response mechanism handle the pause and resume.

- Keep experiments in a lab folder. If you used AutoGen’s research patterns like group debate or reflection, the Agent Framework includes orchestration patterns you can adopt without giving up durability.
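The "multi-turn until a final answer or the iteration cap" behavior is a simple loop. This sketch uses a scripted fake model in place of a real one; `run_agent`, `scripted_model`, and `MAX_TOOL_ITERATIONS` are hypothetical names, and the `{"tool": ...}` / `{"answer": ...}` step format is an assumption made for illustration, not the framework's message format.

```python
from typing import Callable

MAX_TOOL_ITERATIONS = 5  # hypothetical cap


def run_agent(model: Callable[[list[str]], dict],
              tools: dict[str, Callable[[], str]],
              user_text: str,
              max_iterations: int = MAX_TOOL_ITERATIONS) -> str:
    """Call the model, run any tool it requests, and stop on an answer or at the cap."""
    history = [f"user: {user_text}"]
    for _ in range(max_iterations):
        step = model(history)
        if "answer" in step:
            return step["answer"]
        result = tools[step["tool"]]()
        history.append(f"tool {step['tool']}: {result}")
    return "stopped: tool iteration cap reached"


def scripted_model(history: list[str]) -> dict:
    """Fake model: asks for the weather tool once, then answers from its output."""
    if not any(line.startswith("tool ") for line in history):
        return {"tool": "weather"}
    return {"answer": f"Forecast says {history[-1].split(': ', 1)[1]}"}


print(run_agent(scripted_model, {"weather": lambda: "sunny"}, "Weather?"))
```

The cap is the safety valve when porting AutoGen conversations: a model that keeps requesting tools ends the run with a clear status instead of looping forever.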

Test plan for either path

- Build a thin canary. Start with one agent and one sensitive tool behind an approval gate. Enable observability, ship to staging, then promote behind a feature flag.

- Add a workflow for the first multi-step process your operations team already tracks in a runbook. Use checkpointing to make recovery easy.

- Decide what to log. In dev, include prompts and arguments. In prod, keep only what you need for incident response. Confirm this with security.

- Prove rollback. Keep agent definitions in your repo. Tag a release. Roll forward and back a couple of times to build muscle memory.

The VS Code and Azure AI Foundry path to production

Many teams will start and stay inside Visual Studio Code. The Azure AI Foundry extension puts agent design and deployment in the same place you write code.

- Design view plus YAML: the extension opens a designer and a schema-backed YAML file side by side. You get structure and documentation without hiding the source of truth.

- Local runs with traces: run an agent thread locally, see tool calls and outputs in order, and export spans to your collector.

- One-click deploy: when you are ready to test on infrastructure that looks like production, publish to Azure AI Foundry Agent Service. Use the same identity and network controls you apply to other services.

- Pull requests as change control: because the agent is defined as code, you can set up branch policies, approvals, and checks. That paves the way to the GitOps cadence below.

What this convergence unlocks next

Portable agents across clouds

Portable does not mean chasing the lowest price every day. It means your customer service agent can call an inventory planning agent run by a partner through A2A, and both can call shared tools exposed over MCP. If either side changes cloud, the contract holds.

Governed automation by default

Approvals, traceability, and typed flows push you toward safer defaults. You can make critical actions require a person, log the reason, and keep evidence in one place. That satisfies audit requests without a week of log spelunking.

A GitOps style cadence for agent deployments

Agent behavior changes are production changes. Treat them that way.

- Keep agent definitions and workflow graphs in the repo. Use schemas so editors can validate.

- Package infrastructure and runtime config together. Use templates to deploy the same topology per environment.

- Run policy-as-code checks on prompts, tools, and risk flags. For example, block merges that would remove approval on sensitive functions.

- Roll out with rings or canaries. Send 5 percent of traffic to the new agent, watch success and error rates in your telemetry, then promote.

- Attach runbooks to alerts. When a workflow has been stuck at a gate for 10 minutes or a tool crosses an error budget, page the right owner with context from the trace.
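The policy-as-code check from that list can be small. The sketch below compares the agent definition on the main branch with the one in a pull request and reports any sensitive tool that lacks an approval gate. The definitions are shown as dicts, as if already loaded from the repo's YAML, and the `tools`, `name`, and `requires_approval` keys and the `SENSITIVE_TOOLS` list are hypothetical.

```python
SENSITIVE_TOOLS = {"release_payment", "grant_access", "deploy_code"}  # hypothetical list


def approval_violations(old: dict, new: dict) -> list[str]:
    """Return reasons to block a merge that leaves a sensitive tool without approval."""
    old_gated = {t["name"] for t in old["tools"] if t.get("requires_approval")}
    problems = []
    for tool in new["tools"]:
        name = tool["name"]
        if name in SENSITIVE_TOOLS and not tool.get("requires_approval"):
            if name in old_gated:
                problems.append(f"{name}: approval gate removed")
            else:
                problems.append(f"{name}: sensitive tool added without approval")
    return problems


main = {"tools": [{"name": "release_payment", "requires_approval": True}]}
branch = {"tools": [
    {"name": "release_payment", "requires_approval": False},
    {"name": "summarize_invoice"},
]}
print(approval_violations(main, branch))
```

In CI, a non-empty result would fail the check, so the merge is blocked before the change reaches a canary ring.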

What to build this week

- A revenue-adjacent agent with low risk and clear business value. Examples: invoice summarization with a human approval gate for payment release, or a ticket triage workflow that assigns priority and drafts a response for a support agent to review.

- An observability baseline. Capture token usage and latency per model and per tool. Decide the thresholds that would stop a rollout.

- A cross-team handshake. Publish one tool over Model Context Protocol and one simple Agent2Agent (A2A) endpoint so another team can consume them. This will shake out the identity and networking questions before your big project depends on it.
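"Decide the thresholds that would stop a rollout" can be written down as a function your pipeline calls on canary telemetry. The limits below are placeholders to agree on with the service owner, not recommendations, and the function name and inputs are hypothetical. The 95th-percentile latency comes from `statistics.quantiles` with `n=20`, whose last cut point is the 95th percentile; it needs at least two latency samples.

```python
from statistics import quantiles

# Hypothetical thresholds: agree on real values with the service owner.
MAX_P95_LATENCY_MS = 2_000
MAX_ERROR_RATE = 0.02
MAX_TOKENS_PER_CALL = 4_000


def should_halt_rollout(latencies_ms: list[float], errors: int, calls: int,
                        total_tokens: int) -> list[str]:
    """Compare canary telemetry with thresholds; any returned reason stops the rollout."""
    reasons = []
    p95 = quantiles(latencies_ms, n=20)[-1]  # 19 cut points; the last is the 95th percentile
    if p95 > MAX_P95_LATENCY_MS:
        reasons.append(f"p95 latency {p95:.0f} ms > {MAX_P95_LATENCY_MS} ms")
    if errors / calls > MAX_ERROR_RATE:
        reasons.append(f"error rate {errors / calls:.1%} > {MAX_ERROR_RATE:.0%}")
    if total_tokens / calls > MAX_TOKENS_PER_CALL:
        reasons.append(f"{total_tokens / calls:.0f} tokens/call > {MAX_TOKENS_PER_CALL}")
    return reasons


healthy = list(range(100, 1100, 10))  # 100 sample latencies in milliseconds
print(should_halt_rollout(healthy, errors=0, calls=100, total_tokens=100_000))
print(should_halt_rollout(healthy, errors=5, calls=100, total_tokens=100_000))
```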

The bottom line

By unifying AutoGen and Semantic Kernel into a single Agent Framework, Microsoft shifted agent development from a grab bag of patterns to an opinionated, enterprise-ready stack. Graph-based workflows make behavior explicit, observability and human approvals add the guardrails real systems require, and interoperability standards make it feasible to connect across teams and clouds. With Visual Studio Code tooling and Azure AI Foundry as the runtime, teams can move from prototype to production without switching tools or abandoning their governance model. The best signal is simple. You can start small this week, and you do not need a rewrite later. That is what maturity looks like in software, and agents just crossed that line.