Agent Bricks Makes the Lakehouse the Agent Runtime

Databricks and OpenAI are collapsing the agent stack into the lakehouse. Here is how lakehouse‑native governance, evals, and action gating turn demos into production systems while reducing risk and lock‑in.

The breakthrough: agents move into the lakehouse

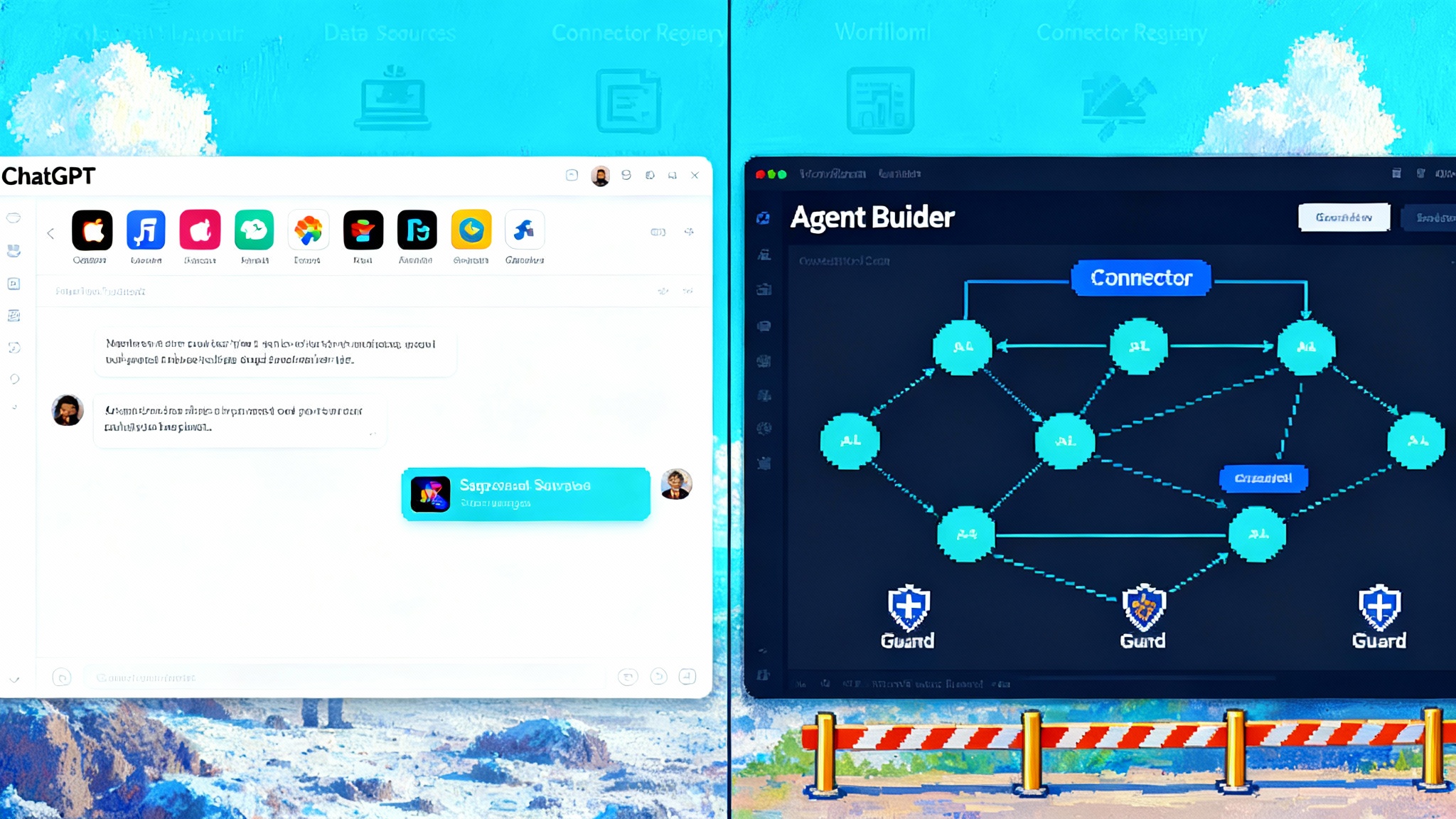

Databricks just put a stake in the ground for how enterprises will run agents. First came the June debut of Agent Bricks, an on‑platform way to build and tune agents directly on your data. Then in late September, Databricks and OpenAI announced a multi‑year partnership that brings OpenAI’s latest frontier models natively into the Databricks Data Intelligence Platform, with a headline commitment of one hundred million dollars in model spend and access. That announcement matters because it collapses a long and risky data hop into a single governed runtime inside the lakehouse. (Source: Databricks and OpenAI announce partnership.)

Days later, Databricks introduced a cybersecurity offering that leans on the same principle. Security teams can build agents where telemetry already lives and let those agents act through governed tools. Read together, the message is clear: stop exporting sensitive data to distant agents. Bring trusted models to governed data and let the lakehouse operate as the agent runtime.

Think of it as moving from trucking crude oil to distant refineries to building the refinery right on the oil field. The result is less spillage, fewer handoffs, and far easier oversight.

Why the winning pattern is data to models, not data to apps

Most early agent stacks were app‑centric. You stood up an agent service, attached a vector store, wired a handful of tools, and pushed data across the network through connectors, caches, and custom gateways. That worked for demos and pilots, but it creates four structural problems at enterprise scale:

- Governance scatter: Access policies, lineage, data masking, and audits end up split between the data platform, the agent platform, and every downstream tool.

- Security blind spots: Payloads and tool calls traverse systems with uneven logging, which complicates incident response and compliance.

- Cost creep: Duplicated storage, duplicated embeddings, and duplicated monitoring inflate cloud bills and introduce drift between copies.

- Operational drag: Every new use case needs the same plumbing again, and changes to data classification or retention policies must be propagated across stacks by hand.

A lakehouse‑native approach flips the ratio. Agents run where the data and its controls already live. The fabric that governs analytics and machine learning also governs prompts, knowledge bases, tool catalogs, and actions. That continuity is what most organizations actually need to turn a showcase bot into a durable system that survives audits and on‑call rotations. This shift also complements an interop layer for enterprise agents that keeps tools and models swappable without breaking governance.

The lakehouse as agent runtime: what changes under the hood

Agent Bricks sits on top of Databricks’ lakehouse primitives and Mosaic AI components. The key ingredients for a safe, auditable agent runtime are already in place:

- Unity Catalog as the control plane: One catalog for data, features, vector indexes, model endpoints, and even registered tools. Attribute‑based and role‑based policies live here, so an agent’s retrieval and action scope is the same scope your analytics teams already trust.

- Mosaic AI Gateway as the traffic cop: Centralized permissions, rate limits, payload logging, and guardrails for any model endpoint your agents call, whether it is OpenAI, Gemini, Claude, or an open model you host. With payload logging enabled, Gateway writes requests and responses to Delta tables in the catalog, so you can answer who used what, when, with what input, and what came back.

- Tools governed like data: With a tools catalog and managed connectors, tools are not ad hoc code anymore. They are discoverable assets with owners, schemas, approval workflows, and usage logs. When an agent calls a tool, the call inherits the user or service identity’s policies.

- MLflow and Agent Evaluation as the quality layer: Evals, LLM‑as‑judge scoring, human review apps, and traces land in first‑class tables. That lets you compare versions, catch regressions, and attach promotion criteria to deployment.
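To make the gateway's role concrete, here is a minimal pure‑Python sketch of what "centralized permissions, rate limits, and payload logging" means mechanically. It is not the Mosaic AI Gateway API: `GatewaySketch`, its fields, and the in‑memory `log` list (standing in for Delta tables) are hypothetical, and the rate limit is simplified to a per‑identity call cap with no time window.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Optional


@dataclass
class GatewaySketch:
    """Hypothetical stand-in for a governed model gateway (not the Mosaic AI Gateway API)."""

    grants: dict[str, set[str]]                 # identity -> endpoints it may call
    max_calls_per_identity: int                 # simplified rate limit (no time window)
    endpoints: dict[str, Callable[[str], str]]  # endpoint name -> model function
    log: list[dict] = field(default_factory=list)  # payload log; Delta tables in the real platform
    _calls: dict[str, int] = field(default_factory=dict, init=False, repr=False)

    def invoke(self, identity: str, endpoint: str, prompt: str) -> str:
        """Check permissions, enforce the rate limit, call the model, and log the payload."""
        if endpoint not in self.grants.get(identity, set()):
            self._record(identity, endpoint, prompt, None, "denied")
            raise PermissionError(f"{identity} may not call {endpoint}")
        if self._calls.get(identity, 0) >= self.max_calls_per_identity:
            self._record(identity, endpoint, prompt, None, "rate_limited")
            raise RuntimeError(f"rate limit reached for {identity}")
        self._calls[identity] = self._calls.get(identity, 0) + 1
        response = self.endpoints[endpoint](prompt)
        self._record(identity, endpoint, prompt, response, "ok")
        return response

    def _record(self, identity: str, endpoint: str, prompt: str,
                response: Optional[str], status: str) -> None:
        # One row per attempt, including denied and throttled ones.
        self.log.append({
            "ts": datetime.now(timezone.utc).isoformat(),
            "identity": identity,
            "endpoint": endpoint,
            "request": prompt,
            "response": response,
            "status": status,
        })
```

The design choice worth copying is that denied and rate‑limited attempts are logged too, so the log answers who tried what, not only who succeeded.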

The practical upshot is that you can point frontier models at sensitive data without throwing away the safety net your compliance, data, and security teams already rely on. Instead of inventing a new governance story for agents, you extend the one you have.

The OpenAI tie‑up removes a long‑standing roadblock

The OpenAI partnership removes a painful operational fork. Enterprises no longer have to choose between model quality and governance. They can adopt OpenAI’s frontier models for reasoning, code, and math directly from within Databricks, with usage flowing through the same policies, budgets, and logs as any other endpoint. That is the heart of the bring‑models‑to‑data pattern. It reduces legal and security review cycles, shortens deployment lead times, and limits the surface area for data exfiltration.

Crucially, it also reduces accidental lock‑in. If your governance runs at the catalog and gateway layers, you can route traffic across multiple providers without rewriting your controls. You gain choice while keeping a single policy engine. For perspective on composability across providers, see how governed building blocks for agents make tool behavior auditable and reusable.
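The "one policy engine, many providers" idea can be sketched in a few lines of Python. Everything here is hypothetical (`ROUTE_POLICY`, `make_router`, and the provider names are illustrative, not a Databricks API): the policy is written against logical routes rather than vendors, so switching or adding a provider means editing the route configuration while the access rule stays untouched.

```python
from typing import Callable

# Hypothetical policy: which identities may use which logical route. It never names a provider.
ROUTE_POLICY: dict[str, set[str]] = {"reasoning": {"analyst", "support-agent"}}


def policy_allows(identity: str, route: str) -> bool:
    """Provider-agnostic check: the same rule applies whichever model serves the route."""
    return identity in ROUTE_POLICY.get(route, set())


def make_router(route_config: dict[str, list[str]],
                providers: dict[str, Callable[[str], str]]) -> Callable[[str, str, str], str]:
    """route_config maps a logical route to an ordered provider list, primary first."""

    def route(identity: str, route_name: str, prompt: str) -> str:
        if not policy_allows(identity, route_name):
            raise PermissionError(f"{identity} may not use route {route_name}")
        last_error = None
        for provider in route_config[route_name]:
            try:
                return providers[provider](prompt)
            except Exception as exc:  # provider failed: try the next one in the list
                last_error = exc
        raise RuntimeError(f"all providers failed for route {route_name}") from last_error

    return route
```

Because the permission check runs before any provider is chosen, a fallback from one vendor to another never widens who can reach the route.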

Evals are the difference between a demo and production

Most agent failures are not about the model. They are about unmeasured behavior. The same prompt produces different answers on Monday and Friday because the context changed and no one noticed. Or a useful tool gets promoted without tests, and no one learns until production that its error handling never fires.

Agent Bricks bakes in evaluation from the start. When you define an agent, the platform can generate task‑specific benchmarks and synthetic data that looks like your domain. It runs sweeps across prompts, models, and retrieval settings, then scores performance with LLM judges and human criteria. The result is a versioned track record for each agent, tied to the data and tools it touches.
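The core loop of such a sweep is simple to express. The sketch below is an assumption‑laden illustration, not how Agent Bricks is implemented: `sweep` scores every (prompt template, model) pair on an eval set, and `exact_match_judge` is a deliberately crude stand‑in for the LLM judges and human criteria described above.

```python
from itertools import product
from statistics import mean
from typing import Callable


def exact_match_judge(expected: str, actual: str) -> float:
    """Stand-in scorer; a real setup would use an LLM judge or human review."""
    return 1.0 if expected.strip().lower() == actual.strip().lower() else 0.0


def sweep(prompts: dict[str, str],
          models: dict[str, Callable[[str], str]],
          eval_set: list[tuple[str, str]],
          judge: Callable[[str, str], float] = exact_match_judge) -> list[dict]:
    """Score every (prompt template, model) pair on the eval set, best first."""
    results = []
    for (p_name, template), (m_name, model) in product(prompts.items(), models.items()):
        # Each template must contain a {question} placeholder.
        scores = [judge(expected, model(template.format(question=q)))
                  for q, expected in eval_set]
        results.append({"prompt": p_name, "model": m_name, "score": mean(scores)})
    return sorted(results, key=lambda r: r["score"], reverse=True)
```

Storing each result row alongside the prompt and model version is what turns a one‑off sweep into the versioned track record the text describes.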

That history matters downstream. It lets you:

- Compare an open‑source model you fine‑tune against a proprietary model on accuracy and cost per task.

- Set guardrails based on measured failure modes instead of hunches.

- Attach deployment to a promotion rule, such as no regression in precision and latency under a fixed threshold, both measured on a holdout set.
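A promotion rule like the one in the last bullet can be written as a small, testable gate. This is a hypothetical sketch: `EvalSummary`, `may_promote`, the choice of p95 latency, and the `tolerance` parameter are illustrative assumptions, not part of MLflow or Agent Bricks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvalSummary:
    precision: float        # precision measured on the holdout set
    p95_latency_ms: float   # 95th-percentile latency measured on the holdout set


def may_promote(candidate: EvalSummary, baseline: EvalSummary,
                latency_budget_ms: float, tolerance: float = 0.0) -> tuple[bool, list[str]]:
    """Hypothetical gate: no precision regression and latency under a fixed threshold."""
    reasons = []
    if candidate.precision < baseline.precision - tolerance:
        reasons.append(f"precision regressed: {candidate.precision:.3f} < {baseline.precision:.3f}")
    if candidate.p95_latency_ms > latency_budget_ms:
        reasons.append(f"p95 latency {candidate.p95_latency_ms:.0f} ms exceeds {latency_budget_ms:.0f} ms")
    return (not reasons, reasons)
```

Returning the reasons, not just a boolean, gives the deployment pipeline something concrete to attach to a blocked release.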

Put simply, evals are your quality system. They turn agents from artisanal experiments into assets you can own and improve.

Action gating turns tools from risk to advantage

Tool use is where value and risk meet. Agents that only answer questions are useful, but agents that act are transformative. The trick is to give agents reach without giving them rope to tangle your operations.

In a lakehouse‑native runtime, you do that with action gating. Treat tools as governed assets with policies and budget limits. Log every call with inputs, outputs, and identity. Require step‑up approval for high‑risk actions. Constrain data retrieval by catalog policy so a support agent can read a customer’s tickets but not the full ledger. Route all calls through a gateway that can redact, filter, and block. Because everything is registered and logged in one place, you can answer tough questions after the fact and enforce least privilege up front.
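The gating logic itself is small once tools carry a risk tier. Below is a minimal sketch under stated assumptions: `Risk`, `Tool`, `ActionGate`, and the `policy_check` hook are hypothetical names, the approval queue is just a list, and budget limits and catalog‑scoped retrieval are left out for brevity.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Risk(Enum):
    LOW = "low"        # e.g. ticket lookup: execute automatically
    MEDIUM = "medium"  # e.g. ticket update: execute only if a policy check passes
    HIGH = "high"      # e.g. refund: hold for step-up human approval


@dataclass
class Tool:
    name: str
    risk: Risk
    run: Callable[..., object]


@dataclass
class ActionGate:
    policy_check: Callable[[str, Tool, dict], bool]  # hypothetical policy hook
    audit_log: list[dict] = field(default_factory=list)
    pending_approval: list[dict] = field(default_factory=list)

    def call(self, identity: str, tool: Tool, **args) -> object:
        """Route a tool call by risk tier and record every decision in the audit log."""
        entry = {"identity": identity, "tool": tool.name, "args": args}
        if tool.risk is Risk.HIGH:
            # Never executed here: queued for a human to approve out of band.
            self.pending_approval.append(entry)
            self.audit_log.append({**entry, "status": "awaiting_approval"})
            return None
        if tool.risk is Risk.MEDIUM and not self.policy_check(identity, tool, args):
            self.audit_log.append({**entry, "status": "blocked"})
            raise PermissionError(f"policy blocked {tool.name} for {identity}")
        result = tool.run(**args)
        self.audit_log.append({**entry, "status": "executed", "output": result})
        return result
```

Every branch writes to the audit log before returning or raising, which is what lets you answer the tough after‑the‑fact questions the paragraph mentions.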

This is a sharp contrast with app‑centric stacks where tool endpoints live outside your data plane and inherit none of its controls by default. Re‑creating those controls in a separate agent gateway is possible, but it duplicates effort and rarely reaches parity with the catalog you already maintain.

Comparing architectures: data‑centric vs app‑centric

Here is a practical way to see the difference.

Identity and access

- Lakehouse‑native: One policy engine in the catalog covers data, features, vectors, models, and tools. Changes roll out once.

- App‑centric: Multiple policy engines. Changes propagate by hand. Drift and exceptions creep in.

Observability

- Lakehouse‑native: Prompts, responses, tool calls, costs, and evals are Delta tables. You can query, alert, and visualize like any dataset.

- App‑centric: Logs live in separate stores. Joining them to data lineage is manual and brittle.

Performance and cost

- Lakehouse‑native: Minimal data movement. You index once, cache once, and eliminate duplicate stores.

- App‑centric: Repeated extraction, repeated indexing, and higher egress and storage costs.

Change control

- Lakehouse‑native: Agents and tools are versioned assets with owners and pull‑request style promotion.

- App‑centric: Many ad hoc services, variable change processes, and harder incident rollback.
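The observability point is easiest to appreciate with a query. The sketch below uses an in‑memory SQLite database purely as a stand‑in for Delta tables; the `agent_traces` table, its columns, and the sample rows are hypothetical. On the lakehouse you would run equivalent SQL against your actual trace or inference tables.

```python
import sqlite3

# In-memory SQLite stands in for Delta tables; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE agent_traces (
        agent TEXT, identity TEXT, tool TEXT, status TEXT, cost_usd REAL
    )
""")
conn.executemany(
    "INSERT INTO agent_traces VALUES (?, ?, ?, ?, ?)",
    [
        ("support-bot", "alice", "ticket_lookup", "ok", 0.002),
        ("support-bot", "alice", "ticket_update", "error", 0.003),
        ("support-bot", "bob", "ticket_lookup", "ok", 0.002),
        ("triage-bot", "soc-svc", "enrich_identity", "ok", 0.010),
    ],
)

# Ordinary SQL over agent activity: call count, error count, and spend per agent.
rows = conn.execute("""
    SELECT agent,
           COUNT(*) AS calls,
           SUM(status = 'error') AS errors,
           ROUND(SUM(cost_usd), 4) AS spend_usd
    FROM agent_traces
    GROUP BY agent
    ORDER BY agent
""").fetchall()

for row in rows:
    print(row)
```

Because traces are just rows, the same query can feed an alert or a dashboard, and can be joined to lineage or access tables without a bespoke log pipeline.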

What Agent Bricks actually does

Agent Bricks is not just a fancy UI. It automates the unglamorous steps that make agents dependable: generating realistic synthetic data, building task‑aware benchmarks, running optimization sweeps across prompts and models, attaching evals, and packaging the best candidate for deployment with lineage intact. That is why it accelerates teams that already know their use case and their data. (Source: Agent Bricks launch press release.)

If you are a platform leader, the more important point is architectural. The lakehouse becomes the runtime. Your governance, security, and quality fabric extends naturally from analytics to agents without needing a secondary platform. For a broader view of agent platforms, see our take on the OpenAI agent platform moment.

What about cybersecurity?

Security teams have felt the pain of scattered data and tools for years. Moving agent logic to where telemetry already lives is a force multiplier. You can let a triage agent read alerts, enrich them with identity data, summarize probable root causes, and then propose a response. Action gating can require human approval for containment actions. Because every query and step is already logged in your data plane, forensics and audit are first class.

Minimal lock‑in by design

There is a healthy fear of new lock‑in with agents. Bringing models to data helps in three concrete ways:

- Open model and provider mix: With a gateway and tools registered in the catalog, you can route to OpenAI, Gemini, Claude, or your own hosted models without rewriting policies.

- Portable artifacts: Prompts, eval datasets, traces, and tool specs are tables and files in your lakehouse. If you need to replicate or migrate, you copy data and metadata you already control.

- Standard workflows: MLflow for versioning and evaluation means your traces and metrics are not trapped in a closed agent service.

A 90‑day playbook to pilot production agents in the lakehouse

Here is a concrete plan that teams can follow to reach a guarded pilot in roughly 90 days (weeks 1 to 12), then productionize and extend it over the following four weeks, with minimal lock‑in.

Weeks 1 to 2: Frame one surgical use case

- Pick a high‑leverage, bounded task with measurable outcomes. Examples: information extraction from vendor contracts, a knowledge assistant for support agents, automated call summarization, or security alert triage.

- Write the success contract. Define accuracy targets, allowable latency, and guardrails. Specify what the agent may read and which tools it may call.
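One way to keep the success contract honest is to make it machine‑checkable from day one. This is a hypothetical sketch: `SuccessContract`, `check_run`, and the example table and tool names are illustrative, and in practice the accuracy and latency inputs would come from your eval harness while the tables and tools read would come from traces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessContract:
    """Hypothetical success contract for one bounded use case."""

    use_case: str
    min_accuracy: float               # e.g. 0.90 on the eval set
    max_latency_ms: int               # allowable end-to-end latency
    readable_tables: frozenset[str]   # what the agent may read
    allowed_tools: frozenset[str]     # which tools it may call

    def check_run(self, accuracy: float, latency_ms: int,
                  tables_read: set[str], tools_called: set[str]) -> list[str]:
        """Return the contract violations for one evaluated run (empty means pass)."""
        violations = []
        if accuracy < self.min_accuracy:
            violations.append(f"accuracy {accuracy:.2f} below {self.min_accuracy:.2f}")
        if latency_ms > self.max_latency_ms:
            violations.append(f"latency {latency_ms} ms above {self.max_latency_ms} ms")
        if extra := tables_read - self.readable_tables:
            violations.append(f"read unapproved tables: {sorted(extra)}")
        if extra := tools_called - self.allowed_tools:
            violations.append(f"called unapproved tools: {sorted(extra)}")
        return violations
```

Writing the read and tool scopes into the same object as the quality targets keeps "what good looks like" and "what is allowed" reviewed together.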

Weeks 3 to 4: Wire governance first

- Register all input tables, document stores, vector indexes, and tools in the catalog. Attach tags for sensitivity and retention. Map identities for users and service principals.

- Define budget policies and rate limits in the gateway for model usage. Turn on payload logging and decide redaction rules for sensitive fields.
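Redaction rules are worth prototyping and testing before payload logging goes live. The sketch below is a hypothetical, regex‑based example that masks email addresses and card‑like digit runs before a payload is written to a log table; `REDACTION_RULES` and `redact` are illustrative names, and real rules should be driven by your catalog's sensitivity tags rather than a hard‑coded list.

```python
import re

# Hypothetical redaction rules; real rules should follow your data classification tags.
REDACTION_RULES = [
    ("EMAIL", re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")),
    # 13 to 16 digits, optionally separated by single spaces or dashes; ends on a digit.
    ("CARD", re.compile(r"\b\d(?:[ -]?\d){12,15}\b")),
]


def redact(text: str) -> str:
    """Replace sensitive substrings before a payload is written to the log table."""
    for label, pattern in REDACTION_RULES:
        text = pattern.sub(f"[REDACTED_{label}]", text)
    return text


print(redact("Contact jane.doe@example.com, card 4111 1111 1111 1111."))
```

Pattern‑based rules like these over‑ and under‑match (a long ticket ID would also be masked), which is one reason to keep a test suite for redaction alongside the rules themselves.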

Weeks 5 to 6: Build the baseline agent

- Use Agent Bricks to stand up the initial agent with your data. Start with retrieval and one or two tools. Let the platform generate task‑specific evals and synthetic examples.

- Instrument traces and create a dashboard of quality and cost per task. Include failure mode categories such as hallucination, tool error, and retrieval miss.
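A quality‑and‑cost dashboard mostly needs a few rollups over per‑task traces. The following is a minimal sketch with hypothetical names (`TaskTrace`, `summarize`); the failure‑mode labels mirror the categories above. It also computes cost per successful task, the metric week 7 and 8 comparisons rely on.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class TaskTrace:
    task_id: str
    succeeded: bool
    cost_usd: float
    failure_mode: Optional[str] = None  # e.g. "hallucination", "tool_error", "retrieval_miss"


def summarize(traces: list[TaskTrace]) -> dict:
    """Roll traces up into the numbers a quality-and-cost dashboard would plot."""
    successes = sum(t.succeeded for t in traces)
    total_cost = sum(t.cost_usd for t in traces)
    return {
        "tasks": len(traces),
        "success_rate": successes / len(traces) if traces else 0.0,
        # Failed attempts still cost money, so divide all spend by successes only.
        "cost_per_successful_task": total_cost / successes if successes else float("inf"),
        "failure_modes": Counter(t.failure_mode for t in traces if not t.succeeded),
    }
```

Dividing total spend by successful tasks, rather than by all tasks, keeps a cheap but unreliable model from looking better than it is.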

Weeks 7 to 8: Optimize and compare models

- Run sweeps over prompts and candidate models, including at least one proprietary model and one open model. Compare quality and cost per successful task using your eval harness.

- Introduce lightweight tool call retries and timeouts. Add a basic fallback plan for tool failures.
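Retries, timeouts, and a fallback can be combined in one small wrapper. This is a hypothetical sketch (`call_with_retries` and its parameters are illustrative) using only the standard library. One caveat: Python threads cannot be killed, so a call that times out keeps running in the background; a production version would also need cancellation on the tool side.

```python
import concurrent.futures as cf
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# Shared worker pool; a timed-out call keeps running in its thread until it finishes.
_POOL = cf.ThreadPoolExecutor(max_workers=4)


def call_with_retries(tool: Callable[[], T], *, fallback: Callable[[], T],
                      attempts: int = 3, timeout_s: float = 2.0,
                      backoff_s: float = 0.1) -> T:
    """Run a tool call with a per-attempt timeout and a few retries, then fall back."""
    for attempt in range(attempts):
        future = _POOL.submit(tool)
        try:
            return future.result(timeout=timeout_s)
        except Exception:  # covers timeouts and errors raised by the tool itself
            if attempt < attempts - 1:
                time.sleep(backoff_s * (2 ** attempt))  # exponential backoff between attempts
    return fallback()
```

Keeping the fallback as a plain callable (for example, "return a canned answer and open a ticket") matches the advice to keep fallback paths simple.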

Weeks 9 to 10: Add action gating and approvals

- Classify tools by risk. For low‑risk tools such as ticket lookup, allow automatic execution. For medium‑risk tools such as ticket updates, require policy checks. For high‑risk tools such as refunds or account suspension, require step‑up approval.

- Validate that gateway logging captures inputs and outputs for all agent invocations and tool calls. Test your redaction logic.

Weeks 11 to 12: Run a guarded pilot

- Pick a small group of end users. Route all agent activity through the gateway and stream traces and evals to your dashboards.

- Hold daily triage on failures. Fix the top two failure modes each week. Enforce a no‑regression rule on your metrics before any update.

Weeks 13 to 14: Productionize and document

- Lock the agent behind a production endpoint with budgets. Publish the runbook that explains permissions, escalation paths, and rollback.

- Hand auditors the evidence pack: lineage graph, access policies, eval results, trace samples, and change history.

Weeks 15 to 16: Extend safely

- Add one tool or one data source at a time, not both. Update policies and evals accordingly. Keep the fallback paths simple.

This playbook does not assume exclusive use of any one model provider. It assumes that the lakehouse is the agent runtime, and that your catalog and gateway are the source of truth. That is how you minimize lock‑in while moving fast.

The quiet advantage: platform compounding returns

Enterprises have spent years aligning on a single data platform to reduce cost and chaos. Putting agents on that same platform is not just about convenience. It is about compounding returns. Every improvement to data quality lifts your agents. Every new eval makes future agents safer by default. Every policy change reaches all workloads. That is how you go from one good demo to a portfolio of production agents without multiplying risk.

Closing thought

The early era of agents treated your lakehouse as a fuel depot for faraway software. The next era makes the lakehouse the factory floor. Frontier models arrive at the loading dock. Unity Catalog is the badge reader. The gateway is the safety officer. Evals are your quality line. Build there, measure there, and act there. The organizations that do will ship agents that are not only clever, but also controlled, costed, and ready for the real world.