GPT‑OSS Makes Local Agents Real: Build Yours Today

OpenAI’s GPT‑OSS open‑weight family brings private, on‑device AI out of the lab. This guide shows a practical local agent stack, what it changes for enterprises, and two hands‑on builds you can run now.

The switch just flipped

OpenAI’s GPT‑OSS arrives as an open‑weight model family that you can actually run on your own machines. Not an abstract research checkpoint, not a demo behind a cloud account, but downloadable weights that turn a laptop or an edge box into a capable teammate. If the last few years taught developers to prototype with hosted models first, GPT‑OSS does the opposite. It moves the center of gravity to your device. Privacy first. Latency measured in milliseconds. Costs that you can forecast with a spreadsheet and a receipt from your hardware vendor.

Open weights are different from open source. Source code is text you compile. Model weights are learned numbers you load. When a company releases open weights, you can execute the model locally, inspect behavior, and fine‑tune on your data. You do not need to send prompts or documents to someone else’s server. That simple shift unlocks new classes of software where the product promise is private by default rather than private by policy.

The moment feels like the day laptops got real graphics chips. Everything we did before still works, but the way we build changes. In this piece we explain why, show you how to assemble a local agent stack that works today, map what this unlocks for enterprises, and walk through two hands‑on builds you can run on a single machine.

Why GPT‑OSS is an inflection point

A lot of model families have shipped as open weights. What changes with GPT‑OSS is not only quality but also the surrounding ecosystem. You can pull it with Ollama or load it in LM Studio without writing custom glue, wire tools through Model Context Protocol servers, and let agents talk to each other through simple agent‑to‑agent channels. The emphasis is practicality. You should be able to go from nothing to a working local assistant before your coffee cools.

Three factors make this moment different:

- A viable default stack on day one. The pieces exist and interoperate well enough that a single developer can build a useful local agent without assembling a research lab. This mirrors the wider shift toward modular architectures for real agents.

- Open weights with everyday licenses. Both GPT‑OSS models ship under the permissive Apache 2.0 license, so commercial and internal enterprise use is allowed and procurement can say yes without a legal thesis. Review the accompanying usage policy, but the posture is clear: you are meant to run it.

- A credible path to hybrid. Some tasks will stay in the cloud for scale or peak accuracy. Others will run on edge hardware for privacy or latency. GPT‑OSS comes in sizes that let you pick a tier for each job, in line with the emerging view of orchestration as the new AI battleground.

The local agent stack you can assemble today

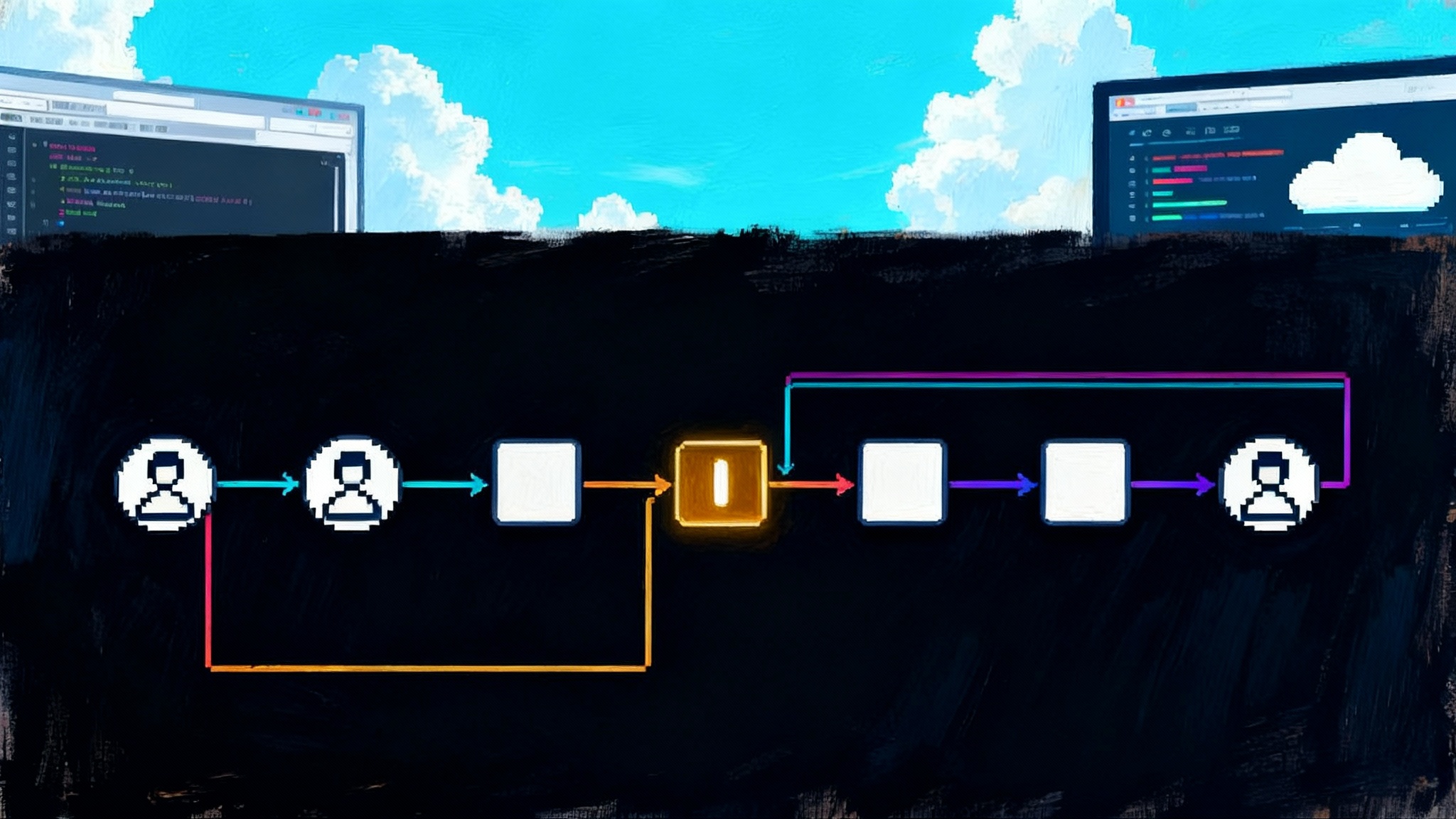

Think of a local agent as a small company in a box. There is a brain, there are tools, there is memory, and there is a way to talk to other agents and to you. Here is a stack that works and that you can mix and match to your taste.

- Model runtime: Use the Ollama model runtime or LM Studio. Both let you pull models, manage quantized variants, and run inference on CPU or GPU with minimal friction.

- Model weights: GPT‑OSS, which ships in two sizes. Use gpt-oss-20b on laptops and desktops with around 16 gigabytes of memory, and gpt-oss-120b on workstations and edge servers with a large GPU. Quantized versions lower the memory footprint with a small accuracy tax.

- Tool layer: The Model Context Protocol (MCP) is a standard way to expose external tools to a model through well-defined servers. Many tools already speak MCP, and writing your own server is straightforward.

- Interop layer: Agent to Agent (A2A). With A2A, a model can hand a task to another specialist agent through a message channel. You can do this with lightweight processes on the same machine or over your local network.

- Memory and retrieval: A local vector store and a simple relational store. Start with SQLite for structure and a small vector database for recall. You do not need a cluster for personal agents.

- User interface: Terminal, a tray app, a sidebar in your editor, or a chat window in the browser. Local agents shine when they are one keyboard shortcut away.

The point is not to chase shiny components. The point is to keep data on device and to pick tools that can run without a network connection. You can add the cloud later for heavy work.
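To size hardware for the model weights layer, a common rule of thumb is that the weights alone need about parameter count × bits per weight ÷ 8 bytes, before the context cache, runtime buffers, and operating system take their share. The sketch below encodes that arithmetic. The 20 percent overhead factor is an assumption rather than a published figure, and GPT‑OSS mixes precisions across its layers, so treat the result as a first estimate only.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    # params * bits / 8 gives bytes; the 1e9 factors cancel when params are in billions.
    return params_billion * bits_per_weight / 8


def fits_in_memory(params_billion: float, bits_per_weight: float,
                   memory_gb: float, overhead_fraction: float = 0.2) -> bool:
    """Rough check that weights plus an assumed overhead fit in the given memory.

    overhead_fraction is a hypothetical allowance for the KV cache, runtime
    buffers, and everything else on the machine; tune it from measurements.
    """
    needed = weight_memory_gb(params_billion, bits_per_weight) * (1 + overhead_fraction)
    return needed <= memory_gb


if __name__ == "__main__":
    for bits in (16, 8, 4):
        gb = weight_memory_gb(20, bits)
        print(f"20B parameters at {bits}-bit: ~{gb:.0f} GB of weights, "
              f"fits in 16 GB: {fits_in_memory(20, bits, 16)}")
```

For a 20-billion-parameter model the arithmetic gives about 40 GB of weights at 16 bits but about 10 GB at 4 bits, which is why quantization is what makes laptop deployment practical.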

What changes for enterprises

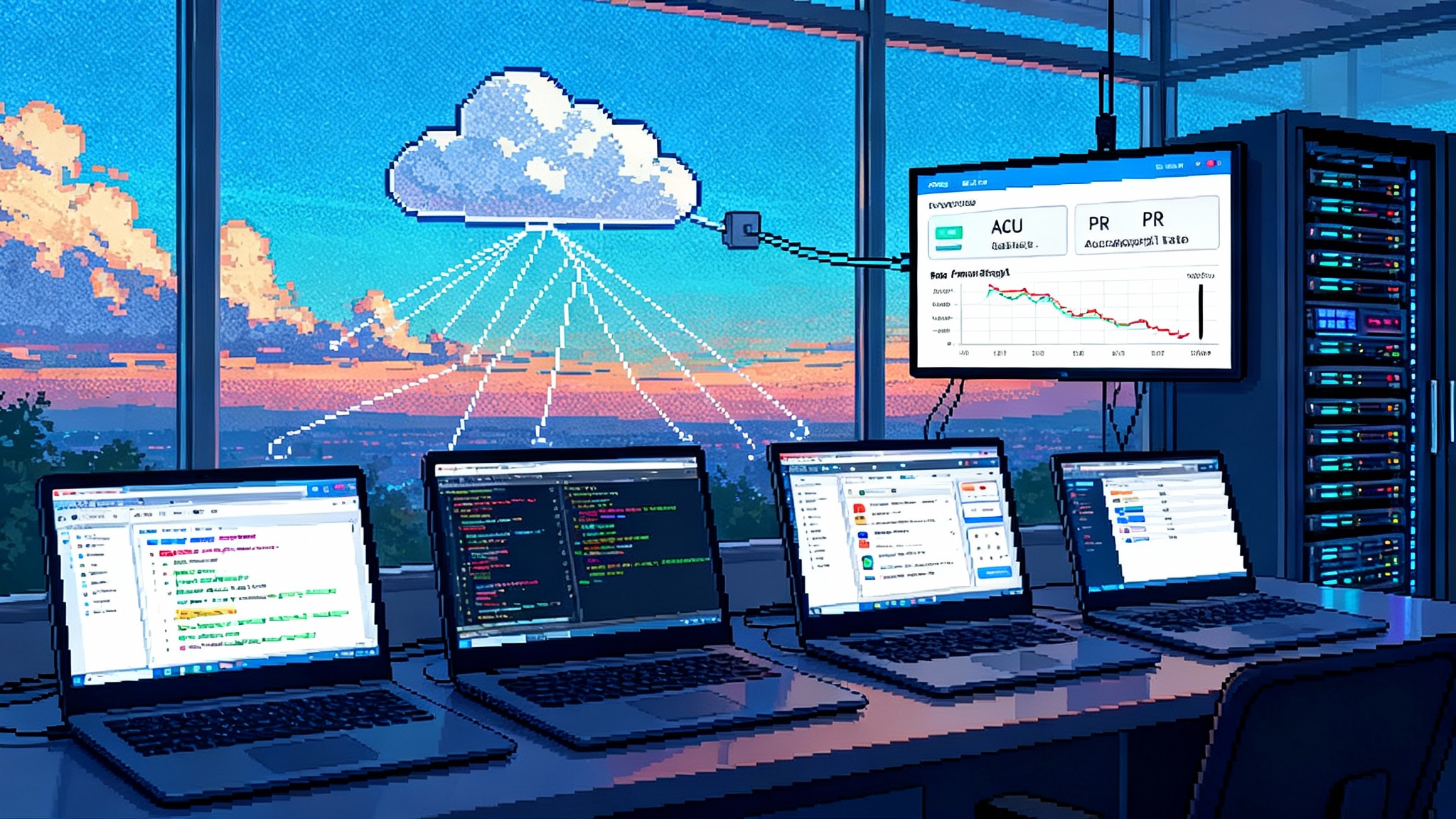

Model mesh procurement

Procurement shifts from provider contracts to weight catalogs. Instead of buying access to one model, you assemble a mesh of models and sizes that map to your workloads. You can evaluate on your data, pick the size that meets the service level, and standardize on a few families across teams. Contracts become about license scope, security posture, and update cadence rather than usage caps.

Expect a new class of requests for proposal where buyers ask vendors to deliver a model mesh: a small local tier for privacy-sensitive tasks, a medium tier for everyday batch work, and an optional hosted tier for spikes and complex reasoning. Vendors will compete on weight quality, quantization fidelity, and the reliability of their update pipeline.

Hybrid edge and cloud inference

With GPT‑OSS in the mix, routing logic becomes policy driven. Personally identifiable information stays on device. Low latency interactions run on the laptop. Complex long‑context reports and heavy fine‑tunes can burst to the cloud. You will route by task, cost, and risk rather than by a default endpoint. Observability shifts from prompt logs to system metrics: tokens per second, memory headroom, thermal throttling, and queue depth.
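Policy-driven routing can start as a small, explicit function that the whole team can read and review. The sketch below is hypothetical: the tier names, the `Task` fields, and the 32,000-token threshold are placeholders to adapt. It encodes only the rules named above: personal data stays on owned hardware, interactive work stays on the laptop, and long-context or fine-tune jobs may burst to the cloud.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    DEVICE = "device"  # small local model on the user's machine
    EDGE = "edge"      # medium model on an owned edge server
    CLOUD = "cloud"    # optional hosted tier for bursts


@dataclass
class Task:
    kind: str            # e.g. "chat", "batch_summary", "report", "fine_tune"
    contains_pii: bool   # personally identifiable information present
    interactive: bool    # a person is waiting on the answer
    context_tokens: int  # size of the prompt plus retrieved context


# Hypothetical threshold: the longest context you are willing to run on owned hardware.
LOCAL_CONTEXT_LIMIT = 32_000


def route(task: Task, cloud_allowed: bool = True) -> Tier:
    # Rule 1 comes first so nothing later can override it: PII never leaves owned hardware.
    if task.contains_pii:
        return Tier.DEVICE
    # Rule 2: low-latency interactive requests stay on the laptop.
    if task.interactive and task.context_tokens <= LOCAL_CONTEXT_LIMIT:
        return Tier.DEVICE
    # Rule 3: long-context reports and fine-tunes may burst to the hosted tier.
    if cloud_allowed and (task.kind == "fine_tune" or task.context_tokens > LOCAL_CONTEXT_LIMIT):
        return Tier.CLOUD
    # Everything else is routine batch work for the edge tier.
    return Tier.EDGE


if __name__ == "__main__":
    print(route(Task("report", contains_pii=False, interactive=False, context_tokens=100_000)))
```

Keeping the PII rule first makes the ordering itself part of the policy, and the `cloud_allowed` flag gives you a single switch to keep everything on owned hardware during an audit or an outage.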

Lower total cost of ownership

When you run on owned hardware, you amortize cost. A desktop with a competent graphics card can serve a team’s daily agent interactions at a fraction of the price of hosted inference once utilization is steady. The savings are not only per token. You avoid data egress, you cut latency, and you gain operational control. The transition is not all or nothing. Start with a pilot workload that has clear privacy constraints and predictable volume, then expand one service at a time.
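The amortization argument is simple arithmetic. This sketch compares a monthly cost for owned hardware (purchase price spread evenly over its service life, plus electricity) against per-token hosted pricing. Every number in the usage block is a made-up placeholder rather than a real quote, and the model deliberately leaves out staff time, cooling, and hardware failures.

```python
def local_monthly_cost(hardware_price: float, service_years: float, avg_watts: float,
                       price_per_kwh: float, hours_per_month: float = 730.0) -> float:
    """Owned hardware: straight-line amortization plus electricity (730 h is about one month)."""
    amortized = hardware_price / (service_years * 12)
    energy_kwh = avg_watts * hours_per_month / 1000
    return amortized + energy_kwh * price_per_kwh


def hosted_monthly_cost(tokens_per_month: float, price_per_million_tokens: float) -> float:
    """Hosted inference billed per token, using one blended price for simplicity."""
    return tokens_per_month / 1_000_000 * price_per_million_tokens


def break_even_tokens(local_cost: float, price_per_million_tokens: float) -> float:
    """Monthly token volume at which owned hardware and hosted pricing cost the same."""
    return local_cost / price_per_million_tokens * 1_000_000


if __name__ == "__main__":
    # Placeholder inputs, not real prices: swap in your own quotes and measurements.
    local = local_monthly_cost(hardware_price=3600, service_years=3,
                               avg_watts=300, price_per_kwh=0.20)
    hosted = hosted_monthly_cost(tokens_per_month=100_000_000, price_per_million_tokens=2.0)
    print(f"local ~{local:.2f}/month, hosted ~{hosted:.2f}/month")
    print(f"break-even at ~{break_even_tokens(local, 2.0):,.0f} tokens/month")
```

Below the break-even volume the hosted tier is cheaper under these assumptions; above it, owned hardware wins, which is why steady utilization matters.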

Hands on: build a laptop‑grade personal research agent

Goal: a private assistant that reads local files, searches approved sources, summarizes findings, and drafts notes. It runs without an internet connection and respects a per‑project sandbox.

Hardware: a recent laptop with 16 gigabytes of memory. A discrete graphics card with 8 gigabytes of memory helps but is not required if you use a small quantized model.
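Whichever machine you use, it pays to measure throughput before building anything on top of it. This sketch sends one prompt to Ollama's local REST API (`POST /api/generate` on the default port 11434) and computes tokens per second from the `eval_count` and `eval_duration` fields of the reply, which Ollama reports as generated tokens and nanoseconds. The model tag `gpt-oss:20b` is an assumption; check `ollama list` for the exact name on your machine. LM Studio runs its own local server with a different API, which this sketch does not cover.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL = "gpt-oss:20b"  # assumed tag; confirm with `ollama list`


def generate(prompt: str, model: str = MODEL, options: Optional[dict] = None,
             url: str = OLLAMA_URL, timeout: float = 300.0) -> dict:
    """Send one non-streaming request to Ollama and return the parsed JSON reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    if options:
        payload["options"] = options  # e.g. {"temperature": 0, "seed": 42}
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),  # supplying data makes this a POST
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def tokens_per_second(reply: dict) -> float:
    """eval_count is the number of generated tokens; eval_duration is in nanoseconds."""
    duration_ns = reply.get("eval_duration", 0)
    if duration_ns <= 0:
        return 0.0
    return reply.get("eval_count", 0) * 1e9 / duration_ns


if __name__ == "__main__":
    try:
        reply = generate("In one sentence, what is an open-weight model?")
        print(reply["response"])
        print(f"{tokens_per_second(reply):.1f} tokens/s")
    except OSError as exc:  # URLError subclasses OSError, so this covers "server not running"
        print(f"Could not reach Ollama at {OLLAMA_URL}: {exc}")
```

Run it a few times with prompts of the length you expect in real work; the first request after startup includes model loading, so it usually understates steady-state speed.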

- Install the runtime

  - Install Ollama or LM Studio. Both offer installer packages. After installation, confirm that the service is running and that you can pull a model.

- Pull a GPT‑OSS build

  - Choose the smaller gpt-oss-20b variant for general work. OpenAI sizes it for machines with about 16 gigabytes of memory, and its mixture-of-experts weights already ship in a 4-bit (MXFP4) quantized format. Pull the model and test a short prompt to confirm that tokens per second are acceptable.

- Set up tools with Model Context Protocol

  - File reader. Expose a read-only file server that allows the model to browse a project folder and read text and PDF files.

  - Web fetcher. Provide a tool that fetches pages from an allow list. You can gate the network so the agent cannot wander. For offline use, stage pages in a local cache folder the tool reads from.

  - Notes writer. Implement a simple tool that appends to a Markdown notebook in your project directory.

- Add memory and retrieval

  - Create a small SQLite database that stores research items with fields for title, source, date, and a brief summary. Use a local vector index to store embeddings for semantic recall.

- Define a system prompt and policy

  - The agent should ask before reading files outside the project folder. It should cite every source in the notes it writes. It should never send content to remote services. Spell this out in the system prompt and enforce it at the tool layer.

- Try a workflow

  - Ask: summarize the last ten project documents, find three open questions, and draft an outline for a brief. Watch the agent call the file reader, build summaries, write notes, and propose next steps. Disconnect your network to prove it works offline.

- Measure and iterate

  - Track tokens per second, memory, and whether the agent calls tools more often than it needs to. If summaries are weak, move to the larger GPT‑OSS variant when your hardware can hold it, or split long documents into chunks that fit within the model's context window.
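The tool rules in the steps above (a sandboxed read-only file reader, an allow-listed web fetcher, and a notes writer that insists on citations) are easiest to trust when they are enforced in plain code rather than only in the prompt. This sketch shows those checks as ordinary Python functions; registering them as tools on an MCP server is left out because it depends on the SDK you choose. The function names, the `.txt`/`.md` restriction (PDF extraction would need a third-party parser), and the allow-listed hosts are illustrative assumptions.

```python
from datetime import date
from pathlib import Path
from typing import List
from urllib.parse import urlparse


class PolicyError(Exception):
    """Raised when a tool call would break the agent's sandbox policy."""


# Hypothetical allow list; replace with the sources your project approves.
ALLOWED_HOSTS = {"docs.python.org", "en.wikipedia.org"}
READABLE_SUFFIXES = {".txt", ".md"}  # PDF support would need a third-party parser


def resolve_in_sandbox(root: Path, requested: str) -> Path:
    """Resolve a path and refuse anything outside the project root.

    resolve() collapses ".." and follows symlinks, so both escape tricks are caught.
    """
    root = root.resolve()
    candidate = (root / requested).resolve()
    if not candidate.is_relative_to(root):  # Path.is_relative_to needs Python 3.9+
        raise PolicyError(f"{requested!r} is outside the project folder")
    return candidate


def read_project_file(root: Path, requested: str, max_chars: int = 20_000) -> str:
    """Read-only file tool: text files inside the sandbox, truncated to protect the context window."""
    path = resolve_in_sandbox(root, requested)
    if path.suffix.lower() not in READABLE_SUFFIXES:
        raise PolicyError(f"{path.name} is not an allowed file type")
    return path.read_text(encoding="utf-8", errors="replace")[:max_chars]


def check_url(url: str) -> str:
    """Web fetcher guard: HTTPS only, with an exact host match against the allow list."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https" or host not in ALLOWED_HOSTS:
        raise PolicyError(f"{url} is not on the allow list")
    return url


def append_note(root: Path, notebook: str, text: str, sources: List[str]) -> Path:
    """Notes writer: append-only, inside the sandbox, and every note must cite a source."""
    if not sources:
        raise PolicyError("every note must cite at least one source")
    path = resolve_in_sandbox(root, notebook)
    entry = [f"## Note, {date.today().isoformat()}", "", text.strip(), "", "Sources:"]
    entry += [f"- {source}" for source in sources]
    with path.open("a", encoding="utf-8") as handle:
        handle.write("\n".join(entry) + "\n\n")
    return path
```

Because the checks raise instead of returning an error string, a tool wrapper can turn any `PolicyError` into a refusal message for the model while the underlying action simply never happens.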

When this feels useful, wrap the agent in a small tray app so it is available in one click. Add a hotkey to send the current selection from your editor to the agent. You now have a private research teammate that lives on your machine and respects your boundaries.
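For the memory and retrieval step, a single SQLite file can hold both the structured fields and the vectors at personal scale. This sketch stores each embedding as a JSON array and ranks items by brute-force cosine similarity, which is adequate for a few thousand items; a dedicated vector index is the upgrade path. It also includes a simple overlapping chunker for documents longer than the context window. Embeddings are assumed to come from a local embedding model that is not shown, so the usage block uses toy two-dimensional vectors.

```python
import json
import math
import sqlite3
from typing import List, Sequence, Tuple

SCHEMA = """
CREATE TABLE IF NOT EXISTS research_items (
    id        INTEGER PRIMARY KEY,
    title     TEXT NOT NULL,
    source    TEXT NOT NULL,
    date      TEXT NOT NULL,  -- ISO 8601 date string
    summary   TEXT NOT NULL,
    embedding TEXT NOT NULL   -- JSON array of floats from your embedding model
)
"""


def open_store(path: str = "research.db") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def add_item(conn: sqlite3.Connection, title: str, source: str, item_date: str,
             summary: str, embedding: Sequence[float]) -> int:
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO research_items (title, source, date, summary, embedding) "
            "VALUES (?, ?, ?, ?, ?)",
            (title, source, item_date, summary, json.dumps([float(x) for x in embedding])),
        )
    return cur.lastrowid


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def recall(conn: sqlite3.Connection, query: Sequence[float],
           k: int = 3) -> List[Tuple[float, str, str, str]]:
    """Return the k most similar items as (score, title, source, summary)."""
    rows = conn.execute("SELECT title, source, summary, embedding FROM research_items")
    scored = [(cosine(query, json.loads(emb)), title, source, summary)
              for title, source, summary, emb in rows]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


def chunk_text(text: str, max_chars: int = 4000, overlap: int = 400) -> List[str]:
    """Split text into windows that overlap so sentences at the edges keep some context."""
    if overlap >= max_chars:
        raise ValueError("overlap must be smaller than max_chars")
    chunks, start = [], 0
    while start < len(text):
        end = start + max_chars
        chunks.append(text[start:end])
        if end >= len(text):
            break
        start = end - overlap
    return chunks


if __name__ == "__main__":
    store = open_store(":memory:")
    add_item(store, "Apples", "notes/apples.md", "2025-01-01", "About apples", [1.0, 0.0])
    add_item(store, "Bikes", "notes/bikes.md", "2025-01-02", "About bikes", [0.0, 1.0])
    print(recall(store, [0.9, 0.1], k=1))
```

Chunk sizes are measured in characters here for simplicity; if you have the model's tokenizer available, counting tokens instead gives a tighter fit to the context window.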

Hands on: build an edge SRE teammate

Goal: a cautious helper that explains incidents, suggests commands, and drafts runbooks without direct shell access. It should be able to read logs, query metrics, and produce safe proposals for a human to run.

Hardware: a small form factor server or a workstation that lives near your cluster. This box runs the model and the tools. Place it on a management network that your monitoring stack can reach.

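- Install and size the model

  - Use the larger gpt-oss-120b variant for better reasoning under pressure if the box has a GPU with enough memory (OpenAI sizes it for a single 80 GB GPU); otherwise start with gpt-oss-20b. Favor deterministic decoding settings, such as a temperature of 0 and a fixed seed, for more repeatable outputs.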

- Connect read-only tools through MCP

  - Logs. A server that tails logs from a curated set of files or a log API. Redact secrets before the model sees anything.

  - Metrics. A simple query tool that returns time series slices with labels and units. Keep responses small and structured.

  - Playbook library. A tool that returns snippets from your known good runbooks with version tags.

- Define policy and guardrails

  - The agent never executes commands. It drafts shell snippets in a fenced code block with a title and a risk note. A human runs or rejects the snippet. Include a strict schema in the prompt.

  - The agent must always produce a hypothesis and a confidence rating with a short rationale, plus two alternative hypotheses.

- Add an A2A pathway

  - Create a tiny specialist agent that knows your autoscaler. When the primary agent suspects capacity issues, it sends a structured message to the autoscaler agent, which returns a compact diagnosis. This keeps the primary focused and auditable.

- Run a drill

  - Feed a bursty traffic pattern into your staging system. Ask the agent to explain the error spike and propose two fixes. Review the output, run the commands yourself, and record outcomes. Iterate on the prompt until the proposals are precise and low risk.
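The log redaction and strict output schema described above can both be checked in code, before anything reaches the model or a human. This sketch is illustrative: the regular expressions cover only a few common secret formats and are no substitute for a maintained secret scanner, and the JSON field names (`hypothesis`, `confidence`, `rationale`, `alternatives`, `commands`) are one hypothetical way to encode the schema. Nothing here executes the proposed commands.

```python
import json
import re
from typing import Any, Dict

# Illustrative patterns only; a real deployment needs a maintained secret scanner.
REDACTIONS = [
    # key=value or key: value pairs whose key looks sensitive
    (re.compile(r"(?i)\b(password|passwd|secret|token|api[_-]?key)\s*[=:]\s*\S+"), r"\1=[REDACTED]"),
    # HTTP bearer credentials
    (re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/-]+=*"), "Bearer [REDACTED]"),
    # AWS access key IDs (20 characters starting with AKIA)
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY_ID]"),
]

CONFIDENCE_LEVELS = {"low", "medium", "high"}
COMMAND_FIELDS = ("title", "risk_note", "snippet")


def redact(line: str) -> str:
    """Apply every redaction pattern before a log line is handed to the model."""
    for pattern, replacement in REDACTIONS:
        line = pattern.sub(replacement, line)
    return line


def _non_empty_str(value: Any) -> bool:
    return isinstance(value, str) and bool(value.strip())


def validate_proposal(raw: str) -> Dict[str, Any]:
    """Parse the model's JSON reply and enforce the schema; raise ValueError on any violation."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("proposal must be a JSON object")
    errors = []
    for key in ("hypothesis", "rationale"):
        if not _non_empty_str(data.get(key)):
            errors.append(f"missing or empty {key!r}")
    confidence = data.get("confidence")
    if not (isinstance(confidence, str) and confidence in CONFIDENCE_LEVELS):
        errors.append("confidence must be low, medium, or high")
    alternatives = data.get("alternatives")
    if not (isinstance(alternatives, list) and len(alternatives) == 2
            and all(_non_empty_str(a) for a in alternatives)):
        errors.append("exactly two alternative hypotheses are required")
    commands = data.get("commands", [])
    if not isinstance(commands, list):
        errors.append("commands must be a list")
    else:
        for index, command in enumerate(commands):
            if not (isinstance(command, dict)
                    and all(_non_empty_str(command.get(k)) for k in COMMAND_FIELDS)):
                errors.append(f"command {index} needs {', '.join(COMMAND_FIELDS)}")
    if errors:
        raise ValueError("; ".join(errors))
    return data
```

A proposal that fails validation can be sent back to the model together with the error text, so the retry loop stays mechanical and only well-formed proposals ever reach the on-call engineer.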

This pattern builds trust. Your SRE teammate is fast, private, and bounded. It never pokes production without a human in the loop.
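The A2A hand-off to the autoscaler specialist can be as small as a JSON envelope with a sender, a recipient, an intent, a payload, and a reply-to identifier for the audit trail. This is a hypothetical message shape, not the formal A2A protocol specification, and the autoscaler below is a rule-based stand-in for a second model; the payload field names and the 0.85 CPU threshold are placeholders. The JSON string could travel over a local socket, a queue, or HTTP; here it is a direct function call.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from typing import Optional


@dataclass
class AgentMessage:
    """Hypothetical envelope for agent-to-agent messages."""
    sender: str
    recipient: str
    intent: str                     # what the sender wants, e.g. "diagnose_capacity"
    payload: dict                   # small, structured facts only; no raw logs
    message_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    reply_to: Optional[str] = None  # links a reply to its request for auditing

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        return cls(**json.loads(raw))


def autoscaler_agent(raw: str) -> str:
    """Rule-based stand-in for the autoscaler specialist; returns a compact diagnosis."""
    request = AgentMessage.from_json(raw)
    if request.intent != "diagnose_capacity":
        diagnosis = {"status": "unsupported_intent"}
    else:
        facts = request.payload
        at_ceiling = facts["current_replicas"] >= facts["max_replicas"]
        hot = facts["avg_cpu_utilization"] >= 0.85  # hypothetical threshold
        diagnosis = {
            "status": "capacity_exhausted" if at_ceiling and hot else "not_capacity_bound",
            "at_max_replicas": at_ceiling,
            "avg_cpu_utilization": facts["avg_cpu_utilization"],
        }
    reply = AgentMessage(sender="autoscaler", recipient=request.sender,
                         intent="capacity_diagnosis", payload=diagnosis,
                         reply_to=request.message_id)
    return reply.to_json()


if __name__ == "__main__":
    question = AgentMessage(
        sender="sre-primary", recipient="autoscaler", intent="diagnose_capacity",
        payload={"current_replicas": 12, "max_replicas": 12, "avg_cpu_utilization": 0.93},
    )
    answer = AgentMessage.from_json(autoscaler_agent(question.to_json()))
    print(answer.payload, "in reply to", answer.reply_to)
```

Logging both JSON strings gives you an audit trail where every diagnosis points back to the exact request that produced it.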

Security and governance essentials

Local first does not mean security last. Treat model weights and tool servers like any other production software. The enterprise security posture should evolve toward AI firewalls and guardian agents.

- Supply chain. Verify the provenance of weights and tools. Keep a signed manifest and track checksums.

- Sandboxing. Run the model and its tools in containers with minimal privileges. Restrict file access to project folders.

- Secrets. Do not give the model raw secrets. Tools should fetch scoped tokens right before a call and never return them to the model.

- Monitoring. Audit tool calls and model outputs. Store structured logs locally and rotate them.

- Red teams. Write attack playbooks that try prompt injection, log poisoning, and tool exfiltration. Patch with allow lists and schema validation.
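For the supply-chain item, checksums are easy to automate with the standard library. This sketch records a SHA-256 digest for every file under a model or tool directory and later reports missing, altered, or unexpected files. It covers checksums only: signing the manifest and verifying that signature needs a separate signing tool that is not shown, and the manifest layout is an assumption.

```python
import hashlib
import json
from pathlib import Path
from typing import List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in 1 MiB chunks so multi-gigabyte weights never sit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for block in iter(lambda: handle.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def write_manifest(root: Path, manifest: Path) -> None:
    """Record a digest for every file under root (keep the manifest itself outside root)."""
    files = {
        path.relative_to(root).as_posix(): sha256_file(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }
    manifest.write_text(json.dumps({"files": files}, indent=2, sort_keys=True), encoding="utf-8")


def verify_manifest(root: Path, manifest: Path) -> List[str]:
    """Return a list of problems; an empty list means every recorded file matches."""
    expected = json.loads(manifest.read_text(encoding="utf-8"))["files"]
    problems = []
    for relative, want in sorted(expected.items()):
        path = root / relative
        if not path.is_file():
            problems.append(f"missing: {relative}")
        elif sha256_file(path) != want:
            problems.append(f"checksum mismatch: {relative}")
    # Also flag files that appeared after the manifest was written.
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root).as_posix()
        if path.is_file() and relative not in expected:
            problems.append(f"unexpected file: {relative}")
    return problems
```

Running `verify_manifest` at service start, and refusing to load the model when it returns anything, turns the manifest from documentation into an enforced control.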

The enterprise playbook for the next 90 days

Here is a concrete plan to move from interest to impact.

- Pick two workloads. One individual productivity case such as research or documentation. One operational case such as log triage. Success means reduced cycle time and no privacy incidents.

- Assemble a small stack. Standardize on one runtime, one GPT‑OSS size for laptops, and one for edge servers. Use MCP for tools across both.

- Establish policy. Decide which data must never leave devices, which actions require a human, and what you will log. Write it down and enforce it in code.

- Run a pilot. Ten to twenty users, four weeks. Measure prompts per day, tokens per second, and errors. Track time saved compared to the baseline.

- Do a cost readout. Amortize hardware over three years, include power, and compare to your hosted spend for the same workflows. This is where total cost of ownership becomes tangible.

- Decide on mesh expansion. Add a hosted tier only where the accuracy gain outweighs the privacy and cost trade-offs.

The near term roadmap: six to twelve months

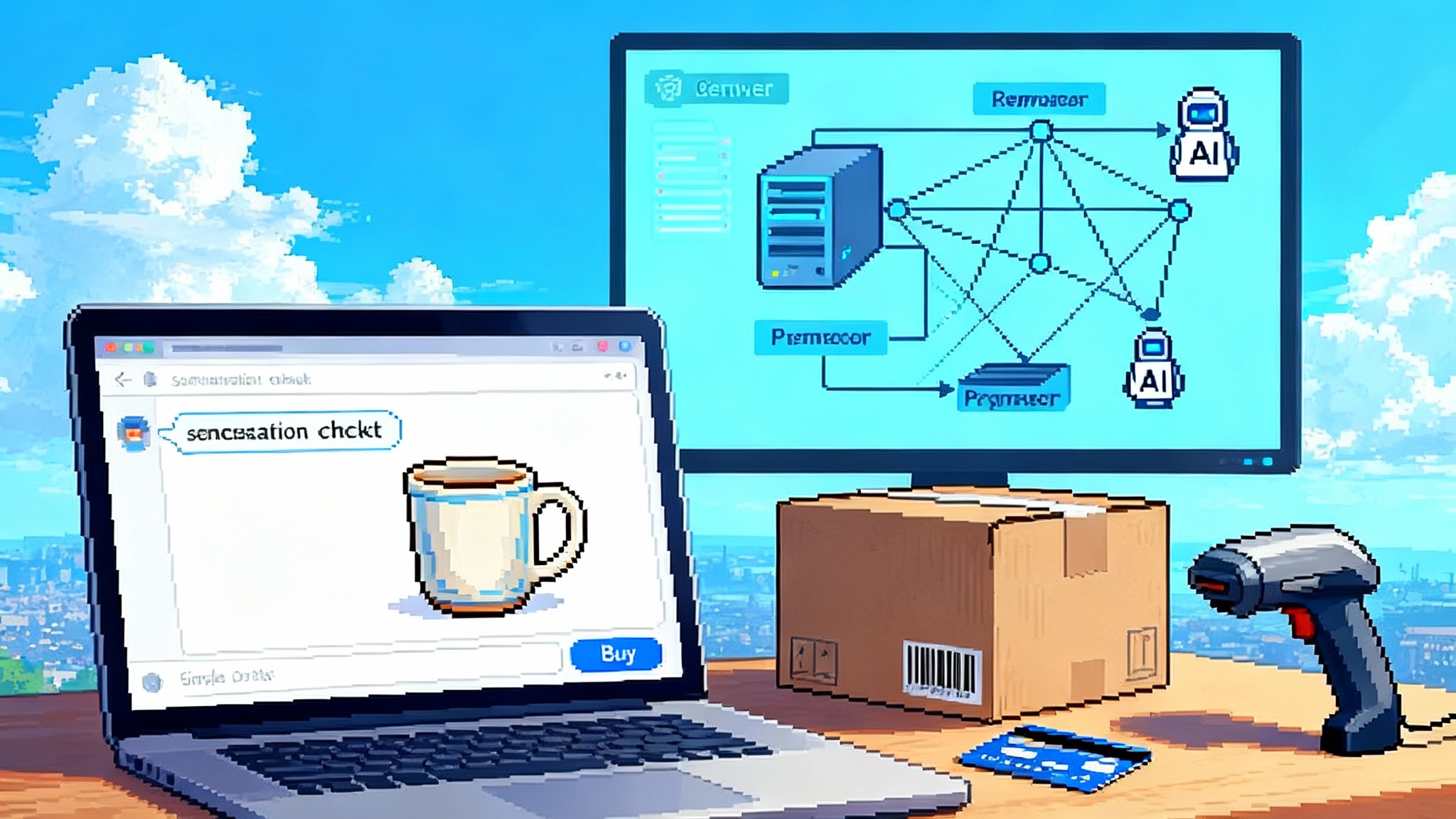

An app store for agents is no longer science fiction. With open weights on device and simple interop, packaging and distribution are the next frontier.

- Signed agent bundles. Agents ship as packages that include prompts, tools, and policies. They install on your device without a network connection. Updates are signed and diffed.

- Secure sharing. People share agents with colleagues through peer-to-peer transfers that preserve provenance. Think AirDrop for teammates rather than a public marketplace.

- Usage and monetization. Tool vendors sell local capability packs with clear metering. You might buy a secure document converter, a domain specific calculator, or a specialized retrieval module. Payment is per install or per task, not per token in a cloud.

- Enterprise catalogs. Companies run internal stores for approved agents. Security teams review bundles and publish them with risk tags. Developers can submit updates that go through lightweight review.

- Interop convergence. The Model Context Protocol hardens, and agent-to-agent messaging patterns settle into a few reliable shapes. Vendors align on schemas so that tools can be reused across models without rewrites.

The point of the store is not to centralize control. It is to make high trust sharing easy so that best practices spread without copying files in a chat thread.

What this means for builders

- Build local first, burst when needed. Start with a GPT‑OSS size that runs on your machine. Prove the workflow. Only then decide if any part needs the cloud.

- Design with tools and policies from day one. Most failure modes are not about the model. They are about a tool that had too much power or a policy that was never written.

- Think in meshes, not monoliths. Use small specialist agents and connect them with structured messages. It is easier to audit and to improve.

- Measure reality. Latency on device, errors per task, power draw. These numbers drive both user happiness and cost.

A final word

We have spent years treating the cloud as the only place where serious artificial intelligence could live. GPT‑OSS flips that script. Your laptop is now a capable home for private, fast, and affordable agents. Your edge server can host a trustworthy teammate that never leaks a log line. Enterprises can buy and run a mesh of models that match their work rather than their vendor’s billing page. The software patterns are simple, the parts are ready, and the payoffs are concrete.

The best way to understand the shift is to feel it. Build the research agent. Run the SRE drill. When you watch a local model help you think, write, and operate without leaving your machine, the future becomes obvious. The next wave of artificial intelligence will not just be bigger. It will be closer. And it will be yours to run.