Guardian Agents and AI Firewalls Are the New Enterprise Moat

Fall 2025 turned AI agents from demos into daily operators. That shift created a parallel market for guardian agents and AI firewalls that sit between models, tools, and data. This blueprint shows how to build that runtime policy layer now.

The quiet breakthrough of fall 2025

In the space of a quarter, enterprise agents went from slick demos to trusted coworkers. Customer support agents triage live queues. Finance agents prepare close packages. Coding agents review pull requests, stage rollbacks, and file tickets. The headline is not that agents got smarter. It is that companies finally wired them to real tools, real data, and real authority.

As those connections snapped into place, a parallel market emerged almost overnight. Security and platform teams began deploying guardian agents and AI firewalls. These are runtime policy engines that sit between models, tools, and data. They observe, constrain, and explain what an agent is allowed to do in the moment. They do not replace traditional network firewalls or the model’s built-in safety filters. They complement them, the way a seat belt complements airbags.

This shift is already visible in enterprise roadmaps. Amazon added IAM policy-based enforcement to Bedrock Guardrails in March, letting teams require that every model call attach a specific guardrail through identity policy. That change turned safety from a best-effort wrapper into an enforceable contract at the API boundary, which matters most when models drive agents and tool use. At the same time, security vendors started speaking the language of agents, not only users and servers. Identity platforms now issue credentials to agents. Cloud networks advertise firewalls for AI traffic. Observability tools trace prompts and tool calls the way they trace microservices. Enterprise platforms are standardizing around Microsoft’s Unified Agent Framework and the MCP interop layer for agents, which make policy hooks and tool permissions first-class.

The result is a new layer in the stack. If you are shipping production-grade agents, you will either build this layer or buy it.

What a guardian agent actually is

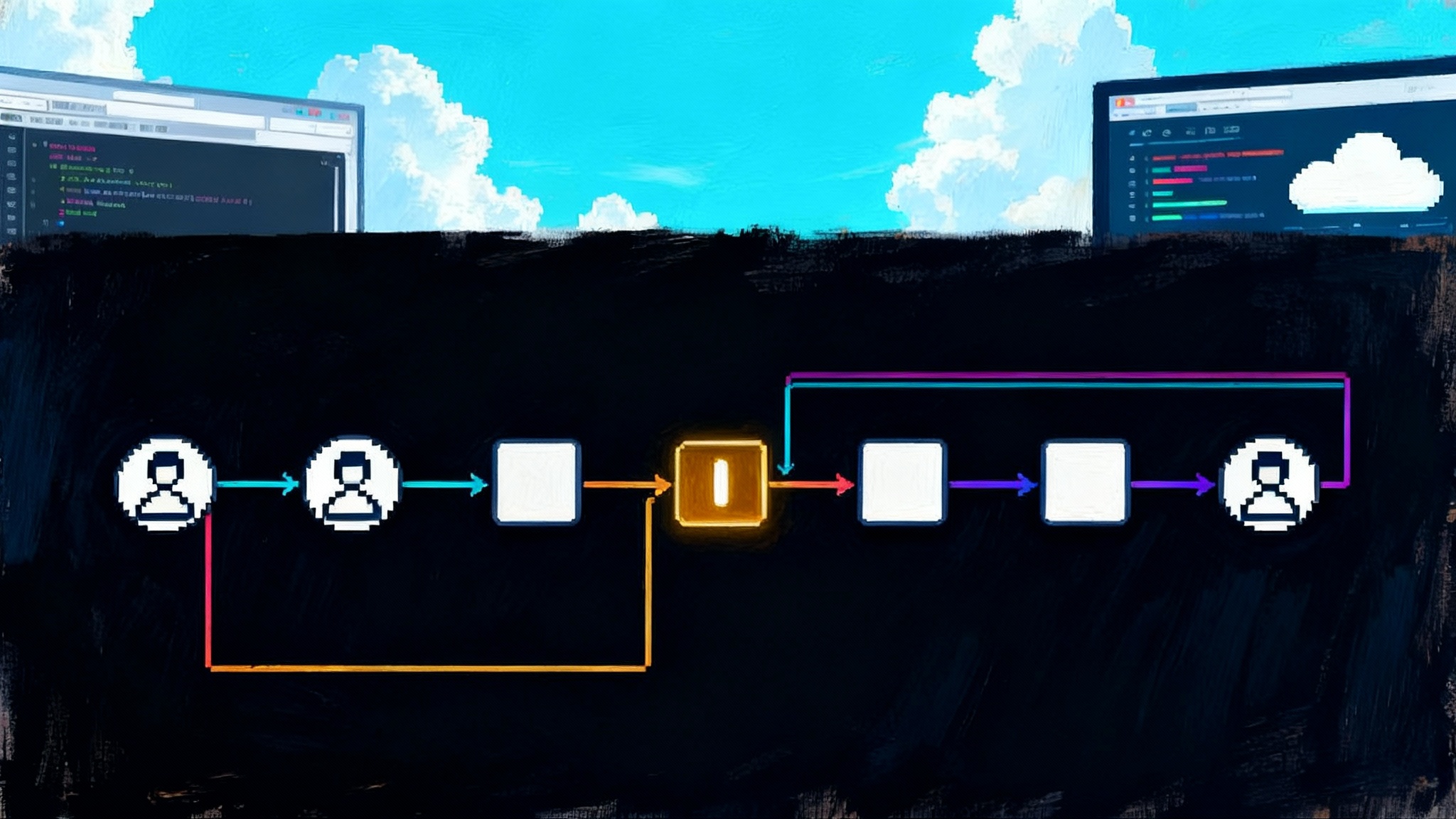

Picture the agent as a driver in a city. The model is the person behind the wheel. The tools are bridges, tunnels, and parking garages. Data stores are neighborhoods that carry different speed limits and access rules. A guardian agent is the traffic control system. It posts speed limits, sets red lights, diverts routes when there is a hazard, and leaves an audit trail of every intersection.

Concretely, a guardian agent or AI firewall does four things at runtime:

- Enforces policies on inputs and outputs across the agent’s tool calls. It inspects requests and responses for sensitive data, prompt injection, or unsafe actions. It can redact, rewrite, or block.
- Mediates credentials with least privilege. It gives agents ephemeral, scoped access that is specific to a tool, operation, and dataset. It rotates and revokes that access automatically.
- Intercepts actions before they land. It asks for a second signal when risk spikes, from a human approver or from another automated checker.
- Records a structured audit trail. It logs the who, what, when, where, and why of every step. That trail feeds forensics, analytics, and proofs of compliance.

This is not hypothetical. Identity leaders now treat agents as first-class identities. Cloud platforms offer policy hooks that attach safety controls to every inference. Edge networks ship AI-aware gateways that inspect prompts for exfiltration and abuse. And security teams are buying tools that explain agent behavior step by step in the same consoles they use for humans and servers.

Why now: the attack surface changed

A year ago, most risks lived in the prompt. Today, the risky part is the action. Agents click, post, book, file, and pay. They browse the web, parse invoices, call internal APIs, and launch jobs. The threats have kept pace. Security firms documented prompt injection through search results that exploits how agents retrieve and follow instructions from untrusted pages. Browser research showed a man-in-the-prompt vector, in which extensions alter the text field an agent sees. October research on coding agents showed query-agnostic indirect prompt injection that works across many user inputs, which means the attacker no longer needs to guess your exact query to cause harm.

The pattern behind these papers is simple. If an agent can be tricked into believing an instruction is trusted, the damage is limited only by what that agent is allowed to do. That is why the market is moving to runtime controls that take away dangerous abilities by default, then grant them narrowly and temporarily.

A practical blueprint you can ship this quarter

You do not need to wait for a new regulation or a vendor mega-suite. You can assemble a working guardian layer from parts you already own and a short list of new services. The blueprint has four pieces.

1) Tool I/O firewalls: the bouncer for every tool call

Objective: stop unsafe inputs from reaching tools and block sensitive outputs from leaving.

How it works:

- Put a policy engine in front of every agent tool. If your agent can call an email API, a repository, a data lake, or a payments service, route each call through a gateway that can inspect and mutate the payload. A cloud AI gateway or an edge firewall for AI traffic can do this, as can a lightweight sidecar that your platform team maintains.
- Write policies as simple, testable rules. Examples: block outbound messages that include raw tokens or secrets; disallow file writes outside a specified directory; redact Social Security numbers from any text leaving the model; rate limit risky tools to a small number of calls per minute.
- Make rules model-agnostic. Your firewall should not care whether the call came from a proprietary model or an open model. Tie enforcement to the tool endpoint and the identity attached to the call. Use cloud features that let you require guardrails through identity policy so enforcement cannot be bypassed.
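To make the example rules concrete, here is a minimal Python sketch of a sidecar-style firewall. The `ToolFirewall` class, the reason codes, the sandbox path, and the regular expressions are hypothetical illustrations, not any vendor's API; a real gateway would load rules from versioned configuration and use far more robust detectors.

```python
import re
import time
from collections import defaultdict, deque
from dataclasses import dataclass, field
from pathlib import PurePosixPath

# Illustrative detectors only: an AWS-style access key ID, a generic "sk-" API key,
# and a US Social Security number. Production rules need broader, tested patterns.
SECRET_PATTERN = re.compile(r"\b(?:AKIA[0-9A-Z]{16}|sk-[A-Za-z0-9]{20,})\b")
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


@dataclass
class Decision:
    action: str                                  # "allow", "rewrite", or "block"
    payload: str                                 # original or redacted payload
    reasons: list = field(default_factory=list)  # reason codes for the audit trail


class ToolFirewall:
    def __init__(self, write_root="/srv/agent-workspace",
                 risky_tools=("payments",), max_calls_per_minute=5):
        self.write_root = PurePosixPath(write_root)
        self.risky_tools = set(risky_tools)
        self.max_calls = max_calls_per_minute
        self.recent = defaultdict(deque)  # tool -> timestamps of calls admitted past the rate limit

    def check_outbound(self, tool, text, now=None):
        """Inspect a payload on its way from the model to a tool."""
        now = time.monotonic() if now is None else now
        if tool in self.risky_tools:
            window = self.recent[tool]
            while window and now - window[0] >= 60:  # drop calls older than one minute
                window.popleft()
            if len(window) >= self.max_calls:
                return Decision("block", text, ["RATE_LIMIT"])
            window.append(now)
        if SECRET_PATTERN.search(text):
            return Decision("block", text, ["SECRET_IN_PAYLOAD"])
        redacted, count = SSN_PATTERN.subn("[REDACTED-SSN]", text)
        if count:
            return Decision("rewrite", redacted, ["SSN_REDACTED"])
        return Decision("allow", text)

    def check_file_write(self, path):
        """Block writes outside the sandbox directory, including '..' escapes."""
        p = PurePosixPath(path)
        if ".." in p.parts or not p.is_relative_to(self.write_root):
            return Decision("block", path, ["WRITE_OUTSIDE_ROOT"])
        return Decision("allow", path)
```

Each decision carries reason codes, so the same records can feed the block and rewrite metrics and the audit trail. `PurePath.is_relative_to` needs Python 3.9 or later.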

What to measure:

- Percentage of tool calls that pass through the firewall. Aim for 100 percent.
- Number of blocks and rewrites per day, broken down by tool and rule. Investigate spikes.
- Mean time to update a policy after a new attack pattern is discovered. Keep it under one day.
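These three measurements can be computed from the firewall's own decision records. The sketch below assumes a hypothetical `CallRecord` shape; in practice the data would come from gateway logs or queries in your security tooling.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class CallRecord:
    tool: str
    via_firewall: bool          # did the call pass through the gateway?
    action: str = "allow"       # "allow", "rewrite", or "block"
    rule: Optional[str] = None  # reason code when the call was rewritten or blocked


def firewall_coverage(records):
    """Percentage of tool calls that went through the firewall (target: 100)."""
    if not records:
        raise ValueError("no tool calls recorded")
    return 100.0 * sum(r.via_firewall for r in records) / len(records)


def interventions(records):
    """Count blocks and rewrites per (tool, rule, action) so spikes stand out."""
    return Counter((r.tool, r.rule, r.action) for r in records
                   if r.action in ("block", "rewrite"))


def mean_hours_to_update(incidents):
    """Mean hours from discovering an attack pattern to deploying a policy for it.

    `incidents` is a list of (discovered, deployed) datetime pairs.
    """
    hours = [(deployed - discovered).total_seconds() / 3600
             for discovered, deployed in incidents]
    return sum(hours) / len(hours)
```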

2) Least-privilege credentials: the valet key for agents

Objective: narrow agent blast radius by default.

How it works:

- Issue agents their own identities. In your identity provider, treat each agent or agent capability as a principal. Give it a unique identifier, not a shared service account. Tie all permissions to that identity.
- Use just-in-time, ephemeral credentials. Replace standing secrets with tokens that expire in minutes. Scope each grant not only to a resource, but to the exact operation and data partition. For example, let the finance close agent read invoices in the current period and write only to a staging table, never to production.
- Remove access at the end of every task. When the agent finishes, revoke access even if the token has time left. Build this into your orchestration layer so it is not forgotten in business logic.
- Externalize secret handling. The agent never sees long-lived keys. It asks a credential broker for a short-lived token, the broker checks policy, and the firewall verifies that token before allowing the tool call.
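The valet-key pattern can be sketched as a small broker. Everything here is hypothetical: the `CredentialBroker` class, the claim names, and the HMAC-signed token format are stand-ins chosen so the example runs on the Python standard library alone. In production you would more likely use your identity provider's token service and a standard format such as JWT, with the firewall holding a verification key rather than calling the broker directly.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time


class CredentialBroker:
    """Hypothetical broker: short-lived tokens scoped to one tool, operation, and partition."""

    def __init__(self, grants, ttl_seconds=300, clock=time.time):
        self._key = secrets.token_bytes(32)  # signing key; agents never see it
        self._grants = grants                # agent_id -> set of (tool, operation, partition)
        self._ttl = ttl_seconds              # five minutes by default
        self._clock = clock
        self._revoked = set()                # token IDs revoked before expiry

    def issue(self, agent_id, tool, operation, partition):
        """Check policy, then mint a token valid for one scope and a few minutes."""
        if (tool, operation, partition) not in self._grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} may not {operation} {tool}:{partition}")
        claims = {"sub": agent_id, "tool": tool, "op": operation, "part": partition,
                  "exp": self._clock() + self._ttl, "jti": secrets.token_hex(8)}
        body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
        return f"{body}.{self._sign(body)}"

    def revoke(self, token):
        """Called by the orchestrator at task end, even if the token has time left."""
        self._revoked.add(self._claims(token)["jti"])

    def verify(self, token, tool, operation, partition):
        """Called by the firewall before it lets a tool call through."""
        try:
            claims = self._claims(token)
        except ValueError:
            return False
        return (claims["jti"] not in self._revoked
                and self._clock() < claims["exp"]
                and (claims["tool"], claims["op"], claims["part"]) == (tool, operation, partition))

    def _sign(self, body):
        return hmac.new(self._key, body.encode(), hashlib.sha256).hexdigest()

    def _claims(self, token):
        body, _, sig = token.rpartition(".")
        if not hmac.compare_digest(sig, self._sign(body)):
            raise ValueError("bad signature")
        return json.loads(base64.urlsafe_b64decode(body))
```

Scoping the grant to a `(tool, operation, partition)` triple is what lets the finance close agent read current-period invoices while a production write fails at issuance.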

What to measure:

- Percentage of agent actions taken with temporary credentials. Target 95 percent or higher in month one.
- Median token lifetime. Shorter is safer. A common starting point is five minutes.
- Number of privileged roles assigned to agents. Fewer is better. Collapse privileges into granular scopes.

3) Action interceptors: circuit breakers with context

Objective: pause, escalate, or add checks before high risk actions complete.

How it works:

- Define risk thresholds per tool. Examples: any payment over 500 dollars, any code merge to main, any data export over 10,000 rows, any vendor addition in the finance system.
- Use two signals. When risk exceeds the threshold, do not rely on the primary model to self-reflect. Ask a second checker to verify. That can be a lightweight rules engine, a retrieval-based verifier, or a separate model with a different system prompt and training data. In extreme cases, require a human click.
- Intercept at the last responsible moment. Insert the interceptor between the agent and the tool, not inside the agent’s internal chain. That ensures it sees the final payload, not an earlier intention that could drift.
- Keep a tight allowlist. For destructive methods, require explicit allowlisting. If the tool exposes delete, update, or wire, do not allow the agent to call those methods unless a policy specifically enables it.
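A minimal interceptor, sketched in Python under stated assumptions: the tool and method names, the threshold table, and the `second_checker` and `human_approver` callables are hypothetical. The second checker stands for whatever independent verifier you choose (a rules engine, a retrieval-based verifier, or a separate model); the sketch only shows where it sits in the decision.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    tool: str
    method: str
    params: dict = field(default_factory=dict)


# Hypothetical thresholds mirroring the examples in the text; True means "high risk".
RISK_RULES = {
    ("payments", "send"): lambda p: p.get("amount_usd", 0) > 500,
    ("payments", "wire"): lambda p: True,
    ("repo", "merge"): lambda p: p.get("target_branch") == "main",
    ("warehouse", "export"): lambda p: p.get("row_count", 0) > 10_000,
    ("finance", "add_vendor"): lambda p: True,
}
DESTRUCTIVE = {"delete", "update", "wire"}
ALLOWLIST = {("payments", "wire")}       # destructive methods a policy explicitly enables
HUMAN_REQUIRED = {("payments", "wire")}  # extreme cases that also need a human click


def intercept(action, second_checker, human_approver=None):
    """Decide on the final payload just before it reaches the tool.

    Returns "allow", "deny", or "escalate" (park the action for human review).
    """
    key = (action.tool, action.method)
    if action.method in DESTRUCTIVE and key not in ALLOWLIST:
        return "deny"                        # default-deny destructive methods
    is_risky = RISK_RULES.get(key)
    if is_risky is None or not is_risky(action.params):
        return "allow"                       # below threshold: no extra checks
    if not second_checker(action):           # independent signal, not self-reflection
        return "deny"
    if key in HUMAN_REQUIRED:
        if human_approver is None:
            return "escalate"
        return "allow" if human_approver(action) else "deny"
    return "allow"
```

Because `intercept` receives the final `Action`, it belongs in the gateway between agent and tool, where it sees exactly what will execute rather than what the agent planned earlier.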

What to measure:

- Interceptor coverage: the percentage of risky actions that route through interceptors.
- False block rate and false pass rate. Tune thresholds weekly until both are acceptable for your business.
- Median approval time for human-in-the-loop review. Keep it low enough that users do not route around controls.
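One common way to define the two error rates, assuming each intercepted action is later labeled harmful or benign by a reviewer or a drill, is the share of benign actions that were blocked and the share of harmful actions that got through. The `Outcome` record below is hypothetical.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Outcome:
    harmful: bool  # ground-truth label from review or a red-team drill
    blocked: bool  # what the interceptor actually did


def error_rates(outcomes):
    """Return (false_block_rate, false_pass_rate).

    False block: a benign action was stopped. False pass: a harmful action got through.
    """
    benign = [o for o in outcomes if not o.harmful]
    harmful = [o for o in outcomes if o.harmful]
    false_block = sum(o.blocked for o in benign) / len(benign) if benign else 0.0
    false_pass = sum(not o.blocked for o in harmful) / len(harmful) if harmful else 0.0
    return false_block, false_pass


def median_approval_minutes(wait_minutes):
    """Median time a human-in-the-loop review kept an action waiting."""
    return median(wait_minutes)
```

Tracking both rates together matters: raising a threshold lowers false blocks but usually raises false passes, so weekly tuning is a trade-off, not a one-way improvement.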

4) Audit trails: telemetry that explains behavior

Objective: produce an explainable, tamper-evident record of what happened and why.

How it works:

- Log at the policy boundary. For every tool call, record the time, agent identity, user identity if present, tool endpoint, input hash, output hash, policy decisions, and reason codes for any rewrite or block.
- Capture context, not secrets. Store hashes and references, not raw payloads, unless you have clear retention policies and encryption.
- Make logs immutable. Write to an append-only store or enable write-once retention with your cloud provider. Forward summaries to your security information and event management (SIEM) platform. Keep detailed traces in your developer observability tool so product teams can fix root causes.
- Build explainer views. When a customer asks why the agent refused to send an email, show them the rule that blocked it and the redaction that would make it safe.
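Several of these practices can be combined in a hash-chained log, sketched here in Python. Each entry stores hashes rather than raw payloads and commits to the previous entry's hash, so editing an earlier record breaks verification. The `AuditLog` class and its field names are hypothetical. The chain is only tamper-evident if the latest hash is also anchored somewhere the writer cannot rewrite, such as write-once storage, and plain SHA-256 of short, guessable inputs can be brute-forced, so a keyed hash (HMAC) is the safer choice in practice.

```python
import hashlib
import json
import time


def sha256_hex(data: str) -> str:
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: each entry commits to the one before it."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries = []

    def record(self, agent_id, tool, tool_input, tool_output, decision, reasons,
               user_id=None, ts=None):
        entry = {
            "ts": time.time() if ts is None else ts,
            "agent": agent_id,
            "user": user_id,
            "tool": tool,
            "input_sha256": sha256_hex(tool_input),    # hashes, not raw payloads
            "output_sha256": sha256_hex(tool_output),
            "decision": decision,
            "reasons": list(reasons),                   # reason codes for explainer views
            "prev": self._entries[-1]["hash"] if self._entries else self.GENESIS,
        }
        entry["hash"] = sha256_hex(json.dumps(entry, sort_keys=True))
        self._entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and link; any edited or reordered entry fails."""
        prev = self.GENESIS
        for e in self._entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if e["prev"] != prev or sha256_hex(json.dumps(body, sort_keys=True)) != e["hash"]:
                return False
            prev = e["hash"]
        return True
```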

What to measure:

- Percentage of agent actions with complete logs. Target 100 percent.
- Median time to investigate an incident using logs alone. Drive this down with better reason codes.
- Number of customer-visible explainers served per month. Treat this as a trust metric.

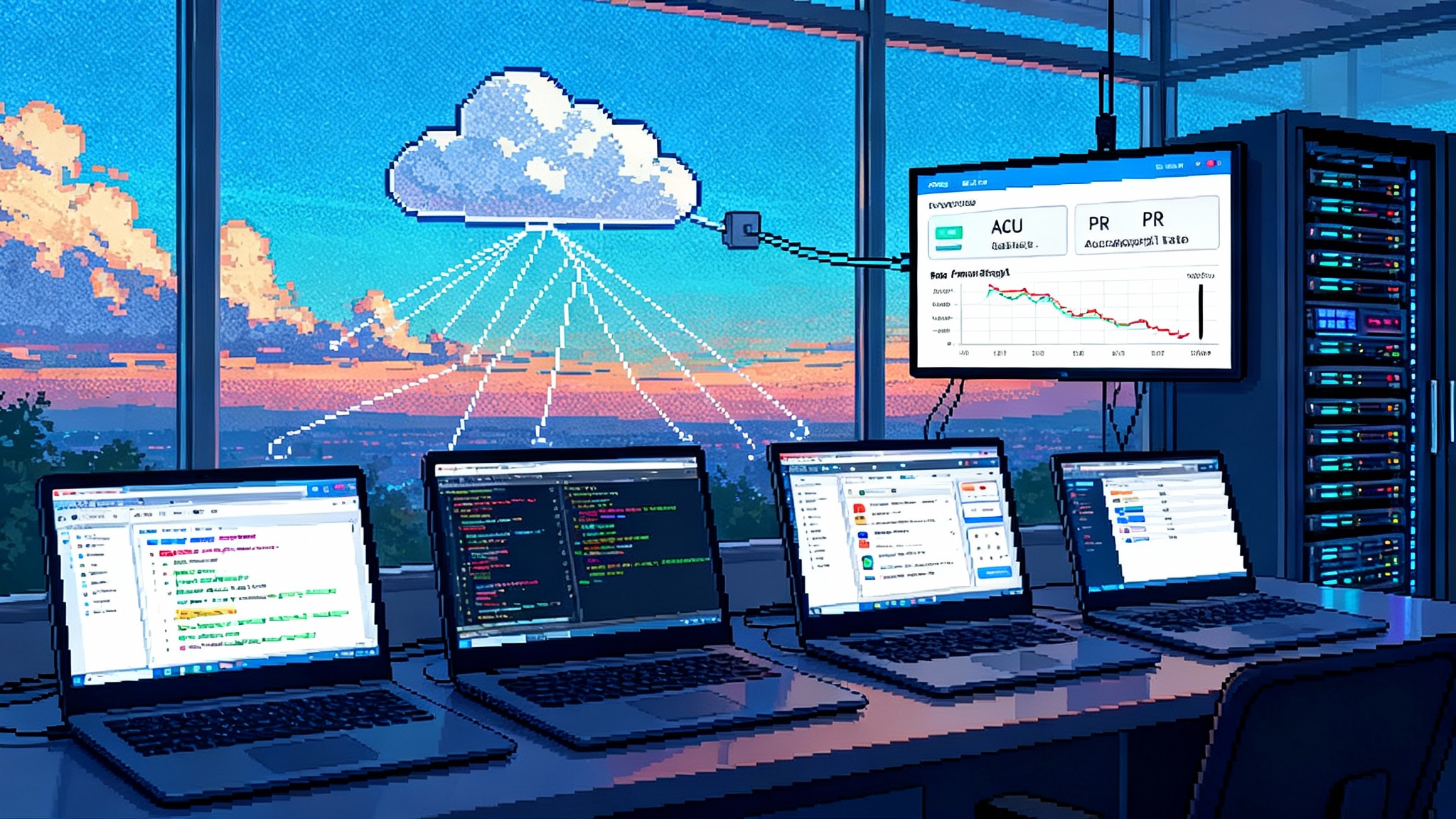

The market signal: safety became a feature

It is telling that security and cloud leaders now brand products in agent terms. Identity vendors introduced offerings that discover shadow agents, govern agent identities, and enforce least privilege at scale. Cloud providers let you attach guardrails through identity policy so that unsafe calls are rejected at the boundary. Network and edge clouds advertise AI firewalls that detect exfiltration and prompt injection and apply topic and content policies across models. Observability platforms treat agent traces and tool calls as first-class telemetry. Platform suites are also pushing governance into the data layer, as seen in Agent Bricks, which treats the lakehouse as the agent runtime.

Platform companies that ship agents also moved this way. Enterprise suites that rolled out agent platforms this fall are emphasizing controls that matter to risk owners: scoped credentials, data-boundary enforcement, tool allowlists, and human-in-the-loop approvals that can be configured per business process. Even traditional firewall and secure-access vendors are acquiring AI security startups whose specialty is runtime governance for agents. Consolidation is a sign that buyers want an integrated control plane rather than a basket of one-off filters.

On the research side, the message from October and November is clear. New attack classes are getting more general, not more niche. Query-agnostic prompt injection for coding agents, typographic prompt injection hidden in images for multimodal agents, and in-browser fuzzing that discovers injection paths in real time all point in the same direction. If you rely on clever prompt templates or a single model’s internal refusals, you will chase attackers and lose ground. If you enforce policy at the tool boundary with least-privilege credentials and action interceptors, you cap what each new attack class can cost you, because it hits a locked door.

A 90-day rollout plan

Week 1

- Inventory agents, tools, and data sources. Build a simple catalog: whom the agent can act as, which tools it can call, which data it accesses, and which actions are destructive.
- Place a gateway in front of your agent tooling. Start with email, repositories, search, and any data export function. Turn on logging and basic redaction. Create a single blocklist for secrets and personal data.
- Require that every model call attach an approved guardrail profile. Enforce this with identity policy so application code cannot skip it.
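The guardrail requirement can be kept as policy-as-code. The sketch below builds an IAM policy document for Amazon Bedrock, modeled on the enforcement pattern AWS documents for Guardrails: allow model invocation only when the request carries a specific guardrail, and explicitly deny it otherwise. The function name and placeholder values are hypothetical. Verify the `bedrock:GuardrailIdentifier` condition key, the ARN formats, and whether a guardrail version suffix is expected against current AWS documentation before deploying.

```python
import json


def guardrail_enforcement_policy(region, account_id, guardrail_id):
    """Build an IAM policy that permits Bedrock model calls only with one guardrail.

    Assumption: condition key and ARN shapes follow the AWS Bedrock Guardrails docs;
    check them against the current documentation before use.
    """
    guardrail_arn = f"arn:aws:bedrock:{region}:{account_id}:guardrail/{guardrail_id}"
    invoke_actions = ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"]
    models = [f"arn:aws:bedrock:{region}::foundation-model/*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Allow invocation when the approved guardrail is attached.
                "Sid": "AllowInvokeWithGuardrail", "Effect": "Allow",
                "Action": invoke_actions, "Resource": models,
                "Condition": {"StringEquals": {"bedrock:GuardrailIdentifier": guardrail_arn}},
            },
            {   # Explicit deny wins over any other allow, including calls with no guardrail.
                "Sid": "DenyInvokeWithoutGuardrail", "Effect": "Deny",
                "Action": invoke_actions, "Resource": models,
                "Condition": {"StringNotEquals": {"bedrock:GuardrailIdentifier": guardrail_arn}},
            },
            {   # The caller also needs permission to apply that guardrail.
                "Sid": "AllowApplyGuardrail", "Effect": "Allow",
                "Action": ["bedrock:ApplyGuardrail"], "Resource": [guardrail_arn],
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder values; substitute your own region, account, and guardrail ID.
    policy = guardrail_enforcement_policy("us-east-1", "111122223333", "example-guardrail-id")
    print(json.dumps(policy, indent=2))
```

The explicit deny is the important half: in IAM, a `StringNotEquals` condition also matches when the key is absent, so a call that omits the guardrail entirely is rejected at the boundary.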

Month 1

- Convert standing credentials to short-lived tokens issued by a broker service. Scope them to specific tools and operations. Remove long-lived keys from code and configuration.
- Define risk thresholds and add interceptors for the top five risky actions in your environment. Wire up a two-signal check, with clear instructions for the human approver when a review is required.
- Expand your firewall rules to include tool-specific allowlists, rate limits, and output filters. Start capturing reason codes for every block or rewrite.

Quarter 1

- Run chaos drills. Use recent attack classes to simulate abuse. Attempt prompt injection via web pages the agent visits, via retrieved documents, and via tool descriptions. Track how often controls, not people, prevent harm.
- Publish an agent safety scorecard. Include policy coverage, least-privilege coverage, interceptor coverage, and incident response times. Share wins and gaps with executives and product teams.
- Bring procurement and legal into the loop. Update vendor questionnaires to require runtime controls: tool firewalls, least-privilege credentials, action interceptors, and audit trails. Require attestations in contracts.
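A chaos drill can start as a simple harness that assumes the worst: the model obeys every injected instruction, so any harm prevented is prevented by controls alone. The `DrillCase` shape, the channel names, and the allowlist below are hypothetical; a real drill would replay recorded attack payloads through your actual gateway and interceptors.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DrillCase:
    channel: str          # "web_page", "retrieved_doc", or "tool_description"
    injected_call: tuple  # (tool, method) the attacker tries to trigger


def compromised_agent(case):
    """Worst-case model: it follows the injected instruction every time."""
    return case.injected_call


def run_drills(cases, allowlist):
    """Share of injected calls stopped by the tool allowlist alone, per channel."""
    stopped = defaultdict(int)
    total = defaultdict(int)
    for case in cases:
        attempted = compromised_agent(case)
        total[case.channel] += 1
        if attempted not in allowlist:
            stopped[case.channel] += 1
    return {channel: stopped[channel] / total[channel] for channel in total}
```

Any rate below 1.0 marks a channel where an injected instruction reached an allowed tool, which is where content rules and interceptors have to carry the load.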

Why this will harden the ecosystem before regulation catches up

Regulation will come, but markets will move faster for three reasons.

- The economics favor safety. Unchecked agents are cheap to launch and expensive to keep. The first injection incident that leaks a thousand sensitive documents or wires money to the wrong account will erase any savings. By contrast, a guardian layer is a fixed cost that lowers risk across every new agent you deploy. It turns each additional agent into a safer marginal investment.
- Buyers already demand controls. Big customers will not sign without audit trails and enforceable policy. Insurers will nudge in the same direction as they price cyber coverage. Data controllers will require proof of least privilege and explainability to satisfy their own obligations under privacy and security frameworks.
- Competition will reward it. The teams that can say yes to more use cases because they can prove control will win the internal roadmaps and the external contracts. Safety will show up as faster approvals, fewer incident hours, and higher customer adoption.

What good looks like by early 2026

If you execute this blueprint, your agent platform will look different in six months.

- Every tool call passes through a policy engine that can block, rewrite, and log.
- Every agent action uses a short-lived, scoped credential that expires in minutes and is revoked at task end.
- Every high-risk action has a circuit breaker that brings in a second checker or a human.
- Every incident investigation starts and ends with structured logs and reason codes.
- Product teams ship faster because they do not need to invent safety for each feature. They adopt a platform contract and focus on business logic.
- Your roadmap expands. When the platform proves that it can contain error and abuse, stakeholders trust the next agent to handle money, code, or sensitive documents.

The moment to act

Fall 2025 was the turning point. Agents stopped being experimental and started doing meaningful work in production. That flipped the risk equation, and the market responded with a layer of runtime safety that lives between models, tools, and data. You can build it now with parts you already own and a few new services that speak the language of agents.

The question is not whether you need guardian agents and AI firewalls. The question is whether you will frame them as a compliance tax or as a capability. The companies that choose the latter will ship more agents into more workflows with less drama. When safety becomes a feature, it becomes a moat.