The Agent Security Stack Goes Mainstream This Fall 2025

This fall’s releases turned agent security from slideware into an enterprise stack. Identity-first agents, guardian oversight, and enforceable guardrails are now shipping across the major platforms. Here is how the pieces fit, what patterns work in production, and a 90‑day plan to get ready for 2026.

Breaking: a new layer for AI just arrived

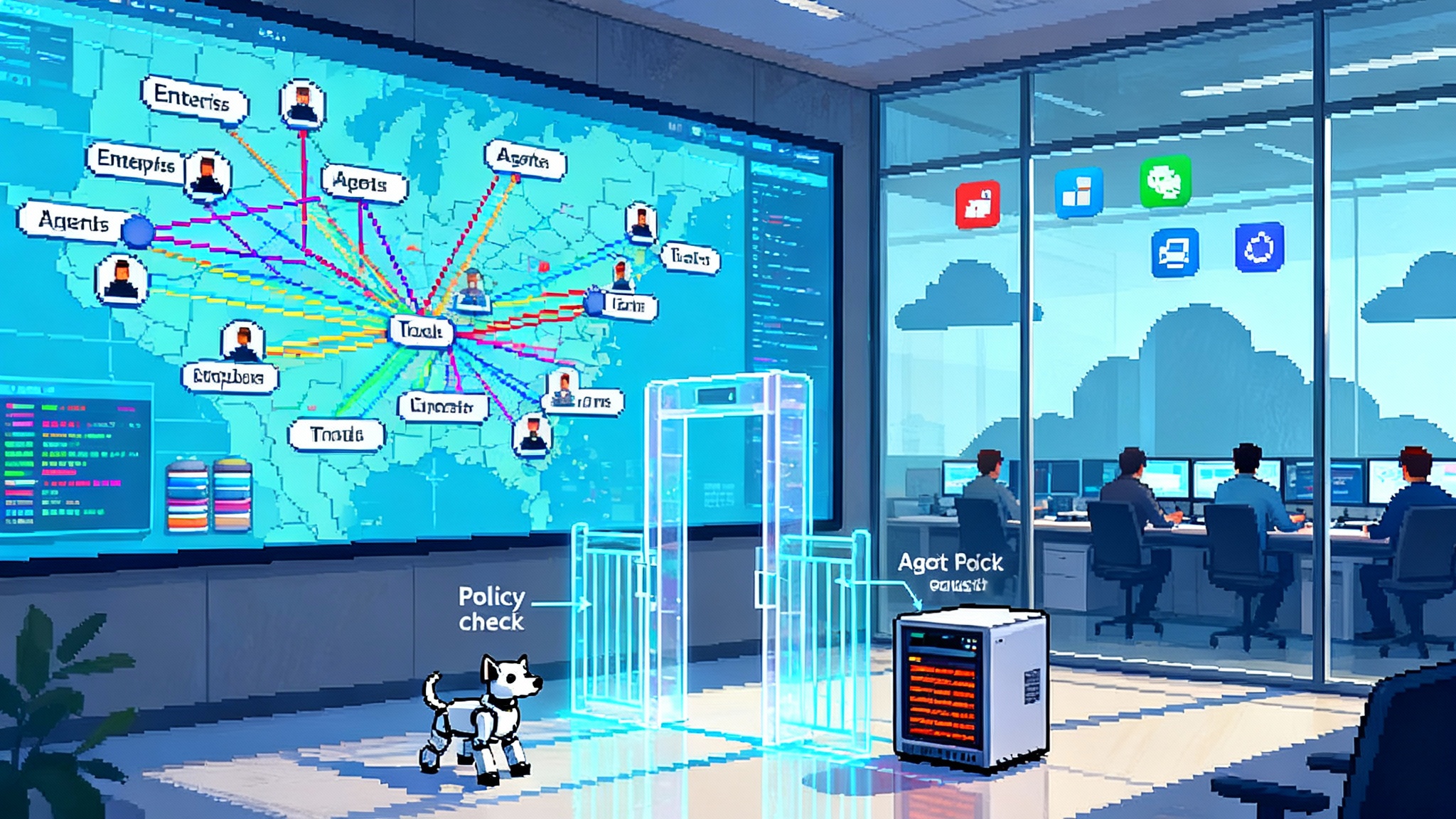

Across the fall 2025 release cycle, something clicked. Security teams finally received what they have been asking for since the first agent demos leapt off slides and into production: a coherent agent security layer. Microsoft introduced Entra Agent ID to give every enterprise agent a first-class identity with policies and logs, tying agents to the same Zero Trust controls used for people and services. That was a signal moment because it made identity the foundation of agent safety rather than an afterthought. Microsoft’s move framed the stack and set a bar others now have to meet, from discovery to governance to incident response. See the Microsoft Entra Agent ID overview.

At the same time, platform and security vendors converged on the rest of the missing pieces. Salesforce hardened how Agentforce runs with least-privilege defaults and admin controls, aligning with the Agentforce security patterns and playbook. ServiceNow shipped an AI Control Tower so enterprises can see and govern every agent and workflow. Cisco added identity-aware Zero Trust network access for agents. AWS deepened Bedrock Guardrails, including policy enforcement through identity integration. CyberArk brought privileged access discipline to agents, and Okta expanded time-boxed permissions with new privileged access tooling. The direction is unmistakable: safe autonomy is becoming the gating feature for enterprise AI in 2026.

Why securing agency, not just accuracy, is the unlock

Most AI safety conversations still gravitate to model accuracy. Useful, but not sufficient. Once an agent can read email, move money, modify customer records, or call developer tools, you are no longer validating a single output. You are delegating action. That changes the risk math.

Think of an agent like a new contractor with a badge to your building. Accuracy is how well they understand instructions. Agency is where their badge can take them, how long it stays active, whether their actions are supervised, and how quickly you can revoke it. If you scale accuracy without governing that badge, you scale both value and blast radius. Enterprises learned this with service accounts a decade ago. They will not repeat the mistake with agents.

The agent security stack, piece by piece

Below is the emerging stack that fall launches are crystallizing. You can mix and match vendors, but the architectural roles are becoming clear.

1) Agent identity and least privilege

- Agent IDs: Every agent gets a unique, verifiable identity at birth, not a shared token. That identity travels with the agent across tools and clouds so you can authenticate, authorize, and audit it like any non-human identity. Microsoft’s Entra Agent ID put this into mainstream enterprise tooling. Workday announced an Agent System of Record and an integration with Entra so that agents can be onboarded, offboarded, and governed alongside employees.

- Just-in-time permissions: Agents should receive permissions only for the task at hand and only for the time window needed. That minimizes lateral movement and reduces the value of stolen credentials. Expect privileged access leaders to extend the same controls they used for human administrators to autonomous agents.

- Least privilege by design: Agents default to no capabilities. Admins grant only the actions and data required, with clear separation between read and write, and between internal and external calls. Salesforce’s guidance for Agentforce emphasizes permission-set-led security and tight scoping of actions, which aligns with reasoning LLMs in production.

- Continuous session recording: Treat agent sessions like privileged human sessions. Capture inputs, tool calls, and outputs for forensics and model improvement, while respecting privacy and compliance constraints.

Outcome: You always know which agent did what, when, and under which policy. You can roll permissions back without breaking the rest of the system.

2) Guardian agents for automated oversight

Security and risk leaders have been piloting watchdogs for months. Guardian agents supervise other agents, check intent against policy, and intervene when behavior goes out of bounds. They reduce the human review burden while catching violations at machine speed.

Practical patterns are emerging:

- Reviewer: Scans proposed actions for policy violations, compliance flags, or sensitive data exposure before execution.

- Monitor: Observes multi-step plans and tool calls in real time. If the agent drifts off policy or tries to contact an untrusted domain, it routes to a human or a safer path.

- Protector: Holds a veto. It can block a financial transfer above a threshold, stop a bulk export of customer data, or quarantine a suspicious workflow.

Gartner's midyear prediction that guardian technology would become a material slice of the market captured a real operational need. The mechanism matters: guardian agents must be separable from the agents they oversee, measured against clear rules, and auditable.

Outcome: You move from spot checks to continuous, automated oversight with crisp escalation paths.

3) Agent firewalls and guardrails

Enterprises now install controls at the edge of agent conversations and tool use, not simply inside prompts. These are policy engines that analyze inputs, outputs, and sometimes the full chain of tool calls.

- Input sanitizers: Catch prompt injection, jailbreak attempts, toxic or regulated content, and malformed tool payloads before they hit the model or backends.

- Output filters: Prevent secrets disclosure, protect regulated data, and keep responses on topic.

- Session guardrails: Apply organization policy to every call. AWS’s guardrail enforcement model shows how this is shifting from best practice to technical control, with identity and policy in the loop rather than only application code.

- Micro-models for control: Lightweight classifiers and topic models run alongside agents to provide faster, cheaper guardrails than routing everything through a single large model.

Vendors offering these layers include network and edge providers, model platform providers, and open tooling. The details differ, but the intent is consistent: block risky content and actions early, cheaply, and observably.

Outcome: You reduce the noise hitting your models and tools, and you embed organizational policy in the path of every agent call.

4) Continuous red teaming and assurance

Annual pen tests do not keep up with agents that change weekly and face adversaries who iterate daily. Enterprises are adopting continuous red teaming that combines automated attack generation, curated benchmarks, and human operators.

Capabilities to look for:

- Multi-turn, agentic adversaries: Attackers do not stop at one prompt. Modern red teams simulate persistent adversaries that adapt tactics when blocked and try multi-agent exploit chains.

- Sandboxed tool abuse: Safe test environments where agents can attempt file system writes, network calls, or database actions without risking production systems.

- Safety regression testing: Every new prompt, tool, or model version runs through the same battery of policy, privacy, and abuse tests so teams catch regressions before incidents.

- Exploit explainability: Actionable reports that identify the root cause. For example, a model that treats external web content as authoritative without source validation, combined with an agent that lacks a Trusted URLs allowlist, leads to data exfiltration risk.

Outcome: You turn static guardrails into a living program that learns from new attack classes and hardens defenses every sprint.

Zero Trust grows up for non-human identities

Zero Trust was built on a few simple truths: never assume trust from location, verify continuously, and minimize privileges. In the agent era those truths apply to non-human identities and to multi-agent systems that span clouds and workplace platforms.

What changes in practice:

- Identity is the new perimeter for agents too: If an action has impact, it must come from a named agent identity, not a shared token. Attributes such as business owner, supported tools, data domains, and risk tier ride along with the identity to inform runtime policy decisions.

- Network trust shrinks to the minimum: Agent traffic must be authenticated and authorized, segmented by purpose, and observable. Network vendors are adding agent awareness to their Zero Trust access layers so policy can differentiate a billing bot from a developer assistant, even if both run in the same cluster.

- Device and workload posture for tool connectors: Agents that invoke browsers, desktop apps, or service endpoints must inherit posture checks from those endpoints. If the connector fails attestation, the agent cannot proceed.

- Break-glass design: When an incident triggers, your platform must be able to revoke one agent’s permissions or quarantine a family of agents by tag without touching unrelated systems.

This is Zero Trust applied to the fabric of software that now acts on your behalf.

The platforms are wiring it in

A year ago, agent security looked like a patchwork of startup tools and hand-rolled middleware. This fall, mainstream platforms are converging on first-party controls and standards.

- Microsoft anchored the stack with Entra Agent ID, Purview data controls for agents, and Defender signals flowing into developer consoles. Security teams can govern agents alongside users and service principals.

- Workday’s Agent System of Record integrates with Entra so that enterprises can manage agents across HR and finance workflows with the same joiner-mover-leaver rigor used for people.

- ServiceNow added an AI Control Tower to centralize visibility, governance, and lifecycle operations for agents, whether they are native or third party.

- Salesforce is pushing least-privilege patterns and permission-set-led security in Agentforce, along with admin controls that reduce agent overreach.

- AWS advanced guardrail enforcement at the identity and policy layer, not just in application code, which makes consistent controls more practical at scale.

- Cisco extended network controls with identity-aware access for agents and deeper policy observability, fusing Zero Trust into the network fabric.

- Interoperability is accelerating. Google launched an open Agent2Agent protocol so agents from different vendors can collaborate with enterprise-grade authentication, authorization, and audit semantics. Microsoft publicly committed to support the spec in its own agent platforms. This foundation aligns with our overview of the open agent stack and A2A details. See the original Agent2Agent protocol announcement.

The throughline is clear. The controls enterprises already use for humans and microservices are being adapted to agents and standardized across ecosystems.

Design patterns that work in production

- Agent identity first: Assign a unique identity to every agent and every tool adapter. Store owner, purpose, risk tier, and expiration on the identity. Ban shared tokens.

- Capability attestation: Before an agent can call a tool, require signed attestation about what that tool does, where it runs, and what data it touches. Reject unknown or unverifiable tools.

- Time-boxed scopes: Replace broad, long-lived API keys with scopes that expire quickly and are tied to the task context. If the agent needs more, it requests elevation through a workflow.

- Trusted URLs and domains: Maintain explicit allowlists for outbound agent calls, web retrieval, and webhook targets. Block everything else by default.

- Execution sandboxes: For code-writing or browser-using agents, run in isolated sandboxes with strict egress rules and resource quotas. Log system calls for later investigation.

- Two-channel confirmation: For high-impact actions, require a second channel such as a human approval, a guardian agent veto check, or a cryptographic token tied to the business owner.

- Observation by default: Log prompts, tool calls, and decisions with privacy controls and retention policies. Turn those logs into dashboards for security and operations teams.

- Kill switch and circuit breakers: Make it one click to disable an agent or a class of agents by tag. Use automatic breakers on anomaly triggers such as data volume spikes or policy violations.

- Continuous red teaming in CI: Add automated attack suites to your integration pipeline. Fail the build on new policy violations, not just broken tests.

A 90-day plan to get ready for 2026

- Inventory and classify agents: Create a catalog of every agent in production or pilot with owner, purpose, risk tier, data access, tool list, and external dependencies. You cannot secure what you cannot see.

- Assign Agent IDs and remove shared credentials: Move each agent to a unique identity with rotation and logging. Replace static tokens with short-lived, scoped credentials.

- Enforce least privilege: Start with read-only access where possible, split read from write, and prune unused permissions. Tie any elevation to approvals and alerts.

- Stand up a guardian: Begin with a reviewer that checks actions against policy in your highest risk workflows. Measure how often it catches issues and how many false positives it creates.

- Put a firewall in front of agents: Add input and output filters for prompt injection, sensitive data, and topic control. Start with your customer-facing agents and anything with web access.

- Wire in red teaming: Adopt a continuous red teaming tool that can simulate multi-turn attacks. Schedule weekly runs and track a safety score in your program dashboard.

- Build the kill switch: Prove you can disable a misbehaving agent in minutes, not hours. Practice the playbook.

- Update third-party contracts: Require agent identity, audit logs, Trusted URLs, and red team cooperation in your vendor agreements. If a partner’s agent will act in your environment, it must meet your controls.

What changes in 2026

- Safe autonomy becomes a buying criterion: Procurement checklists will require Agent IDs, least-privilege enforcement, guardian compatibility, red teaming attestation, and a kill switch. If your platform cannot check those boxes, it will not clear security review.

- Identity leaders become agent governors: Identity and access management teams will own policy for non-human identities at scale, including joiner-mover-leaver for agents and lifecycle integration with business systems.

- Interop with policy: As A2A spreads, cross-vendor multi-agent workflows will become common. The differentiator will be policy portability and audit metadata that can travel across clouds without losing meaning.

- New metrics: Security teams will report on agent trust posture, including percentage of agents on unique IDs, percentage under guardian oversight, average time to revoke, and red team pass rates.

- Regulatory attention: Auditors will ask how you prevent agent overreach and how you detect and respond to prompt injection and data exfiltration. Logs and reproducible tests will matter as much as policies.

The bottom line

Autonomy without accountability is a nonstarter in the enterprise. The market internalized that lesson this fall and began shipping the missing layers, from identity to oversight to edge defenses and continuous assurance. The effect is practical. Security leaders can now define and enforce what an agent may do, where and when it can do it, and who is responsible when something goes wrong.

The winners in 2026 will not be those who promise the boldest agents. They will be those who ship the most governable ones. If you treat agents like first-class identities, wrap them with real-time oversight, put firewalls and guardrails in their path, and red team them like adversaries, you earn the right to scale autonomy. That is how enterprise AI leaves the lab and becomes dependable infrastructure.