Agentforce 360 Arrives and a New Playbook for Agent-Native SaaS

Salesforce’s Agentforce 360 moves enterprise AI from chat windows to core systems. Here’s why domain-aware agents inside CRM and ERP outperform generic stacks, how runtime governance is evolving, and a 90‑day plan to ship production agents in 2026.

Breaking: Salesforce turns CRM into an agent runtime

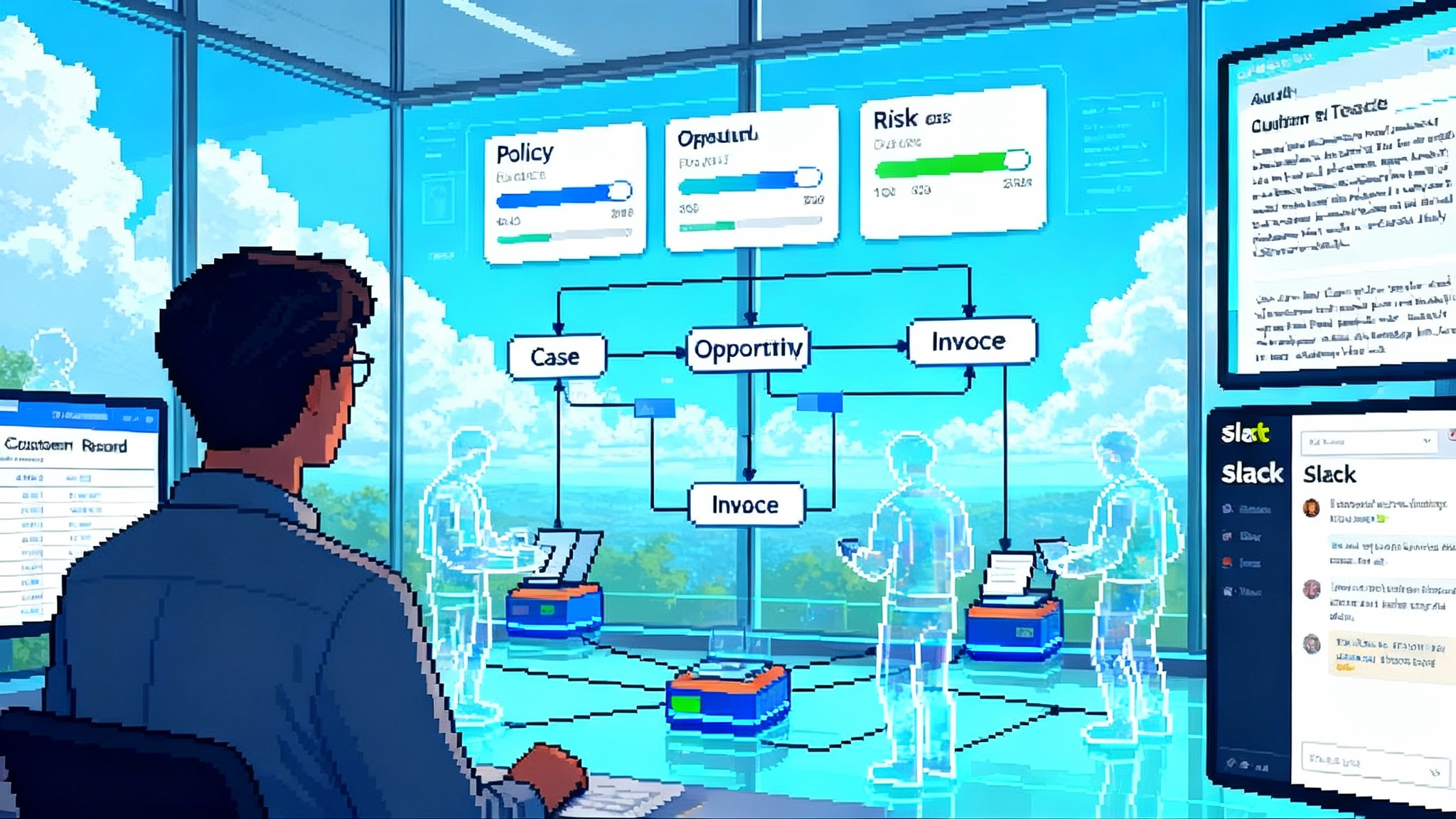

Salesforce has moved the center of gravity for enterprise AI from chat windows to core systems. With the general availability of Agentforce 360 in mid-October 2025, the company positioned its customer platform as a place where humans and software agents share the same workspace, rules, and data. In practical terms, AI agents can read, write, and act across sales, service, marketing, and commerce objects while being governed by the same controls that already run the business. For details, see Salesforce’s announcement of the Agentforce 360 launch.

Salesforce says more than ten thousand customers have already deployed the underlying Agentforce capabilities, with case studies pointing to real deflection and response-time gains. External reporting notes a global launch and Slack integration for conversational control inside the enterprise. The signal is not the marketing language. It is that the agentic pattern has crossed from proofs of concept to production. For independent corroboration of the launch’s scale, see Reuters’ coverage.

We have seen this shift echoed elsewhere: in workspaces that act as runtimes, as with Notion, and in how reasoning LLMs are being run in production.

Why vertically integrated, domain-aware agents will outpace generic stacks

Think of a generic agent stack as a brilliant intern with a universal toolbox but no building access card. You can describe a task, and the intern will try hard, but every step requires a handoff to get data, permissions, or a system to click. A domain-aware agent inside your customer platform is a tenured colleague who already has the badge, understands your data model, and knows the standard operating procedures. That colleague can move from insight to action because the environment and the tools are already connected.

Three forces make embedded agents faster and safer than general-purpose assemblages:

- Data adjacency. Enterprise platforms like CRM and ERP are already the source of truth for customers, orders, entitlements, and approvals. When an agent lives inside that environment, it does not need elaborate retrieval layers to reason over the objects that matter. It can ground its steps in records that have owners, histories, and policies.

- Actionability by default. Modern platforms expose fine-grained actions as first-class objects. In Salesforce, flows, Apex, and automations are already battle-tested. In SAP, service orders and purchase requests are governed transactions. In ServiceNow, catalog items encode repeatable change. Agents that call these primitives inherit controls and observability rather than inventing them.

- Governance continuity. The compliance and security work you have already done for role-based access, field-level permissions, audit trails, and data residency does not evaporate. Agents become new users with policies, not shadow scripts that live outside the system of record.

That is why a support agent embedded in CRM can read the customer’s entitlement, scan relevant knowledge articles, collect the missing logs, and either resolve or route with a justified summary. A sales agent can qualify a new lead, look for similar past wins, schedule time with the right account executive, and update the opportunity with a transparent rationale you can review later. A finance agent in ERP can validate a vendor invoice against a purchase order and goods receipt, and then request a human sign-off only when a threshold or policy rule requires it.

The early Agentforce cadence matters here. Salesforce introduced Agentforce capabilities in late 2024, then iterated on reasoning, interoperability, and governance through multiple releases before the Agentforce 360 packaging. That rhythm reflects how much real-world plumbing is required to make agents durable in production.

The runtime governance layer takes shape

Agent platforms are learning that policy is not a document. Policy must be executable at runtime. Two constructs are becoming common, regardless of vendor, and you should expect them in any agent-native stack you deploy.

Policy cards

A policy card is an explicit instruction set that travels with an agent. It defines what the agent can see, what it can change, and what approvals it must request. It resembles a payment card with spending limits and merchant categories, but applied to data and actions. A policy card should include:

- Scope. The objects and fields the agent may read and write. For example, Cases: read all; create; update fields A, B, and C; never touch field D.

- Allowed actions. The flows, automations, and external tools the agent may call. Think of this as a whitelist of verbs.

- Guardrails. Quantitative limits such as maximum refund amount, maximum records updated per hour, or required human approval if an answer’s confidence score falls below a threshold.

- Audit tags. Labels that the agent must attach to every change so that downstream analytics can segment outcomes by agent, policy, and use case.

If your platform supports executable policy, the card can be enforced by the engine that resolves access control and monitors actions. If your platform does not, implement a policy middleware that wraps every tool call and blocks anything outside the card.
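The middleware option can be sketched in a few lines. This is a minimal Python illustration, not any vendor’s API: `PolicyCard`, `guarded_call`, `PolicyViolation`, and the verb and field names are all hypothetical. The wrapper checks the verb allowlist, the writable fields, and a per-verb amount cap before the tool runs, then records the card’s audit tags. A production engine would also enforce read scope and rate limits such as records updated per hour, which this sketch omits.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class PolicyViolation(Exception):
    """Raised when a tool call falls outside the agent's policy card."""


@dataclass(frozen=True)
class PolicyCard:
    # Scope: object name -> fields the agent may write (hypothetical names).
    writable_fields: dict[str, frozenset[str]]
    # Allowed actions: the allowlist of verbs the agent may call.
    allowed_verbs: frozenset[str]
    # Guardrails: per-verb cap on an "amount" argument, e.g. refunds.
    amount_limits: dict[str, float] = field(default_factory=dict)
    # Audit tags attached to every change the agent makes.
    audit_tags: frozenset[str] = field(default_factory=frozenset)


def guarded_call(
    card: PolicyCard,
    verb: str,
    obj: str,
    fields: list[str],
    args: dict[str, Any],
    tool: Callable[..., Any],
    audit_log: list[dict[str, Any]],
) -> Any:
    """Block anything outside the card; otherwise run the tool and log it."""
    if verb not in card.allowed_verbs:
        raise PolicyViolation(f"verb not allowed: {verb}")
    blocked = set(fields) - card.writable_fields.get(obj, frozenset())
    if blocked:
        raise PolicyViolation(f"fields not writable on {obj}: {sorted(blocked)}")
    limit = card.amount_limits.get(verb)
    if limit is not None and args.get("amount", 0) > limit:
        raise PolicyViolation(f"{verb} amount exceeds card limit of {limit}")
    result = tool(**args)
    audit_log.append({"verb": verb, "object": obj, "tags": sorted(card.audit_tags)})
    return result


card = PolicyCard(
    writable_fields={"Case": frozenset({"Status", "Priority"})},
    allowed_verbs=frozenset({"update_case", "issue_refund"}),
    amount_limits={"issue_refund": 50.0},
    audit_tags=frozenset({"agent:support-triage", "policy:v1"}),
)
log: list[dict[str, Any]] = []
# Allowed: the verb is on the list and "Status" is a writable field.
guarded_call(card, "update_case", "Case", ["Status"], {"status": "Closed"},
             lambda status: status, log)
```

The design choice is that blocking happens before the tool executes, so a rejected call leaves no side effects and no audit entry.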

Autonomy risk scoring

Not all tasks are created equal. A safe degree of autonomy for a password reset is not the same as for a contract amendment. An autonomy risk score is a number between 0 and 100 that combines the impact of a mistake, the reversibility of the action, and the observability of the process. Use the score to select one of four operating modes, listed here from least to most autonomous:

- Suggest. The agent drafts a response or plan. A human approves each step.

- Supervised act. The agent performs a sequence of steps, and a human can interrupt. This is useful for long-running workflows such as complex case resolution.

- Constrained act. The agent executes actions under strict limits from the policy card, logs every step, and pings a human when a limit is reached.

- Free act with audit. Reserved for low-risk, easily reversible actions with strong logging and rollback.
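One way to make the score concrete is a weighted sum of three ratings. The Python sketch below is an assumption, not a published formula: the weights, the 0-to-1 rating scales, the mode thresholds, and the names `autonomy_risk_score` and `select_mode` are illustrative. Higher scores mean more risk and therefore less autonomy. The modes are ordered the way the 90-day blueprint in this piece promotes them, from Suggest through Supervised act to Constrained act, with Free act with audit reserved for the lowest scores.

```python
from enum import Enum


class Mode(Enum):
    SUGGEST = "Suggest"
    SUPERVISED = "Supervised act"
    CONSTRAINED = "Constrained act"
    FREE = "Free act with audit"


def autonomy_risk_score(impact: float, reversibility: float, observability: float) -> float:
    """Combine three 0-1 ratings into a 0-100 risk score (weights are hypothetical)."""
    for value in (impact, reversibility, observability):
        if not 0.0 <= value <= 1.0:
            raise ValueError("ratings must be between 0 and 1")
    # Higher impact, lower reversibility, and lower observability all raise risk.
    risk = 0.5 * impact + 0.3 * (1 - reversibility) + 0.2 * (1 - observability)
    return round(100 * risk, 1)


def select_mode(score: float) -> Mode:
    """Map a risk score to an operating mode; thresholds are illustrative."""
    if score >= 75:
        return Mode.SUGGEST
    if score >= 50:
        return Mode.SUPERVISED
    if score >= 25:
        return Mode.CONSTRAINED
    return Mode.FREE


# A password reset: low impact, easily reversed, fully logged.
reset = autonomy_risk_score(impact=0.1, reversibility=0.95, observability=0.9)
# A contract amendment: high impact, hard to reverse, partly observable.
amendment = autonomy_risk_score(impact=0.9, reversibility=0.2, observability=0.5)
print(reset, select_mode(reset))
print(amendment, select_mode(amendment))
```

Because the score is computed per use case, promoting one workflow to a more autonomous mode does not change the mode of any other workflow the same agent handles.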

As your metrics improve, you increase autonomy for the specific use case, not for the agent in general. This use-case level grading prevents the classic failure where one flashy demo leads to over-permissioning.

Agents also need reliable memory of what was done and why. Expect a trace view that records each step the agent took: the tools it used, their inputs and outputs, and its confidence signals. Expect policy checks and approvals to be part of that trace. Vendors that build inside enterprise systems can log these traces to the same stores you already use for compliance, a pattern that also shows up with reasoning LLMs in production.
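A trace of this kind can be as simple as an append-only list of structured steps. The Python sketch below is hypothetical: `TraceStep`, `AgentTrace`, and the field names are assumptions chosen to mirror the elements listed above (tool, inputs, outputs, confidence, policy check, approval), and the destination store is left out.

```python
import json
import time
from dataclasses import asdict, dataclass, field
from typing import Any, Optional


@dataclass
class TraceStep:
    tool: str
    inputs: dict[str, Any]
    outputs: dict[str, Any]
    confidence: float  # model or heuristic confidence signal, 0 to 1
    policy_check: str  # e.g. "passed" or "blocked: <reason>"
    approved_by: Optional[str] = None  # approver role, when one was required
    timestamp: float = field(default_factory=time.time)


@dataclass
class AgentTrace:
    agent_id: str
    use_case: str
    steps: list[TraceStep] = field(default_factory=list)

    def record(self, step: TraceStep) -> None:
        self.steps.append(step)

    def to_json(self) -> str:
        # Serialized form for an existing compliance or log store (not shown).
        return json.dumps(asdict(self), sort_keys=True)


trace = AgentTrace(agent_id="support-agent-1", use_case="password_reset")
trace.record(TraceStep(
    tool="lookup_entitlement",
    inputs={"case_id": "00123"},
    outputs={"tier": "gold"},
    confidence=0.92,
    policy_check="passed",
))
print(trace.to_json())
```

Keeping the policy result and approver on the same record as the tool call is what lets an auditor reconstruct why an action was allowed without joining separate logs.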

Architecture patterns for agent-native SaaS

- Records as the environment. Treat your object model as the world the agent inhabits. The agent perceives through record queries and acts through record mutations and action calls. This keeps reasoning grounded in business reality.

- Skills as platform verbs. Bind agent skills to platform flows and APIs. A skill is not just a prompt. It is a contract that says: given inputs, call these verbs, return structured outcomes, and include audit tags. For open interfaces and interop, see the open agent stack.

- Context packs. Prepare first-party knowledge in reusable packs. For service, that might be entitlements, top solutions, and known failure signatures. For sales, it might be competitor counters, proof points, and discount policies. Keep packs versioned and testable.

- Simulation by default. Before you give an agent access to production, replay it against historical traces. For service, feed last quarter’s cases and score whether the agent’s proposals match human actions. For sales, simulate lead triage and look for false positives that would waste account executive time.

- Human in the loop made specific. Do not write “requires approval.” Define the approver role, the signal that triggers approval, and the expected time to decision. Then instrument it.
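The “skills as platform verbs” idea, a contract of inputs, verbs, structured outcome, and audit tags, can be sketched as follows. This is a hypothetical Python illustration: `Skill`, `SkillOutcome`, and the verb names are assumptions, and the lambdas stand in for real platform flows or APIs.

```python
from dataclasses import dataclass
from typing import Any, Callable, Mapping

# A platform verb takes the current state and returns fields to merge into it.
Verb = Callable[[dict[str, Any]], dict[str, Any]]


@dataclass(frozen=True)
class SkillOutcome:
    status: str  # e.g. "resolved", "routed", or "needs_approval"
    summary: str
    audit_tags: tuple[str, ...]


@dataclass(frozen=True)
class Skill:
    name: str
    required_inputs: frozenset[str]
    verbs: tuple[str, ...]  # platform verbs this skill may call, in order
    audit_tags: tuple[str, ...]

    def run(self, inputs: Mapping[str, Any], platform: Mapping[str, Verb]) -> SkillOutcome:
        missing = self.required_inputs - set(inputs)
        if missing:
            raise ValueError(f"{self.name}: missing inputs {sorted(missing)}")
        state = dict(inputs)
        for verb in self.verbs:
            state.update(platform[verb](state))
        # Default to asking a human when no verb produced a status.
        return SkillOutcome(
            status=str(state.get("status", "needs_approval")),
            summary=str(state.get("summary", "")),
            audit_tags=self.audit_tags,
        )


triage = Skill(
    name="case_triage",
    required_inputs=frozenset({"case_id"}),
    verbs=("lookup_entitlement", "resolve_or_route"),
    audit_tags=("agent:support", "skill:case_triage"),
)
# Stand-in verbs; a real deployment would bind these to platform flows or APIs.
platform: dict[str, Verb] = {
    "lookup_entitlement": lambda s: {"tier": "gold"},
    "resolve_or_route": lambda s: {
        "status": "resolved",
        "summary": f"Resolved {s['case_id']} under {s['tier']} entitlement",
    },
}
outcome = triage.run({"case_id": "00123"}, platform)
print(outcome)
```

Declaring the verbs on the skill, rather than letting a prompt choose freely, keeps the set of possible actions reviewable and lets the policy layer check them ahead of time.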

The new vendor evaluation checklist

When your team puts together a request for proposal next year, cut through the hype with this concrete checklist:

- Native objects and verbs. Which objects can the agent read and write without custom integration work? Which platform verbs are first class to the agent?

- Governance primitives. Are policy cards executable? Are approvals and limits enforced by the engine that runs actions, or only by out-of-band code?

- Trace and rollback. Can I view a single agent’s reasoning steps with inputs and outputs? Can I revert an action or a batch of actions safely?

- Model choice without glue code. Can I swap or blend models for different tasks while keeping the same policy and trace layer? Can I restrict a model to specific autonomy modes?

- Workload economics. What is the cost per resolved ticket, per qualified lead, or per workflow completed, including model usage and platform runtime? Ask vendors to express cost in business outcomes, not tokens.

- Security boundary clarity. Does the agent run as a first-class user inside the platform with role-based access, or as an external service with a long-lived key? The former is usually cleaner to govern.

A 90-day blueprint to ship production agents in 2026

You want live value, not another pilot. Here is a pragmatic plan that a cross-functional team can execute between January and March 2026.

Days 0 to 30: pick one workflow and instrument it

- Choose a single, high-volume, low- to medium-risk workflow in a core system. Examples: password reset and entitlement lookup in service, lead triage in sales, employee onboarding in IT service, invoice matching in finance.

- Write the policy card. Name the objects, fields, and verbs, and set quantitative guardrails. Define the autonomy risk score and start in Suggest mode.

- Build the context pack. Extract the top 50 knowledge articles or playbooks, normalize responses, and remove stale or policy-violating content.

- Simulate with real history. For two weeks, run the agent against last quarter’s records in a sandbox. Measure proposed actions against what humans did.

- KPIs: simulation precision above 80 percent, average response latency under 8 seconds for Suggest mode, zero violations of guardrails in simulation, and trace completeness of 100 percent.
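The first-phase KPIs can be checked mechanically once each replayed record is stored with the human’s action and the agent’s proposal. The Python sketch below assumes one definition of simulation precision (matching proposals divided by all proposals, with abstentions excluded); that definition, the `SimResult` fields, and the sample batch are illustrative, not taken from any platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class SimResult:
    case_id: str
    human_action: str  # what the human actually did last quarter
    agent_action: Optional[str]  # what the agent proposed; None if it abstained
    latency_s: float
    guardrail_violations: int
    trace_complete: bool


def phase_one_gate(results: list[SimResult]) -> dict[str, bool]:
    """Check the days 0-30 KPIs against a non-empty batch of replayed records."""
    if not results:
        raise ValueError("need at least one simulated record")
    proposals = [r for r in results if r.agent_action is not None]
    matches = sum(r.agent_action == r.human_action for r in proposals)
    precision = matches / len(proposals) if proposals else 0.0
    return {
        "precision_above_80pct": precision > 0.80,
        "avg_latency_under_8s": mean(r.latency_s for r in results) < 8.0,
        "zero_guardrail_violations": sum(r.guardrail_violations for r in results) == 0,
        "trace_completeness_100pct": all(r.trace_complete for r in results),
    }


# Illustrative batch: nine matching proposals, one mismatch, one abstention.
batch = [SimResult(f"c{i}", "route", "route", 3.0, 0, True) for i in range(9)]
batch.append(SimResult("c9", "resolve", "route", 5.0, 0, True))
batch.append(SimResult("c10", "resolve", None, 2.0, 0, True))
print(phase_one_gate(batch))
```

Returning each KPI separately, rather than a single pass or fail, shows the team which gate is blocking go-live.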

Days 31 to 60: go live in supervised mode and close the loop

- Deploy to a small group and run in Supervised act mode. The agent executes steps while a designated supervisor can interrupt or approve. This is not a demo. It handles real tickets or leads during business hours.

- Instrument human effort. Measure the time humans spend approving or correcting, not just final outcomes. The point is to learn where the agent creates real leverage.

- Expand verbs carefully. If the agent shows consistent safety and accuracy, grant one additional verb from the whitelist, such as issuing credits up to a small limit or creating follow-up tasks.

- Start the improvement loop. Use the trace to identify common failure patterns. Update context packs and prompts weekly. Run a regression simulation before each update.

- KPIs: 15 to 25 percent deflection or automation rate for the chosen workflow, supervisor override rate under 20 percent, accuracy above 90 percent on audited samples, and no more than 1 percent of actions requiring rollback.
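These second-phase KPIs reduce to a few ratios over weekly counts. In the hypothetical Python sketch below, override and rollback rates are measured against actions the agent executed, and the automation gate uses the bottom of the 15 to 25 percent range. Both choices are assumptions, as are the class name and the sample week.

```python
from dataclasses import dataclass


@dataclass
class SupervisedWeek:
    total_items: int  # tickets or leads entering the workflow
    automated: int  # items the agent completed end to end
    agent_actions: int  # actions the agent executed
    overrides: int  # actions a supervisor interrupted or changed
    rollbacks: int  # actions later reverted
    audited: int  # sampled actions reviewed by a human auditor
    audited_correct: int


def phase_two_gate(w: SupervisedWeek) -> dict[str, bool]:
    """Check the days 31-60 KPIs; assumes all denominators are non-zero."""
    return {
        "automation_at_least_15pct": w.automated / w.total_items >= 0.15,
        "override_under_20pct": w.overrides / w.agent_actions < 0.20,
        "audited_accuracy_above_90pct": w.audited_correct / w.audited > 0.90,
        "rollback_at_most_1pct": w.rollbacks / w.agent_actions <= 0.01,
    }


# Illustrative week of supervised operation.
week = SupervisedWeek(total_items=1000, automated=200, agent_actions=400,
                      overrides=60, rollbacks=3, audited=100, audited_correct=94)
print(phase_two_gate(week))
```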

Days 61 to 90: graduate to constrained autonomy and scale

- Move to Constrained act for low-risk actions. Keep Suggest for anything touching money or compliance until you have two consecutive weeks of green metrics.

- Integrate into collaboration. Surface the agent in your chat workspace so humans can watch, nudge, and request audits on demand. This shortens the feedback loop between line workers and builders.

- Expand to a second workflow in the same department. Reuse the policy card skeleton and context pack pattern. The second workflow is almost always faster to bring online.

- Establish runbooks. Create one-page runbooks for on-call responders that include kill-switch steps, rollback procedures, and a checklist for policy updates.

- KPIs: 30 to 40 percent automation rate in the original workflow with customer satisfaction flat or up, cost per resolved interaction down at least 20 percent versus baseline, p95 latency under 5 seconds for automated steps, and a rising autonomy score for the use case.
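The promotion rule above (move to Constrained act only after two consecutive weeks of green metrics) and the third-phase KPIs can be combined into a small check. The Python sketch below is illustrative: it uses a nearest-rank 95th percentile (one of several common percentile definitions), treats 30 percent as the automation floor, and the function names are hypothetical.

```python
def p95(latencies_s: list[float]) -> float:
    """Nearest-rank 95th percentile of a non-empty list of latencies."""
    ordered = sorted(latencies_s)
    rank = (95 * len(ordered) + 99) // 100  # integer ceiling of 0.95 * n
    return ordered[rank - 1]


def week_is_green(latencies_s: list[float], automation_rate: float,
                  cost_per_interaction: float, baseline_cost: float,
                  csat: float, baseline_csat: float) -> bool:
    """Apply the days 61-90 KPIs to one week of data."""
    return (
        p95(latencies_s) < 5.0
        and automation_rate >= 0.30
        and cost_per_interaction <= 0.8 * baseline_cost  # at least 20% cheaper
        and csat >= baseline_csat  # satisfaction flat or up
    )


def can_promote(weekly_green: list[bool], streak: int = 2) -> bool:
    """True when the most recent `streak` weeks were all green."""
    return len(weekly_green) >= streak and all(weekly_green[-streak:])


history = [True, False, True, True]
print(can_promote(history))
```

Requiring the streak to be the most recent weeks, not any two green weeks, means a single bad week resets the clock.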

Metrics that translate to finance and legal

Executives will support agents when the metrics connect to cost and risk.

- Cost per outcome. Express the full-stack cost to resolve a case, qualify a lead, or complete an onboarding. Include model usage, platform runtime, and incremental licenses. Trend it weekly.

- Risk-adjusted autonomy. Publish the autonomy score by use case and the policy card limits. This shows legal what the agent can and cannot do, with numbers rather than adjectives.

- Audit depth. Track trace coverage and mean time to audit. If a regulator or customer asks why something happened, how quickly can you reconstruct the steps?

- Human leverage. Measure hours of repetitive work removed and hours reallocated to complex cases, not headcount moved. This reframes the conversation from replacement to value creation.
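Cost per outcome is simple arithmetic, but writing it down forces agreement on what counts as cost and what counts as an outcome. The Python sketch below is a minimal illustration under stated assumptions: license spend is allocated evenly per week, and the week labels and dollar figures are made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WeeklyCost:
    week: str
    model_usage: float
    platform_runtime: float
    incremental_licenses: float  # license cost allocated to this week
    outcomes: int  # resolved cases, qualified leads, or completed onboardings

    @property
    def cost_per_outcome(self) -> float:
        total = self.model_usage + self.platform_runtime + self.incremental_licenses
        return total / self.outcomes


def weekly_trend(weeks: list[WeeklyCost]) -> list[tuple[str, float, Optional[float]]]:
    """Return (week, cost per outcome, percent change from the prior week)."""
    trend: list[tuple[str, float, Optional[float]]] = []
    previous: Optional[float] = None
    for w in weeks:
        current = w.cost_per_outcome
        change = None if previous is None else round(100 * (current - previous) / previous, 2)
        trend.append((w.week, round(current, 2), change))
        previous = current
    return trend


# Illustrative figures only.
weeks = [
    WeeklyCost("2026-W02", model_usage=1200.0, platform_runtime=500.0,
               incremental_licenses=300.0, outcomes=400),
    WeeklyCost("2026-W03", model_usage=1100.0, platform_runtime=500.0,
               incremental_licenses=300.0, outcomes=475),
]
for row in weekly_trend(weeks):
    print(row)
```

In this made-up example, total spend barely moves while outcomes rise, so cost per outcome falls from 5.00 to 4.00, a 20 percent drop. That is the kind of movement finance can read directly.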

What to build first in 2026

If you need a short list, start where you already have clean data and clear verbs:

- Service triage and resolution for the top ten reason codes. Success looks like first-contact resolution up, average handle time down, and non-actionable escalations near zero.

- Lead triage and meeting scheduling for inbound demand. Success looks like qualification time cut in half and meeting hit rates up.

- IT service onboarding and access requests. Success looks like cycle time down and fewer manual touches per request.

- Invoice matching and small credit issuance in finance. Success looks like fewer exceptions and improved vendor satisfaction.

What can go wrong and how to avoid it

- Shadow integrations. If the agent depends on a brittle custom connector, you will lose weekends to outages. Use platform verbs first, and only add external tools that support trace and policy controls.

- Vague human approvals. If you do not name approvers, define triggers, and measure decision time, the agent will stall and humans will blame it. Make approvals specific and instrumented.

- Unowned knowledge. If no one owns the context pack, it will rot and the agent will learn bad habits. Assign a knowledge owner and review weekly.

- Linkless governance. If your policy card lives in a wiki, it is not real. Wire policy to runtime and block anything outside the card.

The competitive map for the agentic year

The serious action is moving inside platforms of record. Salesforce is coupling agents to Customer 360 objects, model choice, and collaboration surfaces. Microsoft is deepening Dynamics 365 and Copilot Studio across Office. ServiceNow is binding agents to catalogs and workflows. SAP is instrumenting agents against orders and financials. The common pattern is clear. Vendors that sit on operational truth are wrapping that truth with reasoning and safe action. The differences will show up in how fast you can codify policy, how cleanly you can trace and roll back, and whether cost per outcome falls as autonomy rises. Those are the scoreboard numbers to watch in 2026.

The bottom line

Agentforce 360 is an inflection point because it turns your existing customer platform into a safe place for software that acts, not just chats. The strongest advantage goes to agent-native stacks that live next to the records and verbs that run your business. The new runtime layer is not abstract. It is made of policy cards you can enforce, autonomy scores you can tune, and traces you can audit. If you follow the 90-day blueprint, you can ship a production agent in early 2026 that moves real numbers without creating new risk.

Start small. Instrument everything. Promote autonomy one use case at a time. If the metrics hold, scale with confidence. That is how the agentic enterprise becomes a stable part of the way you work, not a passing novelty.