Sora 2 makes video programmable: the API moment for video

OpenAI's Sora 2 now ships as a developer API with per-second pricing from 10 to 50 cents. With reusable cameos and multi‑clip storyboards, video becomes a programmable primitive that powers the next agentic stack.

The week video became code

Every platform shift has a moment when a messy craft turns into a primitive you can address from code. Payments had that moment with Stripe. Messaging had it with Twilio. Video just had it with Sora 2. The shift also tracks with the modular turn toward real agents that is now reshaping developer workflows.

In October 2025 OpenAI turned Sora 2 into an application programming interface, with per‑second billing that starts at 10 cents and tops out at 50 cents depending on model and resolution. See the official Sora Video API pricing for the current tiers. That one page matters because it converts film into a line item you can reason about like compute or storage. You no longer ask if a machine can make the shot. You ask how many seconds you want to buy, at what fidelity, and how your software will orchestrate those seconds.

Alongside the API, OpenAI shipped two capabilities that unlock programming patterns developers actually need: reusable character cameos and multi‑clip assembly via storyboards. Cameos let a verified person become a reusable asset you can cast into any scene, subject to consent controls. Storyboards give you a way to plan and stitch scenes into a coherent sequence rather than praying that a single prompt nails an entire story. OpenAI documented the storyboard rollout in its October 15, 2025 release notes.

Put these together and you get something new: video becomes addressable. Not a one‑off prompt, but a programmable surface you can compose, schedule, test, cache, ship, and measure. That shift raises the stakes for orchestration, a theme we explored in our piece on orchestration as the new AI battleground.

What changed, concretely

- Per‑second pricing. Instead of opaque credits, you can predict cost before you render. Think of it like buying compute time. At 10 cents per second for the base model at 720p, a 15‑second spot costs 1.50 dollars, a 30‑second spot costs 3 dollars, and a 60‑second cut costs 6 dollars. At 30 cents per second for the mid tier, those become 4.50, 9, and 18 dollars. At 50 cents per second for the high‑fidelity tier, they are 7.50, 15, and 30 dollars. That is before orchestration costs like narration, captions, or post effects you might add elsewhere.

- Reusable cameos. A cameo is a person’s approved likeness, captured once with a short verification. In product terms it behaves like a character prefab you can place into shots with rules about who can use it, and the person can revoke it. For teams, that means one step to add your presenter, founder, spokesperson, or a customer who has opted in, then reuse them across a whole campaign without another capture session.

- Multi‑clip assembly. Storyboards bring timeline‑like control to Sora. Instead of prompting for a perfect 30‑second video, you design three 10‑second beats, or six 5‑second shots, then cut, reorder, and extend. That is how editors actually work, and it is how autonomous systems will learn to work too.

This is not just more knobs. It is a fundamental composability shift. When assets like people and shots become addressable, agents can reason about them.
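To make "addressable" concrete, here is a minimal Python sketch, assuming a storyboard is simply an ordered list of shot records with durations. The `Shot` and `Storyboard` types and the `plan_beats` helper are hypothetical illustrations, not part of any OpenAI SDK; they show how beats can be planned, reordered, and extended as data before a single second is rendered.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Shot:
    """Hypothetical shot record: one addressable beat of a storyboard."""
    shot_id: str
    subject: str
    seconds: int


@dataclass
class Storyboard:
    """Hypothetical storyboard: an ordered list of shots an agent can edit."""
    shots: list[Shot] = field(default_factory=list)

    def total_seconds(self) -> int:
        return sum(shot.seconds for shot in self.shots)

    def reorder(self, order: list[str]) -> "Storyboard":
        # Return a new storyboard with shots in the requested order.
        by_id = {shot.shot_id: shot for shot in self.shots}
        return Storyboard([by_id[shot_id] for shot_id in order])

    def extend(self, shot: Shot) -> "Storyboard":
        # Append a shot without mutating the original storyboard.
        return Storyboard(self.shots + [shot])


def plan_beats(total_seconds: int, beat_seconds: int) -> list[int]:
    """Split a target duration into equal beats, e.g. 30 s into six 5 s beats."""
    if beat_seconds <= 0 or total_seconds % beat_seconds:
        raise ValueError("total duration must be a whole number of beats")
    return [beat_seconds] * (total_seconds // beat_seconds)


# Usage: three 10-second beats, then a recut that leads with the detail shot.
beats = plan_beats(30, 10)
board = Storyboard([
    Shot("open", "runner at dawn", beats[0]),
    Shot("detail", "shoe close-up", beats[1]),
    Shot("close", "logo and offer", beats[2]),
])
recut = board.reorder(["detail", "open", "close"])
print(recut.total_seconds(), [s.shot_id for s in recut.shots])
```

Because each edit returns a new storyboard, an agent can propose a recut without destroying the version that is already approved.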

Unit economics you can actually plan around

Let’s walk through a simple ad workflow for a direct‑to‑consumer brand selling running shoes.

- Concept: six 5‑second clips, each focused on a specific message. Total duration 30 seconds.

- Casting: one approved cameo of the brand’s athlete spokesperson.

- Variants: five targeted edits for five audience segments. The base story stays the same while the scenes and copy vary by audience.

Now the math at three quality tiers:

- Base tier at 10 cents per second: 30 seconds x 0.10 dollars = 3 dollars per master, 15 dollars for five variants. Ten masters across a month = 30 dollars, fifty variants = 150 dollars.

- Mid tier at 30 cents per second: 9 dollars per master, 45 dollars for five variants. Ten masters = 90 dollars, fifty variants = 450 dollars.

- High tier at 50 cents per second: 15 dollars per master, 75 dollars for five variants. Ten masters = 150 dollars, fifty variants = 750 dollars.

Add orchestration: prompts, captioning, translation, call‑to‑action slates, and a lightweight human review pass. Even if those double the above, the cost to ship a weekly slate of segmented video ads now fits into a prototype budget for a growth team. The unit cost stops being a barrier and starts being a dial you turn based on return on ad spend.
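The arithmetic above is easy to encode so a budget can be checked before anything renders. This sketch assumes the three per‑second rates quoted in this article and treats orchestration as a single multiplier on render spend (2.0 matches the "double the above" case). `campaign_cost` and `orchestration_factor` are hypothetical names, and real rates should come from OpenAI's pricing page.

```python
# Per-second rates quoted in this article, in cents. These are assumptions
# for illustration; check OpenAI's pricing page for the current tiers.
RATE_CENTS = {"base": 10, "mid": 30, "high": 50}


def clip_cost_cents(seconds: int, tier: str) -> int:
    # Integer cents avoid floating-point rounding drift in budget math.
    return seconds * RATE_CENTS[tier]


def campaign_cost(seconds_per_cut: int, tier: str, masters: int,
                  variants_per_master: int,
                  orchestration_factor: float = 1.0) -> dict:
    """Render spend for a month of masters plus their audience variants.

    orchestration_factor scales the render total to cover captions,
    translation, slates, and review (2.0 means orchestration doubles it).
    """
    per_cut = clip_cost_cents(seconds_per_cut, tier)
    masters_cents = masters * per_cut
    variants_cents = masters * variants_per_master * per_cut
    return {
        "per_master": per_cut / 100,
        "masters": masters_cents / 100,
        "variants": variants_cents / 100,
        "total_with_orchestration":
            (masters_cents + variants_cents) * orchestration_factor / 100,
    }


# Usage: the shoe-brand scenario, ten 30-second masters with five variants each.
for tier in RATE_CENTS:
    print(tier, campaign_cost(30, tier, masters=10, variants_per_master=5,
                              orchestration_factor=2.0))
```

Keeping the rates in one table means a price change is a one‑line edit, and every budget check downstream picks it up.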

Two practical implications follow:

- Iteration beats perfection. If your first cut now costs single digits instead of hundreds, you can draft ten, test three, and scale one. That is how high performers already run creative operations. The API formalizes it.

- Render time becomes a throughput constraint you can design around. If you need 100 clips by morning, break them into 10 parallel batches of 10, collect telemetry about failures, and retry only the errored batches. Video stops being a studio schedule and becomes a job queue.
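The batch‑and‑retry loop described above maps onto a thread pool. Here is a minimal sketch using Python's standard `concurrent.futures`, assuming a hypothetical `render_batch` callable that renders one batch and raises on failure; how that callable talks to the video API is deliberately left out.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk(items: list, size: int) -> list[list]:
    # Split a flat list of clips into fixed-size batches.
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_batches(clips, render_batch, batch_size=10, workers=10, max_attempts=3):
    """Render clips in parallel batches and retry only the batches that failed.

    render_batch is a hypothetical callable: it renders one batch and
    returns a list of outputs, raising an exception on failure.
    """
    pending = chunk(clips, batch_size)
    outputs, telemetry = [], []
    for attempt in range(1, max_attempts + 1):
        if not pending:
            break
        # Leaving the with-block waits for every submitted batch to finish.
        with ThreadPoolExecutor(max_workers=workers) as pool:
            futures = [(batch, pool.submit(render_batch, batch)) for batch in pending]
        failed = []
        for batch, future in futures:
            exc = future.exception()
            if exc is None:
                outputs.extend(future.result())
            else:
                # Record which batch failed and why, then queue it for retry.
                telemetry.append({"attempt": attempt, "first_clip": batch[0],
                                  "error": repr(exc)})
                failed.append(batch)
        pending = failed
    # Any batches left in pending never succeeded within max_attempts.
    return outputs, telemetry, pending
```

Only failed batches re‑enter `pending`, so a transient error on one batch of 10 never triggers a re‑render of the other 90 clips.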

Immediate build paths for founders and teams

Here are three concrete product directions that are feasible right now.

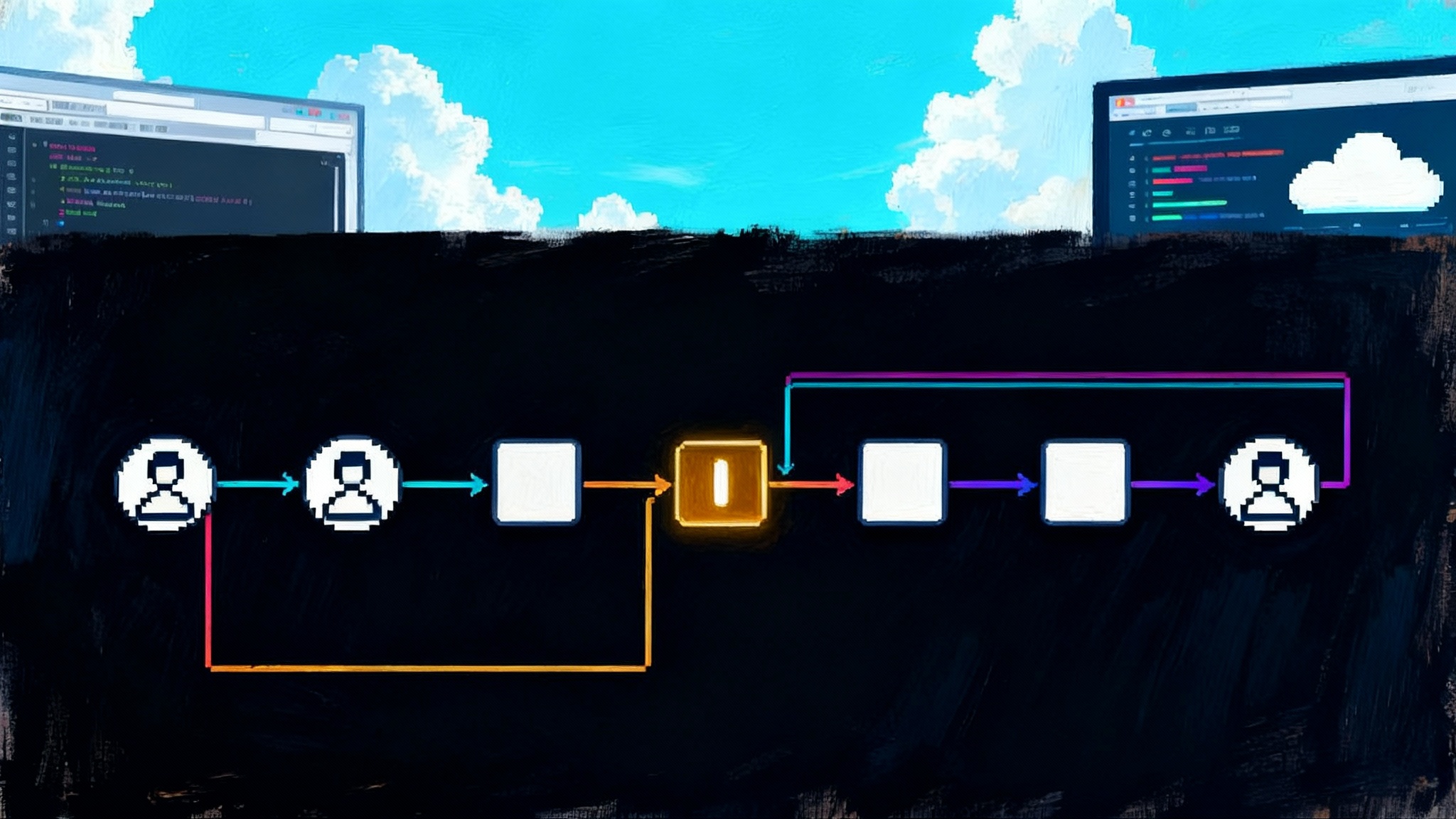

- Video‑native agents

  - Director agent: translates goals into shot lists and storyboards, allocates seconds, and drafts prompts per shot.

  - Casting agent: resolves which cameos are allowed for each campaign and inserts constraints like no public figures or no minors in certain contexts.

  - Editor agent: stitches clips, enforces pacing, and generates a first pass of captions and callouts. It also generates a safe alternate cut for markets with stricter norms.

  - Analyst agent: ships two or three variants, reads early telemetry, and requests specific reshoots or trims. It treats Sora like a camera that is always on call.

  These agents do not need to be perfect. They just need to reduce human time on repetitive creative steps while keeping a person in the loop for taste and compliance. For a broader view of agent ecosystems, see how AI firewalls as the enterprise moat shape responsible deployment.

- Autonomous ad creative

  - Input: product catalog, brand voice, audience segments, and a constraints file that encodes claims and disclosures the brand is allowed to make.

  - Process: for each product and audience, the system drafts six shots totaling 15 or 30 seconds, casts the approved cameo, and localizes captions and voice in a single pass.

  - Output: a set of cutdowns for social placements, each tagged with the audience hypothesis it tests. The system logs the cost per second, per variant, so the growth team sees both media spend and creative spend side by side.

- End‑to‑end synthetic media pipelines

  - Previz for product launches where hardware is not final.

  - Training and onboarding content that needs frequent updates without scheduling reshoots.

  - Long‑tail explainers tailored to niche customer questions, each synthesized from approved product knowledge and narrated by a consistent cameo presenter.

In each case, the key design move is the same: make shots and people addressable resources with clear rules, then build small agents that manipulate those resources.
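Here is a minimal sketch of two of those small agents, assuming the 5‑second per‑shot and 30‑second total caps suggested in the build guide later in this piece. `Cameo`, `direct`, and `cast` are hypothetical; the director's prompt template is a placeholder where a real system would likely call a language model, and the casting rules encode only the public‑figure and minor constraints mentioned above.

```python
from dataclasses import dataclass

# First-pass budget caps (assumed from this article's build guide).
MAX_SHOT_SECONDS = 5
MAX_TOTAL_SECONDS = 30


@dataclass(frozen=True)
class Cameo:
    """Hypothetical cameo resource with the rules a casting agent checks."""
    cameo_id: str
    approved_campaigns: frozenset[str]
    public_figure: bool = False
    minor: bool = False


def direct(messages: list[str], seconds_per_shot: int = 5) -> list[dict]:
    """Director step: one shot per message, allocated within the second budget.

    The prompt string is a placeholder template, not a tuned prompt.
    """
    if seconds_per_shot > MAX_SHOT_SECONDS:
        raise ValueError("shot exceeds per-shot cap")
    if len(messages) * seconds_per_shot > MAX_TOTAL_SECONDS:
        raise ValueError("storyboard exceeds total second budget")
    return [
        {"shot": i, "seconds": seconds_per_shot,
         "prompt": f"{seconds_per_shot}s shot conveying: {message}"}
        for i, message in enumerate(messages)
    ]


def cast(cameos: list[Cameo], campaign: str, allow_minors: bool = False) -> list[Cameo]:
    """Casting step: keep only cameos cleared for this campaign and context."""
    return [
        c for c in cameos
        if campaign in c.approved_campaigns
        and not c.public_figure
        and (allow_minors or not c.minor)
    ]


# Usage: three messages become three 5-second shots; only the athlete is cast.
shots = direct(["lightweight", "grips wet roads", "free returns"])
roster = [
    Cameo("athlete-01", frozenset({"spring-launch"})),
    Cameo("celebrity-07", frozenset({"spring-launch"}), public_figure=True),
]
print(len(shots), [c.cameo_id for c in cast(roster, "spring-launch")])
```

Keeping the rules as data on the `Cameo` record, rather than inside prompts, lets the casting step be tested without rendering anything.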

What developers need to know before they ship

- Duration and aspect ratios: plan your story in beats. A reliable approach is three 5‑second beats for 15 seconds or six 5‑second beats for 30 seconds. Pick one aspect ratio per placement before rendering so every shot in a storyboard cuts together. Keep camera moves clear and intentional.

- Audio comes baked in: Sora co‑generates dialogue, ambience, and effects. You can treat sound as another control in your prompt, or overwrite it later if you are adding licensed music.

- Watermarks and provenance: Sora outputs include visible watermarks in many cases today and embed metadata that follows the Coalition for Content Provenance and Authenticity (C2PA) standard. That makes detection and audit easier on downstream platforms.

- Consent by design: cameos are opt‑in and revocable. That is not just a policy point. It is a technical constraint you should model explicitly. If a user revokes a cameo at 2 p.m., your system must be able to find and remove any queued renders that would use it.

- Performance and retries: treat generation like a distributed job. Queue requests, set sensible timeouts, and use idempotency keys so a crashed worker does not buy the same 10 seconds twice.
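Idempotency and revocation can share one data structure. This sketch assumes an in‑memory queue keyed by a SHA‑256 hash of the shot spec; `RenderQueue` and its fields are hypothetical, and a production version would persist jobs and pass the key through to whatever layer actually calls the video API.

```python
import hashlib
import json


class RenderQueue:
    """Hypothetical in-memory render queue keyed by idempotency key."""

    def __init__(self) -> None:
        self.jobs: dict[str, dict] = {}

    @staticmethod
    def idempotency_key(shot: dict) -> str:
        # Same shot spec -> same key. sort_keys makes the hash independent
        # of dict insertion order.
        payload = json.dumps(shot, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def submit(self, shot: dict) -> str:
        key = self.idempotency_key(shot)
        # A worker that crashes and resubmits gets the existing job back
        # instead of queuing (and paying for) a duplicate render.
        self.jobs.setdefault(key, {"shot": shot, "status": "queued"})
        return key

    def revoke_cameo(self, cameo_id: str) -> list[str]:
        # Cancel every queued job that would use the revoked cameo.
        cancelled = []
        for key, job in self.jobs.items():
            if job["status"] == "queued" and job["shot"].get("cameo_id") == cameo_id:
                job["status"] = "cancelled"
                cancelled.append(key)
        return cancelled
```

Hashing the spec means a deliberate second take needs a distinguishing field such as `take`; otherwise it would be deduplicated as a retry.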

Safety and intellectual property are first‑class constraints, not afterthoughts

OpenAI’s Sora 2 launch material emphasized provenance and consent. Videos carry visible watermarks in many scenarios, along with metadata that aids verification. Cameos are permissioned by default, and public figure depictions are restricted. Treat these as guardrails that make your product better, not blockers to work around.

Practical steps that respect those constraints:

- Model a cameo as an object with an owner, permissible uses, and an expiration. Add checks in your pipeline before every render.

- Maintain a clean separation between claims and visuals in ad workflows. If legal approves a set of claims and disclaimers, store them in a machine‑readable file and have your agent pull only from that file.

- Keep an audit log that records which script generated which shot, with which cameo, at what time, and with what watermark settings. This is your safety ledger.

- Plan for rights management. Even synthetic dialogue can reference real brands or people if you are not careful. Add negative prompts and filters up front, then scan outputs with an entity recognizer before publishing.
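The first and third steps can be sketched together: a cameo grant with an owner, permitted uses, and an expiration, checked before each render, with every decision written to an audit ledger. All names here (`CameoGrant`, `check_cameo`, `gated_render`) are hypothetical, and the watermark settings are recorded as an opaque dict because this sketch does not assume any particular API field.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class CameoGrant:
    """Hypothetical cameo record: owner, permitted uses, and an expiration."""
    cameo_id: str
    owner: str
    permitted_uses: frozenset[str]
    expires_at: datetime
    revoked: bool = False


def check_cameo(grant: CameoGrant, use: str, now: datetime) -> tuple[bool, str]:
    """Pre-render gate: run this before every render that uses a cameo."""
    if grant.revoked:
        return False, "revoked by owner"
    if now >= grant.expires_at:
        return False, "grant expired"
    if use not in grant.permitted_uses:
        return False, f"use {use!r} not permitted"
    return True, "ok"


def gated_render(grant: CameoGrant, use: str, script_id: str, shot_id: str,
                 watermark: dict, ledger: list,
                 now: Optional[datetime] = None) -> bool:
    """Check the grant and append an audit entry whether or not it passes."""
    if now is None:
        now = datetime.now(timezone.utc)
    allowed, reason = check_cameo(grant, use, now)
    # Safety ledger: which script, which shot, which cameo, when, and how.
    ledger.append({
        "time": now.isoformat(),
        "script_id": script_id,
        "shot_id": shot_id,
        "cameo_id": grant.cameo_id,
        "watermark": watermark,
        "allowed": allowed,
        "reason": reason,
    })
    return allowed  # the caller submits the render job only when True
```

Logging denials as well as approvals means the ledger can answer "why did this shot never render?" as easily as "who approved this one?".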

The market will push back where it should. Expect name disputes and debates about likeness rights, especially as cameo quality improves. The way through is to build user control directly into your product and to design for discovery and removal of content that violates a person’s settings.

Five patterns that will win early

- Shot libraries as code: save prompts, framing, and movement as versioned snippets. Reuse them like components.

- Variant loops: generate three cuts, release two, and let performance decide which one gets the next 15 seconds of budget.

- Caching and reuse: if a product shot or scene works, reuse it in new cuts instead of regenerating from scratch. That saves both time and cost.

- Human‑in‑the‑loop editing: never ship a first render cold. Use Sora to get to a solid draft, then invest human taste where it matters.

- Compliance automation: a validator that checks every script and caption against a rule set is not optional. It is how you scale.
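A compliance validator can start very small. This sketch assumes legal maintains a JSON rules file with approved claims and blocked terms (the file contents below are made‑up examples) and flags any claim line that is not on the approved list, plus any line that mentions a blocked term. It is a keyword filter, not the entity recognizer suggested earlier, so treat it as a first gate rather than the whole check.

```python
import json
import re

# Hypothetical rules file maintained by legal; in practice, load it from disk.
RULES_JSON = """
{
  "approved_claims": ["Up to 20% lighter than our previous model."],
  "blocked_terms": ["CompetitorBrand", "Olympic"]
}
"""


def _normalize(text: str) -> str:
    # Case- and whitespace-insensitive comparison for approved claims.
    return " ".join(text.split()).lower()


def validate_script(script: list[tuple[str, str]], rules: dict) -> list[str]:
    """Return violations for (kind, text) lines, where kind is "claim" or "caption"."""
    approved = {_normalize(c) for c in rules["approved_claims"]}
    blocked = [(term, re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE))
               for term in rules["blocked_terms"]]
    violations = []
    for i, (kind, text) in enumerate(script):
        # Claims must come verbatim from the approved list.
        if kind == "claim" and _normalize(text) not in approved:
            violations.append(f"line {i}: unapproved claim {text!r}")
        # No line of any kind may mention a blocked term.
        for term, pattern in blocked:
            if pattern.search(text):
                violations.append(f"line {i}: blocked term {term!r}")
    return violations


rules = json.loads(RULES_JSON)
script = [
    ("claim", "Up to 20% lighter than our previous model."),
    ("claim", "The fastest shoe ever made."),
    ("caption", "Train like an olympic champion"),
]
for violation in validate_script(script, rules):
    print(violation)
```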

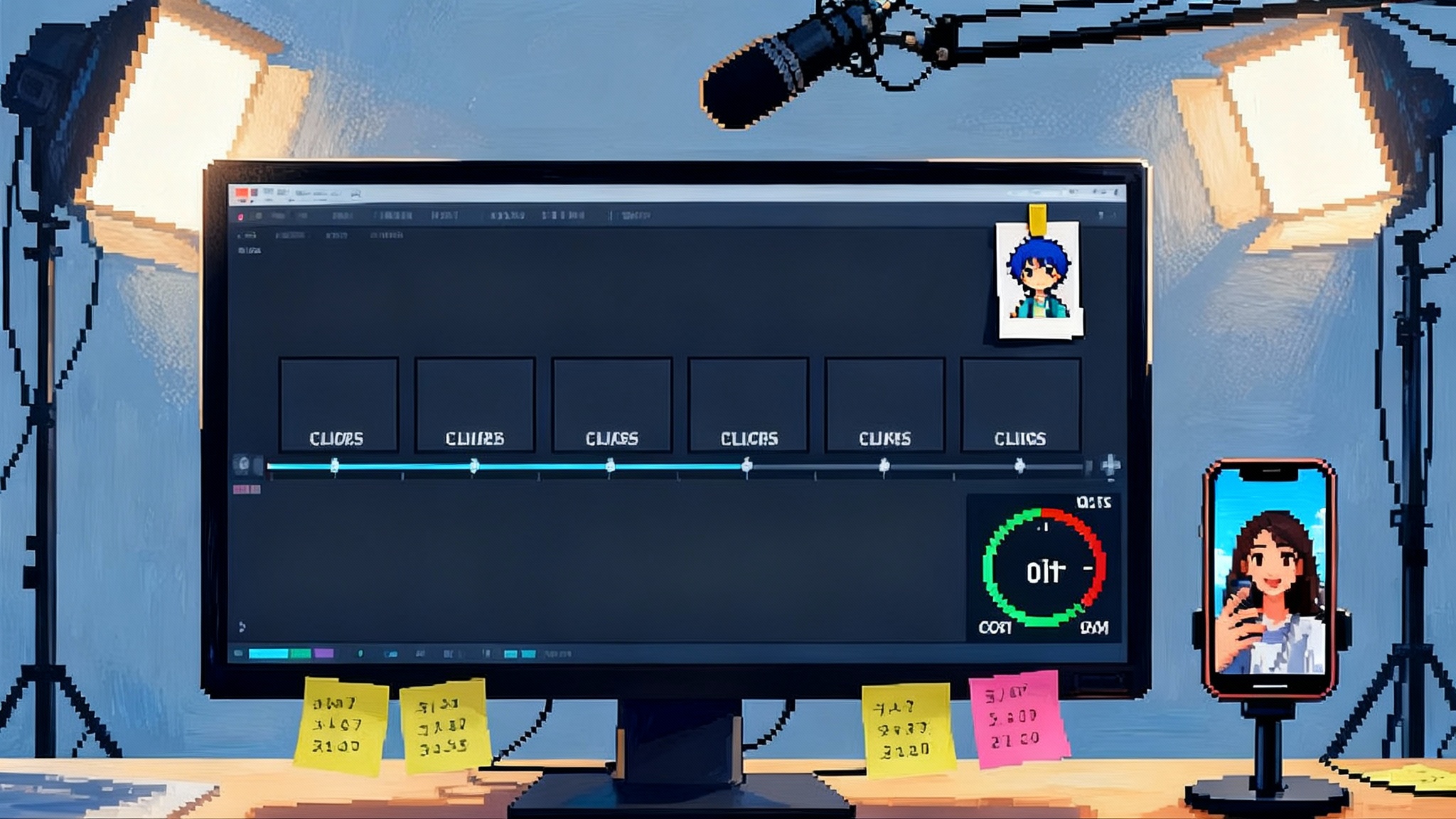

A short build guide: from prompt to pipeline

Here is a practical outline you can implement this week.

- Define your assets

  - Cameos: create and store the unique identifiers and the rules that govern who can use them.

  - Shots: a schema with fields like subject, setting, motion, camera, duration, and audio notes.

  - Storyboards: an ordered list of shot identifiers with transitions and captions.

- Orchestrate generation

  - Allocate a budget in seconds per scene. A simple first rule is to cap any single shot at 5 seconds and never exceed 30 seconds total for a first pass.

  - Submit each shot as a job with its fields translated into a clear, specific prompt. Retry network errors automatically; send policy rejections back for prompt revision rather than resubmitting them unchanged.

  - When all shots complete, assemble them according to the storyboard. Insert captions, call‑to‑action screens, and any legal lines.

- Ship variants safely

  - For each audience segment, clone the storyboard, adjust two shots and the captions, and render at the same length. Tag each render with its hypothesis.

  - Scan every output for policy and brand safety. Reject anything that references public figures or unapproved claims.

  - Publish, read telemetry, and decide which variants get more seconds at the higher tier.

- Measure and learn

  - Track seconds purchased, seconds used, cost per finished second, and failure rates by scene type. You will quickly see which prompts cost more than they are worth.

  - Keep a leaderboard of shots that perform well across campaigns. These become your stock footage library, only it is generated on demand.
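The measurement step reduces to a small rollup over render telemetry. This sketch assumes each render is logged with its scene type, duration, success flag, and whether it made the final cut, and that failed renders are billed like successful ones (check your actual billing before relying on that). `summarize` and `leaderboard` are hypothetical helpers, and the telemetry below is illustrative.

```python
from collections import defaultdict
from statistics import mean


def summarize(renders: list[dict], rate_cents_per_second: int) -> dict:
    """Roll up render telemetry.

    Each render dict has: scene_type, seconds, succeeded, used (made the cut).
    Assumes every submitted second is billed, including failed renders.
    """
    purchased = sum(r["seconds"] for r in renders)
    used = sum(r["seconds"] for r in renders if r["used"])
    spend_dollars = purchased * rate_cents_per_second / 100
    counts = defaultdict(lambda: [0, 0])  # scene_type -> [failures, attempts]
    for r in renders:
        counts[r["scene_type"]][1] += 1
        if not r["succeeded"]:
            counts[r["scene_type"]][0] += 1
    return {
        "seconds_purchased": purchased,
        "seconds_used": used,
        "spend_dollars": spend_dollars,
        # None when nothing shipped, rather than dividing by zero.
        "cost_per_finished_second": spend_dollars / used if used else None,
        "failure_rate": {scene: f / n for scene, (f, n) in counts.items()},
    }


def leaderboard(scores: dict[str, list[float]], top_n: int = 10) -> list[str]:
    """Rank shots by mean performance across campaigns (e.g. click-through rate)."""
    ranked = sorted(scores, key=lambda shot: mean(scores[shot]), reverse=True)
    return ranked[:top_n]


renders = [
    {"scene_type": "product", "seconds": 5, "succeeded": True, "used": True},
    {"scene_type": "product", "seconds": 5, "succeeded": False, "used": False},
    {"scene_type": "lifestyle", "seconds": 5, "succeeded": True, "used": True},
    {"scene_type": "lifestyle", "seconds": 5, "succeeded": True, "used": False},
]
print(summarize(renders, rate_cents_per_second=10))
print(leaderboard({"shot-a": [0.02, 0.04], "shot-b": [0.05], "shot-c": [0.01]}, top_n=2))
```

Cost per finished second, rather than cost per purchased second, is the number that exposes prompts whose renders rarely make the cut.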

What to watch next

- Latency and throughput: as request volume climbs, the real question becomes how many finished seconds you can reliably produce per hour per spend level. Expect queues and batch windows.

- Longer formats: the jump from 30 seconds to 2 minutes changes what is possible for education and onboarding. Expect story tools to mature fast.

- Talent markets for cameos: creators will want to license their cameos to brands with clear consent and revenue shares. Platforms that make this safe and simple will win.

- Standards for provenance: C2PA will matter more as downstream platforms ingest more synthetic video. Products that surface provenance in respectful ways will build trust.

For broader industry context, see how the hybrid LLM era is unfolding across stacks adjacent to media.

The upshot

Sora 2 did not merely improve video generation. It turned video into a programmable surface with costs you can plan, controls you can encode, and safety you can model. The result is a shift from single‑shot magic tricks to end‑to‑end systems: agents that write, cast, shoot, and cut inside a budget measured in seconds. If you are building creative tools, ads, education, or support, the move is clear. Treat video like an addressable resource. Build your agents around shots and cameos. Instrument the pipeline for cost, quality, and consent. Then let the work teach you where to spend the next 15 seconds.